Generating Image Descriptions: Difference between revisions



This Page is Under Construction.

== Introduction ==

People often say that a picture is worth a thousand words, but with the current tools available, NLP and image recognition algorithms are unable to extract nearly that much meaningful text from images. Contrarily, when a human glances at an image, they can easily identify numerous details and relationships within a detailed visual scene. Despite numerous advancements in image classification, object detection, and natural language processing, this particular task has proven challenging for existing visual recognition models. The majority of similar visual recognition models focus on labeling objects, or generating image descriptions based on limited vocabularies and fixed sentence models. The authors of this paper believed that the assumptions of these types of models were too restrictive, leading to an inability to generate rich descriptions that the human mind is capable of.

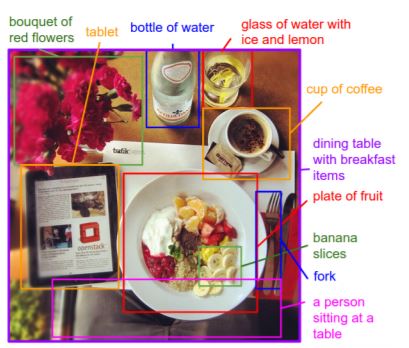

In this paper, the restrictions of hard-coded word templates and sentence structures are removed. Freed from these assumptions, Karpathy and Li create a model that can generate richer descriptions drawn from a wider vocabulary. Due to the lack of directly relevant training data for this task, the authors leveraged the large quantity of images with detailed captions available online. Although these images contained no explicit information about image region labels or relationships between regions, the authors could treat individual captions as weak labels in which contiguous segments of words correspond to some particular, but unknown, location in the image. By inferring the alignment between caption segments and image regions, a generative model is created that can produce detailed annotations as seen in Figure 1.

[[File:ImageCaption1.JPG]]


Furthermore, a multimodal Recurrent Neural Network (RNN) structure is used to take an input image and generate text descriptions along with their corresponding regions. Experiments show that the generated sentences outperform rigid, retrieval-based models.

== Relevant Background Information ==

Before analyzing Karpathy and Li's visual recognition model in more depth, we first discuss relevant background knowledge.

'''Regional Convolutional Neural Networks (RCNN) ''' | |||

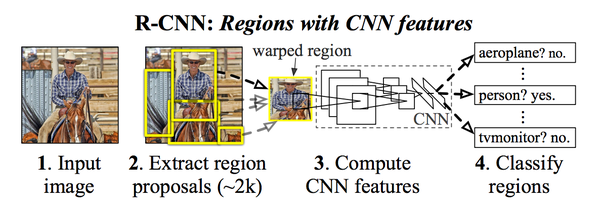

Unlike standard CNNs, RCNNs aim to go beyond classification. Images often have multiple notable regions, and thus it is important to reason with multiple overlapping objects, and different backgrounds to identify region boundaries, differences, and relations to one another. | |||

So, to summarize, R-CNN is just the following steps: | |||

1) Generate a set of proposals for bounding boxes to detect relevant object regions. | |||

'''RNN([https://en.wikipedia.org/wiki/Recurrent_neural_network Recurrent Neural Network]):''' | 2) Run bounded images through a pre-trained CNN and an SVM to see what object each box is. | ||

3) Run the box through a linear regression model to output tighter coordinates for the box once the object has been classified. | |||

[[File:RCNN.png |600px]]] | |||

'''Recurrent Neural Networks (RNN) ([https://en.wikipedia.org/wiki/Recurrent_neural_network Recurrent Neural Network]):'''

The main feature of a recurrent neural network is that, at each step, the decision made at the previous step also becomes an input to the next step. A recurrent neural network therefore has two sources of input: the original input and its own previous decision.

As a simple '''example''', suppose you cook apple pie, burger, and chicken in that order. If it is sunny, then the next day you cook the same dish again; otherwise you cook the next dish. This is a simple recurrent neural network: the dish you cooked today is also an input to the next day's decision. (Details [https://www.youtube.com/watch?v=UNmqTiOnRfg here].)

'''Mathematically speaking''', the decision a recurrent net reached at time step t-1 affects the decision it will reach one moment later at time step t. In other words, the RNN holds past information in memory and uses it as an input to predict outputs in future time frames.
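The dependence of step t on step t-1 can be illustrated with a minimal sketch of a plain recurrent layer. The weight names (<code>W_xh</code>, <code>W_hh</code>, <code>b_h</code>), the tanh activation, and the toy values are assumptions chosen for illustration, not taken from the paper.

```python
import math

def matvec(W, x):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * v for w, v in zip(row, x)) for row in W]

def rnn_forward(inputs, W_xh, W_hh, b_h):
    """Return the hidden state after each time step, starting from h_0 = 0."""
    h = [0.0] * len(b_h)
    states = []
    for x_t in inputs:
        from_input = matvec(W_xh, x_t)   # contribution of the current input
        from_past = matvec(W_hh, h)      # contribution of the previous hidden state
        h = [math.tanh(a + r + b) for a, r, b in zip(from_input, from_past, b_h)]
        states.append(h)
    return states

# Toy weights (hypothetical): 2 hidden units, 2-dimensional inputs.
W_xh = [[0.5, -0.3], [0.1, 0.8]]
W_hh = [[0.2, 0.4], [-0.6, 0.3]]
b_h = [0.0, 0.0]

seq_a = [[1.0, 0.0], [0.0, 1.0]]
seq_b = [[0.0, 1.0], [0.0, 1.0]]   # same final input, different history
final_a = rnn_forward(seq_a, W_xh, W_hh, b_h)[-1]
final_b = rnn_forward(seq_b, W_xh, W_hh, b_h)[-1]
```

Both sequences end with the same input, yet <code>final_a</code> and <code>final_b</code> differ, because the hidden state carries the earlier input forward.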

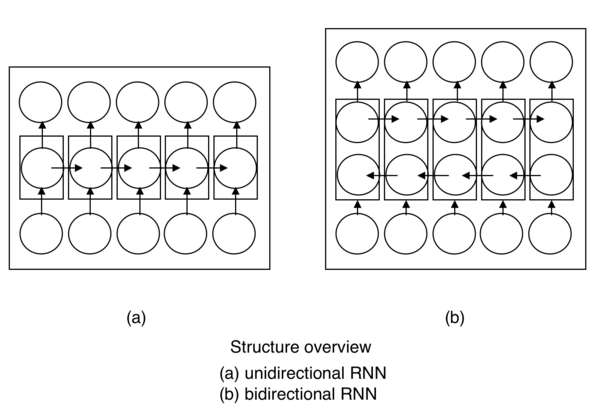

'''Bidirectional Recurrent Neural Networks (BRNN) ([https://en.wikipedia.org/wiki/Bidirectional_recurrent_neural_networks Bidirectional Recurrent Neural Network])'''

The motivation for using a BRNN comes from Schuster and Paliwal, who wanted to increase the amount of information available to the network. A regular recurrent neural network can only move in one direction: neurons can only carry past information into the current input, and future information cannot be reached from the current state. A BRNN, however, allows nodes to communicate both forwards and backwards in time. This allows the network to check whether its outputs are sensible by moving backwards in time over the output sequence. If the sequence violates the probabilistic reasoning of the network, then the network can potentially correct past outputs. The diagram below contrasts the RNN and BRNN network layouts:

[[File:RNN_BRNN.png |600px]]

'''Markov Random Field (MRF) ([https://en.wikipedia.org/wiki/Markov_random_field Markov Random Field])'''

MRF interactions between neighboring words encourage them to align to the same region.

The most important part of the MRF formulation is a hyperparameter <math> \beta </math> that controls the affinity towards longer word phrases. This parameter allows us to interpolate between single-word alignments (small <math> \beta </math>) and aligning the entire sentence to a single, maximally scoring region when <math> \beta </math> is large.
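The effect of this hyperparameter can be sketched with a small dynamic program over a chain of words. This is an illustrative reading, not the authors' implementation: the function name <code>align_words</code>, the toy scores, and the choice to maximize "similarity plus a bonus for neighboring words that share a region" are assumptions.

```python
def align_words(scores, beta):
    """scores[j][t] is the similarity of word j with region t.

    Returns the region index chosen for each word, maximizing the sum of
    similarities plus a bonus `beta` whenever two neighboring words are
    assigned to the same region (Viterbi-style dynamic programming).
    """
    n_words, n_regions = len(scores), len(scores[0])
    best = list(scores[0])   # best total score ending in each region
    back = []                # back-pointers for each later word
    for j in range(1, n_words):
        new_best, pointers = [], []
        for t in range(n_regions):
            # Staying in region t earns the bonus; switching earns nothing.
            cand = [best[p] + (beta if p == t else 0.0) for p in range(n_regions)]
            p_star = max(range(n_regions), key=cand.__getitem__)
            new_best.append(cand[p_star] + scores[j][t])
            pointers.append(p_star)
        best = new_best
        back.append(pointers)
    t = max(range(n_regions), key=best.__getitem__)
    path = [t]
    for pointers in reversed(back):
        t = pointers[t]
        path.append(t)
    return path[::-1]

# Three words, two regions (toy numbers).
scores = [[1.0, 0.2], [0.4, 0.5], [0.9, 0.1]]
independent = align_words(scores, beta=0.0)   # each word picks its own best region
smoothed = align_words(scores, beta=1.0)      # neighbors are pulled to one region
```

With no bonus the middle word switches to the second region; with a bonus of 1.0 all three words are assigned to the first region, which is the interpolation described above.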

== Literature Review ==

The problem discussed in the paper is an interdisciplinary problem that combines ideas from:

i) Dense image annotations

ii) Generating descriptions

iii) Grounding natural language in images

iv) Neural networks in visual and language domains

The problem forms the foundation of many applications, such as visual search, visual cognition chatbots, photo sharing in social media, visual aids for the visually impaired, and surveillance monitoring for corporations.

=== I: Dense Image Annotations ===

"Matching words and pictures" (Barnard et al., 2003) and "Connecting modalities: Semisupervised segmentation and annotation of images using unaligned text corpora" (Socher et al., 2010) are two of many earlier works that explored multimodal correspondence between words and image segments. Unfortunately, these pioneering works primarily focused on the accuracy of labeled regions, rather than on the rich region-level descriptions desired by this paper. Other works build on holistic scene understanding, such as "Towards total scene understanding: Classification, annotation and segmentation in an automatic framework" (L.-J. Li et al., 2009), which quantifies scene relationships with a joint probability distribution over the scene image class C, objects O, regions R, image patches X, annotation tags T, and latent variables.

=== II: Generating Descriptions ===

Generating image descriptions and annotations has become a hot research topic, but many related works treat it as a retrieval problem rather than a generative one. Papers such as "Framing image description as a ranking task: data, models and evaluation metrics" (Hodosh et al., 2013) use an arbitrary similarity measure to directly transfer a caption from the most relevant training image to a new test image, while papers such as "Collective generation of natural image descriptions" (Kuznetsova et al., 2012) stitch together segments of training annotations. Other works, such as "From image annotation to image description" (Gupta et al., 2012) and "Midge: Generating image descriptions from computer vision detections" (Mitchell et al., 2012), use fixed sentence templates and grammar structure to describe images. All of the aforementioned methods are too restrictive in the variety of outputs they can produce.

Karpathy and Li's model instead builds on the paper "Multimodal neural language models" (Kiros et al., 2014). Whereas Kiros et al.'s log-bilinear model generates full sentence descriptions for images using a fixed window context, this paper creates an RNN model that conditions the probability distribution over the next word in a sentence on all previously generated words.

=== III: Grounding Natural Language in Images ===

The method this paper uses for associating words with the visual domain is inspired by "DeViSE: A Deep Visual-Semantic Embedding Model" (Frome et al., 2013). That paper first trains a model to learn semantically meaningful vector representations of words in parallel with a visual recognition neural network. The two networks are then combined by replacing the activation layer of the visual recognition neural network with a layer that predicts the semantic representations in the text domain. Adopting this idea, Karpathy and Li decompose images and sentences into fragments and infer the alignment between contiguous visual and textual fragments.

=== IV: Neural Networks in Visual and Language Domains ===

Over the past decade, neural networks have become an integral component of machine learning tasks in both the visual and language domains. Numerous classes of CNNs have been studied for image classification and object detection. In the language domain, RNNs have become popular for language modelling because of their sequential nature, often combined with pre-trained word representations such as Word2Vec.

== The Model: Alignment ==


Given an image, a Region Convolutional Neural Network (Girshick et al.) is used to detect every possible object that appears in the image. From all the detected objects, the top 19 are selected, along with the full image.

To create a common embedding, every image is represented by a set of h-dimensional vectors <math> \{v_i | i = 1 ... 20\}</math>, where each <math> v_i </math> is computed by a convolutional neural network as follows:

[[File:images.png|200px]]

where <math> CNN(I_b) </math> transforms the image inside the bounding box <math> I_b </math> into the 4096-dimensional activations of a fully connected layer. The matrix <math> W_m </math> has dimension <math> h \times 4096</math>.
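As a rough sketch of this projection, the snippet below maps the CNN activations of each region into the h-dimensional embedding space. The equation itself is only available as an image here, so the affine form with a bias <code>b_m</code> is an assumption, as are the function name and the toy sizes (4 features instead of 4096, h = 2).

```python
def embed_region(cnn_features, W_m, b_m):
    """Project the CNN activations of one region into the embedding space.

    Computes W_m @ cnn_features + b_m, where W_m has one row per embedding
    dimension (h rows) and one column per CNN feature.
    """
    return [sum(w * f for w, f in zip(row, cnn_features)) + b
            for row, b in zip(W_m, b_m)]

# Toy sizes: 4-dimensional "CNN" activations (4096 in the text above), h = 2.
W_m = [[0.5, 0.0, -0.5, 0.0],
       [0.0, 1.0, 0.0, 1.0]]
b_m = [0.1, -0.1]

# One row of features per bounding box (the full image counts as a box too).
region_features = [[1.0, 2.0, 3.0, 4.0],
                   [0.0, 1.0, 0.0, 1.0]]
embeddings = [embed_region(f, W_m, b_m) for f in region_features]
```

Each entry of <code>embeddings</code> plays the role of one <math> v_i </math>; with 19 detected regions plus the full image there would be 20 such vectors per image.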

'''Sentences'''


Let <math> t = 1 ... N </math> represent the position of the word in the sentence.

[[File:sentence.png|200px]]

<math> I_t </math> is an indicator column vector that has a 1 at the index of the t-th word.

Now that we have the images and sentences in the same embedding space, we need to define an objective such that a sentence-image pair receives a high score if the two are relevant to each other.

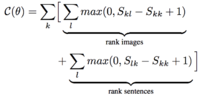

The paper simplifies an earlier objective function, outlined in previous work by Karpathy et al. (the DeFrag model), and states it as

[[File: objective.png |200px]]

where

<math> g_k </math> is the set of image fragments of image <math> k </math>.

<math> g_l </math> is the set of sentence fragments of sentence <math> l </math>.

Here every word <math> s_t </math> aligns to its single best-scoring image region.

If <math> k = l </math> denotes a corresponding image and sentence pair, then the final max-margin, structured loss is:

[[File: structured_loss.png |200px]]

Thus this objective encourages correctly aligned image-sentence pairs to score higher than misaligned pairs by a margin.
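The score and loss can be sketched as follows, under stated assumptions: the equations are only available as images here, so the exact form (inner products, a margin of 1, and skipping the <code>l == k</code> terms, which would only add a constant) is an interpretation of the surrounding text, and all names are illustrative.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def image_sentence_score(regions, words):
    """Score S_kl: every word picks its best-matching region, then scores are summed."""
    return sum(max(dot(v, s) for v in regions) for s in words)

def ranking_loss(images, sentences, margin=1.0):
    """Max-margin loss, where images[k] and sentences[k] form a matching pair."""
    n = len(images)
    S = [[image_sentence_score(images[k], sentences[l]) for l in range(n)]
         for k in range(n)]
    loss = 0.0
    for k in range(n):
        for l in range(n):
            if l == k:
                continue
            loss += max(0.0, S[k][l] - S[k][k] + margin)  # wrong sentence for image k
            loss += max(0.0, S[l][k] - S[k][k] + margin)  # wrong image for sentence k
    return loss

# Two images (lists of region vectors) and two sentences (lists of word vectors).
images = [[[1.0, 0.0], [0.0, 1.0]], [[-1.0, 0.0]]]
sentences = [[[1.0, 0.0]], [[-1.0, 0.0]]]

aligned_loss = ranking_loss(images, sentences)           # matching pairs on the diagonal
mismatched_loss = ranking_loss(images, sentences[::-1])  # sentences swapped
```

In this toy setup the correctly paired data incurs zero loss, while swapping the sentences produces a positive loss, which is the behavior the margin is meant to enforce.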

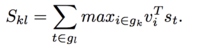

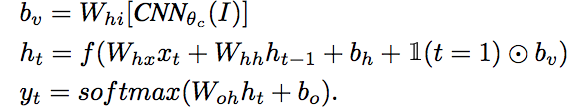

== The Model: Recurrent Neural Network ==

Input: images and their corresponding textual descriptions


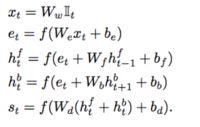

That is, during training the model takes the image pixels and the input vectors and computes the hidden states and outputs by iterating the following:

[[File: RNNNNNNNN.png]]

Note that the image context vector <math> b_v </math> is only provided at the first iteration, which the authors found to work better than providing it at each time step.

It can also help to pass both <math> b_v </math> and <math> (W_{hx} x_t) </math> through the activation function.

A typical size of the hidden layer is 512 neurons.

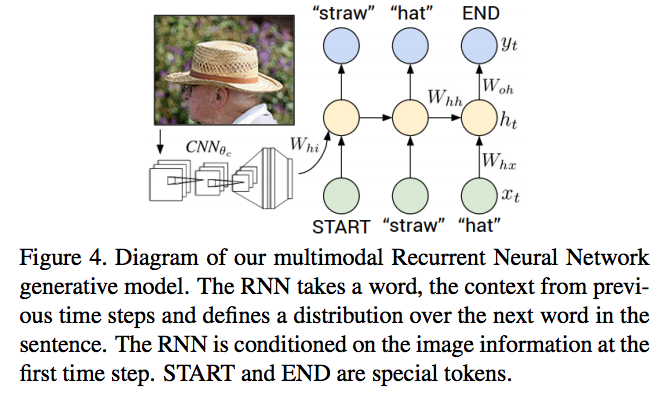

''' RNN Training '''

The RNN is trained to combine a word <math> x_t </math> and the previous context <math> h_{t-1} </math> to predict the next word <math> y_t </math>.

The RNN's predictions are conditioned on the image information <math> b_v </math> via bias interactions on the first step.

Training process:

[[File: RNNNNNNNN1.png]]

Set <math>h_0 = 0 </math>, set <math> x_1 </math> to a special START vector, and use the first word in the sequence as the target <math> y_1 </math>.

Set <math> x_2 </math> to the word vector of the first word and expect the network to predict the second word, and so on.

On the final step, when <math> x_T </math> represents the last word, the target label is set to a special END token.

The objective is to maximize the log probability assigned to the target labels.

''' RNN Test '''

To predict a sentence, first compute the image representation <math> b_v </math>.

Set <math>h_0 = 0 </math> and <math> x_1 </math> to the START vector, and compute the distribution over the first word <math> y_1 </math>.

Sample a word from the distribution, set its embedding vector as <math> x_2 </math>, and repeat this process until the END token is generated.

In practice, beam search can improve results.
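The test-time loop can be sketched as below. This is a simplified illustration rather than the authors' code: it picks the most likely word at each step (greedy decoding) instead of sampling or beam search, it assumes a ReLU activation, and every weight matrix and the four-word vocabulary are hypothetical toy values.

```python
import math

def matvec(W, x):
    return [sum(w * v for w, v in zip(row, x)) for row in W]

def softmax(z):
    m = max(z)
    e = [math.exp(v - m) for v in z]
    total = sum(e)
    return [v / total for v in e]

def generate(b_v, embed, W_hx, W_hh, b_h, W_oh, b_o, start, end, max_len=20):
    """Greedy decoding: feed each predicted word back in until END appears."""
    h = [0.0] * len(b_h)                  # h_0 = 0
    x, words = embed[start], []
    for t in range(1, max_len + 1):
        pre = [a + r + b for a, r, b in zip(matvec(W_hx, x), matvec(W_hh, h), b_h)]
        if t == 1:                        # image context enters only at the first step
            pre = [p + v for p, v in zip(pre, b_v)]
        h = [max(0.0, p) for p in pre]    # ReLU, assumed here as the activation
        probs = softmax([o + b for o, b in zip(matvec(W_oh, h), b_o)])
        word = max(range(len(probs)), key=probs.__getitem__)
        if word == end:
            break
        words.append(word)
        x = embed[word]
    return words

# Toy vocabulary: 0 = START, 1 = "dog", 2 = "runs", 3 = END (one-hot embeddings).
embed = [[1.0, 0, 0, 0], [0, 1.0, 0, 0], [0, 0, 1.0, 0], [0, 0, 0, 1.0]]
W_hx = [[0, 0, 0, 0], [1.0, 0, 0, 0], [0, 1.0, 0, 0], [0, 0, 1.0, 0]]
W_hh = [[0.0] * 4 for _ in range(4)]
W_oh = [[5.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
b_h, b_o = [0.0] * 4, [0.0] * 4

no_image_bias = generate([0.0] * 4, embed, W_hx, W_hh, b_h, W_oh, b_o, start=0, end=3)
with_image_bias = generate([0.0, 0.0, 2.0, 0.0], embed, W_hx, W_hh, b_h, W_oh, b_o, start=0, end=3)
```

With these toy weights a zero image vector yields the word indices for "dog runs", while a different <code>b_v</code> changes the first predicted word, showing how the image context steers the sentence even though it is injected only once.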

== Optimization ==

The alignment model is optimized using SGD with mini-batches of 100 image-sentence pairs and a momentum of 0.9.

The learning rate and the weight decay are cross-validated.

Dropout regularization is used in all layers except the recurrent layers, and gradients are clipped element-wise at 5.

The best results were achieved using RMSprop, an adaptive step size method that scales the update of each weight by a running average of its gradient norm.
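A minimal sketch of one RMSprop update with element-wise gradient clipping is shown below. Only the clipping threshold of 5 comes from the text; the learning rate, decay rate, and epsilon are assumed placeholder values, and the function name is illustrative.

```python
import math

def rmsprop_step(weights, grads, cache, lr=0.001, decay=0.99, eps=1e-8, clip=5.0):
    """Apply one RMSprop update in place and return (weights, cache).

    `cache` holds the running average of squared gradients for each weight.
    """
    for i, g in enumerate(grads):
        g = max(-clip, min(clip, g))                          # element-wise clipping
        cache[i] = decay * cache[i] + (1.0 - decay) * g * g   # running average of g^2
        weights[i] -= lr * g / (math.sqrt(cache[i]) + eps)    # scaled update
    return weights, cache

weights = [1.0, -2.0]
grads = [100.0, -0.5]     # the first gradient is clipped to 5.0
cache = [0.0, 0.0]
weights, cache = rmsprop_step(weights, grads, cache)
```

Although the two raw gradients differ by a factor of 200, both weights move by about the same amount (0.01) on this first step, which illustrates how the per-weight scaling evens out step sizes.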

== Experimental Results ==

Three datasets are used for testing: Flickr8K, Flickr30K, and MSCOCO. They contain 8,000, 31,000, and 123,000 images respectively, and each image is annotated with 5 sentences using Amazon Mechanical Turk. Note that all annotations are converted to lower-case and non-alphanumeric characters are removed.

===Image-Sentence Alignment Evaluation===

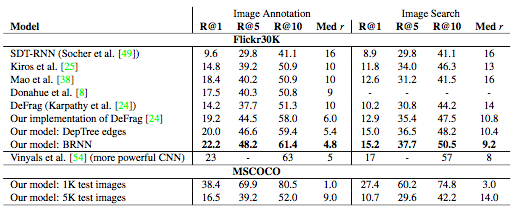

First, the authors assessed the quality of the inferred text and image alignment with a ranking experiment. The metrics used are the median rank of the closest correct result in the list (Med r) and the proportion of times a correct item is found among the top K results (Recall@K).

[[File:Multimodal RNN Results Table 1.png]]

Table 1. Image-Sentence ranking experiment results. R@K is Recall@K (high is good). Med r is the median rank (low is good).
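The two metrics can be computed from a matrix of similarity scores, as in the sketch below. It makes a simplifying assumption that each query has exactly one correct candidate (the one with the same index), whereas the datasets above have five sentences per image; the function name and toy scores are illustrative.

```python
import statistics

def ranking_metrics(scores, ks=(1, 5, 10)):
    """scores[q][c] is the similarity of query q and candidate c.

    The correct candidate for query q is assumed to be c == q.
    Returns (recall_at_k, median_rank).
    """
    ranks = []
    for q, row in enumerate(scores):
        order = sorted(range(len(row)), key=lambda c: row[c], reverse=True)
        ranks.append(order.index(q) + 1)   # 1-based rank of the correct item
    recall = {k: sum(r <= k for r in ranks) / len(ranks) for k in ks}
    return recall, statistics.median(ranks)

scores = [[0.9, 0.1, 0.2],
          [0.8, 0.3, 0.5],
          [0.1, 0.2, 0.7]]
recall, median_rank = ranking_metrics(scores, ks=(1, 2))
```

Here the correct item is ranked first for two of the three queries and third for the remaining one, so Recall@1 is 2/3 and the median rank is 1.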

<u>Main Findings </u> <br />

#Full model (BRNN) outperforms previous works
#*BRNN performs better than Socher et al. and Kiros et al., which were trained with a similar loss but used an RNN and an LSTM, respectively, to encode sentences
#*Compared to other work that uses AlexNets, BRNN shows consistent improvement
#Performance improvement attributed to simpler cost function
#*The only difference between “Our model: DepTree edges” and “Our implementation of DeFrag” is the simpler cost function described above
#Model considers small or relatively rare objects
#*Enabled by the model’s ability to learn region and word vector magnitudes from the inner product interaction

===Generated Descriptions: Fullframe Evaluation===

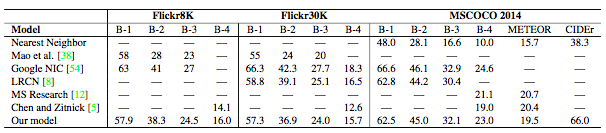

Second, the authors tested the model’s ability to describe images and regions. This is done by training the Multimodal RNN to generate descriptions of full images. Scores are based on how well the generated sentence matches a set of five reference annotations.

[[File:Multimodal RNN Results Table 2.png]]

Table 2. Evaluation of full image predictions on 1,000 test images. B-n is the BLEU score that uses up to n-grams (high is good).
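To give a feel for what B-n measures, the sketch below computes the clipped n-gram precision of a candidate sentence against several references. This is only the building block of BLEU: the full score also combines precisions across n-gram orders and applies a brevity penalty, both omitted here. The example sentences are made up.

```python
from collections import Counter

def ngrams(tokens, n):
    """Count all n-grams of length n in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))

def modified_precision(candidate, references, n):
    """Clipped n-gram precision of a candidate against several references."""
    cand = ngrams(candidate, n)
    if not cand:
        return 0.0
    max_ref = Counter()
    for ref in references:
        for gram, count in ngrams(ref, n).items():
            max_ref[gram] = max(max_ref[gram], count)
    # Each candidate n-gram is credited at most as often as it appears
    # in the single reference where it is most frequent.
    clipped = sum(min(count, max_ref[gram]) for gram, count in cand.items())
    return clipped / sum(cand.values())

candidate = "a dog runs on grass".split()
references = ["a dog runs across the grass".split(),
              "a brown dog is running".split()]
p1 = modified_precision(candidate, references, 1)
p2 = modified_precision(candidate, references, 2)
```

Four of the five candidate words appear in a reference, and two of the four candidate bigrams do, giving precisions of 0.8 and 0.5.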

<u>Main Findings </u> <br />

#Model performance declines with beam size
#*60% (vs. 25%) of generated sentences with beam size 7 (vs. beam size 1) can be found in the training data
#Multimodal RNN outperforms nearest neighbor retrieval baseline
#*“Our model” outperforms “Nearest Neighbor” on all metrics in Table 2
#*Compared to nearest neighbor, the RNN takes a fraction of a second to evaluate an image
#Multimodal RNN prioritizes simplicity and speed at a slight cost in performance
#*“Google NIC” is most similar to the RNN model, except that “Google NIC” uses an LSTM and GoogLeNet, which gives better performance
#*Both “LRCN” and “Mao et al.” use less powerful AlexNet features than the RNN model, hence their poorer overall performance in comparison

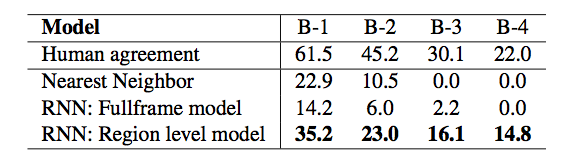

===Region Evaluation===

Last, the Multimodal RNN is trained on the inferred alignments between image regions and text snippets. Amazon Mechanical Turk is used to obtain snippets of text for specified regions of an image. Compared to full image captions, region annotations are more comprehensive.

[[File:Multimodal RNN Results Table 3.png]]

Table 3. BLEU score evaluation of image region annotations.

Note that the region model outperforms the full frame model. This is because the full frame model was trained on full images, so it does not perform as well on smaller image regions.

==Limitations==

Though the results are promising, there are several limitations of the Multimodal RNN model:

#Limited to generating a description for one input array of pixels at a fixed resolution
#Uses an additive bias interaction to incorporate image information, which is less expressive than more complicated multiplicative interactions
#Built on two separate models (regional versus fullframe); lacks the flexibility of going directly from one to the other

== Conclusions ==

Throughout the paper, the authors introduced the following concepts:

1. Generates natural-language descriptions of image regions based on '''weak labels'''.

Q: What labels exactly?


5. Embedding. (n.d.). Retrieved from https://en.wikipedia.org/wiki/Embedding

6. R. Girshick, J. Donahue, T. Darrell, and J. Malik. Rich feature hierarchies for accurate object detection and semantic segmentation. In CVPR, 2014.

Latest revision as of 10:58, 29 March 2018

This Page is Under Construction.

Introduction

People often say that a picture is worth a thousand words, but with the current tools available, NLP and image recognition algorithms are unable to extract nearly that much meaningful text from images. Contrarily, when a human glances at an image, they can easily identify numerous details and relationships within a detailed visual scene. Despite numerous advancements in image classification, object detection, and natural language processing, this particular task has proven challenging for existing visual recognition models. The majority of similar visual recognition models focus on labeling objects, or generating image descriptions based on limited vocabularies and fixed sentence models. The authors of this paper believed that the assumptions of these types of models were too restrictive, leading to an inability to generate rich descriptions that the human mind is capable of.

In this paper, the restrictions of hard-coded word templates and sentence structures are removed. Freed from these assumptions, Karpathy and Li create a model that can generate richer descriptions drawn from a wider vocabulary. Due to the lack of directly relevant training data for this task, the authors leveraged the large quantity of images with detailed captions available online. Although these images contained no explicit information about image region labels or relationships between regions, the authors could treat individual captions as weak labels in which contiguous segments of words correspond to some particular, but unknown, location in the image. By inferring the alignment between caption segments and image regions, a generative model is created that can produce detailed annotations as seen in Figure 1.

In particular, this paper introduces a neural network that infers latent text relationships with corresponding image region relationships. This is achieved through an optimization problem which associates probabilities related to a multimodal image embedding space to a carefully constructed objective function. Validation of this approach is conducted on image-sentence retrieval tasks.

Furthermore, a multimodal Recurrent Neural Network (RNN) structure is used to take an input image, and generate text descriptions along with their corresponding regions. Simulations show that the generated sentences outperform rigid, retrieval based models.

Relevant Background Information

Before analyzing Karpathy and Li's visual recognition model in more depth, we first discuss relevant background knowledge.

Regional Convolutional Neural Networks (RCNN)

Unlike standard CNNs, RCNNs aim to go beyond classification. Images often contain multiple notable regions, so it is important to reason about multiple overlapping objects and different backgrounds in order to identify region boundaries, differences, and relations to one another.

So, to summarize, R-CNN is just the following steps:

1) Generate a set of proposals for bounding boxes to detect relevant object regions.

2) Run bounded images through a pre-trained CNN and an SVM to see what object each box is.

3) Run the box through a linear regression model to output tighter coordinates for the box once the object has been classified.

Recurrent Neural Networks (RNN) (Recurrent Neural Network):

The main feature of a recurrent neural network is that, at each step, the decision made at the previous step also becomes an input to the next step. A recurrent neural network therefore has two sources of input: the original input and its own previous decision.

As a simple example, suppose you cook apple pie, burger, and chicken in that order. If it is sunny, then the next day you cook the same dish again; otherwise you cook the next dish. This is a simple recurrent neural network: the dish you cooked today is also an input to the next day's decision. (Details here.)

Mathematically speaking, the decision a recurrent net reached at time step t-1 affects the decision it will reach one moment later at time step t. In other words, the RNN holds past information in memory and uses it as an input to predict outputs in future time frames.

Bidirectional Recurrent Neural Networks (BRNN) (Bidirectional Recurrent Neural Network)

The motivation for using a BRNN comes from Schuster and Paliwal, who wanted to increase the amount of information available to the network. A regular recurrent neural network can only move in one direction: neurons can only carry past information into the current input, and future information cannot be reached from the current state. A BRNN, however, allows nodes to communicate both forwards and backwards in time. This allows the network to check whether its outputs are sensible by moving backwards in time over the output sequence. If the sequence violates the probabilistic reasoning of the network, then the network can potentially correct past outputs.

Markov Random Field (MRF)(Markov Random Field)

MRF interactions between neighboring words encourage an alignment to the same region.

The most important part of the MRF formulation is a hyperparameter [math]\displaystyle{ \beta }[/math] that controls the affinity towards longer word phrases. This parameter allows us to interpolate between single-word alignments (small [math]\displaystyle{ \beta }[/math]) and aligning the entire sentence to a single, maximally scoring region when [math]\displaystyle{ \beta }[/math] is large.

Literature Review

The problem discussed in the paper is an interdisciplinary problem that combines ideas from:

i) Dense image annotations

ii) Generating descriptions

iii) Grounding natural language in images

iv) Neural networks in visual and language domains

The problem forms the foundation of many applications such as: visual search, visual cognition chatbots, photo sharing in social media, visual aid for visually impaired, and surveillance monitoring for corporations.

I: Dense Image Annotations

"Matching words and pictures" (Barnard et al., 2003) and "Connecting modalities: Semisupervised segmentation and annotation of images using unaligned text corpora" (Socher et al., 2010) are two of many earlier works that explored multimodal correspondence between words and image segments. Unfortunately, these pioneering works primarily focused on the accuracy of labeled regions, rather than on the rich region-level descriptions desired by this paper. Other works build on holistic scene understanding, such as "Towards total scene understanding: Classification, annotation and segmentation in an automatic framework" (L.-J. Li et al., 2009), which quantifies scene relationships with a joint probability distribution over the scene image class C, objects O, regions R, image patches X, annotation tags T, and latent variables.

II: Generating Descriptions

Generating image descriptions and annotations has become a hot research topic, but many related works treat it as a retrieval problem rather than a generative one. Papers such as "Framing image description as a ranking task: data, models and evaluation metrics" (Hodosh et al., 2013) use an arbitrary similarity measure to directly transfer a caption from the most relevant training image to a new test image, while papers such as "Collective generation of natural image descriptions" (Kuznetsova et al., 2012) stitch together segments of training annotations. Other works, such as "From image annotation to image description" (Gupta et al., 2012) and "Midge: Generating image descriptions from computer vision detections" (Mitchell et al., 2012), use fixed sentence templates and grammar structure to describe images. All of the aforementioned methods are too restrictive in the variety of outputs they can produce.

Karpathy and Li's model instead builds on the paper "Multimodal neural language models" (Kiros et al., 2014). Whereas Kiros et al.'s log-bilinear model generates full sentence descriptions for images using a fixed window context, this paper creates an RNN model that conditions the probability distribution over the next word in a sentence on all previously generated words.

III: Grounding Natural Language in Images

The method this paper uses for associating words with the visual domain is inspired by "DeViSE: A Deep Visual-Semantic Embedding Model" (Frome et al., 2013). That paper first trains a model to learn semantically meaningful vector representations of words in parallel with a visual recognition neural network. The two networks are then combined by replacing the activation layer of the visual recognition neural network with a layer that predicts the semantic representations in the text domain. Adopting this idea, Karpathy and Li decompose images and sentences into fragments and infer the alignment between contiguous visual and textual fragments.

IV: Neural Networks in Visual and Language Domains

Over the past decade, neural networks have become an integral component of machine learning tasks in both the visual and language domains. Numerous classes of CNNs have been studied for image classification and object detection. In the language domain, RNNs have become popular for language modelling because of their sequential nature, often combined with pre-trained word representations such as Word2Vec.

The Model: Alignment

Overview

A picture usually contains multiple things. One of the first problems to address is therefore how to align the visual data with the language data. A sentence often refers to a particular location in the image, and the algorithm must be able to identify which location that is. The following section assumes that we are given an input dataset of images and sentence descriptions, and describes how the alignment model matches the two.

There are two main parts to this task:

i) A neural network that maps sentences and images into a "common embedding"

ii) An objective which learns this "common embedding" so that semantically similar concepts will occupy similar regions within the space

Part I: Representing Images and Sentences

Images

Given an image, a Region Convolutional Neural Network (Girshick et al., 2014) is used to detect the objects that appear in the image. Of all the detected locations, the top 19 are selected, along with the full image.

To create a common embedding, every image is represented by a set of h-dimensional vectors [math]\displaystyle{ \{v_i | i = 1 ... 20\} }[/math], where each [math]\displaystyle{ v_i }[/math] is computed from the pixels [math]\displaystyle{ I_b }[/math] inside the corresponding bounding box by a convolutional neural network as follows:

[math]\displaystyle{ v = W_m [CNN(I_b)] + b_m }[/math]

where [math]\displaystyle{ CNN(I_b) }[/math] transforms the image inside the bounding box into the 4096-dimensional activations of a fully connected layer. The matrix [math]\displaystyle{ W_m }[/math] has dimension [math]\displaystyle{ h \times 4096 }[/math], and [math]\displaystyle{ b_m }[/math] is an h-dimensional bias.
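As a rough illustration of this mapping, the sketch below applies an affine map to stand-in CNN activation vectors. The function name `embed_region`, the toy dimensions, and the random weights are assumptions made for illustration only; in the paper the activations are 4096-dimensional and the weights are learned.

```python
import random

def embed_region(cnn_features, W_m, b_m):
    """Map a CNN activation vector to the h-dimensional space: v = W_m * cnn + b_m."""
    return [sum(w * x for w, x in zip(row, cnn_features)) + b
            for row, b in zip(W_m, b_m)]

rng = random.Random(0)
feat_dim, h = 8, 3  # toy sizes (assumption); the paper uses 4096-dimensional activations

# Random stand-ins for the learned projection matrix and bias.
W_m = [[rng.gauss(0, 0.1) for _ in range(feat_dim)] for _ in range(h)]
b_m = [0.0] * h

# 20 regions per image: 19 detected boxes plus the full image.
# Each row stands in for CNN(I_b), which would come from a real network.
regions = [[rng.random() for _ in range(feat_dim)] for _ in range(20)]
image_vectors = [embed_region(r, W_m, b_m) for r in regions]

print(len(image_vectors), len(image_vectors[0]))
```

Each image therefore ends up as 20 vectors in the same space that the sentence encoder targets.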

Sentences

We want to embed the sentences into the same h-dimensional space as above. The paper explains how this can be done using a Bidirectional Recurrent Neural Network.

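Given a sequence of [math]\displaystyle{ N }[/math] words, each word is enriched by a variably-sized context around that word. Let [math]\displaystyle{ t = 1 ... N }[/math] represent the position of a word in the sentence. The BRNN computes:

[math]\displaystyle{ x_t = W_w I_t }[/math]

[math]\displaystyle{ e_t = f(W_e x_t + b_e) }[/math]

[math]\displaystyle{ h^f_t = f(e_t + W_f h^f_{t-1} + b_f) }[/math]

[math]\displaystyle{ h^b_t = f(e_t + W_b h^b_{t+1} + b_b) }[/math]

[math]\displaystyle{ s_t = f(W_d (h^f_t + h^b_t) + b_d) }[/math]

[math]\displaystyle{ I_t }[/math] is an indicator column vector that has a 1 at the index of the t-th word in the vocabulary.

[math]\displaystyle{ W_w }[/math] is a word embedding matrix that is initialized with 300-dimensional word2vec weights.

[math]\displaystyle{ h^f_t }[/math] is the hidden state of the forward (left-to-right) pass of the network.

[math]\displaystyle{ h^b_t }[/math] is the hidden state of the backward (right-to-left) pass of the network.

[math]\displaystyle{ s_t }[/math] is the final h-dimensional representation of the t-th word, which depends on the context on both sides of it.

Note that in the paper a ReLU ([math]\displaystyle{ f: x \rightarrow max(0, x) }[/math]) activation function is used.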
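The sentence encoder can be sketched as follows. This is a minimal pure-Python illustration, assuming the recurrences written in the code comments; the parameter dictionary, the helper names, and the toy dimensions are all hypothetical, and the weights are random rather than trained.

```python
import random

def relu(v):
    return [max(0.0, x) for x in v]

def matvec(M, v):
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def add(*vecs):
    return [sum(xs) for xs in zip(*vecs)]

def rand_matrix(rows, cols, rng):
    return [[rng.gauss(0, 0.1) for _ in range(cols)] for _ in range(rows)]

def brnn_embed(word_ids, p):
    """Return one h-dimensional vector s_t per word, using context from both sides."""
    h = len(p["b_e"])
    n = len(word_ids)
    # x_t = W_w I_t is a lookup; here W_w stores one embedding row per word.
    # e_t = f(W_e x_t + b_e)
    e = [relu(add(matvec(p["W_e"], p["W_w"][w]), p["b_e"])) for w in word_ids]
    hf, hb = [None] * n, [None] * n
    state = [0.0] * h
    for t in range(n):                # forward pass: h^f_t = f(e_t + W_f h^f_{t-1} + b_f)
        state = relu(add(e[t], matvec(p["W_f"], state), p["b_f"]))
        hf[t] = state
    state = [0.0] * h
    for t in reversed(range(n)):      # backward pass: h^b_t = f(e_t + W_b h^b_{t+1} + b_b)
        state = relu(add(e[t], matvec(p["W_b"], state), p["b_b"]))
        hb[t] = state
    # s_t = f(W_d (h^f_t + h^b_t) + b_d)
    return [relu(add(matvec(p["W_d"], add(hf[t], hb[t])), p["b_d"])) for t in range(n)]

rng = random.Random(0)
vocab, d, h = 10, 4, 3  # toy sizes (assumption); the paper uses 300-dimensional word vectors
params = {
    "W_w": rand_matrix(vocab, d, rng),
    "W_e": rand_matrix(h, d, rng), "b_e": [0.0] * h,
    "W_f": rand_matrix(h, h, rng), "b_f": [0.0] * h,
    "W_b": rand_matrix(h, h, rng), "b_b": [0.0] * h,
    "W_d": rand_matrix(h, h, rng), "b_d": [0.0] * h,
}
s = brnn_embed([1, 5, 2, 7], params)
print(len(s), len(s[0]))
```

The output has one vector per word, each in the same h-dimensional space as the image region vectors.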

Part II: Alignment Objective

Now that we have the images and sentences in the same embedding space, we need to define an objective such that a sentence-image pair will have a high score if they are relevant.

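The paper simplifies the objective function of earlier work on fragment embeddings (Karpathy et al., the DeFrag model that appears in the results below). The score between image [math]\displaystyle{ k }[/math] and sentence [math]\displaystyle{ l }[/math] is defined as

[math]\displaystyle{ S_{kl} = \sum_{t \in g_l} \max_{i \in g_k} v_i^T s_t }[/math]

where

[math]\displaystyle{ g_k }[/math] is the set of image fragments of image [math]\displaystyle{ k }[/math].

[math]\displaystyle{ g_l }[/math] is the set of sentence fragments of sentence [math]\displaystyle{ l }[/math].

Here every word [math]\displaystyle{ s_t }[/math] aligns to its single best image region.

If [math]\displaystyle{ k = l }[/math] denotes a corresponding image and sentence pair, then the final max-margin, structured loss is:

[math]\displaystyle{ C(\theta) = \sum_k \left[ \sum_l \max(0, S_{kl} - S_{kk} + 1) + \sum_l \max(0, S_{lk} - S_{kk} + 1) \right] }[/math]

This objective encourages correctly aligned image-sentence pairs to score higher than misaligned pairs, by a margin.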
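The score and loss can be illustrated with a small pure-Python sketch. It assumes the score sums, over the words of a sentence, the best inner product with any region of the image, and that the loss is a margin of 1 applied in both ranking directions. The function names and the toy 2-dimensional vectors are made up for illustration, and the terms with l = k are skipped here because they only add a constant.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def image_sentence_score(regions, words):
    """S_kl: every word vector is matched to its best-scoring region, then summed."""
    return sum(max(dot(v, s) for v in regions) for s in words)

def ranking_loss(images, sentences, margin=1.0):
    """Max-margin loss; images[k] and sentences[k] are assumed to be a true pair."""
    n = len(images)
    S = [[image_sentence_score(images[k], sentences[l]) for l in range(n)]
         for k in range(n)]
    loss = 0.0
    for k in range(n):
        for l in range(n):
            if l == k:
                continue  # the l = k term is a constant and is skipped here
            loss += max(0.0, S[k][l] - S[k][k] + margin)  # rank sentences for image k
            loss += max(0.0, S[l][k] - S[k][k] + margin)  # rank images for sentence k
    return loss

# Two toy images (two regions each) and two toy sentences.
images = [[[1, 0], [0, 1]], [[-1, 0], [0, -1]]]
sentences = [[[1, 0], [0, 2]], [[-1, 0]]]

print(image_sentence_score(images[0], sentences[0]))  # 3
print(ranking_loss(images, sentences))                # 0.0
print(ranking_loss(images, sentences[::-1]))          # 12.0
```

With the correct pairing every true pair beats the mismatched pairs by at least the margin, so the loss is zero. Swapping the sentences produces a large penalty.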

The Model: Recurrent Neural Network

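Input: Images and corresponding textual descriptions

Key challenge: designing a model that can predict a variable-sized sequence of words

Previous: define a probability distribution over the next word given the current word and the context

Extension: additionally condition the generative process on an image

During training, the model takes the image pixels [math]\displaystyle{ I }[/math] and a sequence of input vectors [math]\displaystyle{ (x_1, ..., x_T) }[/math], and computes the hidden states [math]\displaystyle{ (h_1, ..., h_T) }[/math] and outputs [math]\displaystyle{ (y_1, ..., y_T) }[/math] by iterating the following for [math]\displaystyle{ t = 1 ... T }[/math]:

[math]\displaystyle{ b_v = W_{hi} [CNN(I)] }[/math]

[math]\displaystyle{ h_t = f(W_{hx} x_t + W_{hh} h_{t-1} + b_h + \mathbb{1}(t = 1) \odot b_v) }[/math]

[math]\displaystyle{ y_t = softmax(W_{oh} h_t + b_o) }[/math]

Note that the image context vector [math]\displaystyle{ b_v }[/math] is only provided at the first iteration, which the authors found to work better than providing it at each time step.

It can also help to pass both [math]\displaystyle{ b_v }[/math] and [math]\displaystyle{ (W_{h x} x_t) }[/math] through the activation function.

A typical size of the hidden layer is 512 neurons.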
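A minimal sketch of this forward pass is given below. It assumes a ReLU hidden activation and a softmax output layer, as in the recurrence stated in the code comments. The parameter names mirror the symbols in the text, but the dictionary layout, the helper functions, and the toy sizes are assumptions, and the weights are random rather than trained.

```python
import math
import random

def matvec(M, v):
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def add(*vecs):
    return [sum(xs) for xs in zip(*vecs)]

def relu(v):
    return [max(0.0, x) for x in v]

def softmax(v):
    m = max(v)  # subtract the maximum for numerical stability
    exps = [math.exp(x - m) for x in v]
    total = sum(exps)
    return [e / total for e in exps]

def rnn_forward(xs, b_v, p):
    """Return one distribution over the vocabulary per time step.

    h_t = f(W_hx x_t + W_hh h_{t-1} + b_h + [t == 1] * b_v)
    y_t = softmax(W_oh h_t + b_o)
    """
    h = [0.0] * len(p["b_h"])
    outputs = []
    for t, x in enumerate(xs, start=1):
        pre = add(matvec(p["W_hx"], x), matvec(p["W_hh"], h), p["b_h"])
        if t == 1:
            pre = add(pre, b_v)  # image context enters as an extra bias, first step only
        h = relu(pre)
        outputs.append(softmax(add(matvec(p["W_oh"], h), p["b_o"])))
    return outputs

rng = random.Random(0)

def rand_matrix(rows, cols):
    return [[rng.gauss(0, 0.1) for _ in range(cols)] for _ in range(rows)]

d, hidden, vocab = 4, 5, 6  # toy sizes (assumption); the text mentions 512 hidden units
params = {
    "W_hx": rand_matrix(hidden, d),
    "W_hh": rand_matrix(hidden, hidden),
    "b_h": [0.0] * hidden,
    "W_oh": rand_matrix(vocab, hidden),
    "b_o": [0.0] * vocab,
}
xs = [[rng.random() for _ in range(d)] for _ in range(3)]  # stand-in word vectors
b_v = [rng.random() for _ in range(hidden)]                # stand-in for W_hi [CNN(I)]
ys = rnn_forward(xs, b_v, params)
print(len(ys), len(ys[0]))
```

After the first step, the image influences later predictions only through the hidden state.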

RNN Training

The RNN is trained to combine a word [math]\displaystyle{ x_t }[/math] and the previous context [math]\displaystyle{ h_{t-1} }[/math] to predict the next word [math]\displaystyle{ y_t }[/math].

We condition the RNN’s predictions on the image information [math]\displaystyle{ b_v }[/math] via bias interactions on the first step.

Training process:

Set [math]\displaystyle{ h_0 = 0 }[/math], [math]\displaystyle{ x_1 }[/math] to a special START vector, and the target [math]\displaystyle{ y_1 }[/math] to the first word in the sequence.

Set [math]\displaystyle{ x_2 }[/math] to the word vector of the first word and expect the network to predict the second word, etc.

On the final step, where [math]\displaystyle{ x_T }[/math] represents the last word, the target label is set to a special END token.

The training objective is to maximize the log probability assigned to the target labels.
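The way inputs and targets are shifted against each other can be shown with a short sketch. The token strings `<START>` and `<END>`, the function names, and the uniform toy probabilities are assumptions for illustration. A real model would supply the per-step probabilities.

```python
import math

START, END = "<START>", "<END>"

def make_training_pairs(words):
    """Inputs are START followed by the words; targets are the words followed by END."""
    inputs = [START] + words
    targets = words + [END]
    return list(zip(inputs, targets))

def negative_log_likelihood(step_probs, targets):
    """step_probs[t] maps each word to the probability the model assigns it at step t.

    Maximizing the log probability of the targets is the same as minimizing this value.
    """
    return -sum(math.log(p[w]) for p, w in zip(step_probs, targets))

pairs = make_training_pairs(["a", "dog", "runs"])
print(pairs)
# [('<START>', 'a'), ('a', 'dog'), ('dog', 'runs'), ('runs', '<END>')]

# A toy model that spreads probability uniformly over four tokens at every step.
uniform = {"a": 0.25, "dog": 0.25, "runs": 0.25, END: 0.25}
targets = [t for _, t in pairs]
print(negative_log_likelihood([uniform] * len(targets), targets))
```

A sentence of T - 1 words therefore yields T prediction steps, the last of which teaches the model when to stop.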

RNN Test

To predict a sentence, compute the image representation [math]\displaystyle{ b_v }[/math]

Set [math]\displaystyle{ h_0 = 0 }[/math], [math]\displaystyle{ x_1 }[/math] to the START vector and compute the distribution over the first word [math]\displaystyle{ y_1 }[/math]

Sample a word from the distribution, set its embedding vector as [math]\displaystyle{ x_2 }[/math], and repeat this process until the END token is generated.

In practice beam search can improve results.
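The difference between taking the single best word at each step and beam search can be seen on a toy example. In the sketch below, `toy_next_word_probs` is a hypothetical stand-in for the RNN's output distribution (a fixed lookup table keyed on the previous word), and the decoder keeps the `beam_size` most probable partial sentences at each step. It is an illustration of the general technique, not the authors' implementation.

```python
import math

END = "<END>"

def toy_next_word_probs(prefix):
    """Hypothetical stand-in for the RNN: a fixed table keyed by the last word."""
    table = {
        None: {"a": 0.6, "the": 0.4},
        "a": {"dog": 0.5, "cat": 0.5},
        "the": {"dog": 0.9, "cat": 0.1},
        "dog": {END: 1.0},
        "cat": {END: 1.0},
    }
    return table[prefix[-1] if prefix else None]

def beam_search(next_probs, beam_size, max_len=10):
    """Return the most probable finished sentence found, without the END token."""
    beams = [(0.0, [])]  # (log probability, words so far)
    finished = []
    for _ in range(max_len):
        candidates = []
        for logp, words in beams:
            for w, p in next_probs(words).items():
                cand = (logp + math.log(p), words + [w])
                if w == END:
                    finished.append(cand)
                else:
                    candidates.append(cand)
        if not candidates:
            break
        # Keep only the beam_size most probable partial sentences.
        beams = sorted(candidates, key=lambda c: c[0], reverse=True)[:beam_size]
    if not finished:
        return beams[0][1]  # nothing reached END within max_len
    best = max(finished, key=lambda c: c[0])
    return best[1][:-1]

print(beam_search(toy_next_word_probs, beam_size=1))  # ['a', 'dog']
print(beam_search(toy_next_word_probs, beam_size=2))  # ['the', 'dog']
```

With a beam of 1 the decoder commits to "a" (probability 0.6) and ends with a sentence of probability 0.3. A beam of 2 keeps "the" alive and finds "the dog" with probability 0.36.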

Optimization

SGD with mini-batches of 100 image-sentence pairs and momentum of 0.9 is used to optimize the alignment model.

The learning rate and the weight decay are cross-validated.

Dropout regularization is used in all layers except the recurrent layers, and gradients are clipped element-wise at 5.

For the generative RNN, the best results were achieved using RMSprop, an adaptive step size method that scales the update of each weight by a running average of its gradient norm.
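Element-wise gradient clipping and an RMSprop update can be sketched in a few lines. The learning rate, decay, and epsilon values below are common defaults chosen for illustration and are assumptions, since the summary does not state the values used in the paper.

```python
import math

def clip_elementwise(grads, limit=5.0):
    """Clamp every gradient component to the range [-limit, limit]."""
    return [max(-limit, min(limit, g)) for g in grads]

def rmsprop_step(weights, grads, cache, lr=0.001, decay=0.9, eps=1e-8):
    """One RMSprop update; cache holds the running average of squared gradients."""
    new_cache = [decay * c + (1 - decay) * g * g for c, g in zip(cache, grads)]
    new_weights = [w - lr * g / (math.sqrt(c) + eps)
                   for w, g, c in zip(weights, grads, new_cache)]
    return new_weights, new_cache

print(clip_elementwise([10, -7, 3]))  # [5.0, -5.0, 3]

# Two weights whose gradients differ by a factor of 100.
weights, cache = [1.0, 1.0], [0.0, 0.0]
weights, cache = rmsprop_step(weights, [2.0, 200.0], cache, lr=0.1)
print(weights)
```

Because each step is divided by the running gradient magnitude, both weights move by almost the same amount despite the very different gradient scales.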

Experimental Results

Three datasets are used for testing: Flickr8K, Flickr30K, MSCOCO. They contain 8,000, 31,000, and 123,000 images respectively and each image is annotated with 5 sentences using Amazon Mechanical Turk. Note that all annotations are converted to lower-case and non-alphanumeric characters are removed.

Image-Sentence Alignment Evaluation

First, the authors assessed the quality of inferred text and image alignment by ranking. Metrics used are median rank of the closest result in the list (median r) and the proportion of times a correct item is found among the top K results (Recall@K).
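Both metrics can be computed from the rank of the correct item for each query. The sketch below assumes a simplified setting with exactly one correct candidate per query (in the datasets above each image has five sentences, so this is a simplification). The score matrix and function names are made up for illustration.

```python
from statistics import median

def rank_of_correct(scores, correct_index):
    """1-based rank of the correct item when candidates are sorted by descending score."""
    target = scores[correct_index]
    return 1 + sum(1 for s in scores if s > target)

def recall_at_k(ranks, k):
    """Fraction of queries whose correct item appears among the top k results."""
    return sum(1 for r in ranks if r <= k) / len(ranks)

# Toy score matrix: row q holds the scores of query q against 4 candidates.
# Candidate q is assumed to be the correct match for query q.
scores = [
    [0.9, 0.1, 0.3, 0.2],  # correct item ranked 1st
    [0.8, 0.5, 0.6, 0.1],  # correct item ranked 3rd
    [0.2, 0.4, 0.7, 0.1],  # correct item ranked 1st
    [0.9, 0.8, 0.7, 0.6],  # correct item ranked 4th
]
ranks = [rank_of_correct(row, q) for q, row in enumerate(scores)]

print(ranks)                  # [1, 3, 1, 4]
print(recall_at_k(ranks, 1))  # 0.5
print(recall_at_k(ranks, 3))  # 0.75
print(median(ranks))          # 2.0
```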

Table 1. Image-Sentence ranking experiment results. R@K is Recall@K (high is good). Med r is the median rank (low is good).

Main Findings

- Full model (BRNN) outperforms previous works

- BRNN performs better than Socher et al. and Kiros et al., which trained with a similar loss but used a Recursive Neural Network and an LSTM, respectively, to encode sentences

- Compared to other work that uses AlexNets, BRNN shows consistent improvement

- Performance improvement attributed to simpler cost function

- The only difference between “Our model: DepTree edges” and “Our implementation of DeFrag” is the simpler cost function described above

- Model considers small or relatively rare objects

- Enabled by model’s ability to learn region and word vector magnitudes from inner product interaction

Generated Descriptions: Fullframe Evaluation

Second, the authors tested the model’s ability to describe image and region. This is done by training the Multimodal RNN to generate descriptions of full images. Scores are given based on how well the generated sentence matches a set of five reference annotations.

Table 2. Evaluation of full image predictions on 1,000 test images. B-n is the BLEU score that used up to n-grams (high is good).
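BLEU builds on clipped (modified) n-gram precision between a generated sentence and its reference annotations. The sketch below computes only that component, as an illustration. A full BLEU score also combines several n-gram orders and applies a brevity penalty, which are omitted here. The example sentences are made up.

```python
from collections import Counter

def ngrams(tokens, n):
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]

def modified_precision(candidate, references, n):
    """Clipped n-gram precision: each candidate n-gram is credited at most as many
    times as it occurs in the reference where it is most frequent."""
    cand_counts = Counter(ngrams(candidate, n))
    if not cand_counts:
        return 0.0
    max_ref = Counter()
    for ref in references:
        for gram, count in Counter(ngrams(ref, n)).items():
            max_ref[gram] = max(max_ref[gram], count)
    clipped = sum(min(count, max_ref[gram]) for gram, count in cand_counts.items())
    return clipped / sum(cand_counts.values())

candidate = "a dog runs on the grass".split()
references = [
    "a dog is running on the grass".split(),
    "the dog runs through a field".split(),
]
print(modified_precision(candidate, references, 1))  # 1.0
print(modified_precision(candidate, references, 2))  # 0.8
```

Every candidate word appears in some reference, but the bigram "runs on" does not, so bigram precision drops to 4 out of 5.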

Main Findings

- Model performance declines as the beam size is reduced

- 60% (vs. 25%) of generated sentences with beam size 7 (vs. beam size 1) can be found in the training data

- Multimodal RNN outperforms nearest neighbor retrieval baseline

- “Our model” outperforms “Nearest Neighbor” on all metrics in Table 2

- Compared to nearest neighbor retrieval, the RNN takes only a fraction of a second to evaluate an image

- Multimodal RNN prioritizes simplicity and speed at a slight cost in performance

- “Google NIC” is most similar to the RNN model, except that it uses an LSTM and GoogLeNet, which gives it better performance

- Both “LRCN” and “Mao et al.” use less powerful AlexNet features than the RNN model, hence overall poorer performance in comparison

Region Evaluation

Last, the Multimodal RNN is trained on the inferred alignments between image regions and snippets of text. For evaluation, Amazon Mechanical Turk is used to obtain snippets of text for specified regions of an image. Compared to full image captions, region annotations are more comprehensive.

Table 3. BLEU score evaluation of image region annotations.

Note that the region model outperforms the full frame model. This is because the full frame model was trained on full images, so it does not perform as well on smaller image regions.

Limitations

Though the results are promising, there are several limitations with the Multimodal RNN model:

- Limited to generating a description of one input array of pixels at a fixed resolution

- Uses additive bias interactions to take in image information, which are less expressive than more complicated multiplicative interactions

- Built on two separate models – regional versus fullframe; lack of flexibility going directly from one to another

Conclusions

Throughout the paper, the authors introduce the following ideas:

1. A model that generates natural-language descriptions of image regions based on weak labels.

Q: What labels exactly?

A: A dataset of images and sentences.

2. A model with few hard-coded assumptions, and a novel ranking approach through a common, multi-modal embedding.

The main steps of the approach are:

Align visual and language data:

---Detect image regions using an RCNN, with a pre-trained CNN.

---Embed the words of the sentence describing the image into the same embedding space, using a BRNN.

MRNN for generating descriptions:

---Main challenge: the output sequence has variable size. Solution: use an RNN, which predicts the next word based on the current word and the context so far.

Experiments:

---Image-sentence alignment ranking: ranks high among all compared methods, with a top-5 median rank.

---Full-frame evaluation: slightly outperforms many of the existing models.

---Region evaluation: still significantly below the human level, but surpasses most of the existing models.

Steps to take afterwards

Limited input properties (e.g. one input array of pixels, and images need to have a fixed resolution). This is a very big issue, since images currently vary widely in size and resolution.

The RNN receives the image information only through additive bias interactions, which are known to be less expressive. That being said, the interaction the model chose is case sensitive, which is hard to achieve in real life.

In this model, the image-sentence dataset is divided into two parts, descriptive sentences and visual images. Is it possible to skip the division and solve the problem with a single model?

Reference

1. Karpathy, A., & Li, F. (2015, April 14). Deep Visual-Semantic Alignments for Generating Image Descriptions. Retrieved March 19, 2018, from https://arxiv.org/pdf/1412.2306v2.pdf

2. A Beginner’s Guide to Recurrent Networks and LSTMs. (n.d.). Retrieved from https://deeplearning4j.org/lstm.html#recurrent

3. Recurrent neural network. (n.d.). Retrieved from https://en.wikipedia.org/wiki/Recurrent_neural_network

4. Bidirectional recurrent neural networks. (n.d.). Retrieved from https://en.wikipedia.org/wiki/Bidirectional_recurrent_neural_networks

5. Embedding. (n.d.). Retrieved from https://en.wikipedia.org/wiki/Embedding

6. Girshick, R., Donahue, J., Darrell, T., & Malik, J. (2014). Rich feature hierarchies for accurate object detection and semantic segmentation. In CVPR.