stat946w18/MaskRNN: Instance Level Video Object Segmentation

No edit summary |

|||

| (13 intermediate revisions by 7 users not shown) | |||

| Line 1: | Line 1: | ||

== Introduction == | == Introduction == | ||

Deep Learning has produced state of the art results in many computer vision tasks like image classification, object localization, object detection, object segmentation, semantic segmentation and instance level video object segmentation. Image classification classify the image based on the prominent objects. Object localization is the task of finding objects’ location in the frame. Object Segmentation task involves providing a pixel map which represents the pixel wise location of the objects in the image. Semantic segmentation task attempts at segmenting the image into meaningful parts. Instance level video object segmentation is the task of consistent object segmentation in video sequences. | Deep Learning has produced state of the art results in many computer vision tasks like image classification, object localization, object detection, object segmentation, semantic segmentation and instance level video object segmentation. Image classification classify the image based on the prominent objects. Object localization is the task of finding objects’ location in the frame. Object Segmentation task involves providing a pixel map which represents the pixel wise location of the objects in the image. Semantic segmentation task attempts at segmenting the image into meaningful parts. Instance level video object segmentation is the task of consistent object segmentation in video sequences. Deforming shapes, fast movements, and occlusion from multiple objects, are just some of the significant challenges in instance level video object segmentation. | ||

There are 2 different types of video object segmentation | There are 2 different types of video object segmentation, Unsupervised and Semi-supervised. | ||

* In unsupervised video object segmentation, the task is to find the salient objects and track the main objects in the video. | |||

* In a semi-supervised setting, the ground truth mask of the salient objects is provided for the first frame. The task is thus simplified to only track the objects required. | |||

In this paper, the authors look at an unsupervised video object segmentation technique. | |||

== Background Papers == | == Background Papers == | ||

| Line 23: | Line 27: | ||

The model of the MaskTrack network is similar to a modular VGG-16 and is referred to as MaskTrack ConvNet in the paper. The network is trained offline on saliency segmentation datasets: ECSSD, MSRA 10K, SOD and PASCAL-S. The input mask for the binary segmentation mask channel is generated via non-rigid deformation and affine transformation of the ground truth segmentation mask. Similar data-augmentation techniques are also used during online training. Just like OSVOS, MaskTrack uses the first frame as ground truth (with augmented images) to fine-tune the network to improve prediction score for the particular video sequence. | The model of the MaskTrack network is similar to a modular VGG-16 and is referred to as MaskTrack ConvNet in the paper. The network is trained offline on saliency segmentation datasets: ECSSD, MSRA 10K, SOD and PASCAL-S. The input mask for the binary segmentation mask channel is generated via non-rigid deformation and affine transformation of the ground truth segmentation mask. Similar data-augmentation techniques are also used during online training. Just like OSVOS, MaskTrack uses the first frame as ground truth (with augmented images) to fine-tune the network to improve prediction score for the particular video sequence. | ||

A parallel ConvNet network is used to generate predicted segment mask based on the optical flow magnitude. The optical flow between 2 frames is calculated using the EpicFlow algorithm. The output of the two networks is combined using averaging operation to generate the final predicted segmented mask. | A parallel ConvNet network is used to generate a predicted segment mask based on the optical flow magnitude. The optical flow between 2 frames is calculated using the EpicFlow algorithm. The output of the two networks is combined using an averaging operation to generate the final predicted segmented mask. | ||

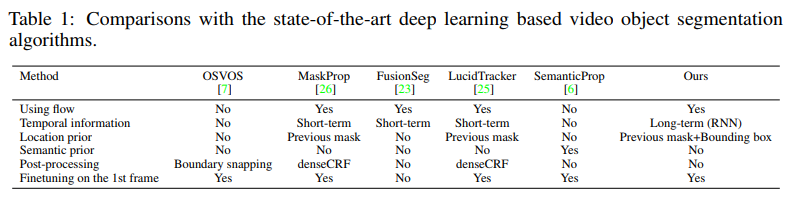

Table 1 gives a summary comparison of the different state of the art algorithms. The noteworthy information included in this table is that the technique presented in this paper is the only one which takes into account long-term temporal information. This is accomplished with a recurrent neural net. Furthermore, the bounding box is also estimated instead of just a segmentation mask. The authors claim that this allows the incorporation of a location prior from the tracked object. | Table 1 below gives a summary comparison of the different state of the art algorithms. The noteworthy information included in this table is that the technique presented in this paper is the only one which takes into account long-term temporal information. This is accomplished with a recurrent neural net. Furthermore, the bounding box is also estimated instead of just a segmentation mask. The authors claim that this allows the incorporation of a location prior from the tracked object. | ||

[[File:Paper19-SegmentationComp.png]] | [[File:Paper19-SegmentationComp.png]] | ||

| Line 49: | Line 53: | ||

[[File:MaskRNNDeepNet.jpg | 850px]] | [[File:MaskRNNDeepNet.jpg | 850px]] | ||

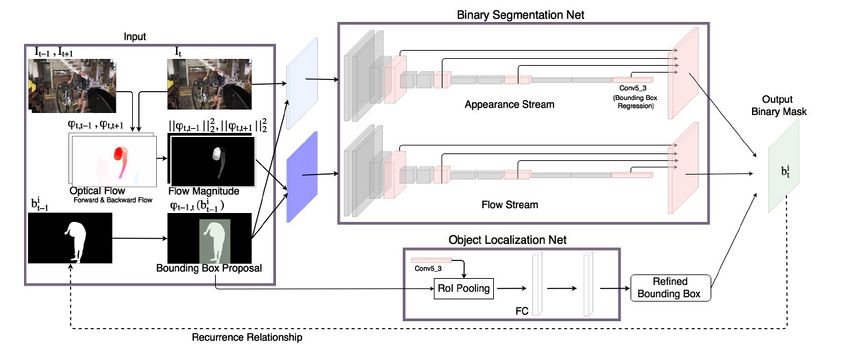

The above picture shows a single deep net employed for predicting the segment mask for one salient object in the video frame. The network consists of 2 networks: binary segmentation network and object localization network. The binary segmentation network is split into two streams: appearance and flow stream. The input of the appearance stream is the RGB frame at time t and the wrapped prediction of the binary segmentation mask from time <math>t-1</math>. The wrapping function uses the optical flow between frame <math>t-1</math> and frame <math>t</math> to generate a new binary segmentation mask for frame <math>t</math>. The input to the flow stream is the concatenation of the optical flow magnitude between frames <math>t-1</math> to <math>t</math> and frames <math>t</math> to <math>t+1</math> and the wrapped prediction of the segmentation mask from frame <math>t-1</math>. The magnitude of the optical flow is replicated into an RBG format before feeding it to the flow stream. The network architecture closely resembles a VGG-16 network without the pooling or fully connected layers at the end. The fully connected layers are replaced with convolutional and bilinear interpolation upsampling layers which are then linearly combined to form a feature representation that is the same size | The above picture shows a single deep net employed for predicting the segment mask for one salient object in the video frame. The network consists of 2 networks: binary segmentation network and object localization network. The binary segmentation network is split into two streams: appearance and flow stream. The input of the appearance stream is the RGB frame at time t and the wrapped prediction of the binary segmentation mask from time <math>t-1</math>. The wrapping function uses the optical flow between frame <math>t-1</math> and frame <math>t</math> to generate a new binary segmentation mask for frame <math>t</math>. 
The input to the flow stream is the concatenation of the optical flow magnitude between frames <math>t-1</math> to <math>t</math> and frames <math>t</math> to <math>t+1</math> and the wrapped prediction of the segmentation mask from frame <math>t-1</math>. The magnitude of the optical flow is replicated into an RBG format before feeding it to the flow stream. The network architecture closely resembles a VGG-16 network without the pooling or fully connected layers at the end. The fully connected layers are replaced with convolutional and bilinear interpolation upsampling layers which are then linearly combined to form a feature representation that is the same size as the input image. This feature representation is then used to generate a binary segment mask. This technique is borrowed from the Fully Convolutional Network mentioned above. The output of the flow stream and the appearance stream is linearly combined and sigmoid function is applied to the result to generate a binary mask for ith object. All parts of the network are fully differentiable and thus it can be fully trained in every pass. | ||

=== MaskRNN: Object Localization Network: === | === MaskRNN: Object Localization Network: === | ||

| Line 70: | Line 74: | ||

There are 3 different techniques for performance analysis for Video Object Segmentation techniques: | There are 3 different techniques for performance analysis for Video Object Segmentation techniques: | ||

1. Region Similarity (Jaccard Index): Region similarity or Intersection-over-union is used to capture precision of the area covered by the prediction segmentation mask compared to the ground truth segmentation mask. | 1. Region Similarity (Jaccard Index): Region similarity or Intersection-over-union is used to capture precision of the area covered by the prediction segmentation mask compared to the ground truth segmentation mask. It calculates the average across all frames of the dataset. This is particularly challenging for small sized foreground objects. | ||

\begin{equation} | |||

IoU = \frac{|M \cap G|}{|M| + |G| - |M \cap G|} | |||

\label{equation:Jaccard} | |||

\end{equation} | |||

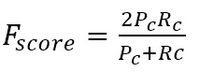

2. Contour Accuracy (F-score): This metric measures the accuracy in the boundary of the predicted segment mask and the ground truth segment mask | 2. Contour Accuracy (F-score): This metric measures the accuracy in the boundary of the predicted segment mask and the ground truth segment mask, by calculating the the precision and the recall of the two sets of points on the contours of the ground truth segment and the output segment via a bipartite graph matching. It is a measure of accurate delineation of the foreground objects. | ||

[[File:Fscore.jpg | 200px]] | [[File:Fscore.jpg | 200px|center]] | ||

3. Temporal Stability : This estimates the degree of deformation needed to transform the segmentation masks from one frame to the next and is measured by the dissimilarity of the set of points on the contours of the segmentation between two adjacent frames. | 3. Temporal Stability : This estimates the degree of deformation needed to transform the segmentation masks from one frame to the next and is measured by the dissimilarity of the set of points on the contours of the segmentation between two adjacent frames. | ||

| Line 86: | Line 93: | ||

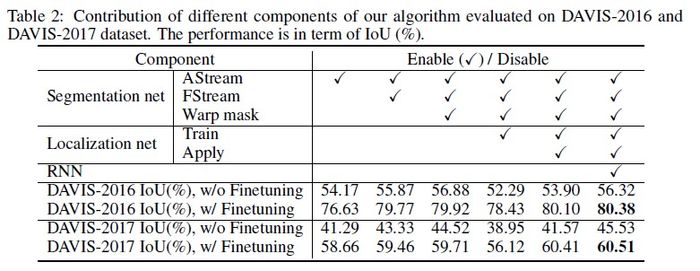

The ablation study summarized how the different components contributed to the algorithm evaluated on DAVIS-2016 and DAVIS-2017 datasets. | The ablation study summarized how the different components contributed to the algorithm evaluated on DAVIS-2016 and DAVIS-2017 datasets. | ||

[[File:MaskRNNTable2.jpg | 700px]] | [[File:MaskRNNTable2.jpg | 700px|center]] | ||

The above table presents the contribution of each component of the network to the final prediction score. | The above table presents the contribution of each component of the network to the final prediction score. Online fine-tuning improves the performance by a large margin, as the network becomes adjusted to the appearance of the specific object being tracked. Addition of RNN/Localization Net and FStream all seem to positively affect the performance of the deep net. The FStream provides information on motion boundaries which help in videos with cluttered backgrounds, the RNN provides more consistent segmentation masks over time. The localization net has a more ambiguous effect on the network; adding the bounding box regression loss decreases the performance of the segmentation net but applying the bounding box to restrict the segmentation mask improves the results over those achieved by only using the segmentation net. In other words, the localization net should only be used in conjunction with the segmentation net while the segmentation net can be used by itself. | ||

=== Quantitative Evaluation === | === Quantitative Evaluation === | ||

| Line 114: | Line 121: | ||

== Conclusion == | == Conclusion == | ||

In this paper a novel approach to instance level video object segmentation task is presented which performs better than current state of the art. The long-term recurrence relationship is learnt using an RNN. The object localization network is added to improve accuracy of the system. Using online fine-tuning the network is adjusted to predict better for the current video sequence. | In this paper a novel approach to instance level video object segmentation task is presented which performs better than current state of the art. The long-term recurrence relationship is learnt using an RNN. The object localization network is added to improve accuracy of the system. Due to the recurrent component and the combination of segmentation and localization nets, the approach takes advantage of the long-term temporal information and the location prior to improve the results. Using online fine-tuning the network is adjusted to predict better for the current video sequence. | ||

== Critique == | == Critique == | ||

The paper provides a technique to track multiple objects in a video. The novelty is to add back-propagation through time to improve the tracking accuracy and using a localization network to remove any outliers in the segmented binary mask. However, the network | The paper provides a technique to track multiple objects in a video. The novelty is to add back-propagation through time to improve the tracking accuracy and using a localization network to remove any outliers in the segmented binary mask. However, the network architecture it too large and isn't able to run in real-time. There are N deep-Nets for N objects and each deep-Net contains 2 parallel VGG-16 convolutional networks. | ||

== Implementation == | == Implementation == | ||

Latest revision as of 00:15, 21 April 2018

Introduction

Deep learning has produced state-of-the-art results in many computer vision tasks, including image classification, object localization, object detection, object segmentation, semantic segmentation and instance level video object segmentation. Image classification assigns a label to an image based on its prominent objects. Object localization is the task of finding the locations of objects in the frame. Object segmentation produces a pixel map that gives the pixel-wise location of the objects in the image. Semantic segmentation divides the image into meaningful parts. Instance level video object segmentation is the task of segmenting each object consistently across the frames of a video sequence. Deforming shapes, fast movements, and occlusion from multiple objects are just some of the significant challenges in instance level video object segmentation.

There are two types of video object segmentation: unsupervised and semi-supervised.

- In unsupervised video object segmentation, the task is to find the salient objects and track the main objects in the video.

- In a semi-supervised setting, the ground truth mask of the salient objects is provided for the first frame. The task is thus simplified to only track the objects required.

In this paper, the authors look at a semi-supervised video object segmentation technique: the ground truth mask of the first frame is provided, and the method fine-tunes on it.

Background Papers

Video object segmentation has been performed using spatio-temporal graphs [5, 6, 7, 8] and deep learning. The graph-based methods construct 3D spatio-temporal graphs in order to model the inter- and intra-frame relationships of pixels or superpixels in a video. Hence they are computationally slower than deep learning methods and are unable to run in real time. There are 2 main deep learning techniques for semi-supervised video object segmentation: One Shot Video Object Segmentation (OSVOS) and Learning Video Object Segmentation from Static Images (MaskTrack). The following is a brief description of the new techniques introduced by these papers for the semi-supervised video object segmentation task.

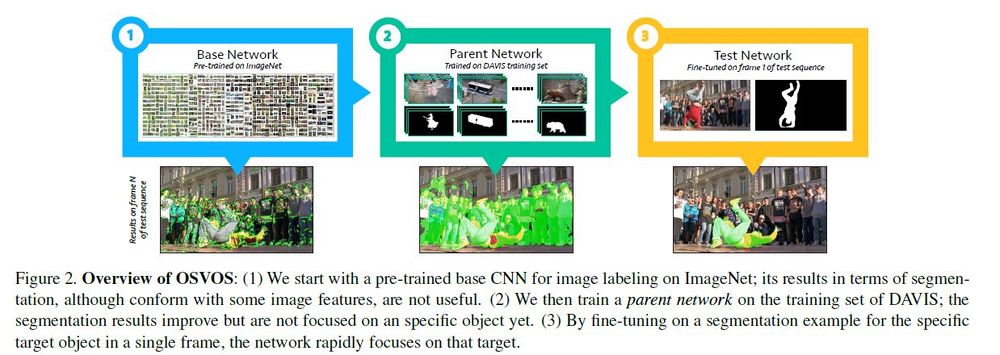

OSVOS (One-Shot Video Object Segmentation)

This paper introduces the technique of segmenting objects frame by frame, without any temporal information from the previous frames of the video. The paper uses a VGG-16 network with weights pre-trained on an image classification task. This network is then converted into a fully convolutional network (FCN) by removing the fully connected dense layers at the end and adding convolution layers that generate a segment mask of the input. This network is then trained on the DAVIS 2016 dataset.

During testing, the trained VGG-16 FCN is fine-tuned on the first frame of the video and its ground truth. Because this is a semi-supervised case, the segmented mask (ground truth) for the first frame is available. The first frame data is augmented by zooming, rotating and flipping the first frame together with the associated segment mask.

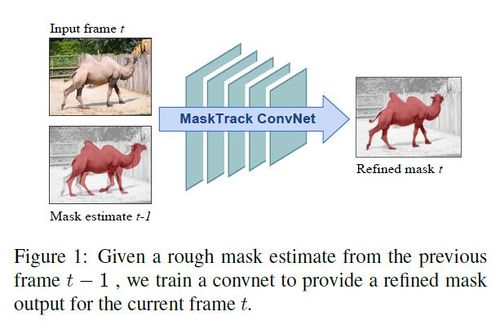

MaskTrack (Learning Video Object Segmentation from Static Images)

MaskTrack takes the output of the previous frame to improve its predictions and to generate the segmentation mask for the next frame. Thus the input to the network is 4 channels wide (3 RGB channels from the frame at time [math]\displaystyle{ t }[/math] plus one binary segmentation mask from frame [math]\displaystyle{ t-1 }[/math]). The output of the network is the binary segmentation mask for the frame at time [math]\displaystyle{ t }[/math]. By taking the previous binary segmentation mask as input (referred to as guided object segmentation in the paper), the network is able to use some temporal information from the previous frame to improve its segmentation mask prediction for the next frame.

The model of the MaskTrack network is similar to a modular VGG-16 and is referred to as MaskTrack ConvNet in the paper. The network is trained offline on saliency segmentation datasets: ECSSD, MSRA 10K, SOD and PASCAL-S. The input mask for the binary segmentation mask channel is generated via non-rigid deformation and affine transformation of the ground truth segmentation mask. Similar data-augmentation techniques are also used during online training. Just like OSVOS, MaskTrack uses the first frame as ground truth (with augmented images) to fine-tune the network to improve prediction score for the particular video sequence.

A parallel ConvNet network is used to generate a predicted segment mask based on the optical flow magnitude. The optical flow between 2 frames is calculated using the EpicFlow algorithm. The output of the two networks is combined using an averaging operation to generate the final predicted segmented mask.

Table 1 below gives a summary comparison of the different state of the art algorithms. The noteworthy information included in this table is that the technique presented in this paper is the only one which takes into account long-term temporal information. This is accomplished with a recurrent neural net. Furthermore, the bounding box is also estimated instead of just a segmentation mask. The authors claim that this allows the incorporation of a location prior from the tracked object.

Dataset

The three major datasets used in this paper are DAVIS-2016, DAVIS-2017 and Segtrack v2. The DAVIS-2016 dataset provides video sequences with only one segment mask for all salient objects. DAVIS-2017 improves the ground truth data by providing a segmentation mask for each salient object as a separately colored segment mask. Segtrack v2 also provides multiple segmentation masks, one for each salient object in the video sequence. These datasets try to recreate real-life scenarios such as occlusions, low resolution videos, background clutter, motion blur and fast motion.

MaskRNN: Introduction

Most techniques mentioned above don’t work directly on instance level segmentation of the objects through the video sequence. They segment each frame as an image and use additional information from the preceding frame (mask propagation and optical flow) to make predictions for the current frame. To address the instance level segmentation problem, MaskRNN proposes a framework where the salient objects are tracked and segmented by capturing the temporal information in the video sequence using a recurrent neural network.

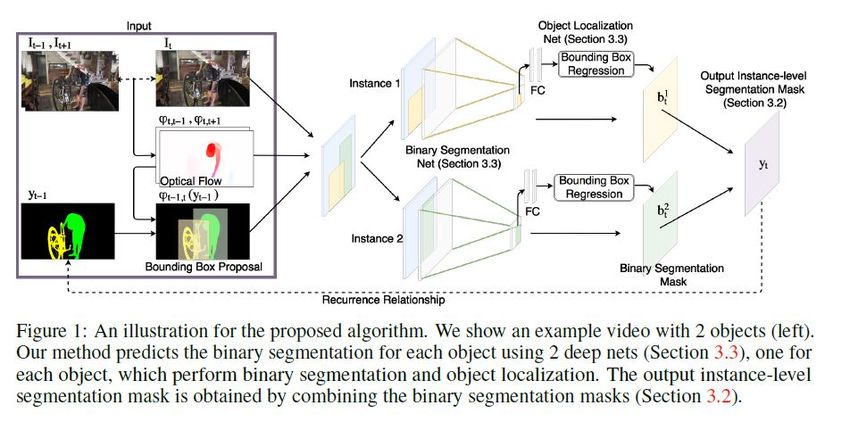

MaskRNN: Overview

A video sequence [math]\displaystyle{ I = \{I_1, I_2, \ldots, I_T\} }[/math] of [math]\displaystyle{ T }[/math] frames is given as input to the network, where the video sequence contains [math]\displaystyle{ N }[/math] salient objects. The ground truth for the first frame [math]\displaystyle{ y_1^* }[/math] is also provided for the [math]\displaystyle{ N }[/math] salient objects. In this paper, the problem is formulated as a time dependency problem: using a recurrent neural network, the prediction for the previous frame influences the prediction for the next frame. The approach also computes the optical flow between frames and uses it as input to the neural network. (Optical flow is the apparent motion of objects between two consecutive frames, in the form of a 2D vector field representing the displacement of brightness patterns at each pixel; it is apparent because it depends on the relative motion between the observer and the scene.) The optical flow is also used to align the predicted mask of the previous frame with the current frame. “The warped prediction, the optical flow itself, and the appearance of the current frame are then used as input for [math]\displaystyle{ N }[/math] deep nets, one for each of the [math]\displaystyle{ N }[/math] objects.”[1 - MaskRNN] Each deep net is made of an object localization network and a binary segmentation network. The binary segmentation network is used to generate the segmentation mask for an object. The object localization network is used to remove outliers from the predictions. The final prediction of the segmentation mask is generated by merging the predictions of the 2 networks. For [math]\displaystyle{ N }[/math] objects, there are [math]\displaystyle{ N }[/math] deep nets, each of which predicts the mask for one salient object. The predictions are then merged into a single prediction using an [math]\displaystyle{ \text{argmax} }[/math] operation at test time.
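The alignment step described above can be illustrated with a minimal sketch. It is not the authors' implementation: the function name `warp_mask`, the backward-flow convention (each flow vector points from a pixel in frame t to its source in frame t-1) and the nearest-neighbour sampling are all assumptions made for this example.

```python
from typing import List, Tuple

Mask = List[List[float]]
Flow = List[List[Tuple[float, float]]]  # flow[y][x] = (dx, dy)


def warp_mask(prev_mask: Mask, flow: Flow) -> Mask:
    """Warp the mask predicted for frame t-1 into frame t.

    Assumption of this sketch: flow[y][x] points from pixel (x, y) in
    frame t to the location it came from in frame t-1 (backward flow).
    Sampling is nearest-neighbour; sources outside the image give 0.
    """
    height, width = len(prev_mask), len(prev_mask[0])
    warped = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            dx, dy = flow[y][x]
            src_x, src_y = int(round(x + dx)), int(round(y + dy))
            if 0 <= src_x < width and 0 <= src_y < height:
                warped[y][x] = prev_mask[src_y][src_x]
    return warped


# An object at column 1 moves one pixel to the right between frames.
prev_mask = [[0, 1, 0], [0, 0, 0]]
flow = [[(-1.0, 0.0)] * 3 for _ in range(2)]
warped = warp_mask(prev_mask, flow)
```

A real system would more likely use bilinear sampling on dense arrays; the loop form is kept here only to make the per-pixel lookup explicit.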

MaskRNN: Multiple Instance Level Segmentation

Image segmentation requires producing a pixel level segmentation mask, and with several objects this can become a multi-class problem. Instead, using the approach from [2- Mask R-CNN], the problem is converted into multiple binary segmentation problems. A segmentation mask is predicted separately for each salient object, so each prediction is a binary segmentation problem. The binary segments are combined using an [math]\displaystyle{ \text{argmax} }[/math] operation where each pixel is assigned to the object with the largest predicted probability.
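As a rough illustration of the merge step, the sketch below combines N per-object probability maps into a single label map. The function name `merge_instance_masks` and the 0.5 background threshold are assumptions of this example; the text above only specifies the argmax over objects.

```python
from typing import List

Mask = List[List[float]]  # per-pixel foreground probability for one object


def merge_instance_masks(probs: List[Mask], threshold: float = 0.5) -> List[List[int]]:
    """Combine N per-object probability maps into one label map.

    Label 0 is background and label k (1-based) is the k-th object.
    The background threshold is an assumption of this sketch.
    """
    height, width = len(probs[0]), len(probs[0][0])
    labels = [[0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            # argmax over the N objects at this pixel
            best = max(range(len(probs)), key=lambda k: probs[k][y][x])
            if probs[best][y][x] >= threshold:
                labels[y][x] = best + 1
    return labels


object_1 = [[0.9, 0.2], [0.6, 0.1]]
object_2 = [[0.3, 0.8], [0.7, 0.4]]
labels = merge_instance_masks([object_1, object_2])
```

In the bottom-left pixel both objects exceed the threshold, and the argmax assigns the pixel to the second object because 0.7 > 0.6.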

MaskRNN: Binary Segmentation Network

The above picture shows a single deep net employed for predicting the segment mask for one salient object in the video frame. The deep net consists of 2 networks: a binary segmentation network and an object localization network. The binary segmentation network is split into two streams: an appearance stream and a flow stream. The input of the appearance stream is the RGB frame at time [math]\displaystyle{ t }[/math] and the warped prediction of the binary segmentation mask from time [math]\displaystyle{ t-1 }[/math]. The warping function uses the optical flow between frame [math]\displaystyle{ t-1 }[/math] and frame [math]\displaystyle{ t }[/math] to generate a new binary segmentation mask for frame [math]\displaystyle{ t }[/math]. The input to the flow stream is the concatenation of the optical flow magnitude between frames [math]\displaystyle{ t-1 }[/math] to [math]\displaystyle{ t }[/math] and frames [math]\displaystyle{ t }[/math] to [math]\displaystyle{ t+1 }[/math] and the warped prediction of the segmentation mask from frame [math]\displaystyle{ t-1 }[/math]. The magnitude of the optical flow is replicated into an RGB format before feeding it to the flow stream. The network architecture closely resembles a VGG-16 network without the pooling or fully connected layers at the end. The fully connected layers are replaced with convolutional and bilinear interpolation upsampling layers which are then linearly combined to form a feature representation that is the same size as the input image. This feature representation is then used to generate a binary segment mask. This technique is borrowed from the Fully Convolutional Network mentioned above. The outputs of the flow stream and the appearance stream are linearly combined, and a sigmoid function is applied to the result to generate a binary mask for the [math]\displaystyle{ i }[/math]-th object. All parts of the network are fully differentiable, so the network can be trained end to end.
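The final fusion of the two streams can be sketched as a per-pixel linear combination followed by a sigmoid. The names `fuse_streams`, `w_app`, `w_flow` and `bias`, the fixed weight values, and the 0.5 threshold are hypothetical; in the real model the combination weights are learned.

```python
import math
from typing import List, Tuple

Grid = List[List[float]]


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def fuse_streams(appearance: Grid, flow: Grid,
                 w_app: float = 1.0, w_flow: float = 1.0, bias: float = 0.0,
                 threshold: float = 0.5) -> Tuple[Grid, List[List[int]]]:
    """Linearly combine two per-pixel score maps and squash with a sigmoid.

    Returns the probability map and its thresholded binary mask.
    The weights are placeholders for parameters that would be learned.
    """
    probs = [[sigmoid(w_app * a + w_flow * f + bias)
              for a, f in zip(row_a, row_f)]
             for row_a, row_f in zip(appearance, flow)]
    mask = [[1 if p >= threshold else 0 for p in row] for row in probs]
    return probs, mask


probs, mask = fuse_streams([[2.0, -3.0]], [[1.0, 1.0]])
```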

MaskRNN: Object Localization Network:

The object localization network generates a bounding box for the salient object in the frame. It uses a technique similar to the Fast R-CNN method of object localization, in which region of interest (RoI) pooling is performed on the features of the region proposals (i.e. the bounding box proposals here) and the result is passed through fully connected layers to perform regression. MaskRNN uses the convolutional feature output of the appearance stream as the input to the RoI-pooling layer to generate the predicted bounding box. This bounding box is enlarged by a factor of 1.25 and combined with the output of the binary segmentation network. Only the part of the segment mask inside the bounding box is used for prediction, and the pixels outside of the bounding box are set to zero. In other words, a pixel is classified as foreground only if it is both predicted to be foreground by the binary segmentation net and within the enlarged estimated bounding box from the object localization net.
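The way the bounding box restricts the segmentation can be sketched as follows. The helper names, the `(x_min, y_min, x_max, y_max)` box layout, and the choice to scale the box about its centre are assumptions of this example; the text only states the enlargement factor of 1.25.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in pixels


def enlarge_box(box: Box, factor: float = 1.25) -> Box:
    """Scale a box about its centre by `factor` (centre scaling is assumed)."""
    x0, y0, x1, y1 = box
    cx, cy = (x0 + x1) / 2.0, (y0 + y1) / 2.0
    half_w = (x1 - x0) * factor / 2.0
    half_h = (y1 - y0) * factor / 2.0
    return (cx - half_w, cy - half_h, cx + half_w, cy + half_h)


def restrict_to_box(mask: List[List[int]], box: Box) -> List[List[int]]:
    """Keep mask values inside the box and set everything outside to 0."""
    x0, y0, x1, y1 = box
    return [[value if (x0 <= x <= x1 and y0 <= y <= y1) else 0
             for x, value in enumerate(row)]
            for y, row in enumerate(mask)]


enlarged = enlarge_box((2.0, 2.0, 6.0, 6.0))
all_foreground = [[1] * 4 for _ in range(4)]
restricted = restrict_to_box(all_foreground, (1.0, 1.0, 2.0, 2.0))
```

Foreground pixels that the segmentation net predicts far from the object are discarded this way, which is how the localization net removes outliers.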

Training and Finetuning

For training the network depicted in Figure 1, backpropagation through time is used in order to preserve the recurrence relationship connecting the frames of the video sequence. Predictive performance is further improved by following the usual procedure for the semi-supervised setting of video object segmentation: the network is fine-tuned on the ground truth segmentation mask of the first frame. In this way, the network is further optimized for the given video sequence.

MaskRNN: Implementation Details

Offline Training

The deep net is first trained offline on a set of static images. The ground truth is randomly perturbed locally to generate the imperfect mask from frame [math]\displaystyle{ t-1 }[/math]. Two different networks are trained offline separately for the DAVIS-2016 and DAVIS-2017 datasets for a fair evaluation on both datasets. After both the object localization net and the binary segmentation network have been trained, the temporal information in the network is used to further improve the segmented prediction results. Because of GPU memory constraints, the RNN can only backpropagate the gradients through 7 frames when learning long-term temporal information.

For optical flow, a pre-trained FlowNet 2.0 is used to compute the optical flow between frames. (A FlowNet (Dosovitskiy 2015) is a deep neural network trained to predict optical flow. The simplest form of FlowNet has an architecture consisting of two parts. The first part accepts as input the two images between which the optical flow is to be computed, and applies a sequence of convolution and max-pooling operations, as in a standard convolutional neural network. In the second part, repeated up-convolution operations are applied, increasing the dimensions of the feature maps. Besides the output of the previous up-convolution, each up-convolution is also fed the output of the corresponding down-convolution from the first part of the network. Thus part of the architecture resembles that of a U-net (Ronneberger, 2015). The output of the network is the predicted optical flow.)

Online Finetuning

The deep nets (without the RNN) are then fine-tuned at test time by training the networks online on the ground truth of the first frame and some augmentations of the first frame data. Online training runs for 200 iterations with the learning rate set to [math]\displaystyle{ 10^{-5} }[/math] and gradually decayed over time. Data augmentation techniques similar to those in offline training, namely random resizing, rotating, cropping and flipping, are applied. The RNN is not employed during online fine-tuning since only a single frame of training data is available.

MaskRNN: Experimental Results

Evaluation Metrics

There are 3 different techniques for performance analysis for Video Object Segmentation techniques:

1. Region Similarity (Jaccard Index): Region similarity, or intersection-over-union (IoU), measures how well the area covered by the predicted segmentation mask [math]\displaystyle{ M }[/math] agrees with the ground truth segmentation mask [math]\displaystyle{ G }[/math]. The score is averaged across all frames of the dataset. This metric is particularly challenging for small foreground objects.

\begin{equation} IoU = \frac{|M \cap G|}{|M| + |G| - |M \cap G|} \label{equation:Jaccard} \end{equation}

2. Contour Accuracy (F-score): This metric measures how accurately the boundary of the predicted segment mask matches the boundary of the ground truth segment mask. It computes the precision and the recall between the two sets of points on the contours of the ground truth segment and the output segment via a bipartite graph matching. It is a measure of accurate delineation of the foreground objects.

3. Temporal Stability : This estimates the degree of deformation needed to transform the segmentation masks from one frame to the next and is measured by the dissimilarity of the set of points on the contours of the segmentation between two adjacent frames.

Region similarity measures the true segmented area in the prediction, while Contour Accuracy measures the accuracy of the contours/segmented mask boundary.
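The first two metrics can be sketched in a few lines. The function names are chosen for this example, the convention of returning 1.0 when both masks are empty is an assumption, and `f_measure` takes the contour precision and recall as given rather than performing the bipartite contour matching itself.

```python
from typing import List

BinaryMask = List[List[int]]


def jaccard_index(pred: BinaryMask, gt: BinaryMask) -> float:
    """IoU = |M ∩ G| / (|M| + |G| - |M ∩ G|) for two binary masks."""
    intersection = sum(1 for row_p, row_g in zip(pred, gt)
                       for p, g in zip(row_p, row_g) if p and g)
    area_pred = sum(1 for row in pred for p in row if p)
    area_gt = sum(1 for row in gt for g in row if g)
    union = area_pred + area_gt - intersection
    # Both masks empty: treated as a perfect match (an assumed convention).
    return intersection / union if union else 1.0


def f_measure(precision: float, recall: float) -> float:
    """F-score as the harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0
    return 2.0 * precision * recall / (precision + recall)


iou = jaccard_index([[1, 1], [0, 0]], [[1, 0], [1, 0]])
f_score = f_measure(0.5, 1.0)
```

In the example the two masks share one pixel and cover three pixels in total, so the IoU is 1/3; this also shows why small objects are hard, since a single misplaced pixel changes the ratio substantially.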

Ablation Study

The ablation study summarized how the different components contributed to the algorithm evaluated on DAVIS-2016 and DAVIS-2017 datasets.

The above table presents the contribution of each component of the network to the final prediction score. Online fine-tuning improves the performance by a large margin, as the network becomes adjusted to the appearance of the specific object being tracked. Adding the RNN, the localization net and the flow stream (FStream) each seems to positively affect the performance of the deep net. The FStream provides information on motion boundaries, which helps in videos with cluttered backgrounds, while the RNN provides more consistent segmentation masks over time. The localization net has a more ambiguous effect on the network: adding the bounding box regression loss decreases the performance of the segmentation net, but applying the bounding box to restrict the segmentation mask improves the results over those achieved by only using the segmentation net. In other words, the localization net should only be used in conjunction with the segmentation net, while the segmentation net can be used by itself.

Quantitative Evaluation

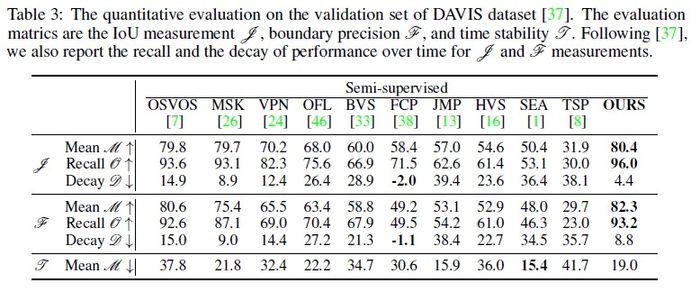

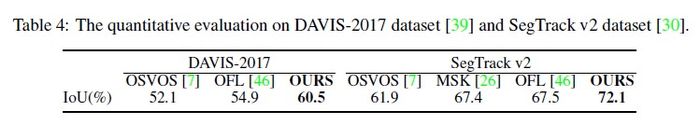

The authors use DAVIS-2016, DAVIS-2017 and Segtrack v2 to compare the performance of the proposed approach to other methods based on foreground-background video object segmentation and multiple instance-level video object segmentation.

The above table shows the results for contour accuracy mean and region similarity. The MaskRNN method seems to outperform all previously proposed methods. The authors attribute the performance gain to employing a recurrent neural network for learning the recurrence relationship and to using an object localization network to improve prediction results.

The following table shows the improvements in the state of the art achieved by MaskRNN on the DAVIS-2017 and the SegTrack v2 dataset.

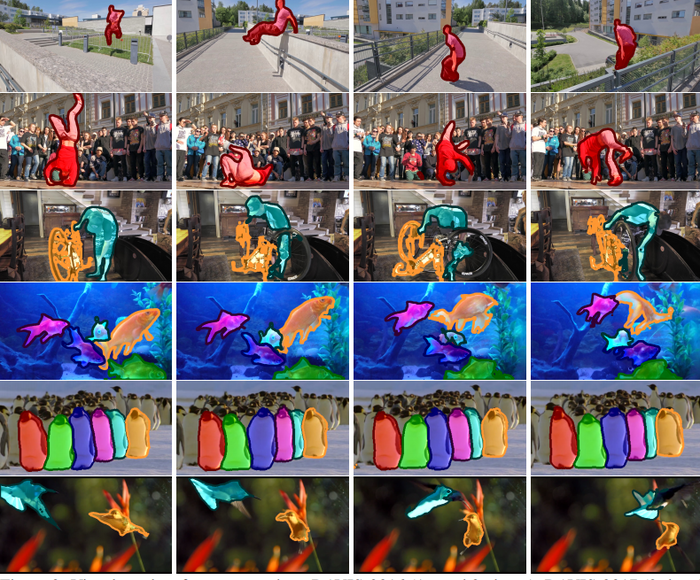

Qualitative Evaluation

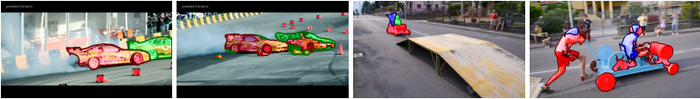

The authors showed example qualitative results from the DAVIS and Segtrack datasets.

Below are some success cases of object segmentation under complex motion, cluttered background, and/or multiple object occlusion.

Below are a few failure cases. The authors explain two reasons for failure: a) when similar objects of interest are contained in the frame (left two images), and b) when there are large variations in scale and viewpoint (right two images).

Conclusion

This paper presents a novel approach to the instance level video object segmentation task that performs better than the current state of the art. The long-term recurrence relationship is learnt using an RNN. The object localization network is added to improve the accuracy of the system. Due to the recurrent component and the combination of segmentation and localization nets, the approach takes advantage of the long-term temporal information and the location prior to improve the results. Using online fine-tuning, the network is adjusted to predict better for the current video sequence.

Critique

The paper provides a technique to track multiple objects in a video. The novelty lies in adding back-propagation through time to improve the tracking accuracy and in using a localization network to remove outliers in the segmented binary mask. However, the network architecture is too large and isn't able to run in real time. There are N deep nets for N objects, and each deep net contains 2 parallel VGG-16 convolutional networks.

Implementation

The implementation of this paper was produced as part of the NIPS Paper Implementation Challenge. This implementation can be found at the following open source project: https://github.com/philferriere/tfvos.

References

- Dosovitskiy, Alexey, et al. "Flownet: Learning optical flow with convolutional networks." Proceedings of the IEEE International Conference on Computer Vision. 2015.

- Hu, Y., Huang, J., & Schwing, A. "MaskRNN: Instance level video object segmentation". Conference on Neural Information Processing Systems (NIPS). 2017

- Ferriere, P. (n.d.). Semi-Supervised Video Object Segmentation (VOS) with Tensorflow. Retrieved March 20, 2018, from https://github.com/philferriere/tfvos

- Ronneberger, Olaf, Philipp Fischer, and Thomas Brox. "U-net: Convolutional networks for biomedical image segmentation." International Conference on Medical image computing and computer-assisted intervention. Springer, Cham, 2015.

- Lee, Yong Jae, Jaechul Kim, and Kristen Grauman. "Key-segments for video object segmentation." Computer Vision (ICCV), 2011 IEEE International Conference on. IEEE, 2011.

- Grundmann, Matthias, et al. "Efficient hierarchical graph-based video segmentation." Computer Vision and Pattern Recognition (CVPR), 2010 IEEE Conference on. IEEE, 2010.

- Li, Fuxin, et al. "Video segmentation by tracking many figure-ground segments." Computer Vision (ICCV), 2013 IEEE International Conference on. IEEE, 2013.

- Tsai, David, et al. "Motion coherent tracking using multi-label MRF optimization." International journal of computer vision 100.2 (2012): 190-202.