stat441w18/mastering-chess-and-shogi-self-play: Difference between revisions

| (31 intermediate revisions by one other user not shown) | |||

| Line 15: | Line 15: | ||

7. Stoev, Victor | 7. Stoev, Victor | ||

8. Zhang, | 8. Zhang, Hui | ||

= Introduction = | = Introduction = | ||

| Line 34: | Line 34: | ||

=== Theory of Reinforcement Learning === | === Theory of Reinforcement Learning === | ||

Reinforcement learning is a paradigm where an agent performs some action and receives feedback based on a reward or penalty. The agent then uses this feedback to improve its actions in order to maximize rewards. In machine learning, reinforcement learning algorithms are well suited to strategic games such as chess, shogi and Go because given a game state, the expected reward of an action typically depends on the next several plays. To win consistently, the player has to sacrifice short term outcomes to optimize long term rewards, requiring them to think several moves ahead. An important concept in reinforcement learning is the exploration/exploitation tradeoff. In order for the agent to learn how to act optimally in a given state or board position, the player must be able to exploit reward-maximizing actions and also explore untested ones. | |||

Benefits | |||

- Compared to supervised learning you don’t need a data set | |||

- No human bias | |||

Issues | |||

- Easy to get caught in local mins if not enough exploration | |||

- Rewards can be very far away, AI may not learn due to discount rate on reward or not reaching the reward during play | |||

=== Theory of Alpha-Beta Search === | === Theory of Alpha-Beta Search === | ||

Alpha-beta search trees use minima/maxima logic to eliminate subsequent nodes. Values of alpha and beta are assigned for each node, representing maxima and minima, respectively. Alpha-beta search trees work particularly well with two-opponent games such as Chess, Go, and Shogi, as alternating layers reflect changes in turns. | |||

The set-up for this tree is as follows: | |||

1) The two opponents are assigned the “maxima” and “minima” roles. The “maxima” opponent is assigned a value of alpha. The “minima” opponent is assigned a beta. | |||

2) Layers within the tree alternate in function between maximum and minimum. A given node will take either the max or min of connected nodes in the next lowest layer (dependent on the function). | |||

3) Maximum/minimum are evaluated left to right within a layer. If a node’s value fails to improve on the current maximum/minimum for the entire layer, then it is eliminated and the path will not be pursued | |||

4) Values of alpha and beta proceed up the tree until reaching the root. Before computing, alpha is set to negative infinity and beta is set to infinity.; | |||

[[File:abs.jpeg | frame | center |Fig 1. ]] | |||

=== Theory of Monte Carlo Search Trees === | === Theory of Monte Carlo Search Trees === | ||

Monte carlo tree search is a heuristic search algorithm; in a basic form it simulates a game using random plays, recording each play and only keeping the winning outcomes and their information. The algorithm receives a board position as an input and performs many multi-phase playouts on the given state. This is based on the idea that for a given board, if statistics are available for all states that are one move away, one can consider it to be a multi-armed bandit problem. | |||

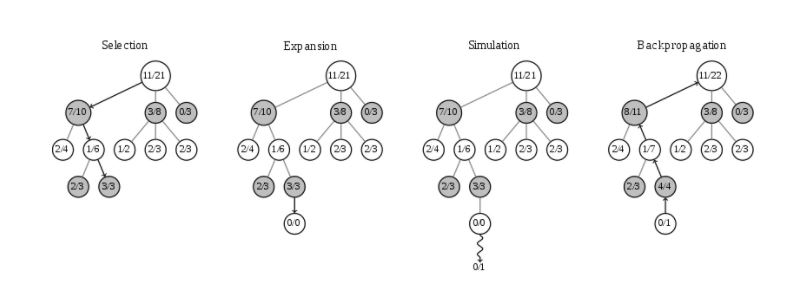

As the tree iterates, it learns and plays more optimally based on a search tree that is recursively expanded through random sampling of the search space based on playouts. The procedure consists of four major steps: selection, expansion, simulation and backpropagation. | |||

- Selection is the process of deciding the action a to take from a particular state, starting from the root node. The process is guided by a predetermined allocation strategy, which is based on the sequence of past plays. | |||

- From each child node, simulate a random game known as a rollout, which is represented as an edge in the tree diagram. Each node represents the win probability in playouts for the respective player. | |||

- Eventually as the the algorithm traverses to the leaf nodes of the tree where there is no past information for possible states so the allocation algorithm can no longer be applied. | |||

- Expansion occurs once the algorithm traverses to the leaf nodes where the allocation algorithm no longer applies. The next potential mixed state is estimated through simulation. | |||

- The tree expands by adding child nodes, the initial chess board after action a, until the tree reaches the outcome nodes of either a win/draw/loss (leafs) or till a certain limiting depth restriction. | |||

- Using results from the rollouts, the weight of the nodes and the evaluation function are updated. This is the back propagation step in the process. | |||

- The parameters <math> | |||

\theta </math> of the network is randomly initiated and then trained with reinforcement learning through self-play. | |||

The algorithm returns a probability vector <math> | |||

\pi </math> which represents the distribution of the moves with respect to the root state. The probability vector can be proportional or greedy depending on the choice of strategy of traversion, whether a light playout is desired or a more computationally heavy one. The move <math> | |||

a </math>, is chosen with low visit count through the traversion, high move probability and high value according to the neural network. | |||

[[File:mcst.jpeg | frame | center |Fig 2. ]] | |||

=== Differences Between Game Algorithms === | === Differences Between Game Algorithms === | ||

| Line 49: | Line 102: | ||

! AlphaGo !! AlphaGo Zero | ! AlphaGo !! AlphaGo Zero | ||

|- | |- | ||

| | | Supervised learning using human expert positions || Tabula rasa (learns from scratch with self-play reinforcement learning) | ||

|- | |||

| Additional input features || Only uses the board position as an input | |||

|- | |||

| Separate policy and value networks || Only one neural network | |||

|- | |- | ||

| | | Tree search using Monte Carlo rollouts || Simpler tree search relying on the single neural network to judge positions and moves | ||

|} | |} | ||

| Line 67: | Line 124: | ||

|- | |- | ||

| Hyper-parameter of the search is tuned with Bayesian optimisation|| Same hyper-parameters are reused for all games without tuning (except for adding noise) | | Hyper-parameter of the search is tuned with Bayesian optimisation|| Same hyper-parameters are reused for all games without tuning (except for adding noise) | ||

|} | |} | ||

| Line 74: | Line 129: | ||

=== Training the Model === | === Training the Model === | ||

AlphaZero generalizes the algorithm used in similar previous programs such as AlphaGo Zero using the differences above to accommodate more games other than Go. One generalization is that AlphaZero only knows the basic rules of the game and just takes the board position as an input. As the paper says, “the program trains using a deep neural network <math> | |||

(p, v) = f_θ(s) </math> with parameters θ” which are randomly initialized. It then takes "the board position s as an input and output[s] a vector of move probabilities p with components <math> | |||

p_a = Pr(a|s) </math>for each action a, and a scalar value v estimating the expected outcome z from position s, <math> | |||

v ≈ E[z|s] </math>” (Silver, 2017). | |||

AlphaZero uses a Monte-Carlo tree search algorithm discussed above along with the predicted values of p from the neural network to select each move for both players. Firstly it will take in the current game state (along with the eight previous game states and which player’s turn it is), and output the approximate state value v and the probability vector of the next move from the policy p. Each child node is initialized with the number of visits N = 0, the value W = 0, and p from the neural network. Selection and backup continue as explained previously, selecting child nodes with the highest probabilistic upper confidence tree score <math> | |||

U_i = W_i/N_i +cP_i*sqrt(ln(N_p)/(1+N_i)) </math> until we hit a leaf, whose value is set to the predicted value from the neural network and then expanded. During backup, each parent’s N is incremented, and its value changed according to the child nodes’ predicted values and the policy vector. The tree search algorithm is given a certain amount of time to run, after which the move selected will be that has the best value. At each move, the values of v, p, and the improved policy predictions <math> | |||

\pi_i=N_i^{(1/ \tau)} </math> are stored. | |||

When the game ends, and the outcome z is computed, the parameters θ are updated using gradient descent to minimize the loss function <math> | |||

l = (z-v)^2-\pi^T log p + c||θ||^2 </math>. This loss function combines the mean squared error on the valuation function v (real-valued prediction) and cross entropy losses on action policy vector p (classification on the action), and includes an <math> | |||

L^2 </math> regularization term <math> | |||

c||θ||^2 </math>. | |||

The network structure used was not explicitly specified in the paper, but it is likely that AlphaZero utilized a residual convolution neural network as was implemented in AlphaGo Zero. | |||

=== Scoring and Evaluation === | === Scoring and Evaluation === | ||

Performance of AlphaZero was done against its predecessor algorithms: Stockfish, Elmo, AlphaGo, and AlphaGo Zero. It was found that AlphaZero was able to search 80,000 positions/second when applied in games of chess compared to the gold-standard Stockfish which required 70 million positions/second. In the game of Shogi, AlphaZero required 40,000 positions/second while Emlo required 35 million positions/second. These results are indicative of a much more efficient algorithm. | |||

The Elo scale was also used as a measurement of the success of the algorithm. These ratings were computed from a 1 second per move tournament between AlphaZero and one of the other algorithms. | |||

= Criticisms = | = Criticisms = | ||

The training times are given using resources such as 5,000 first-generation TPUs to generate self-play games and 64 second-generation TPUs to train the neural networks. But what is the result for someone who wants to reproduce this but does not have the necessary supercomputer? 5,000 TPU at 4 hours is 2 years processing time with only one TPU. Speaking of reproducibility, since none of the code is available publicly it is not possible to reproduce the results or test it. | |||

Second, the AlphaZero was being run on a higher processing power than Stockfish, making a non-level playing field. The time allocation was also a minute per move when most human vs engine games have a total allotted time and each player can choose how much time to spend on each move. | |||

Next, the paper does not discuss how they ended up with the form they did. Did they try out multiple methods and this was the best of the bunch? Are there potentially better ways to set up states and neural nets? | |||

Finally, there’s no mention of how this can be scaled to a Markov Decision Process situation where the outcomes are partially random and partially based on the decisions of a player. The paper struggles to identify its own shortfalls/areas where they could be better. | |||

= Sources = | = Sources = | ||

Collados, Jose Camacho. “Is AlphaZero Really a Scientific Breakthrough in AI?” Medium, 11 Dec. 2017,medium.com/@josecamachocollados/is-alphazero-really-a-scientific-breakthrough-in-ai-bf66ae1c84f2. | |||

Ha, Karel. “Mastering Chess and Shogi by Self-Play with a General Reinforcement Learning Algorithm.” AI Seminar. AI Seminar, 19 Dec. 2017, Mastering Chess and Shogi by Self-Play with a General Reinforcement Learning Algorithm. | Ha, Karel. “Mastering Chess and Shogi by Self-Play with a General Reinforcement Learning Algorithm.” AI Seminar. AI Seminar, 19 Dec. 2017, Mastering Chess and Shogi by Self-Play with a General Reinforcement Learning Algorithm. | ||

Latest revision as of 13:58, 15 March 2018

Presented by

1. Bhalla, Amar

2. Florian, Alex

3. Iaboni, Alessandra

4. Lu, Thomas

5. Morjaria, Deep

6. Poole, Zach

7. Stoev, Victor

8. Zhang, Hui

Introduction

History of Algorithms in Chess-like Games

With challenging games such as Chess, there has long been a fascination with determining the optimal playing method. The challenge, until this point, has been creating a system to achieve “superhuman” performance with minimal training from human expertise or experience. Deep Blue, an IBM supercomputer, defeated a world champion in 1997 marking a huge step forward in artificial intelligence that would continue to be built upon for the coming decades. The methods in Deep Blue, as well as other methods around the same time, still utilized knowledge from chess grandmasters. It was through such methods that Stockfish was also developed. This program is the 2016 Top Chess Engine Championship world-champion and used as the gold standard against which any new algorithms are evaluated.

The Japanese game Shogi is even more complex than chess from a computational standpoint. It’s gold standard, is the Computer Shogi Association world champion, Elmo. Constructed in much the same way as the chess programs, Elmo is also highly dependent real human input and modifications compared to solely computation learning methods.

The game Go is more easily predicted without the use human expertise to beat human player. Such models are well suited to being built with neural networks. The rules of Go are translationally invariant and reflectionally symmetric. These qualities are not present in Chess and Shogi as the rules of these differ based on position on the board or piece and are asymmetric as the left and right side of the board allow for different moves. Go also has simplistic properties such as allowing stones to be placed at all locations on the board and terminating in a win or loss. Chess and Shogi have a more complex field of playable locations for each piece on a given turn, captured pieces may be returned to the field of play in certain scenarios, and the game is designed to allow for draws (stalemates). All of these are indicative of the properties that make neural networks more easily applicable to the game Go.

Purpose of the AlphaZero Algorithm

This paper primarily focuses on the general reinforcement algorithm implemented in the AlphaZero program. This program was developed as a generalization of AlphaGo (a program designed to be the best at Go) for the purpose of winning at other games such as Chess and Shogi. This paper will compare the training and performance of AlphaZero against other programs that, at the time of the development of AlphaZero, were considered to be the best “players” in the world: human or otherwise.

Model

Theory of Reinforcement Learning

Reinforcement learning is a paradigm where an agent performs some action and receives feedback based on a reward or penalty. The agent then uses this feedback to improve its actions in order to maximize rewards. In machine learning, reinforcement learning algorithms are well suited to strategic games such as chess, shogi and Go because given a game state, the expected reward of an action typically depends on the next several plays. To win consistently, the player has to sacrifice short term outcomes to optimize long term rewards, requiring them to think several moves ahead. An important concept in reinforcement learning is the exploration/exploitation tradeoff. In order for the agent to learn how to act optimally in a given state or board position, the player must be able to exploit reward-maximizing actions and also explore untested ones.

Benefits

- Compared to supervised learning you don’t need a data set

- No human bias

Issues

- Easy to get caught in local mins if not enough exploration

- Rewards can be very far away, AI may not learn due to discount rate on reward or not reaching the reward during play

Theory of Alpha-Beta Search

Alpha-beta search trees use minima/maxima logic to eliminate subsequent nodes. Values of alpha and beta are assigned for each node, representing maxima and minima, respectively. Alpha-beta search trees work particularly well with two-opponent games such as Chess, Go, and Shogi, as alternating layers reflect changes in turns.

The set-up for this tree is as follows:

1) The two opponents are assigned the “maxima” and “minima” roles. The “maxima” opponent is assigned a value of alpha. The “minima” opponent is assigned a beta.

2) Layers within the tree alternate in function between maximum and minimum. A given node will take either the max or min of connected nodes in the next lowest layer (dependent on the function).

3) Maximum/minimum are evaluated left to right within a layer. If a node’s value fails to improve on the current maximum/minimum for the entire layer, then it is eliminated and the path will not be pursued

4) Values of alpha and beta proceed up the tree until reaching the root. Before computing, alpha is set to negative infinity and beta is set to infinity.;

Theory of Monte Carlo Search Trees

Monte carlo tree search is a heuristic search algorithm; in a basic form it simulates a game using random plays, recording each play and only keeping the winning outcomes and their information. The algorithm receives a board position as an input and performs many multi-phase playouts on the given state. This is based on the idea that for a given board, if statistics are available for all states that are one move away, one can consider it to be a multi-armed bandit problem.

As the tree iterates, it learns and plays more optimally based on a search tree that is recursively expanded through random sampling of the search space based on playouts. The procedure consists of four major steps: selection, expansion, simulation and backpropagation.

- Selection is the process of deciding the action a to take from a particular state, starting from the root node. The process is guided by a predetermined allocation strategy, which is based on the sequence of past plays.

- From each child node, simulate a random game known as a rollout, which is represented as an edge in the tree diagram. Each node represents the win probability in playouts for the respective player.

- Eventually as the the algorithm traverses to the leaf nodes of the tree where there is no past information for possible states so the allocation algorithm can no longer be applied.

- Expansion occurs once the algorithm traverses to the leaf nodes where the allocation algorithm no longer applies. The next potential mixed state is estimated through simulation.

- The tree expands by adding child nodes, the initial chess board after action a, until the tree reaches the outcome nodes of either a win/draw/loss (leafs) or till a certain limiting depth restriction.

- Using results from the rollouts, the weight of the nodes and the evaluation function are updated. This is the back propagation step in the process.

- The parameters [math]\displaystyle{ \theta }[/math] of the network is randomly initiated and then trained with reinforcement learning through self-play.

The algorithm returns a probability vector [math]\displaystyle{ \pi }[/math] which represents the distribution of the moves with respect to the root state. The probability vector can be proportional or greedy depending on the choice of strategy of traversion, whether a light playout is desired or a more computationally heavy one. The move [math]\displaystyle{ a }[/math], is chosen with low visit count through the traversion, high move probability and high value according to the neural network.

Differences Between Game Algorithms

AlphaGo vs. AlphaGo Zero

| AlphaGo | AlphaGo Zero |

|---|---|

| Supervised learning using human expert positions | Tabula rasa (learns from scratch with self-play reinforcement learning) |

| Additional input features | Only uses the board position as an input |

| Separate policy and value networks | Only one neural network |

| Tree search using Monte Carlo rollouts | Simpler tree search relying on the single neural network to judge positions and moves |

AlphaGo Zero vs. Alpha Zero

| AlphaGo Zero | AlphaZero |

|---|---|

| Estimates and optimises probability of winning assuming binary win/loss | Estimates and optimises expected outcome, including draws |

| Augments training data with rotations and reflections since Go is invariant to these changes | Does not augment training data since other games (like chess) are asymmetric |

| The model is updated after completed iteration; if new player wins against “best” player by a margin of 55%, they are replaced | The model is continually updated (not waiting for iteration to complete); it does not include the evaluation or best player selection |

| Hyper-parameter of the search is tuned with Bayesian optimisation | Same hyper-parameters are reused for all games without tuning (except for adding noise) |

Training and Results

Training the Model

AlphaZero generalizes the algorithm used in similar previous programs such as AlphaGo Zero using the differences above to accommodate more games other than Go. One generalization is that AlphaZero only knows the basic rules of the game and just takes the board position as an input. As the paper says, “the program trains using a deep neural network [math]\displaystyle{ (p, v) = f_θ(s) }[/math] with parameters θ” which are randomly initialized. It then takes "the board position s as an input and output[s] a vector of move probabilities p with components [math]\displaystyle{ p_a = Pr(a|s) }[/math]for each action a, and a scalar value v estimating the expected outcome z from position s, [math]\displaystyle{ v ≈ E[z|s] }[/math]” (Silver, 2017).

AlphaZero uses a Monte-Carlo tree search algorithm discussed above along with the predicted values of p from the neural network to select each move for both players. Firstly it will take in the current game state (along with the eight previous game states and which player’s turn it is), and output the approximate state value v and the probability vector of the next move from the policy p. Each child node is initialized with the number of visits N = 0, the value W = 0, and p from the neural network. Selection and backup continue as explained previously, selecting child nodes with the highest probabilistic upper confidence tree score [math]\displaystyle{ U_i = W_i/N_i +cP_i*sqrt(ln(N_p)/(1+N_i)) }[/math] until we hit a leaf, whose value is set to the predicted value from the neural network and then expanded. During backup, each parent’s N is incremented, and its value changed according to the child nodes’ predicted values and the policy vector. The tree search algorithm is given a certain amount of time to run, after which the move selected will be that has the best value. At each move, the values of v, p, and the improved policy predictions [math]\displaystyle{ \pi_i=N_i^{(1/ \tau)} }[/math] are stored.

When the game ends, and the outcome z is computed, the parameters θ are updated using gradient descent to minimize the loss function [math]\displaystyle{ l = (z-v)^2-\pi^T log p + c||θ||^2 }[/math]. This loss function combines the mean squared error on the valuation function v (real-valued prediction) and cross entropy losses on action policy vector p (classification on the action), and includes an [math]\displaystyle{ L^2 }[/math] regularization term [math]\displaystyle{ c||θ||^2 }[/math].

The network structure used was not explicitly specified in the paper, but it is likely that AlphaZero utilized a residual convolution neural network as was implemented in AlphaGo Zero.

Scoring and Evaluation

Performance of AlphaZero was done against its predecessor algorithms: Stockfish, Elmo, AlphaGo, and AlphaGo Zero. It was found that AlphaZero was able to search 80,000 positions/second when applied in games of chess compared to the gold-standard Stockfish which required 70 million positions/second. In the game of Shogi, AlphaZero required 40,000 positions/second while Emlo required 35 million positions/second. These results are indicative of a much more efficient algorithm.

The Elo scale was also used as a measurement of the success of the algorithm. These ratings were computed from a 1 second per move tournament between AlphaZero and one of the other algorithms.

Criticisms

The training times are given using resources such as 5,000 first-generation TPUs to generate self-play games and 64 second-generation TPUs to train the neural networks. But what is the result for someone who wants to reproduce this but does not have the necessary supercomputer? 5,000 TPU at 4 hours is 2 years processing time with only one TPU. Speaking of reproducibility, since none of the code is available publicly it is not possible to reproduce the results or test it.

Second, the AlphaZero was being run on a higher processing power than Stockfish, making a non-level playing field. The time allocation was also a minute per move when most human vs engine games have a total allotted time and each player can choose how much time to spend on each move.

Next, the paper does not discuss how they ended up with the form they did. Did they try out multiple methods and this was the best of the bunch? Are there potentially better ways to set up states and neural nets?

Finally, there’s no mention of how this can be scaled to a Markov Decision Process situation where the outcomes are partially random and partially based on the decisions of a player. The paper struggles to identify its own shortfalls/areas where they could be better.

Sources

Collados, Jose Camacho. “Is AlphaZero Really a Scientific Breakthrough in AI?” Medium, 11 Dec. 2017,medium.com/@josecamachocollados/is-alphazero-really-a-scientific-breakthrough-in-ai-bf66ae1c84f2.

Ha, Karel. “Mastering Chess and Shogi by Self-Play with a General Reinforcement Learning Algorithm.” AI Seminar. AI Seminar, 19 Dec. 2017, Mastering Chess and Shogi by Self-Play with a General Reinforcement Learning Algorithm.

Silver, David, et al. "Mastering Chess and Shogi by Self-Play with a General Reinforcement Learning Algorithm." arXiv preprint arXiv:1712.01815 (2017).

Silver, David, et al. "Mastering the game of Go with deep neural networks and tree search." nature 529.7587 (2016): 484-489.

Silver, David, et al. "Mastering the game of go without human knowledge." Nature 550.7676 (2017): 354.