Bag of Tricks for Efficient Text Classification


== Introduction and Motivation ==

Text classification is used by millions of web users every day. Web search and content ranking are examples of its applications: when a user searches for a word that best describes the content they are looking for, text classification helps to categorize the relevant content.

Neural networks have recently been used for text classification and have demonstrated very good performance. However, they are slow at both training and test time, which limits their use on very large datasets. The motivation for this paper is to determine whether a simpler text classifier, which is inexpensive in terms of training and test time, can approximate the performance of these more complex neural networks.

The authors suggest that linear classifiers are very effective if the right features are used. The simplicity of linear classifiers allows a model to scale to very large datasets while maintaining good performance.

The analysis in this paper is based on applying the fastText classifier to two tasks, tag prediction and sentiment analysis, and comparing its performance and efficiency with other text classifiers. The paper claims that this method “can train on a billion words within ten minutes, while achieving performance on par with the state of the art.”

== Background ==

=== Natural-Language Processing and Text Classification ===

[https://en.wikipedia.org/wiki/Natural-language_processing Natural Language Processing] (NLP) is concerned with processing large amounts of natural language data, and involves speech recognition, natural language understanding, and natural language generation. Text understanding involves understanding the explicit or implicit meaning of elements of text such as words, phrases, sentences, and paragraphs, and making inferences about these properties of texts (Norvig, 1987). One of the main topics in NLP is text classification, which assigns predefined categories to free-text documents; research in this field ranges from designing the best features to choosing the best machine learning classifiers (Zhang et al. 2015). Traditionally, text classification techniques have been based on simple word statistics, such as bag-of-words and n-gram features, combined with linear classifiers.

With the advancement of deep learning and the availability of large datasets, deep learning methods for text understanding have become popular in recent years. Several studies have shown that these models perform significantly better than traditional models. The following deep learning models are compared to fastText in the Experiment section:

=== Char-CNN ===

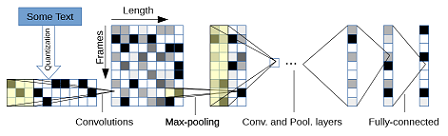

As input, a ConvNet model accepts a sequence of one-hot vectors. The one-hot vector of the i-th symbol in an alphabet is a binary vector whose elements are all zero except for the i-th element, which is set to one. The model in (Zhang and Lecun, 2015) is composed of many convolutional and max-pooling layers; the max-pooling allowed them to train deeper models. Each layer first extracts features from small, overlapping windows of the input sequence, and then pools over small, non-overlapping windows by taking the maximum activation in each window. This is applied repeatedly. The final convolutional layer’s activation is flattened to form a vector, which is fed into a small number of fully-connected layers followed by the classification layer.

[[File: zhang_and_lecun_2016.png]] An illustration of the Char-CNN model used in (Zhang and Lecun, 2015).
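The input encoding and the pooling step described above can be sketched in a few lines of plain Python. This is a minimal illustration, not the Char-CNN implementation: the alphabet, the window size, and the function names `one_hot_encode` and `max_pool_1d` are hypothetical, and we assume that characters outside the alphabet map to an all-zero vector.

```python
def one_hot_encode(text, alphabet):
    """Encode each character of `text` as a one-hot vector over `alphabet`."""
    index = {ch: i for i, ch in enumerate(alphabet)}
    vectors = []
    for ch in text:
        vec = [0] * len(alphabet)
        if ch in index:  # assumption: unknown characters stay all-zero
            vec[index[ch]] = 1
        vectors.append(vec)
    return vectors


def max_pool_1d(activations, window):
    """Max-pool a 1-D sequence over non-overlapping windows (a trailing partial window is dropped)."""
    return [max(activations[i:i + window])
            for i in range(0, len(activations) - window + 1, window)]


alphabet = "abcdefghijklmnopqrstuvwxyz "
encoded = one_hot_encode("ab!", alphabet)
print(encoded[0][:3], encoded[2].count(1))  # [1, 0, 0] 0
print(max_pool_1d([0.1, 0.7, 0.3, 0.2, 0.9, 0.4], window=2))  # [0.7, 0.3, 0.9]
```

In the real model the pooling is applied to the outputs of learned convolution filters rather than to raw numbers as here.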

=== Char-CRNN ===

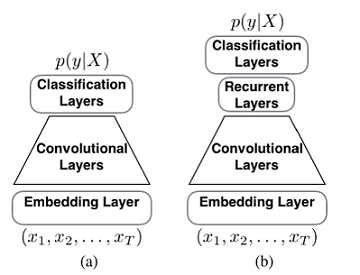

A character-level convolutional recurrent neural network (CRNN) is a convolutional neural network with a recurrent layer on top. Many layers of convolutions can capture long-term dependencies, but the network can become very deep as the sequence of characters in a document grows to hundreds or thousands (Xiao and Cho, 2016). A recurrent layer can capture long-term dependencies efficiently, requiring only a small number of convolutional layers. However, the recurrent layer is computationally expensive.

[[File:xiao and cho 2016 pic.png]]

Graphical illustration of (a) a CNN and (b) the CRNN proposed in (Xiao and Cho, 2016) for character-level document classification.

=== VDCNN ===

The authors of (Conneau et al., 2016) argue that text processing models should be made deeper, and propose a Very Deep Convolutional Neural Network (VDCNN). Their model operates on the lowest atomic representation of text (i.e. characters) and uses a deep stack of convolutions. Using up to 29 layers in their study, they show that accuracy generally increases as the model gets deeper.

=== Tagspace ===

[http://emnlp2014.org/papers/pdf/EMNLP2014194.pdf Tagspace] is a convolutional neural network that predicts hashtags in social network posts. In this context, hashtags are used in diverse ways: as identifiers, sentiments, topic annotations, and more. Tagspace uses embeddings, i.e. vector representations of text, which are combined by a function that produces a point in the embedding space. Because Tagspace captures the semantic context of hashtags, its learned representations are useful beyond hashtag prediction: it was also applied to a document recommendation problem, where the next item a user will interact with is predicted from their previous history.

== Model ==

=== Model Architecture of fastText ===

Traditionally, text classification methods have centered on linear classifiers. However, linear classifiers do not share parameters among features and classes, which can limit their ability to generalize in a large output space where some classes have very few examples. The authors improve on the performance of linear classifiers with two key features: a rank constraint and a fast loss approximation. Before describing these, we review how a linear classifier is trained.


Consider each text and each label as a vector in space. The model learns the coordinates of these vectors so that each text vector is close to the vector of its associated label. The dot product of a text vector and a label vector gives a score, which the softmax function normalizes against the scores of the same text with every other possible label. The result is the probability that the text has that label. Stochastic gradient descent is then used to repeatedly update the coordinates so as to maximize the probability of the correct label for every text. This is computationally expensive, since the score for every possible label in the training set must be computed for each text.

With K labels in the training set, the softmax function gives the probability that a text is associated with label j as:

<math> P(y=j | \mathbf{x}) = \dfrac{e^{\mathbf{x}^T \mathbf{w}_j}}{\sum_{k=1}^K e^{\mathbf{x}^T \mathbf{w}_k}} </math>

where <math>\mathbf{x}</math> is the text vector and <math>\mathbf{w}_j</math> is the vector of label <math>j</math>.
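As a minimal sketch of the formula above (plain Python; the function name `softmax_probs` and the toy vectors are hypothetical), the probabilities of all K labels can be computed as follows. Subtracting the maximum score before exponentiating is a standard numerical-stability step that does not change the result, because it cancels in the ratio. Note that the computation touches every label vector, which is the cost discussed below.

```python
import math


def softmax_probs(x, W):
    """Return [P(y=j | x) for each label j], given text vector x and label vectors W."""
    scores = [sum(xi * wi for xi, wi in zip(x, w)) for w in W]  # x^T w_j for each label
    m = max(scores)  # shift for numerical stability; cancels in the ratio
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)  # the normalizing denominator
    return [e / total for e in exps]


x = [1.0, 0.0]                            # toy text vector
W = [[2.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # toy label vectors (K = 3)
probs = softmax_probs(x, W)
print([round(p, 3) for p in probs])
```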

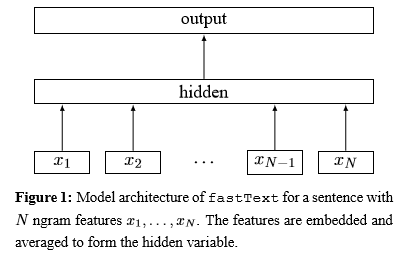

We now turn to the improved linear classifier with a rank constraint and fast loss approximation, illustrated below.

[[File:model image.png]]

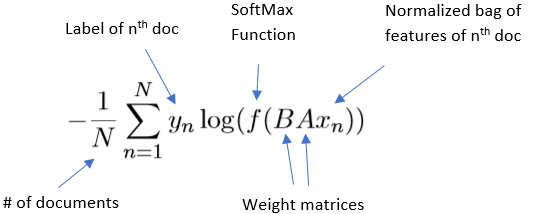

The n-gram features of the input are first looked up in a weight matrix to obtain their vector representations, which are then averaged into a hidden text representation. This hidden representation is fed to a linear classifier, and the softmax function computes the probability distribution over the predefined classes. For a set of N documents, the model minimizes the negative log-likelihood over the classes. The classifier is trained on multiple CPUs using stochastic gradient descent and a linearly decaying learning rate.

[[File:formula explained.png]]
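The pipeline above (look up, average, linear layer, softmax, negative log-likelihood) can be sketched in plain Python. This is a toy illustration under stated assumptions, not the authors' C++ implementation: the function names are hypothetical, `A` is stored as one row per word or n-gram id (the transpose of a d × V lookup matrix), `B` holds one row per class, and the SGD training loop is omitted.

```python
import math


def fasttext_forward(feature_ids, A, B):
    """Average the feature embeddings, then apply a linear layer and a softmax."""
    d = len(A[0])
    hidden = [0.0] * d
    for fid in feature_ids:  # look up each word / n-gram representation
        for k in range(d):
            hidden[k] += A[fid][k]
    hidden = [v / len(feature_ids) for v in hidden]  # averaged text representation
    scores = [sum(b[k] * hidden[k] for k in range(d)) for b in B]  # linear classifier
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return hidden, [e / total for e in exps]


def negative_log_likelihood(docs, labels, A, B):
    """Mean negative log-likelihood of the correct labels over N documents."""
    loss = 0.0
    for feature_ids, y in zip(docs, labels):
        _, probs = fasttext_forward(feature_ids, A, B)
        loss -= math.log(probs[y])
    return loss / len(docs)


A = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # 3 feature ids, d = 2 (toy values)
B = [[1.0, 0.0], [0.0, 1.0]]              # 2 classes (toy values)
hidden, probs = fasttext_forward([0, 2], A, B)
print(hidden, probs)
print(negative_log_likelihood([[0, 2], [1]], [0, 1], A, B))
```

Averaging before the linear layer is what makes the model cheap: the cost of the classifier does not depend on the number of input features.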

Two changes are applied in this model architecture: the hierarchical softmax function, which improves performance when the number of classes is large, and the hashing trick, which provides a fast and memory-efficient mapping of the n-grams used to capture local word order. Both are explained in more detail in the following sections.

=== Softmax and Hierarchical Softmax ===

As mentioned above, the softmax function computes the probability distribution over the predefined classes. It calculates the probability that <math>y_n</math> belongs to each possible class and outputs a probability estimate for each class. The denominator of the softmax function normalizes these estimates, so that the probabilities a single <math>y_n</math> receives for all the labels sum to one. The label with the highest probability can then be chosen as the label for <math>y_n</math>.

However, the softmax function has a computational complexity of <math> O(Kd) </math>, where <math>K</math> is the number of classes and <math>d</math> is the dimension of the hidden layer of the model, because every evaluation requires normalizing the probabilities over all potential classes. This runtime is not ideal when the number of classes is large, and for this reason a hierarchical softmax function is used. The following set-up for the hierarchical softmax shows the difference in computational efficiency.
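To make the difference concrete, the following back-of-the-envelope sketch counts multiply-adds per example, assuming K = 312,116 classes (the number of unique tags in the tag prediction experiment below) and d = 200 hidden units. The tree figure assumes a balanced binary tree, whose root-to-leaf paths pass through at most ceil(log2 K) inner nodes; a Huffman tree makes frequent classes cheaper still. The function names are hypothetical.

```python
import math


def full_softmax_cost(K, d):
    """One d-dimensional dot product per class."""
    return K * d


def balanced_tree_cost(K, d):
    """One d-dimensional dot product per inner node on a root-to-leaf path."""
    return d * math.ceil(math.log2(K))


K, d = 312_116, 200
print(full_softmax_cost(K, d))   # 62423200
print(balanced_tree_cost(K, d))  # 3800
```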

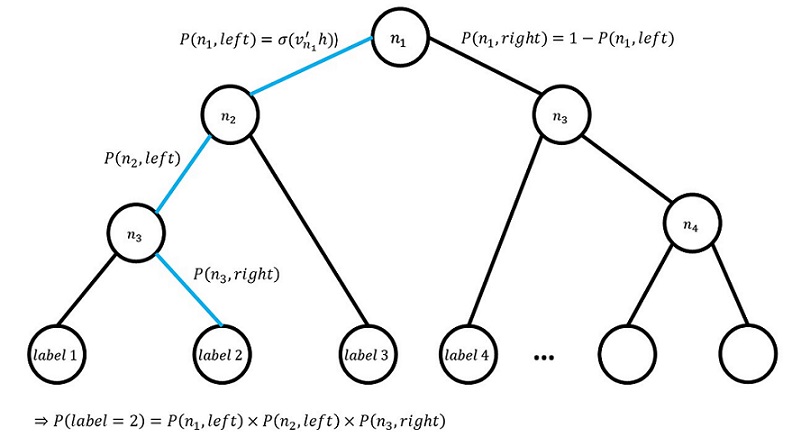

Suppose the softmax function is organized as a binary tree based on Huffman coding, where each inner node has two children. Huffman coding trees place the lowest-frequency classes deeper in the tree and the highest-frequency classes near the root, which minimizes the path length for frequently occurring classes. With such a tree, the computational runtime is reduced to <math> O(d \log_2(K)) </math>.
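A minimal sketch of how a Huffman tree assigns depths to classes, using Python's `heapq` module. The class frequencies are made up for illustration, `huffman_depths` is a hypothetical name, and the function only tracks leaf depths rather than building the full tree with its inner-node vectors.

```python
import heapq
import itertools


def huffman_depths(freqs):
    """Return the depth of each class leaf in a Huffman tree built from class frequencies."""
    counter = itertools.count()  # tie-breaker so heapq never compares the lists
    heap = [(f, next(counter), [c]) for c, f in freqs.items()]
    heapq.heapify(heap)
    depths = {c: 0 for c in freqs}
    while len(heap) > 1:
        f1, _, group1 = heapq.heappop(heap)  # the two least frequent subtrees
        f2, _, group2 = heapq.heappop(heap)
        for c in group1 + group2:
            depths[c] += 1  # every leaf under the merged node moves one level deeper
        heapq.heappush(heap, (f1 + f2, next(counter), group1 + group2))
    return depths


freqs = {"a": 50, "b": 20, "c": 15, "d": 10, "e": 5}
depths = huffman_depths(freqs)
print(depths)  # {'a': 1, 'b': 2, 'c': 3, 'd': 4, 'e': 4}
print(sum(freqs[c] * depths[c] for c in freqs) / sum(freqs.values()))  # average path length
```

The frequency-weighted average path length here is 1.95 inner nodes, below the log2(5) ≈ 2.32 of a perfectly balanced tree.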

At each inner node <math>n_i</math>, the probability of travelling left or right is calculated by applying the sigmoid function to the product of the node's output vector <math>v_{n_i}</math> and the output <math>h</math> of the hidden layer of the model. The output classes are represented as the leaves of the tree, and a random walk from the root assigns each class a probability based on the path taken to reach it. The probability of a certain class is then calculated as:

<div style="text-align: center;"> <math> P(n_{l+1}) = \displaystyle\prod_{i=1}^{l} P(n_i) </math> <br> </div>

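<i>where <math>n_{l+1}</math> is the leaf node on which the class is located, at depth <math> l+1 </math>, <math> n_1, n_2, …, n_l </math> are the inner nodes on the path from the root to that leaf, and <math>P(n_i)</math> is the probability of taking the branch at <math>n_i</math> that leads towards the leaf</i>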

The figure below shows an example of a binary tree for the hierarchical softmax model. An example path from the root node <math>n_1</math> to label 2 is highlighted in blue. Each step on the path has an associated probability, and the total probability of label 2 is the product of these probabilities, consistent with the class probability formula above.

[[File:figure2.jpg|Binary Tree Example for the Hierarchical Softmax Model]]
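The product of branch probabilities can be sketched as follows for a hypothetical three-leaf tree: the root has label 1 as its left child and an inner node as its right child, and that inner node has labels 2 and 3 as its children. Following the convention in Rong (2016), we assume the left branch at a node has probability σ(v·h) and the right branch 1 − σ(v·h); the node vectors and the hidden vector are made-up numbers. Because the two branch probabilities at each node sum to one, the leaf probabilities also sum to one, without any normalization over all classes.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def path_probability(h, path):
    """Multiply branch probabilities along a root-to-leaf path.

    `path` is a list of (inner_node_vector, go_left) pairs, starting at the root.
    """
    p = 1.0
    for v, go_left in path:
        s = sigmoid(sum(vk * hk for vk, hk in zip(v, h)))  # sigma(v^T h)
        p *= s if go_left else 1.0 - s  # right branch: 1 - sigma(v^T h)
    return p


h = [0.5, -1.0]                            # hidden-layer output (made up)
v_root, v_inner = [1.0, 0.2], [-0.3, 0.8]  # inner-node vectors (made up)
paths = {
    "label 1": [(v_root, True)],
    "label 2": [(v_root, False), (v_inner, True)],
    "label 3": [(v_root, False), (v_inner, False)],
}
probs = {label: path_probability(h, p) for label, p in paths.items()}
print(probs, sum(probs.values()))
```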

During training, the output vectors of the inner nodes, <math>v_{n_i}</math> (written <math>v'_{n_i}</math> below, following the output-vector notation of Rong, 2016), are updated by introducing an error function <math>Err</math> and differentiating it with the chain rule:

<div style="text-align: center;"> <math> \frac{\partial Err}{\partial v'_{n_i}}=\frac{\partial Err}{\partial ({v'_{n_i}}^{T}h)} \cdot \frac{\partial ({v'_{n_i}}^{T}h) }{\partial v'_{n_i}} </math> <br></div>

We can use this to update the vector values as follows:

<div style="text-align: center;"> <math> v'_{n_i}(\text{new}) = v'_{n_i}(\text{old}) - \eta \frac{\partial Err}{\partial v'_{n_i}} </math><br></div>

<i>where <math>\eta</math> is the learning rate</i>
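A minimal sketch of one such update for a single inner node, assuming (as in Rong, 2016) that the error for that node is Err = −log σ(v′ᵀh) when the path goes left and −log(1 − σ(v′ᵀh)) when it goes right. Under this assumption the first factor of the chain rule is σ(v′ᵀh) − t, with t = 1 for left and t = 0 for right, and the second factor is h. The function names and numbers are hypothetical; the check at the end compares the analytic gradient with a central finite-difference estimate.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def node_error(v, h, go_left):
    """Err for one inner node: -log of the probability of the branch taken."""
    s = sigmoid(sum(vk * hk for vk, hk in zip(v, h)))
    return -math.log(s if go_left else 1.0 - s)


def node_gradient(v, h, go_left):
    """dErr/dv' = dErr/d(v'^T h) * d(v'^T h)/dv' = (sigma(v'^T h) - t) * h."""
    t = 1.0 if go_left else 0.0
    z = sum(vk * hk for vk, hk in zip(v, h))
    return [(sigmoid(z) - t) * hk for hk in h]


def update_node(v, h, go_left, eta):
    """v'(new) = v'(old) - eta * dErr/dv'."""
    grad = node_gradient(v, h, go_left)
    return [vk - eta * gk for vk, gk in zip(v, grad)]


v, h = [0.2, -0.4], [1.0, 0.5]
grad = node_gradient(v, h, go_left=True)

# Central finite-difference estimate of the first gradient component.
eps = 1e-6
numeric = (node_error([v[0] + eps, v[1]], h, True)
           - node_error([v[0] - eps, v[1]], h, True)) / (2 * eps)
print(grad[0], numeric)

v_new = update_node(v, h, go_left=True, eta=0.1)
print(node_error(v, h, True), node_error(v_new, h, True))  # the error decreases
```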

== N-Gram, Bag of Words, and TFIDF ==

=== Bag of Words ===

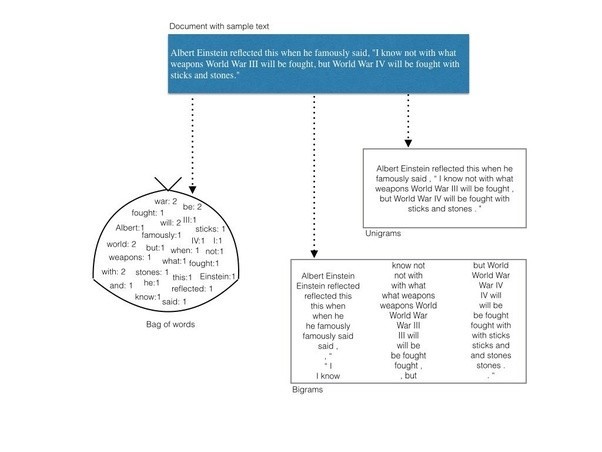

[[File:NGRAM.jpg]]

[https://www.quora.com/In-Text-Classification-What-is-the-difference-between-Bag-of-Words-BOW-and-N-Grams/ Source]

'''Bag of words''' is a method for simplifying a text dataset by counting how many times each word appears in a document. The n most frequent words in the training subset are extracted to be used as the “dictionary” for the testing set. This dictionary allows documents to be compared for document classification and topic modeling. This is one of the methods the authors used to prepare text for input. Each vector of word counts is normalized so that its elements add up to one (i.e. each element is the relative frequency of a word). These frequencies are the input features that influence classification.
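A minimal bag-of-words sketch using only the standard library: it builds the dictionary from the n most frequent training words and returns normalized frequency vectors. Lower-casing and whitespace tokenization are simplifying assumptions, the training sentences are made up, and `build_dictionary` and `bow_vector` are hypothetical names. The two test sentences contain the same words in a different order and get identical vectors, which illustrates the order-invariance weakness described next.

```python
from collections import Counter


def build_dictionary(train_docs, n):
    """Return the n most frequent (lower-cased) words in the training documents."""
    counts = Counter(w for doc in train_docs for w in doc.lower().split())
    return [w for w, _ in counts.most_common(n)]


def bow_vector(doc, dictionary):
    """Word counts over the dictionary, normalized so that the elements sum to one."""
    counts = Counter(doc.lower().split())
    vec = [counts[w] for w in dictionary]
    total = sum(vec)
    return [c / total for c in vec] if total else vec  # all-zero if no dictionary word occurs


train = ["I love apple", "I love banana", "apple pie"]
dictionary = build_dictionary(train, n=3)
print(dictionary)  # ['i', 'love', 'apple']
print(bow_vector("I love apple", dictionary))
print(bow_vector("apple love I", dictionary))
```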


The main '''weakness''' of bag of words is that it loses information, because it considers single words only and is invariant to word order. This is demonstrated in the example further below. Bag of words will also have a high error rate if the training set does not include the entire dictionary of the testing set.

=== N-Gram ===

'''N-gram''' is another model for simplifying text representation, which stores the local words adjacent to each word (N-grams can also be character-based). Each word in the document is read one at a time, just as in bag of words, but a range of its neighbors is scanned as well; the size of this range is the N in N-gram. An N-gram model with N = 1 is called a unigram and is equivalent to bag of words; any N greater than 1 captures more information than bag of words.

The picture above gives an example of a unigram (1-gram), the simplest possible version of this model. A unigram model does not consider previous words and simply chooses words at random based on how common they are in general. The picture also shows a bigram (2-gram), where the previous word is considered. A trigram considers the previous two words, and in general an N-gram considers the previous N-1 words.

Let a sentence be denoted as a sequence of words 1 to n, <math>\omega_1^n = \omega_1 \cdots \omega_n</math>. By the chain rule of probability, the probability of the word sequence can be written as <math>P(\omega_1^n) = P(\omega_1) P(\omega_2 | \omega_1) P(\omega_3 | \omega_1^2) \cdots P(\omega_n | \omega_1^{n-1})</math>. A bigram model approximates each factor by conditioning only on the previous word. For example, take the sentence "How long can this go on?". We can model it as follows:

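<math>P(\text{How long can this go on?}) \approx P(\text{How})P(\text{long} | \text{How})P(\text{can} | \text{long})P(\text{this} | \text{can})P(\text{go} | \text{this})P(\text{on} | \text{go})P(\text{?} | \text{on})</math>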

Going back to the chain rule, in the bigram case the probability reduces to a product of conditional probabilities, each conditioned on the previous word only:

<math>P(\omega_1^n) \approx \prod_{k=1}^n P(\omega_k | \omega_{k-1} )</math>,

where <math>\omega_0</math> denotes a start-of-sentence symbol. We can generalize this to an N-gram model, which conditions on the previous N-1 words:

<math>P(\omega_1^n) \approx \prod_{k=1}^n P(\omega_k | \omega_{k-(N-1)}^{k-1} )</math>.
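A minimal maximum-likelihood bigram model can be sketched with `collections.Counter`: each conditional probability is estimated as the count of the word pair divided by the count of the preceding word as a context. The three-sentence corpus, the `<s>` start symbol (playing the role of ω₀, so that P(ω₁) becomes P(ω₁ | <s>)) and the function names are assumptions for illustration; no smoothing is applied, so unseen pairs get probability zero.

```python
from collections import Counter


def train_bigram(sentences):
    """Count contexts and word pairs, prepending a start symbol to each sentence."""
    context_counts, pair_counts = Counter(), Counter()
    for s in sentences:
        tokens = ["<s>"] + s.lower().split()
        context_counts.update(tokens[:-1])                # words that precede another word
        pair_counts.update(zip(tokens[:-1], tokens[1:]))  # (previous word, word) pairs
    return context_counts, pair_counts


def bigram_probability(sentence, context_counts, pair_counts):
    """Approximate P(w_1..w_n) as the product of P(w_k | w_{k-1}), by relative frequency."""
    tokens = ["<s>"] + sentence.lower().split()
    p = 1.0
    for prev, word in zip(tokens[:-1], tokens[1:]):
        if context_counts[prev] == 0:
            return 0.0  # unseen context: no estimate without smoothing
        p *= pair_counts[(prev, word)] / context_counts[prev]
    return p


corpus = ["how long can this go on ?", "how long is it ?", "can this go ?"]
contexts, pairs = train_bigram(corpus)
print(bigram_probability("how long can this go on ?", contexts, pairs))
```

For this corpus the result is (2/3)(1/2)(1/2) = 1/6: "how" starts two of three sentences, "long" is followed by "can" in one of its two occurrences, and "go" is followed by "on" in one of two.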

The '''weakness''' of N-grams is that local context often provides no useful predictive clues. For example, suppose you want the model to learn the plural agreement in the following sentences:


The '''woman''' who lives on the fifth floor of the apartment '''is''' pretty.

The '''women''' who live on the fifth floor of the apartment '''are''' pretty.

To connect "is" or "are" with its subject, you would need an 11-gram, which is infeasible on ordinary machines. This brings us to the next problem: as N increases, the predictive power of the model increases, but the number of parameters required grows exponentially with the number of words of prior context.

=== BoW, Unigram, Bigram Example ===

The following example illustrates this:

A = “I love apple”


|}

Notice that A and B are now distinct, because the bigram representation takes one neighboring word into account. However, A and C still share an element, "I love". If we were to increase N further, it would be easier to distinguish between the two. However, operating with a higher N means a higher-dimensional feature space and therefore a longer run time.
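The order sensitivity of bigram features can be checked with a short sketch. The second sentence, "apple love I", is a hypothetical reordering of A chosen for illustration (it is not necessarily sentence B of this example), and `ngram_features` is a hypothetical name. Both sentences have the same unigram features, but their bigram features differ.

```python
def ngram_features(sentence, n_max=2):
    """Return all 1..n_max-grams of a whitespace-tokenized sentence, in order."""
    words = sentence.split()
    features = []
    for n in range(1, n_max + 1):
        features += [" ".join(words[i:i + n]) for i in range(len(words) - n + 1)]
    return features


a = "I love apple"
reordered = "apple love I"  # hypothetical reordering of A
print(ngram_features(a))          # ['I', 'love', 'apple', 'I love', 'love apple']
print(ngram_features(reordered))  # ['apple', 'love', 'I', 'apple love', 'love I']
```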

=== Feature Hashing ===

As shown above, N-grams preserve word-order information, but the dimension of the feature vector or matrix increases as N increases. Since many combinations of words rarely occur in real text, the feature matrix becomes very sparse and computationally expensive. To address this problem, we introduce the '''hash trick''', also known as '''feature hashing'''.

Feature hashing, which can be used in sentence classification, maps features to the indices of an array or matrix of fixed size by applying a hash function to them. The general idea is to map feature vectors from a high-dimensional space to a lower-dimensional one, which reduces the cost of storing large vectors.

A '''hash function''' is any function that maps keys from an arbitrarily large set to a fixed range of values. For the hashing trick, it can generally be expressed as <math> h(n):\{1,...,N\} \rightarrow \{1,...,M\} </math>. A very simple hash function is the modulo operation: if we are mapping a key to a hash table of M slots, a simple hash function can be defined as

h(key) = key % M

Since N-gram models usually use word counts as features, the hashed feature map can be calculated as

<math> \phi_i^{h}(x) = \underset{j:h(j)=i}{\sum} x_j </math>, where <math> i </math> is the corresponding index of the hashed feature map.
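The hashed feature map can be sketched directly from the formula, using the modulo hash above. Two assumptions for the sketch: features are already identified by integer keys, and indices run from 0 to M − 1 (as `key % M` produces) rather than from 1 to M. The feature keys and counts are made up, and `hashed_feature_map` is a hypothetical name.

```python
def hashed_feature_map(x, M):
    """phi_i(x) = sum of x_j over all feature keys j with h(j) = j % M == i."""
    phi = [0] * M
    for j, count in x.items():
        phi[j % M] += count  # colliding features are simply added together
    return phi


x = {1: 1, 4: 1, 2: 1}  # feature key -> count; keys 1 and 4 collide when M = 3
print(hashed_feature_map(x, M=3))  # [0, 2, 1]
```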

Taking the bigram example above, suppose we want to hash each feature vector into a hash table of length 3. Using the simple hash function described above, the hashed feature map is now:

{| class="wikitable"
! A !! B !! C
|-
| 1
| 1
| 0
|-
| 1
| 0
| 1
|-
| 1
| 1
| 0
|}

There are many choices of hash functions, but the general idea is to have a good hash function that distributes keys evenly across the hash table.

A hash collision happens when two distinct keys are mapped to the same index. In the example above, "I love" and "love i" are both mapped to index 0.

The paper uses the hashing trick of [https://alex.smola.org/papers/2009/Weinbergeretal09.pdf Weinberger et al. 2009]. To obtain an unbiased estimate, Weinberger et al. use a signed hash kernel, which introduces another hash function <math> \xi(n):\{1,...,N\} \rightarrow \{-1, 1\} </math> to determine the sign of each feature's contribution to its index. The hashed feature map <math> \phi </math> now becomes

<math> \phi_i^{h, \xi}(x) = \underset{j:h(j)=i}{\sum} \xi(j) \cdot x_j </math>

Suppose <math> \xi(n) </math> is defined as follows:

```python
M = 3  # hash table length used in the example above


def xi(n):
    # Toy sign hash: -1 for keys greater than M, +1 otherwise
    if n > M:
        return -1
    else:
        return 1
```

Our hash table now becomes

{| class="wikitable"
! A !! B !! C
|-
| 1
| 1
| 0
|-
| -1
| 0
| 1
|-
| 1
| -1
| 0
|}

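Ideally, collisions then "cancel out" in expectation, so that inner products between hashed feature vectors are unbiased estimates of the inner products between the original feature vectors.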
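Continuing the toy example, the signed map can be sketched with the modulo hash h and the toy sign function ξ defined above. This reuses those illustrative definitions; in practice ξ would be a separate hash function, independent of h, rather than a threshold on the key. The feature keys are made up: keys 1 and 4 collide at index 1, and because they receive opposite signs their contributions cancel.

```python
M = 3  # hash table length from the example


def h(j):
    return j % M  # toy index hash


def xi(j):
    return -1 if j > M else 1  # toy sign hash from the text


def signed_hashed_feature_map(x):
    """phi_i(x) = sum of xi(j) * x_j over all feature keys j with h(j) == i."""
    phi = [0] * M
    for j, count in x.items():
        phi[h(j)] += xi(j) * count
    return phi


x = {1: 1, 4: 1, 2: 1}
print(signed_hashed_feature_map(x))  # [0, 0, 1]
```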

=== TF-IDF ===

For ordinary N-grams, word counts are used as features. Another way to represent the features is TFIDF, short for '''term frequency–inverse document frequency''', which represents how important a word is to a document in a collection.

'''Term frequency (TF)''' measures how many times a word occurs in a document. '''Inverse document frequency (IDF)''' can be considered an adjustment to the term frequency, so that a word is not deemed important just because it is common in general, for example "the".

TFIDF is calculated as the product of term frequency and inverse document frequency, generally expressed as <math>\mathrm{tfidf}(t,d,D) = \mathrm{tf}(t,d) \cdot \mathrm{idf}(t, D)</math>

In this paper, TFIDF is calculated in the same way as in [https://arxiv.org/pdf/1509.01626.pdf Zhang et al., 2015], with

* <math> \mathrm{tf}(t,d) = f_{t,d} </math>, where <math> f_{t,d} </math> is the raw count of <math> t </math> in document <math> d </math>.

* <math> \mathrm{idf}(t, D) = \log\left(\frac{N}{| \{d\in D:t\in d \} |}\right) </math>, where <math> N </math> is the total number of documents and <math> | \{d\in D:t\in d \} | </math> is the number of documents that contain the term <math> t </math>.
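A minimal sketch of these two definitions in Python. The natural logarithm is an assumption (the base only rescales all IDF values by a constant), as are the toy documents, the whitespace tokenization, and the function name `tfidf`.

```python
import math
from collections import Counter


def tfidf(docs):
    """Return one {term: tf * idf} dict per document, with raw-count tf."""
    tokenized = [d.lower().split() for d in docs]
    N = len(tokenized)
    df = Counter(t for doc in tokenized for t in set(doc))  # documents containing each term
    scores = []
    for doc in tokenized:
        tf = Counter(doc)  # raw count f_{t,d}
        scores.append({t: tf[t] * math.log(N / df[t]) for t in tf})
    return scores


docs = ["the cat sat", "the dog sat", "the cat ran"]
for row in tfidf(docs):
    print(row)
```

"the" occurs in every document, so its IDF is log(3/3) = 0 and it gets a score of zero everywhere, which is exactly the adjustment described above.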

== Experiment ==

fastText was compared with various other text classifiers on two classification problems.

The first problem is sentiment analysis, where fastText is compared with existing text classifiers. The second is tag prediction, which evaluates how fastText scales to a larger output space.

The authors note that their model could also be implemented with the Vowpal Wabbit library, written in C++, but that their tailored implementation is at least 2-5× faster in practice.

=== Sentiment Analysis ===

==== Competing Models and fastText ====

The sentiment analysis experiment uses 8 datasets that are well suited to testing text classification, ranging from news categorization to Yelp reviews.

From (Zhang, X., Zhao, J., and LeCun, Y. 2015), the comparison uses the Bag of Words (BoW), N-grams and N-grams TFIDF models. The experiment also includes the character-level convolutional network (char-CNN) from (Zhang and LeCun 2015), the character-level convolutional recurrent network (char-CRNN) from (Xiao and Cho 2016), and the very deep convolutional network (VDCNN) from (Conneau et al. 2016).

These baselines are compared with fastText, which is run with 10 hidden units and evaluated with and without bigrams. fastText is run for 5 epochs, with a learning rate selected on a validation set from {0.05, 0.1, 0.25, 0.5}, and uses the same parameters on all datasets.

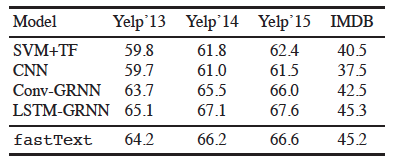

The authors also compare fastText with (Tang et al., 2015), reporting their main baselines as well as their two approaches based on recurrent networks: the Convolutional Gated Recurrent Neural Network (Conv-GRNN) and the Long Short-Term Memory Gated Recurrent Neural Network (LSTM-GRNN).

==== Baselines ====

[[File:SA Table1.png]]

'''Table 1''': Test accuracy [%] on sentiment datasets. fastText has been run with the same parameters for all the datasets. It has 10 hidden units and we evaluate it with and without bigrams. Note that (Zhang, X., Zhao, J., and LeCun, Y. 2015) is shortened to (Zhang et al., 2015).

The table above shows that adding bigrams to fastText improves performance on all datasets by 1-4%. Compared with the convolutional networks, fastText performs better than char-CNN but slightly worse than VDCNN. The authors note that adding more n-grams can increase accuracy slightly further: for example, with trigrams the accuracy on the Sogou dataset rises to 97.1%, which is 0.3 percentage points higher than the accuracy reported for VDCNN.

==== Comparison with (Tang et al., 2015) based on recurrent networks ====

[[File:SA Table2.png]]

'''Table 2''': Comparison with (Tang et al., 2015). The hyper-parameters were chosen on the validation set.

The hyper-parameters were tuned on the validation set, and using n-grams up to 5 gave the best performance. These results show that fastText is competitive with the methods discussed in (Tang et al., 2015).

==== Training Time ====

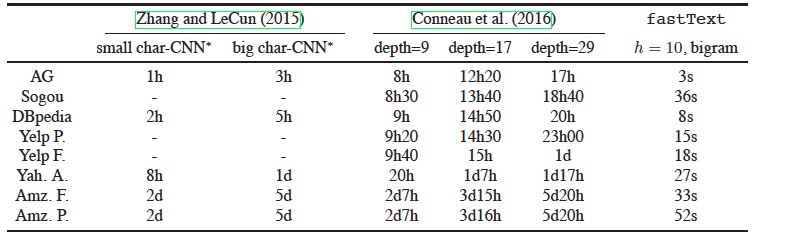

[[File:SA Table3.png]]

'''Table 3''': Training time on sentiment analysis datasets compared to char-CNN and VDCNN. We report the overall training time, except for char-CNN where we report the time per epoch.

One of the proposed benefits of this method is its speed: it was claimed to be faster than other methods based on neural networks, so fastText was compared with the two convolutional networks, char-CNN and VDCNN, in terms of training time. Both convolutional networks were trained on an NVIDIA Tesla K40 GPU, while fastText was trained on a CPU with 20 threads. Note that only the char-CNN time is reported per epoch. fastText trains many times faster on these datasets. Although there are ways to speed up the convolutional networks, they do not come close to fastText, which takes less than a minute to train on these large datasets.

These results suggest that fastText can closely match the accuracy of neural network methods at a much lower computational cost.

=== Tag prediction ===

==== Dataset ====

Scalability to large datasets is an important property of a model. To test it, fastText was evaluated on the YFCC100M dataset, which consists of approximately 100 million images, each with captions, titles and tags. The goal was to use the fastText text classifier to predict the tags associated with each image without using the image itself, relying instead on the information associated with it, such as its title and caption.

The methodology for this classification problem was to remove the words and tags that occur fewer than 100 times and split the data into a train, validation and test set. The train set consists of approximately 90% of the dataset, the validation set of approximately 1%, and the test set of 0.5%. Table 4 shows 5 items from the validation set with their inputs (title and caption), the prediction (the tag class they are classified to) and their tags (the real image tags); the predicted class is highlighted in bold when it is in fact one of the tags.

{| class="wikitable"
|-
! Input
! Prediction
! Tags
|-
| taiyoucon 2011 digitals: individuals digital photos from the anime convention taiyoucon 2011 in mesa, arizona. if you know the model and/or the character, please comment.
| #cosplay
| #24mm #anime #animeconvention #arizona #canon #con #convention #cos '''#cosplay''' #costume #mesa #play #taiyou #taiyoucon
|-
| 2012 twin cities pride 2012 twin cities pride parade
| #minneapolis
| #2012twincitiesprideparade '''#minneapolis''' #mn #usa
|-
| beagle enjoys the snowfall
| #snow
| #2007 #beagle #hillsboro #january #maddison #maddy #oregon '''#snow'''
|-
| christmas
| #christmas
| #cameraphone #mobile
|-
| euclid avenue
| #newyorkcity
| #cleveland #euclidavenue
|}

Removing infrequent tags and words eliminates some noise and helps the model learn better. A [https://github.com/facebookresearch/fastText script] describing how the data was split has been released. After cleaning, the vocabulary size is 297,141 and there are 312,116 unique tags.

==== Baselines ====

A frequency-based approach which simply predicts the most frequent tag is used as a baseline.

fastText is compared in tag prediction with [http://www.aclweb.org/anthology/D14-1194 Tagspace], a tag prediction model similar to fastText but based on [http://www.thespermwhale.com/jaseweston/papers/wsabie-ijcai.pdf WSABIE], which jointly embeds images and their text annotations to predict tags. The Tagspace model is a convolutional neural network that predicts hashtags in the semantic context of social network posts. Although Tagspace is described using convolutions, the experiment uses a linear version of the model, which is faster and achieves comparable performance. Tagspace also predicts multiple hashtags, so this experiment does not fully compare the capabilities of the Tagspace model.

==== Results ====

{| class="wikitable"
|-
! Model
! prec@1
! Training time
! Test time
|-
| Freq. baseline
| 2.2
| -
| -
|-
| Tagspace, h = 50
| 30.1
| 3h8
| 6h
|-
| Tagspace, h = 200
| 35.6
| 5h32
| 15h
|-
| fastText, h = 50
| 30.8
| 6m40
| 48s
|-
| fastText, h = 50, bigram
| 35.6
| 7m47
| 50s
|-
| fastText, h = 200
| 40.7
| 10m34
| 1m29
|-
| fastText, h = 200, bigram
| 45.1
| 13m38
| 1m37
|}

The table above compares fastText with the baselines. The precision-at-one (prec@1) metric reports the proportion of items for which the highest-ranked tag predicted by the model is in fact one of the item's real tags.
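As a small illustration, precision-at-one can be computed over the five validation examples listed in the Dataset section, with the predictions and tags copied from that table. `precision_at_1` is a hypothetical helper that takes the top-ranked prediction for each item.

```python
def precision_at_1(top_predictions, true_tags):
    """Fraction of items whose highest-ranked predicted tag is among the item's real tags."""
    hits = sum(1 for pred, tags in zip(top_predictions, true_tags) if pred in tags)
    return hits / len(top_predictions)


predictions = ["#cosplay", "#minneapolis", "#snow", "#christmas", "#newyorkcity"]
tags = [
    {"#24mm", "#anime", "#animeconvention", "#arizona", "#canon", "#con", "#convention",
     "#cos", "#cosplay", "#costume", "#mesa", "#play", "#taiyou", "#taiyoucon"},
    {"#2012twincitiesprideparade", "#minneapolis", "#mn", "#usa"},
    {"#2007", "#beagle", "#hillsboro", "#january", "#maddison", "#maddy", "#oregon", "#snow"},
    {"#cameraphone", "#mobile"},
    {"#cleveland", "#euclidavenue"},
]
print(precision_at_1(predictions, tags))  # 0.6
```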

The frequency baseline has the lowest accuracy. fastText is run for 5 epochs and compared with the Tagspace results, with and without bigrams, using 50 and 200 hidden units. With 50 hidden units, fastText without bigrams and Tagspace perform similarly, while fastText with bigrams and 50 hidden units matches Tagspace with 200 hidden units. With 200 hidden units fastText performs better still, and adding bigrams improves its accuracy further.

Finally, fastText is significantly faster than Tagspace at both training and test time. At test time, Tagspace must compute the scores for every class, whereas fastText's fast inference gives it an advantage when the number of classes is large. The difference is largest at test time: for example, with 200 hidden units, fastText with bigrams takes 1m37, compared with 15h for Tagspace.

== Conclusion ==

The authors propose a simple baseline method for text classification that performs well on large-scale datasets. Word features are averaged together to form a sentence representation, which is then fed to a linear classifier. The authors test fastText against other models for sentiment analysis, using an evaluation protocol similar to Zhang et al. (2015), and then evaluate its ability to scale to a large output space on a tag prediction dataset. fastText achieved comparable accuracy and was found to train significantly faster.

== Commentary and Criticism ==

=== fastText and baseline models ===

The performance of fastText was compared to several text classification baselines. While the paper focuses on the ways fastText outperforms these classifiers, the baselines were not always evaluated at their optimal performance.

* '''Tagspace''' was used as a comparison classifier in a tag prediction problem. However, this model was designed to predict hashtags using semantic embeddings, rather than image tags as in the experiment. Additionally, only a linear version of Tagspace was used for comparison.

== Sources ==

Chen, W., Grangier, D., & Auli, M. (2016). Strategies for Training Large Vocabulary Neural Language Models. Proceedings of the 54th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers). doi:10.18653/v1/p16-1186

Conneau, A., Schwenk, H., Barrault, L., and LeCun, Y. (2016). Very deep convolutional networks for natural language processing. arXiv preprint arXiv:1606.01781.

Huffman coding. (n.d.). Retrieved March 23, 2018, from http://homes.sice.indiana.edu/yye/lab/teaching/spring2014-C343/huffman.php

Joulin, A., Grave, E., Bojanowski, P., & Mikolov, T. (2016). Bag of Tricks for Efficient Text Classification. ''Proceedings of the 15th Conference of the European Chapter of the Association for Computational Linguistics: Volume 2, Short Papers''. doi:10.18653/v1/e17-2068 | |||

Norvig, P. Inference in text understanding. In AAAI, pp. 561–565, 1987. | |||

Rong, X. (2016). Word2vec Parameter Learning Explained. doi:arXiv:1411.2738v4 | |||

Weston, J., Bengio, S., Usunier, N. (2011). Wsabie: Scaling Up To Large Vocabulary Image Annotation. ''Proceedings of the International Joint Conference on Artificial Intelligence, IJCAI (2011)''. url: http://www.thespermwhale.com/jaseweston/papers/wsabie-ijcai.pdf | |||

Weston, J., Chopra, S., & Adams, K. (2014). #TagSpace: Semantic Embeddings from Hashtags. ''Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP)''. doi:10.3115/v1/d14-1194 | |||

Xiao, Y. and Cho, K. (2016). Efficient character-level document classification by combining convolution and recurrent layers. arXiv preprint arXiv:1602.00367. | |||

Zhang, X. and LeCun, Y. (2015). Text understanding from scratch. arXiv preprint arXiv:1502.01710. | |||

Zhang, X., Zhao, J., and LeCun, Y. (2015). Character-level convolutional networks for text classification. In NIPS. | |||

Kilian Weinberger; Anirban Dasgupta; John Langford; Alex Smola; Josh Attenberg (2009). Feature Hashing for Large Scale Multitask Learning. Proc. ICML. | |||

== Further Reading ==

* [https://fasttext.cc/ fastText official website] - Resources for using fastText

* [https://research.fb.com/fasttext/ Facebook Research: fastText] - Overview of fastText released by Facebook Research.

* [https://github.com/facebookresearch/fastText fastText GitHub]

* [https://wiki.math.uwaterloo.ca/statwiki/index.php?title=stat441w18/A_New_Method_of_Region_Embedding_for_Text_Classification A New Method of Region Embedding for Text Classification (Summary)] - Paper summary describing a method of preserving local structure information with small text regions for text classification tasks.

* [https://wiki.math.uwaterloo.ca/statwiki/index.php?title=stat441w18/Convolutional_Neural_Networks_for_Sentence_Classification Convolutional Neural Networks for Sentence Classification (Summary)] - Paper summary describing four variations of Convolutional Neural Networks applied to several NLP tasks, such as sentiment analysis, customer review prediction, movie reviews, and more.

Latest revision as of 21:32, 27 March 2018

Introduction and Motivation

Millions of web users rely on text classification every day. Web search and content ranking are examples: when a user searches for a word that best describes the content they are looking for, text classification helps categorize the relevant content.

Neural networks have recently been applied to text classification and have performed very well. However, they are slow at both training and test time, which limits their use on very large datasets. The motivation for this paper is to determine whether a simpler text classifier, which is inexpensive to train and evaluate, can approximate the performance of these more complex neural networks.

The authors suggest that linear classifiers are very effective if the right features are used. Because linear classifiers are simple, these models can scale to very large datasets while keeping their good performance.

The analysis in this paper applies the fastText classifier to two tasks, tag prediction and sentiment analysis, and compares its performance and efficiency with other text classifiers. The paper claims that this method “can train on a billion words within ten minutes, while achieving performance on par with the state of the art.”

Background

Natural-Language Processing and Text Classification

Natural Language Processing (NLP) is concerned with processing large amounts of natural language data, and it covers speech recognition, natural language understanding, and natural-language generation. Text understanding involves grasping the explicit or implicit meaning of elements of text, such as words, phrases, sentences, and paragraphs, and making inferences about these properties of texts (Norvig, 1987). One of the main topics in NLP is text classification, which assigns predefined categories to free-text documents. Research in this field ranges from designing the best features to choosing the best machine learning classifiers (Zhang et al. 2015). Traditionally, text classification techniques rely on simple word statistics, such as bag-of-words and n-gram features, combined with linear classifiers.

Deep learning has advanced and large datasets have become available, so deep learning methods for text understanding have become popular in recent years. Several studies have shown that these models perform significantly better than traditional models. The following deep learning models are compared with fastText in the Experiment section:

Char-CNN

A ConvNet model takes a sequence of one-hot vectors as input. The one-hot vector of the i-th symbol in an alphabet is a binary vector whose elements are all zero except the i-th element, which is set to one. The model in (Zhang and Lecun, 2015) is composed of many convolutional and max-pooling layers, and the max-pooling allowed them to train deeper models. Each layer first extracts features from small, overlapping windows of the input sequence. It then pools over small, non-overlapping windows by taking the maximum activation in each window. This is repeated many times. The final convolutional layer’s activations are flattened into a vector. That vector is fed into a small number of fully connected layers, followed by the classification layer.

An illustration of the Char-CNN model used in (Zhang and Lecun, 2015).

Char-CRNN

A character-level convolutional recurrent neural network (CRNN) is a convolutional neural network with a recurrent layer on top. Many layers of convolutions can capture long-term dependencies, but the network becomes very deep once a document's character sequence grows to hundreds or thousands of characters (Xiao and Cho, 2016). A recurrent layer captures long-term dependencies efficiently and needs only a small number of convolutional layers below it. However, the recurrent layer is computationally expensive.

Graphical illustration of (a) a CNN and (b) proposed CRNN in (Xiao and Cho, 2016) for character-level document classification

VDCNN

The authors of (Conneau et al., 2016) argue that future research should invest in making text processing models deeper, and they propose a Very Deep Convolutional Neural Network (VDCNN). Their model operates on low-level atomic representations of text (i.e. characters) and uses a deep stack of convolutions. Using up to 29 layers, they show that accuracy typically increases as the model gets deeper.

Tagspace

Tagspace is a convolutional neural network that aims to predict hashtags in social network posts. In this context, people use hashtags in many ways: as identifiers, sentiments, topic annotations, and more. Tagspace uses "embeddings", which are vector representations of text. It combines these with a function that produces a point in the embedding space. Because Tagspace also captures the semantic context of hashtags, it is a strong baseline for NLP tasks. Tagspace was also applied to a document recommendation problem, where it predicted the next item a user would interact with from their previous history.

Model

Model Architecture of fastText

Traditionally, text classification methods were centered around linear classifiers. A linear classifier does not share parameters among features and classes. This can limit its ability to generalize in a large output space where some classes have very few examples. The authors of this paper attempt to improve the performance of linear classifiers with two key features: a rank constraint and a fast loss approximation. Before getting into those, we must better understand how linear classifiers are trained.

Consider each text and each label as a vector in space. Training adjusts the coordinates of the text vector so that it lies close to the vector of its associated label. The dot product of the text vector and a label vector gives a score. The softmax function normalizes this score against the scores of the same text with every other possible label, and the result is the probability that the text has that label. Stochastic gradient descent then repeatedly updates the coordinates to maximize the probability of the correct label for every text. This is computationally expensive, since the score for every possible label in the training set must be computed for each text.

With K labels in the training set, the softmax function gives the probability that a text is associated with label j as:

[math]\displaystyle{ P(y=j | \mathbf{x}) = \dfrac{e^{\mathbf{x}^T \mathbf{w}_j}}{\sum_{k=1}^K e^{\mathbf{x}^T \mathbf{w}_k}} }[/math] ,where x represents the text vector and w represents the label vector.
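The following is a minimal sketch of the formula above in plain Python. The vectors `x` and `W` are made-up values, not taken from the paper. Subtracting the largest score before exponentiating is a standard numerical-stability step and does not change the probabilities.

```python
import math

def softmax_probs(x, label_vectors):
    """Return P(y = j | x) for every label j, given a text vector x and label vectors w_j."""
    # K dot products x^T w_k of length d: this is the O(K d) cost of the full softmax
    scores = [sum(a * b for a, b in zip(x, w)) for w in label_vectors]
    # Subtracting the largest score avoids overflow in exp() and leaves the result unchanged
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)  # the normalizing denominator
    return [e / total for e in exps]

# Hypothetical 2-dimensional text vector and K = 3 label vectors
x = [1.0, 2.0]
W = [[0.5, 0.1], [0.2, 0.4], [-0.3, 0.0]]
probs = softmax_probs(x, W)
best_label = probs.index(max(probs))  # label 1, whose score x^T w_1 = 1.0 is the largest
```

Every call evaluates all K label scores, which is why the cost grows linearly with the number of classes.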

Now let’s look at the improved method of linear classifiers with a rank constraint and fast loss approximation. Refer to the image below.

Using the weight matrices, the model first looks up the n-gram features of the input to obtain word representations. It then averages these into a hidden text representation, which is fed to a linear classifier. Finally, the softmax function computes the probability distribution over the predefined classes. For a set of N documents, the model minimizes the negative log-likelihood over the classes. The classifier is trained on multiple CPUs using stochastic gradient descent with a linearly decaying learning rate.

This model architecture applies two changes. The first is the hierarchical softmax, which improves performance when there are many classes. The second is the hashing trick, which efficiently maps the n-gram features used to capture local word order. Both are explained in more detail in the following sections.
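The pipeline described above can be sketched as follows. This is a simplified, hypothetical illustration, not the authors' implementation. It makes three simplifications:

- it stores the embedding matrix `A` with one row per n-gram feature (a layout assumption);
- it uses a full softmax instead of the hierarchical softmax;
- it omits the SGD training loop.

The paper writes the objective as [math]\displaystyle{ -\frac{1}{N}\sum_{n=1}^{N} y_n \log(f(BAx_n)) }[/math]. Here [math]x_n[/math] is the normalized bag of features of the n-th document, [math]y_n[/math] is its label, [math]A[/math] and [math]B[/math] are the weight matrices, and [math]f[/math] is the softmax function.

```python
import math

def fasttext_forward(feature_ids, A, B):
    """Class probabilities f(B A x) for one document, given the ids of its n-gram features."""
    d = len(A[0])
    # Look up each feature's embedding (one row of A) and average them: the hidden text representation
    hidden = [0.0] * d
    for idx in feature_ids:
        for k in range(d):
            hidden[k] += A[idx][k]
    hidden = [v / len(feature_ids) for v in hidden]
    # Linear classifier: one score per class (one row of B)
    scores = [sum(b * hv for b, hv in zip(row, hidden)) for row in B]
    # Softmax over the classes (a full softmax here, not the hierarchical one)
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    z = sum(exps)
    return [e / z for e in exps]

def negative_log_likelihood(docs, labels, A, B):
    """Average of -log P(correct class) over the N documents."""
    total = 0.0
    for feature_ids, y in zip(docs, labels):
        total -= math.log(fasttext_forward(feature_ids, A, B)[y])
    return total / len(docs)

# Hypothetical toy model: 4 features embedded in d = 2 dimensions, 2 classes
A = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.0, 0.0]]
B = [[1.0, 0.0], [0.0, 1.0]]
probs = fasttext_forward([0, 2], A, B)  # hidden = (1.0, 0.5), scores = (1.0, 0.5)
loss = negative_log_likelihood([[0, 2], [1, 3]], [0, 1], A, B)
```

Averaging the looked-up rows is the same as multiplying the embedding matrix by a bag-of-features vector normalized to sum to one. This is why the representation cost does not depend on the number of classes.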

Softmax and Hierarchical Softmax

As mentioned above, the softmax function is used to compute the probability distribution over the predefined classes. It computes the probability that [math]\displaystyle{ y_n }[/math] belongs to each possible class. The denominator normalizes these probabilities so that they sum to one across all labels. The class with the highest probability can then be chosen as the label for [math]\displaystyle{ y_n }[/math].

However, the softmax function has a computational complexity of [math]\displaystyle{ O(Kd) }[/math], where [math]\displaystyle{ K }[/math] is the number of classes and [math]\displaystyle{ d }[/math] is the dimension of the hidden layer of the model. This is because each evaluation requires normalizing the probabilities over all possible classes. This runtime is not ideal when the number of classes is large, so a hierarchical softmax is used instead. The following set-up for the hierarchical softmax shows where its computational savings come from.

Suppose we have a binary tree for the softmax function, based on Huffman coding, where each node has at most two children. Huffman coding optimizes the tree by placing the lowest-frequency classes deeper in the tree and the highest-frequency classes near the root. This minimizes the path length for the most frequently labelled classes. With a Huffman tree, the computational runtime is reduced to [math]\displaystyle{ O(d \log_2(K)) }[/math].
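The following small sketch uses Python's `heapq` to show how the Huffman construction places frequent classes near the root. The tag frequencies are invented for the example, and only the tag names are borrowed from Table 4. The function computes only each class's depth; it does not build the full tree with inner-node vectors.

```python
import heapq
import itertools

def huffman_depths(freqs):
    """Depth of each class leaf in a Huffman tree built from class frequencies."""
    tie = itertools.count()  # tie-breaker so the heap never has to compare lists
    # Heap entries: (subtree frequency, tie-breaker, classes in that subtree)
    heap = [(f, next(tie), [c]) for c, f in freqs.items()]
    heapq.heapify(heap)
    depths = {c: 0 for c in freqs}
    while len(heap) > 1:
        # Merge the two least frequent subtrees under a new inner node
        f1, _, left = heapq.heappop(heap)
        f2, _, right = heapq.heappop(heap)
        for c in left + right:
            depths[c] += 1  # every class in the merged subtrees moves one level deeper
        heapq.heappush(heap, (f1 + f2, next(tie), left + right))
    return depths

# Invented tag frequencies (tag names taken from Table 4)
freqs = {"snow": 50, "christmas": 20, "cosplay": 15, "minneapolis": 10, "newyorkcity": 5}
depths = huffman_depths(freqs)
avg_depth = sum(freqs[c] * depths[c] for c in freqs) / sum(freqs.values())
```

With these frequencies, the most frequent tag sits at depth 1 and the two rarest at depth 4. The frequency-weighted average depth is 1.95, which is below the log2(5) ≈ 2.32 of a perfectly balanced tree.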

A probability is calculated for each branch, that is, for travelling left or right from a node. This is done by applying the sigmoid function to the product of the output vector [math]\displaystyle{ v_{n_i} }[/math] of each inner node [math]\displaystyle{ n_i }[/math] and the output of the hidden layer of the model, [math]\displaystyle{ h }[/math]. The output classes are represented as the leaves of this tree. A random walk from the root then assigns each class a probability based on the path taken. The probability of a certain class is then calculated as:

[math]\displaystyle{ P(n_{l+1}) = \prod_{i=1}^{l} \sigma\left( s_i \, v_{n_i}^T h \right) }[/math], with [math]\displaystyle{ s_i = 1 }[/math] if the path goes left at [math]\displaystyle{ n_i }[/math] and [math]\displaystyle{ s_i = -1 }[/math] if it goes right,

where [math]\displaystyle{ n_{l+1} }[/math] is the leaf node on which the class is located, at depth [math]\displaystyle{ l+1 }[/math], and [math]\displaystyle{ n_1, n_2, …, n_l }[/math] are the nodes on the path from the root to that leaf. Because [math]\displaystyle{ \sigma(-x) = 1 - \sigma(x) }[/math], the left and right branch probabilities at each node sum to one.

In the below figure, we can see an example of a binary tree for the hierarchical softmax model. An example path from root node [math]\displaystyle{ n_1 }[/math] to label 2 is highlighted in blue. In this case we can see that each path has an associated probability calculation and the total probability of label 2 is in line with the class probability calculation above.

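Note that during training, the vector representations of the inner nodes, [math]\displaystyle{ v_{n_i} }[/math], are updated through an error function, [math]\displaystyle{ Err }[/math], defined as the negative log-probability of the target class. Differentiating this error function gives:

[math]\displaystyle{ \frac{\partial Err}{\partial v_{n_i}} = \left( \sigma(v_{n_i}^T h) - t_i \right) h }[/math], where [math]\displaystyle{ t_i = 1 }[/math] if the path to the target class goes left at [math]\displaystyle{ n_i }[/math] and [math]\displaystyle{ t_i = 0 }[/math] otherwise.

We can use this gradient to update the vector values as follows, where [math]\displaystyle{ \eta }[/math] is the learning rate:

[math]\displaystyle{ v_{n_i}^{(new)} = v_{n_i}^{(old)} - \eta \left( \sigma(v_{n_i}^T h) - t_i \right) h }[/math]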
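The following is a minimal sketch of the class probability and the gradient update for the hierarchical softmax. It assumes each class is described by its root-to-leaf path, given as a list of (inner node, goes-left) pairs. The tree, vectors, and learning rate are hypothetical. Only the inner-node vectors on one path are touched, which is where the roughly O(d log2(K)) cost per example comes from.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def class_probability(h, path, inner_vectors):
    """Product of branch probabilities along the class's root-to-leaf path."""
    p = 1.0
    for node, goes_left in path:
        s = sigmoid(dot(inner_vectors[node], h))  # probability of going left at this node
        p *= s if goes_left else 1.0 - s
    return p

def update_path(h, path, inner_vectors, lr):
    """One SGD step on Err = -log P(class), touching only the inner nodes on the path."""
    for node, goes_left in path:
        v = inner_vectors[node]
        t = 1.0 if goes_left else 0.0
        g = sigmoid(dot(v, h)) - t  # dErr / d(v^T h) for this node
        inner_vectors[node] = [vi - lr * g * hi for vi, hi in zip(v, h)]

# Hypothetical balanced tree: 3 inner nodes (0 = root) and 4 classes at the leaves
paths = {
    0: [(0, True), (1, True)],
    1: [(0, True), (1, False)],
    2: [(0, False), (2, True)],
    3: [(0, False), (2, False)],
}
inner = {0: [0.1, -0.2], 1: [0.3, 0.0], 2: [-0.1, 0.4]}
h = [1.0, 0.5]  # hidden-layer output for one hypothetical document
total = sum(class_probability(h, p, inner) for p in paths.values())  # 1 up to rounding
before = class_probability(h, paths[2], inner)
update_path(h, paths[2], inner, lr=0.1)
after = class_probability(h, paths[2], inner)  # larger than before
```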

N-Gram, Bag of Words, and TFIDF

Bag of Words

Bag of words is an algorithm that simplifies a text dataset by counting how many times each word appears in a document. The n most frequent words are extracted from the training subset and used as the “dictionary” for the testing set. This dictionary lets us compare documents for document classification and topic modeling, and it is one of the methods the authors use to prepare text as input. Each word-count vector is normalized so that its elements add up to one, which gives each word's frequency as a proportion. These normalized frequencies are the input features that drive the classification.

The main weakness of bag of words is that it loses information, because it treats each word on its own and ignores word order. We will demonstrate this shortly. Bag of words also has a high error rate if the training set does not include the full vocabulary of the testing set.
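Here is a minimal bag-of-words sketch. It assumes whitespace tokenization and lowercasing (neither is specified above) and uses the three example sentences that appear later in this section. Notice that "sentence", which falls outside the 3-word dictionary, is simply ignored, and that word order is lost.

```python
from collections import Counter

def build_dictionary(train_docs, n):
    """Keep the n most frequent words of the training documents."""
    counts = Counter(word for doc in train_docs for word in doc.lower().split())
    return [word for word, _ in counts.most_common(n)]

def bow_vector(doc, dictionary):
    """Word counts over the dictionary, normalized so that the entries sum to one."""
    counts = Counter(doc.lower().split())
    vec = [counts[word] for word in dictionary]
    total = sum(vec)
    return [c / total for c in vec] if total else vec

train_docs = ["I love apple", "apple love I", "I love sentence"]
dictionary = build_dictionary(train_docs, 3)  # ["i", "love", "apple"]
a = bow_vector("I love apple", dictionary)
b = bow_vector("apple love I", dictionary)    # identical to a: word order is lost
c = bow_vector("I love sentence", dictionary) # "sentence" is not in the dictionary
```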

N-Gram

N-gram is another model for simplifying text representation. For each word, it also stores the words adjacent to it (n-grams can also be built from characters). Each word in the document is read one at a time, as in bag of words, but a certain range of its neighbors is scanned as well. The size of this range gives the n in n-gram. A unigram (N = 1) is equivalent to bag of words, and any N greater than 1 captures more information than bag of words.

The picture above gives an example of a unigram (1-gram), the simplest possible version of this model. A unigram model does not consider previous words and simply chooses words based on how common they are in general. The picture also shows a bigram (2-gram), where the previous word is considered. A trigram considers the previous two words, and so on: in general, an N-gram considers the N-1 previous words.
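The following short sketch shows how word-level and character-level n-grams can be extracted from a token sequence. Whitespace tokenization is an assumption, and the sentence is the example used below.

```python
def ngrams(tokens, n):
    """All contiguous n-grams (as tuples) of a sequence of tokens."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]

words = "How long can this go on ?".split()
word_bigrams = ngrams(words, 2)  # ("How", "long"), ("long", "can"), ...
# Character n-grams: treat each character as a token and join each n-gram back into a string
char_trigrams = ["".join(g) for g in ngrams(list("apple"), 3)]  # ["app", "ppl", "ple"]
```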

Let a sentence be denoted as a sequence of words 1 to n, [math]\displaystyle{ \omega_1^n = \omega_1 \cdots \omega_n }[/math]. By the chain rule of probability, the probability of the word sequence is [math]\displaystyle{ P(\omega_1^n) =P( \omega_1) P( \omega_2 | \omega_1) P( \omega_3 | \omega_1^2) \cdots P( \omega_n | \omega_1^{n-1}) }[/math]. A bigram model approximates each factor by conditioning only on the previous word. For example, take the sentence "How long can this go on?" We can model it as follows:

P(“How long can this go on?”) = P(How)P(long | How)P(can | long)P(this | can)P(go | this)P(on | go)P(? | on)

Applying this approximation to the chain rule, the probability in the bigram case reduces to the product of the conditional probabilities:

[math]\displaystyle{ P(\omega_1^n) = \prod_{k=1}^n P(\omega_k | \omega_{k-1} ) }[/math].

We can generalize this to the N-gram case, which conditions on the previous N-1 words:

[math]\displaystyle{ P(\omega_1^n) = \prod_{k=1}^n P(\omega_k | \omega_{k-(N-1)}^{k-1} ) }[/math].
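The conditional probabilities above have to be estimated from data. A common choice, assumed here rather than taken from the paper, is the maximum-likelihood estimate from counts: [math]\displaystyle{ P(\omega_k | \omega_{k-1}) = c(\omega_{k-1}\omega_k) / c(\omega_{k-1}) }[/math]. The sketch below uses a hypothetical start token `<s>` to play the role of [math]\omega_0[/math].

```python
from collections import Counter

def train_bigram(sentences):
    """Count contexts and bigrams for MLE estimates P(w_k | w_{k-1}) = c(w_{k-1} w_k) / c(w_{k-1})."""
    context, bigram = Counter(), Counter()
    for s in sentences:
        tokens = ["<s>"] + s.split()                 # <s> marks the sentence start (w_0)
        context.update(tokens[:-1])                  # every word that is followed by another
        bigram.update(zip(tokens[:-1], tokens[1:]))
    return context, bigram

def sentence_prob(sentence, context, bigram):
    """Product over k of P(w_k | w_{k-1}); 0.0 as soon as a bigram was never seen."""
    tokens = ["<s>"] + sentence.split()
    p = 1.0
    for prev, word in zip(tokens[:-1], tokens[1:]):
        if bigram[(prev, word)] == 0:
            return 0.0
        p *= bigram[(prev, word)] / context[prev]
    return p

context, bigram = train_bigram(["I love apple", "I love sentence"])
p_seen = sentence_prob("I love apple", context, bigram)      # 1 * 1 * 1/2
p_unseen = sentence_prob("apple love I", context, bigram)    # "<s> apple" never observed
```

The zero probability given to the reordered sentence is one reason why practical n-gram models add smoothing.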

One weakness of n-grams is that local context often provides no useful predictive clues. For example, suppose you want the model to learn the plural agreement in the following sentences:

The woman who lives on the fifth floor of the apartment is pretty.

The women who live on the fifth floor of the apartment are pretty.

Linking "woman" to "is" would require an 11-gram, which is infeasible on ordinary machines. This leads to the next problem: as N increases, the predictive power of the model increases, but the number of parameters required grows exponentially with the number of words of prior context.

BoW, Unigram, Bigram Example

Consider the following three sentences:

A = “I love apple”

B = “apple love I”

C = “I love sentence”

Their unigram (bag-of-words) counts are:

| Unigram | A | B | C |
|---|---|---|---|
| I | 1 | 1 | 1 |
| love | 1 | 1 | 1 |
| apple | 1 | 1 | 0 |
| sentence | 0 | 0 | 1 |

Notice that A and B have exactly the same vector. Unigrams behave just like bag of words: word order does not matter, which is the weakness mentioned above.

With bigrams, the counts become:

| Bigram | A | B | C |
|---|---|---|---|
| I love | 1 | 0 | 1 |
| love apple | 1 | 0 | 0 |
| apple love | 0 | 1 | 0 |
| love I | 0 | 1 | 0 |
| love sentence | 0 | 0 | 1 |

Now A and B are distinct, because bigrams take one word of local context into account. However, A and C still share the bigram "I love". Increasing N further would make the two easier to tell apart. The cost of higher-order n-grams is a higher feature dimension and therefore a longer run time.
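The unigram and bigram tables above can be reproduced with a few lines of Python, assuming whitespace tokenization:

```python
def ngram_counts(sentence, n, vocabulary):
    """Count how often each n-gram in the vocabulary occurs in the sentence."""
    tokens = sentence.split()
    grams = [" ".join(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]
    return [grams.count(g) for g in vocabulary]

docs = {"A": "I love apple", "B": "apple love I", "C": "I love sentence"}
unigram_vocab = ["I", "love", "apple", "sentence"]
bigram_vocab = ["I love", "love apple", "apple love", "love I", "love sentence"]

unigrams = {name: ngram_counts(s, 1, unigram_vocab) for name, s in docs.items()}
bigrams = {name: ngram_counts(s, 2, bigram_vocab) for name, s in docs.items()}
# unigrams["A"] == unigrams["B"], but bigrams["A"] != bigrams["B"]
```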

Feature Hashing

As shown above, n-grams preserve some word-order information, but the dimension of the feature vector or matrix increases as N increases. Most word combinations rarely occur in practice, so the feature matrix becomes very sparse and computationally expensive. To address this problem, we introduce the hashing trick, also known as feature hashing.

Feature hashing, which can be used in sentence classification, maps features to indices of an array or matrix of fixed size by applying a hash function to them. The general idea is to map feature vectors from a high-dimensional space to a lower-dimensional one. This reduces the dimension of the input vector and therefore the cost of storing large vectors.

A hash function is any function that maps keys from an arbitrarily large set to a fixed range of values. For the hashing trick, it can generally be expressed as [math]\displaystyle{ h(n):\{1,...,N\} \rightarrow \{1,...,M\} }[/math]. A very simple hash function is the modulo operation. If we are mapping keys to a hash table with M slots, a simple hash function can be defined as

h(key) = key % M

Since the n-gram model usually uses word counts as features, the hashed feature map can be computed as

[math]\displaystyle{ \phi_i^{h}(x) = \underset{j:h(j)=i}{\sum} x_j }[/math], where [math]\displaystyle{ i }[/math] is the corresponding index of the hashed feature map.

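Take the bigram example above and hash each feature vector into a hash table of length 3, indexing the bigram features from 0 in table order ("I love" = 0, "love apple" = 1, "apple love" = 2, "love I" = 3, "love sentence" = 4). Using the simple hash function described above, the hashed feature map is now

| | index 0 | index 1 | index 2 |
|---|---|---|---|
| A | 1 | 1 | 0 |
| B | 1 | 0 | 1 |
| C | 1 | 1 | 0 |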

There are many choices of hash functions, but the general idea is to have a good hash function that distributes keys evenly across the hash table.

A hash collision happens when two distinct keys are mapped to the same index. In the example above, "I love" and "love I" are both mapped to index 0, and "love apple" and "love sentence" are both mapped to index 1.
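The sketch below computes the unsigned hashed feature map with h(j) = j % 3, indexing the bigram features from 0 in the order of the bigram table. The output shows the cost of collisions: after hashing, A and C become identical even though their bigram vectors differ.

```python
def hashed_features(x, m):
    """phi_i(x) = sum of x_j over all feature indices j with j % m == i."""
    phi = [0] * m
    for j, value in enumerate(x):
        phi[j % m] += value  # h(j) = j % m
    return phi

# Bigram counts, features indexed 0..4: "I love", "love apple", "apple love", "love I", "love sentence"
A = [1, 1, 0, 0, 0]
B = [0, 0, 1, 1, 0]
C = [1, 0, 0, 0, 1]
hashed = {name: hashed_features(x, 3) for name, x in (("A", A), ("B", B), ("C", C))}
```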

To obtain an unbiased estimate, Weinberger et al. (2009), whose hashing trick the paper uses, introduce a signed hash kernel. This adds a second hash function, [math]\displaystyle{ \xi(n):\{1,...,N\} \rightarrow \{-1, 1\} }[/math], which determines the sign of each feature's contribution. The hashed feature map [math]\displaystyle{ \phi }[/math] now becomes

[math]\displaystyle{ \phi_i^{h, \xi}(x) = \underset{j:h(j)=i}{\sum} \xi(j) \cdot x_j }[/math]

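Consider the following [math]\displaystyle{ \xi(n) }[/math] with M = 3 and features indexed from 0. It gives "love I" (index 3) and "love sentence" (index 4) a negative sign:

```python
M = 3  # size of the hash table

def xi(n):
    # Sign hash: feature indices n >= M get sign -1, all others get +1
    if n >= M:
        return -1
    return 1
```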

The hashed feature map now becomes

| | index 0 | index 1 | index 2 |
|---|---|---|---|
| A | 1 | 1 | 0 |
| B | -1 | 0 | 1 |
| C | 1 | -1 | 0 |

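Ideally, collisions will "cancel out". Averaged over random choices of the sign function, the signed hashed features give an unbiased estimate of inner products between the original feature vectors.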
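The sketch below estimates the inner product of the bigram vectors of A and C, to show what "unbiased" means here. It differs from the fixed modulo hash and sign function used above: on each trial, it draws a fresh random bucket h(j) and a random sign ξ(j) for every feature. This is an assumption made so that the expectation can be sampled.

- The exact inner product is 1.
- Averaged over trials, the signed estimate approaches 1, because [math]E[\xi(j)\xi(k)] = 0[/math] for [math]j \neq k[/math] removes the collision terms.
- By contrast, the unsigned modulo hash above maps both A and C to (1, 1, 0), giving 2.

```python
import random

def signed_hashed_features(x, h, xi, m):
    """phi_i(x) = sum over j with h[j] == i of xi[j] * x[j]."""
    phi = [0] * m
    for j, value in enumerate(x):
        phi[h[j]] += xi[j] * value
    return phi

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def average_hashed_dot(x, y, m, trials, seed=0):
    """Average <phi(x), phi(y)> over randomly drawn bucket and sign assignments."""
    rng = random.Random(seed)
    total = 0
    for _ in range(trials):
        h = [rng.randrange(m) for _ in x]        # random bucket for each feature
        xi = [rng.choice((-1, 1)) for _ in x]    # random sign for each feature
        total += dot(signed_hashed_features(x, h, xi, m),
                     signed_hashed_features(y, h, xi, m))
    return total / trials

A = [1, 1, 0, 0, 0]  # bigram counts of A
C = [1, 0, 0, 0, 1]  # bigram counts of C
exact = dot(A, C)    # 1
estimate = average_hashed_dot(A, C, 3, 20000)
```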

TF-IDF

Ordinary n-grams use word counts as features. Another way to represent the features is TF-IDF, short for term frequency–inverse document frequency. It measures how important a word is to a document.

Term frequency (TF) generally measures the number of times a word occurs in a document. Inverse document frequency (IDF) adjusts the term frequency so that a generally common word, for example "the", is not deemed important.

TFIDF is calculated as the product of term frequency and inverse document frequency, generally expressed as [math]\displaystyle{ \mathrm{tfidf}(t,d,D) = \mathrm{tf}(t,d) \cdot \mathrm{idf}(t, D) }[/math]

In this paper, TFIDF is calculated in the same way as Zhang et al., 2015, with

- [math]\displaystyle{ \mathrm{tf}(t,d) = f_{t,d} }[/math], where [math]\displaystyle{ f_{t,d} }[/math] is the raw count of [math]\displaystyle{ t }[/math] for document [math]\displaystyle{ d }[/math].

- [math]\displaystyle{ \mathrm{idf}(t, D) = \log(\frac{N}{| \{d\in D:t\in d \} |}) }[/math], where [math]\displaystyle{ N }[/math] is the total number of documents and [math]\displaystyle{ | \{d\in D:t\in d \} | }[/math] is the number of documents that contain word [math]\displaystyle{ t }[/math].
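The following sketch applies these two definitions to the example sentences A, B, and C from the bag-of-words section. The natural logarithm and whitespace tokenization are assumptions, and the function assumes the term occurs in at least one document.

```python
import math

def tfidf(term, doc, docs):
    """tf-idf with raw-count tf and idf = log(N / number of documents containing the term)."""
    tf = doc.split().count(term)                              # f_{t,d}
    containing = sum(1 for d in docs if term in d.split())   # |{d in D : t in d}|, assumed > 0
    return tf * math.log(len(docs) / containing)

docs = ["I love apple", "apple love I", "I love sentence"]  # sentences A, B, C
w_love = tfidf("love", docs[0], docs)          # in every document, so log(3/3) = 0
w_apple = tfidf("apple", docs[0], docs)        # log(3/2)
w_sentence = tfidf("sentence", docs[2], docs)  # log(3/1), the rarest word gets the largest weight
```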

Experiment

In this experiment, fastText was compared with various other text classifiers on two classification problems.

The first problem is sentiment analysis, where fastText is compared with existing text classifiers. The second evaluates fastText on a larger output space, using a tag prediction dataset.

The authors note that their model could also be implemented with the Vowpal Wabbit library, which is written in C++. However, their tailored implementation is at least 2-5× faster in practice.

Sentiment Analysis

Competing Models and fastText

The sentiment analysis experiment uses 8 datasets that are well suited to testing text classification. They range from news categorization to Yelp reviews.

There are 5 models in this comparison. From (Zhang, X., Zhao, J., and LeCun, Y. 2015) come the Bag of Words (BoW) model, as well as the N-grams and TFIDF models. The experiment also features two convolutional networks: the character-level convolutional network (char-CNN) from (Zhang and LeCun 2015) and the very deep convolutional network (VDCNN) from (Conneau et al. 2016).

The 5 models are compared with fastText, which is run with 10 hidden units and evaluated with and without bigrams. fastText is run for 5 epochs, with a learning rate selected on a validation set from {0.05, 0.1, 0.25, 0.5}. It has been run with the same parameters on all datasets.

The results cover the main baselines, plus a comparison with the recurrent-network methods discussed in (Zhang, X., Zhao, J., and LeCun, Y. 2015): the Convolutional Gated Recurrent Neural Network (conv-GRNN) and the Long Short-Term Memory Gated Recurrent Neural Network (LSTM-GRNN).

Baselines

Table 1: Test accuracy [%] on sentiment datasets. FastText has been run with the same parameters for all the datasets. It has 10 hidden units and we evaluate it with and without bigrams. Note (Zhang, X., Zhao, J., and LeCun, Y. 2015) is shortened to (Zhang et al., 2015)

The table above shows that adding bigrams to fastText improves its performance on all datasets by 1–4%. Compared with the two convolutional networks, fastText performs better than char-CNN but slightly worse than VDCNN. The authors note that adding more n-grams can increase accuracy slightly further. For example, with trigrams, fastText reaches 97.1% on the Sogou dataset, 0.3 percentage points higher than VDCNN.

Comparison with (Zhang et al., 2015) based on recurrent networks

Table 2: Comparison with (Zhang, X., Zhao, J., and LeCun, Y. 2015). The hyper-parameters were chosen on the validation set.

The hyperparameters were tuned on the validation set, and using n-grams up to 5 gave the best performance. These results show that fastText is competitive with the methods discussed in (Zhang, X., Zhao, J., and LeCun, Y. 2015).

Training Time

Table 3: Training time on sentiment analysis datasets compared to char-CNN and VDCNN. We report the overall training time, except for char-CNN where we report the time per epoch.

One of the claimed benefits of this method is its speed, since it was expected to be faster than neural network-based methods. fastText was therefore compared in training time with the two convolutional networks, char-CNN and VDCNN. Both convolutional networks were trained on an NVIDIA Tesla K40 GPU, while fastText was trained on a CPU using 20 threads. Note that only char-CNN's training time is reported per epoch. fastText is clearly many times faster at training on these datasets. There are ways to speed up the convolutional networks, but they still do not compare with fastText, which can train on these large datasets in less than a minute.

fastText can therefore closely match the accuracy of neural network methods at a small fraction of their computational cost.

Tag prediction

Dataset

Scalability to large datasets is an important feature of a model. To test it, the authors evaluated fastText on the YFCC100M dataset, which consists of approximately 100 million images, each with a caption, title, and tags. Here, the focus was on using the fastText text classifier to predict the tags of each image without using the image itself. Instead, the model relies on the information associated with the image, such as its title and caption.

The methodology for this classification problem was to remove words and tags that occur fewer than 100 times, then split the data into train, validation, and test sets. The train set contains approximately 90% of the dataset, the validation set approximately 1%, and the test set 0.5%. Table 4 shows 5 items from the validation set with their inputs (title and caption), predictions (the tag the model predicts), and tags (the real image tags). It highlights the cases where the predicted tag is in fact one of the real tags.

| Input | Prediction | Tags |

|---|---|---|

| taiyoucon 2011 digitals: individuals digital photos from the anime convention taiyoucon 2011 in mesa, arizona. if you know the model and/or the character, please comment. | #cosplay | #24mm #anime #animeconvention #arizona #canon #con #convention #cos #cosplay #costume #mesa #play #taiyou #taiyoucon |

| 2012 twin cities pride 2012 twin cities pride parade | #minneapolis | #2012twincitiesprideparade #minneapolis #mn #usa |

| beagle enjoys the snowfall | #snow | #2007 #beagle #hillsboro #january #maddison #maddy #oregon #snow |

| christmas | #christmas | #cameraphone #mobile |

| euclid avenue | #newyorkcity | #cleveland #euclidavenue |

Removing infrequent tags and words eliminates some noise and helps the model learn better. The authors released a script that reproduces this data split. After cleaning, the vocabulary size is 297,141 and there are 312,116 unique tags.

Baselines

A frequency-based approach which simply predicts the most frequent tag is used as a baseline.

In tag prediction, fastText is compared with Tagspace. Tagspace is similar to fastText but is based on Wsabie, which predicts tags from both images and their text annotations. Tagspace is a convolutional neural network that predicts hashtags from the semantic context of social network posts. The experiment uses a linear version of this model for speed and for a closer comparison. Because Tagspace also predicts multiple hashtags, this experiment does not fully test the capabilities of the Tagspace model.

Results

| Model | prec@1 | Running time - Train | Running time - Test |

|---|---|---|---|

| Freq. baseline | 2.2 | - | - |

| Tagspace, h = 50 | 30.1 | 3h8 | 6h |

| Tagspace, h = 200 | 35.6 | 5h32 | 15h |

| fastText, h = 50 | 30.8 | 6m40 | 48s |

| fastText, h = 50, bigram | 35.6 | 7m47 | 50s |

| fastText, h = 200 | 40.7 | 10m34 | 1m29 |

| fastText, h = 200, bigram | 45.1 | 13m38 | 1m37 |

The table above compares fastText with the baselines. The precision-at-one (prec@1) metric is the proportion of items for which the model's highest-ranked tag is in fact one of the item's real tags.

We can see that the frequency baseline has the lowest accuracy. fastText is run for 5 epochs and compared with Tagspace. fastText with bigrams is also evaluated, and the models are tested with 50 and 200 hidden units (h). With h = 50, fastText without bigrams and Tagspace perform similarly, while fastText with bigrams at h = 50 matches Tagspace at h = 200. At h = 200, fastText performs better still, and adding bigrams improves its accuracy further.

Finally, both the training and test times of fastText are significantly lower than those of Tagspace, which must compute the scores for every class. fastText, on the other hand, has fast inference, which gives it an advantage when the number of classes is large. The gap is largest at test time.
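Here is a minimal sketch of the prec@1 metric, applied to the five validation items of Table 4. The prediction column is treated as the model's top-ranked tag.

```python
def precision_at_one(top_predictions, true_tags):
    """Fraction of items whose top-ranked predicted tag is one of the item's real tags."""
    hits = sum(1 for pred, tags in zip(top_predictions, true_tags) if pred in tags)
    return hits / len(true_tags)

# The five validation items of Table 4: top prediction and set of real tags
top_predictions = ["#cosplay", "#minneapolis", "#snow", "#christmas", "#newyorkcity"]
true_tags = [
    {"#24mm", "#anime", "#animeconvention", "#arizona", "#canon", "#con", "#convention",
     "#cos", "#cosplay", "#costume", "#mesa", "#play", "#taiyou", "#taiyoucon"},
    {"#2012twincitiesprideparade", "#minneapolis", "#mn", "#usa"},
    {"#2007", "#beagle", "#hillsboro", "#january", "#maddison", "#maddy", "#oregon", "#snow"},
    {"#cameraphone", "#mobile"},
    {"#cleveland", "#euclidavenue"},
]
score = precision_at_one(top_predictions, true_tags)
```

Three of the five predictions (#cosplay, #minneapolis, #snow) appear among the real tags, so the score on these items is 0.6.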

Conclusion

The authors propose a simple baseline method for text classification that performs well on large-scale datasets. Word features are averaged together to form a sentence representation, which is then fed to a linear classifier. The authors test fastText against other models on sentiment analysis, using an evaluation protocol similar to Zhang et al. (2015). They then evaluate its ability to scale to a large output space on a tag prediction dataset. fastText achieved comparable accuracy and was found to train significantly faster.

Commentary and Criticism

fastText and baseline models

The performance of fastText was compared with several text classification baselines. The paper focuses on the ways fastText outperforms these classifiers, but the baseline classifiers were not always evaluated at their optimal performance.

- Tagspace was used as a comparison classifier in a tag prediction problem. However, this model was designed to predict hashtags using semantic embeddings, not image tags as in the experiment. In addition, only a linear version of Tagspace was used for comparison.

Sources

Chen, W., Grangier, D., & Auli, M. (2016). Strategies for Training Large Vocabulary Neural Language Models. Proceedings of the 54th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers). doi:10.18653/v1/p16-1186

Conneau, A., Schwenk, H., Barrault, L., & LeCun, Y. (2016). Very deep convolutional networks for natural language processing. arXiv preprint arXiv:1606.01781.

Huffman coding. (n.d.). Retrieved March 23, 2018, from http://homes.sice.indiana.edu/yye/lab/teaching/spring2014-C343/huffman.php

Joulin, A., Grave, E., Bojanowski, P., & Mikolov, T. (2016). Bag of Tricks for Efficient Text Classification. Proceedings of the 15th Conference of the European Chapter of the Association for Computational Linguistics: Volume 2, Short Papers. doi:10.18653/v1/e17-2068

Norvig, P. (1987). Inference in text understanding. In AAAI, pp. 561–565.

Rong, X. (2016). Word2vec Parameter Learning Explained. arXiv preprint arXiv:1411.2738v4.

Weinberger, K., Dasgupta, A., Langford, J., Smola, A., & Attenberg, J. (2009). Feature Hashing for Large Scale Multitask Learning. Proceedings of ICML.

Weston, J., Bengio, S., & Usunier, N. (2011). Wsabie: Scaling Up to Large Vocabulary Image Annotation. Proceedings of the International Joint Conference on Artificial Intelligence (IJCAI). url: http://www.thespermwhale.com/jaseweston/papers/wsabie-ijcai.pdf

Weston, J., Chopra, S., & Adams, K. (2014). #TagSpace: Semantic Embeddings from Hashtags. Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP). doi:10.3115/v1/d14-1194

Xiao, Y., & Cho, K. (2016). Efficient character-level document classification by combining convolution and recurrent layers. arXiv preprint arXiv:1602.00367.

Zhang, X., & LeCun, Y. (2015). Text understanding from scratch. arXiv preprint arXiv:1502.01710.

Zhang, X., Zhao, J., & LeCun, Y. (2015). Character-level convolutional networks for text classification. In NIPS.

Further Reading

- fastText official website - Resources for using fastText

- Facebook Research: fastText - Overview of fastText released by Facebook Research.

- A New Method of Region Embedding for Text Classification (Summary) - Paper summary describing a method of preserving local structure information with small text regions for text classification tasks.

- Convolutional Neural Networks for Sentence Classification (Summary) - Paper summary describing the application of four variants of Convolutional Neural Networks to several NLP tasks, such as sentiment analysis, customer review prediction, movie review classification, and more.