Conditional Image Synthesis with Auxiliary Classifier GANs


'''Abstract:''' "In this paper we introduce new methods for the improved training of generative adversarial networks (GANs) for image synthesis. We construct a variant of GANs employing label conditioning that results in 128×128 resolution image samples exhibiting global coherence. We expand on previous work for image quality assessment to provide two new analyses for assessing the discriminability and diversity of samples from class-conditional image synthesis models. These analyses demonstrate that high resolution samples provide class information not present in low resolution samples. Across 1000 ImageNet classes, 128×128 samples are more than twice as discriminable as artificially resized 32×32 samples. In addition, 84.7% of the classes have samples exhibiting diversity comparable to real ImageNet data." [[#References | (Odena et al., 2016)]]

Generative adversarial networks (GANs) are a class of artificial intelligence algorithms used in unsupervised machine learning, implemented by a system of two neural networks contesting with each other in a zero-sum game framework [17].

= Introduction =

=== Motivation ===

The authors introduce a GAN architecture for generating high resolution images from the ImageNet dataset. They show that this architecture makes it possible to split the generation process into many sub-models. They further suggest that GANs have trouble generating globally coherent images and that this architecture is responsible for the coherence of their samples. They demonstrate that adding more structure to the GAN latent space, along with a specialized cost function, results in higher quality samples, and that generating higher resolution images allows the model to encode more class-specific information, making them more visually discriminable than lower resolution images even after they have been resized to the same resolution.

The second half of the paper introduces metrics for assessing visual discriminability and diversity of synthesized images. The discussion of image diversity, in particular, is important because GANs tend to 'collapse', producing only the one image that best fools the discriminator [[#References|(Goodfellow et al., 2014)]].

=== Previous Work ===

Of all image synthesis methods (e.g. variational autoencoders, autoregressive models, invertible density estimators), GANs have become one of the most popular and successful due to their flexibility and the ease with which they can be sampled from. A standard GAN framework pits a generative model $G$ against a discriminative adversary $D$. The goal of $G$ is to learn a mapping from a latent space $Z$ to a real space $X$ to produce examples (generally images) indistinguishable from training data. The goal of $D$ is to iteratively learn to predict whether a given input image is from the training set or a synthesized image from $G$. Jointly, the models are trained to solve the game-theoretical minimax problem, as defined by [[#References|Goodfellow et al. (2014)]]: $$\underset{G}{\min}\,\underset{D}{\max}\,V(G,D)=\mathbb{E}_{X\sim p_{data}(x)}[\log D(X)]+\mathbb{E}_{Z\sim p_{Z}(z)}[\log(1-D(G(Z)))]$$

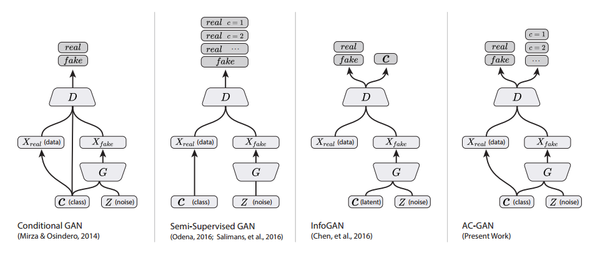

This approach can also be interpreted as two neural networks contesting with each other in a zero-sum game. While this initial framework has clearly demonstrated great potential, other authors have proposed changes to the method to improve it. Many such papers propose changes to the training process [[#References|(Salimans et al., 2016)]][[#References|(Karras et al., 2017)]], which is notoriously difficult for some problems. Others propose changes to the model itself. [[#References|Mirza & Osindero (2014)]] augment the model by supplying the class of observations to both the generator and discriminator to produce class-conditional samples. According to [[STAT946F17/Conditional Image Generation with PixelCNN Decoders|van den Oord et al. (2016)]], conditioning image generation on classes can greatly improve their quality. Other authors have explored using even richer side information in the generation process with good results [[Learning What and Where to Draw|(Reed et al., 2016)]]. A summary diagram of the difference in the architecture can be seen in the following figure.

[[FILE: ACGAN.png|center|600px]]

Another model modification relevant to this paper is to force the discriminator network to reconstruct side information by adding an auxiliary network to classify generated (and real) images. The authors claim that forcing a model to perform additional tasks is known to improve performance on the original task [[#References|(Szegedy et al., 2014)]][[#References|(Sutskever et al., 2014)]][[#References|(Ramsundar et al., 2016)]]. They further suggest that using pre-trained image classifiers (rather than classifiers trained on both real and generated images) could improve results over and above what is shown in this paper.

The authors propose an auxiliary classifier GAN (AC-GAN) which is a slight variation on previous architectures. Like [[#References|Mirza & Osindero (2014)]], the generator takes the image class to be generated as input in addition to the latent encoding $Z$. Like [[#References|Odena (2016)]] and [[#References|Salimans et al. (2016)]], the discriminator is trained to predict not only whether an observation is real or fake, but to classify each observation as well. The marginal contribution of this paper is to combine these in one model.

Formally, let $C\sim p_c$ represent the target class label of each generated observation and $Z$ represent the usual noise vector from the latent space. Then the generator function takes both as arguments to produce image samples: $X_{fake}=G(c,z)$. The discriminator gives a probability distribution over the source $S$ (real or fake) of the image as well as over the class label $C$ being generated: $$D(X)=\{P(S|X),P(C|X)\}$$

The objective function for the model thus has two parts, one corresponding to the source $L_S$ and the other to the class $L_C$. $D$ is trained to maximize $L_S + L_C$, while $G$ is trained to maximize $L_C-L_S$. Using the notation of [[#References|Goodfellow et al. (2014)]], the loss terms are defined as:

$$L_S=\mathbb{E}_{X\sim p_{data}(x)}[\log P(S=\mbox{real}|X)]+\mathbb{E}_{C,Z\sim p_{C,Z}(c,z)}[\log P(S=\mbox{fake}|G(C,Z))]$$

$$L_C=\mathbb{E}_{X\sim p_{data}(x)}[\log P(C=c|X)]+\mathbb{E}_{C,Z\sim p_{C,Z}(c,z)}[\log P(C=c|G(C,Z))]$$
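As a rough illustration of how the two terms combine, the following sketch estimates $L_S$ and $L_C$ by averaging over a small batch of discriminator outputs. The function name <code>acgan_losses</code>, the tuple layout, and all numeric values are assumptions made for this example; they are not taken from the paper or from any particular library.

```python
import math

def acgan_losses(real_batch, fake_batch):
    """Monte Carlo estimates of L_S and L_C from discriminator outputs.

    Each batch is a list of (p_real, class_probs, label) tuples:
      p_real      -- discriminator's P(S=real | X)
      class_probs -- discriminator's P(C | X) as a list over classes
      label       -- true class (real images) or conditioning class (fakes)
    """
    # Source term: log P(S=real | X_real) + log P(S=fake | X_fake)
    l_s = (sum(math.log(p) for p, _, _ in real_batch) / len(real_batch)
           + sum(math.log(1.0 - p) for p, _, _ in fake_batch) / len(fake_batch))
    # Class term: log P(C=c | X) for both real and generated images
    l_c = (sum(math.log(probs[c]) for _, probs, c in real_batch) / len(real_batch)
           + sum(math.log(probs[c]) for _, probs, c in fake_batch) / len(fake_batch))
    return l_s, l_c

# Made-up discriminator outputs for a two-class toy problem
real = [(0.9, [0.8, 0.2], 0), (0.7, [0.1, 0.9], 1)]
fake = [(0.2, [0.6, 0.4], 0), (0.4, [0.3, 0.7], 1)]

l_s, l_c = acgan_losses(real, fake)
d_objective = l_s + l_c   # D is trained to maximize this
g_objective = l_c - l_s   # G is trained to maximize this
```

Both players want a large $L_C$, so the class term is cooperative; only the source term $L_S$ is adversarial.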

Because $G$ accepts both $C$ and $Z$ as arguments, it is able to learn a mapping $Z\rightarrow X$ that is independent of $C$. The authors argue that all class-specific information should be represented by $C$, allowing $Z$ to represent other factors such as pose, background, etc.

Lastly, the authors split the generation process into many class-specific submodels. They point out that the structure of their model permits this split, though it should technically be possible for even the standard GAN framework by dividing the training data into groups according to their known class labels. Other works also employ class splitting with regard to GANs. High level categorical class labels have been shown to improve GAN performance due to the increased abstraction they provide ([[#References|Grinblat et al. 2017]]).

The changes above result in a model capable of generating (some) image samples with both high resolution and global coherence.

== GAN Quality Metrics ==

A much larger part of the paper is spent on measuring the quality of a GAN's output. As the authors say, evaluating a generative model's quality is difficult because of the large number of competing probabilistic measures (such as average log-likelihood, Parzen window estimates, and visual fidelity [[#References| (Theis et al., 2015)]]) and "a lack of a perceptually meaningful image similarity metric".

=== Image Discriminability Metric ===

The authors develop two metrics in this paper to address these shortcomings. The first of these is a discriminability metric, the goal of which is to assess the degree to which generated images are identifiable as the class they are meant to represent. Ideally, a team of non-expert humans could handle this, but the difficulty of such an approach makes the need for an automated metric apparent. The metric proposed by the authors is the accuracy, on generated images, of an image classifier pre-trained on the pristine training data. For this, they select a [https://github.com/openai/improved-gan/ modified version of Inception-v3] [[#References|(Szegedy et al., 2015)]].
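The per-class version of this accuracy can be sketched as follows. The sketch assumes the classifier's predictions have already been computed; <code>per_class_accuracy</code> and the toy labels are hypothetical names and values used only for illustration.

```python
from collections import defaultdict

def per_class_accuracy(intended, predicted):
    """Fraction of generated images whose predicted class matches the
    class the generator was asked to produce, reported per class."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for target, guess in zip(intended, predicted):
        total[target] += 1
        correct[target] += int(target == guess)
    return {c: correct[c] / total[c] for c in total}

# Toy example: class 0 is recognized half the time, class 1 every time
intended = [0, 0, 1, 1]
predicted = [0, 1, 1, 1]
accuracy = per_class_accuracy(intended, predicted)
```

Reporting one number per class is what lets the metric show where a generator is strong and where it is weak.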

Other metrics already exist for assessing image quality, the most popular of which is probably the Inception Score [[#References| (Salimans et al., 2016)]]. The Inception Score is given by $\exp\left(\mathbb{E}_x[\mathrm{KL}(p(y|x)\,\|\,p(y))]\right)$ where $x$ is a particular image, $p(y|x)$ is the conditional output distribution over the classes in a pre-trained Inception network given $x$, and $p(y)$ is the marginal distribution over the classes. The authors list two main advantages of their approach over the Inception Score. The first is that accuracy figures are easier to interpret than the Inception Score, which is fairly self-evident. The second is that Inception accuracy may be calculated for individual classes, giving a better picture of where the model is strong and where it is weak.
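For concreteness, the Inception Score formula can be evaluated directly from a list of per-image class distributions. This is a minimal sketch that assumes the distributions $p(y|x)$ are already available as lists of probabilities and that $p(y)$ is estimated as their average over the sample; the function name is illustrative.

```python
import math

def inception_score(cond_probs):
    """exp(E_x[KL(p(y|x) || p(y))]) for a list of distributions p(y|x).

    p(y) is estimated as the average of the p(y|x) over the sample.
    """
    n = len(cond_probs)
    k = len(cond_probs[0])
    marginal = [sum(p[j] for p in cond_probs) / n for j in range(k)]
    mean_kl = 0.0
    for p in cond_probs:
        # Terms with p(y|x) = 0 contribute nothing to the KL divergence
        mean_kl += sum(pj * math.log(pj / marginal[j])
                       for j, pj in enumerate(p) if pj > 0)
    return math.exp(mean_kl / n)

# Confident and balanced predictions give the maximum score (number of classes)
confident = inception_score([[1.0, 0.0], [0.0, 1.0]])
# Uninformative predictions give the minimum score of 1
uniform = inception_score([[0.5, 0.5], [0.5, 0.5]])
```

The score is a single number for the whole sample, which is why it cannot be broken down by class in the way the accuracy metric can.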

=== Image Diversity Metric ===

The second metric proposed by the authors measures the diversity of the generated images. As mentioned above, image diversity is an important quality in a GAN: a common failure mode is 'collapsing', where the generator learns to output only one image that is good at fooling the discriminator [[#References|(Goodfellow et al., 2014)]][[#References|(Salimans et al., 2016)]]. The metric proposed in this section is intended to be complementary to the Inception accuracy, as Inception accuracy would not detect generator collapse.

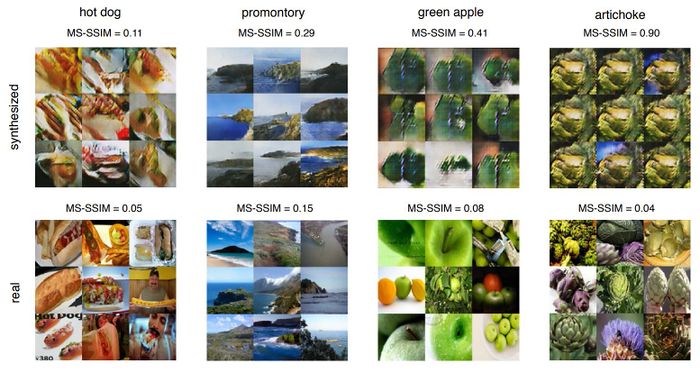

For their diversity metric, the authors co-opt an existing metric used to measure the similarity between two images: multi-scale structural similarity (MS-SSIM) [[#References|(Wang et al., 2003)]]. The authors do not go into detail about how the MS-SSIM measure is calculated, except to say that it is one of the more successful ways to predict human perceptual similarity judgments. It can take values in the interval $[0,1]$, and higher values indicate that the two images being compared are perceptually more similar. For images generated from a GAN, then, the metric should ideally be low, as diversity is desired.

The authors' contribution is to use this metric to assess the diversity of a GAN's output. It is a pairwise comparison between two images, so their solution is to compare 100 images (that is $100\cdot 99$ paired comparisons) from each class and take the mean MS-SSIM score.
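The averaging step can be sketched independently of how MS-SSIM itself is computed. In the sketch below, <code>toy_similarity</code> is a deliberately simple stand-in and is not MS-SSIM; any function returning a similarity in $[0,1]$ could be passed instead. The sketch also assumes the similarity is symmetric, in which case the $100\cdot 99$ ordered comparisons reduce to $100\cdot 99/2 = 4950$ unordered pairs with the same mean.

```python
from itertools import combinations

def mean_pairwise_similarity(images, similarity):
    """Mean of similarity(a, b) over all unordered pairs of images."""
    pairs = list(combinations(images, 2))
    return sum(similarity(a, b) for a, b in pairs) / len(pairs)

def toy_similarity(a, b):
    """Stand-in for MS-SSIM (NOT the real measure): one minus the mean
    absolute difference of pixel values assumed to lie in [0, 1]."""
    return 1.0 - sum(abs(x - y) for x, y in zip(a, b)) / len(a)

# A collapsed generator emits identical images; a diverse one does not
collapsed = [[0.5, 0.5], [0.5, 0.5], [0.5, 0.5]]
diverse = [[0.0, 0.0], [1.0, 1.0]]

collapsed_score = mean_pairwise_similarity(collapsed, toy_similarity)
diverse_score = mean_pairwise_similarity(diverse, toy_similarity)
pairs_per_class = len(list(combinations(range(100), 2)))
```

With this stand-in, the collapsed set scores at the top of the range and the diverse set at the bottom, which is the behaviour the mean MS-SSIM score is meant to capture.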

The authors make two points about their use of this metric. First, the way they apply the metric is different from how it was originally intended to be used. It is possible that it will not behave as desired because of this. As evidence to the contrary, they state that:

# Visually the metric seems to work. Pairs with high MS-SSIM seem more similar.
# Comparisons are only made between images in the same class, keeping their application of the metric closer to its original use case of measuring the quality of compression algorithms.
# The metric is not saturated. Scores on their generated data vary across the unit interval. If scores were all very close to zero the metric would not be much use.

The second point they raise is that the mean MS-SSIM metric is not intended as a proxy for the entropy of the generator distribution in pixel space. That measure is hard to compute, and in any case is sensitive to trivial changes in the pixels, whereas the true intention of this metric is to measure perceptual similarity. Another approach is proposed by Dosovitskiy and Brox (2016), where a class of loss functions called deep perceptual similarity metrics (DeePSiM) computes distances between extracted image features (rather than distances in image space) to indicate the diversity attained in generated images.

== Experimental Results on GAN Properties ==

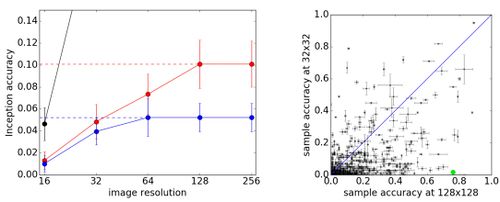

[[File:Figure_2_(Bottom).JPG|thumb|500px|right|alt=(Odena et al., 2016) Figure 2: (Left) Inception accuracy (y-axis) of two generators with resolution 128 x 128 (red) and 64 x 64 (blue). Images are resized to the same spatial resolution (x-axis). (Right) Class accuracies from the 128 x 128 AC-GAN at full resolution (x-axis) and downsized to 32 x 32 (y-axis).|(Odena et al., 2016) Figure 2: (Left) Inception accuracy (y-axis) of two generators with resolution 128 x 128 (red) and 64 x 64 (blue). Images are resized to the same spatial resolution (x-axis). (Right) Class accuracies from the 128 x 128 AC-GAN at full resolution (x-axis) and downsized to 32 x 32 (y-axis).]]

The authors conducted several experiments on their model and proposed metrics. These are summarized in this section.

=== Higher Resolution Images are more Discriminable ===

As it is one of the main attractions of this paper, the authors investigate how generating samples at different resolutions affects their discriminability. To achieve this, two models are trained, one that generates 64 x 64 resolution images and one that generates 128 x 128 resolution images. These images can be rescaled using bilinear interpolation to make them directly comparable. The authors find that the 128 x 128 AC-GAN (on average) achieves higher discriminability (per the Inception accuracy metric [[#Image Discriminability Metric|introduced above]]) at all resized resolutions. The authors claim that theirs is the first work to investigate how much an image generator is 'making use of its given output resolution'. In fact, this method can be applied to any type of synthesis model, if there is an easily computable notion of sample quality and some method for 'reducing resolution'.

=== Generated Images are both Diverse and Discriminable ===

A second experiment conducted in the paper aims to investigate how the two metrics they propose interact with each other. They simply calculate the Inception accuracy and mean MS-SSIM score for a batch of generated images from every class in their data and report the correlation between the scores. They find the scores are anti-correlated with $\rho=-0.16$. Because the mean MS-SSIM metric is low for diverse samples, they conclude that accuracy and diversity are actually positively correlated, which contradicts the hypothesis that GANs that collapse achieve better sample quality.
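The sign argument can be checked with a small calculation. The sketch below computes a Pearson correlation in plain Python on made-up per-class scores (the numbers are illustrative, not results from the paper, and the summary does not say which correlation coefficient the authors used) and shows that a negative correlation with mean MS-SSIM is the same as a positive correlation of equal magnitude with diversity, taken here as one minus the score.

```python
import math

def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    norm_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    norm_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (norm_x * norm_y)

# Hypothetical per-class scores, one entry per class
accuracy = [0.9, 0.6, 0.4, 0.8]
ms_ssim = [0.10, 0.30, 0.25, 0.05]

rho = pearson(accuracy, ms_ssim)
rho_diversity = pearson(accuracy, [1.0 - m for m in ms_ssim])
```

Whatever the data, <code>rho_diversity</code> equals <code>-rho</code>, because negating and shifting one variable flips the sign of the correlation without changing its magnitude.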

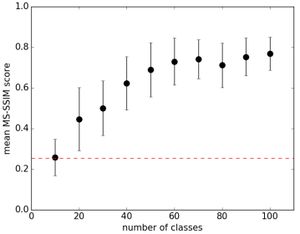

[[File:Figure_10.JPG|thumb|300px|right|alt=(Odena et al., 2016) Figure 10: Mean MS-SSIM scores for 10 ImageNet classes (y-axis) plotted against the number of classes handled by each sub-model (x-axis).|(Odena et al., 2016) Figure 10: Mean MS-SSIM scores for 10 ImageNet classes (y-axis) plotted against the number of classes handled by each sub-model (x-axis).]]

=== Effect of Class Splits on Image Sample Quality ===

The final experiment conducted in the paper investigates how the number of classes a fixed GAN model has to generate impacts the diversity of the generated images. In their main model, the authors split the data such that each AC-GAN only had to generate images from 10 classes. Here they experimented with changing the number of classes each sub-model has to generate while holding the architecture of the sub-models fixed. They report the mean MS-SSIM score of the generated images from the original classes (when the model had only 10 to generate) at each split level. Perhaps unsurprisingly, the diversity of the outputs drops as the number of classes the model has to handle increases. Giving the model more parameters to handle the larger (and more diverse) set of classes might eliminate this effect.

Finally, they state that they were unable to get a regular GAN to converge on the task of generating images from one class per model. This could be due to the difficulty of training GANs and the limited amount of training data available for each class rather than any theoretical property of class splits.

= Results =

=== Model ===

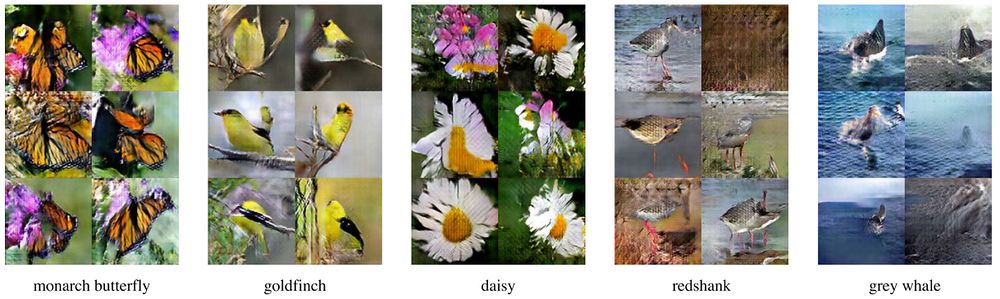

The authors apply their AC-GAN model to the ImageNet [[#References|(Russakovsky et al., 2015)]] dataset. The architecture of the generator consists of a series of deconvolution layers that transform the noise $z$ and class $c$ into an image. The data have 1000 classes which the authors split into 100 groups of 10. An AC-GAN model is trained on each group of 10 to give the results reported in the paper. The authors give some examples of the images generated from this setup. They note that these are selected to show the success of the model, not to give a balanced representation of how good it is:

[[File:Figure_1_AC-GAN.JPG|thumb|1000px|center|alt=(Odena et al., 2016) Figure 1: Selected images generated by the AC-GAN model for the ImageNet dataset.|(Odena et al., 2016) Figure 1: Selected images generated by the AC-GAN model for the ImageNet dataset.]]

The apparent value of the model is its ability to generate high-resolution samples with global coherence. From the images given above, one must acknowledge they are impressively realistic. The authors link to a [https://photos.google.com/share/AF1QipPzTToH3wxrKoF8l5nWvSNz7D_oS-KB3YMQ6Ji-4XK3AtgJmlb5QCdqRQLqLSjfkw?key=YnhJb2UyMnZwb01oZ2xhUjBraE9fdWU1VVpNZTVB full set] of images generated from every ImageNet class as well. As one would expect from their acknowledgment that images above are selected to be most impressive, not all samples at the linked page are quite so coherent.

[[File:Figure_9_(Bottom).JPG|thumb|400px|right|alt=(Odena et al., 2016) Figure 9 (Bottom): Each column is a different class. Each row is generated by a different latent encoding $z$.|(Odena et al., 2016) Figure 9 (Bottom): Each column is a different class. Each row is generated by a different latent encoding $z$.]]

The authors compare their model with state-of-the-art results from [[#References| Salimans et al. (2016)]] on the CIFAR-10 dataset at a 32 x 32 resolution. To score the two models they use Inception Score instead of log-likelihood, which they claim is too inaccurate to be reported. Their model achieves a score of $8.25 \pm 0.07$ versus the previous state-of-the-art of $8.09 \pm 0.07$.

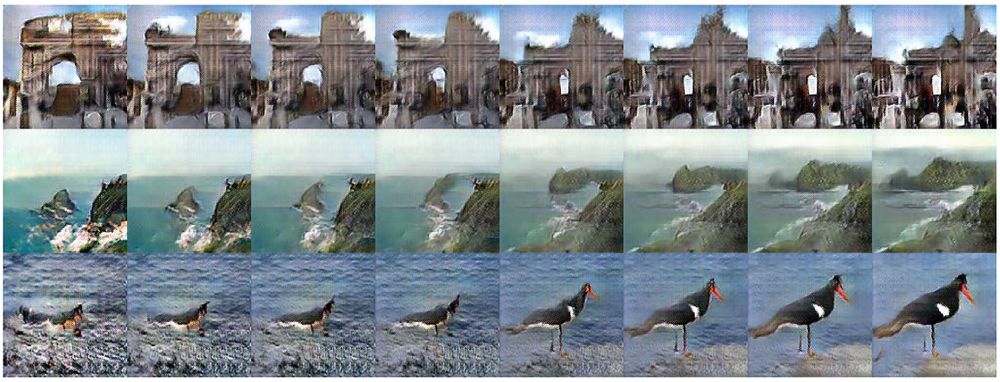

[[#References|Odena et al. (2016)]] argue that the class conditional generator allows $G$ to learn a representation of $Z$ independent of $C$ in section 3, and give evidence of the claim later in section 4.5 by showing that images generated with a fixed latent vector $z$ but different class labels $c$ have similar global structure (e.g. orientation of the subject) but the subjects (bird species) vary according to the label. Interestingly, the background (especially in the top row) also varies with the class label. This can possibly be attributed to the bird species coming from different areas, hence a seagull might be expected to have an ocean background. Clearly here the model benefits from the fact that the authors grouped similar classes together. A more interesting analysis might show the same comparison between different classes, such as birds and forklifts, to see how the global structure is encoded across them.

The authors also include a discussion of whether their model is overfitting the training data. Their first test is to find the nearest neighbour in the training set of a generated image by the L1 measure in pixel space and visually compare the two images. This is a fairly naive approach, since the L1 loss in pixel space is extremely unlikely to identify whether two images are perceptually similar. Here would have been a good place to use the MS-SSIM metric to identify the nearest neighbours, since it is intended to measure perceptual similarity. The images they provide from this analysis are below.

[[File:Figure_8.JPG|thumb|500px|center|alt=(Odena et al., 2016) Figure 8: Images generated by the AC-GAN model (top) and their nearest neighbour (L1 measure in pixel space) in the ImageNet dataset (bottom).|(Odena et al., 2016) Figure 8: Images generated by the AC-GAN model (top) and their nearest neighbour (L1 measure in pixel space) in the ImageNet dataset (bottom).]]
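The nearest-neighbour check described above amounts to a linear search under the L1 distance. A minimal sketch, assuming images are flattened into equal-length lists of pixel values (the helper names and toy data are hypothetical):

```python
def l1_distance(a, b):
    """Sum of absolute pixel differences between two flattened images."""
    return sum(abs(x - y) for x, y in zip(a, b))

def nearest_neighbour(query, training_set):
    """Index of the training image closest to the query in L1 distance."""
    return min(range(len(training_set)),
               key=lambda i: l1_distance(query, training_set[i]))

# Toy "images" with two pixels each
training_set = [[0.0, 0.0], [1.0, 1.0], [5.0, 5.0]]
generated = [0.9, 1.2]
closest = nearest_neighbour(generated, training_set)
```

Replacing <code>l1_distance</code> with a perceptual measure (and <code>min</code> with <code>max</code> for a similarity such as MS-SSIM) would give the alternative check suggested in the text.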

A second check they make is that interpolating between two generated images in the latent space does not result in any discrete transitions or holes in the image interpolation. Such results would be indicative of overfitting. The images they give as evidence that this is not the case are below.

[[File:Figure_9_(Top).JPG|thumb|1000px|center|alt=(Odena et al., 2016) Figure 9 (Top): Latent space interpolations of the AC-GAN model.|(Odena et al., 2016) Figure 9 (Top): Latent space interpolations of the AC-GAN model.]]
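The latent codes for such an interpolation can be generated as below. The sketch assumes straight-line (linear) interpolation between two latent vectors, which this summary does not confirm as the authors' exact procedure; each resulting code would then be passed to the generator together with a fixed class label.

```python
def interpolate(z_start, z_end, steps):
    """Evenly spaced latent vectors from z_start to z_end, inclusive.

    Requires steps >= 2 so that both endpoints are included.
    """
    path = []
    for i in range(steps):
        t = i / (steps - 1)  # interpolation weight running from 0 to 1
        path.append([(1 - t) * a + t * b for a, b in zip(z_start, z_end)])
    return path

# Three codes between two toy two-dimensional latent vectors
latent_path = interpolate([0.0, 0.0], [1.0, 2.0], 3)
```

If the images generated along such a path change smoothly, the generator has not simply memorized isolated training examples.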

Moreover, another way to study the overfitting problem is to explore the effect of the latent space on the AC-GAN by exploiting the structure of the model. The information representation in AC-GAN includes class information and a class-independent latent representation $z$. Sampling the network with $z$ fixed but altering the class label corresponds to generating samples with the same ‘style’ across multiple classes. Figure 9 (Bottom) shows that, as the class changes from column to column, elements of the global structure (e.g. position, layout, background) are preserved.

[[File:Fig 9 bottom.png|thumb|1000px|center|alt=(Odena et al., 2016) Figure 9 (Bottom): Class-independent information contains global structure about the synthesized image.|(Odena et al., 2016) Figure 9 (Bottom): Class-independent information contains global structure about the synthesized image.]]

=== Image Diversity Metric ===

Another result the authors report is the performance of their image diversity metric. It is difficult to evaluate quantitatively, but visually we see that the scores do appear to capture the perceptual diversity of the generated class. For example, the 'artichoke' generator appears to have collapsed, and has a high score, while the 'promontory' generator seems fairly diverse and has a low score:

[[File:Figure_3.JPG|thumb|700px|center|alt=(Odena et al., 2016) Figure 3: Mean MS-SSIM scores and sample images from selected generated (top) and real (bottom) ImageNet classes.|(Odena et al., 2016) Figure 3: Mean MS-SSIM scores and sample images from selected generated (top) and real (bottom) ImageNet classes.]]

= Critique =

=== Model ===

A major attraction of this paper is the impressive quality of samples generated by the model. GANs often generate samples that are locally plausible but globally not realistic (e.g. a generated image of a dog has fur but the overall shape is not distinguishable). As we have seen in this critique, and as acknowledged by the authors, the most impressive samples are not representative of the model's overall performance.

The model itself is not a very big advancement of the field. It combines two ideas that are both already prevalent in the research without any other justification than that it seems like a natural thing to do. As [https://openreview.net/forum?id=BkDDM04Ke other reviewers] have noted, investigating how much value the proposed model adds by comparing it with other models that only implement one (or neither) of the changes would have made this paper a slightly more interesting read. The AC-GAN model can perform semi-supervised learning by ignoring the component of the loss arising from class labels when a label is unavailable for a given training image.
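The semi-supervised variant mentioned here can be sketched as a class term that simply skips unlabelled examples. This is an illustrative reading of the sentence above, not the authors' implementation; <code>class_term</code> and the use of <code>None</code> to mark a missing label are assumptions made for the example.

```python
import math

def class_term(batch):
    """Mean log P(C=c | X) over labelled examples only.

    batch is a list of (class_probs, label) pairs; label is None when
    no class label is available, and such examples are skipped.
    """
    labelled = [(probs, c) for probs, c in batch if c is not None]
    if not labelled:
        return 0.0  # no labelled data: the class term contributes nothing
    return sum(math.log(probs[c]) for probs, c in labelled) / len(labelled)

# One labelled and one unlabelled image; only the first is counted
mixed = class_term([([0.5, 0.5], 0), ([0.1, 0.9], None)])
unlabelled_only = class_term([([0.5, 0.5], None)])
```

Unlabelled images would still contribute to the source term $L_S$, since that term needs only the real/fake distinction.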

Another criticism I have of the paper concerns how the results are reported. To compare with [[#References|Salimans et al. (2016)]] they use Inception Score rather than log-likelihood, which they claim is the standard. Even if their model performed worse by that measure it ought to be included with the caveat they mentioned. The models are evaluated on a different dataset and at a lower spatial resolution than was used for the rest of the paper. By the Inception Score their results are better on average but might not be significantly different given how close they are. Finally, they did not apply the mean MS-SSIM score they developed in this paper to evaluate their model against [[#References|Salimans et al. (2016)]]. This would have been a natural point to make, but instead, they generate four samples from each model as their evidence.

An analysis the authors could have included that was touched upon but not explored in section 4.6 of the paper, and in the [[#Results|Results]] section of this summary, is how the similarity of the classes grouped in each sub-model impacts the quality of generated samples. The example I gave above was to compare generated images with the same latent code but very different classes, such as birds and forklifts, to see how the global structure transferred across dissimilar classes.

The last point to make about the model section is that the authors make some unsupported claims in their discussions of the model's properties. Specifically, they state that their modification to the standard GAN formulation appears to stabilize training but offer no evidence. Another example is their claim that "AC-GANs learn a representation for $z$ that is independent of the class label". They cite [[#References|Kingma et al (2014)]] as evidence of this. From my review of that paper, it does not appear that the authors gave evidence for such a claim.

=== GAN Quality Metrics === | === GAN Quality Metrics === | ||

The Inception accuracy metric proposed in this paper has the drawback that it is only applicable in a conditional GAN setting since the standard GAN framework has no ground-truth labels. It is also true that using a pre-trained classifier is only a proxy for determining how much generated images look like the class they are meant to represent since classifiers are not perfect. Consider the phenomenon of adversarial attacks on classifiers to see this point. However, the advantages the authors list, that the Inception accuracy can be computed on a per-class basis and is easier to interpret than the Inception Score do have merit. The metric does make sense for the task the authors use it for. | |||

The same can be said for the mean MS-SSIM metric developed in this paper. Visually it appears to be a good indicator of diversity in the GAN's outputs. The authors claim that the mean MS-SSIM is a fast and easy-to-compute metric for perceptual variability and collapsing behaviour in a GAN. It is unclear how fast the metric can be computed since for each class the MS-SSIM has to be computed 100*99 times, once for each pair of images. The authors do not discuss how quickly it can actually be done. | |||

=== Experimental Results on GAN Properties === | |||

The authors included three analyses which I have termed experiments. Of these, the first concluded that images generated at a higher resolution are more discriminable than images generated at lower resolutions, even when they have been resized to be comparable. This does not seem like a very revolutionary conclusion. For one thing, the space of lower resolution images contained in the higher resolution space. In essence, the high resolution model could generate lower resolution images by setting blocks of 4 pixels to the same intensity. It seems unsurprising then that the lower resolution is less discriminable on average. Another reason for this could be that the high resolution model has more parameters, and is trained on higher resolution data, so it has more information with which to reconstruct class information. Finally the authors give a graph of accuracies to show this property, and the average line appears compelling, however, the standard errors about the lines suggest they may not be significantly different. | |||

The second experiment is on the interaction between the Inception accuracy and mean MS-SSIM metric. The author found that they are negatively correlated, and thus that classes that are high quality also tend to be diverse. This is contrary to prevailing wisdom, and since the correlation between them is weak, it appears that it may be only a fluke of the metrics. | |||

The final experiment is on the effect of class splits on image diversity. The authors found that increasing the number of classes handled by each model reduced the diversity of generated images. They make the claim at the beginning of the paper that they show the number of classes is what makes ImageNet synthesis difficult for GANs. This analysis does point in that direction but is not quite conclusive about the issue. Another analysis they could have included towards showing this is how their Inception accuracy metric and the Inception Score are affected by the number of class splits in their model. Perhaps instead of splitting classes among multiple networks, in the future, they could augment the classes using more abstract categorical classes as in [[#References|Grinblat et al (2017)]]. | |||

= Conclusion =
In the approach description, the authors use the sum $L_C + L_S$ as the objective for $D$ and the difference $L_C - L_S$ as the objective for $G$.

It would be interesting to see whether a convex combination of the two terms could encourage more realistic images while still constraining the class label.

= References =


# Odena, A. (2016). Semi-supervised learning with generative adversarial networks. arXiv preprint [https://arxiv.org/abs/1606.01583 arXiv:1606.01583].

# Theis, L., Oord, A. V. D., & Bethge, M. (2015). A note on the evaluation of generative models. arXiv preprint [https://arxiv.org/abs/1511.01844 arXiv:1511.01844].

# Wang, Z., Simoncelli, E. P., & Bovik, A. C. (2003, November). Multiscale structural similarity for image quality assessment. In Signals, Systems and Computers, 2004. Conference Record of the Thirty-Seventh Asilomar Conference on (Vol. 2, pp. 1398-1402). IEEE.

# Russakovsky, O., Deng, J., Su, H., Krause, J., Satheesh, S., Ma, S., ... & Berg, A. C. (2015). Imagenet large scale visual recognition challenge. International Journal of Computer Vision, 115(3), 211-252.

# Grinblat, G. L., Uzal, L. C., & Granitto, P. M. Class-splitting generative adversarial networks. arXiv preprint [https://arxiv.org/abs/1709.07359 arXiv:1709.07359].

# Dosovitskiy, A., & Brox, T. (2016). Generating images with perceptual similarity metrics based on deep networks. Advances in Neural Information Processing Systems.

# https://en.wikipedia.org/wiki/Generative_adversarial_network


=== Online resources ===

* [https://github.com/buriburisuri/ac-gan github.com/buriburisuri/ac-gan (tensorflow+sugartensor)]

* [https://github.com/kimhc6028/acgan-pytorch github.com/kimhc6028/acgan-pytorch (pytorch)]

* [https://www.youtube.com/watch?v=myP2TN0_MaE Conditional Image Synthesis with Auxiliary Classifier GANs, by Augustus Odena (Video)]

Latest revision as of 09:24, 4 December 2017

Abstract: "In this paper, we introduce new methods for the improved training of generative adversarial networks (GANs) for image synthesis. Generative adversarial networks (GANs) are a class of artificial intelligence algorithms used in unsupervised machine learning, implemented by a system of two neural networks contesting with each other in a zero-sum game framework [17]. We construct a variant of GANs employing label conditioning that results in 128×128 resolution image samples exhibiting global coherence. We expand on previous work for image quality assessment to provide two new analyses for assessing the discriminability and diversity of samples from class-conditional image synthesis models. These analyses demonstrate that high resolution samples provide class information not present in low resolution samples. Across 1000 ImageNet classes, 128×128 samples are more than twice as discriminable as artificially resized 32×32 samples. In addition, 84.7% of the classes have samples exhibiting diversity comparable to real ImageNet data." (Odena et al., 2016)

Introduction

Motivation

The authors introduce a GAN architecture for generating high resolution images from the ImageNet dataset. They show that this architecture makes it possible to split the generation process into many sub-models. They further suggest that GANs have trouble generating globally coherent images and that this architecture is responsible for the coherence of their samples. They demonstrate that adding more structure to the GAN latent space, along with a specialized cost function, results in higher quality samples, and that generating higher resolution images allows the model to encode more class-specific information, making them more visually discriminable than lower resolution images even after they have been resized to the same resolution.

The second half of the paper introduces metrics for assessing visual discriminability and diversity of synthesized images. The discussion of image diversity, in particular, is important due to the tendency for GANs to 'collapse' to only produce one image that best fools the discriminator (Goodfellow et al., 2014).

Previous Work

Of all image synthesis methods (e.g. variational autoencoders, autoregressive models, invertible density estimators), GANs have become one of the most popular and successful due to their flexibility and the ease with which they can be sampled from. A standard GAN framework pits a generative model $G$ against a discriminative adversary $D$. The goal of $G$ is to learn a mapping from a latent space $Z$ to a real space $X$ to produce examples (generally images) indistinguishable from training data. The goal of $D$ is to iteratively learn to predict whether a given input image is from the training set or a synthesized image from $G$. Jointly the models are trained to solve the game-theoretical minimax problem, as defined by Goodfellow et al. (2014): $$\underset{G}{\text{min }}\underset{D}{\text{max }}V(G,D)=\mathbb{E}_{X\sim p_{data}(x)}[\log(D(X))]+\mathbb{E}_{Z\sim p_{Z}(z)}[\log(1-D(G(Z)))]$$

This approach can also be interpreted as two neural networks contesting with each other in a zero-sum game. While this initial framework has clearly demonstrated great potential, other authors have proposed changes to the method to improve it. Many such papers propose changes to the training process (Salimans et al., 2016; Karras et al., 2017), which is notoriously difficult for some problems. Others propose changes to the model itself. Mirza & Osindero (2014) augment the model by supplying the class of observations to both the generator and discriminator to produce class-conditional samples. According to van den Oord et al. (2016), conditioning image generation on classes can greatly improve the quality of the generated images. Other authors have explored using even richer side information in the generation process with good results (Reed et al., 2016). A summary diagram of the difference in the architecture can be seen in the following figure.

Another model modification relevant to this paper is to force the discriminator network to reconstruct side information by adding an auxiliary network to classify generated (and real) images. The authors make the claim that forcing a model to perform additional tasks is known to improve performance on the original task (Szegedy et al., 2014)(Sutskever et al., 2014)(Ramsundar et al., 2016). They further suggest that using pre-trained image classifiers (rather than classifiers trained on both real and generated images) could improve results over and above what is shown in this paper.

Contributions

The contributions of this paper are in three main areas. First, the authors propose slight changes to previously existing GAN architectures, resulting in a model capable of generating samples of impressive quality. Second, the authors propose two metrics to assess the quality of samples generated from a GAN. Lastly, they present empirical results on GANs which are of some interest.

Model

The authors propose an auxiliary classifier GAN (AC-GAN) which is a slight variation on previous architectures. Like Mirza & Osindero (2014), the generator takes the image class to be generated as input in addition to the latent encoding $Z$. Like Odena (2016) and Salimans et al. (2016), the discriminator is trained to predict not only whether an observation is real or fake, but to classify each observation as well. The marginal contribution of this paper is to combine these in one model.

Formally, let $C\sim p_c$ represent the target class label of each generated observation and $Z$ represent the usual noise vector from the latent space. Then the generator function takes both as arguments to produce image samples: $X_{fake}=G(c,z)$. The discriminator gives a probability distribution over the source $S$ (real or fake) of the image as well as over the class label $C$ being generated: $$D(X)=\{P(S|X),P(C|X)\}$$

The objective function for the model thus has two parts, one corresponding to the source $L_S$ and the other to the class $L_C$. $D$ is trained to maximize $L_S + L_C$, while $G$ is trained to maximize $L_C-L_S$. Using the notation of Goodfellow et al. (2014), the loss terms are defined as: $$L_S=\mathbb{E}_{X\sim p_{data}(x)}[log(P(S=\mbox{real}|X))]+\mathbb{E}_{C,Z\sim p_{C,Z}(c,z)}[log(P(S=\mbox{fake}|G(C,Z)))]$$ $$L_C=\mathbb{E}_{X\sim p_{data}(x)}[log(P(C=c|X))]+\mathbb{E}_{C,Z\sim p_{C,Z}(c,z)}[log(P(C=c|G(C,Z)))]$$
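
The two objectives above can be sketched numerically as follows. This is a minimal NumPy illustration, not the authors' implementation: the function name `acgan_objectives` and its argument names are hypothetical, the expectations are replaced by batch means, and the discriminator outputs are assumed to be already-normalized probabilities.

```python
import numpy as np

def acgan_objectives(p_real_src, p_fake_src, p_real_cls, p_fake_cls,
                     y_real, y_fake, eps=1e-12):
    """Batch estimates of L_S, L_C and the D and G objectives.

    p_real_src: (N,) probability D assigns to S=real for each real image.
    p_fake_src: (M,) probability D assigns to S=real for each generated image.
    p_real_cls: (N, K) class probabilities from D for real images.
    p_fake_cls: (M, K) class probabilities from D for generated images.
    y_real:     (N,) true integer labels of the real images.
    y_fake:     (M,) integer labels that were fed to G.
    eps is a small constant (an assumption) that avoids log(0).
    """
    # L_S: log-likelihood of the correct source (real vs. fake).
    L_S = (np.mean(np.log(p_real_src + eps))
           + np.mean(np.log(1.0 - p_fake_src + eps)))
    # L_C: log-likelihood of the correct class, for real and generated images.
    L_C = (np.mean(np.log(p_real_cls[np.arange(len(y_real)), y_real] + eps))
           + np.mean(np.log(p_fake_cls[np.arange(len(y_fake)), y_fake] + eps)))
    return {
        "L_S": L_S,
        "L_C": L_C,
        "D_objective": L_S + L_C,  # D is trained to maximize this
        "G_objective": L_C - L_S,  # G is trained to maximize this
    }
```

Both networks want $L_C$ to be large, so the class term is cooperative; only the source term $L_S$ is adversarial, entering the two objectives with opposite signs.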

Because $G$ accepts both $C$ and $Z$ as arguments, it is able to learn a mapping $Z\rightarrow X$ that is independent of $C$. The authors argue that all class-specific information should be represented by $C$, allowing $Z$ to represent other factors such as pose, background, etc.

Lastly, the authors split the generation process into many class-specific submodels. They point out that the structure of their model permits this split, though it should technically be possible for even the standard GAN framework by dividing the training data into groups according to their known class labels. Other works also employ class splitting with regards to GANs. High level categorical class labels have been shown to improve GAN performance due to the increased abstraction they provide (Grinblat et al. 2017).

The changes above result in a model capable of generating (some) image samples with both high resolution and global coherence.

GAN Quality Metrics

A much larger part of the paper is spent on measuring the quality of a GAN's output. As the authors say, evaluating a generative model's quality is difficult due to a large number of probabilistic measures (such as average log-likelihood, Parzen window estimates, and visual fidelity (Theis et al., 2015)) and "a lack of a perceptually meaningful image similarity metric".

Image Discriminability Metric

The authors develop two metrics in this paper to address these shortcomings. The first of these is a discriminability metric, the goal of which is to assess the degree to which generated images are identifiable as the class they are meant to represent. Ideally, a team of non-expert humans could handle this, but the difficulty of such an approach makes the need for an automated metric apparent. The metric proposed by the authors is to measure the accuracy of a pre-trained image classifier trained on the pristine training data. For this, they select a modified version of Inception-v3 (Szegedy et al., 2015).

Other metrics already exist for assessing image quality, the most popular of which is probably the Inception Score (Salimans et al., 2016). The Inception Score is given by $e^{\mathbb{E}_x[KL(p(y|x) \,\|\, p(y))]}$ where $x$ is a particular image, $p(y|x)$ is the conditional output distribution over the classes in a pre-trained Inception network given $x$, and $p(y)$ is the marginal distribution over the classes. The authors list two main advantages of their approach over the Inception Score. The first is that accuracy figures are easier to interpret than the Inception Score, which is fairly self-evident. The second advantage of using Inception accuracy instead of Inception Score is that Inception accuracy may be calculated for individual classes, giving a better picture of where the model is strong and where it is weak.
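
To make the comparison concrete, the sketch below computes both quantities from a matrix of classifier outputs. It is an illustration under assumptions: `probs` stands for the softmax outputs of some pre-trained classifier on generated images (obtaining them from Inception-v3 is not shown), and the function names are hypothetical.

```python
import numpy as np

def inception_score(probs, eps=1e-12):
    """exp(E_x[KL(p(y|x) || p(y))]) for an (N, K) matrix of class probabilities."""
    p_y = probs.mean(axis=0)  # marginal distribution over classes
    kl = np.sum(probs * (np.log(probs + eps) - np.log(p_y + eps)), axis=1)
    return float(np.exp(kl.mean()))

def inception_accuracy(probs, labels):
    """Overall and per-class accuracy of the classifier on generated images.

    labels holds the class each image was meant to represent.
    """
    preds = probs.argmax(axis=1)
    overall = float(np.mean(preds == labels))
    per_class = {int(c): float(np.mean(preds[labels == c] == c))
                 for c in np.unique(labels)}
    return overall, per_class
```

The Inception Score is a single number that needs no labels, whereas the accuracy needs the intended class of every sample and in return yields a per-class breakdown.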

Image Diversity Metric

The second metric proposed by the authors measures the diversity of the generated images. As mentioned above, image diversity is an important quality in a GAN. A common failure GANs suffer from is 'collapsing', where the generator learns to only output one image that is good at fooling the discriminator (Goodfellow et al., 2014; Salimans et al., 2016). The metric proposed in this section is intended to be complementary to the Inception accuracy, as Inception accuracy would not detect generator collapse.

For their diversity metric, the authors co-opt an existing metric used to measure the similarity between two images: multi-scale structural similarity (MS-SSIM) (Wang et al., 2003). The authors do not go into detail about how the MS-SSIM measure is calculated, except to say that it is one of the more successful ways to predict human perceptual similarity judgments. It can take values in the interval $[0,1]$, and higher values indicate that the two images being compared are perceptually more similar. For images generated from a GAN, then, the metric should ideally be low, as diversity is desired.

The authors' contribution is to use this metric to assess the diversity of a GAN's output. It is a pairwise comparison between two images, so their solution is to compare 100 images (that is $100\cdot 99$ paired comparisons) from each class and take the mean MS-SSIM score.
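
The averaging step can be sketched as below. Note the assumptions: `global_ssim` is a simplified single-scale, whole-image stand-in used only so the example runs on its own; it is not MS-SSIM and is not the authors' code. Any symmetric similarity function could be passed in its place. Because such a function is symmetric, the $100\cdot 99$ ordered comparisons reduce to $100\cdot 99/2 = 4950$ distinct pairs.

```python
import itertools
import numpy as np

def global_ssim(x, y, data_range=1.0):
    """Single-window SSIM over the whole image (a stand-in, NOT MS-SSIM)."""
    c1 = (0.01 * data_range) ** 2
    c2 = (0.03 * data_range) ** 2
    mx, my = x.mean(), y.mean()
    vx, vy = x.var(), y.var()
    cov = ((x - mx) * (y - my)).mean()
    return ((2 * mx * my + c1) * (2 * cov + c2)) / (
        (mx ** 2 + my ** 2 + c1) * (vx + vy + c2))

def mean_pairwise_similarity(images, similarity=global_ssim):
    """Mean similarity over all unordered pairs of images from one class.

    Returns the mean score and the number of pairs that were compared.
    """
    pairs = list(itertools.combinations(range(len(images)), 2))
    scores = [similarity(images[i], images[j]) for i, j in pairs]
    return float(np.mean(scores)), len(pairs)
```

A collapsed generator, which emits near-identical images, drives the mean towards 1, while a diverse class gives a lower mean.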

The authors make two points about their use of this metric. First, the way they apply the metric is different from how it was originally intended to be used. It is possible that it will not behave as desired because of this. As evidence to the contrary, they state that:

- Visually the metric seems to work. Pairs with high MS-SSIM seem more similar.

- Comparisons are only made between images in the same class, keeping their application of the metric closer to its original use case of measuring the quality of compression algorithms.

- The metric is not saturated. Scores on their generated data vary across the unit interval. If scores were all very close to zero the metric would not be much use.

The second point they raise is that the mean MS-SSIM metric is not intended as a proxy for the entropy of the generator distribution in pixel space. That measure is hard to compute, and in any case is sensitive to trivial changes in the pixels, whereas the true intention of this metric is to measure perceptual similarity. Another approach is proposed by Dosovitskiy and Brox (2016), where a class of loss functions called deep perceptual similarity metrics (DeePSiM) computes distances between extracted image features (rather than distances in image space) to indicate the diversity attained in generated images.

Experimental Results on GAN Properties

The authors conducted several experiments on their model and proposed metrics. These are summarized in this section.

Higher Resolution Images are more Discriminable

As it is one of the main attractions of this paper, the authors investigate how generating samples at different resolutions affects their discriminability. To achieve this, two models are trained, one that generates 64 x 64 resolution images and one that generates 128 x 128 resolution images. These images can be rescaled using bilinear interpolation to make them directly comparable. The authors find that the 128 x 128 AC-GAN (on average) achieves higher discriminability (per the Inception accuracy metric introduced above) at all resized resolutions. The authors claim that theirs is the first work to investigate how much an image generator is 'making use of its given output resolution'. In fact, this method can be applied to any type of synthesis model, if there is an easily computable notion of sample quality and some method for 'reducing resolution'.

Generated Images are both Diverse and Discriminable

A second experiment conducted in the paper aims to investigate how the two metrics they propose interact with each other. They simply calculate the Inception accuracy and mean MS-SSIM score for a batch of generated images from every class in their data and report the correlation between the scores. They find the scores are anti-correlated with $\rho=-0.16$. Because the mean MS-SSIM metric is low for diverse samples, they conclude that accuracy and diversity are actually positively correlated, which contradicts the hypothesis that GANs that collapse achieve better sample quality.
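
The per-class correlation described here can be computed as in the following sketch. It assumes a Pearson correlation (the summary does not say which coefficient was used), and the function name is hypothetical; the inputs are one Inception accuracy and one mean MS-SSIM value per class.

```python
import numpy as np

def metric_correlation(inception_acc, mean_ms_ssim):
    """Pearson correlation between per-class accuracy and per-class mean MS-SSIM."""
    acc = np.asarray(inception_acc, dtype=float)
    sim = np.asarray(mean_ms_ssim, dtype=float)
    # np.corrcoef returns the 2x2 correlation matrix; the off-diagonal entry is rho.
    return float(np.corrcoef(acc, sim)[0, 1])
```

A negative value means that classes with higher accuracy tend to have lower mean MS-SSIM, that is, higher diversity.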

Effect of Class Splits on Image Sample Quality

The final experiment conducted in the paper investigates how the number of classes a fixed GAN model has to generate impacts the diversity of the generated images. In their main model, the authors split the data such that each AC-GAN only had to generate images from 10 classes. Here they experimented with changing the number of classes each sub-model has to generate while holding the architecture of the sub-models fixed. They report the mean MS-SSIM score of the generated images from the original classes (when the model had only 10 to generate) at each split level. Perhaps unsurprisingly, the diversity of the outputs drops as the number of classes the model has to handle increases. Giving the model more parameters to handle the larger (and more diverse) set of classes might possibly eliminate this effect.

Finally, they state that they were unable to get a regular GAN to converge on the task of generating images from one class per model. This could be due to the difficulty of training GANs and the limited amount of training data available for each class rather than any theoretical property of class splits.

Results

Model

The authors apply their AC-GAN model to the ImageNet (Russakovsky et al., 2015) dataset. The architecture of the generator consists of a series of deconvolution layers that transform the noise $z$ and class $c$ into an image. The data have 1000 classes which the authors split into 100 groups of 10. An AC-GAN model is trained on each group of 10 to give results reported for the paper. The authors give some examples of the images generated from this setup. They note that these are selected to show the success of the model, not give a balanced representation of how good it is:

The apparent value of the model is its ability to generate high-resolution samples with global coherence. From the images given above, one must acknowledge they are impressively realistic. The authors link to a full set of images generated from every ImageNet class as well. As one would expect from their acknowledgment that images above are selected to be most impressive, not all samples at the linked page are quite so coherent.

The authors compare their model with state-of-the-art results from Salimans et al. (2016) on the CIFAR-10 dataset at a 32 x 32 resolution. To score the two models they use Inception Score instead of log-likelihood, which they claim is too inaccurate to be reported. Their model achieves a score of $8.25 \pm 0.07$ versus the previous state-of-the-art of $8.09 \pm 0.07$.

Odena et al. (2016) argue that the class conditional generator allows $G$ to learn a representation of $Z$ independent of $C$ in section 3, and give evidence of the claim later in section 4.5 by showing that images generated with a fixed latent vector $z$ but different class labels $c$ have similar global structure (e.g. orientation of the subject) but the subjects (bird species) vary according to the label. Interestingly, the background (especially in the top row) also varies with the class label. This can possibly be attributed to the bird species coming from different areas, hence a seagull might be expected to have an ocean background. Clearly here the model benefits from the fact that the authors grouped similar classes together. A more interesting analysis might show the same comparison between different classes, such as birds and forklifts, to see how the global structure is encoded across them.

The authors also include a discussion of whether their model is overfitting the training data. Their first test is to find the nearest neighbour in the training set of a generated image by the L1 measure in pixel space and visually compare the two images. This is a fairly naive approach, since the L1 loss in pixel space is extremely unlikely to identify whether two images are perceptually similar. Here would have been a good place to use the MS-SSIM metric to identify the nearest neighbours, since it is intended to measure perceptual similarity. The images they provide from this analysis are below.

A second check they make is that interpolating between two generated images in the latent space does not result in any discrete transitions or holes in the image interpolation. Such results would be indicative of overfitting. The images they give as evidence that this is not the case are below.
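
The interpolation check can be sketched as follows. This assumes straight-line interpolation between two latent vectors, which the summary does not confirm as the authors' scheme; the function name is hypothetical, and feeding each row to a trained generator together with a fixed class label is left out.

```python
import numpy as np

def interpolate_latents(z_start, z_end, num_steps=8):
    """Return a (num_steps, dim) array of latent codes from z_start to z_end."""
    alphas = np.linspace(0.0, 1.0, num_steps)[:, None]  # column of mixing weights
    return (1.0 - alphas) * z_start[None, :] + alphas * z_end[None, :]
```

Each row would then be passed to the generator; images that change smoothly along the path, rather than jumping abruptly, are the evidence against overfitting described above.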

Moreover, another way to study the overfitting problem is to explore the effect of the latent space on the AC-GAN by exploiting the structure of the model. The information representation in AC-GAN includes class information and a class-independent latent representation $z$. Sampling the network with $z$ fixed but altering the class label corresponds to generating samples with the same ‘style’ across multiple classes. Figure 9 (Bottom) shows that as the class changes for each column, elements of the global structure (e.g. position, layout, background) are preserved.

Image Diversity Metric

Another result the authors report is the performance of their image diversity metric. It is difficult to evaluate quantitatively, but visually we see that the scores do appear to capture the perceptual diversity of the generated class. For example, the 'artichoke' generator appears to have collapsed, and has a high score, while the 'promontory' generator seems fairly diverse and has a low score:

Critique

Model

A major attraction of this paper is the impressive quality of samples generated by the model. GANs often generate samples that are locally plausible but globally not realistic (e.g. a generated image of a dog has fur but the overall shape is not distinguishable). As noted in the Results section, and as acknowledged by the authors, the most impressive samples are not representative of the model's overall performance.

The model itself is not a very big advancement of the field. It combines two ideas that are both already prevalent in the research without any other justification than that it seems like a natural thing to do. As other reviewers have noted, investigating how much value the proposed model adds by comparing it with other models that only implement one (or neither) of the changes would have made this paper a slightly more interesting read. The AC-GAN model can perform semi-supervised learning by ignoring the component of the loss arising from class labels when a label is unavailable for a given training image.

Another criticism I have about the paper is about how they report their results. To compare with Salimans et al. (2016) they use Inception Score rather than log-likelihood, which they claim is the standard. Even if their model performed worse by that measure it ought to be included with the caveat they mentioned. The models are evaluated on a different dataset and at a lower spatial resolution than was used for the rest of the paper. By the Inception Score their results are better on average but might not be significantly different given how close they are. Finally, they did not apply the mean MS-SSIM score they developed in this paper to evaluate their model against Salimans et al. (2016). This would have been a natural point to make, but instead, they generate four samples from each model as their evidence.

An analysis the authors could have included that was touched upon but not explored in section 4.6 of the paper, and in the Results section of this summary, is how the similarity of the classes grouped in each sub-model impacts the quality of generated samples. The example I gave above was to compare generated images with the same latent code but very different classes, such as birds and forklifts, to see how the global structure transferred across dissimilar classes.

The last point to make about the model section is that the authors make some unsupported claims in their discussions of the model's properties. Specifically, they state that their modification to the standard GAN formulation appears to stabilize training but offer no evidence. Another example is their claim that "AC-GANs learn a representation for $z$ that is independent of the class label". They cite Kingma et al. (2014) as evidence of this. From my review of that paper, it does not appear that its authors gave evidence for such a claim.

GAN Quality Metrics

The Inception accuracy metric proposed in this paper has the drawback that it is only applicable in a conditional GAN setting, since the standard GAN framework has no ground-truth labels. It is also true that using a pre-trained classifier is only a proxy for determining how much generated images look like the class they are meant to represent, since classifiers are not perfect. Consider the phenomenon of adversarial attacks on classifiers to see this point. However, the advantages the authors list, that the Inception accuracy can be computed on a per-class basis and is easier to interpret than the Inception Score, do have merit. The metric does make sense for the task the authors use it for.

The same can be said for the mean MS-SSIM metric developed in this paper. Visually it appears to be a good indicator of diversity in the GAN's outputs. The authors claim that the mean MS-SSIM is a fast and easy-to-compute metric for perceptual variability and collapsing behaviour in a GAN. It is unclear how fast the metric can be computed since for each class the MS-SSIM has to be computed 100*99 times, once for each pair of images. The authors do not discuss how quickly it can actually be done.

Experimental Results on GAN Properties

The authors included three analyses which I have termed experiments. Of these, the first concluded that images generated at a higher resolution are more discriminable than images generated at lower resolutions, even when they have been resized to be comparable. This does not seem like a very revolutionary conclusion. For one thing, the space of lower resolution images is contained in the higher resolution space. In essence, the high resolution model could generate lower resolution images by setting blocks of 4 pixels to the same intensity. It seems unsurprising then that the lower resolution is less discriminable on average. Another reason for this could be that the high resolution model has more parameters, and is trained on higher resolution data, so it has more information with which to reconstruct class information. Finally, the authors give a graph of accuracies to show this property. The average line appears compelling, but the standard errors about the lines suggest they may not be significantly different.

The second experiment is on the interaction between the Inception accuracy and the mean MS-SSIM metric. The authors found that the two are negatively correlated, and thus that classes that are high quality also tend to be diverse. This is contrary to prevailing wisdom, and since the correlation between them is weak, it may be only a fluke of the metrics.
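For readers who want to check this kind of claim themselves, the following is a minimal sketch of a Pearson correlation between two per-class metrics. The per-class values are invented for illustration and are not the paper's data; the function name `pearson` is hypothetical.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


# Hypothetical per-class values, made up for this sketch only.
inception_accuracy = [0.1, 0.4, 0.6, 0.9]
mean_ms_ssim = [0.30, 0.10, 0.35, 0.15]

r = pearson(inception_accuracy, mean_ms_ssim)
```

A coefficient close to zero, even if negative, leaves most of the variation unexplained, which is why a weak correlation supports only a cautious conclusion.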

The final experiment is on the effect of class splits on image diversity. The authors found that increasing the number of classes handled by each model reduced the diversity of generated images. They claim at the beginning of the paper to show that the number of classes is what makes ImageNet synthesis difficult for GANs. This analysis does point in that direction but is not quite conclusive about the issue. Another analysis they could have included towards showing this is how their Inception accuracy metric and the Inception Score are affected by the number of class splits in their model. Perhaps instead of splitting classes among multiple networks, in the future, they could augment the classes using more abstract categorical classes as in Grinblat et al. (2017).

Conclusion

This paper's main contributions were to introduce a slight variation on previous GAN models, as well as two metrics that can be used to assess the quality of generated images. The modified GAN, dubbed the Auxiliary Classifier GAN, was shown to produce high quality, high resolution samples from ImageNet, but not consistently. The authors could have done more to show why their proposed architecture was an improvement over previous methods.

The metrics introduced are both fairly straightforward and appear to function as they are intended. That said, the authors could have used them more consistently throughout the paper (such as using the MS-SSIM to find nearest neighbours instead of the L1 pixel space loss). This paper was rejected by ICLR 2017, owing to the incremental nature of the model development and the ad hoc nature of the other analyses presented above, before being accepted to ICML 2017.

In the approach description, the authors use the sum $L_c + L_s$ as the objective for $D$ and the difference $L_c - L_s$ as the objective for $G$. It would be interesting to see whether a convex combination of the two terms could encourage more realistic images while keeping the constraint on the class label.
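One way such a convex combination might be written is sketched below. The weight $\lambda \in [0, 1]$ is a hypothetical hyperparameter introduced here for illustration and does not appear in the paper; $J_D$ and $J_G$ are likewise labels chosen for this sketch.

```latex
% Hypothetical weighted objectives; \lambda is not part of the original paper.
J_D(\lambda) = \lambda L_s + (1 - \lambda) L_c,
\qquad
J_G(\lambda) = (1 - \lambda) L_c - \lambda L_s,
\qquad
\lambda \in [0, 1].
```

Setting $\lambda = 1/2$ recovers the original objectives up to a constant factor of $1/2$, while larger values of $\lambda$ would put more weight on the real-versus-fake term $L_s$ relative to the class term $L_c$.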

References

- Odena, A., Olah, C., & Shlens, J. (2016). Conditional image synthesis with auxiliary classifier gans. arXiv preprint arXiv:1610.09585.

- Goodfellow, I., Pouget-Abadie, J., Mirza, M., Xu, B., Warde-Farley, D., Ozair, S., ... & Bengio, Y. (2014). Generative adversarial nets. In Advances in neural information processing systems (pp. 2672-2680).

- Salimans, T., Goodfellow, I., Zaremba, W., Cheung, V., Radford, A., & Chen, X. (2016). Improved techniques for training gans. In Advances in Neural Information Processing Systems (pp. 2234-2242).

- Karras, T., Aila, T., Laine, S., & Lehtinen, J. (2017). Progressive Growing of GANs for Improved Quality, Stability, and Variation. arXiv preprint arXiv:1710.10196.

- Mirza, M., & Osindero, S. (2014). Conditional generative adversarial nets. arXiv preprint arXiv:1411.1784.

- van den Oord, A., Kalchbrenner, N., Espeholt, L., Vinyals, O., & Graves, A. (2016). Conditional image generation with pixelcnn decoders. In Advances in Neural Information Processing Systems (pp. 4790-4798).

- Reed, S. E., Akata, Z., Mohan, S., Tenka, S., Schiele, B., & Lee, H. (2016). Learning what and where to draw. In Advances in Neural Information Processing Systems (pp. 217-225).

- Szegedy, C., Liu, W., Jia, Y., Sermanet, P., Reed, S., Anguelov, D., ... & Rabinovich, A. (2015). Going deeper with convolutions. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 1-9).

- Sutskever, I., Vinyals, O., & Le, Q. V. (2014). Sequence to sequence learning with neural networks. In Advances in neural information processing systems (pp. 3104-3112).

- Ramsundar, B., Kearnes, S., Riley, P., Webster, D., Konerding, D., & Pande, V. (2015). Massively multitask networks for drug discovery. arXiv preprint arXiv:1502.02072.

- Odena, A. (2016). Semi-supervised learning with generative adversarial networks. arXiv preprint arXiv:1606.01583.

- Theis, L., Oord, A. V. D., & Bethge, M. (2015). A note on the evaluation of generative models. arXiv preprint arXiv:1511.01844.

- Wang, Z., Simoncelli, E. P., & Bovik, A. C. (2003, November). Multiscale structural similarity for image quality assessment. In Signals, Systems and Computers, 2004. Conference Record of the Thirty-Seventh Asilomar Conference on (Vol. 2, pp. 1398-1402). IEEE.

- Russakovsky, O., Deng, J., Su, H., Krause, J., Satheesh, S., Ma, S., ... & Berg, A. C. (2015). Imagenet large scale visual recognition challenge. International Journal of Computer Vision, 115(3), 211-252.

- Grinblat, G. L., Uzal, L. C., & Granitto, P. M. (2017). Class-splitting generative adversarial networks. arXiv preprint arXiv:1709.07359.

- Dosovitskiy, A., & Brox, T. (2016). Generating images with perceptual similarity metrics based on deep networks. In Advances in Neural Information Processing Systems.

- https://en.wikipedia.org/wiki/Generative_adversarial_network
