Imagination-Augmented Agents for Deep Reinforcement Learning


=Introduction=

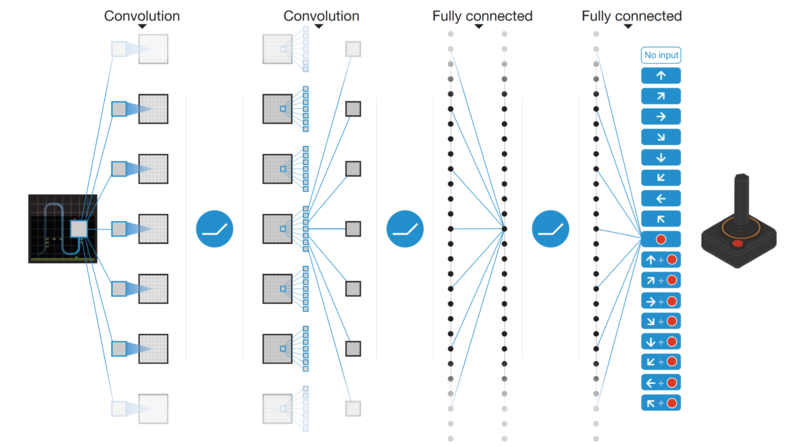

An interesting research area in reinforcement learning is developing intelligent agents that play video games. Before the introduction of deep learning, video game agents were commonly built on Monte-Carlo Tree Search (MCTS) over pre-set rules. MCTS is used to make decisions in artificial intelligence problems by concentrating the analysis on the most promising moves; its basic loop consists of selection, expansion, simulation, and backpropagation. Recent research has shown deep reinforcement learning to be very successful at playing video games such as those on the Atari 2600. Specifically, the method (Figure 1) is called Deep Q-Network (DQN), a deep Q-learning method that learns the optimal actions from the current observations (raw pixels) [[#Reference|[Mnih et al., (2015)]]]. However, there are some complex games where DQN fails to learn: some games require solving a sub-problem without explicit reward, or contain irreversible domains where actions can be catastrophic. A typical example is [https://en.wikipedia.org/wiki/Sokoban Sokoban]. As with human players, an RL agent needs planning and inference to play it, which makes this kind of game challenging for RL.

[[File:DQN.png|800px|center|thumb|Figure 1: Deep Q-Learning Architecture]]

In reinforcement learning, algorithms can be divided into two categories: '''model-free''' and '''model-based'''. Model-based reinforcement learning tries to infer a model of the environment and uses it to gain reward, while model-free reinforcement learning learns the actions that yield the best reward without using such a model. More specifically, model-based methods learn a model of the environment (the reward function $R(s, s')$ and the transition probability $P(s' | s, a)$, where $s'$, $s$ and $a$ are the next state, current state and action respectively), while model-free methods never explicitly learn one. DQN, mentioned above (Figure 1), is a model-free method. It takes raw pixels as input and maps them to values or actions. As a drawback, a large amount of training data is required. In addition, the policies do not generalize to new tasks in the same environment. A model-based method instead tries to build a model of the environment. By querying the model, agents can avoid irreversible, poor decisions. As an approximation of the environment, the model can enable better generalization across states. However, this approach has only shown success in limited settings, where an exact transition model is given or the domain is simple. In complex environments, model-based methods suffer from model errors introduced by function approximation. These errors compound during planning, causing poor agent performance. Currently, there is no model-based method that is robust against such imperfections.

In this paper, the authors introduce a novel deep reinforcement learning architecture called Imagination-Augmented Agents (I2As). This method enables agents to learn to interpret predictions from a learned environment model in order to construct implicit plans. It combines model-free and model-based aspects. Its advantage is that it learns end-to-end to extract information from model simulations without making any assumptions about the structure or the accuracy of the environment model.

As shown in the results, this method outperforms the model-free baseline in the games Sokoban and MiniPacman. In addition, the experiments all show that I2A is able to successfully use imperfect models.

=Motivation=

The ability to "imagine" and reason about the future is an important property of an intelligent, sophisticated RL algorithm. Beyond that, an agent must be able to construct a plan using this knowledge. In a model-based approach, an "internal model" is used to analyze how actions lead to future outcomes in order to reason and plan. These internal models work well because the environments provided are generally "perfect": they have clearly defined rules that allow outcomes to be predicted very accurately in almost every circumstance. But the real world is complex; rules are not so clearly defined and unpredictable problems often arise. Even for the most intelligent agents, imagining in these complex environments is a long and costly process. Hence this paper proposes combining the model-free and model-based approaches through imagination augmentation, so that the agent can work in complex situations.

Although the structure of this method is complex, the motivation is intuitive: since the agent suffers from irreversible decisions, trying actions out in simulated states may be helpful. To reduce the expensive search of traditional MCTS methods, decisions from a policy network are used to cut down the number of search steps. To keep context information, rollout results are encoded by an LSTM encoder. The final output combines the results of the model-free network and the model-based network.

=Related Work=

Several works try to apply deep learning to model-based reinforcement learning. The popular approach is to learn a neural network model of the environment and use it in classical planning algorithms. These works cannot handle the mismatch between the learned model and the ground truth. [[#Reference|[Liu et al.(2017)]]] use context information from trajectories, but in the setting of imitation learning.

To deal with imperfect models, [[#Reference|[Deisenroth and Rasmussen(2011)]]] capture model uncertainty by applying computationally expensive Gaussian Process models. To develop their policy search method, they use analytic gradients of an approximation to the expected return for indirect policy search. By learning a probabilistic dynamics model and explicitly incorporating model uncertainty into long-term planning, this policy search method can cope with very little data and facilitates learning from scratch in only a few trials.

Similar ideas can be found in a study by [[#Reference|[Hamrick et al.(2017)]]]: they present a neural network that queries expert models, but focus only on meta-control for continuous contextual bandit problems. Pascanu et al.(2017) extend this work by focusing on explicit planning in sequential environments.

This paper claims to build upon the work of [[#Reference|[Tamar et al. (2016)]]]. In that line of work, neural networks whose architectures mimic classical iterative planning algorithms are presented. Such models are trained by reinforcement learning or to predict user-defined, high-level features. No explicit environment model is defined in these approaches.

=Approach=

===Imagination Core===

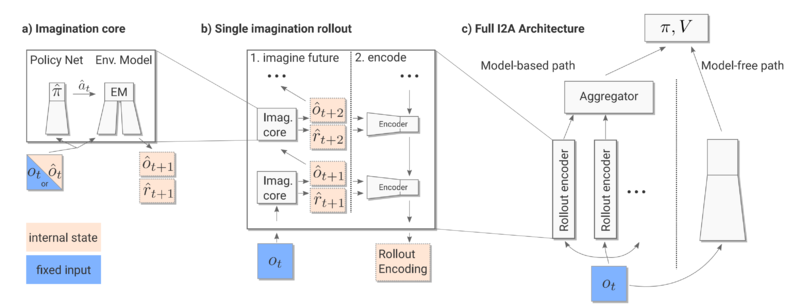

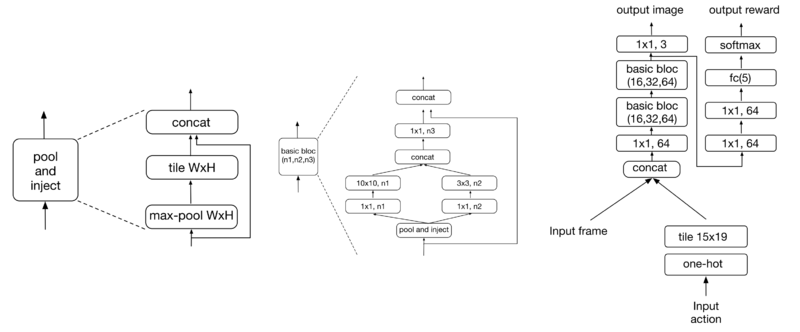

Imagination-augmented agents rely on an "imagination encoder", a neural network that learns to extract the information relevant to the agent's future decisions and to ignore what is irrelevant. In particular, these agents have the following features: (i) they learn to interpret their internal simulations, which capture the environment dynamics; (ii) they adapt to the number of imagined trajectories, which makes imagination more efficient; and (iii) they can learn different strategies for constructing plans by choosing the appropriate trajectory. The imagination core (Figure 2, left) plays the key role in the model-based path. It consists of two parts: the environment model and the rollout policy. The former is an approximation of the environment, and the latter is used to simulate imagined trajectories, which are interpreted by a neural network and provided as additional context to a policy network.

====environment model====

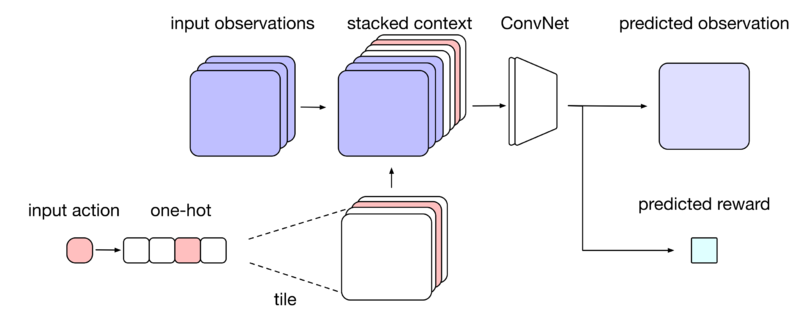

In order to augment agents with imagination, the method relies on environment models that, given current information, can be queried to make predictions about the future. In this work, the environment model is built from action-conditional next-step predictors, which take the current observation and the current action as input and predict the next observation and the next reward (Figure 3).

[[File:environment model.png|800px|center|thumb|Figure 3: Environment Model]]

The authors can either pretrain the environment model before embedding it (with frozen weights) within the I2A architecture or jointly train it with the agent by adding $l_{model}$ to the total loss as an auxiliary loss. In practice, they found that pre-training the environment model led to faster runtime of the I2A architecture, so they adopted this strategy.
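As a minimal sketch of the interface described above, the following Python snippet treats the environment model as a function from (observation, action) to (next observation, reward). The one-dimensional corridor dynamics, the name `toy_env_model` and the goal position are all assumptions made for illustration; the model in the paper is a learned neural network operating on pixels.

```python
from typing import Tuple

# Hypothetical toy world (not from the paper): the observation is an integer
# position on a corridor of length 5, actions are -1 (left) or +1 (right),
# and reaching position 4 yields a reward.
CORRIDOR_LENGTH = 5
GOAL = 4


def toy_env_model(observation: int, action: int) -> Tuple[int, float]:
    """Action-conditional next-step predictor: (o_t, a_t) -> (o_{t+1}, r_{t+1})."""
    # Clamp the predicted position so it stays inside the corridor.
    next_observation = min(max(observation + action, 0), CORRIDOR_LENGTH - 1)
    reward = 1.0 if next_observation == GOAL else 0.0
    return next_observation, reward
```

Any predictor with this signature could be queried repeatedly to imagine several steps ahead, which is how the rollouts in the next subsection are built.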

====rollout policy====

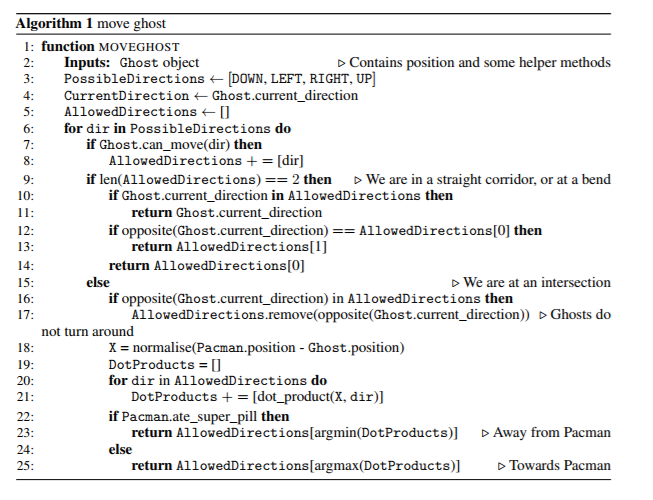

A rollout is a simulated trajectory. In this work, one rollout is performed for each possible action in the environment.

A rollout policy $\hat \pi$ is a function that takes the current observation $O$ and outputs an action $a$ that potentially leads to maximal reward. In this architecture, the rollout policy can be a DQN network. In the experiments, the rollout policy $\hat \pi$ is broadcast and shared. After experimenting with different types of rollout policies (random, pre-trained), the authors found that an efficient strategy is to distill the imagination-augmented policy into a model-free policy. This consists of creating a small model-free network $\hat \pi(O_t)$ and adding to the total loss a cross-entropy auxiliary loss between the imagination-augmented policy $\pi(O_t)$, computed on the current observation, and the policy $\hat \pi(O_t)$, computed on the same observation:

$$
l_{dist} (\pi, \hat \pi)(O_t) = \lambda_{dist} \sum_a \pi(a|O_t) \log(\hat \pi(a|O_t))
$$
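The distillation term can be sketched for a discrete action set as follows. This is an illustrative sketch, not the authors' code: the function name and the default weight are assumptions, and the snippet uses the conventional negated form of cross-entropy (so that minimising it pulls $\hat \pi$ toward $\pi$), which is a sign convention assumed here on top of the formula as written.

```python
import math
from typing import Sequence


def distillation_loss(pi: Sequence[float], pi_hat: Sequence[float],
                      lambda_dist: float = 1.0) -> float:
    """Cross-entropy between pi (target) and pi_hat, scaled by lambda_dist.

    Both arguments are probability vectors over the same actions.
    Assumption: the sum is negated, as is usual for a loss to be minimised.
    """
    if len(pi) != len(pi_hat):
        raise ValueError("pi and pi_hat must cover the same actions")
    # Terms with pi(a) == 0 contribute nothing and are skipped; math.log
    # raises ValueError if pi_hat(a) == 0 for an action with pi(a) > 0.
    return -lambda_dist * sum(p * math.log(q) for p, q in zip(pi, pi_hat) if p > 0.0)


# The loss is smallest when the rollout policy matches the agent's policy.
matched = distillation_loss([0.9, 0.1], [0.9, 0.1])
mismatched = distillation_loss([0.9, 0.1], [0.5, 0.5])
```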

Together, as the imagination core, these two parts produce $n$ trajectories $\hat \tau_1,...,\hat \tau_n$. Each imagined trajectory $\hat \tau$ is a sequence of features $(\hat f_{t+1},...,\hat f_{t+\tau})$, where $t$ is the current time, $\tau$ is the rollout length, and $\hat f_{t+i}$ is the output of the environment model (the predicted observation and reward). To remain robust to model imperfections, the architecture does not assume the learned model to be perfect, and its output does not depend only on the predicted reward.
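The way the two parts cooperate can be sketched as a loop: one rollout per candidate first action, with later actions chosen by the rollout policy. The sketch below is only illustrative; `step_model` and `always_right` are hypothetical stand-ins for the learned environment model and rollout policy, and integer observations replace image frames.

```python
from typing import Callable, List, Sequence, Tuple

Feature = Tuple[int, float]  # (predicted observation, predicted reward)


def step_model(observation: int, action: int) -> Feature:
    """Hypothetical stand-in for a learned environment model."""
    next_observation = observation + action
    reward = 1.0 if next_observation == 2 else 0.0
    return next_observation, reward


def always_right(observation: int) -> int:
    """Hypothetical stand-in for the rollout policy: always choose action +1."""
    return 1


def imagine_rollouts(observation: int,
                     actions: Sequence[int],
                     env_model: Callable[[int, int], Feature],
                     rollout_policy: Callable[[int], int],
                     rollout_length: int) -> List[List[Feature]]:
    """Produce one imagined trajectory per possible first action."""
    trajectories = []
    for first_action in actions:
        obs, action = observation, first_action
        trajectory = []
        for _ in range(rollout_length):
            obs, reward = env_model(obs, action)  # query the model
            trajectory.append((obs, reward))      # feature f_{t+i}
            action = rollout_policy(obs)          # choose the next imagined action
        trajectories.append(trajectory)
    return trajectories


trajectories = imagine_rollouts(0, [-1, 1], step_model, always_right, rollout_length=3)
```

With $n$ actions and rollout length $\tau$, this loop makes $n \cdot \tau$ calls to the environment model.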

===Trajectories Encoder===

To preserve the sequence information in the trajectories, the architecture uses a rollout encoder $\varepsilon$ that processes each imagined rollout as a whole and learns to interpret it (Figure 2, middle). Each trajectory is encoded as a rollout embedding $e_i=\varepsilon(\hat \tau_i)$. Then, the aggregator $A$ combines the rollout embeddings into a single imagination code $c_{ia}=A(e_1,...,e_n)$ by simply concatenating all the summaries.

In the experiments, the encoder is an LSTM that takes the predicted output of the environment model as input. The features are fed to the LSTM in reverse order, from $\hat f_{t+\tau}$ to $\hat f_{t+1}$, so that the encoder mimics the Bellman-type backup operations used in DQN, although the order of the sequence has relatively little impact on performance.
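The encode-then-concatenate structure can be sketched without a neural network. In the snippet below, a hand-written recurrence stands in for the learned LSTM (an assumption made purely for illustration): it walks each trajectory from the last feature to the first and accumulates rewards in the style of a Bellman backup, and the aggregator concatenates the resulting embeddings. The names and the discount `gamma` are hypothetical.

```python
from typing import List, Sequence, Tuple

Feature = Tuple[int, float]  # (predicted observation, predicted reward)


def encode_rollout(trajectory: Sequence[Feature], gamma: float = 0.5) -> List[float]:
    """Stand-in for the rollout encoder; assumes a non-empty trajectory."""
    h = 0.0
    # Process features last-to-first, like a backup from the rollout's end.
    for _obs, reward in reversed(trajectory):
        h = reward + gamma * h
    last_observation = float(trajectory[-1][0])
    return [h, last_observation]


def aggregate(embeddings: Sequence[Sequence[float]]) -> List[float]:
    """Aggregator A: concatenate all rollout embeddings into one code."""
    return [value for embedding in embeddings for value in embedding]


rollouts = [
    [(-1, 0.0), (0, 0.0), (1, 0.0)],
    [(1, 0.0), (2, 1.0), (3, 0.0)],
]
imagination_code = aggregate([encode_rollout(r) for r in rollouts])
```

Because the code is a concatenation, its length grows with the number of rollouts, and the downstream policy network can tell which embedding belongs to which first action.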

An alternative approach would be to combine guided policy search with the linear quadratic regulator [7], which is likewise a joint model-free and model-based trajectory update mechanism for reinforcement learning.

===Model-Free Path===

In conclusion, the I2A learns to combine information from the two paths; without the model-based path, I2A simply reduces to a standard model-free network (such as A3C, more explanations [https://medium.com/emergent-future/simple-reinforcement-learning-with-tensorflow-part-8-asynchronous-actor-critic-agents-a3c-c88f72a5e9f2 here]). The imperfect approximation results in a rollout policy with higher entropy, potentially striking a balance between exploration and exploitation.

=Experiments=

The following experiments were run on the Sokoban and MiniPacman games. All results are averages taken over the top three agents. These agents were trained with 32 to 64 workers, and the network was optimized with RMSprop.

As a pre-training strategy, the training data for the I2A environment model was pre-generated from trajectories of a partially trained standard model-free agent. This data is also counted against the budget: the total number of frames needed in pre-training is included in the later comparisons. Meanwhile, the authors show that the environment model can be reused to solve multiple tasks in the same environment.

In Sokoban, the environment is a 10 x 10 grid world. All agents were trained directly on raw pixels (image size 80 x 80 with 3 channels). To make sure the network does not simply "memorize" all states, the game procedurally generates a new level each episode. Out of 40 million levels generated, less than 0.7% were repeated. Therefore, a good agent should be able to solve unseen levels as well.

The reward settings for the reinforcement learning algorithms are as follows:

* Every time step, a penalty of -0.1 is applied to the agent (to encourage agents to finish levels faster).
* Whenever the agent pushes a box onto a target, it receives a reward of +1 (to encourage agents to push boxes onto targets).
* Whenever the agent pushes a box off a target, it receives a penalty of -1 (to avoid an artificial reward loop that would be induced by repeatedly pushing a box off and on a target).
* Finishing the level gives the agent a reward of +10 and the level terminates (to strongly reward solving a level).
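The four rules above can be written as a small function. This is a sketch under one assumption that the list does not state explicitly: the terms are summed when several apply in the same time step (for example, the final push also pays the step penalty). The function and argument names are hypothetical.

```python
def sokoban_step_reward(pushed_on_target: bool,
                        pushed_off_target: bool,
                        level_finished: bool) -> float:
    """Reward for a single Sokoban time step, following the listed rules."""
    reward = -0.1              # per-step penalty
    if pushed_on_target:
        reward += 1.0          # box pushed onto a target
    if pushed_off_target:
        reward -= 1.0          # box pushed off a target
    if level_finished:
        reward += 10.0         # level solved, episode terminates
    return reward


# Pushing a box off a target and back on costs two step penalties overall,
# so the loop described in the third rule is never profitable.
loop_return = (sokoban_step_reward(False, True, False)
               + sokoban_step_reward(True, False, False))
```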

To show the advantage of I2A, the authors use a standard model-free architecture as a baseline. The architecture is a multi-layer convolutional neural network (CNN) taking the current observation $O_t$ as input, followed by a fully connected (FC) hidden layer, which typically makes use of a score function. This FC layer feeds into two heads: an FC layer with one output per action that computes the policy logits $\log \pi(a_t|O_t, \theta)$, and another FC layer with a single output that computes the value function $V(O_t; \theta_v)$.

* for MiniPacman: the CNN has two layers, both with 3x3 kernels, 16 output channels and strides 1 and 2; the following FC layer has 256 units
* for Sokoban: the CNN has three layers with kernel sizes 8x8, 4x4, 3x3, strides of 4, 2, 1 and number of output channels 32, 64, 64; the following FC layer has 512 units
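As a worked example of what the Sokoban layer sizes imply, the snippet below computes the spatial size of the feature maps for an 80 x 80 input. The padding is not stated in this summary, so the calculation assumes no padding ("valid" convolutions); with a different padding the numbers would change. The helper names are hypothetical.

```python
from typing import Iterable, Tuple


def conv_output_size(input_size: int, kernel: int, stride: int, padding: int = 0) -> int:
    """Standard output-size formula for one spatial dimension of a convolution."""
    return (input_size + 2 * padding - kernel) // stride + 1


def stack_output_size(input_size: int, layers: Iterable[Tuple[int, int]]) -> int:
    """Apply the formula layer by layer; each layer is (kernel, stride)."""
    size = input_size
    for kernel, stride in layers:
        size = conv_output_size(size, kernel, stride)
    return size


# Sokoban baseline: kernels 8, 4, 3 with strides 4, 2, 1 (padding assumed to be 0).
SOKOBAN_LAYERS = [(8, 4), (4, 2), (3, 1)]
final_size = stack_output_size(80, SOKOBAN_LAYERS)   # 80 -> 19 -> 8 -> 6
flattened_features = final_size * final_size * 64    # 64 channels in the last layer
```

Under this assumption the 512-unit FC layer would receive 6 x 6 x 64 = 2304 input features.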

===Sokoban===

Sokoban is a video game classified as a transport puzzle. The player, seen from a top-down view, moves boxes to their target locations. The boxes can only be pushed, and many moves are irreversible if the player doesn't plan them properly, which might render the puzzle unsolvable. The player is confined to the board and may move horizontally or vertically onto empty squares (never through walls or boxes). The player can also move into a box, which pushes it into the square beyond. Boxes may not be pushed into other boxes or walls, and they cannot be pulled. The number of boxes is equal to the number of storage locations. The puzzle is solved when all boxes are at storage locations.

The environment model for Sokoban is shown in Figure 4.

[[File:sokoban_em.png|400px|center|thumb|Figure 4: The Sokoban environment model]]

In addition, to separate the effect of the larger architecture from that of imagination, the authors set up a copy-model agent that uses the same architecture as I2A but with the environment model replaced by an identity map. This agent is regarded as an I2A agent without imagination.

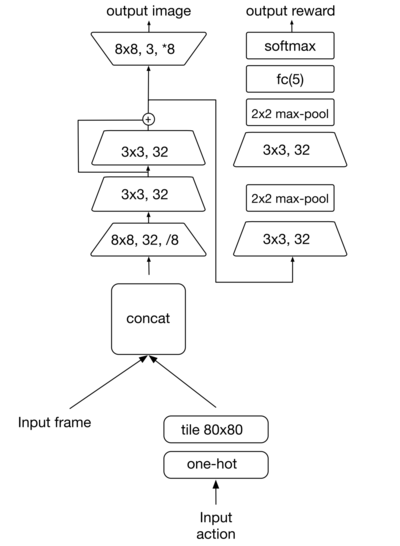

[[File:sokoban_result.png|800px|center|thumb|Figure 5: Sokoban learning curves. Left: training curves of I2A and baselines. Right: I2A training curves for various values of imagination depth]]

The results are shown in Figure 5 (left). I2A agents can solve many more levels than the standard model-free baseline. I2A also far outperforms the copy-model version, suggesting that the environment model is crucial. The authors also trained an I2A whose environment model predicted no rewards, only observations. This performed worse as well. However, after much longer training (3e9 steps), these agents did recover the performance of the original I2A, which was never the case for the baseline agent even with that many steps. Hence, reward prediction is very helpful but not absolutely necessary in this task, and imagined observations alone are informative enough to obtain high performance on Sokoban. Note that this is in contrast to many classical planning and model-based reinforcement learning methods, which often rely on reward prediction.

====Length of Rollout====

A further experiment investigated how the length of individual rollouts affects performance. The authors performed a parameter search. Figure 5 (right) shows the influence of the rollout length. Using 3 rollout steps speeds up learning and improves performance significantly compared with 1 step, and 5 is the optimal number. This implies that rollouts can be very helpful and informative: they enable the agent to learn about moves it cannot recover from.

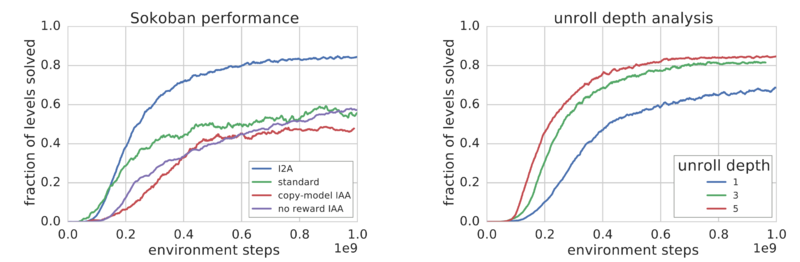

[[File:sokoban_noisy.png|800px|center|thumb|Figure 6: Experiments with a noisy environment model. Left: each row shows an example 5-step rollout after conditioning on an environment observation. Errors accumulate and lead to various artifacts, including missing or duplicate sprites. Right: comparison of Monte-Carlo (MC) search and I2A when using either the accurate or the noisy model for rollouts.]]

====Imperfections====

To demonstrate that I2A can handle less reliable predictions, the authors ran an experiment in which the I2A used a poor environment model (one with a smaller number of parameters), whose errors may accumulate across the rollout (Figure 6, left). The authors suggest that it is learning a rollout encoder that enables I2As to deal with imperfect model predictions. This can be checked by comparing against a setup without a rollout encoder. As shown in Figure 6 (right), even with a relatively poor environment model, the performance of I2A is stable, unlike traditional Monte-Carlo search, which explicitly estimates the value of each action from rollouts rather than learning an arbitrary encoding of the rollouts. An interesting result is that a rollout length of 5 no longer outperforms a length of 3, which matches common sense.

====Perfections====

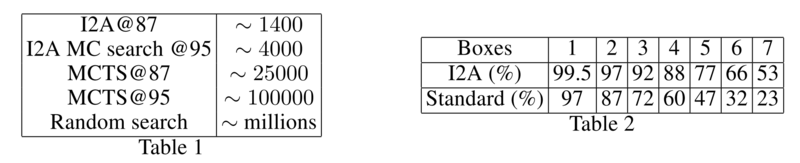

Since I2A is robust to the quality of the environment model, the authors also tested an I2A agent with a nearly perfect environment model; the results are in Table 1 and Table 2. Traditional Monte-Carlo Tree Search is tested as the baseline. As the table shows, although MCTS is able to solve many levels, it needs a very large number of search steps. In contrast, I2A with the nearly perfect model achieves the same fraction of solved levels with far fewer steps.

====Generalization====

Lastly, the authors probe the generalization capabilities of I2As beyond handling random level layouts in Sokoban. The agents were trained on levels with 4 boxes. Table 2 shows the performance of I2A when such an agent was tested on levels with different numbers of boxes, and that of the standard model-free agent for comparison. It turns out that I2As generalize well; at 7 boxes, the I2A agent is still able to solve more than half of the levels, nearly as many as the standard agent on 4 boxes.

[[File:i2a_table.png|800px|center|thumb]]

===MiniPacman===

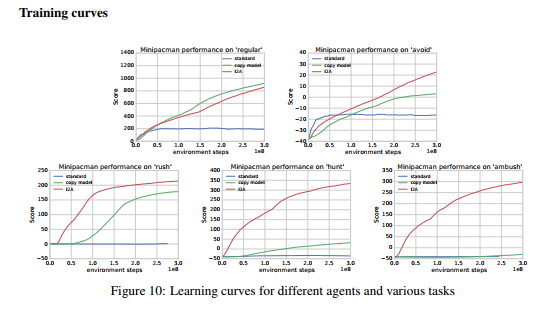

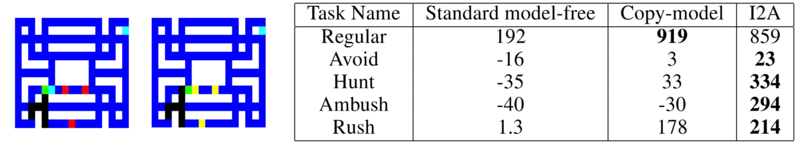

MiniPacman is a game modified from the classic game Pac-Man. In the game (Figure 8, left), the player explores a maze that contains food while being chased by ghosts. The maze also contains power pills; when one is eaten, for a fixed number of steps the player moves faster, and the ghosts run away and can be eaten. These dynamics are common to all tasks. Each task is defined by a vector $w_{rew} \in \mathbb{R}^5$ that associates a reward with each of the following five events: moving, eating food, eating a power pill, eating a ghost, and being eaten by a ghost. As such, the reward vector $w_{rew}$ can be interpreted as an "instruction" about which task to solve in the same environment.
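One way to read this definition is that the reward at each step is the dot product between the task vector and the events that occurred. The sketch below illustrates that reading; the weights in `example_w_rew` are made-up placeholders and are not the values from the paper's reward table.

```python
from typing import Sequence

EVENTS = ("moving", "eating food", "eating a power pill",
          "eating a ghost", "being eaten by a ghost")


def task_reward(w_rew: Sequence[float], event_counts: Sequence[int]) -> float:
    """Reward for one step: weights times how often each event occurred."""
    if len(w_rew) != len(EVENTS) or len(event_counts) != len(EVENTS):
        raise ValueError("expected one entry per event")
    return sum(w * c for w, c in zip(w_rew, event_counts))


# Hypothetical task vector, for illustration only.
example_w_rew = [0.0, 1.0, 2.0, 5.0, -10.0]
# A step in which the player moved and ate one piece of food.
step_reward = task_reward(example_w_rew, [1, 1, 0, 0, 0])
```

Because only `w_rew` changes between tasks, a single model that predicts the events can serve every task.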

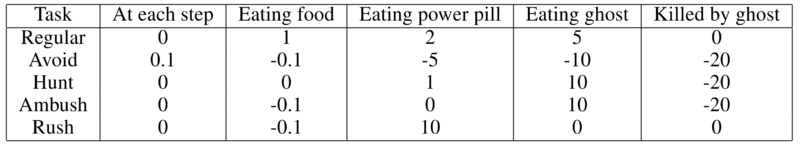

The goal of this part is to apply the same I2A model to different tasks. The five tasks are described as follows:

* Regular: level is cleared when all the food is eaten;
* Avoid: level is cleared after 128 steps;
* Hunt: level is cleared when all ghosts are eaten or after 80 steps;
* Ambush: level is cleared when all ghosts are eaten or after 80 steps;
* Rush: level is cleared when all power pills are eaten.

[[File:minipacman_reward.png|800px|center|thumb|Table 3: the reward settings in different tasks]]

Unlike in the Sokoban task, in order to capture long-range dependencies across pixels, the authors also use a layer called pool-and-inject, which applies global max-pooling over each feature map, broadcasts the resulting values as feature maps of the same size, and concatenates the result to the input. Pool-and-inject layers are therefore size-preserving layers that communicate the maximum value of each feature map globally to the next convolutional layer. The environment model for MiniPacman is shown in Figure 7.

[[File:minipacman_model.png|800px|center|thumb|Figure 7: The MiniPacman environment model]]
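The pool-and-inject operation described above can be sketched in plain Python on nested lists, with feature maps stored as a list of channels and each channel as a list of rows. This is an illustrative sketch rather than the authors' implementation; a real network would use tensor operations.

```python
from typing import List

Channel = List[List[float]]


def pool_and_inject(feature_maps: List[Channel]) -> List[Channel]:
    """Global max-pool each channel, tile the maximum to the channel's size,
    and concatenate the tiled maps to the input along the channel axis."""
    injected = []
    for channel in feature_maps:
        channel_max = max(max(row) for row in channel)
        # Broadcast the single maximum to a map with the same height and width.
        injected.append([[channel_max for _ in row] for row in channel])
    return feature_maps + injected


x = [
    [[1.0, 2.0], [3.0, 4.0]],
    [[0.0, -1.0], [5.0, 2.0]],
]
y = pool_and_inject(x)  # 2 input channels become 4; height and width are unchanged
```

The spatial size is preserved while the channel count doubles, so every position in the next convolution sees the global maximum of each input map.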

To illustrate the benefits of model-based methods in this multi-task setting, the authors trained a single environment model to predict both observations (frames) and events, so that the environment model is effectively shared across all tasks. The results in Figure 8 (right) illustrate the benefit of the I2A architecture, which outperforms the standard agent in all tasks. Note that for tasks 4 and 5 the rewards are particularly sparse, and anticipating the ghost dynamics is especially important. The I2A agent can leverage its environment and reward model to explore the environment much more effectively.

[[File:minipacman.png|800px|center|thumb|Figure 8: MiniPacman environment. Left: two frames from a MiniPacman game: the player is green, dangerous ghosts are red, food is dark blue, empty corridors are black, and power pills are cyan. After eating a power pill (right frame), the player can eat the 4 weak ghosts (yellow). Right: performance after 300 million environment steps for different agents and all tasks. Note that I2A clearly outperforms the other two agents on all tasks with sparse rewards.]]

[[File:imagination-946.PNG]]

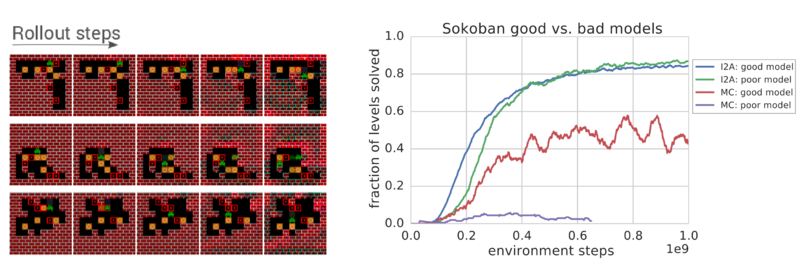

The training curves for the various experimental tasks described in this paper are provided in the figure above.

=Conclusion=

In this paper, the authors build on recent successes in CNNs and reinforcement learning and propose a novel approach that combines model-free and model-based methods, called Imagination-Augmented Agents (I2As). Unlike classical model-based RL and planning methods, I2A is able to successfully use imperfect models to support model-free decisions. This approach outperforms model-free baselines on MiniPacman and on the challenging, combinatorial domain of Sokoban. As the experiments suggest, the method is able to use imperfect models to interpret future states and rewards.

I2As trade off environment interactions for computation by pondering before acting; the imagination core is therefore essential in irreversible domains, where actions can have catastrophic outcomes. Compared to traditional Monte-Carlo search methods, the search space in I2A grows only linearly with the rollout length, and I2As require far fewer function calls. This work may significantly broaden the applicability of model-based RL concepts and ideas.

=Insight=

This is a paper with very interesting ideas. However, the work seems hard for an individual researcher to reproduce. Since the architecture works as a whole, it is very difficult to debug each part separately. Meanwhile, the training process is long, with up to 1e9 steps, which demands substantial computing resources.

In terms of the architecture itself, the design of the CNNs for the tasks seems very empirical. The authors did not give reasons or rules for this part. It is also unclear why the authors applied residual connections in such a shallow network. According to the paper, although the CNN is quite simple, some details of the LSTM encoder are omitted. Therefore, the backpropagation process across the whole model is not so clear.

Regarding the environment model, the authors used a pre-trained model instead of joint training. Would it be hard to train both models simultaneously?

Lastly, the authors introduce a new layer, the pool-and-inject layer, whose motivation and plausibility are not so clear. It would be better if the authors compared it with a common pooling layer.

In spite of some missing details, this is a solid work with a novel idea and many tricks. In addition, the experimental settings are quite inspiring and worth learning from.

Using memory networks instead of an LSTM could alleviate the problem of remembering long-term rewards. Performing inference over the memory could lead to more accurate insights from the internal simulations performed by the imagination-augmented agents.

=Reference=

# A commentary of the paper by the authors can be found on: https://www.youtube.com/watch?v=agXIYMCICcc
# Buesing, L., Badia, A.P., Battaglia, P.W., Guez, A., Heess, N., Li, Y., Pascanu, R., Racanière, S., Reichert, D.P., Rezende, D.J., Silver, D., Vinyals, O., Weber, T., & Wierstra, D. (2017). Imagination-Augmented Agents for Deep Reinforcement Learning. CoRR, abs/1707.06203.
# YuXuan Liu, Abhishek Gupta, Pieter Abbeel, and Sergey Levine. Imitation from observation: Learning to imitate behaviors from raw video via context translation. arXiv preprint arXiv:1707.03374, 2017.
# Marc Deisenroth and Carl E Rasmussen. Pilco: A model-based and data-efficient approach to policy search. In Proceedings of the 28th International Conference on machine learning (ICML-11), pages 465–472, 2011.
# Jessica B. Hamrick, Andy J. Ballard, Razvan Pascanu, Oriol Vinyals, Nicolas Heess, and Peter W. Battaglia. Metacontrol for adaptive imagination-based optimization. In Proceedings of the 5th International Conference on Learning Representations (ICLR 2017), 2017.
# Razvan Pascanu, Yujia Li, Oriol Vinyals, Nicolas Heess, David Reichert, Theophane Weber, Sebastien Racaniere, Lars Buesing, Daan Wierstra, and Peter Battaglia. Learning model-based planning from scratch. arXiv preprint, 2017.
# Mnih, V., Kavukcuoglu, K., Silver, D., Rusu, A. A., Veness, J., Bellemare, M. G., ... & Petersen, S. (2015). Human-level control through deep reinforcement learning. Nature, 518(7540), 529-533.
# Introduction to MCTS http://mcts.ai/about/index.html
# Yevgen Chebotar, Karol Hausman, Marvin Zhang, Gaurav Sukhatme, Stefan Schaal, Sergey Levine. "Combining Model-Based and Model-Free Updates for Trajectory-Centric Reinforcement Learning". arxiv pre-print; arXiv:1703.03078 [cs.RO]
# Aviv Tamar, Yi Wu, Garrett Thomas, Sergey Levine, and Pieter Abbeel. Value iteration networks. In Advances in Neural Information Processing Systems, pages 2154–2162, 2016.

#YuXuan Liu, Abhishek Gupta, Pieter Abbeel, and Sergey Levine. Imitation from observation: Learning to imitate behaviors from raw video via context translation. arXiv preprint arXiv:1707.03374, 2017. | |||

#Marc Deisenroth and Carl E Rasmussen. Pilco: A model-based and data-efficient approach to policy search. In Proceedings of the 28th International Conference on machine learning (ICML-11), pages 465–472, 2011. | |||

#Jessica B. Hamrick, Andy J. Ballard, Razvan Pascanu, Oriol Vinyals, Nicolas Heess, and Peter W. Battaglia. Metacontrol for adaptive imagination-based optimization. In Proceedings of the 5th International Conference on Learning Representations (ICLR 2017), 2017. | |||

#Aviv Tamar, Yi Wu, Garrett Thomas, Sergey Levine, and Pieter Abbeel. Value iteration networks. In Advances in Neural Information Processing Systems, pages 2154–2162, 2016. | |||

= Appendix = | |||

This paper provides a rich appendix that expounds upon the authors implementation in much greater detail. | |||

== A Training and the rollout policy distribution details == | |||

As in other reinforcement learning works each agent used in the paper defines a stochastic policy. While training the models, to increase the probability of an action being taken, A3C applies an update $\Delta \theta$ to the parameters $\theta$ using policy gradient $g(\theta)$: | |||

$ g(\theta) = \nabla_{\theta}log(\pi)(a_{t}|o_{t};\theta)A(o_{t}; \theta_{v})$, | |||

where $A(o_{t}; \theta_{v})$ denotes an estimate of the advantage function. We learn a value function $V(o_t;\theta_v)$ and hence use it to compute the advantage. | |||

== C MiniPacman additional details == | |||

=== Task collection === | |||

[[File:task_collection.PNG]] | |||

Latest revision as of 20:24, 28 November 2017

Introduction

An interesting research area in reinforcement learning is developing intelligent agents for playing video games. Before the introduction of deep learning, video game agents were commonly coded using Monte-Carlo Tree Search (MCTS) over pre-set rules. MCTS is used for making decisions in artificial intelligence problems by focusing the analysis on the most promising moves. The basic algorithm consists of four steps: selection, expansion, simulation, and backpropagation. Recent research has shown deep reinforcement learning to be very successful at playing video games such as those on the Atari 2600. Specifically, the method (Figure 1), called Deep Q-Learning (DQN), learns the optimal actions from current observations (raw pixels) [Mnih et al., (2015)]. However, there are some complex games where DQN fails to learn: some games require solving a sub-problem without explicit reward, or contain irreversible domains where actions can be catastrophic. A typical example is Sokoban. As with human players, an RL model needs planning and inference to play it, which makes this kind of game challenging for RL.

In reinforcement learning, algorithms can be divided into two categories: model-free and model-based. Model-based reinforcement learning tries to infer the dynamics of the environment and uses them to gain reward, while model-free reinforcement learning learns the actions that yield the best reward without modelling the environment. More specifically, model-based methods learn a model of the environment (the reward function $R(s, s^{'})$ and the transition probability $P(s^{'} | s, a)$, where $s^{'}$, $s$ and $a$ are the next state, current state and action respectively), while model-free methods never explicitly learn such a model. DQN, mentioned above (Figure 1), is a model-free method. It takes raw pixels as input and maps them to values or actions. As a drawback, large amounts of training data are required. In addition, the policies do not generalize to new tasks in the same environment. A model-based method instead tries to build a model of the environment. By querying the model, agents can avoid irreversible, poor decisions. As an approximation of the environment, the model can enable better generalization across states. However, this approach has only shown success in limited settings, where an exact transition model is given, or in simple domains. In complex environments, model-based methods suffer from model errors due to function approximation. These errors compound during planning, causing poor agent performance. Currently, there is no model-based method that is robust against such imperfections.
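To make the distinction concrete, the following is a minimal tabular sketch, not taken from the paper. `fit_tabular_model` estimates $P(s^{'} | s, a)$ and $R(s, s^{'})$ from sampled transitions, while `q_learning_update` improves action values directly from one sample without storing any model. The function names, the tabular setting and the hyperparameters `alpha` and `gamma` are assumptions chosen for illustration.

```python
from collections import defaultdict


def fit_tabular_model(transitions):
    """Model-based side: estimate P(s'|s,a) and mean R(s,s') from (s, a, r, s') samples."""
    counts = defaultdict(lambda: defaultdict(int))
    reward_sum = defaultdict(float)
    reward_n = defaultdict(int)
    for s, a, r, s_next in transitions:
        counts[(s, a)][s_next] += 1
        reward_sum[(s, s_next)] += r
        reward_n[(s, s_next)] += 1
    # Normalise visit counts into empirical transition probabilities.
    P = {
        sa: {s2: c / sum(nxt.values()) for s2, c in nxt.items()}
        for sa, nxt in counts.items()
    }
    R = {key: reward_sum[key] / reward_n[key] for key in reward_sum}
    return P, R


def q_learning_update(Q, s, a, r, s_next, actions, alpha=0.5, gamma=0.9):
    """Model-free side: update Q(s,a) from a single sample; no P or R is kept."""
    best_next = max(Q.get((s_next, b), 0.0) for b in actions)
    old = Q.get((s, a), 0.0)
    Q[(s, a)] = old + alpha * (r + gamma * best_next - old)
    return Q


# Hypothetical samples from a three-state chain with a single action "r".
samples = [(0, "r", 0.0, 1), (0, "r", 0.0, 1), (0, "r", 1.0, 2), (1, "r", 1.0, 2)]
P, R = fit_tabular_model(samples)
Q = q_learning_update({}, 0, "r", 1.0, 2, ["r"])
```

The learned `P` and `R` can be queried for states the agent is not currently in, which is what planning relies on; the `Q` table only answers "how good is this action here".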

In this paper, the authors introduce a novel deep reinforcement learning architecture called Imagination-Augmented Agents (I2As). This method enables agents to learn to interpret predictions from a learned environment model in order to construct implicit plans. It combines model-free and model-based aspects. Its advantage is that it learns end-to-end to extract information from model simulations without making any assumptions about the structure or the accuracy of the environment model. As shown in the results, this method outperforms the model-free baseline in the games Sokoban and MiniPacman. In addition, the experiments show that I2A is able to successfully use imperfect models.

Motivation

The capability to "imagine" and reason about the future is an important property of an intelligent and sophisticated RL algorithm. Beyond that, the agent must be able to construct a plan using this knowledge. In a model-based approach, an "internal model" is used to analyze how actions lead to future outcomes in order to reason and plan. These internal models work well because the provided environments are generally "perfect": they have clearly defined rules which allow outcomes to be predicted very accurately in almost every circumstance. But the real world is complex, rules are not so clearly defined, and unpredictable problems often arise. Even for the most intelligent agents, imagining in these complex environments is a long and costly process. Hence this paper proposes combining the model-free and model-based approaches, using imagination augmentation, so that the agent can work in complex situations. Although the structure of this method is complex, the motivation is intuitive: since the agent suffers from irreversible decisions, trying actions in simulated states may be helpful. To reduce the expensive search of traditional MCTS methods, decisions from a policy network are used to cut down the number of search steps. To keep context information, rollout results are encoded by an LSTM encoder. The final output combines the results of the model-free network and the model-based network.

Related Work

Some works try to apply deep learning to model-based reinforcement learning. The popular approach is to learn a neural network model of the environment and use it in classical planning algorithms. These works cannot handle the mismatch between the learned model and the ground truth. [Liu et al.(2017)] use context information from trajectories, but in the setting of imitation learning.

To deal with imperfect models, [Deisenroth and Rasmussen(2011)] try to capture model uncertainty by applying computationally expensive Gaussian Process models. To develop such a policy search method, the authors of that work used analytic gradients of an approximation to the expected return for indirect policy search. By learning a probabilistic dynamics model and explicitly incorporating model uncertainty into long-term planning, this policy search method can cope with very little data and facilitates learning from scratch in only a few trials.

Similar ideas can be found in a study by [Hamrick et al.(2017)]: they present a neural network that queries expert models, but focus only on meta-control for continuous contextual bandit problems. Pascanu et al.(2017) extend this work by focusing on explicit planning in sequential environments.

This paper claims to build upon the work of [Tamar et al. (2016)]. In these works, neural networks whose architectures mimic classical iterative planning algorithms are presented. Such models are trained by reinforcement learning or to predict user-defined, high-level features. The authors did not define any explicit environment model.

Approach

The summary of the architecture of I2A can be seen in Figure 2.

The observation $O_t$ (Figure 2 right) is fed into two paths. The model-free path is a standard model-free network that predicts the best action given $O_t$, whereas the model-based path performs rollouts. The aggregator combines the $n$ encoded rollout outputs ($n$ equals the number of actions in the action space) and forwards the result to the next layer. Together, the two paths are used to generate a policy function $\pi$ that outputs an action. In each rollout, the imagination core is used to predict the future state and reward.

Imagination Core

The imagination-augmented agents adopt a concept called the "imagination encoder", a neural network that learns to extract the information relevant to the agent's future decisions and to ignore what is irrelevant. In particular, these agents have the following features: (i) they learn to interpret their internal simulations, which capture the environment dynamics; (ii) they adapt to the number of imagined trajectories, which makes the imagination more efficient; and (iii) they can learn different strategies for constructing plans by choosing the appropriate trajectory. The imagination core (Figure 2 left) plays the key role in the model-based path. It consists of two parts: an environment model and a rollout policy. The former is an approximation of the environment, and the latter is used to simulate imagined trajectories, which are interpreted by a neural network and provided as additional context to a policy network.

Environment Model

In order to augment agents with imagination, the method relies on environment models that, given current information, can be queried to make predictions about the future. In this work, the environment model is built from action-conditional next-step predictors, which take the current observation and current action as input and predict the next observation and the next reward (Figure 3).

The authors can either pretrain the environment model before embedding it (with frozen weights) within the I2A architecture or jointly train it with the agent by adding $l_{model}$ to the total loss as an auxiliary loss. In practice, they found that pre-training the environment model led to faster runtime of the I2A architecture, so they adopted this strategy.

Rollout Policy

A rollout is a simulated trajectory. In this work, one rollout is performed for each possible action in the environment.

A rollout policy $\hat \pi$ is a function that takes the current observation $O$ and outputs an action $a$ that potentially leads to maximal reward. In this architecture, the rollout policy can be a DQN network. In the experiments, the rollout policy $\hat \pi$ is shared across all rollouts. After experimenting with different types of rollout policy (random, pre-trained), the authors found that the most efficient strategy is to distill the imagination-augmented policy into a model-free policy. This consists of creating a small model-free network $\hat \pi(O_t)$ and adding to the total loss a cross-entropy auxiliary loss between the imagination-augmented policy $\pi(O_t)$, computed on the current observation, and the policy $\hat \pi(O_t)$, computed on the same observation.

$$ l_{dist} (\pi, \hat \pi)(O_t) = \lambda_{dist} \sum_a \pi(a|O_t) \log \hat \pi(a|O_t) $$
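As a small numerical illustration of this auxiliary loss, the sketch below evaluates the cross entropy between two action distributions given as logits. It is written with the conventional leading minus sign of cross entropy (so that minimising the loss pulls $\hat \pi$ toward $\pi$), which is an assumption about how the sum above enters the total loss. The function names and the default `lam_dist=1.0` are likewise illustrative.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(pi_logits, pi_hat_logits, lam_dist=1.0):
    """Cross entropy between pi (target) and pi_hat (rollout policy).

    Assumption: the usual sign convention H(pi, pi_hat) = -sum_a pi(a) log pi_hat(a)
    is used, scaled by the weight lam_dist.
    """
    pi = softmax(pi_logits)
    pi_hat = softmax(pi_hat_logits)
    return -lam_dist * sum(p * math.log(q) for p, q in zip(pi, pi_hat))


# A rollout policy that agrees with pi incurs a lower loss than one that disagrees.
matched = distillation_loss([2.0, 0.0], [2.0, 0.0])
mismatched = distillation_loss([2.0, 0.0], [0.0, 2.0])
```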

Together, as the imagination core, the environment model and the rollout policy produce $n$ trajectories $\hat \tau_1,...,\hat \tau_n$. Each imagined trajectory $\hat \tau$ is a sequence of features $(\hat f_{t+1},...,\hat f_{t+\tau})$, where $t$ is the current time, $\tau$ the length of the rollout, and $\hat f_{t+i}$ the output of the environment model (the predicted observation and reward). To remain robust to model imperfections, the architecture does not assume the learned model to be perfect, so the output does not depend only on the predicted reward.
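The rollout procedure described above can be sketched as follows. This is a toy illustration under stated assumptions, not the authors' implementation: `env_model` and `rollout_policy` are hypothetical callables standing in for the learned networks, and the one-dimensional world used to exercise them is invented for the example.

```python
def imagine_rollouts(obs, actions, env_model, rollout_policy, length):
    """Produce one imagined trajectory per initial action.

    env_model(obs, action) -> (next_obs, reward) and rollout_policy(obs) -> action
    are hypothetical stand-ins for the learned environment model and rollout policy.
    """
    trajectories = []
    for first_action in actions:
        o, a, features = obs, first_action, []
        for _ in range(length):
            o, r = env_model(o, a)      # predicted next observation and reward
            features.append((o, r))     # this pair plays the role of f_hat_{t+i}
            a = rollout_policy(o)       # later actions come from the rollout policy
        trajectories.append(features)
    return trajectories


def toy_env_model(position, action):
    """Toy 1-D world: reward 1 when position 3 is reached."""
    next_position = position + action
    return next_position, (1.0 if next_position == 3 else 0.0)


def toy_rollout_policy(position):
    """Toy policy that always steps to the right."""
    return 1


trajs = imagine_rollouts(0, [-1, 1], toy_env_model, toy_rollout_policy, length=3)
```

Only the first action of each rollout is forced; this is what yields exactly $n$ trajectories for $n$ actions.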

Trajectories Encoder

To keep the sequence information in the trajectories, the architecture uses a rollout encoder $\varepsilon$ that processes the imagined rollout as a whole and learns to interpret it (Figure 2 middle). Each trajectory is encoded as a rollout embedding $e_i=\varepsilon(\hat \tau_i)$. Then, the aggregator $A$ combines the rollout embeddings into a single imagination code $c_{ia}=A(e_1,...,e_n)$ by simply concatenating all the summaries. In the experiments, the encoder is an LSTM that takes the predicted output of the environment model as input. One observation is that the order of the sequence $\hat f_{t+1}$ to $\hat f_{t+\tau}$ has relatively little impact on performance. The encoder mimics the Bellman-type backup operations in DQN. An alternative would be to combine guided policy search with the linear quadratic regulator [Chebotar et al. (2017)], which is likewise a joint model-free and model-based trajectory update mechanism for reinforcement learning.
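The encode-then-concatenate step can be sketched with a deliberately simple recurrent encoder. The decaying sum used here is a hypothetical stand-in for the LSTM, chosen only so that the example stays self-contained; `decay` and the two-element embedding are assumptions.

```python
def encode_rollout(features, decay=0.5):
    """Fold a sequence of (observation, reward) features into a fixed-size embedding.

    Hypothetical stand-in for the LSTM encoder: a decaying running sum per channel.
    """
    h_obs, h_rew = 0.0, 0.0
    for obs, reward in features:
        h_obs = decay * h_obs + obs
        h_rew = decay * h_rew + reward
    return [h_obs, h_rew]


def aggregate(embeddings):
    """Aggregator A: concatenate the rollout embeddings into one imagination code."""
    code = []
    for e in embeddings:
        code.extend(e)
    return code


rollouts = [
    [(1, 0.0), (2, 0.0), (3, 1.0)],    # imagined trajectory for one action
    [(-1, 0.0), (0, 0.0), (1, 0.0)],   # imagined trajectory for another action
]
c_ia = aggregate([encode_rollout(t) for t in rollouts])
```

Because the aggregator concatenates, the length of the imagination code grows with the number of actions, while each embedding has a fixed size regardless of rollout length.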

Model-Free Path

The model-free path contains a network that takes only the current observation as input and generates the potentially optimal action. This network can be the same as the one in the imagination core.

In conclusion, the I2A learns to combine information from the two paths; without the model-based path, I2A simply reduces to a standard model-free network (such as A3C). The imperfect approximation results in a rollout policy with higher entropy, potentially striking a balance between exploration and exploitation.

Experiments

The following experiments were carried out on the Sokoban and MiniPacman games. All results are averages over the top three agents. These agents were trained with 32 to 64 workers, and the network was optimized with RMSprop. As the pre-training strategy, the training data was pre-generated from trajectories of a partially trained standard model-free agent; the frames needed for this pre-training are counted towards the total budget reported in the results. The authors also show that the environment model can be reused to solve multiple tasks in the same environment.

In Sokoban, the environment is a 10 x 10 grid world. All agents were trained directly on raw pixels (image size 80 x 80 with 3 channels). To make sure the network does not simply "memorize" all states, the game procedurally generates a new level each episode. Out of 40 million levels generated, less than 0.7% were repeated. Therefore, a good agent must also solve unseen levels.

The reward settings for reinforcement learning algorithms are as follows:

- Every time step, a penalty of -0.1 is applied to the agent (encourages agents to finish levels faster).

- Whenever the agent pushes a box onto a target, it receives a reward of +1 (encourages agents to push boxes onto targets).

- Whenever the agent pushes a box off a target, it receives a penalty of -1 (avoids an artificial reward loop that would be induced by repeatedly pushing a box off and onto a target).

- Finishing the level gives the agent a reward of +10 and the level terminates (strongly rewards solving a level).
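The reward rules above can be written as a small function. This is a sketch under one assumption that the list does not state explicitly: when several events occur in the same step, their rewards are summed with the per-step penalty. The argument names are illustrative.

```python
def sokoban_reward(pushed_on_target=False, pushed_off_target=False, level_finished=False):
    """Per-step Sokoban reward, assuming event rewards add to the step penalty."""
    reward = -0.1                  # per-step penalty
    if pushed_on_target:
        reward += 1.0              # box pushed onto a target
    if pushed_off_target:
        reward -= 1.0              # box pushed off a target
    if level_finished:
        reward += 10.0             # level solved
    return reward


# Pushing a box off a target and back on costs two step penalties and gains nothing,
# so the off/on loop cannot be exploited for reward.
loop_return = sokoban_reward(pushed_off_target=True) + sokoban_reward(pushed_on_target=True)
```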

To show the advantage of I2A, the authors set a model-free standard architecture as a baseline. The architecture is a multi-layer convolutional neural network (CNN), taking the current observation $O_t$ as input, followed by a fully connected (FC) hidden layer, which typically makes use of a score function. This FC layer feeds into two heads: into an FC layer with one output per action computing the policy logits $\log \pi(a_t|O_t, \theta)$; and into another FC layer with a single output that computes the value function $V(O_t; \theta_v)$.

- for MiniPacman: the CNN has two layers, both with 3x3 kernels, 16 output channels and strides 1 and 2; the following FC layer has 256 units

- for Sokoban: the CNN has three layers with kernel sizes 8x8, 4x4, 3x3, strides of 4, 2, 1 and number of output channels 32, 64, 64; the following FC has 512 units
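As a quick check of the Sokoban configuration, the spatial size after each convolution can be computed from the kernel sizes and strides listed above. The sketch assumes square inputs and no padding, neither of which is stated in the summary, so the resulting numbers should be read as indicative only.

```python
def conv_output_size(size, kernel, stride, padding=0):
    """Spatial output size of a convolution on a square input (floor division)."""
    return (size + 2 * padding - kernel) // stride + 1


def trace_sizes(input_size, layers):
    """Return the spatial size after each (kernel, stride) layer."""
    sizes = []
    size = input_size
    for kernel, stride in layers:
        size = conv_output_size(size, kernel, stride)
        sizes.append(size)
    return sizes


# Sokoban baseline: 80 x 80 input, kernels 8, 4, 3 with strides 4, 2, 1.
sokoban_sizes = trace_sizes(80, [(8, 4), (4, 2), (3, 1)])
# With 64 output channels in the last layer, the flattened input to the FC layer is:
sokoban_features = sokoban_sizes[-1] ** 2 * 64
```

Under these assumptions the feature map shrinks from 80 to 19, 8 and finally 6 pixels per side, so the 512-unit FC layer would receive 6 x 6 x 64 = 2304 inputs.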

Sokoban

Sokoban is a video game classified as a transport puzzle. The player moves boxes to their target locations on a board seen from a top-down view. The boxes can only be pushed, and many moves become irreversible if the player does not plan them properly, which may render the puzzle unsolvable. The player is confined to the board and may move horizontally or vertically onto empty squares (never through walls or boxes). The player can also move into a box, which pushes it into the square beyond. Boxes may not be pushed into other boxes or walls, and they cannot be pulled. The number of boxes is equal to the number of storage locations. The puzzle is solved when all boxes are at storage locations.
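These movement rules can be sketched as a single transition function. The representation is an assumption made for the example: cells are `(row, column)` tuples, walls are a set that also encloses the board, and boxes are a `frozenset`.

```python
def move(walls, boxes, player, direction):
    """Apply one Sokoban move and return (new_player, new_boxes).

    Illegal moves leave the state unchanged. Boxes can only be pushed, never pulled.
    """
    dr, dc = direction
    target = (player[0] + dr, player[1] + dc)
    if target in walls:
        return player, boxes                      # cannot walk through walls
    if target in boxes:
        beyond = (target[0] + dr, target[1] + dc)
        if beyond in walls or beyond in boxes:
            return player, boxes                  # box blocked by a wall or another box
        boxes = (boxes - {target}) | {beyond}     # push the box one square
    return target, boxes


def solved(boxes, targets):
    """The puzzle is solved when every box sits on a storage location."""
    return boxes == targets


RIGHT = (0, 1)
walls = {(0, 3)}
state = move(walls, frozenset({(0, 1)}), (0, 0), RIGHT)   # pushes the box to (0, 2)
blocked = move(walls, state[1], state[0], RIGHT)          # box is now against the wall
```

The second call shows why planning matters: once the box rests against the wall it can no longer be pushed further in that direction, and it can never be pulled back.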

The environment model for Sokoban is shown in Figure 4.

In addition, to separate out the effect of the larger architecture in I2A, the authors set up a copy-model agent that uses the same architecture as I2A but with the environment model replaced by an identity map. This agent can be regarded as an I2A agent without imagination.

The results are shown in Figure 4 (left). I2A agents can solve many more levels than the standard model-free baseline. I2A also far outperforms the copy-model version, suggesting that the environment model is crucial. The authors also trained an I2A whose environment model predicted only observations and no rewards. This also performed worse. However, after much longer training (3e9 steps), these agents did recover the performance of the original I2A, which was never the case for the baseline agent even with that many steps. Hence, reward prediction is very helpful but not absolutely necessary in this task, and imagined observations alone are informative enough to obtain high performance on Sokoban. Note that this is in contrast to many classical planning and model-based reinforcement learning methods, which often rely on reward prediction.

Length of Rollout

A further experiment investigated how the length of individual rollouts affects performance. The authors performed a parameter search. Figure 5 (right) shows the influence of the rollout length. Using 3 rollout steps speeds up learning and improves performance significantly compared to 1 step, and 5 is the optimal number. This implies that rollouts can be very helpful and informative, and that they enable the agent to learn about moves it cannot recover from.

Imperfections

To demonstrate that I2A can handle less reliable predictions, the authors set up an experiment where the I2A used a poor environment model (with a smaller number of parameters), in which errors may accumulate across the rollout (Figure 6 left). The authors suggest that it is learning a rollout encoder that enables I2As to deal with imperfect model predictions. This can be compared to a setup without a rollout encoder. As shown in Figure 6 (right), even with a relatively poor environment model, the performance of I2A is stable, unlike traditional Monte-Carlo search, which explicitly estimates the value of each action from rollouts rather than learning an arbitrary encoding of the rollouts. An interesting result is that a rollout length of 5 no longer outperforms a length of 3, which matches intuition, since errors of a poor model accumulate over longer rollouts.

Perfections

Since I2A is robust to imperfect environment models, the authors also tested an I2A agent with a nearly perfect environment model; the results are in Table 1 and Table 2. Traditional Monte-Carlo Tree Search is tested as the baseline. As the table shows, although MCTS is able to solve many levels, it needs a very large number of search steps. In contrast, I2A with the nearly perfect model can solve the same fraction of levels with far fewer steps.

Generalization

Lastly, the authors probe the generalization capabilities of I2As, beyond handling random level layouts in Sokoban. The agents were trained on levels with 4 boxes. Table 2 shows the performance of I2A when such an agent was tested on levels with different numbers of boxes, and that of the standard model-free agent for comparison. It turns out that I2As generalize well; at 7 boxes, the I2A agent is still able to solve more than half of the levels, nearly as many as the standard agent on 4 boxes.

MiniPacman

MiniPacman is a game adapted from the classic game PacMan. In the game (Figure 8, left), the player explores a maze that contains food while being chased by ghosts. The maze also contains power pills; when one is eaten, for a fixed number of steps the player moves faster, and the ghosts run away and can be eaten. These dynamics are common to all tasks. Each task is defined by a vector $w_{rew} \in \mathbb{R}^5$, associating a reward to each of the following five events: moving, eating food, eating a power pill, eating a ghost, and being eaten by a ghost. As such, the reward vector $w_{rew}$ can be interpreted as an "instruction" about which task to solve in the same environment. The goal of this part is to apply the same I2A model to different tasks. The five tasks are described as follows:

- Regular: level is cleared when all the food is eaten;

- Avoid: level is cleared after 128 steps;

- Hunt: level is cleared when all ghosts are eaten or after 80 steps.

- Ambush: level is cleared when all ghosts are eaten or after 80 steps.

- Rush: level is cleared when all power pills are eaten.
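The role of the reward vector $w_{rew}$ can be illustrated as a weighted sum over the five events. The weights in the example are hypothetical placeholders, not the values used in the paper; only the structure (one weight per event, same dynamics for every task) is taken from the description above.

```python
EVENTS = ("move", "eat_food", "eat_power_pill", "eat_ghost", "eaten_by_ghost")


def task_reward(w_rew, events_this_step):
    """Reward for one step: sum of the task weights of the events that occurred."""
    if len(w_rew) != len(EVENTS):
        raise ValueError("w_rew needs one weight per event")
    return sum(w for w, name in zip(w_rew, EVENTS) if name in events_this_step)


# Hypothetical weights: two "tasks" sharing the same dynamics but valuing events differently.
w_food_task = (0, 1, 2, 5, -10)
w_ghost_task = (0, 0, 1, 10, -20)

step_events = {"move", "eat_ghost"}
r_food = task_reward(w_food_task, step_events)
r_ghost = task_reward(w_ghost_task, step_events)
```

Because only `w_rew` changes between tasks, a single environment model that predicts frames and events can be reused for all of them.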

Unlike in Sokoban, in order to capture long-range dependencies across pixels, the authors also made use of a layer called pool-and-inject, which applies global max-pooling over each feature map, broadcasts the resulting values as feature maps of the same size, and concatenates the result to the input. Pool-and-inject layers are therefore size-preserving layers which communicate the maximum value of each feature map globally to the next convolutional layer. The environment model for MiniPacman is shown in Figure 7.
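A pool-and-inject layer, as described here, can be sketched on plain nested lists. The channel-first layout (a list of $C$ maps, each $H \times W$) is an assumption made for the example; a real implementation would operate on tensors inside the network.

```python
def pool_and_inject(feature_maps):
    """Global max-pool each map, broadcast it to H x W, and concatenate to the input.

    feature_maps: list of C maps, each a list of H rows of W numbers.
    Returns 2C maps: the C originals followed by C constant maps.
    """
    injected = []
    for fmap in feature_maps:
        global_max = max(max(row) for row in fmap)                 # global max-pooling
        injected.append([[global_max for _ in row] for row in fmap])  # broadcast to H x W
    return feature_maps + injected                                 # concatenate on channels


x = [
    [[1, 2], [3, 4]],
    [[0, -1], [5, 2]],
]
y = pool_and_inject(x)
```

The spatial size is unchanged and the channel count doubles, so every position in the next convolution sees the global maximum of each input feature map.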

To illustrate the benefits of model-based methods in this multi-task setting, the authors trained a single environment model to predict both observations (frames) and events, so that the environment model is effectively shared across all tasks. The results in Figure 7 (right) illustrate the benefit of the I2A architecture, which outperforms the standard agent in all tasks. Note that for tasks 4 and 5, the rewards are particularly sparse, and the anticipation of ghost dynamics is especially important. The I2A agent can leverage its environment and reward model to explore the environment much more effectively.

The training curves for the various experimental tasks described in this paper are provided in the figure above.

Conclusion

In this paper, the authors build on recent successes in CNNs and reinforcement learning and propose a novel approach, Imagination-Augmented Agents, which combines model-free and model-based methods. Unlike classical model-based RL and planning methods, I2A is able to successfully use imperfect models to support model-free decisions. This approach outperforms model-free baselines on MiniPacman and on the challenging, combinatorial domain of Sokoban. As the experiments suggest, this method is able to successfully use imperfect models to interpret future states and rewards.

I2As trade off environment interactions for computation by pondering before acting; the imagination core is thus essential in irreversible domains, where actions can have catastrophic outcomes. Compared to traditional Monte-Carlo search methods, the search cost in I2A grows only linearly with the length of the rollouts, and I2As require far fewer function calls. This work may significantly broaden the applicability of model-based RL concepts and ideas.

Insight

This is a paper with very interesting ideas. However, the work seems very hard for an individual researcher to reproduce. Since the architecture works as a whole, it is very difficult to debug each part separately. Meanwhile, training is long, with up to 1e9 steps, which also demands substantial computing resources.

In terms of the architecture itself, the design of the CNN for the tasks seems to be very empirical. The authors did not give the reasons or rules behind this part. It is also not explained why the authors applied a residual connection in such a shallow network. According to the paper, even though the CNN is quite simple, some details of the LSTM encoder are omitted. Therefore, the backpropagation process across the whole model is not entirely clear.

Regarding the environment model, the authors used a pre-trained model instead of joint training. Would it be hard to train both models simultaneously?

Lastly, the authors introduced a new layer, the pool-and-inject layer, but its motivation and plausibility are not very clear. It would be better if the authors compared it with a common pooling layer.

In spite of some missing details, this is a solid work with a novel idea and many tricks. In addition, the experimental settings are quite inspiring and worth learning from.

The use of memory networks instead of an LSTM could alleviate the problem of remembering long-term rewards. Performing inference over the memory could lead to more accurate insight generation for the internal simulations performed by the imagination-augmented agents.

Reference

- A commentary of the paper by the authors can be found on: https://www.youtube.com/watch?v=agXIYMCICcc

- Buesing, L., Badia, A.P., Battaglia, P.W., Guez, A., Heess, N., Li, Y., Pascanu, R., Racanière, S., Reichert, D.P., Rezende, D.J., Silver, D., Vinyals, O., Weber, T., & Wierstra, D. (2017). Imagination-Augmented Agents for Deep Reinforcement Learning. CoRR, abs/1707.06203.

- YuXuan Liu, Abhishek Gupta, Pieter Abbeel, and Sergey Levine. Imitation from observation: Learning to imitate behaviors from raw video via context translation. arXiv preprint arXiv:1707.03374, 2017.

- Marc Deisenroth and Carl E Rasmussen. Pilco: A model-based and data-efficient approach to policy search. In Proceedings of the 28th International Conference on machine learning (ICML-11), pages 465–472, 2011.

- Jessica B. Hamrick, Andy J. Ballard, Razvan Pascanu, Oriol Vinyals, Nicolas Heess, and Peter W. Battaglia. Metacontrol for adaptive imagination-based optimization. In Proceedings of the 5th International Conference on Learning Representations (ICLR 2017), 2017.

- Razvan Pascanu, Yujia Li, Oriol Vinyals, Nicolas Heess, David Reichert, Theophane Weber, Sebastien Racaniere, Lars Buesing, Daan Wierstra, and Peter Battaglia. Learning model-based planning from scratch. arXiv preprint, 2017.

- Mnih, V., Kavukcuoglu, K., Silver, D., Rusu, A. A., Veness, J., Bellemare, M. G., ... & Petersen, S. (2015). Human-level control through deep reinforcement learning. Nature, 518(7540), 529-533.

- Introduction to MCTS http://mcts.ai/about/index.html

- Yevgen Chebotar, Karol Hausman, Marvin Zhang, Gaurav Sukhatme, Stefan Schaal, Sergey Levine. "Combining Model-Based and Model-Free Updates for Trajectory-Centric Reinforcement Learning". arxiv pre-print; arXiv:1703.03078 [cs.RO]

- Aviv Tamar, Yi Wu, Garrett Thomas, Sergey Levine, and Pieter Abbeel. Value iteration networks. In Advances in Neural Information Processing Systems, pages 2154–2162, 2016.

Appendix

This paper provides a rich appendix that expounds upon the authors' implementation in much greater detail.

A Training and the rollout policy distribution details

As in other reinforcement learning works, each agent used in the paper defines a stochastic policy. While training the models, to increase the probability of an action being taken, A3C applies an update $\Delta \theta$ to the parameters $\theta$ using the policy gradient $g(\theta)$:

$ g(\theta) = \nabla_{\theta} \log \pi(a_{t}|o_{t};\theta) A(o_{t}; \theta_{v})$,

where $A(o_{t}; \theta_{v})$ denotes an estimate of the advantage function. We learn a value function $V(o_t;\theta_v)$ and hence use it to compute the advantage.
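One common way to turn a learned value function into an advantage estimate is the n-step return used by A3C-style agents: the discounted sum of observed rewards, bootstrapped with the value of the last observation, minus the value of the first. The sketch below assumes this estimator; the summary itself does not state which form the authors use, and the discount `gamma` and the numbers are illustrative.

```python
def n_step_advantage(rewards, value_start, value_end, gamma=0.99):
    """Estimate A(o_t) = sum_i gamma^i r_{t+i} + gamma^n V(o_{t+n}) - V(o_t).

    Assumption: an n-step bootstrapped return, as commonly used with A3C.
    """
    ret = value_end                     # bootstrap from V(o_{t+n})
    for r in reversed(rewards):
        ret = r + gamma * ret           # accumulate discounted rewards backwards
    return ret - value_start            # subtract the baseline V(o_t)


# Three observed rewards, bootstrapped with V(o_{t+3}) = 4 and baseline V(o_t) = 1.
adv = n_step_advantage([1.0, 0.0, 2.0], value_start=1.0, value_end=4.0, gamma=0.5)
```

A positive advantage means the sampled action did better than the value function expected, so the gradient $g(\theta)$ increases its probability.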