kernelized Sorting: Difference between revisions

m (Conversion script moved page Kernelized Sorting to kernelized Sorting: Converting page titles to lowercase) |

|||

| (134 intermediate revisions by 8 users not shown) | |||

| Line 1: | Line 1: | ||

Object matching is a fundamental operation in data analysis. It typically requires the definition of a similarity measure between | Object matching is a fundamental operation in data analysis. It typically requires the definition of a similarity measure between classes of objects to be matched. Instead, we develop an approach which is able to perform matching by requiring a similarity measure only within each of the classes. This is achieved by maximizing the dependency between matched pairs of observations by means of the Hilbert Schmidt Independence Criterion. This problem can be cast as one of maximizing a quadratic assignment problem with special structure and we present a simple algorithm for finding a locally optimal solution. | ||

==Introduction== | ==Introduction== | ||

| Line 9: | Line 9: | ||

Given two sets of observations <math> X= \{ x_{1},..., | Given two sets of observations <math> X= \{ x_{1},..., | ||

x_{m} \}</math> and <math>Y=\{ y_{1},..., y_{m}\} </math> | x_{m} \}\subseteq \mathcal X</math> and <math>Y=\{ y_{1},..., y_{m}\}\subseteq \mathcal Y </math> | ||

Find a permutation matrix <math> | Find a permutation matrix <math>\pi \in \Pi_{m}</math>, | ||

<math> \Pi_{m}:= \{\pi | \pi \in \{0,1\}^{m \times m} \ | <math> \Pi_{m}:= \{ \pi | \pi \in \{0,1\}^{m \times m} \textrm{where} \pi 1_{m}=1_{m}, | ||

\pi^{T}1_{m}=1_{m}\}</math> | \pi^{T}1_{m}=1_{m}\}</math> | ||

(it means <math> \Pi_{m} </math> is an m by m matrix, and in each row or column it has (m-1) zeros and only one 1) | |||

such that <math> \{ (x_{i},y_{\pi (i)}) for 1 \leqslant i \leqslant m \} | such that <math> \{ (x_{i},y_{\pi (i)}) for 1 \leqslant i \leqslant m \} | ||

</math> is maximally dependent. Here <math>1_{m} \in \mathbb{R}^{m}</math> is the | </math> is maximally dependent. Here <math>1_{m} \in \mathbb{R}^{m}</math> is the | ||

vector of all ones. | vector of all ones, and <math>\pi (i)</math> is the column number, for which we have a 1 in the i<sub>th</sub> row. | ||

Denote by <math>D(Z(\pi))</math> a measure of the dependence between x and y, where <math> Z(\pi) := \{ (x_{i},y_{\pi (i)}) for 1 \leqslant i \leqslant m \} | |||

</math>. | |||

Then we define nonparametric sorting of X and Y as follows | |||

<math> | |||

\pi^{\ast}:=\arg\max_{\pi \in \prod_{m}}D(Z(\pi)). | |||

</math> | |||

==Hilbert Schmidt Independence Criterion== | |||

Let sets of observations X and Y be drawn jointly from some probability distribution <math>Pr_{xy}</math>. The Hilbert Schmidt Independence Criterion (HSIC) measures the dependence between x and y by computing the norm of the cross-covariance operator over the domain <math> \mathcal X \times \mathcal Y</math> in Hilbert Space. | |||

let <math>\mathcal {F}</math> be the Reproducing Kernel Hilbert Space (RKHS) on | |||

<math>\mathcal {X}</math> with associated kernel <math>k: \mathcal X \times \mathcal X \rightarrow | |||

\mathbb{R}</math> and feature map <math>\phi: \mathcal X \rightarrow \mathcal {F}</math>. | |||

Let <math>\mathcal {G}</math> be the RKHS on <math>\mathcal Y</math> with kernel <math>l</math> and | |||

feature map <math>\psi</math>. The cross-covariance operator <math>C_{xy}:\mathcal | |||

{G}\rightarrow \mathcal {F}</math> is defined by | |||

<math> | |||

C_{xy}=\mathbb{E}_{xy}[(\phi(x)-\mu_{x})\otimes (\psi(y)-\mu_{y})], | |||

</math> | |||

where <math>\mu_{x}=\mathbb{E}[\phi(x)]</math>, <math>\mu_{y}=\mathbb{E}[\psi(y)]</math>. | |||

HSIC is the square of the Hilbert-Schmidt norm of the cross covariance operator <math>\, C_{xy}</math> | |||

<math> | |||

D(\mathcal {F},\mathcal {G},Pr_{xy}):=\parallel C_{xy} | |||

\parallel_{HS}^{2}. | |||

</math> | |||

In term of kernels, HSIC can be expressed as | |||

<math> | |||

\mathbb{E}_{xx'yy'}[k(x,x')l(y,y')]+\mathbb{E}_{xx'}[k(x,x')]\mathbb{E}_{yy'}[l(y,y')]-2\mathbb{E}_{xy}[\mathbb{E}_{x'}[k(x,x')]\mathbb{E}_{y}[l(y,y')]]. | |||

</math> | |||

where <math>\mathbb{E}_{xx'yy'}</math> is the expectation over both <math>\ (x, y)</math> ~ | |||

<math>\ Pr_{xy}</math> and an additional pair of variables <math>\ (x', y')</math> ~ <math>\ Pr_{xy}</math> | |||

drawn independently according to the same law. | |||

A biased estimator of HSIC given finite sample <math>Z = \{(x_{i}, | |||

y_{i})\}_{i=1}^{m}</math> drawn from <math>Pr_{xy}</math> is | |||

<math> | |||

D(\mathcal {F},\mathcal {G},Z)=(m-1)^{-2}tr HKHL = | |||

(m-1)^{-2} tr \bar{K}\bar{L} | |||

</math> | |||

where <math>K,L\in \mathbb{R}^{m\times m}</math> are the kernel matrices for | |||

the data and the labels respectively, <math>H_{ij}=\delta_{ij}-m^{-1}</math> | |||

centers the data and the labels in feature space, <math>\bar{K}:=HKH</math> and | |||

<math>\bar{L}:=HLH</math> denote the centered versions <math>K</math> and <math>L</math> respectively. | |||

Advantages of HSIC are: | |||

Computing HSIC is simple: only the kernel matrices K and L are needed; | |||

HSIC satisfies concentration of measure conditions, i.e. for random draws of observation from <math>Pr_{xy}</math>, HSIC provides values which are very similar; | |||

Incorporating prior knowledge into the dependence estimation can be done via | |||

kernels. | |||

==Kernelized Sorting== | |||

===Kernelized Sorting=== | |||

'''Claim: ''' Thr problem is equivalent to the optimization problem of | |||

<math> | |||

\pi^{\ast}=\arg\max_{\pi \in \Pi_{m}}[tr \bar{K} | |||

\pi^{T}\bar{L}\pi] | |||

</math> | |||

'''Proof''': Firstly, we need to establish <math>H</math> and <math>\pi</math> matrices commute. | |||

Since <math>H</math> is a centering matrix, we can write it as <math>H=I_{n}-11^{T}</math>. | |||

Actually, note that <math>\ H\pi=\pi H</math> iff <math>\ (I_{n}-11^{T})\pi=\pi (I_{n}-11^{T})</math> iff <math>\ 11^{T}\pi=\pi 11^{T}</math>, a result follows. | |||

Next, recall that the biased estimator of HSIC given finite sample <math>Z = \{(x_{i}, | |||

y_{i})\}_{i=1}^{m}</math> drawn from <math>Pr_{xy}</math> is | |||

<math> | |||

D(\mathcal {F},\mathcal {G},Z)=(m-1)^{-2}tr HKHL = | |||

(m-1)^{-2} tr \bar{K}\bar{L} | |||

</math> | |||

where <math>K,L\in \mathbb{R}^{m\times m}</math> are the kernel matrices for | |||

the data and the labels respectively, i.e. <math>K=xx^{T}</math> and <math>L=yy^{T}</math>. | |||

Now, for any given pair <math>(x, y_{r})</math> between <math>X</math> and <math>Y</math>, we have <math>y_{r}=\pi y</math>. | |||

Note that <math>\pi</math> is a permutation matrix, we have <math>y=\pi^{T} y_{r}</math>, so the kernel matrix <math>L=\pi^{T}y_{r}y_{r}^{T}\pi</math>. | |||

Note that the kernel matrix <math>L_{r}=y_{r}y_{r}^{T}</math>, so the kernel matrix <math>L=\pi^{T}L_{r}\pi</math>. | |||

Note that <math>tr HKHL = tr HKHHLH </math>, since <math>H</math> is idempotent. | |||

So we have <math>tr HKHL = tr HKHHLH = tr \bar K H\pi^{T}L_{r}\pi H = tr \bar K \pi^{T}HL_{r}H\pi = tr \bar K \pi^{T}\bar L_{r}\pi </math>. | |||

Clearly, it is just our objective function. | |||

====Sorting as a special case==== | |||

For general kernel matrices <math>K \,</math> and <math>L \,</math>, where <math>K_{ij}=k(x_i,x_j) \,</math> and <math>L_{ij}=l(x_i,x_j) \,</math>, the objective of the kernelized sorting problem, as explained above, is to find the permutation matrix <math> \pi \,</math> which maximizes <math>tr(\bar{K} \pi^{T}\bar{L}\pi ) = tr(HKH\pi^{T}HLH\pi)\, </math>. | |||

In the special case where the kernel functions <math>k\,</math> and <math>l\,</math> are the inner product in Euclidean space, we have <math>K=xx^{T}\,</math> and <math>L=yy^{T}\,</math>. Hence, we can rewrite the objective as | |||

<math>tr(HKH\pi^{T}HLH\pi) = tr(Hxx^{T}H\pi^{T}Hyy^{T}H\pi) = tr[Hx(Hx)^T\pi^{T}Hy(Hy)^T\pi] = tr[((Hx)^T\pi^{T}Hy) ((Hy)^T\pi Hx))]\,</math>, where the last step uses the property that trace is invariant under cyclic permutations. | |||

Note that <math>(Hx)^T\pi^{T}Hy \, </math> and <math> (Hy)^T\pi Hx = (Hx)^T\pi^{T}Hy \,</math> are scalars, therefore the objective is equal to <math> [(Hx)^T\pi (Hy)]^2 \,</math>. | |||

In the even more special case where the Euclidean space is the real line and the inner product is multiplication of real numbers, the centering matrix <math>H\,</math> merely translates the sample vector <math>y \,</math> (by the sample mean) and thus the order of <math>y \,</math> is preserved. Hence, maximizing <math> [(Hx)^T\pi (Hy)]^2 \,</math> can be solved by maximizing <math>x^T \pi y \,</math>. Under the further assumption that <math>x \,</math> is sorted ascendingly, maximizing <math> x^T \pi y \,</math> is equivalent to sorting <math>y \,</math> ascendingly, according to the Polya-Littlewood-Hardy inequality. | |||

===Diagonal Dominance=== | |||

Replace the expectations by sums where no pairwise summation indices are identical. This leads to the objective function: | |||

<math> | |||

\frac{1}{m(m-1)}\sum_{i\ne | |||

j}K_{ij}L_{ij}+\frac{1}{m^{2}(m-1)^{2}}\sum_{i\ne j,u\ne | |||

v}K_{ij}L_{uv}- \frac{2}{m(m-1)^2}\sum_{i,j\ne i,v\ne i}K_{ij}L_{iv} | |||

</math> | |||

Using the <math>\bar{K}_{ij}=K_{ij}(1-\delta_{ij})</math> and | |||

<math>\bar{L}_{ij}=L_{ij}(1-\delta_{ij})</math> for kernel matrices where | |||

the main diagonal terms have been removed we arrive at the | |||

expression <math>(m-1)^{-1}tr | |||

H\bar{L}H\bar{K}</math>. | |||

===Relaxation to a constrained eigenvalue problem=== | |||

An approximate solution of the problem by solving <br> | |||

<math> | |||

\text{maximize}_{\eta} \left\{ \eta^{T}M\eta \right\} \text{subject to} A\eta=b | |||

</math> | |||

Here the matrix <math>M=K\otimes L\in \mathbb{R}^{m^{2}\times{m^2}}</math> is | |||

given by the outer product of the constituting kernel matrices, | |||

<math>\eta \in \mathbb{R}^{m^2}</math> is a vectorized version of the | |||

permutation matrix <math>\pi</math>, and the constraints imposed by <math>A</math> and <math>b</math> | |||

amount to the polytope constraints imposed by <math>\Pi_{m}</math>. | |||

===Related Work=== | |||

Mutual Information is defined as, <math>I(X,Y)=h(X)+h(Y)-h(X,Y)</math>. We can | |||

approximate MI maximization by maximizing its lower bound. This then | |||

corresponds to minimizing an upper bound on the joint | |||

entropy <math>h(X,Y)</math>. | |||

Optimization | |||

<math> | |||

\pi^{\ast}=argmin_{\pi \in \Pi_{m}}|\log HJ(\pi)H|, | |||

</math> | |||

where <math>\ J_{ij}=K_{ij}L_{\pi(i),\pi(j)}</math>. This is related to the | |||

optimization criterion proposed by Jebara(2004) in the context of | |||

aligning bags of observations by sorting via minimum volume PCA. | |||

===Multivariate Extensions=== | |||

Let there be T random variables <math>x_i \in {\mathcal X}_i</math> which are jointly drawn from some distribution <math>p(x_1,...x_m)</math>. The expectation operator with respect to the joint distribution and with respect to the product of the marginals is given by | |||

<math> | |||

\mathbb{E}_{x_1,...,x_T}[\prod_{i=1}^{T}k_{i}(x_{i},\cdot)]</math> and <math>\prod_{i=1}^{T}\mathbb{E}_{x_i}[k_{i}(x_{i},\cdot)] | |||

</math> | |||

respectively. Both terms are equal if and only if all random variables are independent. The squared difference between both is given by | |||

<math> | |||

\mathbb{E}_{x_{i=1}^T,{x'}_{i=1}^{T}}[\prod_{i=1}^{T}k_{i}(x_{i},x_{i}^{'})]+\prod_{i=1}^{T}\mathbb{E}_{x_{i},x_{i}^{'}}[k_{i}(x_{i},x_{i}^{'})]-2\mathbb{E}_{x_{i=1}^{T}}[\prod_{i=1}^{T}\mathbb{E}_{x_{i}^{'}}[k(x_{i},x_{i}^{'})]] | |||

</math> | |||

which we refer to as multiway HSIC. | |||

Denote by <math>K_{i}</math> the kernel matrix obtained from the kernel <math>k_{i}</math> on the set of observations <math>X_{i}:=\{x_{i1},...,x_{im}\}</math>, the empirical estimate is given by | |||

<math> | |||

HSIC[X_{1},...,X_{T}]:=1_{m}^{T}(\bigodot_{i=1}^{T}K_{i})1_{m}+\prod_{i=1}^{T}1_{m}^{T}K_{i}1_{m}-2\cdot1_{m}^{T}(\bigodot_{i=1}^{T}K_{i}1_{m}) | |||

</math> | |||

where <math>\bigodot_{t=1}^{T}\ast</math> denotes elementwise product of its arguments. To apply this to sorting we only need to define T permutation matrices <math>\pi_{i} \in \Pi_{m}</math> and replace the kernel matrices <math>K_{i}</math> by <math>\pi_{i}^{T}K_{i}\pi_{i}</math>. | |||

==Optimization== | |||

===Convex Objective and Convex Domain=== | |||

Define <math>\pi</math> as a doubly stochastic matrix, | |||

<math> | |||

P_{m}:=\{\pi \in \mathbb{R}^{m \times m} where | |||

\pi_{ij}\geqslant 0 and \sum_{i}\pi_{ij}=1 and \sum_{j}\pi_{ij}=1\} | |||

</math> | |||

The objective function | |||

<math>tr K | |||

\pi^{T}L\pi</math> is convex in <math>\pi</math> . | |||

===Convex-Concave Procedure=== | |||

Compute successive linear lower bounds and maximize | |||

<math> | |||

\pi_{i+1}\leftarrow \arg\max_{\pi \in P_{m}}[tr | |||

\bar{K} \pi^{T}\bar{L} \pi_{i}] | |||

</math> | |||

This will converge to a local maximum. | |||

Initialization is done via sorted principal eigenvector. | |||

===An tentative explanation for this part=== | |||

Basically, I think the optimizing method used in this paper does not apply the Concave Convex Procedure exactly. As I said on Tuesday, I think it just "borrowed" the idea form the Concave Convex Procedure since there is no concave part in this question. | |||

Accoding to the paper, the Concave Convex Procedure works as | |||

follows: <math>f(x)=g(x)-h(x)</math>, where <math>g</math> is convex and <math>h</math> is concave, a | |||

lower bound can be found by | |||

<math> | |||

f(x) \ge g(x_{0}) + \langle x-x_{0},\partial_{x}g(x_{0}) \rangle | |||

-h(x) | |||

</math> | |||

For the objecitve function in the kernelized sorting method, it can be written in the following format | |||

<math>f(\pi)=g(\pi)= tr \bar{K} | |||

\pi^{T}\bar{L}\pi</math> | |||

Currently, suppose we have <math>\pi_{0}</math> and <math>g(\pi_{0}) = tr\bar{K} \pi_{0}^{T}\bar{L}\pi_{0}</math>. | |||

We know that <math>\bigtriangledown_{A} tr ABA^{T}C=CAB+C^{T}AB^{T}</math>. | |||

So <math> \bigtriangledown_{\pi} tr \bar K | |||

\pi^{T} \bar L \pi=\bigtriangledown_{\pi} tr \pi \bar K \pi^{T} \bar L = \bar L \pi \bar K+\bar L^{T} \pi \bar K^{T}</math>. | |||

Since <math>\bar K</math> and <math>\bar L</math> are symmetric matrix, we get | |||

<math>\bigtriangledown_{\pi} tr \pi \bar | |||

K \pi^{T} \bar L = 2\bar L \pi \bar K</math>. | |||

Hence, we get | |||

<math> | |||

\langle \pi - \pi_{0}, \bigtriangledown_{\pi} tr \pi_{0} \bar K \pi_{0}^{T} \bar L\rangle | |||

=\langle \pi - \pi_{0}, 2\bar L \pi_{0} \bar K\rangle | |||

=2tr (\pi - \pi_{0})^{T}\bar L \pi_{0} \bar K | |||

=2tr \bar K(\pi - \pi_{0})^{T}\bar L \pi_{0} | |||

</math> | |||

In this case, we can get | |||

<math> | |||

f(\pi)\ge tr | |||

\bar{K} \pi_{0}^{T}\bar{L}\pi_{0} + 2tr \bar K(\pi - | |||

\pi_{0})^{T}\bar L \pi_{0} </math> | |||

We can drop the coefficient <math>2</math> since we just want to maximize this | |||

lower bound, so we get | |||

<math>f(\pi)\ge tr \bar{K} \pi_{0}^{T}\bar{L}\pi_{0} + tr \bar K(\pi - | |||

\pi_{0})^{T}\bar L \pi_{0} </math> | |||

Hence, we get | |||

<math>f(\pi)\ge tr | |||

\bar{K} \pi^{T}\bar{L}\pi_{0} </math> | |||

So this is the lower bound we want to update repeatedly. | |||

Actually, I think if the Kernel matrices <math>K</math> and <math>L</math> are well defined and computed already, there is no too much parameters in this optimization problem, which means some stochastic gradient descent method can be applied. In fact, I think this question is easier than the assignment problem based the same argument about the complexity of parameters. Hence, interpreting it as a TSP problem and for example, using Simulated Annealing algorithm, is an acceptable method. | |||

== Application == | |||

Assume that we may want to visualize data according to the metric structure inherent in it. More specifically, our objective is to align it | |||

according to a given template, such as a grid, a torus, or any other fixed structure. Such problems occur when presenting images or documents to a user. Most of the algorithms for low dimensional object layout suffer from the problem that the low dimensional presentation is nonuniform. This has the advantage of revealing cluster structure but given limited screen size the presentation is undesirable. To address this problem, we can use kernelized sorting to align objects. In this scenario the kernel matrix L is given by the similarity measure between the objects <math> x_i </math> that are to be aligned. The kernel K, on the other hand, denotes the similarity between the locations where objects are to be aligned to. For the sake of simplicity we used a Gaussian RBF kernel between the objects to laid out and also between the positions of the grid, i.e. <math>\mathbf{k(x,x') = exp(-gamma ||x-x'||^2) }</math>. The kernel width <math>\mathbf{\gamma }</math> was adjusted to the inverse of <math> \mathbf{||x-x'||^2} </math> such that the argument of the exponential is O(1). | |||

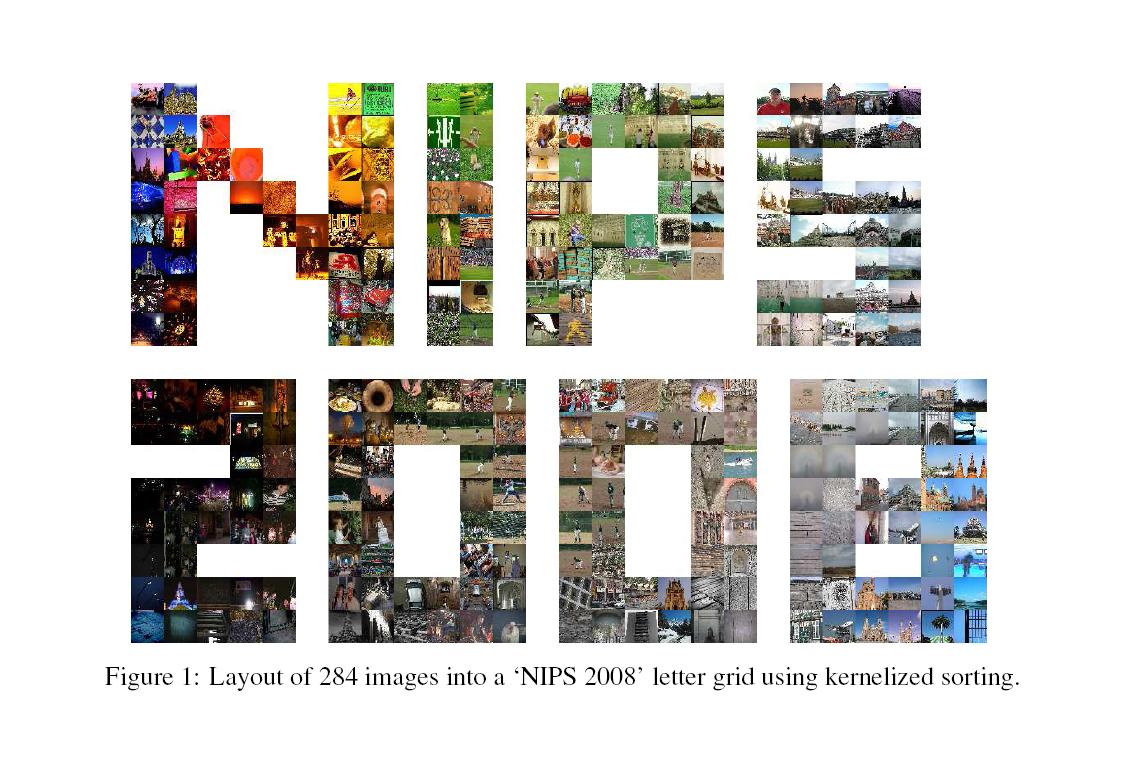

We obtained 284 images from http://www.flickr.com which were resized and downsampled to 40*40 pixels. We converted the images from RGB into Lab color space, yielding 40*40*3 dimensional objects. The grid, corresponding to X is a ‘NIPS 2008’ letters on which the images | |||

are to be laid out. After sorting we display the images according to their matching coordinates (Figure 1). | |||

<center>[[File:Kernelized Sorting-Fig1.JPG]]</center> | |||

We can see images with similar color composition are found at proximal locations. | |||

==Summary== | |||

We generalize sorting by maximizing dependency between matched pairs of observations via HSIC. | |||

Applications of our proposed sorting algorithm range from data visualization to image, data attribute and multilingual document matching. | |||

Latest revision as of 08:45, 30 August 2017

Object matching is a fundamental operation in data analysis. It typically requires the definition of a similarity measure between classes of objects to be matched. Instead, we develop an approach which is able to perform matching by requiring a similarity measure only within each of the classes. This is achieved by maximizing the dependency between matched pairs of observations by means of the Hilbert Schmidt Independence Criterion. This problem can be cast as one of maximizing a quadratic assignment problem with special structure and we present a simple algorithm for finding a locally optimal solution.

Introduction

Problem Statement

Assume we are given two collections of documents purportedly covering the same content, written in two different languages. Can we determine the correspondence between these two sets of documents without using a dictionary?

Sorting and Matching

(Formal) problem formulation:

Given two sets of observations [math]\displaystyle{ X= \{ x_{1},..., x_{m} \}\subseteq \mathcal X }[/math] and [math]\displaystyle{ Y=\{ y_{1},..., y_{m}\}\subseteq \mathcal Y }[/math]

Find a permutation matrix [math]\displaystyle{ \pi \in \Pi_{m} }[/math],

[math]\displaystyle{ \Pi_{m}:= \{ \pi | \pi \in \{0,1\}^{m \times m} \textrm{where} \pi 1_{m}=1_{m}, \pi^{T}1_{m}=1_{m}\} }[/math]

(it means [math]\displaystyle{ \Pi_{m} }[/math] is an m by m matrix, and in each row or column it has (m-1) zeros and only one 1)

such that [math]\displaystyle{ \{ (x_{i},y_{\pi (i)}) for 1 \leqslant i \leqslant m \} }[/math] is maximally dependent. Here [math]\displaystyle{ 1_{m} \in \mathbb{R}^{m} }[/math] is the vector of all ones, and [math]\displaystyle{ \pi (i) }[/math] is the column number, for which we have a 1 in the ith row.

Denote by [math]\displaystyle{ D(Z(\pi)) }[/math] a measure of the dependence between x and y, where [math]\displaystyle{ Z(\pi) := \{ (x_{i},y_{\pi (i)}) for 1 \leqslant i \leqslant m \} }[/math].

Then we define nonparametric sorting of X and Y as follows

[math]\displaystyle{ \pi^{\ast}:=\arg\max_{\pi \in \prod_{m}}D(Z(\pi)). }[/math]

Hilbert Schmidt Independence Criterion

Let sets of observations X and Y be drawn jointly from some probability distribution [math]\displaystyle{ Pr_{xy} }[/math]. The Hilbert Schmidt Independence Criterion (HSIC) measures the dependence between x and y by computing the norm of the cross-covariance operator over the domain [math]\displaystyle{ \mathcal X \times \mathcal Y }[/math] in Hilbert Space.

let [math]\displaystyle{ \mathcal {F} }[/math] be the Reproducing Kernel Hilbert Space (RKHS) on [math]\displaystyle{ \mathcal {X} }[/math] with associated kernel [math]\displaystyle{ k: \mathcal X \times \mathcal X \rightarrow \mathbb{R} }[/math] and feature map [math]\displaystyle{ \phi: \mathcal X \rightarrow \mathcal {F} }[/math]. Let [math]\displaystyle{ \mathcal {G} }[/math] be the RKHS on [math]\displaystyle{ \mathcal Y }[/math] with kernel [math]\displaystyle{ l }[/math] and feature map [math]\displaystyle{ \psi }[/math]. The cross-covariance operator [math]\displaystyle{ C_{xy}:\mathcal {G}\rightarrow \mathcal {F} }[/math] is defined by

[math]\displaystyle{ C_{xy}=\mathbb{E}_{xy}[(\phi(x)-\mu_{x})\otimes (\psi(y)-\mu_{y})], }[/math]

where [math]\displaystyle{ \mu_{x}=\mathbb{E}[\phi(x)] }[/math], [math]\displaystyle{ \mu_{y}=\mathbb{E}[\psi(y)] }[/math].

HSIC is the square of the Hilbert-Schmidt norm of the cross covariance operator [math]\displaystyle{ \, C_{xy} }[/math]

[math]\displaystyle{ D(\mathcal {F},\mathcal {G},Pr_{xy}):=\parallel C_{xy} \parallel_{HS}^{2}. }[/math]

In term of kernels, HSIC can be expressed as

[math]\displaystyle{ \mathbb{E}_{xx'yy'}[k(x,x')l(y,y')]+\mathbb{E}_{xx'}[k(x,x')]\mathbb{E}_{yy'}[l(y,y')]-2\mathbb{E}_{xy}[\mathbb{E}_{x'}[k(x,x')]\mathbb{E}_{y}[l(y,y')]]. }[/math]

where [math]\displaystyle{ \mathbb{E}_{xx'yy'} }[/math] is the expectation over both [math]\displaystyle{ \ (x, y) }[/math] ~ [math]\displaystyle{ \ Pr_{xy} }[/math] and an additional pair of variables [math]\displaystyle{ \ (x', y') }[/math] ~ [math]\displaystyle{ \ Pr_{xy} }[/math] drawn independently according to the same law.

A biased estimator of HSIC given finite sample [math]\displaystyle{ Z = \{(x_{i}, y_{i})\}_{i=1}^{m} }[/math] drawn from [math]\displaystyle{ Pr_{xy} }[/math] is

[math]\displaystyle{ D(\mathcal {F},\mathcal {G},Z)=(m-1)^{-2}tr HKHL = (m-1)^{-2} tr \bar{K}\bar{L} }[/math]

where [math]\displaystyle{ K,L\in \mathbb{R}^{m\times m} }[/math] are the kernel matrices for the data and the labels respectively, [math]\displaystyle{ H_{ij}=\delta_{ij}-m^{-1} }[/math] centers the data and the labels in feature space, [math]\displaystyle{ \bar{K}:=HKH }[/math] and [math]\displaystyle{ \bar{L}:=HLH }[/math] denote the centered versions [math]\displaystyle{ K }[/math] and [math]\displaystyle{ L }[/math] respectively.

Advantages of HSIC are:

Computing HSIC is simple: only the kernel matrices K and L are needed;

HSIC satisfies concentration of measure conditions, i.e. for random draws of observation from [math]\displaystyle{ Pr_{xy} }[/math], HSIC provides values which are very similar;

Incorporating prior knowledge into the dependence estimation can be done via kernels.

Kernelized Sorting

Kernelized Sorting

Claim: Thr problem is equivalent to the optimization problem of [math]\displaystyle{ \pi^{\ast}=\arg\max_{\pi \in \Pi_{m}}[tr \bar{K} \pi^{T}\bar{L}\pi] }[/math]

Proof: Firstly, we need to establish [math]\displaystyle{ H }[/math] and [math]\displaystyle{ \pi }[/math] matrices commute.

Since [math]\displaystyle{ H }[/math] is a centering matrix, we can write it as [math]\displaystyle{ H=I_{n}-11^{T} }[/math].

Actually, note that [math]\displaystyle{ \ H\pi=\pi H }[/math] iff [math]\displaystyle{ \ (I_{n}-11^{T})\pi=\pi (I_{n}-11^{T}) }[/math] iff [math]\displaystyle{ \ 11^{T}\pi=\pi 11^{T} }[/math], a result follows.

Next, recall that the biased estimator of HSIC given finite sample [math]\displaystyle{ Z = \{(x_{i}, y_{i})\}_{i=1}^{m} }[/math] drawn from [math]\displaystyle{ Pr_{xy} }[/math] is

[math]\displaystyle{ D(\mathcal {F},\mathcal {G},Z)=(m-1)^{-2}tr HKHL = (m-1)^{-2} tr \bar{K}\bar{L} }[/math]

where [math]\displaystyle{ K,L\in \mathbb{R}^{m\times m} }[/math] are the kernel matrices for the data and the labels respectively, i.e. [math]\displaystyle{ K=xx^{T} }[/math] and [math]\displaystyle{ L=yy^{T} }[/math].

Now, for any given pair [math]\displaystyle{ (x, y_{r}) }[/math] between [math]\displaystyle{ X }[/math] and [math]\displaystyle{ Y }[/math], we have [math]\displaystyle{ y_{r}=\pi y }[/math].

Note that [math]\displaystyle{ \pi }[/math] is a permutation matrix, we have [math]\displaystyle{ y=\pi^{T} y_{r} }[/math], so the kernel matrix [math]\displaystyle{ L=\pi^{T}y_{r}y_{r}^{T}\pi }[/math].

Note that the kernel matrix [math]\displaystyle{ L_{r}=y_{r}y_{r}^{T} }[/math], so the kernel matrix [math]\displaystyle{ L=\pi^{T}L_{r}\pi }[/math].

Note that [math]\displaystyle{ tr HKHL = tr HKHHLH }[/math], since [math]\displaystyle{ H }[/math] is idempotent.

So we have [math]\displaystyle{ tr HKHL = tr HKHHLH = tr \bar K H\pi^{T}L_{r}\pi H = tr \bar K \pi^{T}HL_{r}H\pi = tr \bar K \pi^{T}\bar L_{r}\pi }[/math].

Clearly, it is just our objective function.

Sorting as a special case

For general kernel matrices [math]\displaystyle{ K \, }[/math] and [math]\displaystyle{ L \, }[/math], where [math]\displaystyle{ K_{ij}=k(x_i,x_j) \, }[/math] and [math]\displaystyle{ L_{ij}=l(x_i,x_j) \, }[/math], the objective of the kernelized sorting problem, as explained above, is to find the permutation matrix [math]\displaystyle{ \pi \, }[/math] which maximizes [math]\displaystyle{ tr(\bar{K} \pi^{T}\bar{L}\pi ) = tr(HKH\pi^{T}HLH\pi)\, }[/math].

In the special case where the kernel functions [math]\displaystyle{ k\, }[/math] and [math]\displaystyle{ l\, }[/math] are the inner product in Euclidean space, we have [math]\displaystyle{ K=xx^{T}\, }[/math] and [math]\displaystyle{ L=yy^{T}\, }[/math]. Hence, we can rewrite the objective as

[math]\displaystyle{ tr(HKH\pi^{T}HLH\pi) = tr(Hxx^{T}H\pi^{T}Hyy^{T}H\pi) = tr[Hx(Hx)^T\pi^{T}Hy(Hy)^T\pi] = tr[((Hx)^T\pi^{T}Hy) ((Hy)^T\pi Hx))]\, }[/math], where the last step uses the property that trace is invariant under cyclic permutations.

Note that [math]\displaystyle{ (Hx)^T\pi^{T}Hy \, }[/math] and [math]\displaystyle{ (Hy)^T\pi Hx = (Hx)^T\pi^{T}Hy \, }[/math] are scalars, therefore the objective is equal to [math]\displaystyle{ [(Hx)^T\pi (Hy)]^2 \, }[/math].

In the even more special case where the Euclidean space is the real line and the inner product is multiplication of real numbers, the centering matrix [math]\displaystyle{ H\, }[/math] merely translates the sample vector [math]\displaystyle{ y \, }[/math] (by the sample mean) and thus the order of [math]\displaystyle{ y \, }[/math] is preserved. Hence, maximizing [math]\displaystyle{ [(Hx)^T\pi (Hy)]^2 \, }[/math] can be solved by maximizing [math]\displaystyle{ x^T \pi y \, }[/math]. Under the further assumption that [math]\displaystyle{ x \, }[/math] is sorted ascendingly, maximizing [math]\displaystyle{ x^T \pi y \, }[/math] is equivalent to sorting [math]\displaystyle{ y \, }[/math] ascendingly, according to the Polya-Littlewood-Hardy inequality.

Diagonal Dominance

Replace the expectations by sums where no pairwise summation indices are identical. This leads to the objective function:

[math]\displaystyle{ \frac{1}{m(m-1)}\sum_{i\ne j}K_{ij}L_{ij}+\frac{1}{m^{2}(m-1)^{2}}\sum_{i\ne j,u\ne v}K_{ij}L_{uv}- \frac{2}{m(m-1)^2}\sum_{i,j\ne i,v\ne i}K_{ij}L_{iv} }[/math]

Using the [math]\displaystyle{ \bar{K}_{ij}=K_{ij}(1-\delta_{ij}) }[/math] and [math]\displaystyle{ \bar{L}_{ij}=L_{ij}(1-\delta_{ij}) }[/math] for kernel matrices where the main diagonal terms have been removed we arrive at the expression [math]\displaystyle{ (m-1)^{-1}tr H\bar{L}H\bar{K} }[/math].

Relaxation to a constrained eigenvalue problem

An approximate solution of the problem by solving

[math]\displaystyle{ \text{maximize}_{\eta} \left\{ \eta^{T}M\eta \right\} \text{subject to} A\eta=b }[/math]

Here the matrix [math]\displaystyle{ M=K\otimes L\in \mathbb{R}^{m^{2}\times{m^2}} }[/math] is given by the outer product of the constituting kernel matrices, [math]\displaystyle{ \eta \in \mathbb{R}^{m^2} }[/math] is a vectorized version of the permutation matrix [math]\displaystyle{ \pi }[/math], and the constraints imposed by [math]\displaystyle{ A }[/math] and [math]\displaystyle{ b }[/math] amount to the polytope constraints imposed by [math]\displaystyle{ \Pi_{m} }[/math].

Related Work

Mutual Information is defined as, [math]\displaystyle{ I(X,Y)=h(X)+h(Y)-h(X,Y) }[/math]. We can approximate MI maximization by maximizing its lower bound. This then corresponds to minimizing an upper bound on the joint entropy [math]\displaystyle{ h(X,Y) }[/math].

Optimization

[math]\displaystyle{ \pi^{\ast}=argmin_{\pi \in \Pi_{m}}|\log HJ(\pi)H|, }[/math]

where [math]\displaystyle{ \ J_{ij}=K_{ij}L_{\pi(i),\pi(j)} }[/math]. This is related to the optimization criterion proposed by Jebara(2004) in the context of aligning bags of observations by sorting via minimum volume PCA.

Multivariate Extensions

Let there be T random variables [math]\displaystyle{ x_i \in {\mathcal X}_i }[/math] which are jointly drawn from some distribution [math]\displaystyle{ p(x_1,...x_m) }[/math]. The expectation operator with respect to the joint distribution and with respect to the product of the marginals is given by

[math]\displaystyle{ \mathbb{E}_{x_1,...,x_T}[\prod_{i=1}^{T}k_{i}(x_{i},\cdot)] }[/math] and [math]\displaystyle{ \prod_{i=1}^{T}\mathbb{E}_{x_i}[k_{i}(x_{i},\cdot)] }[/math]

respectively. Both terms are equal if and only if all random variables are independent. The squared difference between both is given by

[math]\displaystyle{ \mathbb{E}_{x_{i=1}^T,{x'}_{i=1}^{T}}[\prod_{i=1}^{T}k_{i}(x_{i},x_{i}^{'})]+\prod_{i=1}^{T}\mathbb{E}_{x_{i},x_{i}^{'}}[k_{i}(x_{i},x_{i}^{'})]-2\mathbb{E}_{x_{i=1}^{T}}[\prod_{i=1}^{T}\mathbb{E}_{x_{i}^{'}}[k(x_{i},x_{i}^{'})]] }[/math]

which we refer to as multiway HSIC.

Denote by [math]\displaystyle{ K_{i} }[/math] the kernel matrix obtained from the kernel [math]\displaystyle{ k_{i} }[/math] on the set of observations [math]\displaystyle{ X_{i}:=\{x_{i1},...,x_{im}\} }[/math], the empirical estimate is given by

[math]\displaystyle{ HSIC[X_{1},...,X_{T}]:=1_{m}^{T}(\bigodot_{i=1}^{T}K_{i})1_{m}+\prod_{i=1}^{T}1_{m}^{T}K_{i}1_{m}-2\cdot1_{m}^{T}(\bigodot_{i=1}^{T}K_{i}1_{m}) }[/math]

where [math]\displaystyle{ \bigodot_{t=1}^{T}\ast }[/math] denotes elementwise product of its arguments. To apply this to sorting we only need to define T permutation matrices [math]\displaystyle{ \pi_{i} \in \Pi_{m} }[/math] and replace the kernel matrices [math]\displaystyle{ K_{i} }[/math] by [math]\displaystyle{ \pi_{i}^{T}K_{i}\pi_{i} }[/math].

Optimization

Convex Objective and Convex Domain

Define [math]\displaystyle{ \pi }[/math] as a doubly stochastic matrix,

[math]\displaystyle{ P_{m}:=\{\pi \in \mathbb{R}^{m \times m} where \pi_{ij}\geqslant 0 and \sum_{i}\pi_{ij}=1 and \sum_{j}\pi_{ij}=1\} }[/math]

The objective function [math]\displaystyle{ tr K \pi^{T}L\pi }[/math] is convex in [math]\displaystyle{ \pi }[/math] .

Convex-Concave Procedure

Compute successive linear lower bounds and maximize [math]\displaystyle{ \pi_{i+1}\leftarrow \arg\max_{\pi \in P_{m}}[tr \bar{K} \pi^{T}\bar{L} \pi_{i}] }[/math]

This will converge to a local maximum.

Initialization is done via sorted principal eigenvector.

An tentative explanation for this part

Basically, I think the optimizing method used in this paper does not apply the Concave Convex Procedure exactly. As I said on Tuesday, I think it just "borrowed" the idea form the Concave Convex Procedure since there is no concave part in this question.

Accoding to the paper, the Concave Convex Procedure works as follows: [math]\displaystyle{ f(x)=g(x)-h(x) }[/math], where [math]\displaystyle{ g }[/math] is convex and [math]\displaystyle{ h }[/math] is concave, a lower bound can be found by

[math]\displaystyle{ f(x) \ge g(x_{0}) + \langle x-x_{0},\partial_{x}g(x_{0}) \rangle -h(x) }[/math]

For the objecitve function in the kernelized sorting method, it can be written in the following format

[math]\displaystyle{ f(\pi)=g(\pi)= tr \bar{K} \pi^{T}\bar{L}\pi }[/math]

Currently, suppose we have [math]\displaystyle{ \pi_{0} }[/math] and [math]\displaystyle{ g(\pi_{0}) = tr\bar{K} \pi_{0}^{T}\bar{L}\pi_{0} }[/math].

We know that [math]\displaystyle{ \bigtriangledown_{A} tr ABA^{T}C=CAB+C^{T}AB^{T} }[/math].

So [math]\displaystyle{ \bigtriangledown_{\pi} tr \bar K \pi^{T} \bar L \pi=\bigtriangledown_{\pi} tr \pi \bar K \pi^{T} \bar L = \bar L \pi \bar K+\bar L^{T} \pi \bar K^{T} }[/math]. Since [math]\displaystyle{ \bar K }[/math] and [math]\displaystyle{ \bar L }[/math] are symmetric matrix, we get

[math]\displaystyle{ \bigtriangledown_{\pi} tr \pi \bar K \pi^{T} \bar L = 2\bar L \pi \bar K }[/math].

Hence, we get [math]\displaystyle{ \langle \pi - \pi_{0}, \bigtriangledown_{\pi} tr \pi_{0} \bar K \pi_{0}^{T} \bar L\rangle =\langle \pi - \pi_{0}, 2\bar L \pi_{0} \bar K\rangle =2tr (\pi - \pi_{0})^{T}\bar L \pi_{0} \bar K =2tr \bar K(\pi - \pi_{0})^{T}\bar L \pi_{0} }[/math]

In this case, we can get [math]\displaystyle{ f(\pi)\ge tr \bar{K} \pi_{0}^{T}\bar{L}\pi_{0} + 2tr \bar K(\pi - \pi_{0})^{T}\bar L \pi_{0} }[/math]

We can drop the coefficient [math]\displaystyle{ 2 }[/math] since we just want to maximize this lower bound, so we get [math]\displaystyle{ f(\pi)\ge tr \bar{K} \pi_{0}^{T}\bar{L}\pi_{0} + tr \bar K(\pi - \pi_{0})^{T}\bar L \pi_{0} }[/math]

Hence, we get [math]\displaystyle{ f(\pi)\ge tr \bar{K} \pi^{T}\bar{L}\pi_{0} }[/math]

So this is the lower bound we want to update repeatedly.

Actually, I think if the Kernel matrices [math]\displaystyle{ K }[/math] and [math]\displaystyle{ L }[/math] are well defined and computed already, there is no too much parameters in this optimization problem, which means some stochastic gradient descent method can be applied. In fact, I think this question is easier than the assignment problem based the same argument about the complexity of parameters. Hence, interpreting it as a TSP problem and for example, using Simulated Annealing algorithm, is an acceptable method.

Application

Assume that we may want to visualize data according to the metric structure inherent in it. More specifically, our objective is to align it according to a given template, such as a grid, a torus, or any other fixed structure. Such problems occur when presenting images or documents to a user. Most of the algorithms for low dimensional object layout suffer from the problem that the low dimensional presentation is nonuniform. This has the advantage of revealing cluster structure but given limited screen size the presentation is undesirable. To address this problem, we can use kernelized sorting to align objects. In this scenario the kernel matrix L is given by the similarity measure between the objects [math]\displaystyle{ x_i }[/math] that are to be aligned. The kernel K, on the other hand, denotes the similarity between the locations where objects are to be aligned to. For the sake of simplicity we used a Gaussian RBF kernel between the objects to laid out and also between the positions of the grid, i.e. [math]\displaystyle{ \mathbf{k(x,x') = exp(-gamma ||x-x'||^2) } }[/math]. The kernel width [math]\displaystyle{ \mathbf{\gamma } }[/math] was adjusted to the inverse of [math]\displaystyle{ \mathbf{||x-x'||^2} }[/math] such that the argument of the exponential is O(1).

We obtained 284 images from http://www.flickr.com which were resized and downsampled to 40*40 pixels. We converted the images from RGB into Lab color space, yielding 40*40*3 dimensional objects. The grid, corresponding to X is a ‘NIPS 2008’ letters on which the images are to be laid out. After sorting we display the images according to their matching coordinates (Figure 1).

We can see images with similar color composition are found at proximal locations.

Summary

We generalize sorting by maximizing dependency between matched pairs of observations via HSIC.

Applications of our proposed sorting algorithm range from data visualization to image, data attribute and multilingual document matching.