Learning What and Where to Draw

Generative Adversarial Networks (GANs) have been successfully used to synthesize compelling real-world images. In what follows we outline an enhanced GAN called the Generative Adversarial What- Where Network (GAWWN). In addition to accepting as input a noise vector, this network also accepts instructions describing what content to draw and in which location to draw the content. Traditionally, these models use simple conditioning variables such as a class label or a non-localized caption. The authors of 'Learning What and Where to Draw' believe that image synthesis will be drastically enhanced by incorporating a notion of localized objects. | Generative Adversarial Networks (GANs) have been successfully used to synthesize compelling real-world images. In what follows we outline an enhanced GAN called the Generative Adversarial What- Where Network (GAWWN). In addition to accepting as input a noise vector, this network also accepts instructions describing what content to draw and in which location to draw the content. Traditionally, these models use simple conditioning variables such as a class label or a non-localized caption. The authors of 'Learning What and Where to Draw' believe that image synthesis will be drastically enhanced by incorporating a notion of localized objects. | ||

The main goal in constructing the GAWWN network is to | The main goal in constructing the GAWWN network is to separate the questions of 'what' and 'where' to modify the image at each step of the computational process. At a high-level, the purpose of GAWWN network is to give the generative model more variables to condition the location in addition to text. One of the methods is to draw a bounding box that outlines the location of the subject in question within, such that like-images are provided with the subject in approximately the same location depicted in the aforementioned bounding box. Another method to determine the objects location is to use keypoints to locate features of the images such that the generative model can provide like-images that contain the location of the aforementioned features. Prior to elaborating on the experimental results of the GAWWN the authors cite that this model benefits from greater parameter efficiency and produces more interpretable sample images, since it is known from the input description what each image is intended to depict. The proposed model learns to perform location and content-controllable image synthesis on the Caltech-UCSD (CUB) bird data set and the MPII Human Pose (HBU) data set. | ||

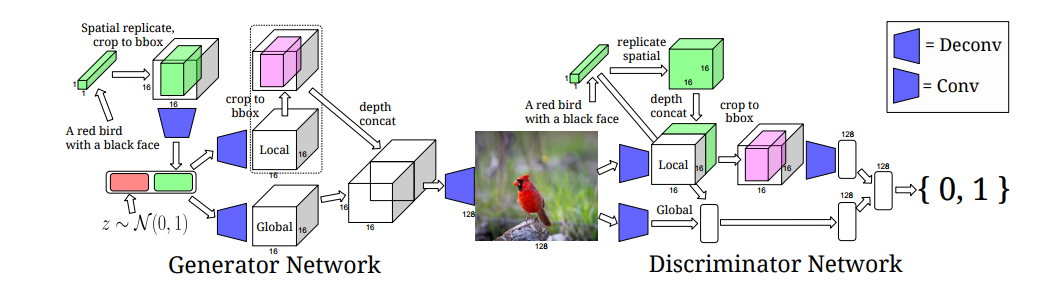

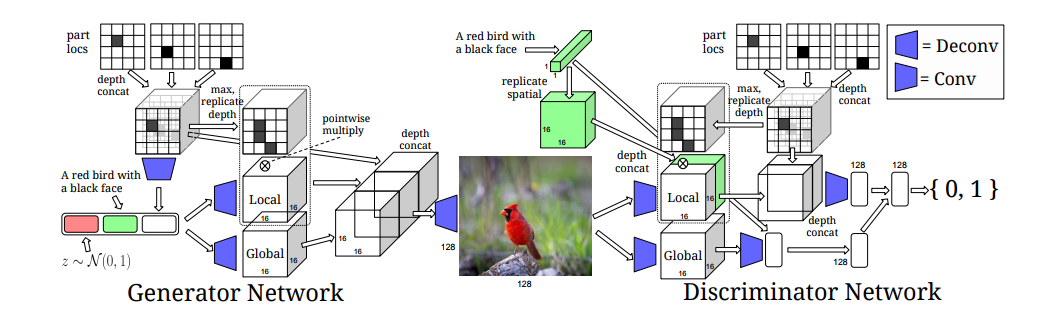

A highlight of this work is that the authors demonstrate two ways to encode spatial constraints into the GAN. First, the authors provide an implementation showing how to condition on the coarse location of a bird by incorporating spatial masking and cropping modules into a text-conditional General Adversarial Network ('''Bounding-box-conditional text-to-image model'''). This technique is implemented using spatial transformers. Second, the authors demonstrate how they are able to condition on part locations of birds and humans in the form of a set of normalized (x,y) coordinates ('''Keypoint-conditional text-to-image model'''). | A highlight of this work is that the authors demonstrate two ways to encode spatial constraints into the GAN. First, the authors provide an implementation showing how to condition on the coarse location of a bird by incorporating spatial masking and cropping modules into a text-conditional General Adversarial Network ('''Bounding-box-conditional text-to-image model'''). This technique is implemented using spatial transformers. Second, the authors demonstrate how they are able to condition on part locations of birds and humans in the form of a set of normalized (x,y) coordinates ('''Keypoint-conditional text-to-image model'''). | ||

| Line 14: | Line 14: | ||

* Yang et al. (2015) followed with a recurrent convolutional encoder-decoder that learned to apply incremental 3D rotations to generate sequences of rotated chair and face images | * Yang et al. (2015) followed with a recurrent convolutional encoder-decoder that learned to apply incremental 3D rotations to generate sequences of rotated chair and face images | ||

* Reed et al. (2015) trained a network to generate images that solved visual analogy problems | * Reed et al. (2015) trained a network to generate images that solved visual analogy problems | ||

* Gregor | * Gregor et al. (2015) used a recurrent variational autoencoder with attention mechanisms for reading and writing different portions of the image canvas. | ||

The authors cite how the above models are all deterministic and discuss how other recent work attempts to learn a probabilistic model with variational autoencoders (Kingma and Welling, 2014, Rezende et al., 2014). In discussing current work in this area it is stated how all of the above formulations could benefit from the principle of separating what and where conditioning variables. | The authors cite how the above models are all deterministic and discuss how other recent work attempts to learn a probabilistic model with a convolutional variational autoencoders (Kingma and Welling, 2014, Rezende et al., 2014) in which the latent space were in separate blocks corresponding to graphics codes. In discussing current work in this area it is stated how all of the above formulations could benefit from the principle of separating what and where conditioning variables. The authors also cite the simple and popular Generative Adversarial Networks (Goodfellow et al.2014, which produces sharper synthetic images compared to images generated by VAE . | ||

The current paper's work is built on top of Reed et al. "Generative Adversarial Text to Image Synthesis, ICML 2016" where the authors proposed an end-to-end deep neural architecture based on conditional GAN framework, which successfully generated realistic images (64 ×64) from natural language descriptions. | The current paper's work is built on top of Reed et al. "Generative Adversarial Text to Image Synthesis, ICML 2016" where the authors proposed an end-to-end deep neural architecture based on conditional GAN framework, which successfully generated realistic images (64 ×64) from natural language descriptions. Also, Lei et al. (2017) proposed a method for MR-to-CT synthesis by a novel deep embedding convolutional neural network (DECNN). Specifically, they generated feature maps from MR images, and then transformed these feature maps using embedding convolutional layers in the network. | ||

== Background Knowledge == | == Background Knowledge == | ||

=== Generative Adversarial Networks === | === Generative Adversarial Networks === | ||

Before outlining the GAWWN we briefly review GANs. A GAN consists of a generator G that generates a synthetic image given a noise vector drawn from either a Gaussian or Uniform distribution. The | Before outlining the GAWWN we briefly review GANs. A GAN consists of a generator G that generates a synthetic image given a noise vector drawn from either a Gaussian or Uniform distribution. The discriminator is tasked with classifying images generated by the generator as either real or synthetic. The two networks compete in the following minimax game: | ||

$\displaystyle \min_{G}$ | $\displaystyle \min_{G}$ | ||

$\max\limits_{G} V(D,G) = \mathop{\mathbb{E}}_{x \sim p_{data}(x)}[log[D(x)] + \mathop{\mathbb{E}}_{ | $\max\limits_{G} V(D,G) = \mathop{\mathbb{E}}_{x \sim p_{data}(x)}[log[D(x)] + \mathop{\mathbb{E}}_{z \sim p_{z}(z)}[log(1-D(G(z)))] $ | ||

where z is | where z is a noise vector. In the context of GAWWN networks we are playing the above minimax game with G(z,c) and D(z,c), where c is the additional what and where information supplied to the network. For the input tuple (x,c) to be interpreted as "real", the image x must not only look real but also match the context information in c. | ||

More details about GANs and the fundamental algorithm that GAWWN is built on are explained below. The main goal of GAN is to provide a generative model for data (e.eg., images). Learning in GANs proceeds via comparison of simulated data with some real input data. There are basically 2 stages within a GAN structure: a generator network and a discriminator network. | |||

Within generator network, objects or in this case images are generated from some distribution <math>p_z(z)</math>. Data generated from generator network are then passed through the discriminator network along with real input dataset. Within the discriminator network, it’s trained to differentiate real input from simulated input. The goals of this structure are to train the generator network to be able to simulate images that can “fool” the discriminator network when compared to real input data, and to train the discriminator network to be able to distinguish a “fake” input from real input data. Mathematically, such optimization problem is summarized into the minimax game demonstrated above. According to this blog post (Introductory guide to Generative Adversarial Networks (GANs) [https://www.analyticsvidhya.com/blog/2017/06/introductory-generative-adversarial-networks-gans/]), the trainings of generator network and discriminator network are separated (Shaikh, 2017). First, discriminator network is trained on real input data and simulated data from generator network to learn what real data look like. Then, using losses propagated through the discriminator network, the generator network is trained to simulate fake data that can be predicted as real data in the previous discriminator network (Shaikh, 2017). This process is repeated iteratively, with each component network adversarially learning to outperform the other. | Within generator network, objects or in this case images are generated from some distribution <math>p_z(z)</math>. Data generated from generator network are then passed through the discriminator network along with real input dataset. Within the discriminator network, it’s trained to differentiate real input from simulated input. 
The goals of this structure are to train the generator network to be able to simulate images that can “fool” the discriminator network when compared to real input data, and to train the discriminator network to be able to distinguish a “fake” input from real input data. Mathematically, such optimization problem is summarized into the minimax game demonstrated above. According to this blog post (Introductory guide to Generative Adversarial Networks (GANs) [https://www.analyticsvidhya.com/blog/2017/06/introductory-generative-adversarial-networks-gans/]), the trainings of generator network and discriminator network are separated (Shaikh, 2017). First, discriminator network is trained on real input data and simulated data from generator network to learn what real data look like. Then, using losses propagated through the discriminator network, the generator network is trained to simulate fake data that can be predicted as real data in the previous discriminator network (Shaikh, 2017). This process is repeated iteratively, with each component network adversarially learning to outperform the other. | ||

=== Structured Joint Embedding of Visual Descriptions and Images === | === Structured Joint Embedding of Visual Descriptions and Images === | ||

| Line 38: | Line 36: | ||

$\frac{1}{N}\sum_{n=1}^{N} \Delta (y_{n}, f_{v}(n)) + \Delta (y_{n}, f_{t}(t_{n}) $ | $\frac{1}{N}\sum_{n=1}^{N} \Delta (y_{n}, f_{v}(n)) + \Delta (y_{n}, f_{t}(t_{n})) $ | ||

where ${(v_{n}, t_{n}, , n=1,...N)}$ is the training data, $\Delta$ is the 0-1 loss, $v_{n}$ are the images, $t_{n}$ are the text descriptions of class y. The functions $f_{v}$ and $f_{t}$ are defined as follows: | where ${(v_{n}, t_{n}, , n=1,...N)}$ is the training data, $\Delta$ is the 0-1 loss, $v_{n}$ are the images, $t_{n}$ are the text descriptions of class y. The functions $f_{v}$ and $f_{t}$ are defined as follows: | ||

| Line 45: | Line 43: | ||

$ f_{v}(v)$ = $\displaystyle \max_{y \in Y}$ $\mathop{\mathbb{E}}_{t \sim T(y)}[\phi(v)^{T}\varphi(t)], \space f_{t}(t) = \displaystyle \max_{y \in Y}$ $\mathop{\mathbb{E}}_{v \sim V(y)}[\phi(v)^{T}\varphi(t)]$ | $ f_{v}(v)$ = $\displaystyle \max_{y \in Y}$ $\mathop{\mathbb{E}}_{t \sim T(y)}[\phi(v)^{T}\varphi(t)], \space f_{t}(t) = \displaystyle \max_{y \in Y}$ $\mathop{\mathbb{E}}_{v \sim V(y)}[\phi(v)^{T}\varphi(t)]$ | ||

where $\phi$ is the image encoder and $\varphi$ is the text encoder. The intuition behind the encoder is relatively simple. The encoder learns to produce a larger score with images of the correct class compared to the other classes, and works similarly going in the other direction. | where $\phi$ is the image encoder and $\varphi$ is the text encoder. The intuition behind the encoder is relatively simple. The encoder learns to produce a larger score with images of the correct class compared to the other classes, and works similarly going in the other direction. | ||

== GAWWN Visualization and Description == | == GAWWN Visualization and Description == | ||

| Line 52: | Line 50: | ||

==== Generator Network ==== | ==== Generator Network ==== | ||

* Step 1: Start with input noise and text embedding | * Step 1: Start with input noise and text embedding | ||

* Step 2: Replicate text embedding to form a $ | * Step 2: Replicate text embedding to form a $M \times M \times T$ feature map then wrap spatially to fit into unit interval bounding box coordinates | ||

* Step 3: Apply convolution, pooling to reduce spatial dimension to $ | * Step 3: Apply convolution, pooling to reduce spatial dimension to $1 \times 1$ | ||

* Step 4: Concatenate feature vector with the noise vector z | * Step 4: Concatenate feature vector with the noise vector z | ||

* Step 5: Generator branching into local and global processing stages | * Step 5: Generator branching into local and global processing stages | ||

* Step 6: Global pathway stride-2 deconvolutions, local pathway | * Step 6: Global pathway stride-2 deconvolutions, local pathway apply masking operation applied to set regions outside the object bounding box to 0 | ||

* Step 7: Merge local and global pathways | * Step 7: Merge local and global pathways | ||

* Step 8: Apply a series of deconvolutional layers and in the final layer apply tanh activation to restrict | * Step 8: Apply a series of deconvolutional layers and in the final layer apply tanh activation to restrict output to [-1,1] | ||

====Discriminator Network==== | ====Discriminator Network==== | ||

| Line 73: | Line 71: | ||

==== Generator Network ==== | ==== Generator Network ==== | ||

* Step 1: Keypoint locations are encoded into a $ | * Step 1: Keypoint locations are encoded into a $M \times M \times K$ spatial feature map | ||

* Step 2: Keypoint tensor progresses through several stages of the network | * Step 2: Keypoint tensor progresses through several stages of the network | ||

* Step 3: Concatenate keypoint vector with noise vector | * Step 3: Concatenate keypoint vector with noise vector | ||

| Line 89: | Line 87: | ||

=== Conditional keypoint generation model === | === Conditional keypoint generation model === | ||

In creating this application the researchers discuss how it is not feasible to ask the user to input all of the keypoints for a given image. In order to remedy this issue a method is developed to access the conditional distributions of unobserved keypoints given a subset of observed keypoints and the image caption. In order to solve this problem a generic GAN is used. | In creating this application the researchers discuss how it is not feasible to ask the user to input all of the keypoints for a given image. In order to remedy this issue, a method is developed to access the conditional distributions of unobserved keypoints given a subset of observed keypoints and the image caption. In order to solve this problem, a generic GAN is used. | ||

The authors formulate the generator network $G_{k}$ for keypoints s,k as follows: | The authors formulate the generator network $G_{k}$ for keypoints s,k as follows: | ||

| Line 97: | Line 95: | ||

where $\odot$ denotes pointwise multiplication and $f: \Re^{Z+T+3K} \mapsto \Re^{3k}$ is an MLP. As usual, the discriminator learns to distinguish real key points from synthetic keypoints. | where $\odot$ denotes pointwise multiplication and $f: \Re^{Z+T+3K} \mapsto \Re^{3k}$ is an MLP. As usual, the discriminator learns to distinguish real key points from synthetic keypoints. | ||

In both, the Bounding-box-conditional text-to-image model and Keypoint-conditional text-to-image model | In both, the Bounding-box-conditional text-to-image model and Keypoint-conditional text-to-image model, the noise vector z plays an important role in image generation and keypoint generation. This effect is strongly seen in the poor human image generations. Perhaps, a denoising autoencoder should be included in the architecture. This would make the GAN invariant to environmental factors. This could also improve the feature learning whereby keypoints would more accurately be linked to the image poses while training. | ||

, the noise vector z plays an important role in image generation and keypoint generation. This effect is strongly seen in the poor human image generations. Perhaps, a denoising autoencoder should be included in the architecture. This would make the GAN invariant to environmental factors. This could also improve the feature learning whereby keypoints would more accurately be linked to the image poses while training. | |||

== Experiments == | == Experiments == | ||

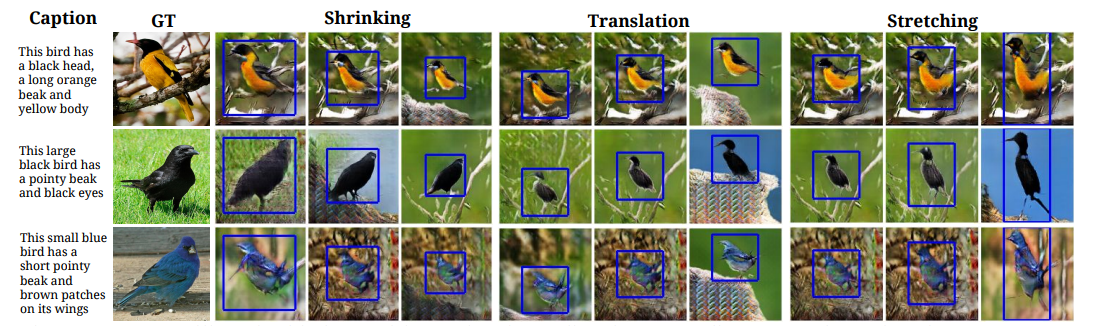

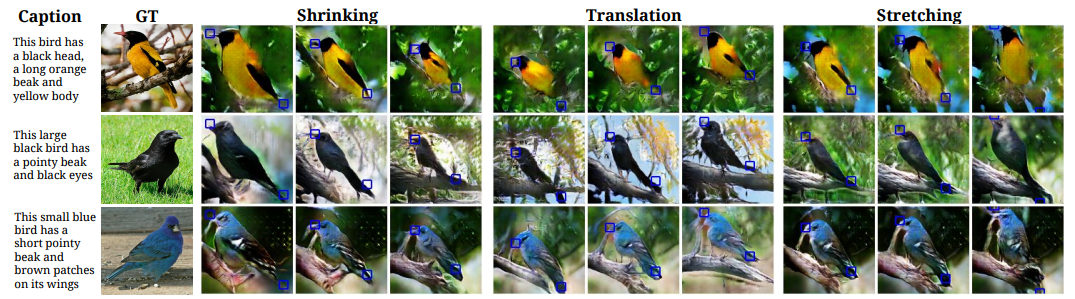

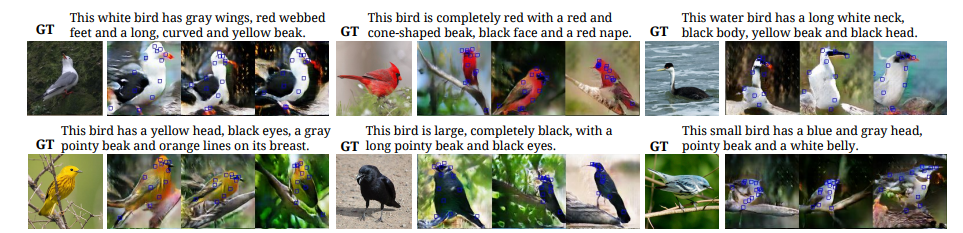

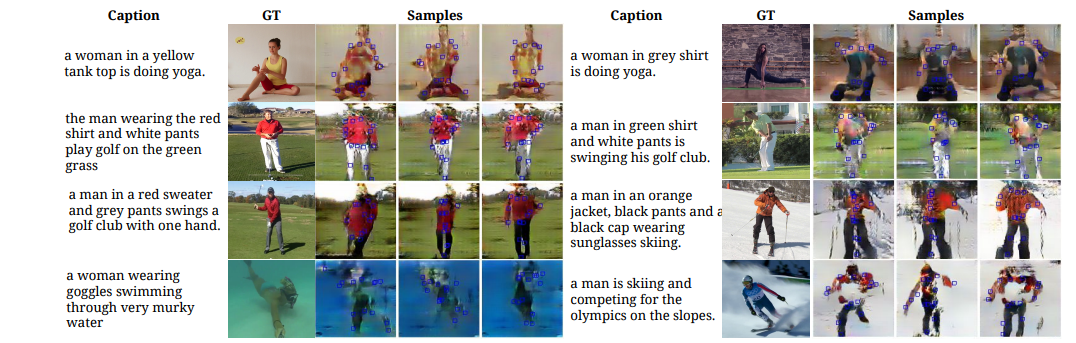

In this section of the wiki we examine the synthetic images generated by the GAWWN conditioning on different model inputs. The experiments are conducted with Caltech-USCD Birds (CUB) and MPII Human Pose (MHP) data sets. CUB has 11,788 images of birds, each belonging to one of 200 different species. The authors also include an additional data set from Reed et al. [2016]. Each image contains bird location via bounding box and keypoint coordinates for 15 bird parts. MHP contains 25K images with individuals participating in 410 different common activities. Mechanical Turk was used to collect three single sentence descriptions for each image. For HBU each image contains multiple sets of keypoints. During training the text embeddings for a given image were taken to be the average of a random sample from the encodings for that image. Caption information was encoded using a pre-trained char-CNN-GRU | In this section of the wiki we examine the synthetic images generated by the GAWWN conditioning on different model inputs. The experiments are conducted with Caltech-USCD Birds (CUB) and MPII Human Pose (MHP) data sets. CUB has 11,788 images of birds, each belonging to one of 200 different species. The authors also include an additional data set from Reed et al. [2016]. Each image contains bird location via bounding box and keypoint coordinates for 15 bird parts. MHP contains 25K images with individuals participating in 410 different common activities. Mechanical Turk was used to collect three single sentence descriptions for each image. For HBU each image contains multiple sets of keypoints. During training the text embeddings for a given image were taken to be the average of a random sample from the encodings for that image. Caption information was encoded using a pre-trained char-CNN-GRU and Adam solver was used to train the GAWWN with a batch size of 16 and learning rate of 0.0002. | ||

=== Controlling via Bounded Boxes === | === Controlling via Bounded Boxes === | ||

| Line 123: | Line 120: | ||

[[File:bb2.PNG]] | [[File:bb2.PNG]] | ||

* Observations: The GAWWN network generates much blurrier human images compared to generated bird images, simple captions seem to work while complex descriptions still present challenges, strong relationship between image caption and image | * Observations: The GAWWN network generates much blurrier human images compared to generated bird images, simple captions seem to work while complex descriptions still present challenges, strong relationship between image caption and image | ||

== Summary of Contributions == | == Summary of Contributions == | ||

* Novel architecture for text- and location-controllable image synthesis, which yields more realistic and high-resolution Caltech-USCD bird samples | * Novel architecture for text- and location-controllable image synthesis, which yields more realistic and high-resolution Caltech-USCD bird samples | ||

| Line 143: | Line 141: | ||

# Identifying large scale stock market movements/patterns using by adding RNN layers to the GAN architecture | # Identifying large scale stock market movements/patterns using by adding RNN layers to the GAN architecture | ||

The authors used the Generative Adversarial Networks as the neural architecture to synthesize compelling real-world images. But it is interesting to compare the performance of this network with the result from another architecture, variational auto-encoder network. | |||

== Criticism == | |||

The results in this paper appear to be useful only removed from the context of S.O.T.A generation techniques. The generated images are not particularly convincing in the context of similar new techniques. The authors introduce what is, by all accounts, a complicated architecture but provide no attempt at justifying this complexity or giving intuition to the structure. The use of adversarial networks seems to be completely arbitrary, particularly given the existence of auto encoders. The method, while perhaps interesting, appears to be mere additions to existing frameworks, with little gain in results, and tremendous additive complexity. | |||

Z. Akata, S. Reed, D. Walter, H. Lee, and B. Schiele. Evaluation of Output Embeddings for Fine-Grained Image | == References == | ||

Classification. In CVPR, 2015. | # Z. Akata, S. Reed, S. Mohan, S. Tenka, B. Schiele, H.Lee. Learning What and Where to Draw. In NIPS 2016 | ||

# Z. Akata, S. Reed, D. Walter, H. Lee, and B. Schiele. Evaluation of Output Embeddings for Fine-Grained Image Classification. In CVPR, 2015. | |||

A. Dosovitskiy, J. Tobias Springenberg, and T. Brox. Learning to generate chairs with convolutional neural | # A. Dosovitskiy, J. Tobias Springenberg, and T. Brox. Learning to generate chairs with convolutional neural networks. In CVPR, 2015. | ||

networks. In CVPR, 2015. | # I. Goodfellow, J. Pouget-Abadie, M. Mirza, B. Xu, D. Warde-Farley, S. Ozair, A. Courville, and Y. Bengio. Generative adversarial nets. In NIPS, 2014. | ||

# D. P. Kingma and M. Welling. Auto-encoding variational bayes. In ICLR, 2014. | |||

I. Goodfellow, J. Pouget-Abadie, M. Mirza, B. Xu, D. Warde-Farley, S. Ozair, A. Courville, and Y. Bengio. | # S. Reed, Z. Akata, X. Yan, L. Logeswaran, B. Schiele, and H. Lee. Generative Adversarial Text to Image Synthesis. In ICML, 2016. | ||

Generative adversarial nets. In NIPS, 2014. | # Xiang, Lei, et al. "Deep Embedding Convolutional Neural Network for Synthesizing CT Image from T1-Weighted MR Image." arXiv preprint arXiv:1709.02073 (2017). | ||

# Shaikh, F. (2017, June 15). Introductory guide to Generative Adversarial Networks (GANs) : https://www.analyticsvidhya.com/blog/2017/06/introductory-generative-adversarial-networks-gans/ | |||

S. Reed, Z. Akata, X. Yan, L. Logeswaran, B. Schiele, and H. Lee. Generative Adversarial Text to Image Synthesis. In | |||

ICML, 2016. | |||

Latest revision as of 12:35, 4 December 2017

Introduction

Generative Adversarial Networks (GANs) have been successfully used to synthesize compelling real-world images. In what follows we outline an enhanced GAN called the Generative Adversarial What-Where Network (GAWWN). In addition to accepting a noise vector as input, this network also accepts instructions describing what content to draw and where to draw it. Traditionally, these models use simple conditioning variables such as a class label or a non-localized caption. The authors of 'Learning What and Where to Draw' believe that image synthesis will be drastically enhanced by incorporating a notion of localized objects.

The main goal in constructing the GAWWN is to separate the questions of 'what' and 'where' to modify in the image at each step of the computational process. At a high level, the GAWWN gives the generative model additional conditioning variables that describe location, on top of the text. One method is to draw a bounding box that outlines the location of the subject, so that the generated images place the subject in approximately the location given by the box. Another method is to use keypoints that locate individual parts of the object, so that the generated images place those parts at the specified positions. Before presenting the experimental results, the authors note that this model benefits from greater parameter efficiency and produces more interpretable sample images, since it is known from the input description what each image is intended to depict. The proposed model learns to perform location- and content-controllable image synthesis on the Caltech-UCSD Birds (CUB) data set and the MPII Human Pose (MHP) data set.

A highlight of this work is that the authors demonstrate two ways to encode spatial constraints into the GAN. First, the authors show how to condition on the coarse location of a bird by incorporating spatial masking and cropping modules into a text-conditional Generative Adversarial Network (Bounding-box-conditional text-to-image model). This technique is implemented using spatial transformers. Second, the authors demonstrate how to condition on part locations of birds and humans in the form of a set of normalized (x,y) coordinates (Keypoint-conditional text-to-image model).

Related Work

This is not the first paper to show how deep convolutional networks can be used to generate synthetic images. Other notable works include:

- Dosovitskiy et al. (2015) trained a deconvolutional network to generate 3D chair renderings conditioned on a set of graphics codes indicating shape, position and lighting

- Yang et al. (2015) followed with a recurrent convolutional encoder-decoder that learned to apply incremental 3D rotations to generate sequences of rotated chair and face images

- Reed et al. (2015) trained a network to generate images that solved visual analogy problems

- Gregor et al. (2015) used a recurrent variational autoencoder with attention mechanisms for reading and writing different portions of the image canvas.

The authors note that the above models are all deterministic and discuss other recent work that attempts to learn a probabilistic model with convolutional variational autoencoders (Kingma and Welling, 2014; Rezende et al., 2014), in which the latent space is separated into blocks corresponding to graphics codes. In discussing current work in this area, they state that all of the above formulations could benefit from the principle of separating the what and where conditioning variables. The authors also cite the simple and popular Generative Adversarial Networks (Goodfellow et al., 2014), which produce sharper synthetic images than those generated by VAEs.

The current paper builds on Reed et al., "Generative Adversarial Text to Image Synthesis" (ICML 2016), where the authors proposed an end-to-end deep neural architecture based on the conditional GAN framework, which successfully generated realistic 64 × 64 images from natural language descriptions. In addition, Xiang et al. (2017) proposed a method for MR-to-CT synthesis using a deep embedding convolutional neural network (DECNN). Specifically, they generated feature maps from MR images and then transformed these feature maps using embedding convolutional layers in the network.

Background Knowledge

Generative Adversarial Networks

Before outlining the GAWWN we briefly review GANs. A GAN consists of a generator G that generates a synthetic image given a noise vector drawn from either a Gaussian or a uniform distribution, and a discriminator D that is tasked with classifying images as either real or synthetic. The two networks compete in the following minimax game:

$\displaystyle \min_{G} \max_{D} V(D,G) = \mathop{\mathbb{E}}_{x \sim p_{data}(x)}[\log D(x)] + \mathop{\mathbb{E}}_{z \sim p_{z}(z)}[\log(1-D(G(z)))] $

where z is a noise vector. In the context of GAWWN we play the above minimax game with G(z,c) and D(x,c), where c is the additional what and where information supplied to the network. For the input tuple (x,c) to be interpreted as "real", the image x must not only look real but also match the context information in c.

More details about GANs and the fundamental algorithm that GAWWN is built on follow. The main goal of a GAN is to provide a generative model for data (e.g., images). Learning in GANs proceeds by comparing simulated data with real input data. A GAN has two components: a generator network and a discriminator network.

The generator network produces objects, in this case images, from noise drawn from some distribution $p_z(z)$. The generated data are then passed through the discriminator network along with real input data, and the discriminator is trained to differentiate real input from simulated input. The goals of this structure are to train the generator to simulate images that can "fool" the discriminator, and to train the discriminator to distinguish "fake" input from real input data. Mathematically, this optimization problem is summarized by the minimax game above. According to the blog post "Introductory guide to Generative Adversarial Networks (GANs)" (https://www.analyticsvidhya.com/blog/2017/06/introductory-generative-adversarial-networks-gans/), the generator and the discriminator are trained in separate steps (Shaikh, 2017). First, the discriminator is trained on real input data and on simulated data from the generator to learn what real data look like. Then, using losses propagated through the discriminator, the generator is trained to produce fake data that the discriminator from the previous step predicts to be real (Shaikh, 2017). This process is repeated iteratively, with each network adversarially learning to outperform the other.
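To make the minimax objective more concrete, the following minimal sketch estimates V(D,G) from a handful of discriminator outputs and derives the two losses used in the alternating training described above. The function names (`value_function`, `discriminator_loss`, `generator_loss`) and the sample probabilities are illustrative assumptions, not taken from the paper, and no actual networks are trained here.

```python
import math

def value_function(d_real, d_fake):
    """Monte Carlo estimate of V(D, G).

    d_real: discriminator outputs D(x) on real images, each in (0, 1).
    d_fake: discriminator outputs D(G(z)) on generated images, each in (0, 1).
    """
    real_term = sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def discriminator_loss(d_real, d_fake):
    # The discriminator maximizes V, which is the same as minimizing -V.
    return -value_function(d_real, d_fake)

def generator_loss(d_fake):
    # The generator minimizes V; only the second term depends on G.
    return sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)

# Hypothetical discriminator outputs (assumed values for illustration).
confident_d = value_function([0.9, 0.8], [0.1, 0.2])  # D separates real from fake
fooled_d = value_function([0.5, 0.5], [0.5, 0.5])     # D cannot tell them apart
```

In this toy setting a discriminator that separates the two sets well yields a larger value than one that outputs 0.5 everywhere, and the generator loss decreases as D(G(z)) moves toward 1.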

Structured Joint Embedding of Visual Descriptions and Images

In order to encode visual content from text descriptions, the authors use a convolutional and recurrent text encoder to establish a correspondence function between images and text features. This approach is not new; the authors rely on the previous work of Reed et al. (2016) to implement this procedure. To learn sentence embeddings, the following function is optimized:

$\frac{1}{N}\sum_{n=1}^{N} \Delta (y_{n}, f_{v}(v_{n})) + \Delta (y_{n}, f_{t}(t_{n})) $

where $\{(v_{n}, t_{n}, y_{n}), n=1,\ldots,N\}$ is the training data, $\Delta$ is the 0-1 loss, $v_{n}$ are the images, $t_{n}$ are the text descriptions and $y_{n}$ are the class labels. The classifiers $f_{v}$ and $f_{t}$ are defined as follows:

$ f_{v}(v) = \displaystyle \arg\max_{y \in Y} \mathop{\mathbb{E}}_{t \sim T(y)}[\phi(v)^{T}\varphi(t)], \quad f_{t}(t) = \displaystyle \arg\max_{y \in Y} \mathop{\mathbb{E}}_{v \sim V(y)}[\phi(v)^{T}\varphi(t)]$

where $\phi$ is the image encoder and $\varphi$ is the text encoder. The intuition behind the encoder is relatively simple. The encoder learns to produce a larger score with images of the correct class compared to the other classes, and works similarly going in the other direction.
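
The classifier $f_{v}$ can be sketched in a few lines: score an image embedding against the caption embeddings of each class, average the scores per class, and pick the best class. The embeddings below are made-up 2-D vectors standing in for $\phi(v)$ and $\varphi(t)$, the class names and function names are hypothetical, and the sample mean is used as a stand-in for the expectation.

```python
def dot(a, b):
    """Inner product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def classify_image(image_feat, text_feats_by_class):
    """f_v(v): the class whose captions have the highest mean compatibility."""
    def mean_score(text_feats):
        return sum(dot(image_feat, t) for t in text_feats) / len(text_feats)

    return max(text_feats_by_class,
               key=lambda y: mean_score(text_feats_by_class[y]))


def zero_one_loss(y_true, y_pred):
    """The 0-1 loss: 0 for a correct prediction, 1 otherwise."""
    return 0 if y_true == y_pred else 1


# Toy caption embeddings per class (illustrative values only).
text_feats_by_class = {
    "cardinal": [[1.0, 0.0], [0.9, 0.1]],
    "bluejay": [[0.0, 1.0], [0.2, 0.8]],
}
image_feat = [0.8, 0.3]  # stands in for phi(v)
predicted = classify_image(image_feat, text_feats_by_class)
loss = zero_one_loss("cardinal", predicted)
```

The text classifier $f_{t}$ is symmetric: it fixes a caption embedding and averages its scores over the image embeddings of each class.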

GAWWN Visualization and Description

Bounding-box-conditional text-to-image model

Generator Network

- Step 1: Start with input noise and text embedding

- Step 2: Replicate the text embedding spatially to form an $M \times M \times T$ feature map, then warp it spatially to fit the bounding box coordinates, which are normalized to the unit interval

- Step 3: Apply convolution, pooling to reduce spatial dimension to $1 \times 1$

- Step 4: Concatenate feature vector with the noise vector z

- Step 5: Generator branching into local and global processing stages

- Step 6: In the global pathway, apply stride-2 deconvolutions; in the local pathway, apply a masking operation that sets regions outside the object bounding box to 0

- Step 7: Merge local and global pathways

- Step 8: Apply a series of deconvolutional layers and in the final layer apply tanh activation to restrict output to [-1,1]
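
Steps 2 and 6 above can be illustrated with a small sketch. It is a simplification under stated assumptions: the box is given as `(x, y, w, h)` in unit-interval coordinates (an assumed convention), and the spatial warp is approximated by placing the replicated embedding on the grid cells the box covers rather than by a learned or interpolated transform. The function names are hypothetical.

```python
def box_cells(M, box):
    """Grid rows and columns covered by box = (x, y, w, h) on an M x M grid."""
    x, y, w, h = box
    rows = range(int(round(y * M)), int(round((y + h) * M)))
    cols = range(int(round(x * M)), int(round((x + w) * M)))
    return rows, cols


def text_map_in_box(text_emb, M, box):
    """M x M x T map holding the text embedding inside the box, zeros outside."""
    rows, cols = box_cells(M, box)
    zeros = [0.0] * len(text_emb)
    return [[list(text_emb) if (r in rows and c in cols) else list(zeros)
             for c in range(M)]
            for r in range(M)]


def mask_outside_box(feature_map, box):
    """Set every feature vector outside the bounding box to 0 (local pathway)."""
    M = len(feature_map)
    rows, cols = box_cells(M, box)
    return [[cell if (r in rows and c in cols) else [0.0] * len(cell)
             for c, cell in enumerate(row)]
            for r, row in enumerate(feature_map)]


box = (0.25, 0.25, 0.5, 0.5)  # centered box covering half of each side
fmap = text_map_in_box([0.5, -1.0], M=4, box=box)
features = [[[1.0] for _ in range(4)] for _ in range(4)]
masked = mask_outside_box(features, box)
```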

Discriminator Network

- Step 1: Replicate text as in Step 2 above

- Step 2: Process image in local and global pathways

- Step 3: In the local pathway, apply stride-2 convolutional layers; in the global pathway, apply convolutions down to a vector

- Step 4: Local and global pathway output vectors are merged

- Step 5: Produce discriminator score

In the initial stages of this process, the researchers average the feature maps when multiple localized captions are present. The reliability of this heuristic is unknown, and no comparisons are drawn with alternative heuristics such as max-pooling or min-pooling, which could improve results.

Keypoint-conditional text-to-image

Generator Network

- Step 1: Keypoint locations are encoded into a $M \times M \times K$ spatial feature map

- Step 2: Keypoint tensor progresses through several stages of the network

- Step 3: Concatenate keypoint vector with noise vector

- Step 4: Keypoint tensor is flattened into a binary matrix, then replicated into a tensor

- Step 5: Noise-text-keypoint vector is fed to global and local pathways

- Step 6: The original keypoint tensor is concatenated with the local and global tensors, followed by additional deconvolutions

- Step 7: Apply tanh activation function
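
Steps 1 and 4 above can be sketched as follows. This is an illustrative encoding only: keypoints are assumed to be `(x, y)` pairs in the unit interval, with `None` marking an absent part, and each keypoint sets a single cell of its own channel to 1. The function names are hypothetical.

```python
def encode_keypoints(keypoints, M):
    """Encode K keypoints into an M x M x K binary map (nested lists).

    keypoints: list of K entries, each (x, y) in [0, 1] or None if absent.
    """
    K = len(keypoints)
    fmap = [[[0] * K for _ in range(M)] for _ in range(M)]
    for k, kp in enumerate(keypoints):
        if kp is None:
            continue
        x, y = kp
        row = min(int(y * M), M - 1)  # clamp so that y == 1.0 stays on the grid
        col = min(int(x * M), M - 1)
        fmap[row][col][k] = 1
    return fmap


def flatten_keypoints(fmap):
    """Collapse the K channels into one binary M x M matrix."""
    return [[1 if any(cell) else 0 for cell in row] for row in fmap]


keypoints = [(0.1, 0.1), (0.6, 0.9), None]  # third part is not annotated
fmap = encode_keypoints(keypoints, M=4)
flat = flatten_keypoints(fmap)
```

The flattened matrix marks where any keypoint lies, which is the kind of binary mask that can then be replicated across channels.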

Discriminator Network

- Step 1: Feed text-embedding into discriminator in two stages

- Step 2: Combine text embedding additively with global pathway for convolutional image processing

- Step 3: Spatially replicate the text embedding and concatenate it with the feature map

- Step 4: The local pathway is fed into further stride-2 convolutions, producing an output vector

- Step 5: Combine local and global pathways and produce discriminator score

Conditional keypoint generation model

In creating this application the researchers discuss how it is not feasible to ask the user to input all of the keypoints for a given image. In order to remedy this issue, a method is developed to access the conditional distributions of unobserved keypoints given a subset of observed keypoints and the image caption. In order to solve this problem, a generic GAN is used.

The authors formulate the generator network $G_{k}$ for keypoints $k$ and a binary mask $s$, which marks the keypoints that are observed, as follows:

$G_{k}(z,t,k,s) := s \odot k + (1-s) \odot f(z,t,k)$

where $\odot$ denotes pointwise multiplication and $f: \Re^{Z+T+3K} \to \Re^{3K}$ is an MLP. As usual, the discriminator learns to distinguish real keypoints from synthetic keypoints.
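
The gating in $G_{k}$ is easy to check on a toy example: wherever $s$ is 1 the observed value from $k$ is copied through, and wherever $s$ is 0 the MLP output fills the gap. In the sketch below the MLP output `f_out` is a made-up vector rather than the output of a trained network, and reading the three values per keypoint as (x, y, visibility) is an assumption suggested by the $3K$ dimension.

```python
def complete_keypoints(s, k, f_out):
    """G_k = s * k + (1 - s) * f(z, t, k), applied elementwise.

    s: binary mask, 1 where a keypoint value is observed.
    k: keypoint values (entries where s == 0 are ignored).
    f_out: stand-in for the MLP output f(z, t, k), same length as k.
    """
    return [si * ki + (1 - si) * fi for si, ki, fi in zip(s, k, f_out)]


# Two keypoints with three values each; only the first keypoint is observed.
s = [1, 1, 1, 0, 0, 0]
k = [0.2, 0.3, 1.0, 0.0, 0.0, 0.0]
f_out = [0.9, 0.9, 0.9, 0.5, 0.6, 1.0]
completed = complete_keypoints(s, k, f_out)
```

Because observed entries are copied unchanged, the generator only has to learn the conditional distribution of the unobserved keypoints.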

In both the bounding-box-conditional and the keypoint-conditional text-to-image models, the noise vector z plays an important role in image generation and keypoint generation. This effect is seen most strongly in the poor human image generations. Including a denoising autoencoder in the architecture might help: it could make the GAN more robust to environmental factors and could improve feature learning, so that keypoints are linked more accurately to the image poses during training.

Experiments

In this section of the wiki we examine the synthetic images generated by the GAWWN when conditioning on different model inputs. The experiments are conducted with the Caltech-UCSD Birds (CUB) and MPII Human Pose (MHP) data sets. CUB has 11,788 images of birds, each belonging to one of 200 different species. The authors also include an additional data set from Reed et al. [2016]. Each CUB image is annotated with the bird location via a bounding box and with keypoint coordinates for 15 bird parts. MHP contains 25K images of individuals participating in 410 different common activities. Mechanical Turk was used to collect three single-sentence descriptions for each image. In MHP, each image contains multiple sets of keypoints. During training, the text embedding for a given image was taken to be the average of a random sample of the caption encodings for that image. Caption information was encoded using a pre-trained char-CNN-GRU, and the Adam solver was used to train the GAWWN with a batch size of 16 and a learning rate of 0.0002.
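
The caption-averaging step described above can be sketched as follows. The sample size, the toy encodings, and the function name are assumptions made for illustration; the real encodings would come from the pre-trained char-CNN-GRU.

```python
import random


def average_caption_embedding(encodings, sample_size, rng=random):
    """Average a random sample (without replacement) of caption encodings."""
    sample = rng.sample(encodings, sample_size)
    dim = len(sample[0])
    return [sum(e[i] for e in sample) / sample_size for i in range(dim)]


# Four toy 2-D caption encodings for one image (illustrative values only).
encodings = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.0, 0.0]]
# Sampling all four captions reduces to the plain mean.
text_embedding = average_caption_embedding(encodings, sample_size=4)
# A smaller sample gives a different embedding on each call.
noisy_embedding = average_caption_embedding(encodings, sample_size=2,
                                            rng=random.Random(0))
```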

Controlling via Bounding Boxes

- Observations: The background is similar across different images but not perfectly invariant. Changing the bounding box coordinates does not change the direction the bird is facing. The authors take this to mean that the noise vector encodes information about the pose within the bounding box.

- Note: Noise vector is fixed

Controlling individual part locations via keypoints

- Observations: Bird pose respects the keypoints and is invariant across samples, while the background varies with changes in noise

- Notes: Noise vector is not fixed

- Observations: Keypoints can be used to shrink, translate and stretch objects. Compared with the bounding box results, keypoints can also control the orientation of the bird.

Generating both bird keypoints and images from text alone

- Observations: There is no major difference in image quality when comparing synthetic images created using generated and ground truth keypoints

Beyond birds: generating images of humans

- Observations: The GAWWN generates much blurrier human images than bird images. Simple captions seem to work, while complex descriptions still present challenges. There is a strong relationship between the image caption and the generated image.

Summary of Contributions

- Novel architecture for text- and location-controllable image synthesis, which yields more realistic and higher-resolution Caltech-UCSD bird samples

- A text-conditional object part completion model enabling a streamlined user interface for specifying part locations

- Exploratory results and a new dataset for pose-conditional text to human image synthesis

Resources

In this section, we enumerate any additional supplementary material provided by the authors to augment our understanding of the techniques discussed in the paper.

Implementations of the GAWWNs can be found in the following repository: https://github.com/reedscot/nips2016.

Discussion

The GAWWN does an excellent job of generating images conditioned on both informal text descriptions and object locations. Object location can be controlled using either a bounding box or a set of keypoints. A major achievement is the synthesis of compelling 128 by 128 images, whereas previous models could only generate 64 by 64 images. Another strength of the GAWWN is that it is not constrained at test time by the location conditioning, as the authors are able to learn a generative model of part locations and generate them at test time.

The ideas presented in this paper are in accord with other areas of applied mathematics. In quantitative finance, one is always looking to condition on additional information when pricing derivative securities. Variance reduction techniques provide ways of conditioning on additional information to improve efficiency in estimation procedures.

This idea of learning "what" and "where" to draw can be applied to a number of fields including:

- Using the generator to create musical sounds coming from a particular instrument (what) and insert it into another musical piece (where) using text

- Using the "what" and "where" model to train the discriminator to identify doctored images/videos in crime

- Identifying large-scale stock market movements/patterns by adding RNN layers to the GAN architecture

The authors used Generative Adversarial Networks as the neural architecture to synthesize compelling real-world images. It would be interesting to compare the performance of this network with that of another architecture, the variational auto-encoder.

Criticism

The results in this paper appear to be useful only when removed from the context of state-of-the-art generation techniques. The generated images are not particularly convincing in the context of similar new techniques. The authors introduce what is, by all accounts, a complicated architecture but make no attempt to justify this complexity or to give intuition for the structure. The use of adversarial networks seems to be completely arbitrary, particularly given the existence of auto-encoders. The method, while perhaps interesting, appears to be a mere addition to existing frameworks, with little gain in results and a tremendous increase in complexity.

References

- S. Reed, Z. Akata, S. Mohan, S. Tenka, B. Schiele, and H. Lee. Learning What and Where to Draw. In NIPS, 2016.

- Z. Akata, S. Reed, D. Walter, H. Lee, and B. Schiele. Evaluation of Output Embeddings for Fine-Grained Image Classification. In CVPR, 2015.

- A. Dosovitskiy, J. Tobias Springenberg, and T. Brox. Learning to generate chairs with convolutional neural networks. In CVPR, 2015.

- I. Goodfellow, J. Pouget-Abadie, M. Mirza, B. Xu, D. Warde-Farley, S. Ozair, A. Courville, and Y. Bengio. Generative adversarial nets. In NIPS, 2014.

- D. P. Kingma and M. Welling. Auto-encoding variational bayes. In ICLR, 2014.

- S. Reed, Z. Akata, X. Yan, L. Logeswaran, B. Schiele, and H. Lee. Generative Adversarial Text to Image Synthesis. In ICML, 2016.

- Xiang, Lei, et al. "Deep Embedding Convolutional Neural Network for Synthesizing CT Image from T1-Weighted MR Image." arXiv preprint arXiv:1709.02073 (2017).

- Shaikh, F. (2017, June 15). Introductory guide to Generative Adversarial Networks (GANs) : https://www.analyticsvidhya.com/blog/2017/06/introductory-generative-adversarial-networks-gans/