Multiple Object Recognition with Visual Attention

No edit summary |

m (Conversion script moved page MULTIPLE OBJECT RECOGNITION WITH VISUAL ATTENTION to mULTIPLE OBJECT RECOGNITION WITH VISUAL ATTENTION: Converting page titles to lowercase) |

||

| (12 intermediate revisions by 3 users not shown) | |||

| Line 1: | Line 1: | ||

= Introduction = | = Introduction = | ||

Recognizing multiple objects in images has been one of the most important goals of computer vision. | Recognizing multiple objects in images has been one of the most important goals of computer vision. Previous work in this classification of sequences of characters often employed a sliding window detector with an individual character-classifier. However, these systems can involve setting components in a case-specific manner for determining possible object locations. In this paper an attention-based model for recognizing multiple objects in images is presented. The proposed model is a deep recurrent neural network trained with reinforcement learning to attend to the most relevant regions of the input image. It has been shown that the proposed method is more accurate than the state-of-the-art convolutional networks and uses fewer parameters and less computation. | ||

In this paper an attention-based model for recognizing multiple objects in images is presented. The proposed model is a deep recurrent neural network trained with reinforcement learning to attend to the most relevant regions of the input image. It has been shown that the proposed method is more accurate than the state-of-the-art convolutional networks and uses fewer parameters and less computation. | |||

One of the main drawbacks of convolutional networks (ConvNets) is their poor scalability with increasing input image size so efficient implementations of these models have become necessary. In this work, the authors take inspiration from the way humans perform visual sequence recognition tasks such as reading by continually moving the fovea to the next relevant object or character, recognizing the individual object, and adding the recognized object to our internal representation of the sequence. The proposed system is a deep recurrent neural network that at each step processes a multi-resolution crop of the input image, called a “glimpse”. The network uses information from the glimpse to update its internal representation of the input, and outputs the next glimpse location and possibly the next object in the sequence. The process continues until the model decides that there are no more objects to process. | One of the main drawbacks of convolutional networks (ConvNets) is their poor scalability with increasing input image size so efficient implementations of these models have become necessary. In this work, the authors take inspiration from the way humans perform visual sequence recognition tasks such as reading by continually moving the fovea to the next relevant object or character, recognizing the individual object, and adding the recognized object to our internal representation of the sequence. The proposed system is a deep recurrent neural network that at each step processes a multi-resolution crop of the input image, called a “glimpse”. The network uses information from the glimpse to update its internal representation of the input, and outputs the next glimpse location and possibly the next object in the sequence. The process continues until the model decides that there are no more objects to process. | ||

| Line 10: | Line 8: | ||

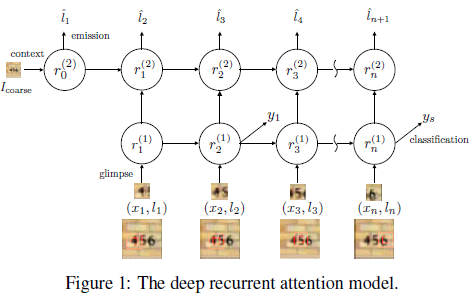

For simplicity, they first describe how our model can be applied to classifying a single object and later show how it can be extended to multiple objects. Processing an image x with an attention based model is a sequential process with N steps, where each step consists of a glimpse. At each step n, the model receives a location ln along with a glimpse observation xn taken at location ln. The model uses the observation to update its internal state and outputs the location ln+1 to process at the next time-step. A graphical representation of the proposed model is shown in Figure 1. | For simplicity, they first describe how our model can be applied to classifying a single object and later show how it can be extended to multiple objects. Processing an image x with an attention based model is a sequential process with N steps, where each step consists of a glimpse. At each step n, the model receives a location ln along with a glimpse observation xn taken at location ln. The model uses the observation to update its internal state and outputs the location ln+1 to process at the next time-step. A graphical representation of the proposed model is shown in Figure 1. | ||

[[File: | [[File:0.PNG | center]] | ||

The above model can be broken down into a number of sub-components, each mapping some input into a vector output. In this paper the term “network” is used to describe these sub-components. | The above model can be broken down into a number of sub-components, each mapping some input into a vector output. In this paper the term “network” is used to describe these sub-components. | ||

Glimpse Network: | |||

The job of the glimpse network is to extract a set of useful features from location of a glimpse of the raw visual input. The glimpse network is a non-linear function that receives the current input image patch, or glimpse (<math>x_n</math>), and its location tuple (<math>l_n</math>) as input and outputs a vector showing that what location has what features. | |||

There are two separate networks in the structure of glimpse network, each of which has its own input. The first one which extracts features of the image patch takes an image patch as input and consists of three convolutional hidden layers without any pooling layers followed by a fully connected layer. Separately, the location tuple is mapped using a fully connected hidden layer. Then element-wise multiplication of two output vectors produces the final glimpse feature vector | There are two separate networks in the structure of glimpse network, each of which has its own input. The first one which extracts features of the image patch takes an image patch as input and consists of three convolutional hidden layers without any pooling layers followed by a fully connected layer. Separately, the location tuple is mapped using a fully connected hidden layer. Then element-wise multiplication of two output vectors produces the final glimpse feature vector <math>g_n</math>. | ||

Recurrent Network: | |||

The recurrent network aggregates information extracted from the individual glimpses and combines the information in a coherent manner that preserves spatial information. The glimpse feature vector gn from the glimpse network is supplied as input to the recurrent network at each time step. | The recurrent network aggregates information extracted from the individual glimpses and combines the information in a coherent manner that preserves spatial information. The glimpse feature vector gn from the glimpse network is supplied as input to the recurrent network at each time step. | ||

The recurrent network consists of two recurrent layers. Two outputs of the recurrent layers are defined as | The recurrent network consists of two recurrent layers. Two outputs of the recurrent layers are defined as <math>r_n^{(1)}</math> and <math>r_n^{(2)}</math>. | ||

Emission Network: | |||

The emission network takes the current state of recurrent network as input and makes a prediction on where to extract the next image patch for the glimpse network. It acts as a controller that directs attention based on the current internal states from the recurrent network. It consists of a fully connected hidden layer that maps the feature vector | The emission network takes the current state of recurrent network as input and makes a prediction on where to extract the next image patch for the glimpse network. It acts as a controller that directs attention based on the current internal states from the recurrent network. It consists of a fully connected hidden layer that maps the feature vector <math>r_n^{(2)}</math> from the top recurrent layer to a coordinate tuple <math>l_{n+1}</math>. | ||

Context Network: | |||

The context network provides the initial state for the recurrent network and its output is used by the emission network to predict the location of the first glimpse. The context network C(.) takes a down-sampled low-resolution version of the whole input image | The context network provides the initial state for the recurrent network and its output is used by the emission network to predict the location of the first glimpse. The context network C(.) takes a down-sampled low-resolution version of the whole input image <math>I_coarse</math> and outputs a fixed length vector <math>c_I</math> . The contextual information provides sensible hints on where the potentially interesting regions are in a given image. The context network employs three convolutional layers that map a coarse image <math>I_coarse</math> to a feature vector. | ||

Classification Network: | |||

The classification network outputs a prediction for the class label y based on the final feature vector | The classification network outputs a prediction for the class label y based on the final feature vector <math>r_N^{(1)}</math> of the lower recurrent layer. The classification network has one fully connected hidden layer and a softmax output layer for the class y. | ||

In order to prevent the model to learn from contextual information than by combining information from different glimpses, the context network and classification network are connected to different recurrent layers in the deep model. This will help the deep recurrent attention model learn to look at locations that are relevant for classifying objects of interest. | In order to prevent the model to learn from contextual information than by combining information from different glimpses, the context network and classification network are connected to different recurrent layers in the deep model. This will help the deep recurrent attention model learn to look at locations that are relevant for classifying objects of interest. | ||

| Line 41: | Line 39: | ||

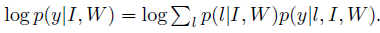

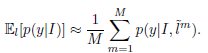

Given the class labels y of image “I”, learning can be formulated as a supervised classification problem with the cross entropy objective function. The attention model predicts the class label conditioned on intermediate latent location variables l from each glimpse and extracts the corresponding patches. We can thus maximize likelihood of the class label by marginalizing over the glimpse locations. | Given the class labels y of image “I”, learning can be formulated as a supervised classification problem with the cross entropy objective function. The attention model predicts the class label conditioned on intermediate latent location variables l from each glimpse and extracts the corresponding patches. We can thus maximize likelihood of the class label by marginalizing over the glimpse locations. | ||

[[File:2eq.PNG | center]] | |||

Using some simplifications, the practical algorithm to train the deep attention model can be expressed as: | |||

[[File:3.PNG | center]] | |||

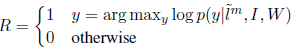

Where <math>\tilde{l^m}</math> is an approximation of location of glimpse “m”.This means that we can sample he glimpse location prediction from the model after each glimpse. In the above equation, log likelihood (in the second term) has an unbounded range that can introduce substantial high variance in the gradient estimator and sometimes induce an undesired large gradient update that is backpropagated through the rest of the model. So in this paper this term is replaced with a 0/1 discrete indicator function (R) and a baseline technique(b) is used to reduce variance in the estimator. | |||

[[File:4eq.PNG | center]] | |||

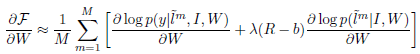

So the gradient update can be expressed as following: | |||

[[File:5.PNG | center]] | |||

In fact, by using the 0/1 indicator function, the learning rule from the above equation is equivalent to the REINFORCE learning model where R is the expected reward. | |||

During inference, the feedforward location prediction can be used as a deterministic prediction on | |||

the location coordinates to extract the next input image patch for the model. Alternatively, our marginalized objective function suggests a procedure to estimate the expected class prediction by using samples of location sequences <math>\{\tilde{l_1^m},\dots,\tilde{l_N^m}\}</math> and averaging their predictions. | |||

[[File:6.PNG | center]] | |||

= Multi Object/Sequence Classification as a Visual Attention Task= | |||

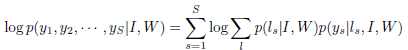

Our proposed attention model can be easily extended to solve classification tasks involving multiple objects. To train the recurrent network, in this case, the multiple object labels for a given image need to be cast into an ordered sequence {y1,...,ys}. Assuming there are S targets in an image, the objective function for the sequential prediction is: | |||

[[File:7.PNG | center]] | |||

= Experiments:= | |||

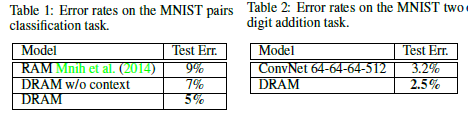

To show the effectiveness of the deep recurrent attention model (DRAM), multi-object classification tasks are investigated on two different datasets: MNIST and multi-digit SVHN. | |||

MNIST Dataset Results: | |||

Two main evaluation of the method is done using MNIST dataset: | |||

1)Learning to find digits | |||

2)Learning to do addition (The model has to find where each digit is and add them up. The task is to predict the sum of the two digits in the image as a classification problem) | |||

The results for both experiments are shown in table 1 and table 2. As stated in the tables, the DRAM model with a context network significantly outperforms the other models. | |||

[[File:8.PNG | center]] | |||

SVHN Dataset Results: | |||

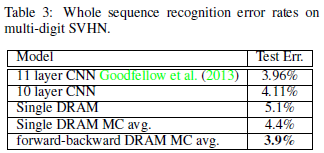

The publicly available multi-digit street view house number (SVHN) dataset consists of images of digits taken from pictures of house fronts. This experiment is more challenging and We trained a model to classify all the digits in an image sequentially. Two different model are implemented in this experiment: | |||

First, the label sequence ordering is chosen to go from left to right as the natural ordering of the house number.in this case, there is a performance gap between the state-of-the-art deep ConvNet and a single DRAM that “reads” from left to right. Therefore, a second recurrent attention model to “read” the house numbers from right to left as a backward model is trained. The forward and backward model can share the same weights for their glimpse networks but they have different weights for their recurrent and their emission networks. The model performance is shown in table 3: | |||

[[File:9.PNG | center]] | |||

As shown in the table, the proposed deep recurrent attention model (DRAM) outperforms the state-ofthe- | |||

art deep ConvNets on the standard SVHN sequence recognition task. | |||

= Discussion and Conclusion:= | |||

The recurrent attention models only process a selected subset of the input have less computational cost than a ConvNet that looks over an entire image. Also, they can naturally work on images of different size with the same computational cost independent of the input dimensionality. Moreover, the attention-based model is less prone to over-fitting than ConvNets, likely because of the stochasticity in the glimpse policy during training. Duvedi C et al. <ref> | |||

Duvedi C and Shah P. [http://vision.stanford.edu/teaching/cs231n/reports/cduvedi_report.pdf Multi-Glance Attention Models For Image Classification ], | |||

</ref> developed a two glances approach that uses a combination of multiple Convolutional neural nets and recurrent neural nets. In this approach RNN’s generate a location for a glimpse within the image and a CNN extracts features from a glimpse of a fixed size at the selected location. In the next step the RNN’s generating the next glance for a glance. The process continues to generate the whole picture by combining features of all relevant patches together. | |||

= References= | |||

<references /> | |||

Latest revision as of 09:46, 30 August 2017

Introduction

Recognizing multiple objects in images has been one of the most important goals of computer vision. Previous work on classifying sequences of characters often employed a sliding-window detector combined with an individual character classifier. However, such systems can require components tuned in a case-specific manner to determine possible object locations. In this paper, an attention-based model for recognizing multiple objects in images is presented. The proposed model is a deep recurrent neural network trained with reinforcement learning to attend to the most relevant regions of the input image. The authors show that the proposed method is more accurate than state-of-the-art convolutional networks while using fewer parameters and less computation.

One of the main drawbacks of convolutional networks (ConvNets) is their poor scalability with increasing input image size, which has made efficient implementations of these models necessary. In this work, the authors take inspiration from the way humans perform visual sequence recognition tasks such as reading: continually moving the fovea to the next relevant object or character, recognizing the individual object, and adding the recognized object to an internal representation of the sequence. The proposed system is a deep recurrent neural network that, at each step, processes a multi-resolution crop of the input image called a “glimpse”. The network uses information from the glimpse to update its internal representation of the input and outputs the next glimpse location and, possibly, the next object in the sequence. The process continues until the model decides that there are no more objects to process.
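To make the idea of a multi-resolution “glimpse” concrete, here is a minimal pure-Python sketch. It is not the authors' implementation. It assumes square patches centred on integer pixel coordinates, zero padding outside the image, and average pooling to bring each larger crop back to the base resolution. The helpers `crop`, `downsample` and `glimpse` and the parameters `base_size` and `num_scales` are hypothetical names chosen for illustration.

```python
from typing import List

Image = List[List[float]]


def crop(image: Image, center_row: int, center_col: int, size: int) -> Image:
    """Return a size x size patch centred on (center_row, center_col), zero-padded outside the image."""
    height, width = len(image), len(image[0])
    top, left = center_row - size // 2, center_col - size // 2
    return [
        [
            image[r][c] if 0 <= r < height and 0 <= c < width else 0.0
            for c in range(left, left + size)
        ]
        for r in range(top, top + size)
    ]


def downsample(patch: Image, factor: int) -> Image:
    """Average non-overlapping factor x factor blocks (factor 1 leaves values unchanged)."""
    n = len(patch) // factor
    return [
        [
            sum(patch[r * factor + i][c * factor + j]
                for i in range(factor) for j in range(factor)) / factor ** 2
            for c in range(n)
        ]
        for r in range(n)
    ]


def glimpse(image: Image, center_row: int, center_col: int,
            base_size: int = 4, num_scales: int = 2) -> List[Image]:
    """Hypothetical glimpse: crops of side base_size * 2**k, each reduced to base_size x base_size."""
    return [
        downsample(crop(image, center_row, center_col, base_size * 2 ** k), 2 ** k)
        for k in range(num_scales)
    ]


# Toy 8x8 image whose pixel values encode their position.
image = [[float(r * 8 + c) for c in range(8)] for r in range(8)]
scales = glimpse(image, 4, 4)
```

Each scale covers twice the side length of the previous one at half the resolution. The glimpse therefore sees fine detail at its centre and coarser context around it, while its size stays fixed.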

Deep Recurrent Visual Attention Model:

For simplicity, the authors first describe how their model can be applied to classifying a single object and later show how it can be extended to multiple objects. Processing an image x with an attention-based model is a sequential process with N steps, each consisting of one glimpse. At each step n, the model receives a location [math]\displaystyle{ l_n }[/math] along with a glimpse observation [math]\displaystyle{ x_n }[/math] taken at location [math]\displaystyle{ l_n }[/math]. The model uses the observation to update its internal state and outputs the location [math]\displaystyle{ l_{n+1} }[/math] to process at the next time step. A graphical representation of the proposed model is shown in Figure 1.
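The N-step process just described can be sketched as a loop. This is a model-agnostic skeleton, and it makes three assumptions. First, the callables `take_glimpse`, `update_state` and `emit_location` are hypothetical placeholders for the glimpse, recurrent and emission networks described below. Second, the number of steps is fixed in advance. Third, the toy usage replaces the image and the networks with trivial stand-ins.

```python
from typing import Any, Callable, List, Tuple


def run_attention(
    image: Any,
    first_location: Any,
    initial_state: Any,
    take_glimpse: Callable[[Any, Any], Any],
    update_state: Callable[[Any, Any, Any], Any],
    emit_location: Callable[[Any], Any],
    num_steps: int,
) -> Tuple[Any, List[Any]]:
    """Unroll N glimpse steps: observe x_n at l_n, update the state, emit l_{n+1}."""
    state, location = initial_state, first_location
    visited = []
    for _ in range(num_steps):
        visited.append(location)
        x_n = take_glimpse(image, location)         # glimpse observation taken at l_n
        state = update_state(state, x_n, location)  # fold the observation into the internal state
        location = emit_location(state)             # next location l_{n+1}
    return state, visited


# Toy usage with hypothetical stand-ins: a 1-D "image" and arithmetic in place of networks.
final_state, locations = run_attention(
    image=[10, 20, 30, 40],
    first_location=0,
    initial_state=0,
    take_glimpse=lambda img, loc: img[loc],
    update_state=lambda state, x, loc: state + x,
    emit_location=lambda state: (state // 10) % 4,
    num_steps=3,
)
```

Note that the next location depends only on the updated state. That is what lets the model decide where to look based on everything it has seen so far.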

The above model can be broken down into a number of sub-components, each mapping some input into a vector output. In this paper the term “network” is used to describe these sub-components.

Glimpse Network:

The job of the glimpse network is to extract a set of useful features from the raw visual input at the glimpse location. The glimpse network is a non-linear function that receives the current input image patch, or glimpse ([math]\displaystyle{ x_n }[/math]), and its location tuple ([math]\displaystyle{ l_n }[/math]) as input and outputs a vector that encodes which features were observed at which location.

The glimpse network is made of two separate networks, each with its own input. The first, which extracts features from the image patch, takes the patch as input and consists of three convolutional hidden layers without any pooling layers, followed by a fully connected layer. Separately, the location tuple is mapped by a fully connected hidden layer. The element-wise product of the two output vectors then gives the final glimpse feature vector [math]\displaystyle{ g_n }[/math].
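Below is a minimal sketch of how the two branches are combined. It makes several assumptions: ReLU activations, plain lists for vectors, and illustrative weight names. The three convolutional layers are not reproduced. `patch_features` is a hypothetical stand-in for the flattened output of that convolutional stack.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def linear(weights: Matrix, bias: Vector, x: Vector) -> Vector:
    """Fully connected layer: W x + b."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


def relu(v: Vector) -> Vector:
    return [max(0.0, x) for x in v]


def glimpse_network(patch_features: Vector, location: Vector,
                    w_img: Matrix, b_img: Vector,
                    w_loc: Matrix, b_loc: Vector) -> Vector:
    # "What" branch: fully connected layer on (hypothetical) conv features of the patch.
    what = relu(linear(w_img, b_img, patch_features))
    # "Where" branch: fully connected layer on the location tuple l_n.
    where = relu(linear(w_loc, b_loc, location))
    # g_n is the element-wise product of the two branch outputs.
    return [a * b for a, b in zip(what, where)]


g_n = glimpse_network(
    patch_features=[1.0, 2.0], location=[0.5, -0.5],
    w_img=[[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]], b_img=[0.0, 0.0, 0.0],
    w_loc=[[2.0, 0.0], [0.0, 2.0], [1.0, 1.0]], b_loc=[0.0, 0.0, 1.0],
)
```

Because the combination is multiplicative, a location unit that is inactive (here the second one) zeroes out the matching feature. This is one way the vector can tie features to where they were seen.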

Recurrent Network:

The recurrent network aggregates the information extracted from the individual glimpses and combines it in a coherent manner that preserves spatial information. At each time step, the glimpse feature vector [math]\displaystyle{ g_n }[/math] from the glimpse network is supplied as input to the recurrent network. The recurrent network consists of two recurrent layers, whose outputs are denoted [math]\displaystyle{ r_n^{(1)} }[/math] and [math]\displaystyle{ r_n^{(2)} }[/math].
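Here is a sketch of one time step of the two stacked recurrent layers. For brevity it uses plain tanh cells without biases. The text does not specify the cell type, so the cell form and the parameter names (`w1_in`, `w1_rec`, `w2_in`, `w2_rec`) are assumptions.

```python
import math
from typing import Dict, List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def matvec(m: Matrix, v: Vector) -> Vector:
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def rnn_cell(w_in: Matrix, w_rec: Matrix, x: Vector, h_prev: Vector) -> Vector:
    """Plain tanh recurrent cell (an assumption): h = tanh(W_in x + W_rec h_prev)."""
    return [math.tanh(a + b) for a, b in zip(matvec(w_in, x), matvec(w_rec, h_prev))]


def recurrent_step(params: Dict[str, Matrix], g_n: Vector,
                   r1_prev: Vector, r2_prev: Vector) -> Tuple[Vector, Vector]:
    r1 = rnn_cell(params["w1_in"], params["w1_rec"], g_n, r1_prev)  # lower layer reads g_n
    r2 = rnn_cell(params["w2_in"], params["w2_rec"], r1, r2_prev)   # upper layer reads r_n^(1)
    return r1, r2


identity = [[1.0, 0.0], [0.0, 1.0]]
zeros = [[0.0, 0.0], [0.0, 0.0]]
params = {"w1_in": identity, "w1_rec": zeros, "w2_in": identity, "w2_rec": zeros}
r1, r2 = recurrent_step(params, [1.0, -1.0], [0.0, 0.0], [0.0, 0.0])
```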

Emission Network:

The emission network takes the current state of the recurrent network as input and predicts where to extract the next image patch for the glimpse network. It acts as a controller that directs attention based on the current internal state of the recurrent network. It consists of a fully connected hidden layer that maps the feature vector [math]\displaystyle{ r_n^{(2)} }[/math] from the top recurrent layer to a coordinate tuple [math]\displaystyle{ l_{n+1} }[/math].
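Here is a sketch of the emission network as a single linear map from the top-layer state to a 2-D location. The sketch treats that output as the mean of a Gaussian with a fixed, hypothetical `std` and samples from it. This mirrors the sampled locations used during training (see Learning Where and What). With `sample=False` it instead returns the deterministic prediction that can be used at inference. The linear form and the Gaussian policy are assumptions of this sketch.

```python
import random
from typing import List, Optional, Tuple


def emission_network(r2: List[float], weights: List[List[float]], bias: List[float],
                     std: float = 0.1, sample: bool = True,
                     rng: Optional[random.Random] = None) -> Tuple[float, float]:
    """Map r_n^(2) to a location l_{n+1}; optionally sample around the predicted mean."""
    mean = [sum(w * x for w, x in zip(row, r2)) + b for row, b in zip(weights, bias)]
    if not sample:
        return (mean[0], mean[1])  # deterministic feedforward prediction
    if rng is None:
        rng = random.Random()
    # Hypothetical Gaussian location policy with fixed standard deviation.
    return (rng.gauss(mean[0], std), rng.gauss(mean[1], std))


w = [[1.0, 0.0], [0.0, 1.0]]
deterministic = emission_network([0.2, -0.3], w, [0.0, 0.0], sample=False)
sampled = emission_network([0.2, -0.3], w, [0.0, 0.0], std=0.05, rng=random.Random(0))
```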

Context Network:

The context network provides the initial state for the recurrent network, and its output is used by the emission network to predict the location of the first glimpse. The context network C(·) takes a down-sampled, low-resolution version of the whole input image, [math]\displaystyle{ I_{coarse} }[/math], and outputs a fixed-length vector [math]\displaystyle{ c_I }[/math]. This contextual information gives sensible hints about where the potentially interesting regions of a given image are. The context network employs three convolutional layers that map the coarse image [math]\displaystyle{ I_{coarse} }[/math] to a feature vector.
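Here is a sketch of how the context network's output could seed the recurrent state. It makes three assumptions:

- The three convolutional layers are replaced by a hypothetical `project` callable.
- The coarse image is produced by block averaging.
- The wiring follows the description in this section: context feeds the top layer, which the emission network reads. So `c_I` initialises the top layer, and the lower layer starts at zero. That zero initialisation is itself an assumption.

```python
from typing import Callable, List, Tuple

Image = List[List[float]]


def coarse_image(image: Image, factor: int) -> Image:
    """Down-sample by averaging non-overlapping factor x factor blocks (stand-in for I_coarse)."""
    rows, cols = len(image) // factor, len(image[0]) // factor
    return [
        [
            sum(image[r * factor + i][c * factor + j]
                for i in range(factor) for j in range(factor)) / factor ** 2
            for c in range(cols)
        ]
        for r in range(rows)
    ]


def context_network(image: Image, factor: int,
                    project: Callable[[Image], List[float]]) -> Tuple[List[float], List[float]]:
    """Return hypothetical initial states (r_0^(1), r_0^(2)) from c_I = C(I_coarse)."""
    c_i = project(coarse_image(image, factor))
    r1_0 = [0.0] * len(c_i)  # lower layer: assumed to start at zero
    r2_0 = list(c_i)         # top layer: seeded with context, read by the emission network
    return r1_0, r2_0


image = [[float(r * 4 + c) for c in range(4)] for r in range(4)]
r1_0, r2_0 = context_network(image, 2, project=lambda m: [sum(row) for row in m])
```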

Classification Network:

The classification network outputs a prediction for the class label y based on the final feature vector [math]\displaystyle{ r_N^{(1)} }[/math] of the lower recurrent layer. The classification network has one fully connected hidden layer and a softmax output layer for the class y.
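Here is a sketch of the classification head: one fully connected hidden layer followed by a softmax over the classes, applied to the final lower-layer state. The ReLU activation, the omitted biases and the weight names are assumptions.

```python
import math
from typing import List


def softmax(logits: List[float]) -> List[float]:
    m = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classification_network(r1_final: List[float], w_hidden: List[List[float]],
                           w_out: List[List[float]]) -> List[float]:
    """Class probabilities from r_N^(1): ReLU hidden layer, then softmax (biases omitted)."""
    hidden = [max(0.0, sum(w * x for w, x in zip(row, r1_final))) for row in w_hidden]
    logits = [sum(w * h for w, h in zip(row, hidden)) for row in w_out]
    return softmax(logits)


probs = classification_network([1.0], w_hidden=[[1.0]], w_out=[[2.0], [0.0]])
```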

To prevent the model from relying on contextual information rather than combining information from different glimpses, the context network and the classification network are connected to different recurrent layers of the deep model. This helps the deep recurrent attention model learn to look at locations that are relevant for classifying the objects of interest.

Learning Where and What

Given the class label y of an image I, learning can be formulated as a supervised classification problem with a cross-entropy objective function. The attention model predicts the class label conditioned on intermediate latent location variables l from each glimpse, at which it extracts the corresponding patches. The likelihood of the class label can thus be maximized by marginalizing over the glimpse locations.
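On the original page this objective appears only as an image. Below is a reconstruction in the notation of this section that follows the standard derivation. W denotes the model parameters and l a sequence of glimpse locations (our labeling). Marginalizing over l and applying Jensen's inequality gives a lower bound F on the log-likelihood, and F is the quantity maximized in practice:

```latex
\log p(y \mid I, W)
  = \log \sum_{l} p(l \mid I, W)\, p(y \mid l, I, W)
  \;\ge\; \sum_{l} p(l \mid I, W)\, \log p(y \mid l, I, W)
  = F
```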

Using some simplifications, the practical algorithm to train the deep attention model can be expressed as:
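This is also reconstructed rather than copied from the page's image. Let F be the lower bound on log p(y | I, W) obtained by marginalizing over glimpse locations, with W the model parameters. Differentiate F using the identity ∂p/∂W = p ∂log p/∂W. Then replace the sum over l with an average over M location sequences sampled from the model. The result is the estimator below, whose second bracketed term contains the log-likelihood factor referred to in the following paragraph:

```latex
\begin{aligned}
\frac{\partial F}{\partial W}
  &= \sum_{l} p(l \mid I, W)\left[
       \frac{\partial \log p(y \mid l, I, W)}{\partial W}
       + \log p(y \mid l, I, W)\,\frac{\partial \log p(l \mid I, W)}{\partial W}
     \right] \\
  &\approx \frac{1}{M}\sum_{m=1}^{M}\left[
       \frac{\partial \log p(y \mid \tilde{l}^m, I, W)}{\partial W}
       + \log p(y \mid \tilde{l}^m, I, W)\,\frac{\partial \log p(\tilde{l}^m \mid I, W)}{\partial W}
     \right],
  \qquad \tilde{l}^m \sim p(l \mid I, W)
\end{aligned}
```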

Where [math]\displaystyle{ \tilde{l}^m }[/math] is the m-th of M sequences of glimpse locations sampled from the model. In other words, the glimpse location predicted after each glimpse is sampled rather than taken deterministically. In the above equation, the log-likelihood in the second term has an unbounded range. This can introduce substantially high variance in the gradient estimator and sometimes induce an undesirably large gradient update that is backpropagated through the rest of the model. The paper therefore replaces this term with a 0/1 discrete indicator function (R) and uses a baseline technique (b) to reduce the variance of the estimator.
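Here is a small sketch of the two ingredients just introduced. The first is the 0/1 reward, which is bounded, unlike the log-likelihood it replaces. The second is the baseline-subtracted factor (R − b) that scales the location-policy gradient. The text does not say how b is obtained. Here it is a hypothetical exponential moving average of past rewards (`RunningBaseline`, `decay`), which is only one common choice.

```python
from typing import List


def indicator_reward(class_probs: List[float], true_label: int) -> float:
    """R = 1 if the most probable class equals the label, else 0."""
    predicted = max(range(len(class_probs)), key=class_probs.__getitem__)
    return 1.0 if predicted == true_label else 0.0


class RunningBaseline:
    """Hypothetical baseline b: exponential moving average of past rewards."""

    def __init__(self, decay: float = 0.9) -> None:
        self.decay = decay
        self.value = 0.0

    def advantage(self, reward: float) -> float:
        adv = reward - self.value  # (R - b) scales the gradient of log p(l~^m | I, W)
        self.value = self.decay * self.value + (1.0 - self.decay) * reward
        return adv


baseline = RunningBaseline(decay=0.5)
advantages = [baseline.advantage(r) for r in (1.0, 1.0, 0.0)]
```

Once the baseline has learned that rewards are usually 1, a correct prediction yields only a small positive factor. A wrong one yields a larger negative factor, which is the variance-reduction effect the baseline is meant to provide.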

The gradient update can then be expressed as follows:
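Here is a reconstruction of the update, since the page shows it only as an image. F is the lower bound on log p(y | I, W) being maximized, and the tilded l^m is the m-th of M sampled location sequences. The log-likelihood factor of the location term is replaced by the indicator R^m for that sample minus the baseline b. The weighting hyperparameter λ is included as an assumption; with λ = 1 the update is a direct substitution:

```latex
R^m =
\begin{cases}
  1 & \text{if } y = \arg\max_{y'} \log p(y' \mid \tilde{l}^m, I, W) \\
  0 & \text{otherwise}
\end{cases}
\qquad
\frac{\partial F}{\partial W} \approx \frac{1}{M}\sum_{m=1}^{M}\left[
  \frac{\partial \log p(y \mid \tilde{l}^m, I, W)}{\partial W}
  + \lambda\,(R^m - b)\,\frac{\partial \log p(\tilde{l}^m \mid I, W)}{\partial W}
\right]
```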

With the 0/1 indicator function, the learning rule in the above equation is equivalent to the REINFORCE learning rule, with R playing the role of the reward.

During inference, the feedforward location prediction can be used as a deterministic prediction of the location coordinates from which to extract the next input image patch. Alternatively, the marginalized objective function suggests estimating the expected class prediction by sampling location sequences [math]\displaystyle{ \{\tilde{l}_1^m,\dots,\tilde{l}_N^m\} }[/math] and averaging their predictions.
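The sampling-based alternative at inference can be sketched as a Monte Carlo average. The simplification here is that each call to the hypothetical `sample_locations` stands in for running the model once with stochastic location sampling. `predict` stands in for the classification output given that location sequence. Both are illustrative placeholders, not the paper's interfaces.

```python
import random
from typing import Callable, List, Optional, Sequence


def average_prediction(
    predict: Callable[[Sequence[float]], List[float]],
    sample_locations: Callable[[random.Random], Sequence[float]],
    num_samples: int,
    seed: int = 0,
) -> List[float]:
    """Estimate p(y | I) as the mean of p(y | I, l~^m) over M sampled location sequences."""
    rng = random.Random(seed)
    total: Optional[List[float]] = None
    for _ in range(num_samples):
        probs = predict(sample_locations(rng))  # class probabilities for one sampled sequence
        total = list(probs) if total is None else [t + p for t, p in zip(total, probs)]
    assert total is not None, "num_samples must be positive"
    return [t / num_samples for t in total]


# Toy usage: a hypothetical predictor whose answer depends on where the first glimpse landed.
estimate = average_prediction(
    predict=lambda locs: [1.0, 0.0] if locs[0] < 0.5 else [0.0, 1.0],
    sample_locations=lambda rng: [rng.random()],
    num_samples=1000,
)
```

The deterministic alternative corresponds to a single pass with the mean location at every step. Averaging trades extra passes for an estimate that reflects the uncertainty in where to look.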

Multi Object/Sequence Classification as a Visual Attention Task

The proposed attention model can easily be extended to classification tasks involving multiple objects. In this case, to train the recurrent network, the multiple object labels for a given image need to be cast into an ordered sequence [math]\displaystyle{ \{y_1,\dots,y_S\} }[/math]. Assuming there are S targets in an image, the objective function for the sequential prediction is:
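The page shows this objective only as an image. Below is one form consistent with the single-object lower bound, obtained by applying the chain rule over the ordered labels inside the expectation over glimpse locations l (W denotes the model parameters). It is a reconstruction under that assumption, and the paper's own equation may index the glimpse locations per target:

```latex
\log p(y_1, \dots, y_S \mid I, W)
  \;\ge\; \sum_{l} p(l \mid I, W) \sum_{s=1}^{S}
  \log p(y_s \mid y_1, \dots, y_{s-1}, l, I, W)
```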

Experiments:

To show the effectiveness of the deep recurrent attention model (DRAM), multi-object classification tasks are investigated on two different datasets: MNIST and multi-digit SVHN.

MNIST Dataset Results:

The method is evaluated on two main tasks using the MNIST dataset:

1) Learning to find digits

2) Learning to do addition: the model has to find where each digit is and add them up. The task is to predict the sum of the two digits in the image, posed as a classification problem.
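Posing addition as classification means the target class is the sum itself. This short sketch, with a hypothetical helper name, only illustrates the label arithmetic and not the model. It shows that two digits from 0 to 9 give 19 possible classes, 0 through 18.

```python
from itertools import product


def addition_label(first_digit: int, second_digit: int) -> int:
    """Hypothetical helper: the class label for the addition task is the digit sum."""
    if not (0 <= first_digit <= 9 and 0 <= second_digit <= 9):
        raise ValueError("digits must be in 0..9")
    return first_digit + second_digit


# Every pair of digits maps to one of the sums 0..18.
NUM_CLASSES = len({addition_label(a, b) for a, b in product(range(10), repeat=2)})
```

Several digit pairs share a class (for example 7 + 8 and 9 + 6). So the model must locate and recognize both digits rather than memorize one appearance per class.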

The results of both experiments are shown in Table 1 and Table 2. As the tables show, DRAM with a context network significantly outperforms the other models.

SVHN Dataset Results:

The publicly available multi-digit Street View House Numbers (SVHN) dataset consists of images of digits taken from pictures of house fronts. This experiment is more challenging; a model is trained to classify all the digits in an image sequentially.

Two different models are implemented in this experiment. First, the label sequence is ordered from left to right, the natural reading order of a house number. In this case, there is a performance gap between the state-of-the-art deep ConvNet and a single DRAM that “reads” from left to right. Therefore, a second recurrent attention model is trained as a backward model that “reads” the house numbers from right to left. The forward and backward models can share the same weights for their glimpse networks, but they have different weights for their recurrent and emission networks. The model performance is shown in Table 3:
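The forward/backward setup can be sketched in two parts. The label sequences differ only in reading order. The glimpse parameters are one shared object, while the recurrent and emission parameters are distinct per direction. The helper names, the use of digit x-coordinates to define the order, and the dictionary layout are hypothetical. How the two models' outputs are combined is not covered here.

```python
from typing import Dict, List, Tuple


def label_sequences(digits_with_x: List[Tuple[int, float]]) -> Tuple[List[int], List[int]]:
    """Forward order reads digits left to right; the backward model reads them right to left."""
    forward = [digit for digit, _ in sorted(digits_with_x, key=lambda t: t[1])]
    return forward, forward[::-1]


def build_models(shared_glimpse: Dict[str, list]) -> Dict[str, Dict[str, dict]]:
    """Both directions reference one glimpse-parameter object; other parameters are separate."""
    return {
        direction: {"glimpse": shared_glimpse, "recurrent": {}, "emission": {}}
        for direction in ("forward", "backward")
    }


forward_labels, backward_labels = label_sequences([(3, 30.0), (1, 10.0), (2, 20.0)])
models = build_models({"weights": [0.0]})
```

Sharing the glimpse network means the forward and backward models reuse the same "what/where" feature extractor. Only the way evidence is accumulated and where to look next differ between them.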

As shown in the table, the proposed deep recurrent attention model (DRAM) outperforms the state-of-the-art deep ConvNets on the standard SVHN sequence recognition task.

Discussion and Conclusion:

Because recurrent attention models process only a selected subset of the input, they have a lower computational cost than a ConvNet that looks over the entire image. They can also naturally work on images of different sizes, with a computational cost that is independent of the input dimensionality. Moreover, the attention-based model is less prone to over-fitting than ConvNets, likely because of the stochasticity in the glimpse policy during training.

Duvedi and Shah <ref> Duvedi C and Shah P. Multi-Glance Attention Models For Image Classification, http://vision.stanford.edu/teaching/cs231n/reports/cduvedi_report.pdf </ref> developed a two-glance approach that combines convolutional and recurrent neural networks. In this approach, an RNN generates a location for a glimpse within the image, and a CNN extracts features from a fixed-size glimpse at the selected location. The RNN then generates the location of the next glance. The process continues, combining the features of all relevant patches into a representation of the whole image.
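A back-of-the-envelope count illustrates why the cost of the glimpse pathway does not grow with image size. The values of 3 glimpses, 12x12 base patches and 2 scales are hypothetical. The count measures only input pixels, not FLOPs. It also ignores the context network, whose coarse input is assumed here to be down-sampled to a fixed size.

```python
from typing import Dict, Tuple


def glimpse_pixels(num_glimpses: int, base_size: int, num_scales: int) -> int:
    """Input pixels seen by the glimpse network: fixed by N, patch size and number of scales."""
    return num_glimpses * num_scales * base_size ** 2


def full_image_pixels(height: int, width: int) -> int:
    """A ConvNet applied to the whole image touches every pixel at least once."""
    return height * width


# Hypothetical settings: 3 glimpses, 12x12 base patches, 2 scales.
costs: Dict[int, Tuple[int, int]] = {
    side: (glimpse_pixels(3, 12, 2), full_image_pixels(side, side))
    for side in (64, 128, 256)
}
```

The glimpse count stays the same for every image size, while the full-image count grows with the square of the side length.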

References

<references />