ImageNet Classification with Deep Convolutional Neural Networks

Latest revision as of 09:46, 30 August 2017

Introduction

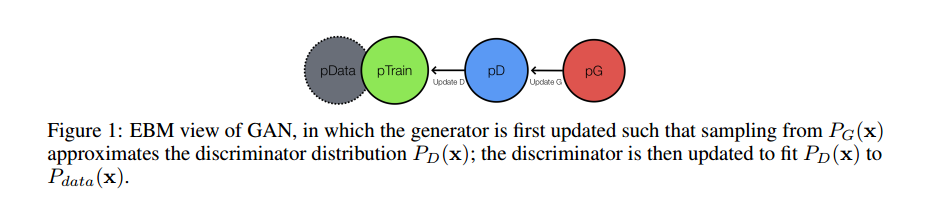

In this paper, the authors trained a large, deep convolutional neural network to classify the 1.2 million high-resolution images of the ImageNet LSVRC-2010 contest into 1000 different classes. To learn about thousands of objects from millions of images, they use a Convolutional Neural Network (CNN) because of its large learning capacity, its fewer connections and parameters compared with a fully connected network of similar size, and its outstanding performance on image classification.

Moreover, current GPUs provide a powerful tool to facilitate the training of interestingly large CNNs. They therefore trained one of the largest convolutional neural networks to date on the ILSVRC-2010 and ILSVRC-2012 datasets and achieved the best results reported on these datasets at the time the paper was written.

The code of their work is available here<ref> "High-performance C++/CUDA implementation of convolutional neural networks" </ref>.

Dataset

ImageNet Large-Scale Visual Recognition Challenge (ILSVRC) has roughly 1.2 million labeled high-resolution training images, 50 thousand validation images, and 150 thousand testing images over 1000 categories.

In this paper, the images in this dataset are down-sampled to a fixed resolution of 256 x 256. The only image pre-processing they used is subtracting the mean activity over the training set from each pixel.
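The mean-subtraction step can be sketched in a few lines. This is only an illustration on toy data: the function names `mean_image` and `subtract_mean` and the flat-list image representation are assumptions made for the example, not part of the authors' code.

```python
def mean_image(images):
    """Per-pixel mean over a list of equally sized images (flat lists of floats)."""
    n = len(images)
    return [sum(pixels) / n for pixels in zip(*images)]


def subtract_mean(image, mean):
    """Center one image by subtracting the training-set mean from each pixel."""
    return [p - m for p, m in zip(image, mean)]


# Three tiny "images" of four pixel values each (toy data, not ImageNet).
train = [
    [0.0, 10.0, 20.0, 30.0],
    [10.0, 20.0, 30.0, 40.0],
    [20.0, 30.0, 40.0, 50.0],
]
mean = mean_image(train)
centered = [subtract_mean(img, mean) for img in train]
```

The mean is computed once over the training set and then reused unchanged for validation and test images.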

Architecture

ReLU Nonlinearity

The non-saturating nonlinearity f(x) = max(0,x), also known as the Rectified Linear Unit (ReLU)<ref> Nair V, Hinton G E. Rectified linear units improve restricted boltzmann machines. Proceedings of the 27th International Conference on Machine Learning (ICML-10). 2010: 807-814. </ref>, is used as the nonlinearity function; networks using it train several times faster than equivalents with standard saturating neurons. Neural networks are usually ill-conditioned and converge very slowly. With piecewise-linear units such as rectifiers or max-pooling units, gradients flow along a few active paths instead of all possible paths, which results in faster convergence. Shorter training time per epoch in turn makes it practical to train on larger datasets, which helps to prevent overfitting. Deep convolutional neural networks with ReLUs train several times faster than their equivalents with tanh units. The following figure illustrates this: it shows the number of iterations required to reach 25% training error on the CIFAR-10 dataset for a particular four-layer convolutional network.

A four-layer convolutional neural network with ReLUs (solid line) reaches a 25% training error rate on CIFAR-10 six times faster than an equivalent network with tanh neurons (dashed line). The learning rates for each network were chosen independently to make training as fast as possible. No regularization of any kind was employed. The magnitude of the effect demonstrated here varies with network architecture, but networks with ReLUs consistently learn several times faster than equivalents with saturating neurons.
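A small numerical sketch may help show what "non-saturating" means here. It compares the derivative of f(x) = max(0,x) with that of tanh for growing positive inputs. The helper names are chosen for this example only, and the comparison illustrates the gradient behaviour, not the paper's CIFAR-10 experiment.

```python
import math


def relu(x):
    """Rectified linear unit, f(x) = max(0, x)."""
    return max(0.0, x)


def relu_grad(x):
    """Derivative of the ReLU: 1 for positive inputs, 0 otherwise."""
    return 1.0 if x > 0 else 0.0


def tanh_grad(x):
    """Derivative of tanh, which shrinks towards 0 as |x| grows (saturation)."""
    return 1.0 - math.tanh(x) ** 2


for x in (0.5, 2.0, 5.0):
    print(f"x={x}: relu'={relu_grad(x)}, tanh'={tanh_grad(x):.6f}")
```

For large positive inputs the tanh derivative becomes very small while the ReLU derivative stays at 1, so the error signal passed back through an active ReLU is not attenuated.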

Training on Multiple GPUs

They spread the net across two GPUs by putting half of the kernels (or neurons) on each GPU and letting the GPUs communicate only in certain layers. Choosing the pattern of connectivity is a problem for cross-validation, but restricting communication in this way lets them tune the amount of communication precisely until it is an acceptable fraction of the amount of computation.

Local Response Normalization

ReLUs have the desirable property that they do not require input normalization to prevent them from saturating. However, the authors find that a local response normalization scheme applied after the ReLU nonlinearity reduces their top-1 and top-5 error rates by 1.4% and 1.2%, respectively.

The response normalization is given by the expression

[math]\displaystyle{ b_{x,y}^{i}=a_{x,y}^{i}/\left ( k+\alpha \sum_{j=\max\left ( 0,i-n/2 \right )}^{\min\left ( N-1,i+n/2 \right )}\left ( a_{x,y}^{j} \right )^{2} \right )^{\beta } }[/math]

where [math]\displaystyle{ a_{x,y}^{i} }[/math] is the activity of the neuron computed by applying kernel i at position (x, y) followed by the ReLU nonlinearity, N is the total number of kernels in the layer, and the sum runs over n “adjacent” kernel maps at the same spatial position. This response normalization implements a form of lateral inhibition inspired by the type found in real neurons, creating competition for big activities amongst neuron outputs computed using different kernels.

The constants k, n, α, and β are hyper-parameters whose values are determined using a validation set; k = 2, n = 5, α = 10⁻⁴, and β = 0.75 were used in this research. This normalization was applied after the ReLU nonlinearity in certain layers.
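The normalization can be sketched for a single spatial position as follows. The function name `local_response_norm` is hypothetical, and the sketch assumes that n/2 is rounded down to an integer, so that n = 5 covers the kernel map itself plus two neighbours on each side, and that the sum is over the squared activities of those neighbouring kernel maps.

```python
def local_response_norm(a, k=2.0, n=5, alpha=1e-4, beta=0.75):
    """Normalize the activations of N kernel maps at one spatial position.

    a[i] is the ReLU output of kernel map i at a fixed (x, y).
    Assumption: n / 2 is taken as integer division.
    """
    num_maps = len(a)
    half = n // 2
    b = []
    for i in range(num_maps):
        lo = max(0, i - half)
        hi = min(num_maps - 1, i + half)
        # Sum of squared activities over the adjacent kernel maps j.
        sq_sum = sum(a[j] ** 2 for j in range(lo, hi + 1))
        b.append(a[i] / (k + alpha * sq_sum) ** beta)
    return b


# A large activity in a neighbouring map suppresses the response of map 0.
quiet = local_response_norm([1.0, 0.0])
busy = local_response_norm([1.0, 100.0])
```

Comparing `quiet[0]` with `busy[0]` shows the lateral-inhibition effect: the same activity of 1.0 is scaled down more when an adjacent kernel map responds strongly.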

Overlapping Pooling

Unlike traditional non-overlapping pooling, where the stride s equals the window size z, they use overlapping pooling throughout their network, with pooling window size z = 3 and stride s = 2. This scheme reduces their top-1 and top-5 error rates by 0.4% and 0.3%, respectively, and makes the network slightly more difficult to overfit.
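As a rough sketch of the difference, the functions below apply max pooling with a window of size z and stride s to plain Python lists. With z = 3 and s = 2, neighbouring windows share one row or column; with z = 2 and s = 2 they do not overlap. The function names are illustrative only, and no padding is assumed.

```python
def max_pool_1d(row, z=3, s=2):
    """Max pooling over a 1-D list with window size z and stride s (no padding)."""
    return [max(row[i:i + z]) for i in range(0, len(row) - z + 1, s)]


def max_pool_2d(img, z=3, s=2):
    """Max pooling over a 2-D list of lists with a z x z window and stride s."""
    h, w = len(img), len(img[0])
    return [
        [max(img[r + dr][c + dc] for dr in range(z) for dc in range(z))
         for c in range(0, w - z + 1, s)]
        for r in range(0, h - z + 1, s)
    ]


def pooled_size(size, z=3, s=2):
    """Output length along one dimension for an input of the given length."""
    return (size - z) // s + 1


row = [1, 5, 2, 0, 3, 4, 1]
overlapping = max_pool_1d(row, z=3, s=2)      # windows [1,5,2], [2,0,3], [3,4,1]
non_overlapping = max_pool_1d(row, z=2, s=2)  # windows [1,5], [2,0], [3,4]
```

In the overlapping case the value at index 2 is seen by two windows, which is what distinguishes this scheme from the traditional one.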

Overall Architecture

As shown in the figure above, the net contains eight layers with 60 million parameters; the first five are convolutional and the remaining three are fully connected layers. The first convolutional layer filters the 224 × 224 × 3 input image with 96 kernels of size 11 × 11 × 3 with a stride of 4 pixels (this is the distance between the receptive field centers of neighboring neurons in a kernel map). The second convolutional layer takes as input the (response-normalized and pooled) output of the first convolutional layer and filters it with 256 kernels of size 5 × 5 × 48. The third, fourth, and fifth convolutional layers are connected to one another without any intervening pooling or normalization layers. The third convolutional layer has 384 kernels of size 3 × 3 × 256 connected to the (normalized, pooled) outputs of the second convolutional layer. The fourth convolutional layer has 384 kernels of size 3 × 3 × 192, and the fifth convolutional layer has 256 kernels of size 3 × 3 × 192. The fully-connected layers have 4096 neurons each. The output of the last layer is fed to a 1000-way softmax. Their network maximizes the average across training cases of the log-probability of the correct label under the prediction distribution.

Response-normalization layers follow the first and second convolutional layers. Max-pooling layers follow both response-normalization layers as well as the fifth convolutional layer. The ReLU non-linearity is applied to the output of every convolutional and fully-connected layer.
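The figure of roughly 60 million parameters can be checked with a short calculation from the kernel sizes listed above. Two things in this sketch are assumptions not stated in this summary: that the first fully-connected layer receives a 6 × 6 × 256 input from the last pooling layer, and that every kernel and every fully-connected neuron has exactly one bias.

```python
# (name, number of kernels, kernel height, kernel width, input depth per kernel)
CONV_LAYERS = [
    ("conv1", 96, 11, 11, 3),
    ("conv2", 256, 5, 5, 48),
    ("conv3", 384, 3, 3, 256),
    ("conv4", 384, 3, 3, 192),
    ("conv5", 256, 3, 3, 192),
]

# (name, number of inputs, number of outputs)
# Assumption: the pooled output of conv5 is 6 x 6 x 256 = 9216 values.
FC_LAYERS = [
    ("fc6", 6 * 6 * 256, 4096),
    ("fc7", 4096, 4096),
    ("fc8", 4096, 1000),
]


def count_parameters():
    """Return a dict of weights-plus-biases per layer."""
    counts = {}
    for name, kernels, h, w, depth in CONV_LAYERS:
        counts[name] = kernels * (h * w * depth) + kernels
    for name, n_in, n_out in FC_LAYERS:
        counts[name] = n_in * n_out + n_out
    return counts


counts = count_parameters()
total = sum(counts.values())
print(total)
```

Under these assumptions the total comes to about 61 million, and the three fully-connected layers account for the large majority of it.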

Reducing Overfitting

Data Augmentation

The easiest and most common method to reduce overfitting on image data is to artificially enlarge the dataset using label-preserving transformations. In this paper, the transformed images are generated on the CPU while the GPU is training on the previous batch, so they do not need to be stored on disk.

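The first form of data augmentation consists of generating image translations and horizontal reflections. They extract random 224 x 224 patches (and their horizontal reflections) from the 256 x 256 images and train the network on these extracted patches.

The second form alters the intensities of the RGB channels in the training images. They perform principal components analysis (PCA) on the set of RGB pixel values throughout the training set. To each training image, they add multiples of the found principal components, with magnitudes proportional to the corresponding eigenvalues times a random variable drawn from a Gaussian with mean zero and standard deviation 0.1. Therefore, to each RGB image pixel the following quantity is added:

[math]\displaystyle{ \left [ p_{1},p_{2},p_{3} \right ]\left [ \alpha_{1}\lambda_{1},\alpha_{2}\lambda_{2},\alpha_{3}\lambda_{3} \right ]^{T} }[/math]

where [math]\displaystyle{ p_{i} }[/math] and [math]\displaystyle{ \lambda_{i} }[/math] are the i-th eigenvector and eigenvalue of the 3 × 3 covariance matrix of RGB pixel values, and [math]\displaystyle{ \alpha_{i} }[/math] is the Gaussian random variable described above.

This scheme approximately captures the fact that object identity is invariant to changes in the intensity and color of the illumination, and it reduces the top-1 error rate by over 1%.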
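Both forms of augmentation can be sketched with the standard library. The function names, the list-of-rows image representation, and the way the eigenvectors and eigenvalues are passed in are assumptions made for this example. The PCA itself is not computed here: `pca_color_offset` only forms the quantity added to each pixel, given eigenvectors p_i and eigenvalues lambda_i of the RGB covariance matrix and Gaussian draws alpha_i with standard deviation 0.1.

```python
import random


def random_crop_and_flip(image, crop=224, rng=random):
    """Take a random crop x crop patch and mirror it horizontally half the time.

    image is a list of rows, each row a list of pixels.
    """
    h, w = len(image), len(image[0])
    top = rng.randint(0, h - crop)
    left = rng.randint(0, w - crop)
    patch = [row[left:left + crop] for row in image[top:top + crop]]
    if rng.random() < 0.5:
        patch = [row[::-1] for row in patch]
    return patch


def pca_color_offset(eigvecs, eigvals, rng=random, sigma=0.1):
    """Return the RGB offset sum_i p_i * alpha_i * lambda_i.

    eigvecs[i] is the i-th eigenvector (3 values), eigvals[i] its eigenvalue.
    """
    alphas = [rng.gauss(0.0, sigma) for _ in eigvals]
    return [
        sum(p[ch] * a * lam for p, a, lam in zip(eigvecs, alphas, eigvals))
        for ch in range(3)
    ]


rng = random.Random(0)
# Toy 256 x 256 "image" whose pixels record their own (row, column) position.
image = [[(r, c) for c in range(256)] for r in range(256)]
patch = random_crop_and_flip(image, rng=rng)
```

In this sketch one offset would be drawn per training image and added to every pixel of that image, which matches the description above of the random variable being applied per image.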

Dropout

The “dropout” technique is implemented in the first two fully-connected layers by setting to zero the output of each hidden neuron with probability 0.5. This scheme roughly doubles the number of iterations required to converge. However, it forces the network to learn more robust features that are useful in conjunction with many different random subsets of the other neurons.
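A minimal sketch of dropout with probability 0.5 is given below. The function names are illustrative. The test-time rule of using all neurons and multiplying their outputs by 0.5 is not stated in this summary; it is included here as the usual counterpart of this form of dropout and should be read as an assumption of the sketch.

```python
import random


def dropout_train(activations, p_drop=0.5, rng=random):
    """Training time: zero each hidden output independently with probability p_drop."""
    return [0.0 if rng.random() < p_drop else a for a in activations]


def dropout_test(activations, p_drop=0.5):
    """Test time (assumed rule): keep every neuron, scale outputs by 1 - p_drop."""
    return [a * (1.0 - p_drop) for a in activations]


rng = random.Random(0)
hidden = [1.0] * 1000
dropped = dropout_train(hidden, rng=rng)
kept = sum(1 for a in dropped if a != 0.0)
print(f"{kept} of {len(hidden)} units kept in this pass")
```

Because a different random subset of neurons is active on every presentation of an input, a neuron cannot rely on the presence of particular other neurons.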

Details of Learning

They trained the network using stochastic gradient descent with a batch size of 128 examples, momentum of 0.9, and weight decay of 0.0005. The update rule for weight w was

[math]\displaystyle{ v_{i+1}:=0.9\cdot v_{i}-0.0005\cdot \epsilon \cdot w_{i}-\epsilon \cdot \left \langle \frac{\partial L}{\partial w}|_{w_{i}} \right \rangle_{D_{i}} }[/math]

[math]\displaystyle{ w_{i+1}:=w_{i}+v_{i+1} }[/math]

where i is the iteration index, [math]\displaystyle{ v }[/math] is the momentum variable, [math]\displaystyle{ \epsilon }[/math] is the learning rate, and [math]\displaystyle{ \left \langle \frac{\partial L}{\partial w}|_{w_{i}} \right \rangle_{D_{i}} }[/math] is the average over the i-th batch [math]\displaystyle{ D_{i} }[/math] of the derivative of the objective with respect to w, evaluated at [math]\displaystyle{ w_{i} }[/math]. The weights in each layer are initialized from a zero-mean Gaussian distribution with standard deviation 0.01. The biases in the second, fourth, and fifth convolutional layers and in the fully-connected hidden layers are initialized to 1, which accelerates the early stages of learning by providing the ReLUs with positive inputs; the biases in the remaining layers are initialized to 0. Initializing the network with sparse weights is another factor that reduces the ill-conditioning issue and helps this network work well.

An equal learning rate was used for all layers and was adjusted manually throughout training. The heuristic was to divide the learning rate by 10 when the validation error rate stopped improving at the current learning rate. The learning rate was initialized at 0.01 and reduced three times prior to termination. The network was trained for roughly 90 cycles through the training set of 1.2 million images, which took five to six days on two NVIDIA GTX 580 3GB GPUs.
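The update rule above translates directly into code for a single scalar weight. The function names are chosen for this sketch, and `grad` stands for the batch-averaged derivative of the objective with respect to w. The second helper only lists the learning rates that result from starting at 0.01 and dividing by 10 three times; in the paper the moments of reduction were chosen manually from the validation error.

```python
def sgd_momentum_step(w, v, grad, lr, momentum=0.9, weight_decay=0.0005):
    """One update: v <- 0.9*v - 0.0005*lr*w - lr*grad, then w <- w + v."""
    v_next = momentum * v - weight_decay * lr * w - lr * grad
    w_next = w + v_next
    return w_next, v_next


def decayed_learning_rates(initial=0.01, reductions=3, factor=10.0):
    """Learning rates obtained by dividing the initial rate by `factor` repeatedly."""
    rates = [initial]
    for _ in range(reductions):
        rates.append(rates[-1] / factor)
    return rates


# One step from w = 1.0 with zero initial momentum and a gradient of 2.0.
w, v = sgd_momentum_step(w=1.0, v=0.0, grad=2.0, lr=0.01)
rates = decayed_learning_rates()
```

Note that the weight decay term is multiplied by the learning rate, so it shrinks together with the step size when the learning rate is reduced.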

Results

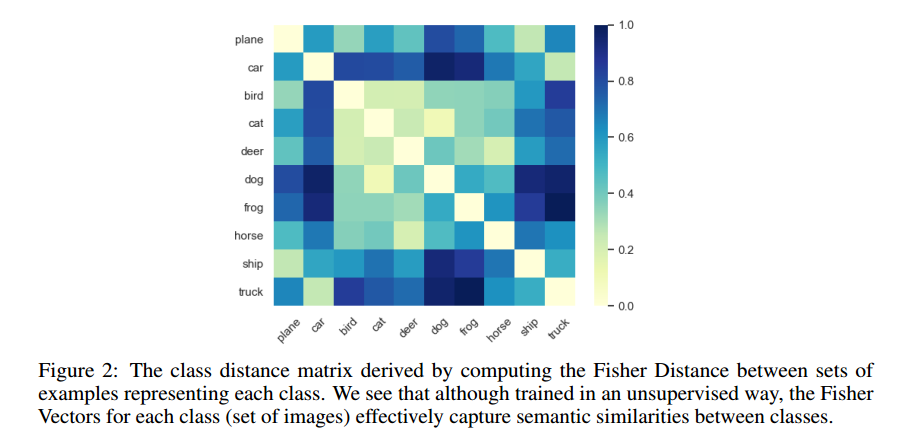

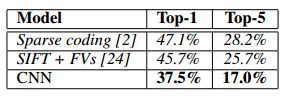

On the ILSVRC-2010 dataset, their network achieves top-1 and top-5 test set error rates of 37.5% and 17.0%, respectively, which was the state of the art at that time.
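For readers unfamiliar with the two metrics: the top-k error rate is the fraction of test images whose correct label is not among the k labels the model considers most probable. A minimal sketch, with a hypothetical function name and toy scores over three classes rather than 1000:

```python
def top_k_error(scores, labels, k):
    """Fraction of examples whose true label is not among the k highest scores.

    scores[i] is the list of class scores for example i, labels[i] its true class.
    """
    errors = 0
    for row, label in zip(scores, labels):
        ranked = sorted(range(len(row)), key=lambda c: row[c], reverse=True)
        if label not in ranked[:k]:
            errors += 1
    return errors / len(labels)


scores = [
    [0.1, 0.7, 0.2],  # most probable class: 1
    [0.5, 0.3, 0.2],  # most probable class: 0, second: 1
    [0.2, 0.3, 0.5],  # most probable class: 2, second: 1
]
labels = [1, 1, 0]
top1 = top_k_error(scores, labels, k=1)
top2 = top_k_error(scores, labels, k=2)
```

In this toy example the second image is a top-1 error but not a top-2 error, because its true class has the second-highest score.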

The following table shows the results.

Comparison of results on the ILSVRC-2010 test set. In italics are the best results achieved by others.

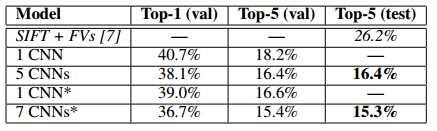

On the ILSVRC-2012 dataset, the CNN described in this paper achieves a top-5 error rate of 18.2%. Averaging the predictions of five similar CNNs gives an error rate of 16.4%. The following table summarizes the results for the ILSVRC-2012 dataset:

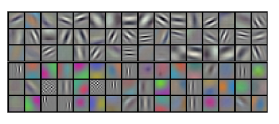
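Averaging the predictions of several networks can be sketched as taking the mean of their class-probability vectors for an image and then picking the most probable class. The function names and the toy probabilities are assumptions for illustration; the summary does not specify exactly how the five CNNs' outputs were combined beyond "averaging the predictions".

```python
def average_predictions(model_probs):
    """Average class probabilities for one image across several models.

    model_probs is a list with one class-probability list per model.
    """
    n = len(model_probs)
    return [sum(p) / n for p in zip(*model_probs)]


def predicted_class(probs):
    """Index of the most probable class."""
    return max(range(len(probs)), key=lambda c: probs[c])


# Three hypothetical models disagree; the average settles on class 1.
per_model = [
    [0.6, 0.4],
    [0.2, 0.8],
    [0.3, 0.7],
]
ensemble = average_predictions(per_model)
winner = predicted_class(ensemble)
```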

The following figure shows the learnt kernels.

96 convolutional kernels of size 11×11×3 learned by the first convolutional layer on the 224×224×3 input images. The top 48 kernels were learned on GPU 1 while the bottom 48 kernels were learned on GPU 2. See Section 6.1 of the paper for details.

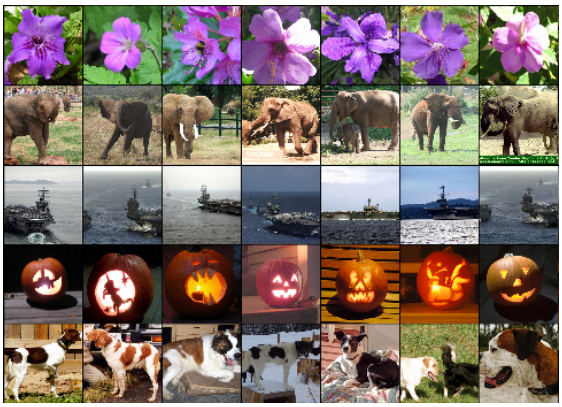

Image Retrieval

The convolutional network predicts an image's class from the activations of the last hidden layer, which has 4096 nodes. If two images produce very similar activation values for these 4096 hidden nodes, the network predicts the same class for both and effectively treats them as very similar images. Since the results show that the network is quite accurate, we can expect two images with very similar activations in the last hidden layer to belong to the same class.

Based on this, the network provides an excellent way of mapping images to 4096-dimensional vectors such that images of the same class have similar vectors. After the network has been trained, images can be fed into it and their 4096-dimensional vectors stored. Image retrieval, i.e. retrieving images similar to a query image, then becomes a matter of finding stored images whose vectors are close to the query's vector under a measure such as Euclidean distance. The researchers demonstrated this by retrieving, for several query images, the images with the closest vectors in Euclidean distance.

This has a strong advantage over auto-encoder methods applied to raw pixels in that it accounts for the meaning of the image, i.e. the type of object, rather than just similarities in colour or shape: despite large differences in shading, angle, and colour, the retrieved images contained the same object as the query. One issue is that computing Euclidean distances between large numbers of 4096-dimensional vectors is inefficient. The researchers therefore proposed training an auto-encoder to compress these vectors to short binary codes, so that every image is mapped to a code of 0s and 1s.
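The retrieval step itself is a nearest-neighbour search over stored feature vectors. The sketch below uses two-dimensional toy vectors in place of the 4096-dimensional activations, and the names `euclidean`, `retrieve`, and the image identifiers are hypothetical. It performs a brute-force search, which is exactly the cost the binary codes are meant to avoid.

```python
import math


def euclidean(u, v):
    """Euclidean distance between two feature vectors of equal length."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def retrieve(query, database, top=3):
    """Return the ids of the `top` stored images closest to the query vector.

    database maps an image id to its stored feature vector.
    """
    ranked = sorted(database, key=lambda name: euclidean(query, database[name]))
    return ranked[:top]


# Toy 2-D features standing in for last-hidden-layer activations.
database = {
    "a": [0.0, 0.0],
    "b": [1.0, 1.0],
    "c": [5.0, 5.0],
}
nearest = retrieve([0.9, 0.9], database, top=2)
```

Each query here costs one distance computation per stored image, so the work grows linearly with both the database size and the vector length.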

Discussion

1. The main techniques that allowed this success include the following: efficient GPU training, a large number of labeled examples, a convolutional architecture with max-pooling, rectifying non-linearities, careful initialization, careful parameter updates and adaptive learning rate heuristics, layerwise feature normalization, and a dropout trick based on injecting strong binary multiplicative noise on hidden units.

2. It is notable that their network’s performance degrades if a single convolutional layer is removed. So the depth of the network is important for achieving their results.

3. Their experiments suggest that the results can be improved simply by waiting for faster GPUs and bigger datasets to become available.

Bibliography

<references />