memory Networks: Difference between revisions

No edit summary |

m (Conversion script moved page Memory Networks to memory Networks: Converting page titles to lowercase) |

||

| (23 intermediate revisions by 3 users not shown) | |||

| Line 1: | Line 1: | ||

= Introduction = | = Introduction = | ||

Most supervised machine learning models are designed to approximate a function that maps input data to a desirable output (e.g. a class label for an image or a translation of a sentence from one language to another). In this sense, | Most supervised machine learning models are designed to approximate a function that maps input data to a desirable output (e.g. a class label for an image or a translation of a sentence from one language to another). In this sense, | ||

such models perform inference using a 'fixed' memory in the form of a set of parameters learned during training. For example, the memory of a recurrent neural network is constituted largely by the weights on the recurrent connections to its hidden layer (along with the layer's activities). As is well known, this form of memory is inherently limited given the fixed dimensionality of the weights in question. It is largely for this reason that recurrent nets have difficulty learning long-range dependencies in sequential data. Learning such dependencies, note, requires ''remembering'' | such models perform inference using a 'fixed' memory in the form of a set of parameters learned during training. For example, the memory of a recurrent neural network is constituted largely by the weights on the recurrent connections to its hidden layer (along with the layer's activities). As is well known, this form of memory is inherently limited given the fixed dimensionality of the weights in question. It is largely for this reason that recurrent nets have difficulty learning long-range dependencies in sequential data. Learning such dependencies, note, requires ''remembering'' items in a sequence for a large number of time steps. | ||

For an interesting class of problems, it is essential for a model to be able to learn long-term dependencies, and to more generally be able to learn to perform inferences using an arbitrarily large memory. Question-answering tasks are paradigmatic of this class of problems, since performing well on such tasks requires remembering all of the information that constitutes a possible answer to the questions being posed. In principle, a recurrent network such as an LSTM could learn to perform QA tasks, but in practice, the amount of information that can be retained by the weights and the hidden states in the LSTM is simply insufficient. | For an interesting class of problems, it is essential for a model to be able to learn long-term dependencies, and to more generally be able to learn to perform inferences using an arbitrarily large memory. Question-answering tasks are paradigmatic of this class of problems, since performing well on such tasks requires remembering all of the information that constitutes a possible answer to the questions being posed. In principle, a recurrent network such as an LSTM could learn to perform QA tasks, but in practice, the amount of information that can be retained by the weights and the hidden states in the LSTM is simply insufficient. | ||

| Line 10: | Line 8: | ||

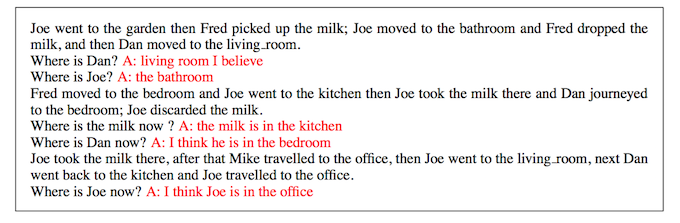

Given this need for a model architecture the combines inference and memory in a sophisticated manner, the authors of this paper propose what they refer to as a "Memory Network". In brief, a memory network is a model that learns to read and write data to an arbitrarily large long-term memory, while also using the data in this memory to perform inferences. The rest of this summary describes the components of a memory network in greater detail, along with some experiments describing its application to a question answering task involving short stories. Below is an example illustrating the model's ability to answer simple questions after being presented with short, multi-sentence stories. | Given this need for a model architecture the combines inference and memory in a sophisticated manner, the authors of this paper propose what they refer to as a "Memory Network". In brief, a memory network is a model that learns to read and write data to an arbitrarily large long-term memory, while also using the data in this memory to perform inferences. The rest of this summary describes the components of a memory network in greater detail, along with some experiments describing its application to a question answering task involving short stories. Below is an example illustrating the model's ability to answer simple questions after being presented with short, multi-sentence stories. | ||

[[File:QA_example.png | frame | centre | Example answers (in red) using a memory network for | [[File:QA_example.png | frame | centre | Example answers (in red) using a memory network for question answering. ]] | ||

= Model Architecture = | = Model Architecture = | ||

| Line 20: | Line 18: | ||

*<math>\ R</math> produces a response given an output feature representation. This response is usually a word or a sentence, but in principle it could also be an action of some kind (e.g. the movement of a robot) | *<math>\ R</math> produces a response given an output feature representation. This response is usually a word or a sentence, but in principle it could also be an action of some kind (e.g. the movement of a robot) | ||

To give a quick overview of how the model operates, an input ''x'' will first be mapped to a feature representation <math>\ I(x)</math> Then, for all memories ''i | To give a quick overview of how the model operates, an input ''x'' will first be mapped to a feature representation <math>\ I(x)</math> Then, for all memories ''i'', the following update is applied: <math>\ m_i = G(m_i, I(x), m) </math>. This means that each memory is updated on the basis of the input ''x'' and the current state of the memory <math>\ m</math>. In the case where each input is simply written to memory, <math>\ G</math> might function to simply select an index that is currently unused and write <math>\ I(x)</math> to the memory location corresponding to this index. Next, an output feature representation is computed as <math>\ o=O(I(x), m)</math>, and a response, <math>\ r</math>, is computed directly from this feature representation as <math>\ r=R(o)</math>. <math>\ O</math> can be interpreted as retrieving a small selection of memories that are relevant to producing a good response, and <math>\ R</math> actually produces the response given the feature representation produced from the relevant memories by <math>\ O</math>. | ||

= A Basic Implementation = | = A Basic Implementation = | ||

In a simple version of the memory network, input text is just written to memory in unaltered form. Or in other words, <math>\ I(x) </math> simply returns ''x'', and <math>\ G </math> writes this text to a new memory slot <math>\ m_{N+1} </math> if <math>\ N </math> is the number of currently filled slots. The memory is accordingly an array of strings, and the inclusion of a new string does nothing to modify existing strings. | |||

Given as much, most of the work being done by the model is performed by the functions <math>\ O </math> and <math>\ R </math>. The job of <math>\ O </math> is to produce an output feature representation by selecting <math>\ k </math> supporting memories from <math>\ m </math> on the basis of the input ''x''. In the experiments described in this paper, <math>\ k </math> is set to either 1 or 2. In the case that <math>\ k=1 </math>, the function <math>\ O </math> behaves as follows: | |||

:<math>\ o_1 = O_1(x, m) = argmax_{i = 1 ... N} S_O(x, m_i) </math> | |||

where <math>\ S_O </math> is a function that scores a candidate memory for its compatibility with ''x''. Essentially, one 'supporting' memory is selected from <math>\ m </math> as being most likely to contain the information needed to answer the question posed in <math>\ x </math>. In this case, the output is <math>\ o_1 = [x, m_{o_1}] </math>, or a list containing the input question and one supporting memory. Alternatively, in the case that <math>\ k=2 </math>', a second supporting memory is selected on the basis of the input and the first supporting memory, as follows: | |||

:<math>\ o_2 = O_2(x, m) = argmax_{i = 1 ... N} S_O([x, m_{o_1}], m_i) </math> | |||

Now, the overall output is <math>\ o_2 = [x, m_{o_1}, m_{o_2}] </math>. (These lists are translated into feature representations as described below). Finally, the result of <math>\ O </math> is used to produce a response in the form of a single word via <math>\ R </math> as follows: | |||

:<math>\ r = argmax_{w \epsilon W} S_R([x, m_{o_1}, m_{o_2}], w) </math> | |||

In short, a response is produced by scoring each word in a set of candidate words against the representation produced by the combination of the input and the two supporting memories. The highest scoring candidate word is then chosen as the model's output. The learned portions of <math>\ O </math> and <math>\ R </math> are the parameters of the functions <math>\ S_O </math> and <math>\ S_R </math>, which perform embeddings of the raw text constituting each function argument, and then return the dot product of these two embeddings as a score. Formally, the function <math>\ S_O </math> can be defined as follows; <math>\ S_R </math> is defined analogously: | |||

:<math>\ S_O(x, y) = \Phi_x(x)^T U^T U \Phi_y(y) </math> | |||

In this equation, <math>\ U </math> is an <math>\ n \times D </math> matrix, where ''n'' is the dimension of the embedding space, and ''D'' is the number of features used to represent each function argument. <math>\ \Phi_x</math> and <math>\ \Phi_y </math> are functions that map each argument (which are strings) into the feature space. In the implementations considered in this paper, the feature space makes use of a bag-of-words representation, such that there are 3 binary features for each word in the model's vocabulary. The first feature corresponds to the presence of the word in the input ''x'', the second feature corresponds to the presence of the word in first supporting memory that is being used to select a second supporting memory, and the third feature representation corresponds to the presence of the word in a candidate memory being scored (i.e. either the first or second supporting memory retrieved by the model). Having these different features allows the model to learn distinct representations for the same word depending on whether the word is present in an input question or in a string stored in memory. | |||

Intuitively, it helps to think of the columns of <math>\ U </math> containing distributed representations of each word in the vocabulary (specifically, there are 3 representations and hence 3 columns devoted to each word). The binary feature representation <math>\ \Phi_x(x)</math> maps the text in ''x'' onto a binary feature vector, where 1's in the vector indicate the presence of a particular word in ''x'', and 0's indicate the absence of this word. Note that different elements of the vector will be set to 1 depending on whether the word occurs in the input ''x'' or in a supporting memory (i.e. when ''x'' is a list containing the input and a supporting memory). The matrix-vector multiplications in the above equation effectively extract and sum the distributed representations corresponding to each of the inputs, ''x'' and ''y''. Thus, a single distributed representation is produced for each input, and the resulting score is the dot product of these two vectors (which in turn is the cosine of the angle between the vectors scaled by the product of the vector norms). In the case where ''x'' is the input query, and ''y'' is a candidate memory, a high dot product indicates that the model thinks that the candidate in question is very relevant to answering the input query. In the case where ''x'' is the output of <math>\ O</math> and ''y'' is a candidate response word, a high dot product indicates that the model thinks that the response word is an appropriate answer given the output feature representation produced by <math>\ O</math>. Distinct embedding matrices <math>\ U_O </math> and <math>\ U_R </math> are used to compute the output feature representation and the response. | |||

The goal of learning is find embedding matrices in which the representations produced for queries, supporting memories, and responses are spatially related such that representations of relevant supporting memories are close to the representations of a query, and such that representations of individual words are close to the output feature representations of the questions they answer. The method used to perform this learning is described in the next section. | |||

= The Training Procedure = | = The Training Procedure = | ||

= | Learning is conducted in a supervised manner; the correct responses and supporting memories for each query are provided during training. The following margin-ranking loss function is used in tandem with stochastic gradient descent to learn the parameters of <math>\ U_O </math> and <math>\ U_R </math>, given an input ''x'', a desired response ''r'', and desired supporting memories, <math>\ m_{o_1}</math> and <math>\ m_{o_2}</math>: | ||

:<math> \sum_{f \neq m_{o_1}} max(0, \gamma + S_O (x, f) - S_O (x, m_{o_1})) + \sum_{f^' \neq m_{o_2}} max(0, \gamma + S_O ([x, m_{o_1}], f^') - S_O ([x, m_{o_1}], m_{o_2})) + </math> | |||

:<math> \sum_{r^' \neq r} max(0, \gamma + S_R ([x, m_{o_1}, m_{o_2}], r^') - S_R ([x, m_{o_1}, m_{o_2}], r)) </math> | |||

where <math>\ f</math>, <math>\ f^'</math> and <math>\ r^'</math> correspond to incorrect candidates for the first supporting memory, the second supporting memory, and the output response, and <math> \gamma</math> corresponds to the margin. Intuitively, each term in the sum penalizes the current parameters in proportion to the number of incorrect memories and responses that get assigned a score within the margin of the score of the correct memories and responses. Or in other words, if the score of a correct candidate memory / response is higher than the score of every incorrect candidate by at least <math> \gamma </math>, the cost is 0. Otherwise, the cost is the sum over all of the differences between the incorrect scores (plus gamma) and the correct score. In fact, this is just the standard hinge loss function. Weston et al. speed up gradient descent by sampling incorrect candidates instead of using all incorrect candidates in the calculation of the gradient for each training example. | |||

= Extensions to the Basic Implementation = | |||

Some limitations of the basic implementation are that it can only output single word responses, can only accept strings (rather than sequences) as input, and cannot use its memory in efficient or otherwise interesting ways. The authors propose a series of extensions to the basic implementation described in the previous section that are designed to overcome these limitations. First, they propose a segmenting function that learns when to segment an input sequence into discrete chunks that get written to individual memory slots. The segmenter is modeled similarly to other components, as an embedding model of the form: | |||

<math> | |||

seg(c)=W^T_{seg}U_s\Phi_{seg}(c) | |||

</math> | |||

where <math>W_{seg}</math> is a vector (effectively the parameters of a linear classifier in embedding space), and <math>c</math> is the sequence of input words represented as a bag of words using a separate dictionary. If <math>seg(c) > \gamma</math>, where <math>\gamma</math> is the margin, then this sequence is recognized as a segment. | |||

Second, they propose the use of hashing to avoid scoring a prohibitively large number of candidate memories. Each input corresponding to a query is hashed into some number of buckets, and only candidates within these buckets are scored during the selection of supporting memories. Hashing is done either by making a bucket per word in the model's vocabulary, or by clustering the learning word embeddings, and creating a bucket per cluster. | |||

The most important extension proposed by the authors involves incorporating information about the time at which a memory was written into the scoring function <math>/ S_O </math>. The model needs to be able to make use of such information to correctly answer questions such as "Where was John before the university" (assuming the model has been told some story about John). To handle temporal information, the feature space is extend to include features that indicate the relative time between when two items where written to memory. Formally, this yields the following revised scoring function: | |||

<math>\ S_{O_t}(x, y, y^') = \Phi_x(x)^T U^T U (\Phi_y(y)-\Phi_y(y^')+\Phi_t(x,y,y^'))</math> | |||

The novelty here lies in the feature mapping function <math> \Phi_t </math>, which takes an input and two candidate supporting memories, and returns a binary feature vector as before, but with the addition of three features that indicate whether <math>x</math> is older than <math>y</math>, whether <math>x</math> is older than <math>y^'</math>, and whether <math>y</math> is older than <math>y'</math>. The model loops over all candidate memories, comparing candidates <math>y</math> and <math>y^'</math>. If <math> S_{O_t}(x, y, y^') </math> is greater than 0, then <math>y</math> is preferred over <math>y^'</math>; otherwise, <math>y'</math> is preferred. If <math>y'</math> is preferred, <math>y</math> is replaced by <math>y'</math> and the loop continues to the next candidate memory (i.e. the new <math>y^'</math>. Once the loop finishes iterating over the entire memory, the winning candidate <math>y</math> is chosen as the supporting memory. | |||

Some further extensions concern allowing the model to deal with words not included in it's vocabulary, and to more effectively take advantage of exact word matches between input queries and candidate supporting memories. | |||

Embedding models cannot efficiently use exact word matches due to the low dimensionality <math>n</math>. One solution is to score a pair <math>x,y</math> with <math>\ \Phi_x(x)U^TU\Phi_y(y)+\lambda\Phi_x(x)^T\Phi_y(y) </math> instead. That is, add the “bag of words” matching score to the learned embedding score (with a mixing parameter λ). Another related way is to stay in the n-dimensional embedding space, but to extend the feature representation D with matching features, e.g., one per word. A matching feature indicates if a word occurs in both x and y. That is, we score with <math>\ \Phi_x(x)U^TU\Phi_y(y,x)</math> where <math>\ \Phi_y</math> is actually built conditionally on x: if some of the words in y match the words in x we set those matching features to 1. Unseen words can be modeled similarly by using matching features on their context words. This then gives a feature space of D = 8|W|. | |||

= Related work = | = Related work = | ||

There are two general approaches to performing question answering that have been developed in the literature. The first makes use of a technique known as semantic parsing to map a query expressed in natural language onto a representation in some formal language that directly extracts information from some external memory such as a knowledge base<ref>J. Berant, A. Chou, R. Frostig, and P. Liang. [http://cs.stanford.edu/~pliang/papers/freebase-emnlp2013.pdf "Semantic parsing on Freebase from question-answer pairs."] . In Proceedings of the 2013 Conference on Empirical Methods in Natural Language Processing, October 2013.</ref><ref>P. Liang, M. Jordan, and D. Klein. [http://www.mitpressjournals.org/doi/pdf/10.1162/COLI_a_00127 "Learning dependency-based compositional semantics"]. In Computational Linguistics, 39.2, p. 389-446. </ref>. The second makes use of embedding methods to represent queries and candidate answers (typically extracted from a knowledge base) as high-dimensional vectors. Learning involves producing embeddings that place query vectors close to the vectors that correspond to their answers. <ref>Bordes, A., S. Chopra, and J. Weston. [http://www.thespermwhale.com/jaseweston/papers/fbqa.pdf "Question Answering with Subgraph Embeddings"]. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing, (2014)</ref>. Memory networks fall under the latter approach, and existing variants of this approach can been seen as special cases of the memory network architecture (e.g., <ref>Bordes, A., S. Chopra, and J. Weston. [http://www.thespermwhale.com/jaseweston/papers/fbqa.pdf "Question Answering with Subgraph Embeddings"]. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing, (2014)</ref>) | |||

Compared to recent knowledge based approaches memory networks differ in that they do not apply a two-stage strategy: (i) apply information extraction methods to first build the KB; followed by (ii) inference over the the KB. Classical neural network models have also been explored. Typically, this type of memory is distributed across the whole network of weights of the model rather than being compartmentalized into memory locations. Memory networks combine compartmentalized memory with neural network modules that can learn how to read and write to that memory, e.g., to perform reasoning they can interactively read salient facts from the memory. | |||

= Experimental Results = | = Experimental Results = | ||

The authors first test a simple memory network (i.e. <math>\ k=1 </math> on a large scale question answering task involving a dataset consisting of 14 million subject-relation-object triplets. Each triplet is stored as an item in memory, and the answers to particular questions are a single entity (i.e. a subject or object) in one these triplets. The results in the table below indicate that memory networks perform quite well on this task. Note that the memory network with 'bag of words' features includes the extension designed to indicate the presence of exact matches of words in a query and a candidate answer. This seems to contribute significantly to improved performance. | |||

[[File:largescale.png | frame | centre | Results on a large-scale QA task.]] | |||

Scoring a query against all 14 million candidate memories is slow, so the the authors also test their hashing techniques and report the resulting speed-accuracy tradeoffs. As shown in the figure below, the use of cluster-based hashing results in a negligible drop in performance while considering only 1/80th of the complete set of items stored in memory. | |||

[[File:hash.png | frame | centre | Memory hashing results on a large-scale QA task.]] | |||

To test their model on more complex tasks that require chains of inference, the authors create a synthetic dataset consisting approximately 7 thousand statements and 3 thousand questions focused on a toy environment comprised of a 4 people, 3 objects, and 5 rooms. Stories involving multiple statements describing actions performed by these people (e.g. moving an object from one room to another) are used to define the question answering tasks. Questions are focused on a single entity mentioned in a story, and the difficulty of the task is controlled by varying how long ago the most recent mention of this entity is in the story (e.g. the most recent statement in the story vs. the 5th most recent statement in the story). The figure at the top of this page gives an example of these tasks being performed. | |||

In the results below, 'Difficulty 1' tasks are those in which the entity being asked about was mentioned in the most recent statement of the story, while 'Difficulty 5' tasks are those in which the entity being asked about was mentioned in one of the 5 most recent statements. Questions about an 'actor' concern a statement that mentions a person but not an object (e.g. "John went to the garden"). The questions may ask for the current location of the person (e.g. "where is John?") or the previous location of the person (e.g. "Where was John before the garden?") (the column labelled "actor w/o before" in the figure below excludes this latter type of question). More complex questions involve asking about the object in a statement that mentions both a person and an object (e.g. "John dropped the milk", the question might "Where is the milk?"). Note that this task is more challenging, since it requires using multiple pieces of information (i.e. where John was, and what he did while he was there). Comparisons using RNNs and LSTMs are also reported, and for multiword responses as in the first figure above, an LSTM is used in place of <math>\ R </math> | |||

[[File:toyqa.png | frame | centre | Test accuracy on a simulated world QA task.]] | |||

What is most notable about these results is that the inclusion of time features in the MemNN seems to be responsible for most of the improvement over RNNs and LSTMs. | |||

= Discussion = | = Discussion = | ||

One potential concern about the memory network architecture concerns its generalizability to large values of <math>\ k </math>. To explain, each additional supporting memory increases the number of columns in the embedding matrices by the size of the model's vocabulary. This could become impractical for standard vocabularies with tens of thousands of terms. | |||

A second concern is that the memory network, as described, is engineered to answer very particular kinds of questions (i.e. questions in which the order of events is important). To handle different kinds of questions, different features would likely need to be added (e.g. quantificational features to handle statements involving quantifiers such as 'some', 'many', etc.). This sort of ad-hoc design calls into question whether the architecture is capable of performing scalable, general-purpose question answering. | |||

= Resources = | = Resources = | ||

= | Memory Network implementations on [https://github.com/facebook/MemNN Github] | ||

= Bibliography = | |||

<references /> | |||

Latest revision as of 09:46, 30 August 2017

Introduction

Most supervised machine learning models are designed to approximate a function that maps input data to a desirable output (e.g. a class label for an image or a translation of a sentence from one language to another). In this sense, such models perform inference using a 'fixed' memory in the form of a set of parameters learned during training. For example, the memory of a recurrent neural network is constituted largely by the weights on the recurrent connections to its hidden layer (along with the layer's activities). As is well known, this form of memory is inherently limited given the fixed dimensionality of the weights in question. It is largely for this reason that recurrent nets have difficulty learning long-range dependencies in sequential data. Learning such dependencies, note, requires remembering items in a sequence for a large number of time steps.

For an interesting class of problems, it is essential for a model to be able to learn long-term dependencies, and to more generally be able to learn to perform inferences using an arbitrarily large memory. Question-answering tasks are paradigmatic of this class of problems, since performing well on such tasks requires remembering all of the information that constitutes a possible answer to the questions being posed. In principle, a recurrent network such as an LSTM could learn to perform QA tasks, but in practice, the amount of information that can be retained by the weights and the hidden states in the LSTM is simply insufficient.

Given this need for a model architecture the combines inference and memory in a sophisticated manner, the authors of this paper propose what they refer to as a "Memory Network". In brief, a memory network is a model that learns to read and write data to an arbitrarily large long-term memory, while also using the data in this memory to perform inferences. The rest of this summary describes the components of a memory network in greater detail, along with some experiments describing its application to a question answering task involving short stories. Below is an example illustrating the model's ability to answer simple questions after being presented with short, multi-sentence stories.

Model Architecture

A memory network is composed of a memory [math]\displaystyle{ \ m }[/math] (in the form of a collection of vectors or strings, indexed individually as [math]\displaystyle{ \ m_i }[/math]), and four possibly learned functions [math]\displaystyle{ \ I }[/math], [math]\displaystyle{ \ G }[/math], [math]\displaystyle{ \ O }[/math], and [math]\displaystyle{ \ R }[/math]. The functions are defined as follows:

- [math]\displaystyle{ \ I }[/math] maps a natural language expression onto an 'input' feature representation (e.g., a real-valued vector). The input can either be a fact to be added to the memory [math]\displaystyle{ \ m }[/math] (e.g. 'John is at the university') , or a question for which an answer is being sought (e.g. 'Where is John?').

- [math]\displaystyle{ \ G }[/math] updates the contents of the memory [math]\displaystyle{ \ m }[/math] on the basis of an input. The updating can involve simply writing the input to new memory location, or it can involve the modification or compression of existing memories to perform a kind of generalization on the state of the memory.

- [math]\displaystyle{ \ O }[/math] produces an 'output' feature representation given a new input and the current state of the memory. The input and output feature representations reside in the same embedding space.

- [math]\displaystyle{ \ R }[/math] produces a response given an output feature representation. This response is usually a word or a sentence, but in principle it could also be an action of some kind (e.g. the movement of a robot)

To give a quick overview of how the model operates, an input x will first be mapped to a feature representation [math]\displaystyle{ \ I(x) }[/math] Then, for all memories i, the following update is applied: [math]\displaystyle{ \ m_i = G(m_i, I(x), m) }[/math]. This means that each memory is updated on the basis of the input x and the current state of the memory [math]\displaystyle{ \ m }[/math]. In the case where each input is simply written to memory, [math]\displaystyle{ \ G }[/math] might function to simply select an index that is currently unused and write [math]\displaystyle{ \ I(x) }[/math] to the memory location corresponding to this index. Next, an output feature representation is computed as [math]\displaystyle{ \ o=O(I(x), m) }[/math], and a response, [math]\displaystyle{ \ r }[/math], is computed directly from this feature representation as [math]\displaystyle{ \ r=R(o) }[/math]. [math]\displaystyle{ \ O }[/math] can be interpreted as retrieving a small selection of memories that are relevant to producing a good response, and [math]\displaystyle{ \ R }[/math] actually produces the response given the feature representation produced from the relevant memories by [math]\displaystyle{ \ O }[/math].

A Basic Implementation

In a simple version of the memory network, input text is just written to memory in unaltered form. Or in other words, [math]\displaystyle{ \ I(x) }[/math] simply returns x, and [math]\displaystyle{ \ G }[/math] writes this text to a new memory slot [math]\displaystyle{ \ m_{N+1} }[/math] if [math]\displaystyle{ \ N }[/math] is the number of currently filled slots. The memory is accordingly an array of strings, and the inclusion of a new string does nothing to modify existing strings.

Given as much, most of the work being done by the model is performed by the functions [math]\displaystyle{ \ O }[/math] and [math]\displaystyle{ \ R }[/math]. The job of [math]\displaystyle{ \ O }[/math] is to produce an output feature representation by selecting [math]\displaystyle{ \ k }[/math] supporting memories from [math]\displaystyle{ \ m }[/math] on the basis of the input x. In the experiments described in this paper, [math]\displaystyle{ \ k }[/math] is set to either 1 or 2. In the case that [math]\displaystyle{ \ k=1 }[/math], the function [math]\displaystyle{ \ O }[/math] behaves as follows:

- [math]\displaystyle{ \ o_1 = O_1(x, m) = argmax_{i = 1 ... N} S_O(x, m_i) }[/math]

where [math]\displaystyle{ \ S_O }[/math] is a function that scores a candidate memory for its compatibility with x. Essentially, one 'supporting' memory is selected from [math]\displaystyle{ \ m }[/math] as being most likely to contain the information needed to answer the question posed in [math]\displaystyle{ \ x }[/math]. In this case, the output is [math]\displaystyle{ \ o_1 = [x, m_{o_1}] }[/math], or a list containing the input question and one supporting memory. Alternatively, in the case that [math]\displaystyle{ \ k=2 }[/math]', a second supporting memory is selected on the basis of the input and the first supporting memory, as follows:

- [math]\displaystyle{ \ o_2 = O_2(x, m) = argmax_{i = 1 ... N} S_O([x, m_{o_1}], m_i) }[/math]

Now, the overall output is [math]\displaystyle{ \ o_2 = [x, m_{o_1}, m_{o_2}] }[/math]. (These lists are translated into feature representations as described below). Finally, the result of [math]\displaystyle{ \ O }[/math] is used to produce a response in the form of a single word via [math]\displaystyle{ \ R }[/math] as follows:

- [math]\displaystyle{ \ r = argmax_{w \epsilon W} S_R([x, m_{o_1}, m_{o_2}], w) }[/math]

In short, a response is produced by scoring each word in a set of candidate words against the representation produced by the combination of the input and the two supporting memories. The highest scoring candidate word is then chosen as the model's output. The learned portions of [math]\displaystyle{ \ O }[/math] and [math]\displaystyle{ \ R }[/math] are the parameters of the functions [math]\displaystyle{ \ S_O }[/math] and [math]\displaystyle{ \ S_R }[/math], which perform embeddings of the raw text constituting each function argument, and then return the dot product of these two embeddings as a score. Formally, the function [math]\displaystyle{ \ S_O }[/math] can be defined as follows; [math]\displaystyle{ \ S_R }[/math] is defined analogously:

- [math]\displaystyle{ \ S_O(x, y) = \Phi_x(x)^T U^T U \Phi_y(y) }[/math]

In this equation, [math]\displaystyle{ \ U }[/math] is an [math]\displaystyle{ \ n \times D }[/math] matrix, where n is the dimension of the embedding space, and D is the number of features used to represent each function argument. [math]\displaystyle{ \ \Phi_x }[/math] and [math]\displaystyle{ \ \Phi_y }[/math] are functions that map each argument (which are strings) into the feature space. In the implementations considered in this paper, the feature space makes use of a bag-of-words representation, such that there are 3 binary features for each word in the model's vocabulary. The first feature corresponds to the presence of the word in the input x, the second feature corresponds to the presence of the word in first supporting memory that is being used to select a second supporting memory, and the third feature representation corresponds to the presence of the word in a candidate memory being scored (i.e. either the first or second supporting memory retrieved by the model). Having these different features allows the model to learn distinct representations for the same word depending on whether the word is present in an input question or in a string stored in memory.

Intuitively, it helps to think of the columns of [math]\displaystyle{ \ U }[/math] containing distributed representations of each word in the vocabulary (specifically, there are 3 representations and hence 3 columns devoted to each word). The binary feature representation [math]\displaystyle{ \ \Phi_x(x) }[/math] maps the text in x onto a binary feature vector, where 1's in the vector indicate the presence of a particular word in x, and 0's indicate the absence of this word. Note that different elements of the vector will be set to 1 depending on whether the word occurs in the input x or in a supporting memory (i.e. when x is a list containing the input and a supporting memory). The matrix-vector multiplications in the above equation effectively extract and sum the distributed representations corresponding to each of the inputs, x and y. Thus, a single distributed representation is produced for each input, and the resulting score is the dot product of these two vectors (which in turn is the cosine of the angle between the vectors scaled by the product of the vector norms). In the case where x is the input query, and y is a candidate memory, a high dot product indicates that the model thinks that the candidate in question is very relevant to answering the input query. In the case where x is the output of [math]\displaystyle{ \ O }[/math] and y is a candidate response word, a high dot product indicates that the model thinks that the response word is an appropriate answer given the output feature representation produced by [math]\displaystyle{ \ O }[/math]. Distinct embedding matrices [math]\displaystyle{ \ U_O }[/math] and [math]\displaystyle{ \ U_R }[/math] are used to compute the output feature representation and the response.

The goal of learning is find embedding matrices in which the representations produced for queries, supporting memories, and responses are spatially related such that representations of relevant supporting memories are close to the representations of a query, and such that representations of individual words are close to the output feature representations of the questions they answer. The method used to perform this learning is described in the next section.

The Training Procedure

Learning is conducted in a supervised manner; the correct responses and supporting memories for each query are provided during training. The following margin-ranking loss function is used in tandem with stochastic gradient descent to learn the parameters of [math]\displaystyle{ \ U_O }[/math] and [math]\displaystyle{ \ U_R }[/math], given an input x, a desired response r, and desired supporting memories, [math]\displaystyle{ \ m_{o_1} }[/math] and [math]\displaystyle{ \ m_{o_2} }[/math]:

- [math]\displaystyle{ \sum_{f \neq m_{o_1}} max(0, \gamma + S_O (x, f) - S_O (x, m_{o_1})) + \sum_{f^' \neq m_{o_2}} max(0, \gamma + S_O ([x, m_{o_1}], f^') - S_O ([x, m_{o_1}], m_{o_2})) + }[/math]

- [math]\displaystyle{ \sum_{r^' \neq r} max(0, \gamma + S_R ([x, m_{o_1}, m_{o_2}], r^') - S_R ([x, m_{o_1}, m_{o_2}], r)) }[/math]

where [math]\displaystyle{ \ f }[/math], [math]\displaystyle{ \ f^' }[/math] and [math]\displaystyle{ \ r^' }[/math] correspond to incorrect candidates for the first supporting memory, the second supporting memory, and the output response, and [math]\displaystyle{ \gamma }[/math] corresponds to the margin. Intuitively, each term in the sum penalizes the current parameters in proportion to the number of incorrect memories and responses that get assigned a score within the margin of the score of the correct memories and responses. Or in other words, if the score of a correct candidate memory / response is higher than the score of every incorrect candidate by at least [math]\displaystyle{ \gamma }[/math], the cost is 0. Otherwise, the cost is the sum over all of the differences between the incorrect scores (plus gamma) and the correct score. In fact, this is just the standard hinge loss function. Weston et al. speed up gradient descent by sampling incorrect candidates instead of using all incorrect candidates in the calculation of the gradient for each training example.

Extensions to the Basic Implementation

Some limitations of the basic implementation are that it can only output single word responses, can only accept strings (rather than sequences) as input, and cannot use its memory in efficient or otherwise interesting ways. The authors propose a series of extensions to the basic implementation described in the previous section that are designed to overcome these limitations. First, they propose a segmenting function that learns when to segment an input sequence into discrete chunks that get written to individual memory slots. The segmenter is modeled similarly to other components, as an embedding model of the form:

[math]\displaystyle{ seg(c)=W^T_{seg}U_s\Phi_{seg}(c) }[/math]

where [math]\displaystyle{ W_{seg} }[/math] is a vector (effectively the parameters of a linear classifier in embedding space), and [math]\displaystyle{ c }[/math] is the sequence of input words represented as a bag of words using a separate dictionary. If [math]\displaystyle{ seg(c) \gt \gamma }[/math], where [math]\displaystyle{ \gamma }[/math] is the margin, then this sequence is recognized as a segment.

Second, they propose the use of hashing to avoid scoring a prohibitively large number of candidate memories. Each input corresponding to a query is hashed into some number of buckets, and only candidates within these buckets are scored during the selection of supporting memories. Hashing is done either by making a bucket per word in the model's vocabulary, or by clustering the learning word embeddings, and creating a bucket per cluster.

The most important extension proposed by the authors involves incorporating information about the time at which a memory was written into the scoring function [math]\displaystyle{ / S_O }[/math]. The model needs to be able to make use of such information to correctly answer questions such as "Where was John before the university" (assuming the model has been told some story about John). To handle temporal information, the feature space is extend to include features that indicate the relative time between when two items where written to memory. Formally, this yields the following revised scoring function:

[math]\displaystyle{ \ S_{O_t}(x, y, y^') = \Phi_x(x)^T U^T U (\Phi_y(y)-\Phi_y(y^')+\Phi_t(x,y,y^')) }[/math]

The novelty here lies in the feature mapping function [math]\displaystyle{ \Phi_t }[/math], which takes an input and two candidate supporting memories, and returns a binary feature vector as before, but with the addition of three features that indicate whether [math]\displaystyle{ x }[/math] is older than [math]\displaystyle{ y }[/math], whether [math]\displaystyle{ x }[/math] is older than [math]\displaystyle{ y^' }[/math], and whether [math]\displaystyle{ y }[/math] is older than [math]\displaystyle{ y' }[/math]. The model loops over all candidate memories, comparing candidates [math]\displaystyle{ y }[/math] and [math]\displaystyle{ y^' }[/math]. If [math]\displaystyle{ S_{O_t}(x, y, y^') }[/math] is greater than 0, then [math]\displaystyle{ y }[/math] is preferred over [math]\displaystyle{ y^' }[/math]; otherwise, [math]\displaystyle{ y' }[/math] is preferred. If [math]\displaystyle{ y' }[/math] is preferred, [math]\displaystyle{ y }[/math] is replaced by [math]\displaystyle{ y' }[/math] and the loop continues to the next candidate memory (i.e. the new [math]\displaystyle{ y^' }[/math]. Once the loop finishes iterating over the entire memory, the winning candidate [math]\displaystyle{ y }[/math] is chosen as the supporting memory.

Some further extensions concern allowing the model to deal with words not included in it's vocabulary, and to more effectively take advantage of exact word matches between input queries and candidate supporting memories.

Embedding models cannot efficiently use exact word matches due to the low dimensionality [math]\displaystyle{ n }[/math]. One solution is to score a pair [math]\displaystyle{ x,y }[/math] with [math]\displaystyle{ \ \Phi_x(x)U^TU\Phi_y(y)+\lambda\Phi_x(x)^T\Phi_y(y) }[/math] instead. That is, add the “bag of words” matching score to the learned embedding score (with a mixing parameter λ). Another related way is to stay in the n-dimensional embedding space, but to extend the feature representation D with matching features, e.g., one per word. A matching feature indicates if a word occurs in both x and y. That is, we score with [math]\displaystyle{ \ \Phi_x(x)U^TU\Phi_y(y,x) }[/math] where [math]\displaystyle{ \ \Phi_y }[/math] is actually built conditionally on x: if some of the words in y match the words in x we set those matching features to 1. Unseen words can be modeled similarly by using matching features on their context words. This then gives a feature space of D = 8|W|.

Related work

There are two general approaches to performing question answering that have been developed in the literature. The first makes use of a technique known as semantic parsing to map a query expressed in natural language onto a representation in some formal language that directly extracts information from some external memory such as a knowledge base<ref>J. Berant, A. Chou, R. Frostig, and P. Liang. "Semantic parsing on Freebase from question-answer pairs." . In Proceedings of the 2013 Conference on Empirical Methods in Natural Language Processing, October 2013.</ref><ref>P. Liang, M. Jordan, and D. Klein. "Learning dependency-based compositional semantics". In Computational Linguistics, 39.2, p. 389-446. </ref>. The second makes use of embedding methods to represent queries and candidate answers (typically extracted from a knowledge base) as high-dimensional vectors. Learning involves producing embeddings that place query vectors close to the vectors that correspond to their answers. <ref>Bordes, A., S. Chopra, and J. Weston. "Question Answering with Subgraph Embeddings". In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing, (2014)</ref>. Memory networks fall under the latter approach, and existing variants of this approach can been seen as special cases of the memory network architecture (e.g., <ref>Bordes, A., S. Chopra, and J. Weston. "Question Answering with Subgraph Embeddings". In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing, (2014)</ref>)

Compared to recent knowledge based approaches memory networks differ in that they do not apply a two-stage strategy: (i) apply information extraction methods to first build the KB; followed by (ii) inference over the the KB. Classical neural network models have also been explored. Typically, this type of memory is distributed across the whole network of weights of the model rather than being compartmentalized into memory locations. Memory networks combine compartmentalized memory with neural network modules that can learn how to read and write to that memory, e.g., to perform reasoning they can interactively read salient facts from the memory.

Experimental Results

The authors first test a simple memory network (i.e. [math]\displaystyle{ \ k=1 }[/math] on a large scale question answering task involving a dataset consisting of 14 million subject-relation-object triplets. Each triplet is stored as an item in memory, and the answers to particular questions are a single entity (i.e. a subject or object) in one these triplets. The results in the table below indicate that memory networks perform quite well on this task. Note that the memory network with 'bag of words' features includes the extension designed to indicate the presence of exact matches of words in a query and a candidate answer. This seems to contribute significantly to improved performance.

Scoring a query against all 14 million candidate memories is slow, so the the authors also test their hashing techniques and report the resulting speed-accuracy tradeoffs. As shown in the figure below, the use of cluster-based hashing results in a negligible drop in performance while considering only 1/80th of the complete set of items stored in memory.

To test their model on more complex tasks that require chains of inference, the authors create a synthetic dataset consisting approximately 7 thousand statements and 3 thousand questions focused on a toy environment comprised of a 4 people, 3 objects, and 5 rooms. Stories involving multiple statements describing actions performed by these people (e.g. moving an object from one room to another) are used to define the question answering tasks. Questions are focused on a single entity mentioned in a story, and the difficulty of the task is controlled by varying how long ago the most recent mention of this entity is in the story (e.g. the most recent statement in the story vs. the 5th most recent statement in the story). The figure at the top of this page gives an example of these tasks being performed.

In the results below, 'Difficulty 1' tasks are those in which the entity being asked about was mentioned in the most recent statement of the story, while 'Difficulty 5' tasks are those in which the entity being asked about was mentioned in one of the 5 most recent statements. Questions about an 'actor' concern a statement that mentions a person but not an object (e.g. "John went to the garden"). The questions may ask for the current location of the person (e.g. "where is John?") or the previous location of the person (e.g. "Where was John before the garden?") (the column labelled "actor w/o before" in the figure below excludes this latter type of question). More complex questions involve asking about the object in a statement that mentions both a person and an object (e.g. "John dropped the milk", the question might "Where is the milk?"). Note that this task is more challenging, since it requires using multiple pieces of information (i.e. where John was, and what he did while he was there). Comparisons using RNNs and LSTMs are also reported, and for multiword responses as in the first figure above, an LSTM is used in place of [math]\displaystyle{ \ R }[/math]

What is most notable about these results is that the inclusion of time features in the MemNN seems to be responsible for most of the improvement over RNNs and LSTMs.

Discussion

One potential concern about the memory network architecture concerns its generalizability to large values of [math]\displaystyle{ \ k }[/math]. To explain, each additional supporting memory increases the number of columns in the embedding matrices by the size of the model's vocabulary. This could become impractical for standard vocabularies with tens of thousands of terms.

A second concern is that the memory network, as described, is engineered to answer very particular kinds of questions (i.e. questions in which the order of events is important). To handle different kinds of questions, different features would likely need to be added (e.g. quantificational features to handle statements involving quantifiers such as 'some', 'many', etc.). This sort of ad-hoc design calls into question whether the architecture is capable of performing scalable, general-purpose question answering.

Resources

Memory Network implementations on Github

Bibliography

<references />