Deep Sparse Rectifier Neural Networks

m (Conversion script moved page Deep Sparse Rectifier Neural Networks to deep Sparse Rectifier Neural Networks: Converting page titles to lowercase) |

|||

| (27 intermediate revisions by 10 users not shown) | |||

| Line 1: | Line 1: | ||

= Introduction = | = Introduction = | ||

Machine learning scientists and computational neuroscientists deal with neural networks differently. Machine learning scientists aim to obtain models that are easy to train and easy to generalize, while neuroscientists' objective is to produce useful representation of the scientific data. In other words, machine learning scientists care more about efficiency, while neuroscientists care more about interpretability of the model. | |||

In this paper they show that two common gaps between computational neuroscience models and machine learning neural network models can be bridged by rectifier activation function. One is between deep networks learnt with and without unsupervised pre-training; the other one is between the activation function and sparsity in neural networks. | |||

== Sparsity == | == Biological Plausibility and Sparsity == | ||

In the brain, neurons rarely fire at the same time as a way to balance quality of representation and energy conservation. This is in stark contrast to sigmoid neurons which fire at 1/2 of their maximum rate when at zero. A solution to this problem is to use a rectifier neuron which does not fire at it's zero value. This rectifier linear unit is inspired by a common biological model of neuron, the leaky integrate-and-fire model (LIF), proposed by Dayan and Abott<ref> | |||

Theoretical Neuroscience: Computational and Mathematical Modeling of Neural Systems | |||

</ref>. It's function is illustrated in the figure below (middle). | |||

<gallery mode=packed widths="280px" heights="250px"> | |||

Image:sig_neuron.png|Sigmoid and TANH Neuron | |||

Image:lif_neuron.png|Leaky Integrate Fire Neuron | |||

Image:rect_neuron.png|Rectified Linear Neuron | |||

</gallery> | |||

Given that the rectifier neuron has a larger range of inputs that will be output as zero, it's representation will obviously be more sparse. In the paper, the two most salient advantages of sparsity are: | |||

- '''Information Disentangling''' As opposed to a dense representation, where every slight input change results in a considerable output change, a the non-zero items of a sparse representation remain almost constant to slight input changes. | |||

- '''Variable Dimensionality''' A sparse representation can effectively choose how many dimensions to use to represent a variable, since it choose how many non-zero elements to contribute. Thus, the precision is variable, allowing for more efficient representation of complex items. | |||

Further benefits of a sparse representation and rectified linear neurons in particular are better linear separability (because the input is represented in a higher-dimensional space) and less computational complexity (most units are off and for on-units only a linear functions has to be computed). | |||

However, it should also be noted that sparsity reduces the capacity of the model because each unit takes part in the representation of fewer values. | |||

== Advantages of rectified linear units == | |||

The rectifier activation function <math>\,max(0, x)</math> allows a network to easily obtain sparse representations since only a subset of hidden units will have a non-zero activation value for some given input and this sparsity can be further increased through regularization methods. Therefore, the rectified linear activation function will utilize the advantages listed in the previous section for sparsity. | |||

For a given input, only a subset of hidden units in each layer will have non-zero activation values. The rest of the hidden units will have zero and they are essentially turned off. Each hidden unit activation value is then composed of a linear combination of the active (non-zero) hidden units in the previous layer due to the linearity of the rectified linear function. By repeating this through each layer, one can see that the neural network is actually an exponentially increasing number of linear models who share parameters since the later layers will use the same values from the earlier layers. Since the network is linear, the gradient is easy to calculate and compute and travels back through the active nodes without vanishing gradient problem caused by non-linear sigmoid or tanh functions. In addition to the standard one, three versions of ReLUthat are modified as: Leaky, Parametric, and Randomized leaky ReLU. | |||

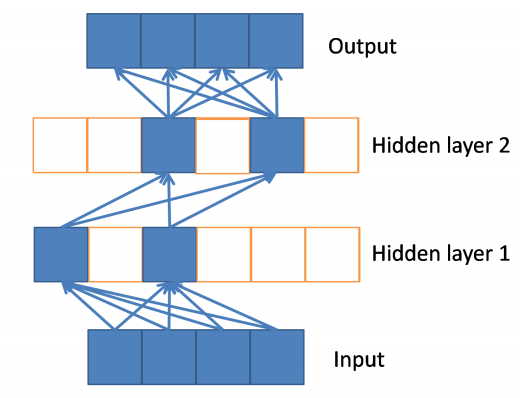

The sparsity and linear model can be seen in the figure the researchers made: | |||

[[File:RLU.PNG]] | |||

Each layer is a linear combination of the previous layer. | |||

== Potential problems of rectified linear units == | |||

The zero derivative below zero in the rectified neurons blocks the back-propagation of the gradient during learning. Using a smooth variant of the rectification non-linearity (the softplus activation) this effect was investigated. Surprisingly, the results suggest the hard rectifications performs better. The authors hypothesize that the hard rectification is not a problem as long as the gradient can be propagated along some paths through the network and that the complete shut-off with the hard rectification sharpens the credit attribution to neurons in the learning phase. | |||

Furthermore, the unbounded nature of the rectification non-linearity can lead to numerical instabilities if activations grow too large. To circumvent this a <math>L_1</math> regularizer is used. Also, if symmetry is required, this can be obtained by using two rectifier units with shared parameters, but requires twice as many hidden units as a network with a symmetric activation function. | |||

Finally, rectifier networks are subject to ill conditioning of the parametrization. Biases and weights can be scaled in different (and consistent) ways while preserving the same overall network function. | |||

This paper addresses several difficulties when one wants to use rectifier activation into stacked denoising auto-encoder. The author have experienced several strategies to try to solve these problem. | |||

1. Use a softplus activation function for the reconstruction layer, along with a quadratic cost: <math> L(x, \theta) = ||x-log(1+exp(f(\tilde{x}, \theta)))||^2</math> | |||

2. scale the rectifier activation values between 0 and 1, then use a sigmoid activation function for the reconstruction layer, along with a cross-entropy reconstruction cost. <math> L(x, \theta) = -xlog(\sigma(f(\tilde{x}, \theta))) - (1-x)log(1-\sigma(f(\tilde{x}, \theta))) </math> | |||

The first strategy yield better generalization on image data and the second one on text data. | |||

= Experiments = | = Experiments = | ||

| Line 15: | Line 63: | ||

== Results == | == Results == | ||

[[File:rectifier_res_1. | '''Results from image classification''' | ||

[[File:rectifier_res_1.png]] | |||

[[File:rectifier_res_2. | '''Results from sentiment classification''' | ||

[[File:rectifier_res_2.png]] | |||

For image recognition task, they find that there is almost no improvement when using unsupervised pre-training with rectifier activations, contrary to what is experienced using tanh or softplus. However, it achieves best performance when the network is trained Without unsupervised pre-training. | |||

In the NORB and sentiment analysis cases, the network benefited greatly from pre-training. However, the benefit in NORB diminished as the training set size grew. | In the NORB and sentiment analysis cases, the network benefited greatly from pre-training. However, the benefit in NORB diminished as the training set size grew. | ||

| Line 23: | Line 75: | ||

The result from the Amazon dataset was 78.95%, while the state of the art was 73.72%. | The result from the Amazon dataset was 78.95%, while the state of the art was 73.72%. | ||

== Criticism == | The sparsity achieved with the rectified linear neurons helps to diminish the gap between networks with unsupervised pre-training and no pre-training. | ||

== Discussion / Criticism == | |||

* Rectifier neurons really aren't biologically plausible for a variety of reasons. Namely, the neurons in the cortex do not have tuning curves resembling the rectifier. Additionally, the ideal sparsity of the rectifier networks were from 50 to 80%, while the brain is estimated to have a sparsity of around 95 to 99%. | |||

* The Sparsity property encouraged by ReLu is a double edged sword, while sparsity encourages information disentangling, efficient variable-size representation, linear separability, increased robustness as suggested by the author of this paper, <ref>Szegedy, Christian, et al. "Going deeper with convolutions." arXiv preprint arXiv:1409.4842 (2014).</ref> argues that computing sparse non-uniform data structures is very inefficient, the overhead and cache-misses would make it computationally expensive to justify using sparse data structures. | |||

* ReLu does not have vanishing gradient problems | |||

* ReLu can be prone to "die", in other words it may output same value regardless of what input you give the ReLu unit. This occurs when a large negative bias to the unit is learnt causing the output of the ReLu to be zero, thus getting stuck at zero because gradient at zero is zero. Solutions to mitigate this problem include techniques such as Leaky ReLu and Maxout. | |||

= Bibliography = | |||

<references /> | |||

Latest revision as of 08:46, 30 August 2017

Introduction

Machine learning researchers and computational neuroscientists approach neural networks differently. Machine learning researchers aim for models that are easy to train and that generalize well, while neuroscientists aim for models that give a useful representation of the scientific data. In other words, machine learning researchers care more about efficiency, while neuroscientists care more about the interpretability of the model.

The paper shows that two common gaps between computational neuroscience models and machine learning neural network models can be bridged by the rectifier activation function. One is the gap between deep networks trained with and without unsupervised pre-training; the other is the gap between the activation function and sparsity in neural networks.

Biological Plausibility and Sparsity

In the brain, neurons rarely fire at the same time, which balances quality of representation against energy conservation. This is in stark contrast to sigmoid neurons, which fire at 1/2 of their maximum rate when their input is zero. A solution to this problem is to use a rectifier neuron, which does not fire when its input is zero. The rectified linear unit is inspired by a common biological model of the neuron, the leaky integrate-and-fire (LIF) model, as described by Dayan and Abbott<ref> Theoretical Neuroscience: Computational and Mathematical Modeling of Neural Systems </ref>. Its function is illustrated in the figure below (middle).

Figure panels, left to right: sigmoid and tanh neuron; leaky integrate-and-fire neuron; rectified linear neuron.

Because the rectifier neuron maps a larger range of inputs to zero, its representation is sparser. In the paper, the two most salient advantages of sparsity are:

- Information Disentangling: As opposed to a dense representation, where every slight input change results in a considerable output change, the non-zero items of a sparse representation remain almost constant under slight input changes.

- Variable Dimensionality: A sparse representation can effectively choose how many dimensions to use to represent a variable, since it chooses how many non-zero elements contribute. Thus, the precision is variable, allowing for a more efficient representation of complex items.

Further benefits of a sparse representation, and of rectified linear neurons in particular, are better linear separability (because the input is represented in a higher-dimensional space) and lower computational complexity (most units are off, and for the units that are on only a linear function has to be computed).

However, it should also be noted that sparsity reduces the capacity of the model because each unit takes part in the representation of fewer values.

Advantages of rectified linear units

The rectifier activation function [math]\displaystyle{ \max(0, x) }[/math] allows a network to easily obtain sparse representations, since only a subset of hidden units has a non-zero activation value for a given input. This sparsity can be increased further through regularization methods. The rectified linear activation function therefore inherits the advantages of sparsity listed in the previous section.
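The sparsity claim can be checked with a small experiment. The sketch below is only an illustration, not the paper's setup: it assumes pre-activations drawn from a standard normal distribution (an assumption made here for convenience) and compares the fraction of exact zeros produced by the rectifier and by the sigmoid.

```python
import math
import random


def relu(x):
    """Rectifier activation max(0, x)."""
    return max(0.0, x)


def sigmoid(x):
    """Logistic sigmoid; equals 0.5 at x = 0."""
    return 1.0 / (1.0 + math.exp(-x))


def fraction_of_zeros(values):
    """Fraction of entries that are exactly zero (a simple sparsity measure)."""
    return sum(1 for v in values if v == 0.0) / len(values)


# Assumption: pre-activations are i.i.d. standard normal samples.
random.seed(0)
pre_activations = [random.gauss(0.0, 1.0) for _ in range(10_000)]

relu_sparsity = fraction_of_zeros([relu(z) for z in pre_activations])
sigmoid_sparsity = fraction_of_zeros([sigmoid(z) for z in pre_activations])

print(f"ReLU zeros:    {relu_sparsity:.2%}")
print(f"Sigmoid zeros: {sigmoid_sparsity:.2%}")
```

Under this assumption roughly half of the rectifier outputs are exactly zero, while the sigmoid never outputs an exact zero.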

For a given input, only a subset of hidden units in each layer has non-zero activation values. The remaining hidden units output zero and are essentially turned off. Because the rectifier is linear on its active side, each hidden unit's pre-activation is a linear combination of the active (non-zero) hidden units in the previous layer. Repeating this through each layer, the neural network can be seen as an exponential number of linear models that share parameters, since the later layers reuse the values computed by the earlier layers.

For a fixed set of active units the network is linear, so the gradient is easy to compute and travels back through the active nodes without the vanishing gradient problem caused by the saturating sigmoid or tanh non-linearities. In addition to the standard ReLU, three modified versions exist: Leaky, Parametric, and Randomized Leaky ReLU.
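The "linear model per active set" view can be made concrete with a small sketch. The weights below are hypothetical values chosen for illustration. For a given input, the code reads off which hidden units are active and builds the linear model A x + c that the network reduces to on that active set.

```python
def matvec(W, x):
    """Matrix-vector product for a matrix stored as a list of rows."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def add(u, v):
    """Element-wise sum of two vectors."""
    return [a + b for a, b in zip(u, v)]


# Hypothetical one-hidden-layer network: 2 inputs, 3 rectifier units, 1 output.
W1 = [[1.0, -2.0], [0.5, 1.0], [-1.0, 0.5]]
b1 = [0.0, -1.0, 1.0]
W2 = [[1.0, 2.0, -1.0]]
b2 = [0.1]


def forward(x):
    """Ordinary forward pass with a rectifier hidden layer."""
    hidden = [max(0.0, p) for p in add(matvec(W1, x), b1)]
    return add(matvec(W2, hidden), b2)


def effective_linear_model(x):
    """Return (A, c, mask): the linear model selected by the active set at x."""
    pre = add(matvec(W1, x), b1)
    mask = [1.0 if p > 0 else 0.0 for p in pre]
    n_hidden, n_in, n_out = len(mask), len(x), len(W2)
    # A = W2 * diag(mask) * W1 and c = W2 * diag(mask) * b1 + b2
    A = [[sum(W2[o][k] * mask[k] * W1[k][i] for k in range(n_hidden))
          for i in range(n_in)] for o in range(n_out)]
    c = [sum(W2[o][k] * mask[k] * b1[k] for k in range(n_hidden)) + b2[o]
         for o in range(n_out)]
    return A, c, mask


x = [1.0, 1.0]
A, c, mask = effective_linear_model(x)
y_net = forward(x)
y_lin = add(matvec(A, x), c)
print(mask, y_net, y_lin)
```

Any other input that activates the same hidden units is mapped by the same A and c; a different active set selects a different linear model built from the same shared weights.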

The sparsity and linear model can be seen in the figure the researchers made (figure: RLU.PNG). Each layer is a linear combination of the previous layer.

Potential problems of rectified linear units

The rectifier's derivative is zero for negative inputs, which blocks the back-propagation of the gradient through inactive neurons during learning. This effect was investigated using a smooth variant of the rectification non-linearity, the softplus activation. Surprisingly, the results suggest that hard rectification performs better. The authors hypothesize that the hard rectification is not a problem as long as the gradient can be propagated along some paths through the network, and that the complete shut-off of the hard rectification sharpens the credit attribution to neurons in the learning phase.
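The difference between the two non-linearities shows up directly in their derivatives. The following sketch (helper names are chosen here for illustration) implements both: the rectifier passes no gradient for negative inputs, whereas softplus, log(1 + exp(x)), always passes a small positive gradient equal to the sigmoid of its input.

```python
import math


def relu_grad(x):
    """Derivative of max(0, x); taken as 0 at x = 0 by convention."""
    return 1.0 if x > 0 else 0.0


def softplus(x):
    """Numerically stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def softplus_grad(x):
    """Derivative of softplus, which is the logistic sigmoid."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


for x in (-2.0, 0.5, 3.0):
    print(x, relu_grad(x), round(softplus_grad(x), 4))
```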

Furthermore, the unbounded nature of the rectification non-linearity can lead to numerical instabilities if activations grow too large. To circumvent this, an [math]\displaystyle{ L_1 }[/math] regularizer on the activation values is used. Also, if symmetry is required, it can be obtained by using two rectifier units with shared parameters, but this requires twice as many hidden units as a network with a symmetric activation function.
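Both remedies are simple to write down. The sketch below is illustrative only: the penalty coefficient `coeff` and the helper names are assumptions, not values or names from the paper. It computes an L1 penalty on a list of activations and combines two rectifier units that share a weight and bias into an antisymmetric or a symmetric response.

```python
def relu(x):
    return max(0.0, x)


def l1_activation_penalty(activations, coeff):
    """L1 penalty on activation values; `coeff` is a hypothetical hyperparameter."""
    return coeff * sum(abs(a) for a in activations)


def antisymmetric_pair(x, w, b):
    """Two rectifiers sharing (w, b): relu(z) - relu(-z) recovers z itself."""
    z = w * x + b
    return relu(z) - relu(-z)


def symmetric_pair(x, w, b):
    """Two rectifiers sharing (w, b): relu(z) + relu(-z) gives |z|."""
    z = w * x + b
    return relu(z) + relu(-z)


print(l1_activation_penalty([0.0, 1.5, 2.5], coeff=0.01))
print(antisymmetric_pair(0.5, w=2.0, b=-3.0), symmetric_pair(0.5, w=2.0, b=-3.0))
```

Each combined response uses two hidden units, which is where the doubling of the hidden layer size comes from.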

Finally, rectifier networks are subject to ill-conditioning of the parametrization: biases and weights can be scaled in different (but consistent) ways while preserving the same overall network function.
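A minimal sketch of this rescaling, using hypothetical weights: multiplying the first layer's weights and biases by a positive factor `alpha` and dividing the next layer's weights by the same factor leaves the output unchanged, because max(0, alpha * z) = alpha * max(0, z) for alpha > 0.

```python
def forward(x, W1, b1, W2, b2):
    """One hidden rectifier layer followed by a linear output layer."""
    hidden = [max(0.0, sum(w * xi for w, xi in zip(row, x)) + b)
              for row, b in zip(W1, b1)]
    return [sum(w * h for w, h in zip(row, hidden)) + b
            for row, b in zip(W2, b2)]


# Hypothetical parameters for illustration.
W1 = [[1.0, -2.0], [0.5, 1.0]]
b1 = [0.5, -1.0]
W2 = [[2.0, -1.0]]
b2 = [0.1]

alpha = 10.0  # any positive scale factor
W1_scaled = [[alpha * w for w in row] for row in W1]
b1_scaled = [alpha * b for b in b1]
W2_scaled = [[w / alpha for w in row] for row in W2]

x = [1.0, 1.0]
y_original = forward(x, W1, b1, W2, b2)
y_rescaled = forward(x, W1_scaled, b1_scaled, W2_scaled, b2)
print(y_original, y_rescaled)
```

The two parameter sets differ by a factor of ten in the first layer yet define the same function, so many very differently scaled parameter settings are equivalent.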

The paper also addresses several difficulties that arise when using rectifier activations in stacked denoising auto-encoders. The authors experimented with several strategies to solve these problems:

1. Use a softplus activation function for the reconstruction layer, along with a quadratic cost: [math]\displaystyle{ L(x, \theta) = \|x - \log(1 + \exp(f(\tilde{x}, \theta)))\|^2 }[/math]

2. Scale the rectifier activation values between 0 and 1, then use a sigmoid activation function for the reconstruction layer, along with a cross-entropy reconstruction cost: [math]\displaystyle{ L(x, \theta) = -x \log(\sigma(f(\tilde{x}, \theta))) - (1-x) \log(1 - \sigma(f(\tilde{x}, \theta))) }[/math]

The first strategy yielded better generalization on image data and the second on text data.
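The two reconstruction costs above can be sketched as follows. Here `f` stands for the reconstruction layer's pre-activation output [math]\displaystyle{ f(\tilde{x}, \theta) }[/math], passed in as a plain list; the function names are chosen for illustration, the cross-entropy is summed over components, and the targets `x` are assumed to be already scaled to [0, 1] for the second cost.

```python
import math


def softplus(z):
    """Numerically stable log(1 + exp(z))."""
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))


def sigmoid(z):
    """Logistic sigmoid, written to avoid overflow for large |z|."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def softplus_quadratic_loss(x, f):
    """Strategy 1: squared error between x and softplus(f)."""
    return sum((xi - softplus(fi)) ** 2 for xi, fi in zip(x, f))


def sigmoid_cross_entropy_loss(x, f):
    """Strategy 2: cross-entropy between x in [0, 1] and sigmoid(f)."""
    return sum(-xi * math.log(sigmoid(fi)) - (1.0 - xi) * math.log(1.0 - sigmoid(fi))
               for xi, fi in zip(x, f))


x = [0.2, 0.9]    # target values, assumed scaled to [0, 1]
f = [-1.0, 2.0]   # hypothetical reconstruction pre-activations
print(softplus_quadratic_loss(x, f), sigmoid_cross_entropy_loss(x, f))
```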

Experiments

Networks with rectifier neurons were applied to the domains of image recognition and sentiment analysis. The datasets for image recognition included black and white (MNIST, NISTP), colour (CIFAR10), and stereo (NORB) images.

The datasets for sentiment analysis were taken from opentable.com and Amazon. In both cases the task was to predict the star rating from the text of the review.

Results

Results from image classification (figure: rectifier_res_1.png)

Results from sentiment classification (figure: rectifier_res_2.png)

For the image recognition tasks, the authors find almost no improvement from unsupervised pre-training with rectifier activations, contrary to what is observed with tanh or softplus. Moreover, rectifier networks achieve the best performance when trained without unsupervised pre-training.

In the NORB and sentiment analysis cases, the network benefited greatly from pre-training. However, the benefit in NORB diminished as the training set size grew.

The result from the Amazon dataset was 78.95%, while the state of the art was 73.72%.

The sparsity achieved with the rectified linear neurons helps to diminish the gap between networks with unsupervised pre-training and no pre-training.

Discussion / Criticism

- Rectifier neurons are not biologically plausible, for a variety of reasons. In particular, neurons in the cortex do not have tuning curves resembling the rectifier. Additionally, the ideal sparsity of the rectifier networks was 50 to 80%, while the brain is estimated to have a sparsity of around 95 to 99%.

- The sparsity encouraged by ReLU is a double-edged sword. The authors of this paper suggest that sparsity encourages information disentangling, efficient variable-size representation, linear separability, and increased robustness. However, Szegedy et al.<ref>Szegedy, Christian, et al. "Going deeper with convolutions." arXiv preprint arXiv:1409.4842 (2014).</ref> argue that computing on sparse, non-uniform data structures is very inefficient: the overhead and cache misses can make sparse data structures too computationally expensive to justify.

- ReLU does not saturate for positive inputs, so it does not suffer from the vanishing gradient problem along active paths.

- ReLU units can "die", meaning that a unit outputs the same value (zero) regardless of its input. This occurs, for example, when a large negative bias is learnt, so that the unit's pre-activation is negative for all inputs. The unit then stays stuck at zero because the gradient of the rectifier is zero for negative pre-activations. Techniques that mitigate this problem include Leaky ReLU and Maxout.
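The "dying" behaviour in the last point can be illustrated with a single unit. The weight, bias, inputs, and leak slope of 0.01 below are hypothetical values chosen for illustration. With a large negative bias the rectifier outputs zero and passes zero gradient for every input, whereas a leaky variant keeps a small non-zero gradient.

```python
def relu(z):
    return max(0.0, z)


def relu_grad(z):
    """Gradient of the rectifier with respect to its pre-activation."""
    return 1.0 if z > 0 else 0.0


def leaky_relu(z, slope=0.01):
    """Leaky variant: small linear response for negative pre-activations."""
    return z if z > 0 else slope * z


def leaky_relu_grad(z, slope=0.01):
    return 1.0 if z > 0 else slope


# Hypothetical unit whose learnt bias is strongly negative.
w, b = 1.0, -100.0
inputs = [-1.0, 0.0, 0.5, 2.0]
pre_activations = [w * x + b for x in inputs]

relu_outputs = [relu(z) for z in pre_activations]
relu_grads = [relu_grad(z) for z in pre_activations]
leaky_grads = [leaky_relu_grad(z) for z in pre_activations]

print(relu_outputs)  # all zero: the unit ignores its input
print(relu_grads)    # all zero: no gradient reaches w or b
print(leaky_grads)   # small but non-zero: the unit can still recover
```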

Bibliography

<references />