Incremental Learning, Clustering and Hierarchy Formation of Whole Body Motion Patterns using Adaptive Hidden Markov Chains (Summary)


This paper presents a novel approach for incremental learning of motion primitives by observation of human motion. This algorithm aims at online incremental learning of human motion patterns with applications to humanoids and other robotic agents. The algorithm automatically abstracts the human motions into a dynamic stochastic model, which is used for subsequent recognition as well as motion generation. The motion modeling is performed as the motions are perceived from a human demonstrator. The size and structure of the HMM models are also learned by the algorithm.

This incremental learning of the motion primitives will construct a tree structure representing a hierarchy of known motions. The resulting tree structure is dependent on the history of observations of the robot, with the most specialized (leaf) nodes occurring in those regions of the motion space where the most examples have been observed. The motion primitives are learned using HMM or factorial HMM and the generative hierarchical model is built based on a similarity measure between the learned HMM models for the observed motions. Each node in the resulting tree represents a motion primitive and can be used for motion recognition as well as generation.

The algorithm can be used for activity recognition during human-robot interaction, activity monitoring in rehabilitation and sport training, and motion generation on different autonomous structures.

==Factorial HMM==

In the case of an HMM, the dynamics of the motion are modeled with a single discrete state variable, which evolves according to a transition matrix A[N,N] (N is the number of hidden states). Each state is associated with a continuous output distribution model. The probability of observing an output o at a hidden state q is modeled by a multivariate Gaussian of size K, where K is the number of outputs. Mixtures of Gaussians are also commonly used to model the output distribution. A two-component mixture can be viewed as a combination of a Bernoulli and Gaussian distributions: the Bernoulli distribution selects one of the two Gaussian distributions, and the outcome is provided by that Gaussian distribution.

HMMs are commonly used for encoding sequential observations that exhibit some phase and amplitude variations between different exemplars of the observations. HMMs are attractive as they can be used for both recognition and generation. Efficient algorithms exist for estimating the model parameters (e.g., the Baum-Welch algorithm, an expectation-maximization algorithm), evaluating the likelihood that a new observation sequence was generated from an HMM model (e.g., the forward algorithm), and estimating the most probable state sequence given an observation sequence (the Viterbi algorithm).
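The likelihood evaluation mentioned above can be illustrated with a minimal sketch of the scaled forward algorithm. It assumes one-dimensional Gaussian outputs per state for brevity; the function names and the list-based parameter layout are illustrative choices, not taken from the paper.

```python
import math


def gaussian_pdf(x, mean, var):
    """Density of a 1-D Gaussian (assumed output model for this sketch)."""
    return math.exp(-0.5 * (x - mean) ** 2 / var) / math.sqrt(2.0 * math.pi * var)


def forward_log_likelihood(obs, pi, A, means, variances):
    """Return log P(obs | model) using the scaled forward recursion."""
    n = len(pi)
    # Initialisation: prior times output density of the first observation.
    alpha = [pi[i] * gaussian_pdf(obs[0], means[i], variances[i]) for i in range(n)]
    log_lik = 0.0
    for t in range(len(obs)):
        if t > 0:
            # Induction: propagate through A, then weight by the output density.
            alpha = [
                sum(alpha[i] * A[i][j] for i in range(n))
                * gaussian_pdf(obs[t], means[j], variances[j])
                for j in range(n)
            ]
        scale = sum(alpha)
        if scale == 0.0:
            return float("-inf")
        # Accumulate the log of the scaling factors to avoid underflow.
        log_lik += math.log(scale)
        alpha = [a / scale for a in alpha]
    return log_lik
```

A sequence that follows the state order of a left-to-right model should score higher than the same values in reverse order, which is the property that recognition relies on.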

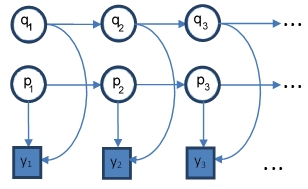

FHMM is an extension of HMM in which multiple independent dynamic processes are coupled together to generate an observation sequence. Each layer is a separate HMM with transition matrix <math>A_m[N_m,N_m]</math> and <math>N_m</math> observation probabilities modelled as multivariate Gaussian distributions of size K, where the subscript m denotes the HMM in layer m. Each state in the individual HMMs has <math>K</math> associated outputs. The observation at each time instance depends on the current state in each layer and is estimated by combining the outputs of the individual HMMs through an expectation function that generates the output of the system. The parallel independent dynamic processes can be seen as latent features with Markov chain dynamics. The expectation function is a multivariate Gaussian function with the chain outputs as the means, and a covariance matrix representing the signal noise. Figure 1 shows a schematic of a factorial HMM with two layers of left-to-right HMMs, in which {q1, q2, …, qn} and {p1, p2, …, pn} represent the hidden states of the HMMs in layer 1 and layer 2, respectively, each with a total of n states. The FHMM observation sequence is represented by {y1, y2, …, yn}.

[[File:FHMM.jpg|center|frame|Fig.1: Factorial Hidden Markov Model (FHMM) with 2 layers.]]

There are a number of ways to combine the information from the layers in order to compute the probability of the observation, i.e., <math>\, P(Y_t|Q_t)</math>, where <math>Y_t</math> is the observation at time t and <math>Q_t</math> is the state of all the layers at time t. One way to combine them was proposed in the original paper that introduced the Factorial HMM. In this method the observations are assumed to be distributed according to a Gaussian distribution whose mean and covariance are a linear combination of the means of all the layers' states. <ref>Logan, et al., Factorial Hidden Markov Models for Speech Recognition: Preliminary Experiment, 1997</ref>
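As a rough illustration of the Gaussian combination described above, the sketch below evaluates <math>\log P(Y_t|Q_t)</math> when the mean is the plain sum of the per-layer state means and the covariance is a shared diagonal matrix. Both simplifications, as well as the function name and data layout, are assumptions made for this example.

```python
import math


def fhmm_log_emission(y, states, layer_means, variances):
    """log P(y | states) for a K-dimensional observation y.

    states[m] is the current state of layer m, layer_means[m][s] is the
    K-vector contributed by state s of layer m, and variances holds the
    diagonal of the shared covariance (an assumption of this sketch).
    """
    k = len(y)
    # Combined mean: sum of the contributions of the active state in each layer.
    mean = [
        sum(layer_means[m][s][d] for m, s in enumerate(states)) for d in range(k)
    ]
    log_p = 0.0
    for d in range(k):
        log_p += -0.5 * math.log(2.0 * math.pi * variances[d])
        log_p += -0.5 * (y[d] - mean[d]) ** 2 / variances[d]
    return log_p
```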

In human motion analysis, it has been shown that FHMM improves the ability to discriminate between similar motions, and that it generates better exemplars of the encoded movements than a conventional HMM.

The Baum-Welch EM algorithm has been extended to estimate the parameters of an FHMM <ref name = "R1">Ghahramani, Z., Jordan, M.I.: Factorial hidden markov models. Machine Learning 29, 245–273 (1997)</ref>. However, the resulting EM algorithm is computationally expensive, <math>O(TmN^{m+1})</math>. The time complexity increases exponentially with the number of chains and hence the E-step of the algorithm becomes intractable. Approximate approaches with quadratic time complexity in the number of chains have been proposed. These alternative algorithms implement an approximation of the E-step in FHMM training <ref name ="R2">Jacobs, R.A., Jiang, W., Tanner, M.A.: Factorial hidden markov models and the generalized back-fitting algorithm. Neural Computation 14, 2415–2437 (2002)</ref>, <ref name="R1" />. Although these approximate approaches reduce the time complexity of FHMM training, they are still not suitable for online learning of motion primitives, as they train all the chains simultaneously. This complexity also makes recognition with trained FHMMs less efficient than with single HMMs. In this paper, a novel approach for sequential training of FHMMs is proposed. The new approach is based on the idea that a single HMM is sufficient when the movements in the knowledge space are very dissimilar and hence easy to discriminate, and that a more complex model, in the form of additional chains, is needed only when movements are very similar and hard to distinguish.

The proposed sequential training starts by training a single HMM and then adds extra chains as needed. The subsequent HMMs are trained on the error between the training data and the output of the already trained HMMs.

==Human Motion Pattern Representation Using FHMMs==

In this approach, each human motion is initially encoded in a single left-to-right HMM (for non-periodic motions) <math>\lambda(\pi,A,B)</math>, where <math>\pi</math> is a vector of initial state probabilities (priors). Because the model is left-to-right, the prior vector carries a value of 1 for the first state and zero for the rest. In this model, the underlying hidden state sequence consists only of transitions to the successive state or to the current state (i.e., no return to a previous state). The observation probability distribution is modeled as a Gaussian or a mixture of Gaussians, with a diagonal covariance matrix for simplicity.
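The left-to-right constraints described above can be sketched as an initialisation routine. The uniform self-transition value <code>stay_prob</code> and the absorbing last state are assumptions for illustration; in practice Baum-Welch training would re-estimate the non-zero entries.

```python
def left_to_right_hmm(n_states, stay_prob=0.5):
    """Build the prior vector and transition matrix of a left-to-right HMM.

    Only self-transitions and transitions to the next state are allowed.
    stay_prob is a hypothetical initial value, not taken from the paper.
    """
    # The motion always starts in the first state.
    pi = [1.0] + [0.0] * (n_states - 1)
    A = [[0.0] * n_states for _ in range(n_states)]
    for i in range(n_states):
        if i == n_states - 1:
            # The last state has no successor, so it is absorbing here.
            A[i][i] = 1.0
        else:
            A[i][i] = stay_prob
            A[i][i + 1] = 1.0 - stay_prob
    return pi, A
```

Entries initialised to zero stay zero under Baum-Welch re-estimation, so the left-to-right structure is preserved during training.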

There is a tradeoff in the size of these single HMMs. A small HMM generalizes better in recognition tasks when the movements are very dissimilar, while a larger HMM is better when the movements are similar and hard to distinguish. However, a model with a low number of states performs poorly in generation tasks: movement generation using a small HMM is likely to compromise the fine details of the movements. Adding more states to the HMM enhances motion reproduction, but the resulting HMM is more prone to overfitting. Sequential FHMMs are introduced here to overcome the recognition and generation limitations of single HMM models.

==Incremental Behavior Learning and Hierarchy Formation ==

During a continuous learning-from-demonstration scenario, the robot observes motions and must decide whether an observed motion is a known motion primitive or whether a new motion primitive should be learned. Furthermore, over the lifetime of the robot, the number of learned motion primitives grows large, and there needs to be an effective way of storing, retrieving and arranging these motion primitives. The paper proposes a hierarchical structure for storing the motions learned through repeated observation of a motion segment. In this tree structure, each node stores similar observed motion segments and a group model encoding (synthesizing) that motion type. These group models can be used to recognize a similar motion and to generate a similar motion for the robot. The size of the models is adjusted based on the accuracy requirement in each region of the knowledge database (if there are many motions similar to the model motion, a higher number of chains is used to encode that motion).

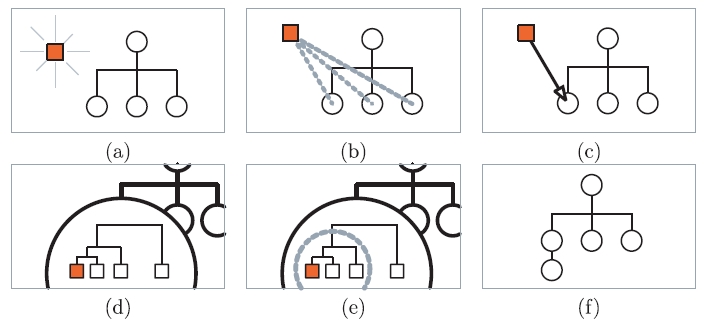

The algorithm initially starts with a single motion (root node). Each time a new motion is observed from the demonstrator, it is encoded into an HMM. The encoded motion is compared to the existing group models via a tree search algorithm, using a symmetric inter-model distance measure based on the Kullback–Leibler distance (Equation 1), and placed into the closest group. Likewise, this similarity measure can be applied to FHMM group models for the purpose of comparison. In the case of FHMM, the log-likelihood is computed using a modified version of the forward algorithm, which benefits from the independence between the dynamic chains in an FHMM <ref name ="R1">Ghahramani, Z., Jordan, M.I.: Factorial hidden Markov models. Machine Learning 29, 245–273 (1997)</ref>. Each time a group receives a new member, the group is passed to a hierarchical agglomerative clustering algorithm that searches for child groups with sufficiently similar members. A motion model for each newly formed subgroup is built using the motion exemplars in that subgroup. Hence, the algorithm incrementally learns and arranges the motion primitives in a tree structure. An overview of the clustering algorithm is shown in Figure 2.

<center><math>D(\lambda_{1},\lambda_{2})=\frac{1}{T}\left[\log P(O^{(2)}|\lambda_2)-\log P(O^{(2)}|\lambda_1)\right]</math></center>

<center><math>D_s = \frac{D(\lambda_{1},\lambda_{2})+D(\lambda_{2},\lambda_{1})}{2}</math></center>

In Equation 1, <math>O^{(2)}</math> represents the observation sequence generated with the newly learned model <math>\lambda_2</math>, <math>\lambda_1</math> is an existing group model, and <math>T</math> is the length of the observation sequence.
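The two distance formulas can be sketched directly from precomputed log-likelihoods. The argument names are hypothetical, and the log-likelihood values are assumed to come from a forward-algorithm evaluation of each sequence under each model.

```python
def model_distance(loglik_own, loglik_other, length):
    """D(lambda_1, lambda_2) from Equation 1.

    loglik_own is log P(O2 | lambda_2), loglik_other is log P(O2 | lambda_1),
    and length is the number of time steps T in O2.
    """
    return (loglik_own - loglik_other) / length


def symmetric_distance(ll_o2_m2, ll_o2_m1, len_o2, ll_o1_m1, ll_o1_m2, len_o1):
    """D_s: average of the two directed distances."""
    d12 = model_distance(ll_o2_m2, ll_o2_m1, len_o2)  # D(lambda_1, lambda_2)
    d21 = model_distance(ll_o1_m1, ll_o1_m2, len_o1)  # D(lambda_2, lambda_1)
    return 0.5 * (d12 + d21)
```

Dividing by the sequence length makes distances comparable between motions of different durations.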

[[File:FHMM1.jpg|center|frame|Fig.2: Overview of the clustering algorithm (a square represents a data sequence, and a circle represents a group): (a) a new observation sequence is observed and encoded as an HMM; (b) the observation sequence is compared to existing groups via tree search; (c) the new sequence is placed in the closest existing group; (d) local clustering is performed on the modified group (zoomed in view of modified group); (e) a new subgroup is formed from similar motions in the modified group; (f) the subgroup is added to the tree as a child of the modified group.]]


<center><math>D_{thresh} = K_{maxGD}D_{max}^G</math></center>

<math>D_{thresh}</math> is the distance threshold at which a new observation is considered for inclusion in a node, <math>K_{maxGD}</math> is the multiplication factor applied, and <math>D_{max}^G</math> is the maximum intra-observation distance measured for a given node. If the computed distance between the newly observed motion and the existing models is not smaller than the threshold, the new motion is placed in the parent cluster. The maximum intra-observation distance for a node, <math>D_{max}^G</math>, is also the criterion used to decide the level of model complexity required for the motion sequence. If the new motion is most similar to a node whose <math>D_{max}^G</math> falls below a certain threshold, the FHMM model is generated by adding additional chain(s) to the current representation. The resulting FHMM has a higher discriminative ability and can distinguish between more similar motions.
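The placement rule above can be sketched as a small decision function. The dictionary layout and the function name are assumptions for this example; returning <code>None</code> stands for "keep the motion in the parent cluster".

```python
def place_observation(child_distances, child_d_max, k_max_gd):
    """Pick the closest child node whose threshold admits the new motion.

    child_distances maps a child name to the distance D_s between the new
    motion and that child's group model; child_d_max maps a child name to
    its D_max^G. Both mappings are hypothetical containers for this sketch.
    """
    best = None
    for name, dist in child_distances.items():
        threshold = k_max_gd * child_d_max[name]  # D_thresh for this node
        if dist < threshold and (best is None or dist < child_distances[best]):
            best = name
    return best
```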

===Clustering and New Group Formation===

When a new motion is added to a group, clustering is invoked within the modified group to find any possible subgroups. Subgroups are formed from collections of motions that are more similar to each other than the level of similarity found in the group as a whole.

The complete-link hierarchical clustering algorithm is used for within-group clustering. Clusters are formed using two criteria: a minimum number of elements and a maximum distance measure. The maximum distance measure is based on the average intra-cluster distance.

<center><math> D_{Cutoff} = \mu - K_{Cutoff} \sigma </math></center>

Only clusters in which the maximum distance is less than <math>D_{Cutoff}</math> are formed. <math>\mu</math> is the average intra-cluster distance.
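A minimal sketch of complete-link clustering with this cutoff is given below. It assumes that <math>\sigma</math> is the standard deviation of the pairwise distances within the group (the summary does not define it) and that <code>dist</code> is a symmetric matrix of <math>D_s</math> values; the function name and return format are illustrative.

```python
import statistics


def complete_link_subgroups(dist, k_cutoff, min_size):
    """Return (subgroups, cutoff) for the motions indexed 0..n-1 in dist."""
    n = len(dist)
    if n < 2:
        return [], 0.0
    pairwise = [dist[i][j] for i in range(n) for j in range(i + 1, n)]
    mu = statistics.mean(pairwise)
    sigma = statistics.pstdev(pairwise)  # assumed meaning of sigma
    cutoff = mu - k_cutoff * sigma

    def link(a, b):
        # Complete link: distance between the two farthest members.
        return max(dist[i][j] for i in a for j in b)

    clusters = [[i] for i in range(n)]
    while len(clusters) > 1:
        d, ia, ib = min(
            (link(a, b), ia, ib)
            for ia, a in enumerate(clusters)
            for ib, b in enumerate(clusters)
            if ia < ib
        )
        if d >= cutoff:
            break  # no remaining merge keeps the maximum distance under the cutoff
        clusters[ia] = clusters[ia] + clusters[ib]
        del clusters[ib]
    # Keep only clusters that satisfy the minimum-size criterion.
    return [sorted(c) for c in clusters if len(c) >= min_size], cutoff
```

With complete linkage, the merge distance equals the largest pairwise distance inside the merged cluster, so stopping at the cutoff enforces the maximum-distance criterion.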

=== New Behavior Instantiation===

If a new cluster was formed in the previous step, a new behavior is modeled for it using all the members of that cluster. The structure of the probabilistic model (HMM or FHMM) is determined by the maximum intra-observation distance, <math>D_{max}^G</math>, in the new subgroup. If the members of the cluster are becoming increasingly similar to each other and more accurate discrimination is needed, additional HMM chains are added and the resulting FHMM is trained sequentially, as described in the following section.

=== Sequential training of FHMM ===

As described above, when a new motion is observed, it is first encoded in a single HMM using an EM algorithm (Baum-Welch). Additional chains are trained on the error between the true data and the motion generated by the scaled sum of the preceding chains.

<center><math>e_i^n = \frac{1}{W}\left(y_i^n-\sum_{m=0}^{M-1}WC_m\right)</math></center>

where <math>e_i^n</math> is the residual error for a set of N time series sequences, <math>y_i^n</math> is the true data, <math>\frac{1}{W}</math> is the weight applied to each chain, <math>M</math> is the new number of chains, and <math>C_m</math> is the contribution of each previously trained chain <math>m</math>. The paper proposes three methods to approximate the contribution of a chain: the Gamma, Viterbi, and generated methods. Given the resulting training data, the new chain is trained with the Baum-Welch algorithm.
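The residual computation can be sketched for a single one-dimensional sequence as follows. Treating the subscript on the error as a time-step index is an assumption of this example, and each chain's contribution is taken as an already computed list of values (for instance from one of the approximation methods named above).

```python
def residual_sequence(y, chain_outputs, w):
    """Training data for the next chain.

    y is the observed sequence, chain_outputs holds one contribution
    sequence per previously trained chain (same length as y), and w is W.
    """
    return [
        (y[t] - sum(w * c[t] for c in chain_outputs)) / w
        for t in range(len(y))
    ]
```

The returned sequence would then be used as the observation data when fitting the additional chain with Baum-Welch.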

Following training, for the forward algorithm (recognition), the covariance at each state combination is computed as:

<center><math>Cov = \sum_{i=0}^{M}W^2Cov_j^{(i)}</math></center>

where <math>Cov</math> is the resulting covariance and <math>Cov_j^{(i)}</math> is the covariance at state <math>j</math> of chain <math>i</math>. The developed algorithm is fast and suitable for online acquisition, recognition and generation of motions. It assumes independence of the chains given the data.
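For diagonal covariances, the combination above reduces to a per-dimension weighted sum. The sketch below assumes each chain supplies the variance vector of its currently active state; the function name is illustrative.

```python
def combined_variance(chain_variances, w):
    """Sum W^2 times the per-chain diagonal covariance, dimension by dimension.

    chain_variances[i] is the variance vector of the active state of chain i.
    """
    k = len(chain_variances[0])
    return [sum(w * w * v[d] for v in chain_variances) for d in range(k)]
```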

===Deterministic Motion Generation===

The constructed group models are used to generate a desired motion. First, the expected state durations for all the states in the trained left-to-right HMM are computed using:

<center><math>\bar{d_i^m}=\frac{1}{1-a_{ii}^m}</math></center>

where <math>\bar{d_i^m}</math> is the expected state duration and <math>a_{ii}^m</math> is the self-transition probability for state <math>i</math> in chain <math>m</math>. Then, the means of the individual Gaussians used to model the output distribution associated with each hidden state are used to reconstruct the movement, following the order of the state sequence <math>s_1, s_2, ..., s_{N_h}</math>, where <math>N_h</math> is the total number of states. Once the state sequence has been computed for each chain, the desired motion sequence is calculated by summing the contribution from each chain at each time step, based on that chain’s current state value.
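The generation procedure can be sketched for scalar outputs as follows. Rounding durations to whole time steps, holding an absorbing final state until the end of the sequence, and summing the chains without extra weighting are all assumptions made for this example.

```python
def expected_state_sequence(A, length):
    """State index per time step, using expected durations 1 / (1 - a_ii)."""
    seq = []
    for i in range(len(A)):
        stay = A[i][i]
        # An absorbing state (a_ii = 1) is held until the end (assumption).
        steps = length if stay >= 1.0 else max(1, round(1.0 / (1.0 - stay)))
        seq.extend([i] * steps)
    seq = seq[:length]
    # Pad with the last state if the durations do not fill the sequence.
    seq.extend([len(A) - 1] * (length - len(seq)))
    return seq


def generate_motion(chains, length):
    """Sum the state means of every chain at each time step.

    chains is a list of (A, means) pairs with one scalar mean per state.
    """
    out = [0.0] * length
    for A, means in chains:
        for t, s in enumerate(expected_state_sequence(A, length)):
            out[t] += means[s]
    return out
```

The result is piecewise constant, which is why the smoothing step described in the text is applied afterwards.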

Alternatively, if it is desired to generate a motion that closely resembles a specific motion observation in the group, the Viterbi algorithm can be used to generate a motion that inherits the characteristics of both the group model and a specific observation <ref name="R3">Lee, D., Nakamura, Y.: Mimesis from partial observations. In: Proceedings of the International Conference on Intelligent Robots and Systems, pp. 1911–1916 (2005)</ref>.

The resulting state trajectory needs to be smoothed to eliminate discontinuities during state transitions. For this purpose, a low-pass filter is applied to the generated movement trajectory.
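The summary does not say which low-pass filter is used. As one simple possibility, a centered moving average smooths the steps between state means; the filter choice and the <code>window</code> parameter are assumptions of this sketch.

```python
def moving_average(signal, window):
    """Centered moving average; the window shrinks at the sequence edges."""
    half = window // 2
    out = []
    for t in range(len(signal)):
        lo = max(0, t - half)
        hi = min(len(signal), t + half + 1)
        out.append(sum(signal[lo:hi]) / (hi - lo))
    return out
```

A wider window removes more of the step discontinuity but also blurs fine motion detail, so the window length trades smoothness against fidelity.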

===Experiments and Sum-ups===

The paper presents the results of a couple of experiments that show the efficacy of the proposed approach for motion primitive acquisition, recognition and generation. The first set of experiments compares the recognition and generation performance of HMMs and FHMMs, and confirms the validity of using HMMs and FHMMs selectively. The second set of experiments tests the incremental clustering and organization algorithm. The experiments show that the proposed sequential learning achieves results comparable to the exact training algorithm, while significantly reducing the computation time and allowing the existing model knowledge to be reused. The proposed incremental clustering and organization also provides an efficient tool for storing and retrieving motion primitives learned during the robot's interaction with the environment and its observation of a human partner.

==References==

<references />

Latest revision as of 09:46, 30 August 2017

This paper presents a novel approach for incremental learning of motion primitives by observation of human motion. This algorithm aims at online incremental learning of human motion patterns with applications to humanoids and other robotic agents. The algorithm automatically abstracts the human motions into a dynamic stochastic model, which is used for subsequent recognition as well as motion generation. The motion modeling is performed as the motions are perceived from a human demonstrator. The size and structure of the HMM models are also learned by the algorithm. This incremental learning of the motion primitives will construct a tree structure representing a hierarchy of known motions. The resulting tree structure is dependent on the history of observations of the robot, with the most specialized (leaf) nodes occurring in those regions of the motion space where the most examples have been observed. The motion primitives are learned using HMM or factorial HMM and the generative hierarchical model is built based on a similarity measure between the learned HMM models for the observed motions. Each node in the resulting tree represents a motion primitive and can be used for motion recognition as well as generation. The algorithm can be used for activity recognition during human-robot interaction, activity monitoring in rehabilitation and sport training, and motion generation on different autonomous structures.

Factorial HMM

In the case of HMM, the dynamic of motion is modeled with a single discrete state variable, which varies according to a transition matrix A[N,N] ( N is the number of hidden states). Each state is associated with a continuous output distribution model. The probability of observing an output o at a hidden state q is modeled by a multivariate Gaussian of size K, where K is the number of outputs. Mixture of Gaussians are commonly used to model the output distribution. This distribution is mixture of Bernoulli and Gaussian distributions, where the Bernoulli distribution selects one of the two existing Gaussian distributions and the outcome is provided by that Gaussian distribution. HMMs are commonly used for encoding sequential observations that exhibit some phase and amplitude variations between different exemplars of the observations. HMMs are attractive as they can be used for both recognition and generation. Efficient algorithms exist for estimating the model parameters (e.g., the Baum-Welch algorithm, an expectation-maximization algorithm), evaluating the likelihood that a new observation sequence was generated from an HMM model (e.g., forward algorithm), and estimating the most probable state sequence given an observation sequence(Viterbi algorithm).

FHMM is an extension of HMM in which multiple independent dynamic processes are coupled together to generate an observation sequence. Each layer is a separate HMM model with transition matrix [math]\displaystyle{ A_m[N_m,N_m] }[/math] and [math]\displaystyle{ N_m }[/math] observation probabilities modelled as multivariate Gaussian distributions of size K, where subscript m represents the HMM model in layer m. Each state in the individual HMM models have [math]\displaystyle{ K }[/math] associated outputs. Observation at each time instance depends on the current state in each layer and is estimated by combining the observations of the individual HMM models through an expectation function for generating the output of the system. The parallel independent dynamic processes can be seen as latent features with a Markov chain dynamics. The expectation function is a multivariate Gaussian function with the chain output as the means, and a covariance matrix representing the signal noise. Figure 1 shows schematics of a factorial HMM model with two layers of left-to-right HMMs in which {q1, q2, …, qn} and {p1, p2, …, pn} represent hidden states of the HMMs in layer 1 and layer 2, respectively, each with a total number of n states. FHMM observation sequence is represented by {y1, y2, …, yn}.

There are a number of ways to combine the information from the layers in order to compute the probability of the observation, i.e [math]\displaystyle{ \, P(Y_t|Q_t) }[/math], where Y is the observation at time t and Q is the state of all the layers at time t. One way to combine them was proposed in the original paper that introduced the Factorial HMM. In this method the observations are assumed to be distributed according to a Gaussian distribution; the mean and the covariance of the distribution is a linear combination of the means of all the layers states. <ref> Logan, et.al , Factorial Hidden Markov Models for Speech Recognition: Preliminary Experiment,1997 </ref>

In human motion analysis, it is shown that FHMM improves recognition abilities between similar motions and also it is found better in generating exemplars of the encoded movements when compared with movement generation using conventional HMM. Baum-Welch EM algorithm has been extended to estimate the parameters of FHMM <ref name = "R1">Ghahramani, Z., Jordan, M.I.: Factorial hidden markov models. Machine Learning 29, 245–273 (1997)</ref>. However, the resulting EM algorithm is computationally expensive [math]\displaystyle{ O(TmN^{m+1}) }[/math]. As can be seen, the time complexity increases exponentially with increase in a single number of chains and hence, the E-step of the algorithm becomes intractable. Approximate approaches are proposed with quadratic time complexity in number of chains. These alternative algorithms implement an approximation of the E-step in FHMM training <ref name ="R2">Jacobs, R.A., Jiang, W., Tanner, M.A.: Factorial hidden markov models and the generalized back-fitting algorithm. Neural Computation 14, 2415–2437 (2002)</ref>, <ref name ="R1">Ghahramani, Z., Jordan, M.I.: Factorial hidden markov models. Machine Learning 29, 245–273 (1997)</ref>. Although these approximate approaches reduce the time complexity of FHMM training, they are still not suitable for online learning of motion primitives as they train all the chains simultaneously. This complexity also results in inefficient recognition using trained FHMMs as compared to single HMMs. In this paper a novel approach for sequential trialing of FHMMs is proposed. The new approach is developed based on the idea that a single HMM would be sufficient when the movement is the knowledge space are very dissimilar and hence easy to discriminate and a more complex model in the form of additional chains are added only when movements are very similar and hard to distinguish.

The proposed sequential training starts with training a single HMM first and then adds extra chains as needed. The subsequent HMMs are trained on the error between the training data and the output of the trained HMMs.

Human Motion Pattern Representation Using FHMMs

In this approach, each human motion is initially encoded in a single left-to-right HMM (non-periodic motions)[math]\displaystyle{ \lambda(\pi,A,B) }[/math], where [math]\displaystyle{ \pi }[/math] is a vector of initial state probabilities (priors). Therefore, the vector of prior carries a value of 1 for the first state and zero for the rest. In this model, the underlying hidden state sequence consists of either transitions to the successive state or to the current state (i.e., no return to previous state). The observation probability distribution is modeled as a Gaussian or a mixture of Gaussians with diagonal covariance matrix considered for simplicity.

There is a tradeoff in size of these single HMMs. A small-size HMM would generalize better is recognition tasks when the movements are very dissimilar, while a larger HMM would be better in cases of similar indistinguishable movements. However, a model with low-number of states will perform poorly in generation tasks. Movement generation using small HMM model is likely to compromise the fine details of the movements. Adding more states to the HMM model will be enhance the motion reproduction but the resulting HMM will be more prone to overfitting. Sequential FHMMs is introduced here to overcome the recognition and generation limitation of single HMM models.

Incremental Behavior Learning and Hierarchy Formation

In a continuous learning-from-demonstration scenario, the robot observes motions and must decide whether an observed motion is a known motion primitive or whether a new motion primitive should be learned. Furthermore, over the lifetime of the robot, the number of learned motion primitives grows large, and there needs to be an effective way of storing, retrieving and arranging them. The paper proposes a hierarchical tree structure for storing the motions learned through repeated observation of similar motion segments. In this tree structure, each node stores a group of similar observed motion segments and a group model encoding (synthesizing) that motion type. These group models can be used to recognize a similar motion and to generate a similar motion for the robot. The size of each model is adjusted based on the accuracy required in that region of the knowledge database (if there are many motions similar to the modeled motion, a higher number of chains is used to encode that motion).

The algorithm starts with a single motion (the root node). Each time a new motion is observed from the demonstrator, it is encoded into an HMM. The encoded motion is compared to the existing group models via a tree search algorithm, using a symmetric distance measure between models based on the Kullback–Leibler distance (Equation 1), and is placed into the closest group. The same similarity measure can be applied to FHMM group models for the purpose of comparison. In the case of an FHMM, the log-likelihood is computed using a modified version of the forward algorithm, which benefits from the independence between the dynamic chains in an FHMM <ref name ="R1">Ghahramani, Z., Jordan, M.I.: Factorial hidden Markov models. Machine Learning 29, 245–273 (1997)</ref>. Each time a group receives a new member, the group is passed to a hierarchical agglomerative clustering algorithm, which searches for child groups with sufficiently similar members. A motion model for each newly formed subgroup is built using the motion exemplars in that subgroup. Hence, the algorithm incrementally learns and arranges the motion primitives in a tree structure. An overview of the clustering algorithm is shown in Figure 2.

In Equation 1, [math]\displaystyle{ O^{(2)} }[/math] represents the observation sequence generated with the newly learned model [math]\displaystyle{ \lambda_2 }[/math], and [math]\displaystyle{ \lambda_1 }[/math] is an existing group model.
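As an illustration of a log-likelihood-based model distance of this kind, the sketch below scores an observation sequence under two HMMs with the scaled forward algorithm and averages the two directed distances. The sign convention, the normalisation by sequence length, the restriction to one-dimensional single-Gaussian outputs, and all function names are assumptions made for this example; Equation 1 of the paper may differ in detail.

```python
import math


def gaussian_pdf(x, mean, var):
    """Density of a 1-D Gaussian."""
    return math.exp(-0.5 * (x - mean) ** 2 / var) / math.sqrt(2 * math.pi * var)


def log_likelihood(obs, model):
    """Scaled forward algorithm: log P(obs | model) for a 1-D Gaussian HMM.

    model is a tuple (prior, A, means, variances). Observations far from
    every state mean can underflow; this sketch does not guard against that.
    """
    prior, A, means, variances = model
    n = len(prior)
    alpha = [prior[i] * gaussian_pdf(obs[0], means[i], variances[i]) for i in range(n)]
    log_p = 0.0
    for t in range(len(obs)):
        if t > 0:
            alpha = [
                sum(alpha[i] * A[i][j] for i in range(n))
                * gaussian_pdf(obs[t], means[j], variances[j])
                for j in range(n)
            ]
        scale = sum(alpha)
        log_p += math.log(scale)
        alpha = [a / scale for a in alpha]  # rescale to avoid underflow
    return log_p


def directed_distance(obs_2, model_1, model_2):
    """How much worse model_1 explains data generated by model_2 (per frame)."""
    return (log_likelihood(obs_2, model_2) - log_likelihood(obs_2, model_1)) / len(obs_2)


def symmetric_distance(obs_1, model_1, obs_2, model_2):
    """Average of the two directed distances."""
    return 0.5 * (
        directed_distance(obs_2, model_1, model_2)
        + directed_distance(obs_1, model_2, model_1)
    )
```

Here `obs_1` and `obs_2` stand for sequences generated by (or used to train) `model_1` and `model_2` respectively; comparing a model with itself gives a distance of zero.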

The comparison between the newly observed motion and the learned group models is done at the leaves of each node in the tree. If the distance to a child node is sufficiently small, the new motion recurses to the most similar child node. Otherwise, the motion segment is added to the parent node.

[math]\displaystyle{ D_{thresh} }[/math] is the distance threshold at which a new observation is considered for inclusion in a node, [math]\displaystyle{ K_{maxGD} }[/math] is the multiplication factor applied, and [math]\displaystyle{ D_{max}^G }[/math] is the maximum intra-observation distance measured for a given node. If the computed distance between the newly observed motion and the existing models is not smaller than the threshold, the new motion is placed in the parent cluster. The maximum intra-observation distance for a node, [math]\displaystyle{ D_{max}^G }[/math], is also the criterion used to decide the level of model complexity required for the motion sequence. If the new motion is most similar to a node whose [math]\displaystyle{ D_{max}^G }[/math] falls below a certain threshold, an FHMM model is generated by adding additional chain(s) to the current representation. The resulting FHMM is better able to distinguish between more similar motions.
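The tree descent described above can be sketched as follows, assuming the threshold is the product [math]\displaystyle{ D_{thresh} = K_{maxGD} D_{max}^G }[/math] evaluated at the closest child. The dictionary layout of a node (`name`, `d_max`, `children`), the function name `place_motion`, and the `distance_to` callback are hypothetical names introduced for this example only.

```python
def place_motion(root, distance_to, k_max_gd):
    """Return the node in which a newly observed motion should be stored.

    root        -- tree node: {"name": str, "d_max": float, "children": list}
    distance_to -- callable giving the distance from the new motion to a
                   node's group model (e.g. a symmetric model distance)
    k_max_gd    -- multiplication factor applied to the node's d_max
    """
    node = root
    while node["children"]:
        closest = min(node["children"], key=distance_to)
        d_thresh = k_max_gd * closest["d_max"]
        if distance_to(closest) >= d_thresh:
            break  # not similar enough: the motion stays in the parent
        node = closest  # similar enough: recurse into the closest child
    return node


# A tiny hand-built tree for illustration.
slow_walk = {"name": "slow_walk", "d_max": 0.5, "children": []}
walk = {"name": "walk", "d_max": 2.0, "children": [slow_walk]}
kick = {"name": "kick", "d_max": 1.0, "children": []}
root = {"name": "root", "d_max": 10.0, "children": [walk, kick]}
```

With distances of 1.0 to `walk`, 5.0 to `kick` and 3.0 to `slow_walk`, and `k_max_gd = 1.5`, the motion descends into `walk` (1.0 is below 3.0) but not into `slow_walk` (3.0 is not below 0.75).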

Clustering and New Group Formation

When a new motion is added to a group, clustering is invoked within the modified group to find any possible subgroups. A subgroup is a collection of motions that are more similar to each other than the level of similarity found in the group as a whole. The complete-link hierarchical clustering algorithm is used for within-group clustering. Clustering is controlled by two criteria: a minimum number of elements and a maximum distance measure. The maximum distance measure is based on the average intra-cluster distance.

Only clusters in which the maximum distance is less than [math]\displaystyle{ D_{Cutoff} }[/math] are formed. [math]\displaystyle{ \mu }[/math] is the average intra-cluster distance.
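A minimal sketch of complete-link clustering with a distance cutoff and a minimum cluster size is given below. The cutoff rule used here, the mean pairwise distance minus `k_cutoff` standard deviations, is an assumption made for illustration; the summary only states that the cutoff is based on the average intra-cluster distance [math]\displaystyle{ \mu }[/math]. The function name and parameters are likewise hypothetical.

```python
from itertools import combinations
from statistics import mean, pstdev


def complete_link_subgroups(dist, k_cutoff=1.0, min_size=2):
    """Find subgroups of a group from its pairwise distance matrix.

    dist is a symmetric matrix (list of lists) of distances between the
    motions in one group. Returns (subgroups, d_cutoff).
    """
    n = len(dist)
    pair_d = [dist[i][j] for i, j in combinations(range(n), 2)]
    # Assumed cutoff: mean pairwise distance minus k_cutoff std deviations.
    d_cutoff = mean(pair_d) - k_cutoff * pstdev(pair_d)

    def link(a, b):
        # Complete link: distance between the two farthest members.
        return max(dist[i][j] for i in a for j in b)

    clusters = [[i] for i in range(n)]
    while len(clusters) > 1:
        a, b = min(
            combinations(range(len(clusters)), 2),
            key=lambda p: link(clusters[p[0]], clusters[p[1]]),
        )
        if link(clusters[a], clusters[b]) >= d_cutoff:
            break  # merging would exceed the allowed maximum distance
        merged = clusters[a] + clusters[b]
        clusters = [c for k, c in enumerate(clusters) if k not in (a, b)] + [merged]
    subgroups = [sorted(c) for c in clusters if len(c) >= min_size]
    return subgroups, d_cutoff


# Five motions placed on a line; the first three are close together.
points = [0.0, 0.1, 0.2, 5.0, 10.0]
dist = [[abs(p - q) for q in points] for p in points]
subgroups, d_cutoff = complete_link_subgroups(dist)
```

In this toy example only the three tightly packed motions form a subgroup; the two outliers remain as singletons and are discarded by the minimum-size criterion.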

New Behavior Instantiation

If a new cluster was formed in the previous step, a new behavior is modeled for it using all the members of that cluster. The structure of the probabilistic model (HMM or FHMM) is determined by the maximum intra-observation distance, [math]\displaystyle{ D_{max}^G }[/math], in the new subgroup. If the members of the cluster are becoming increasingly similar to each other and more accurate discrimination is needed, additional HMM chains are added and the resulting FHMM model is trained sequentially, as described in the next section.

Sequential training of FHMM

As described above, when a new motion is observed, it is encoded in a single HMM trained with an EM algorithm (Baum-Welch). Additional chains are trained on the error between the true data and the motion generated by the scaled sum of the preceding chains.

where [math]\displaystyle{ e_i^n }[/math] is the residual error for a set of N time series sequences, [math]\displaystyle{ y_i^n }[/math] is the true data, [math]\displaystyle{ \frac{1}{W} }[/math] is the weight applied to each chain, [math]\displaystyle{ M }[/math] is the new number of chains, and [math]\displaystyle{ C_i }[/math] is the contribution of each previously trained chain [math]\displaystyle{ i }[/math]. The paper proposes three methods to approximate the contribution of each chain: the Gamma, Viterbi, and generated methods. Given this residual as training data, the new chain is trained with the Baum-Welch algorithm. Following training, the covariance at each state combination, needed by the forward algorithm (recognition), is computed as:

where [math]\displaystyle{ Cov }[/math] is the resulting covariance and [math]\displaystyle{ Cov_j^{(i)} }[/math] is the covariance at state [math]\displaystyle{ j }[/math] of chain [math]\displaystyle{ i }[/math]. The resulting algorithm is fast and suitable for online acquisition, recognition and generation of motions. It assumes that the chains are independent given the data.
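The idea of training each additional chain on what the earlier chains fail to explain can be illustrated with a short sketch. It assumes that the FHMM output is a weighted sum of chain contributions with a common weight `w`, and that the training target for the new chain is the remaining error rescaled by that weight. The function name, the argument layout and this exact scaling are assumptions for the example, not the paper's definition.

```python
def residual_targets(y, contributions, w):
    """Training data for a new chain, given the chains trained so far.

    y             -- observed 1-D sequence (list of floats)
    contributions -- one sequence per previously trained chain, each the
                     same length as y (e.g. state means along a decoded path)
    w             -- hypothetical common weight applied to every chain
    """
    for c in contributions:
        if len(c) != len(y):
            raise ValueError("every contribution must match the length of y")
    # Error left after the weighted sum of existing chains, rescaled so
    # that w * (existing chains + new chain) can reproduce y.
    return [
        (y_t - w * sum(c[t] for c in contributions)) / w
        for t, y_t in enumerate(y)
    ]


# Two-chain example: one chain already trained, weight 1/2 per chain.
y = [2.0, 4.0, 6.0]
first_chain = [2.0, 4.0, 6.0]
targets = residual_targets(y, [first_chain], w=0.5)
```

If the new chain reproduced `targets` exactly, the weighted sum `w * (first_chain[t] + targets[t])` would recover `y[t]` at every time step.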

Deterministic Motion Generation

The constructed group models are used to generate a desired motion. First, the expected state durations for all the states in the trained left-to-right HMM are computed using:

where [math]\displaystyle{ \bar{d_i^m} }[/math] is the expected state duration and [math]\displaystyle{ a_{ii}^m }[/math] is the self-transition probability for state [math]\displaystyle{ i }[/math] in chain [math]\displaystyle{ m }[/math]. Then, the means of the individual Gaussians used to model the output distribution associated with each hidden state are used to reconstruct the movement, following the order of the state sequence [math]\displaystyle{ s_1, s_2, ..., s_{N_h} }[/math], where [math]\displaystyle{ N_h }[/math] is the total number of states. Once the state sequence has been computed for each chain, the desired motion sequence is calculated by summing the contribution from each chain at each time step, based on that chain’s current state value. Alternatively, if it is desired to generate a motion that closely resembles a specific motion observation in the group, the Viterbi algorithm can be used to generate a motion that inherits the characteristics of both the group model and the specific observation <ref name="R3">Lee, D., Nakamura, Y.: Mimesis from partial observations. In: Proceedings of the International Conference on Intelligent Robots and Systems, pp. 1911–1916 (2005)</ref>. The resulting trajectory needs to be smoothed to eliminate discontinuities at state transitions. For this purpose, a low-pass filter is applied to the generated movement trajectory.
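The generation steps above can be sketched for a single chain with one-dimensional output. The expected duration uses the standard result for a geometrically distributed dwell time, [math]\displaystyle{ 1/(1 - a_{ii}) }[/math]; rounding durations to whole frames and using a centred moving average as the low-pass filter are simplifying assumptions made here, and the function names are hypothetical.

```python
def expected_durations(self_transitions):
    """Expected number of frames spent in each state: 1 / (1 - a_ii)."""
    if any(a >= 1.0 for a in self_transitions):
        raise ValueError("self-transition probabilities must be below 1")
    return [1.0 / (1.0 - a) for a in self_transitions]


def generate_trajectory(self_transitions, means):
    """Hold each state's mean for its (rounded) expected duration, in order."""
    trajectory = []
    for d, mu in zip(expected_durations(self_transitions), means):
        trajectory.extend([mu] * max(1, round(d)))
    return trajectory


def moving_average(values, window=3):
    """Simple low-pass filter: centred moving average, shrinking at the edges."""
    half = window // 2
    smoothed = []
    for t in range(len(values)):
        lo, hi = max(0, t - half), min(len(values), t + half + 1)
        smoothed.append(sum(values[lo:hi]) / (hi - lo))
    return smoothed


# Three-state left-to-right chain with illustrative parameters.
raw = generate_trajectory([0.75, 0.5, 0.8], [0.0, 1.0, 3.0])
smooth = moving_average(raw)
```

For an FHMM, one such trajectory would be generated per chain and the per-frame contributions summed before smoothing, as described in the text.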

Experiments and Sum-ups

The paper presents the results of two sets of experiments that show the efficacy of the proposed approach for motion primitive acquisition, recognition and generation. The first set of experiments compares the recognition and generation performance of HMMs and FHMMs and confirms the validity of using HMMs and FHMMs selectively. The second set of experiments tests the incremental clustering and organization algorithm. The experiments show that the proposed sequential learning achieves results comparable to the exact training algorithm, while significantly reducing computation time and allowing existing model knowledge to be reused. The proposed incremental clustering and organization also provides an efficient tool for storing and retrieving motion primitives learned during the robot's interaction with its environment and its observation of a human partner.

References

<references />