compressed Sensing Reconstruction via Belief Propagation: Difference between revisions

m (Conversion script moved page Compressed Sensing Reconstruction via Belief Propagation to compressed Sensing Reconstruction via Belief Propagation: Converting page titles to lowercase) |

|||

| (61 intermediate revisions by 2 users not shown) | |||

| Line 1: | Line 1: | ||

==Introduction== | ==Introduction== | ||

One of the key theorem in digital signal processing is [http://www.dynamicmeasurementsolutions.com/Articles/SV_0202lago.pdf Shannon/Nyquist] theorem. This theorem specifies the conditions on which a band limited signal can be reconstructed uniquely from its discrete samples. This property of band limited signals made signal processing viable on natural analog signals. However, in some applications even the sampled signal lays in a extremely high dimensional vector space. To make signal processing algorithms computationally tractable, many researchers work on compressiblity of signals. Here, it is assumed that most of the information content of a signal lays in a few samples with large magnitude. This lead to study and investigation on a class of signals, known as compressible signals. | |||

Compressible signals can be stored efficiently by ignoring small value coefficients. Naturally it means that during sampling procedure loosing those samples is unimportant. This lead to a natural questions: Can we sample compressible signals in a compressed way? Is there any method to sense only those large value coefficients? In parallel works by Donoho <ref name="R1"> D. Donoho, “Compressed Sensing,” in IEEE Trans. on Info. theory, vol 52, no 4,pp. 1289–1306, Apr. 2006.</ref> and Candes et. al <ref name="R2"> E. Candes, J. Romberg, J.; T. Tao, “Robust uncertainty principles: Exact signal reconstruction from highly incomplete frequency information,” in IEEE Trans. on Info. theory, vol 52, no 2,pp. 489–509, Feb. 2006.</ref> this question was answered. They showed that this procedure can be done by measuring a small number of random linear projection of the source signal. They also provided a decoding scheme for reconstructing the source signal using those measurements and provided theoretical proofs for different aspects of this topic. | |||

Tow important questions in CS theory is: 1. How many measurement do we need to be able to reconstruct a specific signal in stable and robust way? 2. What is the decoding algorithm complexity? These are vital questions which must be answered in order to make CS theory an alternative procedure for ordinary sampling. These questions have been answered by various researchers. Possibly the most important limitation of CS theory is the complexity of the current decoding algorithms. Those algorithms with strong theoretical background which can be applied to different applications are computationally expensive and can not be used for large scale problems. For this reason one of the active research topics in this field, is to develop tractable CS decoding algorithms. A recently published paper <ref name="R3"> D. Baron, S. Sarvotham, and R.G. Baraniuk, “Compressive Sensing Via Belief Propagation ,” in IEEE Trans. on Signal Processing, vol 58, no 1,pp. 269–280, Jan. 2010.</ref> tries to provide a decoding algorithms via belief propagation (BP). Authors connect CS to graphical models in an interesting approach and then use BP on the corresponding graph to provide a decoding algorithm. In the current paper summary we will follow their approach which indicates the importance of graphical models and the wide range of problems that these models can be used on. It is shown that this approach leads to a decoding algorithm with much less computational load. | |||

==Compressed Sensing== | ==Compressed Sensing== | ||

Compressive sensing is a method for sampling and representing compressible signals at below the Nyquist rate measurement rates. Let <math>\ x</math> is a <math>N \times 1</math> column vector in <math>\mathbb{R}^N</math> that only has <math>K \le N</math> non-zero entries. To measure this source signal we measure a small number of linear combinations of its elements, <math>\ M</math>, as follows | |||

<center><math>\ y=\psi x ,</math> (1)</center> | |||

where <math>\ y \in \mathbb{R}^M</math> is the vector containing the measurements and <math>\psi \in \mathbb{R}^{M \times N}</math> is a matrix that carries the coordinate basis we use in our application. The goal of CS theory is, given <math>\psi </math> and <math>\ y </math>,to recover the source signal in a stable and robust approach. | |||

It is obvious that this problem is ill-posed and infinitely many solutions satisfy (1). Since we assume that our source signal is sparse we can take advantage of this knowledge and assert sparsity as prior to limit the feasible region of solutions. Interestingly it has been proven that under this priority if the source signal is sparse enough then it can be recovered as the solution of optimization problem <ref name="R1"/> and <ref name="R2"/>: | |||

<center><math>\ \hat{x}=\textrm{argmin} ||x||_0 , \textrm{s.t.} y=\psi x</math> (2)</center> | |||

where <math>\ ||.||_0 </math> denotes <math>\ l_0</math> norm that measures the number of non-zero entries. Unfortunately <math>\ l_0 </math> optimization is NP hard and needs combinatorial enumeration and thus is intractable. Interestingly, it has been proven that if the sensing matrix satisfies restricted isometry property (RIP), then (2) can be equivalently solves as: | |||

<center><math>\ \hat{x}=\textrm{argmin} ||x||_1 , \textrm{s.t.} y=\psi x</math> (3)</center> | |||

Here we have replaced <math>\ l_0</math> norm by <math>\ l_1</math> norm which is convex and robust towards additive noise. This optimization can be solved efficiently using linear programming and reconstruction complexity is <math>\ O(N^3)</math> . It has been shown that matrices with independently identical distributed Gaussian or Bernoulli entries satisfy RIP condition and can be used effectively as sensing matrix. In such cases the linear programming decoder requires <math>\ K log(1+N/K)</math> measurements for signal reconstruction. | |||

==Compressed Sensing Reconstruction Algorithms== | ==Compressed Sensing Reconstruction Algorithms== | ||

Linear programming is the core approach for designing CS decoding algorithms. This approach is tractable with complexity of <math>\ O(N^3) </math>, however this complexity is intractable for large scale problems. For example a <math>\ 256\times 256 </math> image will need <math>\ 2^{48} </math> operations for decoding! One the other hand the encoding stage will need <math>\ O(NM) </math> computations for a dense sensing matrix. For this reason one important direction in this filed is to design and simplify the encoding and decoding algorithms. | |||

One alternative decoding approach is iterative greedy algorithm. This approach needs slightly more measurements but it decreases computational complexity significantly. Perhaps the most well know algorithms in this category are Orthogonal Matching Pursuit <ref name="R4"> J. Tropp and A. C. Gilbert, “Signal recovery from partial information via orthogonal matching pursuit,” Apr. 2005, Preprint.</ref> and Matching Pursuit (MP) <ref name="R5"> EM. F. Duarte, M. B. Wakin, and R. G. Baraniuk, “Fast reconstruction of piece-wise smooth signals from random projections ,” in Proc. SPARS05, France, Nov. 2005.</ref>. OMP requires <math>\ 2K ln(N) </math> measurements and the computational complexity is <math>\ O(KN^2) </math>. Even though it is better but it still is intractable for large <math>\ N </math> and <math>\ K </math>. More advanced versions like StOMP can recover the signal in <math>\ Nlog(N) </math> complexity. It is much faster and can be used for larger class of signals. | |||

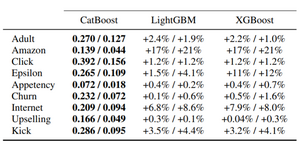

The common assumption in these approaches is that sensing matrix is assumed to be dense. Recently some researchers are trying to use sparse sensing matrix and use it to simplify the decoding algorithms. For example chaining pursuit uses this assumption and recovers the signal with complexity <math>\ K log^2(N) log^2(K)</math>. Table. 1 summaries CS schemes including their complexity as well as required measurements for each method. These results indicates that we can reduce the complexity only at expense of having more measurements. The last row indicates the algorithm of the current paper. This shows superiority of this algorithm. Later we will see that this superiority comes from using BP, though some simplifying assumption has been made and the method is not general proposed. (Click on the image for table enlargement) | |||

[[File:Table1.png|thumb|right|Table.1 CS schemes.]] | |||

==Lessons from LDPC codes applied to CS== | |||

At first glance it may seem impossible or at lease complicated to connect CS and a graphical model. The main idea for this procedure comes from the world of error control coding. Sparse error control coding schemes such as LDPC strongly suggests the use of sparse compressed sensing matrices. Can we use sparse CS matrices to encode sparse signals efficiently? Can we take advantage of the sparse structure to reduce the encoding and decoding complexity? To answer these questions we will have a brief review on LDPC code which will clarify how we can assign a graphical model to a CS problem. | |||

An LDPC code is a block code that has a sparse parity check matrix <math>\ H </math>. The parity check matrix of an LDPC code can be represented by a biparitite graph (see Fig. 1). | |||

[[File:graph111.png|thumb|right|Fig.1 CS The graph representation of a CS proble.]] | |||

There are two class of nodes in the graph. The first class represents the transmitted data and the second class represents the check bits. A check bit is resulted as the linear combination of transmitted data. An edge exists in the graph if a transmitted bit appears in linear combination which produces an arbitrary check bit. This graph representation is not a special characteristics of LDPC codes. What makes it special is that for these codes the graph is sparse.: the number of edges in the graph grows linearly with <math>\ N </math> rather than <math>\ N^2 </math>. Since the graph is sparse decoding can be done efficiently using BP algorithm on the corresponding graph. This the key idea that suggests that CS decoding algorithms may develop using BP. Though our graph can have cycles but experiments show that even for cyclic graphs BP provides an acceptable approximation of the real answer. | |||

===Connecting CS decoding to graph decoding algorithms=== | |||

Similar to error codes, a sensing matrix can be represented by a biparitie graph.The neighbors of a given measurement node is the set of coefficient nodes that are used to generate that measurement. In order to follow the LDPC scheme we need to choose sensing matrix to be sparse. As the first step in using graphical models in CS we assume the sensing matrix to be very simple: we assume the entries are only belonging to the set <math>\ \{0,1,-1\} </math>. Hence the encoding procedure is very simple and only involves summation and difference. This assumption make the corresponding graph to be sparse and hence we can use message passing algorithms for inference (note message passing for a general graph, possibly not sparse, is NP hard problem). We assume that each row of <math>\ \psi </math> contains exactly <math>\ L </math> non zero elements. This number is chosen based on properties of the source signal. | |||

====Encoding complexity==== | |||

If the row weight of <math>\ \psi </math> is equal to <math>\ L </math> and we have <math>\ M </math> measurements then the encoding complexity will be <math>\ O(ML) </math>. Choosing <math>\ M=Nlog(N)/L </math> experimental seems to be appropriate and thus <math>\ O(ML)= O(Nlog(N)) </math> | |||

==CS-LDPC decoding of sparse signals== | ==CS-LDPC decoding of sparse signals== | ||

In this section we focus on approximately sparse signals. Similar to other inference problems which use BP we need to know probability distributions. Here we will assume a prior on source signal and then use it in BP procedure. | |||

=====Source model===== | |||

Since we want to use graphical model techniques it is necessary to assert a prior on the source signal. Here we will model the source signal by mixture of Gaussians model. This model is simple and captures prior knowledge about the source sparsity. | |||

= | Let <math>\ X=[X(1),...,X(N)] </math> be a random vector in <math>\mathbb{R}^N</math> and <math>\ x=[x(1),...,x(N)] </math> be an instance of <math>\ X</math>. An approximately sparse signal has a few large magnitude coefficients and a lot of small magnitude coefficients. One can assume that we have two states for coefficients. One state is "high" which corresponds to large magnitude coefficients and the other state is "low" which corresponds to small magnitude coefficients. We denote the state of the j'th coefficient by <math>\ q(j)</math>, where it is either equal to 1 (high) or 0 (low). The vector <math>\ q=[q(1),...,q(N)</math> which contains these state variables determines the source state configuration. We assume that this vector is an outcome of the state random variable <math>\ Q=[Q(1),...,Q(N)] </math> which is simply assumed to have multinomial distribution. For each <math>\ x(j) </math> we associate for the "high" state an outcome of a high variance Gaussian distribution and for the "low" state an outcome of a low variance Gaussian distribution to model the coefficients. Thus: | ||

= | <center><math>\ X(j)|Q(j)=1 \approx N(0,\sigma_1^2) \textrm{and} X(j)|Q(j)=0 \approx N(0,\sigma_0^2)</math> (4)</center> | ||

==== | We assume that coefficients are distributed independently. To have about <math>\ K</math> large magnitude coefficients we choose the Bernoulli parameter of the <math>\ Q</math> equal to <math>\ S=K/N</math>. Fig. 2 illustrates the mixture of Gaussian model PDF and Fig. 3 is an outcome of such a distribution for <math>\ N=50, K=5, \sigma_1^2=30, \sigma_0^2=1</math>. | ||

[[File:fig111.png|thumb|right|Fig.2 The mixture of Gaussian model PDF .]] | |||

[[File:fig112.png|thumb|right|Fig.3 sample outcome of the mixture of Gaussian model.]] | |||

====Approximate solution to CS statistical inference via message passing==== | =====Decoding via statistical inference===== | ||

The framework that was set transforms the original CS problem to a Bayesian inference problem. As we have seen in the course we can used message passing BP algorithm to solve these problems over factor graphs. | |||

As we know (1) is undetermined linear system and assuming <math>\ \phi</math> to be full rank the solution lie in a hyperplane of dimension <math>\ N-M</math>. We use MAP and MMSE estimations to for the CS reconstruction. Note that MMSE and MAP estimates are identical for multivariate Gaussian rv's but we need both of them to derive the solution. | |||

<center><math>\ \hat{x}_{MMSE}= argmin_{x'}E(X-x') \textrm{s.t}|: y=\phi x'</math> (5)</center> | |||

<center><math>\ \hat{x}_{MAP}= argmin_{x'}P(X=x') \textrm{s.t} :y=\phi x'</math> (6)</center> | |||

First we derive the exact MMSE estimate of the source in our framework. Unfortunately it has exponential complexity and is intractable for large scale systems. Due to sparse structure assumption of <math>\phi</math> we can use message passing scheme to estimate <math>\ \phi</math> approximately but in a computationally robust and stable approach. | |||

=====Exact solution to CS statistical inference===== | |||

To obtain MAP and MMSE estimates first we will assume <math>\ q </math> is known and will derive the MMSE estimate given <math>\ q </math>. Later we use this estimate to derive MMSE estimate. Let covariance matrix of <math>\ X </math> conditioned on <math>\ q </math> is denoted by <math>\ \Sigma_q</math>. It is a diagonal matrix with the j'th diagonal entry equal to <math>\ \sigma_0^2 </math> if the state is low and <math>\ \sigma_1^2 </math> if the state is high. | |||

======Theorem 1====== | |||

Given <math>\ y=\psi x </math>, then the MMSE and the MAP estimate of <math>\ x </math> conditioned on knowing the state vector is given: | |||

<center><math>\ \hat{x}_{MMSE,q}=\hat{x}_{MAP,q}=\Sigma_q \Phi^T (\Phi\Sigma_q \Phi^T)^{-1}y </math> (7)</center> | |||

Proof: If <math>\ q </math> is known then we have: | |||

<center><math>\ P[X=x|Q=q]=\frac{1}{(2\pi)^{N/2}det(\Sigma_q)^{1/2}} e^{-\frac{1}{2}x^T\Sigma_q^{-1}x} </math> (8)</center> | |||

We do change of variable <math>\ \theta=\Sigma_q^{-1/2} x' </math>. Then we have <math>\ \theta_{MAP}=argmin_{\theta}{\theta^T\theta} </math> such that <math>\ y=\Phi \Sigma_q^{1/2}\theta </math>. This is a simple least square problem and the solution can be computed using pseudo inverse: <math>\ |theta_{MAP}=\Sigma_q^{1/2} \Phi^T (\Phi\Sigma_q \Phi^T)^{-1}y </math>. Inverse change of the variable gives the result. Finally as mentioned MMSE and MAP estimates are the same for multivariate Gaussian random variables. | |||

Now we use this conditional solution to result in the general solution. | |||

======Theorem 2====== | |||

The MMSE estimate of <math>\ x </math> when the state configuration is unknown is given by | |||

<center><math>\ \hat{x}_{MMSE}=\Sigma_{q\in[0,1]^N}P[Y=y|Q=q]P[Q=q]\hat{x,q}_{MMSE} </math> (8)</center> | |||

The expected value of MMSE error obeys the equalities: | |||

<center><math>\ \hat{x}_{MMSE}=E[X|Y=y]=\Sigma_{q\in[0,1]^N}E[X,Q=q|Y=y]=\Sigma_{q\in[0,1]^N}P[Q=q|Y=y]E[X|Q=q,Y=y] </math> | |||

<math>\ =\Sigma_{q\in[0,1]^N}P[Q=q|Y=y]\hat{x}_{MMSE,q}=\Sigma_{q\in[0,1]^N}P[Y=y|Q=q]P[Q=q]\hat{x}_{MMSE,q} </math></center> | |||

which completes the proof. | |||

Since the summation is done on all outcomes of <math>\ q </math>, it is intractable for large <math>\ N </math>. However sparse structure of sensing matrix makes it possible to use message passing algorithms such as BP to approximate the solution. It is tried to use a message passing approach to compute the approximate marginals for <math>\ [X(j)|Y] </math> and thus evaluate the MMSE and MAP estimates for each coefficient. | |||

=====Approximate solution to CS statistical inference via message passing===== | |||

We employ BP over the model factor graph. The factor graph shown in Fig. 4 captures the relationship between the signal coefficients, their states, and the observed CS measurements. We have two types of nodes: variable nodes (black) and constraint nodes (white). All edge connects a variable node to a constraint node. We have three types of variables: states <math>\ Q(j) </math>, coefficients <math>\ X(j) </math> and the measurements <math>\ Y(i) </math> and three types of constraint nodes: prior constraint nodes which impose Bernoulli prior on state variables, constraint nodes which connects the state variables and the coefficient variables which impose the conditional distribution of the coefficient value given the state and the third type connect one measurements to the corresponding coefficients. We employ BP to infer the probability distributions of the coefficient and state variables conditioned on state variables, i.e. <math>\ P[X(j)|Y=y] </math>,<math>\ P[Q(j)|Y=y] </math>. Then we can derive MAP/MMSE estimate for each coefficients. We review some interesting details: | |||

1. We have only two types of message passing:multiplication of beliefs at the variable nodes and convolution at the constraint node. | |||

2. We propose two strategies to encode the beliefs: | |||

* Approximating the distribution by a mixture of Gaussian with a given number of components and we use the parameters of the mixture of the Gaussian as the messages. | |||

*We sample PDF's and use the samples as the messages. | |||

[[File:cor.png|thumb|right|Fig.4 Factor graph for a CS reconstruction problem.]] | |||

=====Decoding complexity===== | |||

Consider the sampled PDF approach first. Let each message be a vector of <math>\ p </math> samples. At a variable node we multiply <math>\ R+1 </math> vectors of length <math>\ p </math> so for one iteration we have <math>\ O(NRp) </math> complexity. At a constraint node we perform convolution in DFT domain so for each node the computational complexity is <math>\ O(Lp+Lplog(p))=O(Lplog(p)) </math> and overall <math>\ O(MLplog(p)) </math> for <math>\ M </math> nodes. For <math>\ log(N) </math> iterations the overall complexity is <math>\ O(MLplog(p)log(N)) </math>. As a rule of thumb we chose <math>\ p=\sigma_1/\sigma_0 </math> and hence the complexity can be expressed as <math>\ O(ML\frac{\sigma_1}{\sigma_0}log(\frac{\sigma_1}{\sigma_0}))=O(Npolylog(N)) </math>. | |||

In the second scenario let <math>\ m </math> be the maximum model order that we deal with. The complexity per iteration for each coefficient nose is <math>\ O(m^2R^2) </math> and <math>\ O(Nm^2R^2) </math> for <math>\ N </math> nodes. Similarly the complexity for <math>\ M</math> constraint nodes is given by <math>\ O(m^2L^2M)</math>. Thus the over all complexity for <math>\ log(N)</math> iterations is: <math>\ O(m^2LM(R+L)log(N))</math>. Assuming <math>\ L=O(N/K)</math>, <math>\ R=O(log(N/K))</math>, <math>\ M=O(Klog(N/K))</math>, the overall complexity is <math>\ O(m^2log(1/S)log(N)(N/S))</math>. | |||

==Numerical results== | ==Numerical results== | ||

The results are taken from the reference paper. The performance is studied for several level of sparsity and <math>\ \sigma_1/\sigma_0 </math> ratios. Fig. 5 shows results for this purpose (<math>\ N=6000, \sigma_1/\sigma_0=30 </math> ). Note as <math>\ L </math> is increased we require less measurements to obtain the same accuracy. | |||

[[File:res.png|thumb|right|Fig.5 Reconstruction Results.]] | |||

==Conclusions== | |||

In this paper BP was employed successfully for problem of CS reconstruction. Though some assumptions are made which limits the algorithm in practice but it lead to promising results. The proposed method is much faster than previous methods and requires less measurements. One good research direction for future is to try to propose non parametric algorithms for CS problem. | |||

==Donoho's compressed sensing== | |||

In the introduction, the work proposed earlier by Donoho was referred to. Here we try to give an overview of such work. The model supposes the following, assuming <math>x</math> is a signal that requires <math>m</math> samples to be reconstructed, and that it is known a priori that <math>x</math> is compressible by transform coding with a known transform, and it is possible to acquire data of <math>x</math> by measuring <math>n</math> general linear functions, rather than the ordinary pixels. If the collection of linear functions is well chosen, and a certain degree of reconstruction error is allowed, the size of <math>n</math> can be massively smaller than <math>m</math>. Therefore, certain natural classes of signals or images with <math>m</math> pixels need only <math>n = O(m^{\frac{1}{4}}log^{\frac{2}{5}}(m))</math> non-adaptive non-pixel samples for faithful recovery, as opposed to the ordinary <math>m</math>-pixel samples. | |||

= | Donoho supposes that the object has a sparse representation in some orthonormal basis, i.e. the coefficients belong to an <math>l^p</math> ball for <math>0 < p \leq 1</math>. This implies that the <math>N</math> most important coefficients in the expansion allow a reconstruction with <math>l^2</math> error <math>O(N^{\frac{1}{2}-\frac{1}{p}})</math>. It is possible to design <math>n=O(N*log(m))</math> non-adaptive measurements which contain the information necessary to reconstruct any such object with accuracy comparable to the accuracy which would be possible if the <math>N</math> most important coefficients of that object were directly observable. In addition, a good approximation to those <math>N</math> important coefficients may be extracted from the <math>n</math> measurements by solving a suitable linear program called Basis Pursuit. The non-adaptive measurements own the character of random linear combinations of basis elements. | ||

Experiments showed that most subspaces are near-optimal, and that convex optimization can be used for processing information derived from such near-optimal subspaces. | |||

==References== | ==References== | ||

<references /> | <references /> | ||

Latest revision as of 09:45, 30 August 2017

Introduction

One of the key theorem in digital signal processing is Shannon/Nyquist theorem. This theorem specifies the conditions on which a band limited signal can be reconstructed uniquely from its discrete samples. This property of band limited signals made signal processing viable on natural analog signals. However, in some applications even the sampled signal lays in a extremely high dimensional vector space. To make signal processing algorithms computationally tractable, many researchers work on compressiblity of signals. Here, it is assumed that most of the information content of a signal lays in a few samples with large magnitude. This lead to study and investigation on a class of signals, known as compressible signals.

Compressible signals can be stored efficiently by ignoring small value coefficients. Naturally it means that during sampling procedure loosing those samples is unimportant. This lead to a natural questions: Can we sample compressible signals in a compressed way? Is there any method to sense only those large value coefficients? In parallel works by Donoho <ref name="R1"> D. Donoho, “Compressed Sensing,” in IEEE Trans. on Info. theory, vol 52, no 4,pp. 1289–1306, Apr. 2006.</ref> and Candes et. al <ref name="R2"> E. Candes, J. Romberg, J.; T. Tao, “Robust uncertainty principles: Exact signal reconstruction from highly incomplete frequency information,” in IEEE Trans. on Info. theory, vol 52, no 2,pp. 489–509, Feb. 2006.</ref> this question was answered. They showed that this procedure can be done by measuring a small number of random linear projection of the source signal. They also provided a decoding scheme for reconstructing the source signal using those measurements and provided theoretical proofs for different aspects of this topic.

Tow important questions in CS theory is: 1. How many measurement do we need to be able to reconstruct a specific signal in stable and robust way? 2. What is the decoding algorithm complexity? These are vital questions which must be answered in order to make CS theory an alternative procedure for ordinary sampling. These questions have been answered by various researchers. Possibly the most important limitation of CS theory is the complexity of the current decoding algorithms. Those algorithms with strong theoretical background which can be applied to different applications are computationally expensive and can not be used for large scale problems. For this reason one of the active research topics in this field, is to develop tractable CS decoding algorithms. A recently published paper <ref name="R3"> D. Baron, S. Sarvotham, and R.G. Baraniuk, “Compressive Sensing Via Belief Propagation ,” in IEEE Trans. on Signal Processing, vol 58, no 1,pp. 269–280, Jan. 2010.</ref> tries to provide a decoding algorithms via belief propagation (BP). Authors connect CS to graphical models in an interesting approach and then use BP on the corresponding graph to provide a decoding algorithm. In the current paper summary we will follow their approach which indicates the importance of graphical models and the wide range of problems that these models can be used on. It is shown that this approach leads to a decoding algorithm with much less computational load.

Compressed Sensing

Compressive sensing is a method for sampling and representing compressible signals at below the Nyquist rate measurement rates. Let [math]\displaystyle{ \ x }[/math] is a [math]\displaystyle{ N \times 1 }[/math] column vector in [math]\displaystyle{ \mathbb{R}^N }[/math] that only has [math]\displaystyle{ K \le N }[/math] non-zero entries. To measure this source signal we measure a small number of linear combinations of its elements, [math]\displaystyle{ \ M }[/math], as follows

where [math]\displaystyle{ \ y \in \mathbb{R}^M }[/math] is the vector containing the measurements and [math]\displaystyle{ \psi \in \mathbb{R}^{M \times N} }[/math] is a matrix that carries the coordinate basis we use in our application. The goal of CS theory is, given [math]\displaystyle{ \psi }[/math] and [math]\displaystyle{ \ y }[/math],to recover the source signal in a stable and robust approach.

It is obvious that this problem is ill-posed and infinitely many solutions satisfy (1). Since we assume that our source signal is sparse we can take advantage of this knowledge and assert sparsity as prior to limit the feasible region of solutions. Interestingly it has been proven that under this priority if the source signal is sparse enough then it can be recovered as the solution of optimization problem <ref name="R1"/> and <ref name="R2"/>:

where [math]\displaystyle{ \ ||.||_0 }[/math] denotes [math]\displaystyle{ \ l_0 }[/math] norm that measures the number of non-zero entries. Unfortunately [math]\displaystyle{ \ l_0 }[/math] optimization is NP hard and needs combinatorial enumeration and thus is intractable. Interestingly, it has been proven that if the sensing matrix satisfies restricted isometry property (RIP), then (2) can be equivalently solves as:

Here we have replaced [math]\displaystyle{ \ l_0 }[/math] norm by [math]\displaystyle{ \ l_1 }[/math] norm which is convex and robust towards additive noise. This optimization can be solved efficiently using linear programming and reconstruction complexity is [math]\displaystyle{ \ O(N^3) }[/math] . It has been shown that matrices with independently identical distributed Gaussian or Bernoulli entries satisfy RIP condition and can be used effectively as sensing matrix. In such cases the linear programming decoder requires [math]\displaystyle{ \ K log(1+N/K) }[/math] measurements for signal reconstruction.

Compressed Sensing Reconstruction Algorithms

Linear programming is the core approach for designing CS decoding algorithms. This approach is tractable with complexity of [math]\displaystyle{ \ O(N^3) }[/math], however this complexity is intractable for large scale problems. For example a [math]\displaystyle{ \ 256\times 256 }[/math] image will need [math]\displaystyle{ \ 2^{48} }[/math] operations for decoding! One the other hand the encoding stage will need [math]\displaystyle{ \ O(NM) }[/math] computations for a dense sensing matrix. For this reason one important direction in this filed is to design and simplify the encoding and decoding algorithms.

One alternative decoding approach is iterative greedy algorithm. This approach needs slightly more measurements but it decreases computational complexity significantly. Perhaps the most well know algorithms in this category are Orthogonal Matching Pursuit <ref name="R4"> J. Tropp and A. C. Gilbert, “Signal recovery from partial information via orthogonal matching pursuit,” Apr. 2005, Preprint.</ref> and Matching Pursuit (MP) <ref name="R5"> EM. F. Duarte, M. B. Wakin, and R. G. Baraniuk, “Fast reconstruction of piece-wise smooth signals from random projections ,” in Proc. SPARS05, France, Nov. 2005.</ref>. OMP requires [math]\displaystyle{ \ 2K ln(N) }[/math] measurements and the computational complexity is [math]\displaystyle{ \ O(KN^2) }[/math]. Even though it is better but it still is intractable for large [math]\displaystyle{ \ N }[/math] and [math]\displaystyle{ \ K }[/math]. More advanced versions like StOMP can recover the signal in [math]\displaystyle{ \ Nlog(N) }[/math] complexity. It is much faster and can be used for larger class of signals.

The common assumption in these approaches is that sensing matrix is assumed to be dense. Recently some researchers are trying to use sparse sensing matrix and use it to simplify the decoding algorithms. For example chaining pursuit uses this assumption and recovers the signal with complexity [math]\displaystyle{ \ K log^2(N) log^2(K) }[/math]. Table. 1 summaries CS schemes including their complexity as well as required measurements for each method. These results indicates that we can reduce the complexity only at expense of having more measurements. The last row indicates the algorithm of the current paper. This shows superiority of this algorithm. Later we will see that this superiority comes from using BP, though some simplifying assumption has been made and the method is not general proposed. (Click on the image for table enlargement)

Lessons from LDPC codes applied to CS

At first glance it may seem impossible or at lease complicated to connect CS and a graphical model. The main idea for this procedure comes from the world of error control coding. Sparse error control coding schemes such as LDPC strongly suggests the use of sparse compressed sensing matrices. Can we use sparse CS matrices to encode sparse signals efficiently? Can we take advantage of the sparse structure to reduce the encoding and decoding complexity? To answer these questions we will have a brief review on LDPC code which will clarify how we can assign a graphical model to a CS problem.

An LDPC code is a block code that has a sparse parity check matrix [math]\displaystyle{ \ H }[/math]. The parity check matrix of an LDPC code can be represented by a biparitite graph (see Fig. 1).

There are two class of nodes in the graph. The first class represents the transmitted data and the second class represents the check bits. A check bit is resulted as the linear combination of transmitted data. An edge exists in the graph if a transmitted bit appears in linear combination which produces an arbitrary check bit. This graph representation is not a special characteristics of LDPC codes. What makes it special is that for these codes the graph is sparse.: the number of edges in the graph grows linearly with [math]\displaystyle{ \ N }[/math] rather than [math]\displaystyle{ \ N^2 }[/math]. Since the graph is sparse decoding can be done efficiently using BP algorithm on the corresponding graph. This the key idea that suggests that CS decoding algorithms may develop using BP. Though our graph can have cycles but experiments show that even for cyclic graphs BP provides an acceptable approximation of the real answer.

Connecting CS decoding to graph decoding algorithms

Similar to error codes, a sensing matrix can be represented by a biparitie graph.The neighbors of a given measurement node is the set of coefficient nodes that are used to generate that measurement. In order to follow the LDPC scheme we need to choose sensing matrix to be sparse. As the first step in using graphical models in CS we assume the sensing matrix to be very simple: we assume the entries are only belonging to the set [math]\displaystyle{ \ \{0,1,-1\} }[/math]. Hence the encoding procedure is very simple and only involves summation and difference. This assumption make the corresponding graph to be sparse and hence we can use message passing algorithms for inference (note message passing for a general graph, possibly not sparse, is NP hard problem). We assume that each row of [math]\displaystyle{ \ \psi }[/math] contains exactly [math]\displaystyle{ \ L }[/math] non zero elements. This number is chosen based on properties of the source signal.

Encoding complexity

If the row weight of [math]\displaystyle{ \ \psi }[/math] is equal to [math]\displaystyle{ \ L }[/math] and we have [math]\displaystyle{ \ M }[/math] measurements then the encoding complexity will be [math]\displaystyle{ \ O(ML) }[/math]. Choosing [math]\displaystyle{ \ M=Nlog(N)/L }[/math] experimental seems to be appropriate and thus [math]\displaystyle{ \ O(ML)= O(Nlog(N)) }[/math]

CS-LDPC decoding of sparse signals

In this section we focus on approximately sparse signals. Similar to other inference problems which use BP we need to know probability distributions. Here we will assume a prior on source signal and then use it in BP procedure.

Source model

Since we want to use graphical model techniques it is necessary to assert a prior on the source signal. Here we will model the source signal by mixture of Gaussians model. This model is simple and captures prior knowledge about the source sparsity.

Let [math]\displaystyle{ \ X=[X(1),...,X(N)] }[/math] be a random vector in [math]\displaystyle{ \mathbb{R}^N }[/math] and [math]\displaystyle{ \ x=[x(1),...,x(N)] }[/math] be an instance of [math]\displaystyle{ \ X }[/math]. An approximately sparse signal has a few large magnitude coefficients and a lot of small magnitude coefficients. One can assume that we have two states for coefficients. One state is "high" which corresponds to large magnitude coefficients and the other state is "low" which corresponds to small magnitude coefficients. We denote the state of the j'th coefficient by [math]\displaystyle{ \ q(j) }[/math], where it is either equal to 1 (high) or 0 (low). The vector [math]\displaystyle{ \ q=[q(1),...,q(N) }[/math] which contains these state variables determines the source state configuration. We assume that this vector is an outcome of the state random variable [math]\displaystyle{ \ Q=[Q(1),...,Q(N)] }[/math] which is simply assumed to have multinomial distribution. For each [math]\displaystyle{ \ x(j) }[/math] we associate for the "high" state an outcome of a high variance Gaussian distribution and for the "low" state an outcome of a low variance Gaussian distribution to model the coefficients. Thus:

We assume that coefficients are distributed independently. To have about [math]\displaystyle{ \ K }[/math] large magnitude coefficients we choose the Bernoulli parameter of the [math]\displaystyle{ \ Q }[/math] equal to [math]\displaystyle{ \ S=K/N }[/math]. Fig. 2 illustrates the mixture of Gaussian model PDF and Fig. 3 is an outcome of such a distribution for [math]\displaystyle{ \ N=50, K=5, \sigma_1^2=30, \sigma_0^2=1 }[/math].

Decoding via statistical inference

The framework that was set transforms the original CS problem to a Bayesian inference problem. As we have seen in the course we can used message passing BP algorithm to solve these problems over factor graphs.

As we know (1) is undetermined linear system and assuming [math]\displaystyle{ \ \phi }[/math] to be full rank the solution lie in a hyperplane of dimension [math]\displaystyle{ \ N-M }[/math]. We use MAP and MMSE estimations to for the CS reconstruction. Note that MMSE and MAP estimates are identical for multivariate Gaussian rv's but we need both of them to derive the solution.

First we derive the exact MMSE estimate of the source in our framework. Unfortunately it has exponential complexity and is intractable for large scale systems. Due to sparse structure assumption of [math]\displaystyle{ \phi }[/math] we can use message passing scheme to estimate [math]\displaystyle{ \ \phi }[/math] approximately but in a computationally robust and stable approach.

Exact solution to CS statistical inference

To obtain MAP and MMSE estimates first we will assume [math]\displaystyle{ \ q }[/math] is known and will derive the MMSE estimate given [math]\displaystyle{ \ q }[/math]. Later we use this estimate to derive MMSE estimate. Let covariance matrix of [math]\displaystyle{ \ X }[/math] conditioned on [math]\displaystyle{ \ q }[/math] is denoted by [math]\displaystyle{ \ \Sigma_q }[/math]. It is a diagonal matrix with the j'th diagonal entry equal to [math]\displaystyle{ \ \sigma_0^2 }[/math] if the state is low and [math]\displaystyle{ \ \sigma_1^2 }[/math] if the state is high.

Theorem 1

Given [math]\displaystyle{ \ y=\psi x }[/math], then the MMSE and the MAP estimate of [math]\displaystyle{ \ x }[/math] conditioned on knowing the state vector is given:

Proof: If [math]\displaystyle{ \ q }[/math] is known then we have:

We do change of variable [math]\displaystyle{ \ \theta=\Sigma_q^{-1/2} x' }[/math]. Then we have [math]\displaystyle{ \ \theta_{MAP}=argmin_{\theta}{\theta^T\theta} }[/math] such that [math]\displaystyle{ \ y=\Phi \Sigma_q^{1/2}\theta }[/math]. This is a simple least square problem and the solution can be computed using pseudo inverse: [math]\displaystyle{ \ |theta_{MAP}=\Sigma_q^{1/2} \Phi^T (\Phi\Sigma_q \Phi^T)^{-1}y }[/math]. Inverse change of the variable gives the result. Finally as mentioned MMSE and MAP estimates are the same for multivariate Gaussian random variables.

Now we use this conditional solution to result in the general solution.

Theorem 2

The MMSE estimate of [math]\displaystyle{ \ x }[/math] when the state configuration is unknown is given by

The expected value of MMSE error obeys the equalities:

which completes the proof.

Since the summation is done on all outcomes of [math]\displaystyle{ \ q }[/math], it is intractable for large [math]\displaystyle{ \ N }[/math]. However sparse structure of sensing matrix makes it possible to use message passing algorithms such as BP to approximate the solution. It is tried to use a message passing approach to compute the approximate marginals for [math]\displaystyle{ \ [X(j)|Y] }[/math] and thus evaluate the MMSE and MAP estimates for each coefficient.

Approximate solution to CS statistical inference via message passing

We employ BP over the model factor graph. The factor graph shown in Fig. 4 captures the relationship between the signal coefficients, their states, and the observed CS measurements. We have two types of nodes: variable nodes (black) and constraint nodes (white). All edge connects a variable node to a constraint node. We have three types of variables: states [math]\displaystyle{ \ Q(j) }[/math], coefficients [math]\displaystyle{ \ X(j) }[/math] and the measurements [math]\displaystyle{ \ Y(i) }[/math] and three types of constraint nodes: prior constraint nodes which impose Bernoulli prior on state variables, constraint nodes which connects the state variables and the coefficient variables which impose the conditional distribution of the coefficient value given the state and the third type connect one measurements to the corresponding coefficients. We employ BP to infer the probability distributions of the coefficient and state variables conditioned on state variables, i.e. [math]\displaystyle{ \ P[X(j)|Y=y] }[/math],[math]\displaystyle{ \ P[Q(j)|Y=y] }[/math]. Then we can derive MAP/MMSE estimate for each coefficients. We review some interesting details: 1. We have only two types of message passing:multiplication of beliefs at the variable nodes and convolution at the constraint node. 2. We propose two strategies to encode the beliefs:

- Approximating the distribution by a mixture of Gaussian with a given number of components and we use the parameters of the mixture of the Gaussian as the messages.

- We sample PDF's and use the samples as the messages.

Decoding complexity

Consider the sampled PDF approach first. Let each message be a vector of [math]\displaystyle{ \ p }[/math] samples. At a variable node we multiply [math]\displaystyle{ \ R+1 }[/math] vectors of length [math]\displaystyle{ \ p }[/math] so for one iteration we have [math]\displaystyle{ \ O(NRp) }[/math] complexity. At a constraint node we perform convolution in DFT domain so for each node the computational complexity is [math]\displaystyle{ \ O(Lp+Lplog(p))=O(Lplog(p)) }[/math] and overall [math]\displaystyle{ \ O(MLplog(p)) }[/math] for [math]\displaystyle{ \ M }[/math] nodes. For [math]\displaystyle{ \ log(N) }[/math] iterations the overall complexity is [math]\displaystyle{ \ O(MLplog(p)log(N)) }[/math]. As a rule of thumb we chose [math]\displaystyle{ \ p=\sigma_1/\sigma_0 }[/math] and hence the complexity can be expressed as [math]\displaystyle{ \ O(ML\frac{\sigma_1}{\sigma_0}log(\frac{\sigma_1}{\sigma_0}))=O(Npolylog(N)) }[/math]. In the second scenario let [math]\displaystyle{ \ m }[/math] be the maximum model order that we deal with. The complexity per iteration for each coefficient nose is [math]\displaystyle{ \ O(m^2R^2) }[/math] and [math]\displaystyle{ \ O(Nm^2R^2) }[/math] for [math]\displaystyle{ \ N }[/math] nodes. Similarly the complexity for [math]\displaystyle{ \ M }[/math] constraint nodes is given by [math]\displaystyle{ \ O(m^2L^2M) }[/math]. Thus the over all complexity for [math]\displaystyle{ \ log(N) }[/math] iterations is: [math]\displaystyle{ \ O(m^2LM(R+L)log(N)) }[/math]. Assuming [math]\displaystyle{ \ L=O(N/K) }[/math], [math]\displaystyle{ \ R=O(log(N/K)) }[/math], [math]\displaystyle{ \ M=O(Klog(N/K)) }[/math], the overall complexity is [math]\displaystyle{ \ O(m^2log(1/S)log(N)(N/S)) }[/math].

Numerical results

The results are taken from the reference paper. The performance is studied for several level of sparsity and [math]\displaystyle{ \ \sigma_1/\sigma_0 }[/math] ratios. Fig. 5 shows results for this purpose ([math]\displaystyle{ \ N=6000, \sigma_1/\sigma_0=30 }[/math] ). Note as [math]\displaystyle{ \ L }[/math] is increased we require less measurements to obtain the same accuracy.

Conclusions

In this paper BP was employed successfully for problem of CS reconstruction. Though some assumptions are made which limits the algorithm in practice but it lead to promising results. The proposed method is much faster than previous methods and requires less measurements. One good research direction for future is to try to propose non parametric algorithms for CS problem.

Donoho's compressed sensing

In the introduction, the work proposed earlier by Donoho was referred to. Here we try to give an overview of such work. The model supposes the following, assuming [math]\displaystyle{ x }[/math] is a signal that requires [math]\displaystyle{ m }[/math] samples to be reconstructed, and that it is known a priori that [math]\displaystyle{ x }[/math] is compressible by transform coding with a known transform, and it is possible to acquire data of [math]\displaystyle{ x }[/math] by measuring [math]\displaystyle{ n }[/math] general linear functions, rather than the ordinary pixels. If the collection of linear functions is well chosen, and a certain degree of reconstruction error is allowed, the size of [math]\displaystyle{ n }[/math] can be massively smaller than [math]\displaystyle{ m }[/math]. Therefore, certain natural classes of signals or images with [math]\displaystyle{ m }[/math] pixels need only [math]\displaystyle{ n = O(m^{\frac{1}{4}}log^{\frac{2}{5}}(m)) }[/math] non-adaptive non-pixel samples for faithful recovery, as opposed to the ordinary [math]\displaystyle{ m }[/math]-pixel samples.

Donoho supposes that the object has a sparse representation in some orthonormal basis, i.e. the coefficients belong to an [math]\displaystyle{ l^p }[/math] ball for [math]\displaystyle{ 0 \lt p \leq 1 }[/math]. This implies that the [math]\displaystyle{ N }[/math] most important coefficients in the expansion allow a reconstruction with [math]\displaystyle{ l^2 }[/math] error [math]\displaystyle{ O(N^{\frac{1}{2}-\frac{1}{p}}) }[/math]. It is possible to design [math]\displaystyle{ n=O(N*log(m)) }[/math] non-adaptive measurements which contain the information necessary to reconstruct any such object with accuracy comparable to the accuracy which would be possible if the [math]\displaystyle{ N }[/math] most important coefficients of that object were directly observable. In addition, a good approximation to those [math]\displaystyle{ N }[/math] important coefficients may be extracted from the [math]\displaystyle{ n }[/math] measurements by solving a suitable linear program called Basis Pursuit. The non-adaptive measurements own the character of random linear combinations of basis elements.

Experiments showed that most subspaces are near-optimal, and that convex optimization can be used for processing information derived from such near-optimal subspaces.

References

<references />