stat841f11


== [[stat841f14 | Data Visualization (Stat 442 / 842, CM 762 - Fall 2014) ]] ==

== Archive ==

==[[f11Stat841proposal| Proposal for Final Project]]==

==[[f11Stat841presentation| Presentation Sign Up]]==

==[[f11Stat841EditorSignUp| Editor Sign Up]]==


== Classification (Lecture: Sep. 20, 2011) ==

===Introduction===

''Machine learning'' (ML) methodology in general is an artificial intelligence approach to establishing and training a model that recognizes the patterns or underlying mapping of a system from a set of training examples consisting of input and output patterns. Unlike classical statistics, where inference is often made from small datasets, machine learning typically involves drawing inference from amounts of data far too large to be parsed manually.

In machine learning, pattern recognition is the assignment of some sort of output value (or label) to a given input value (or instance), according to some specific algorithm. The approach of using examples to produce the output labels is known as ''learning methodology''. When an underlying function from inputs to outputs exists, it is referred to as the ''target function''. The estimate of the target function that is learned or output by the learning algorithm is known as the solution of the learning problem. In the case of classification, this function is referred to as the ''decision function''.

In the broadest sense, any method that incorporates information from training samples in the design of a classifier employs learning. Learning tasks can be classified along different dimensions. One important dimension is the distinction between supervised and unsupervised learning. In supervised learning, a category label is provided for each pattern in the training set, and the trained system then generalizes to new data samples. In unsupervised learning, on the other hand, the training data are not labeled; the system forms clusters or natural groupings of the input patterns based on some measure of similarity, and these groupings can then be used to determine the output value for new data instances.

The first category is known as ''pattern classification'' and the second one as ''clustering''. Pattern classification is the main focus of this course.

'''Classification problem formulation''': Suppose that we are given ''n'' observations. Each observation consists of a pair: a vector <math>\mathbf{x}_i\in \mathbb{R}^d, \quad i=1,...,n</math>, and the associated label <math>y_i</math>,

where <math>\mathbf{x}_i = (x_{i1}, x_{i2}, ... x_{id}) \in \mathcal{X} \subset \mathbb{R}^d</math> and <math>y_i</math> belongs to some finite set <math>\mathcal{Y}</math>.

The classification task is to find a function <math>f:\mathcal{X}\to\mathcal{Y}</math> which maps the input data points to a target value (i.e. a class label). The function <math>f(\mathbf{x},\theta)</math> is defined by a set of parameters <math>\mathbf{\theta}</math>, and the goal is to train the classifier so that, among all possible mappings with different parameters, the obtained decision boundary gives the minimum classification error.

=== Definitions ===

The '''true error rate''' for classifier <math>h</math> is the error with respect to the unknown underlying distribution when predicting a discrete random variable Y from a given input X:

<math>L(h) = P(h(X) \neq Y )</math>

The '''empirical error rate''' is the error of our classification function <math>h(x)</math> on a given dataset with known outputs (e.g. training data, test data):

<math>\hat{L}_n(h) = (1/n) \sum_{i=1}^{n} \mathbf{I}(h(X_i) \neq Y_i)</math>

where <math>h</math> is a classifier and <math>\mathbf{I}(\cdot)</math> is an indicator function, defined by

<math>\mathbf{I}(x) = \begin{cases}
1 & \text{if } x \text{ is true} \\
0 & \text{if } x \text{ is false}
\end{cases}</math>

So in this case,

<math>\mathbf{I}(h(X_i)\neq Y_i) = \begin{cases}
1 & \text{if } h(X_i)\neq Y_i \text{ (i.e. misclassification)} \\
0 & \text{if } h(X_i)=Y_i \text{ (i.e. classified properly)}
\end{cases}</math>

For example, suppose we have 100 new data points <math>X_1, ..., X_{100}</math> with known (true) labels <math>y_1, ..., y_{100}</math>. To calculate the empirical error, we count how many times our function <math>h(X)</math> classifies incorrectly (i.e., does not match <math>y</math>) and divide by <math>n=100</math>.
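The counting procedure above can be sketched in Python as follows. This is a minimal illustration rather than code from the course; the threshold classifier `h` and the five labelled points are hypothetical.

```python
def empirical_error(h, xs, ys):
    """Return (1/n) * sum of I(h(X_i) != Y_i) over a labelled dataset."""
    if len(xs) != len(ys) or not xs:
        raise ValueError("xs and ys must be non-empty and of equal length")
    mistakes = sum(1 for x, y in zip(xs, ys) if h(x) != y)  # indicator sum
    return mistakes / len(xs)


def h(x):
    """Hypothetical classifier: label 1 when the scalar input exceeds 0.5."""
    return 1 if x > 0.5 else 0


xs = [0.1, 0.4, 0.6, 0.9, 0.7]
ys = [0, 1, 1, 1, 0]
print(empirical_error(h, xs, ys))  # 2 misclassifications out of 5 -> 0.4
```

With 100 test points the same function simply divides the mistake count by 100.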


=== Bayes Classifier ===

The principle of the Bayes Classifier is to calculate the posterior probability of a given object from its prior probability via Bayes' Rule, and then assign the object to the class with the largest posterior probability<ref> http://www.wikicoursenote.com/wiki/Stat841#Bayes_Classifier </ref>.

First recall Bayes' Rule, in the format

<math>P(Y=1|X=x) = \frac{P(X=x|Y=1) P(Y=1)} {P(X=x)} = \frac{P(X=x|Y=1) P(Y=1)} {P(X=x|Y=1) P(Y=1) + P(X=x|Y=0) P(Y=0)}</math>

Bayes' rule can be approached by computing either one of the following:

1) '''The posterior''': <math>\ P(Y=1|X=x) </math> and <math>\ P(Y=0|X=x) </math>

2) '''The likelihood''': <math>\ P(X=x|Y=1) </math> and <math>\ P(X=x|Y=0) </math>

The former reflects a '''Bayesian''' approach. The Bayesian approach uses previous beliefs and observed data (e.g., the random variable <math>\ X </math>) to determine the probability distribution of the parameter of interest (e.g., the random variable <math>\ Y </math>). The probability, according to Bayesians, is a ''degree of belief'' in the parameter of interest taking on a particular value (e.g., <math>\ Y=1 </math>), given a particular observation (e.g., <math>\ X=x </math>). Historically, the difficulty in this approach lies with determining the posterior distribution. However, more recent methods such as '''Markov Chain Monte Carlo (MCMC)''' allow the Bayesian approach to be implemented <ref name="PCAustin">P. C. Austin, C. D. Naylor, and J. V. Tu, "A comparison of a Bayesian vs. a frequentist method for profiling hospital performance," ''Journal of Evaluation in Clinical Practice'', 2001</ref>.

The latter reflects a '''Frequentist''' approach. The Frequentist approach assumes that the probability distribution (including the mean, variance, etc.) is fixed for the parameter of interest (e.g., the variable <math>\ Y </math>, which is ''not'' random). The observed data (e.g., the random variable <math>\ X </math>) is simply a ''sampling'' of a far larger population of possible observations. Thus, a certain repeatability or ''frequency'' is expected in the observed data. If it were possible to make an infinite number of observations, then the true probability distribution of the parameter of interest could be found. In general, frequentists use a technique called '''hypothesis testing''' to compare a ''null hypothesis'' (e.g. an assumption that the mean of the probability distribution is <math>\ \mu_0 </math>) to an alternative hypothesis (e.g. assuming that the mean of the probability distribution is larger than <math>\ \mu_0 </math>) <ref name="PCAustin"/>. For more information on hypothesis testing see <ref>R. Levy, "Frequency hypothesis testing, and contingency tables" class notes for LING251, Department of Linguistics, University of California, 2007. Available: [http://idiom.ucsd.edu/~rlevy/lign251/fall2007/lecture_8.pdf http://idiom.ucsd.edu/~rlevy/lign251/fall2007/lecture_8.pdf] </ref>.

There was some class discussion on which approach should be used. Both the ease of computation and the validity of both approaches were discussed. A main point that was brought up in class is that Frequentists consider X to be a random variable, but they do not consider Y to be a random variable because it has to take on one of the values from a fixed set (in the above case it would be either 0 or 1, and there is only one ''correct'' label for a given value X=x). Thus, from a Frequentist's perspective it does not make sense to talk about the probability of Y. This is actually a grey area, and sometimes ''Bayesians'' and ''Frequentists'' use each other's approaches. So using ''Bayes' rule'' doesn't necessarily mean you're a ''Bayesian''. Overall, the question remains unresolved.


<math> P(Y=1|X=x) = \frac{P(X=x|Y=1) P(Y=1)} {P(X=x|Y=1) P(Y=1) + P(X=x|Y=0) P(Y=0)}</math>

P(Y=1): the prior, i.e. the probability of Y taking the chosen value

denominator: equivalent to P(X=x), obtained by summing over all values of Y; it normalizes the probability

<math>h(x) = \begin{cases}
1 & \text{if } r(x) > \frac{1}{2} \\
0 & \text{otherwise}
\end{cases}</math>

where <math>r(x) = P(Y=1|X=x)</math>.
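A minimal sketch of the two-class Bayes rule and the resulting classifier, assuming the likelihood values <math>P(X=x|Y=1)</math>, <math>P(X=x|Y=0)</math> and the prior <math>P(Y=1)</math> are already known for the point being classified. The function names and numbers are hypothetical.

```python
def posterior_class1(lik1, lik0, prior1):
    """r(x) = P(Y=1|X=x) from P(X=x|Y=1), P(X=x|Y=0) and P(Y=1) via Bayes' rule."""
    prior0 = 1.0 - prior1
    evidence = lik1 * prior1 + lik0 * prior0  # P(X=x), summed over both labels
    return lik1 * prior1 / evidence


def bayes_classify(lik1, lik0, prior1):
    """Assign label 1 when r(x) > 1/2, otherwise label 0."""
    return 1 if posterior_class1(lik1, lik0, prior1) > 0.5 else 0


# Same likelihoods, different priors: the prior can flip the decision.
print(posterior_class1(0.2, 0.6, 0.5), bayes_classify(0.2, 0.6, 0.5))
print(posterior_class1(0.2, 0.6, 0.8), bayes_classify(0.2, 0.6, 0.8))
```

With equal priors the posterior is about 0.25 (label 0); with <math>P(Y=1)=0.8</math> it rises to about 0.57 (label 1).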

'''Theorem''': The Bayes Classifier <math>h^*</math> is optimal, i.e., if <math>h</math> is any other classification rule, then <math>L(h^*) \leq L(h)</math>.

'''Proof''': Consider any classifier <math>h</math>. We can express the error rate as

::<math> P( \{h(X) \ne Y \} ) = E_{X,Y} [ \mathbf{1}_{\{h(X) \ne Y \}} ] = E_X \left[ E_Y[ \mathbf{1}_{\{h(X) \ne Y \}}| X] \right] </math>

To minimize this last expression, it suffices to minimize the inner expectation for each value of <math>X</math>. Expanding this expectation:

::<math> E_Y[ \mathbf{1}_{\{h(X) \ne Y \}}| X] = \sum_{y \in Supp(Y)} P( Y = y | X) \mathbf{1}_{\{h(X) \ne y \} } </math>

which, in the two-class case, simplifies to

::::<math> = P( Y = 0 | X) \mathbf{1}_{\{h(X) \ne 0 \} } + P( Y = 1 | X) \mathbf{1}_{\{h(X) \ne 1 \} } </math>

::::<math> = (1-r(X)) \mathbf{1}_{\{h(X) \ne 0 \} } + r(X)\mathbf{1}_{\{h(X) \ne 1 \} } </math>

where <math>r(x) = P(Y=1|X=x)</math> as above. We should choose <math>h(X)</math> to equal the label that minimizes this sum: choosing <math>h(X)=1</math> leaves the term <math>1-r(X)</math>, while choosing <math>h(X)=0</math> leaves <math>r(X)</math>. If <math>r(X)>1/2</math>, then <math>r(X)>1-r(X)</math>, so we should let <math>h(X) = 1</math>; otherwise <math>h(X)=0</math> is at least as good. This is exactly the Bayes classifier, so the Bayes classifier is the optimal classifier.
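The argument can be checked numerically on a small discrete example. The sketch below assumes a hypothetical joint distribution of (X, Y) with X taking three values, enumerates all <math>2^3</math> deterministic classifiers, and confirms that none has a lower error rate than the rule "predict 1 iff <math>r(x) > 1/2</math>".

```python
from itertools import product

# Hypothetical joint distribution P(X=x, Y=y) with X in {0, 1, 2} and Y in {0, 1}.
joint = {
    0: {0: 0.30, 1: 0.10},
    1: {0: 0.15, 1: 0.15},  # r(1) = 1/2: both labels are equally good here
    2: {0: 0.05, 1: 0.25},
}


def error_rate(rule):
    """L(h) = P(h(X) != Y) for a deterministic rule given as a dict x -> label."""
    return sum(p for x, row in joint.items() for y, p in row.items() if rule[x] != y)


def bayes_rule():
    """h*(x) = 1 iff r(x) = P(Y=1|X=x) > 1/2, i.e. iff P(X=x, Y=1) > P(X=x, Y=0)."""
    return {x: 1 if row[1] > row[0] else 0 for x, row in joint.items()}


xs = sorted(joint)
all_rules = [dict(zip(xs, labels)) for labels in product([0, 1], repeat=len(xs))]
best = min(error_rate(rule) for rule in all_rules)
print(error_rate(bayes_rule()), best)  # the two values coincide
```

Because the error decomposes over the values of X, picking the larger of <math>P(X=x,Y=0)</math> and <math>P(X=x,Y=1)</math> separately at each x is enough, which mirrors the pointwise minimization in the proof.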

Why then do we need other classification methods? Because the densities of X are typically unknown, i.e., <math>f_k(x)</math> and/or <math>\pi_k = P(Y=k)</math> are unknown.

<math>P(Y=k|X=x) = \frac{P(X=x|Y=k)P(Y=k)} {P(X=x)} = \frac{f_k(x) \pi_k} {\sum_r f_r(x) \pi_r}</math>

<math>f_k(x)</math> is referred to as the class-conditional distribution (~likelihood).

Therefore, we must rely on some data to estimate these quantities.

=== Three Main Approaches ===

'''1. Empirical Risk Minimization''':

Choose a set of classifiers H (e.g., linear classifiers, neural networks) and find <math>h^* \in H</math> that minimizes (some estimate of) the true error, L(h).

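'''2. Regression''':

Find an estimate <math>\hat{r}</math> of the function <math>r(x) = P(Y=1|X=x)</math>, and classify a point as 1 when <math>\hat{r}(x) > 1/2</math> and as 0 otherwise.

'''3. Density Estimation''':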

Estimate <math>P(X=x|Y=0)</math> from the <math>X_i</math>'s for which <math>Y_i = 0</math>

Estimate <math>P(X=x|Y=1)</math> from the <math>X_i</math>'s for which <math>Y_i = 1</math>

and let <math>\hat{P}(Y=y) = (1/n) \sum_{i=1}^{n} I(Y_i = y)</math>

Define <math>\hat{r}(x) = \hat{P}(Y=1|X=x)</math> and

<math>h(x) = \begin{cases}
1 & \text{if } \hat{r}(x) > \frac{1}{2} \\
0 & \text{otherwise}
\end{cases}</math>
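One way to carry out this density-estimation approach in one dimension is sketched below. Purely for illustration, it assumes each class-conditional density is Gaussian and estimates its mean and variance by maximum likelihood; any other density estimator could be substituted. The function names and data are hypothetical.

```python
import math


def fit_gaussian(values):
    """Maximum-likelihood mean and variance of a 1-D sample (Gaussian assumed)."""
    n = len(values)
    mu = sum(values) / n
    var = sum((v - mu) ** 2 for v in values) / n
    return mu, var


def gaussian_pdf(x, mu, var):
    return math.exp(-((x - mu) ** 2) / (2 * var)) / math.sqrt(2 * math.pi * var)


def fit_plugin_classifier(xs, ys):
    """Estimate P(X=x|Y=y) per class and P(Y=y) by label frequencies; return (h, r_hat)."""
    params, priors = {}, {}
    for label in (0, 1):
        members = [x for x, y in zip(xs, ys) if y == label]
        params[label] = fit_gaussian(members)
        priors[label] = len(members) / len(ys)  # (1/n) * sum of I(Y_i = label)

    def r_hat(x):
        """Estimated P(Y=1|X=x) via Bayes' rule with the fitted quantities."""
        num = gaussian_pdf(x, *params[1]) * priors[1]
        return num / (num + gaussian_pdf(x, *params[0]) * priors[0])

    def h(x):
        return 1 if r_hat(x) > 0.5 else 0

    return h, r_hat


xs = [0.0, 0.5, 1.0, 1.5, 3.0, 3.5, 4.0, 4.5]
ys = [0, 0, 0, 0, 1, 1, 1, 1]
h, r_hat = fit_plugin_classifier(xs, ys)
print([h(x) for x in (0.2, 2.0, 2.5, 4.0)])  # -> [0, 0, 1, 1]
```

Here both fitted classes share the same variance and prior, so the estimated boundary sits midway between the class means, at 2.25.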

There may not be enough data to use ''density estimation'' reliably, but the main problem lies with high-dimensional spaces, where the estimates may have a high error rate and estimation may even be infeasible. The term ''curse of dimensionality'' was coined by Bellman <ref>R. E. Bellman, ''Dynamic Programming''. Princeton University Press, 1957</ref> to describe this problem.

As the dimension of the space goes up, the number of data points required for learning increases exponentially.
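A rough way to see the exponential growth: if a histogram-style density estimate uses a fixed number of bins along each axis, the number of cells is bins<sup>d</sup>, and keeping a fixed average number of points per cell requires a sample size that grows at the same rate. The bin count and points-per-cell values below are arbitrary assumptions chosen for illustration.

```python
def grid_cells(d, bins_per_axis=10):
    """Number of cells in a regular grid with bins_per_axis bins along each of d axes."""
    return bins_per_axis ** d


def points_needed(d, bins_per_axis=10, points_per_cell=5):
    """Rough sample size giving points_per_cell points per cell on average."""
    return points_per_cell * grid_cells(d, bins_per_axis)


for d in (1, 2, 5, 10):
    print(d, points_needed(d))
```

Going from 1 to 10 dimensions raises the requirement from 50 points to 50 billion under these assumptions.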

To learn more about methods for handling high-dimensional data see <ref> https://docs.google.com/viewer?url=http%3A%2F%2Fwww.bios.unc.edu%2F~dzeng%2FBIOS740%2Flecture_notes.pdf</ref>

The third approach is the simplest.

=== Multi-Class Classification ===

''Theorem'': For <math>Y \in \mathcal{Y} = \{1,2,..., K\} </math>, the optimal rule is

<math>\ h^{*}(x) = \arg\max_k P(Y=k|X=x) </math>

where <math>P(Y=k|X=x) = \frac{f_k(x) \pi_k} {\sum_r f_r(x) \pi_r}</math>
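The multi-class rule can be sketched as follows, assuming (for illustration only) one-dimensional Gaussian class-conditional densities <math>f_k</math> with known means, standard deviations and priors <math>\pi_k</math>. The three-class setup is hypothetical.

```python
import math


def gaussian_pdf(x, mu, sigma):
    """1-D normal density with mean mu and standard deviation sigma."""
    return math.exp(-0.5 * ((x - mu) / sigma) ** 2) / (sigma * math.sqrt(2 * math.pi))


def posteriors(x, classes):
    """P(Y=k|X=x) = f_k(x) pi_k / sum_r f_r(x) pi_r for every class k."""
    weighted = {k: gaussian_pdf(x, mu, sigma) * pi for k, (mu, sigma, pi) in classes.items()}
    total = sum(weighted.values())
    return {k: w / total for k, w in weighted.items()}


def bayes_multiclass(x, classes):
    """h*(x) = argmax_k P(Y=k|X=x)."""
    post = posteriors(x, classes)
    return max(post, key=post.get)


# Hypothetical 3-class problem: label -> (mean, standard deviation, prior pi_k)
classes = {1: (0.0, 1.0, 0.5), 2: (3.0, 1.0, 0.3), 3: (6.0, 1.0, 0.2)}
print([bayes_multiclass(x, classes) for x in (0.2, 3.1, 6.5)])  # -> [1, 2, 3]
```

Since the denominator is shared by all classes, taking the argmax of <math>f_k(x)\pi_k</math> directly would give the same label; the normalization only matters when the posterior values themselves are needed.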

===Examples of Classification===

* Speech recognition.

* Handwriting recognition.

There are also some interesting reads on Bayes Classification:

* http://esto.nasa.gov/conferences/estc2004/papers/b8p4.pdf (NASA)

* http://www.cmla.ens-cachan.fr/fileadmin/Membres/vachier/Garcia6812.pdf (application to medical images)

* http://www.springerlink.com/content/g221vh5m6744362r/ (Journal of Medical Systems)

== LDA and QDA ==

1) Assume Gaussian distributions

<math>f_k(x) = \frac{1}{(2\pi)^{d/2} |\Sigma_k|^{1/2}} \exp\left(-\frac{1}{2}(\mathbf{x-\mu_k})^T \Sigma_k^{-1}(\mathbf{x-\mu_k}) \right)</math>

We must compare <math>f_1(x) \pi_1</math> with <math>f_0(x) \pi_0</math>.

To find the decision boundary, set

<math>f_1(x) \pi_1 = f_0(x) \pi_0 </math>

<math> \frac{1}{(2\pi)^{d/2} |\Sigma_1|^{1/2}} \exp\left(-\frac{1}{2}(\mathbf{x - \mu_1})^T \Sigma_1^{-1}(\mathbf{x-\mu_1}) \right)\pi_1 = \frac{1}{(2\pi)^{d/2} |\Sigma_0|^{1/2}} \exp\left(-\frac{1}{2}(\mathbf{x -\mu_0})^T \Sigma_0^{-1}(\mathbf{x-\mu_0}) \right)\pi_0</math>

2) Assume <math>\Sigma_1 = \Sigma_0</math>, so that we can write <math>\Sigma = \Sigma_0 = \Sigma_1</math>.

<math> \frac{1}{(2\pi)^{d/2} |\Sigma|^{1/2}} \exp\left(-\frac{1}{2}(\mathbf{x -\mu_1})^T \Sigma^{-1}(\mathbf{x-\mu_1}) \right)\pi_1 = \frac{1}{(2\pi)^{d/2} |\Sigma|^{1/2}} \exp\left(-\frac{1}{2}(\mathbf{x- \mu_0})^T \Sigma^{-1}(\mathbf{x-\mu_0}) \right)\pi_0</math>

3) Cancel <math>(2\pi)^{-d/2} |\Sigma|^{-1/2}</math> from both sides.

<math> \exp\left(-\frac{1}{2}(\mathbf{x - \mu_1})^T \Sigma^{-1}(\mathbf{x-\mu_1}) \right)\pi_1 = \exp\left(-\frac{1}{2}(\mathbf{x - \mu_0})^T \Sigma^{-1}(\mathbf{x-\mu_0}) \right)\pi_0</math>

4) Take the log of both sides.

<math> -\frac{1}{2}(\mathbf{x - \mu_1})^T \Sigma^{-1}(\mathbf{x-\mu_1}) + \text{log}(\pi_1) = -\frac{1}{2}(\mathbf{x - \mu_0})^T \Sigma^{-1}(\mathbf{x-\mu_0}) + \text{log}(\pi_0)</math>

5) Subtract the right-hand side from both sides, leaving zero on one side.

<math>-\frac{1}{2}(\mathbf{x - \mu_1})^T \Sigma^{-1} (\mathbf{x-\mu_1}) + \text{log}(\pi_1) - [-\frac{1}{2}(\mathbf{x - \mu_0})^T \Sigma^{-1} (\mathbf{x-\mu_0}) + \text{log}(\pi_0)] = 0 </math>

<math>\frac{1}{2}[-\mathbf{x}^T \Sigma^{-1}\mathbf{x} - \mathbf{\mu_1}^T \Sigma^{-1} \mathbf{\mu_1} + 2\mathbf{\mu_1}^T \Sigma^{-1} \mathbf{x} + \mathbf{x}^T \Sigma^{-1}\mathbf{x} + \mathbf{\mu_0}^T \Sigma^{-1} \mathbf{\mu_0} - 2\mathbf{\mu_0}^T \Sigma^{-1} \mathbf{x} ] + \text{log}(\frac{\pi_1}{\pi_0}) = 0 </math>

Cancelling out the terms quadratic in <math>\mathbf{x}</math> and rearranging results in

<math>\frac{1}{2}[-\mathbf{\mu_1}^T \Sigma^{-1} \mathbf{\mu_1} + \mathbf{\mu_0}^T \Sigma^{-1} \mathbf{\mu_0} + (2\mathbf{\mu_1}^T \Sigma^{-1} - 2\mathbf{\mu_0}^T \Sigma^{-1}) \mathbf{x}] + \text{log}(\frac{\pi_1}{\pi_0}) = 0 </math>
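The boundary equation above is linear in <math>\mathbf{x}</math>: it has the form <math>a^T\mathbf{x} + b = 0</math> with <math>a = \Sigma^{-1}(\mu_1 - \mu_0)</math>. The NumPy sketch below, with hypothetical parameter values, computes <math>a</math> and <math>b</math> from that equation and checks that <math>a^T\mathbf{x} + b</math> equals the log-ratio <math>\log(f_1(x)\pi_1) - \log(f_0(x)\pi_0)</math> at a test point.

```python
import numpy as np


def lda_boundary(mu0, mu1, sigma, pi0, pi1):
    """Return (a, b) such that the two-class LDA boundary is a @ x + b = 0.

    a = Sigma^-1 (mu1 - mu0) collects 1/2 (2 mu1' Sigma^-1 - 2 mu0' Sigma^-1) x,
    and b collects the constant terms (Sigma is symmetric).
    """
    sigma_inv = np.linalg.inv(sigma)
    a = sigma_inv @ (mu1 - mu0)
    b = 0.5 * (mu0 @ sigma_inv @ mu0 - mu1 @ sigma_inv @ mu1) + np.log(pi1 / pi0)
    return a, b


def log_ratio(x, mu0, mu1, sigma, pi0, pi1):
    """log(f_1(x) pi_1) - log(f_0(x) pi_0) for Gaussians sharing the covariance sigma."""
    sigma_inv = np.linalg.inv(sigma)
    q1 = (x - mu1) @ sigma_inv @ (x - mu1)
    q0 = (x - mu0) @ sigma_inv @ (x - mu0)
    return -0.5 * q1 + np.log(pi1) + 0.5 * q0 - np.log(pi0)


# Hypothetical parameters for a 2-D example.
mu0, mu1 = np.array([0.0, 0.0]), np.array([2.0, 1.0])
sigma = np.array([[2.0, 0.5], [0.5, 1.0]])
pi0, pi1 = 0.6, 0.4
a, b = lda_boundary(mu0, mu1, sigma, pi0, pi1)
x = np.array([0.3, -1.2])
print(a @ x + b, log_ratio(x, mu0, mu1, sigma, pi0, pi1))  # equal up to rounding
```

A point is assigned to class 1 when <math>a^T\mathbf{x} + b > 0</math>. The normalizing constants cancel only because both classes share <math>\Sigma</math>.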


Suppose that <math>\,Y \in \{1,\dots,K\}</math> and that <math>\,f_k(\mathbf{x}) = Pr(X=\mathbf{x}|Y=k)</math> is Gaussian. Then the Bayes Classifier is

:<math>\,h^*(\mathbf{x}) = \arg\max_{k} \delta_k(\mathbf{x})</math>

where

<math> \,\delta_k(\mathbf{x}) = - \frac{1}{2}log(|\Sigma_k|) - \frac{1}{2}(\mathbf{x}-\boldsymbol{\mu}_k)^\top\Sigma_k^{-1}(\mathbf{x}-\boldsymbol{\mu}_k) + log (\pi_k) </math>

When the Gaussian covariance matrices are equal, <math>\Sigma_k = \Sigma</math> for all <math>k</math> (i.e. LDA), the terms <math>-\frac{1}{2}log(|\Sigma|)</math> and <math>-\frac{1}{2}\mathbf{x}^\top\Sigma^{-1}\mathbf{x}</math> are the same for every class and can be dropped, so

<math> \,\delta_k(\mathbf{x}) = \mathbf{x}^\top\Sigma^{-1}\boldsymbol{\mu}_k - \frac{1}{2}\boldsymbol{\mu}_k^\top\Sigma^{-1}\boldsymbol{\mu}_k + log (\pi_k) </math>

(To compute this, we calculate the value of <math>\,\delta_k </math> for each class and then take the class with the maximum value.)
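Both discriminant functions can be sketched with NumPy as below. This is an illustrative implementation under the Gaussian assumptions above, not code from the course; `slogdet` and `solve` are used instead of an explicit inverse for numerical stability, and the example parameters are hypothetical.

```python
import numpy as np


def qda_delta(x, mu, sigma, prior):
    """delta_k(x) = -1/2 log|Sigma_k| - 1/2 (x - mu_k)' Sigma_k^-1 (x - mu_k) + log pi_k."""
    diff = x - mu
    _, logdet = np.linalg.slogdet(sigma)  # log|Sigma_k|, computed stably
    return -0.5 * logdet - 0.5 * diff @ np.linalg.solve(sigma, diff) + np.log(prior)


def lda_delta(x, mu, sigma, prior):
    """delta_k(x) = x' Sigma^-1 mu_k - 1/2 mu_k' Sigma^-1 mu_k + log pi_k (shared Sigma)."""
    sigma_inv_mu = np.linalg.solve(sigma, mu)
    return x @ sigma_inv_mu - 0.5 * mu @ sigma_inv_mu + np.log(prior)


def classify(x, params, delta):
    """h*(x) = argmax_k delta_k(x); params maps label -> (mu_k, Sigma_k, pi_k)."""
    return max(params, key=lambda k: delta(x, *params[k]))


# Hypothetical two-class example with a shared covariance matrix.
S = np.array([[1.0, 0.3], [0.3, 1.0]])
params = {0: (np.array([0.0, 0.0]), S, 0.5), 1: (np.array([3.0, 3.0]), S, 0.5)}
x_new = np.array([2.9, 3.2])
print(classify(x_new, params, qda_delta), classify(x_new, params, lda_delta))  # -> 1 1
```

With a shared covariance, `qda_delta` and `lda_delta` differ by the same class-independent amount for every class, so they always pick the same label.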


===Computation===

For QDA we need to calculate: <math> \,\delta_k(\mathbf{x}) = - \frac{1}{2}log(|\Sigma_k|) - \frac{1}{2}(\mathbf{x}-\boldsymbol{\mu}_k)^\top\Sigma_k^{-1}(\mathbf{x}-\boldsymbol{\mu}_k) + log (\pi_k) </math>

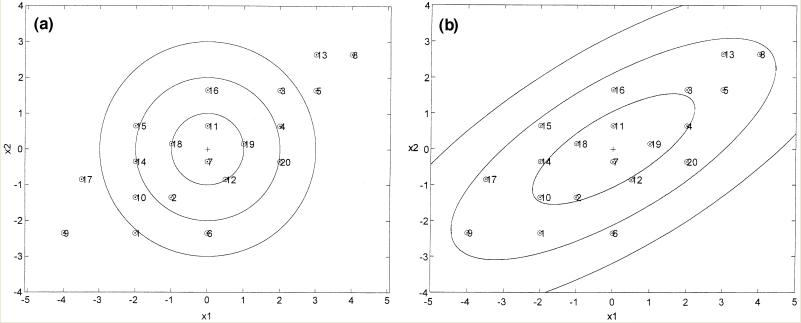

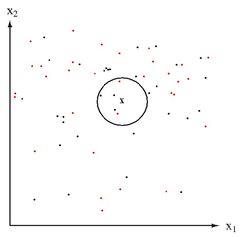

Let's first consider the case <math>\, \Sigma_k = I, \forall k </math>. Here each distribution is spherical around its mean.

We have:

<math> \,\delta_k = - \frac{1}{2}log(|I|) - \frac{1}{2}(\mathbf{x}-\boldsymbol{\mu}_k)^\top I(\mathbf{x}-\boldsymbol{\mu}_k) + log (\pi_k) </math>

but <math>\ \log(|I|)=\log(1)=0 </math>

and <math>\, (\mathbf{x}-\boldsymbol{\mu}_k)^\top I(\mathbf{x}-\boldsymbol{\mu}_k) = (\mathbf{x}-\boldsymbol{\mu}_k)^\top(\mathbf{x}-\boldsymbol{\mu}_k) </math> is the [http://en.wikipedia.org/wiki/Euclidean_distance#Squared_Euclidean_Distance squared Euclidean distance] between the two points <math>\,\mathbf{x}</math> and <math>\,\boldsymbol{\mu}_k</math>.

Thus, in this case, a new point can be classified by its distance from the center of each class, adjusted by the log prior <math>log(\pi_k)</math>.
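A minimal sketch of this special case, assuming <math>\Sigma_k = I</math> for every class: each class is scored by minus half its squared Euclidean distance plus the log prior. The two class centers, the query point and the priors are hypothetical.

```python
import numpy as np


def nearest_center_with_prior(x, means, priors):
    """Case Sigma_k = I: pick argmax_k of -1/2 ||x - mu_k||^2 + log(pi_k)."""
    scores = [-0.5 * np.sum((x - mu) ** 2) + np.log(p) for mu, p in zip(means, priors)]
    return int(np.argmax(scores))


means = [np.array([0.0, 0.0]), np.array([2.0, 0.0])]
x = np.array([1.1, 0.0])  # slightly closer to the second center
print(nearest_center_with_prior(x, means, [0.5, 0.5]))  # -> 1 (equal priors: nearest center)
print(nearest_center_with_prior(x, means, [0.8, 0.2]))  # -> 0 (the larger prior wins)
```

With equal priors this reduces to a nearest-mean classifier; unequal priors shift the boundary toward the less likely class.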


====Case 2====

When <math>\, \Sigma_k \neq I </math>

Using the [[Singular Value Decomposition(SVD) | Singular Value Decomposition (SVD)]] of <math>\, \Sigma_k</math>

:<math>\begin{align}
(\mathbf{x}-\boldsymbol{\mu}_k)^\top\Sigma_k^{-1}(\mathbf{x}-\boldsymbol{\mu}_k)&= (\mathbf{x}-\boldsymbol{\mu}_k)^\top U_kS_k^{-1}U_k^\top(\mathbf{x}-\boldsymbol{\mu}_k)\\
& = (U_k^\top \mathbf{x}-U_k^\top\boldsymbol{\mu}_k)^\top S_k^{-1}(U_k^\top \mathbf{x}-U_k^\top \boldsymbol{\mu}_k)\\
& = (U_k^\top \mathbf{x}-U_k^\top\boldsymbol{\mu}_k)^\top S_k^{-\frac{1}{2}}S_k^{-\frac{1}{2}}(U_k^\top \mathbf{x}-U_k^\top\boldsymbol{\mu}_k) \\
& = (S_k^{-\frac{1}{2}}U_k^\top \mathbf{x}-S_k^{-\frac{1}{2}}U_k^\top\boldsymbol{\mu}_k)^\top I(S_k^{-\frac{1}{2}}U_k^\top \mathbf{x}-S_k^{-\frac{1}{2}}U_k^\top \boldsymbol{\mu}_k) \\
& = (S_k^{-\frac{1}{2}}U_k^\top \mathbf{x}-S_k^{-\frac{1}{2}}U_k^\top\boldsymbol{\mu}_k)^\top(S_k^{-\frac{1}{2}}U_k^\top \mathbf{x}-S_k^{-\frac{1}{2}}U_k^\top \boldsymbol{\mu}_k)
\end{align}
</math>
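The derivation can be checked numerically. The sketch below, using a hypothetical covariance matrix, mean and query point, builds <math>W = S_k^{-1/2}U_k^\top</math> from `numpy.linalg.svd` (for a symmetric positive-definite <math>\Sigma_k</math> the left singular vectors are its eigenvectors) and confirms that the Mahalanobis form equals a squared Euclidean distance after the transformation.

```python
import numpy as np


def whitening_map(sigma):
    """Return W = S^(-1/2) U^T from the SVD sigma = U S U^T (sigma symmetric positive definite)."""
    u, s, _ = np.linalg.svd(sigma)
    return np.diag(1.0 / np.sqrt(s)) @ u.T


# Hypothetical class covariance, class mean and query point.
sigma = np.array([[4.0, 1.5], [1.5, 1.0]])
mu = np.array([1.0, -2.0])
x = np.array([2.5, 0.5])

W = whitening_map(sigma)
mahalanobis_sq = (x - mu) @ np.linalg.solve(sigma, x - mu)
euclidean_sq_after = np.sum((W @ x - W @ mu) ** 2)
print(mahalanobis_sq, euclidean_sq_after)  # equal up to rounding
```

The same <math>W</math> also maps the class covariance to the identity (<math>W\Sigma_k W^\top = I</math>), which is why the transformed class looks spherical as in Case 1.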


We can think of <math> \, S_k^{-\frac{1}{2}}U_k^\top </math> as a linear transformation that takes the points in class <math>\,k</math> and distributes them spherically around a point, as in Case 1. Thus, when we are given a new point, we can apply the modified <math>\,\delta_k</math> values to calculate <math>\ h^*(\,x)</math>. After this transformation, the quadratic term of <math>\,\delta_k</math> becomes a squared Euclidean distance (the transformed class-<math>k</math> data have identity covariance), so that

<math> \,\delta_k = - \frac{1}{2}log(|\Sigma_k|) - \frac{1}{2}[(S_k^{-\frac{1}{2}}U_k^\top \mathbf{x}-S_k^{-\frac{1}{2}}U_k^\top\boldsymbol{\mu}_k)^\top(S_k^{-\frac{1}{2}}U_k^\top \mathbf{x}-S_k^{-\frac{1}{2}}U_k^\top \boldsymbol{\mu}_k)] + log (\pi_k) </math>

and,

As can be seen from the illustration above, the Mahalanobis distance takes into account the distribution of the data points, whereas the Euclidean distance would treat the data as though it has a spherical distribution. Thus, the Mahalanobis distance applies for the more general classification in [[#Case 2 | Case 2]], whereas the Euclidean distance applies to the special case in [[#Case 1 | Case 1]] where the data distribution is assumed to be spherical.

Generally, QDA can provide a better classifier than LDA when the class covariance matrices differ, because LDA assumes that the covariance matrix is identical for every class, whereas QDA does not. However, QDA still uses a Gaussian distribution as the class-conditional distribution. In practice the data are not always Gaussian, so other class-conditional distributions may be needed as a complement.

===The Number of Parameters in LDA and QDA===

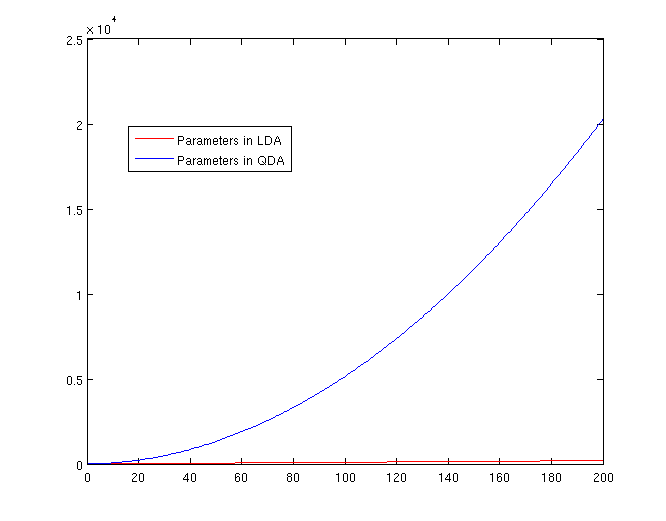

Both LDA and QDA require us to estimate some parameters. Here is a comparison of the number of parameters that must be estimated for LDA and QDA:

LDA: Since we only need to compare one given class with each of the remaining <math>\,K-1</math> classes, there are <math>\,K-1</math> differences in total. Each of them, <math>\,a^{T}x+b</math>, requires <math>\,d+1</math> parameters. Therefore, there are <math>\,(K-1)\times(d+1)</math> parameters.

QDA: For each of the differences, <math>\,x^{T}ax + b^{T}x + c</math> (with <math>\,a</math> symmetric) requires <math>\frac{1}{2}(d+1)\times d + d + 1 = \frac{d(d+3)}{2}+1</math> parameters. Therefore, there are <math>(K-1)(\frac{d(d+3)}{2}+1)</math> parameters. Thus QDA may suffer far more severely from the curse of dimensionality.

[[File:Lda-qda-parameters.png|frame|center|A plot of the number of parameters that must be estimated, in terms of (K-1). The x-axis represents the number of dimensions in the data. As the plot shows, QDA is far less robust than LDA for high-dimensional data sets.]]
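The two parameter counts can be tabulated with a few lines of Python. This is a direct transcription of the formulas above (the function names are hypothetical), counting the symmetric matrix <math>a</math> by its <math>d(d+1)/2</math> free entries.

```python
def lda_param_count(K, d):
    """(K-1) linear discriminants a'x + b, each with d + 1 parameters."""
    return (K - 1) * (d + 1)


def qda_param_count(K, d):
    """(K-1) quadratic discriminants x'ax + b'x + c with a symmetric d x d matrix a."""
    per_difference = d * (d + 1) // 2 + d + 1  # equals d(d+3)/2 + 1
    return (K - 1) * per_difference


for d in (2, 10, 100):
    print(d, lda_param_count(2, d), qda_param_count(2, d))
```

For two classes and <math>d = 100</math>, LDA needs 101 parameters while QDA needs 5151, which is the gap the plot illustrates.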

== Trick: Using LDA to do QDA ==

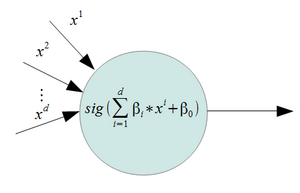

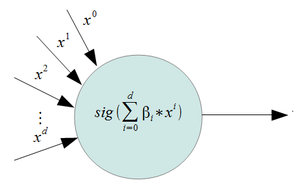

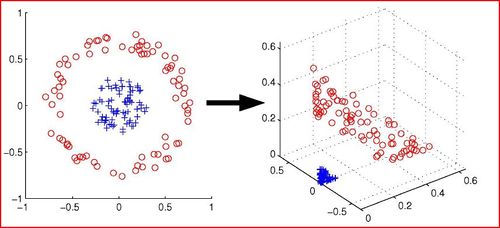

There is a trick that allows us to use the linear discriminant analysis (LDA) algorithm to generate as its output a quadratic function that can be used to classify data. This trick is similar to, but more primitive than, the [http://en.wikipedia.org/wiki/Kernel_trick Kernel trick] that will be discussed later in the course.

In this approach, the feature vector is augmented with quadratic terms (i.e. new dimensions are introduced), and the original data are mapped into this higher-dimensional space. We then apply LDA to the new higher-dimensional data.

The motivation behind this approach is to take advantage of the fact that fewer parameters have to be estimated in LDA, as explained in the previous section, and therefore to obtain a more robust system in situations where we have fewer data points.

If we look back at the equations for LDA and QDA, we see that in LDA we must estimate <math>\,\mu_1</math>, <math>\,\mu_2</math> and <math>\,\Sigma</math>. In QDA we must estimate all of those, plus another <math>\,\Sigma</math>; the extra <math>\,\frac{d(d+1)}{2}</math> estimates for this second symmetric covariance matrix make QDA less robust with fewer data points.

=== Theoretically ===

Suppose we have a quadratic function to estimate: <math>g(\mathbf{x}) = y = \mathbf{x}^T\mathbf{v}\mathbf{x} + \mathbf{w}^T\mathbf{x}</math>, where for this construction <math>\mathbf{v}</math> is a diagonal matrix with diagonal entries <math>v_1,...,v_d</math>, so that <math>\mathbf{x}^T\mathbf{v}\mathbf{x} = \sum_{j} v_j x_j^2</math>.

Using this trick, we introduce two new vectors, <math>\,\hat{\mathbf{w}}</math> and <math>\,\hat{\mathbf{x}}</math>, such that:

<math>\hat{\mathbf{w}} = [w_1,w_2,...,w_d,v_1,v_2,...,v_d]^T</math>

and

<math>\hat{\mathbf{x}} = [x_1,x_2,...,x_d,{x_1}^2,{x_2}^2,...,{x_d}^2]^T</math>

We can then apply LDA to estimate the new function: <math>\hat{g}(\mathbf{x},\mathbf{x}^2) = \hat{y} =\hat{\mathbf{w}}^T\hat{\mathbf{x}}</math>.

Note that we can do this for any <math>\, x</math> and in any dimension; we could extend a <math>D \times n</math> data matrix to a quadratic dimension by appending another <math>D \times n</math> matrix containing the element-wise squares of the original entries, to a cubic dimension with the element-wise cubes, or even with a different function altogether, such as a <math>\,sin(x)</math> dimension. Note that we are not applying QDA, but instead extending LDA to compute a non-linear boundary, which will be different from the QDA boundary. This algorithm is called nonlinear LDA.
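The trick can be sketched in NumPy as follows. This is an illustrative two-class LDA written from the formulas in the previous sections (pooled covariance, log-prior offset), not a specific library's API; the 1-D data set is hypothetical. The two classes have nearly equal means in <math>x</math>, so no threshold on <math>x</math> alone separates them, but after appending <math>x^2</math> the linear LDA boundary does.

```python
import numpy as np


def augment_with_squares(X):
    """Map each row x to [x_1..x_d, x_1^2..x_d^2], the hat-x of the text."""
    return np.hstack([X, X ** 2])


def fit_lda(X, y):
    """Two-class LDA with a pooled covariance; predict 1 when a @ x + b > 0."""
    X0, X1 = X[y == 0], X[y == 1]
    mu0, mu1 = X0.mean(axis=0), X1.mean(axis=0)
    pooled = ((X0 - mu0).T @ (X0 - mu0) + (X1 - mu1).T @ (X1 - mu1)) / len(X)
    pi0, pi1 = len(X0) / len(X), len(X1) / len(X)
    a = np.linalg.solve(pooled, mu1 - mu0)          # Sigma^-1 (mu1 - mu0)
    b = -0.5 * (mu1 + mu0) @ a + np.log(pi1 / pi0)  # constant term of the boundary
    return a, b


# Hypothetical 1-D data: class 0 near the origin, class 1 on both sides of it.
inner = np.linspace(-1.0, 1.0, 21)
outer = np.concatenate([np.linspace(-3.0, -2.0, 11), np.linspace(2.0, 3.0, 11)])
X = np.concatenate([inner, outer]).reshape(-1, 1)
y = np.concatenate([np.zeros(21, dtype=int), np.ones(22, dtype=int)])

X_hat = augment_with_squares(X)
a, b = fit_lda(X_hat, y)
pred = (X_hat @ a + b > 0).astype(int)
print((pred == y).mean())  # fraction of training points classified correctly
```

The fitted boundary is a threshold on <math>x^2</math>, i.e. a pair of points in the original space, which a single linear boundary in <math>x</math> could not produce.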

== Principal Component Analysis (PCA) (Lecture: Sep. 27, 2011) == | |||

'''Principal Component Analysis (PCA)''' is a method of dimensionality reduction/feature extraction that transforms the data from a D dimensional space into a new coordinate system of dimension d, where d <= D ( the worst case would be to have d=D). The goal is to preserve as much of the variance in the original data as possible when switching the coordinate systems. Give data on D variables, the hope is that the data points will lie mainly in a linear subspace of dimension lower than D. In practice, the data will usually not lie precisely in some lower dimensional subspace. | '''Principal Component Analysis (PCA)''' is a method of dimensionality reduction/feature extraction that transforms the data from a D dimensional space into a new coordinate system of dimension d, where d <= D ( the worst case would be to have d=D). The goal is to preserve as much of the variance in the original data as possible when switching the coordinate systems. Give data on D variables, the hope is that the data points will lie mainly in a linear subspace of dimension lower than D. In practice, the data will usually not lie precisely in some lower dimensional subspace. | ||

The new variables that form a new coordinate system are called '''principal components''' (PCs). PCs are denoted by <math>\ | The new variables that form a new coordinate system are called '''principal components''' (PCs). PCs are denoted by <math>\ \mathbf{u}_1, \mathbf{u}_2, ... , \mathbf{u}_D </math>. The principal components form a basis for the data. Since PCs are orthogonal linear transformations of the original variables there is at most D PCs. Normally, not all of the D PCs are used but rather a subset of d PCs, <math>\ \mathbf{u}_1, \mathbf{u}_2, ... , \mathbf{u}_d </math>, to approximate the space spanned by the original data points <math>\ \mathbf{x}=[x_1, x_2, ... , x_D]^T </math>. We can choose d based on what percentage of the variance of the original data we would like to maintain. | ||

The first PC, <math>\ \mathbf{u}_1 </math>, is called the '''first principal component''' and has the maximum variance; thus it accounts for the largest share of the variance in the data. The second PC, <math>\ \mathbf{u}_2 </math>, is called the '''second principal component''' and has the second highest variance, and so on, until the last PC, <math>\ \mathbf{u}_D </math>, which has the minimum variance.


Let <math>u_i = \mathbf{w}^T\mathbf{x_i}</math> be the projection of the data point <math>\mathbf{x_i}</math> onto the direction '''w''', where '''w''' has unit length.

<math>\mathbf{u} = (u_1, \ldots, u_n)^T \qquad</math>, <math>\quad\mathbf{w}^T\mathbf{w} = 1</math>

<math>Var(\mathbf{u}) = \mathbf{w}^T X (\mathbf{w}^T X)^T = \mathbf{w}^T X X^T\mathbf{w} = \mathbf{w}^T S\mathbf{w} \quad </math>

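where <math>\quad X X^T = S </math> is the sample covariance matrix (assuming the columns of <math>X</math> have been centered, and up to the normalizing factor <math>\frac{1}{n-1}</math>).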

We would like to find the <math>\ \mathbf{w} </math> that gives the maximum variance:

<math>\ \max (Var(\mathbf{w}^T \mathbf{x})) = \max (\mathbf{w}^T S \mathbf{w}) </math>

Note: we require the constraint <math>\ \mathbf{w}^T \mathbf{w} = 1 </math> because, without a constraint on the length of <math>\ \mathbf{w} </math>, the objective has no upper bound (scaling <math>\ \mathbf{w} </math> by c scales the variance by <math>c^2</math>). With the constraint, we find the direction, rather than the length, that maximizes the variance.
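As a quick numerical check of <math>Var(\mathbf{u}) = \mathbf{w}^T S\mathbf{w}</math>, here is a minimal Python sketch (standard library only). It assumes the data points (the columns of <math>X</math>) are stored as a list of tuples and are already centered, drops the normalizing factor as the derivation above does, and uses four made-up points and an arbitrary unit vector <math>\mathbf{w}</math>. The helper names are illustrative, not from the course.

```python
# Sketch: check that sum_i (w^T x_i)^2 equals w^T S w with S = X X^T.
# Assumptions: the columns of X are given as a list of 2-D points that are
# already centered, and the factor 1/(n-1) is omitted as in the derivation.

def scatter_matrix(points):
    """Return S = X X^T, where the points are the columns of X."""
    dim = len(points[0])
    return [[sum(p[r] * p[c] for p in points) for c in range(dim)]
            for r in range(dim)]

def quad_form(w, S):
    """Return w^T S w."""
    n = len(w)
    return sum(w[r] * S[r][c] * w[c] for r in range(n) for c in range(n))

points = [(2.0, 1.0), (0.0, -1.0), (-1.0, 0.5), (-1.0, -0.5)]  # mean is (0, 0)
w = (0.6, 0.8)                                                # unit length

u = [w[0] * x + w[1] * y for x, y in points]  # projections u_i = w^T x_i
direct = sum(ui * ui for ui in u)             # (w^T X)(w^T X)^T
via_S = quad_form(w, scatter_matrix(points))  # w^T S w

print(direct, via_S)  # both are 5.68 (up to rounding)
```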


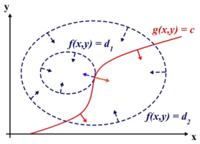

Lagrange multipliers are used to find the maximum or minimum of a function <math>\displaystyle f(x,y)</math> subject to a constraint <math>\displaystyle g(x,y)=0</math>.

We define a new variable <math> \lambda</math>, called a [http://en.wikipedia.org/wiki/Lagrange_multipliers Lagrange multiplier], and form the Lagrangian,<br /><br />

<math>\displaystyle L(x,y,\lambda) = f(x,y) - \lambda g(x,y)</math>

<br /><br />

If <math>\displaystyle (x^*,y^*)</math> maximizes <math>\displaystyle f(x,y)</math> subject to <math>\displaystyle g(x,y)=0</math> (and <math>\displaystyle \nabla g(x^*,y^*) \neq 0</math>), there exists <math>\displaystyle \lambda^*</math> such that <math>\displaystyle (x^*,y^*,\lambda^*) </math> is a stationary point of <math>\displaystyle L</math> (all partial derivatives are 0).

<br>In addition, <math>\displaystyle (x^*,y^*)</math> is a point at which the level curve of <math>\displaystyle f</math> and the constraint curve <math>\displaystyle g=0</math> touch but do not cross. At this point their tangents are parallel, or equivalently the gradients of <math>\displaystyle f</math> and <math>\displaystyle g</math> are parallel, such that:

<br /><br />


Solving the system, we obtain two stationary points: <math>\displaystyle (\sqrt{2}/2,-\sqrt{2}/2)</math> and <math>\displaystyle (-\sqrt{2}/2,\sqrt{2}/2)</math>. To determine which one is the maximum, we substitute each into <math>\displaystyle f(x,y)</math> and see which one has the larger value. In this case the maximum is at <math>\displaystyle (\sqrt{2}/2,-\sqrt{2}/2)</math>.

===Determining w===

Use the method of Lagrange multipliers to obtain:

<math>\displaystyle L(\mathbf{w}, \lambda) = \mathbf{w}^T S\mathbf{w} - \lambda (\mathbf{w}^T \mathbf{w} - 1)</math> where <math>\displaystyle \lambda </math> is the Lagrange multiplier.

Take the derivative and set it to zero:

<math>\displaystyle{\partial L \over{\partial \mathbf{w}}} = 0 </math>

To obtain:

<math>\displaystyle 2S\mathbf{w} - 2 \lambda \mathbf{w} = 0</math>

Rearrange to obtain:

<math>\displaystyle S\mathbf{w} = \lambda \mathbf{w}</math>

so <math>\displaystyle \mathbf{w}</math> is an eigenvector of <math>\displaystyle S </math> and <math>\ \lambda </math> is the corresponding eigenvalue. Since <math>\displaystyle S\mathbf{w}= \lambda \mathbf{w} </math> and <math>\displaystyle \mathbf{w}^T \mathbf{w}=1</math>, we can write

<math>\displaystyle \mathbf{w}^T S\mathbf{w}= \mathbf{w}^T\lambda \mathbf{w}= \lambda \mathbf{w}^T \mathbf{w} =\lambda </math>
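The derivation says that the maximizing <math>\mathbf{w}</math> is the eigenvector of <math>S</math> with the largest eigenvalue, and that the variance it attains is that eigenvalue. The sketch below illustrates this on a small hand-picked symmetric matrix, using power iteration (one standard way to find a dominant eigenvector; the lecture does not prescribe a method) and then evaluating <math>\mathbf{w}^T S \mathbf{w}</math>.

```python
import math

def mat_vec(S, v):
    """Return the matrix-vector product S v."""
    return [sum(S[r][c] * v[c] for c in range(len(v))) for r in range(len(S))]

def top_eigenpair(S, iters=200):
    """Power iteration: repeatedly apply S and renormalize.

    Assumes the largest eigenvalue of S is strictly dominant and that the
    start vector is not orthogonal to its eigenvector."""
    w = [1.0] + [0.0] * (len(S) - 1)
    for _ in range(iters):
        v = mat_vec(S, w)
        norm = math.sqrt(sum(x * x for x in v))
        w = [x / norm for x in v]
    lam = sum(wi * si for wi, si in zip(w, mat_vec(S, w)))  # w^T S w with w^T w = 1
    return w, lam

S = [[2.0, 1.0], [1.0, 2.0]]   # eigenvalues 3 and 1
w, lam = top_eigenpair(S)
residual = max(abs(a - lam * b) for a, b in zip(mat_vec(S, w), w))  # |S w - lam w|
print(w, lam)  # w is close to (0.7071, 0.7071), lam close to 3
```

Power iteration is used here only because it needs nothing beyond the standard library; in practice a library eigensolver or the SVD would normally be used.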

Note that the PCs decompose the total variance in the data in the following way:

<math> \sum_{i=1}^{D} Var(u_i) </math>


<math>= \sum_{i=1}^{D} (\lambda_i) </math>

<math>\ = Tr(S) </math> (the eigenvalues of any square matrix sum to its trace; since S is a symmetric covariance matrix, they are also real and non-negative)

<math>= \sum_{i=1}^{D} Var(x_i)</math>
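For a symmetric 2×2 matrix the eigenvalues have a closed form, so the identity <math>\sum_i Var(u_i) = \sum_i \lambda_i = Tr(S)</math> can be checked directly. This is a minimal sketch; the entries of <math>S</math> are the unnormalized scatter of four made-up centered points.

```python
import math

def eigvals_2x2_sym(a, b, c):
    """Eigenvalues of the symmetric matrix [[a, b], [b, c]], largest first."""
    mid = (a + c) / 2.0
    rad = math.sqrt(((a - c) / 2.0) ** 2 + b * b)
    return mid + rad, mid - rad

# S = X X^T for the centered points (2, 1), (0, -1), (-1, 0.5), (-1, -0.5)
a, b, c = 6.0, 2.0, 2.5
lam1, lam2 = eigvals_2x2_sym(a, b, c)

total_pc_variance = lam1 + lam2   # sum_i Var(u_i) = sum_i lambda_i
trace_S = a + c                   # Tr(S) = sum_i Var(x_i)
print(lam1, lam2, total_pc_variance, trace_S)
```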

== Principal Component Analysis (PCA) Continued (Lecture: Sep. 29, 2011) ==

As can be seen from the above expressions, <math>\ Var(\mathbf{w}^\top \mathbf{x}) = \mathbf{w}^\top S \mathbf{w}= \lambda </math>, where <math>\ \lambda </math> is an eigenvalue of the sample covariance matrix <math>\ S </math> and <math>\ \mathbf{w}</math> is its corresponding eigenvector. So <math>\ Var(u_i) </math> is maximized if <math>\ \lambda_i </math> is the largest eigenvalue of <math>\ S </math>, and the first principal component (PC) is the corresponding eigenvector. Each successive PC can be generated in the same manner by taking the eigenvectors of <math>\ S</math><ref>www.wikipedia.org/wiki/Eigenvalues_and_eigenvectors</ref> that correspond to the eigenvalues:

<math>\ \lambda_1 \geq ... \geq \lambda_D </math>


====Reconstruction Error====

<math> e = \sum_{i=1}^{n} || x_i - \hat{x}_i ||^2 </math>

====Minimize Reconstruction Error====


Differentiate with respect to <math>\ y_i </math>:

<math> \frac{\partial e}{\partial y_i} = 0 </math>

We can rewrite the reconstruction error as: <math>\ e = \sum_{i=1}^n(x_i - U_d y_i)^T(x_i - U_d y_i) </math>

<math>\ \frac{\partial e}{\partial y_i} = -2U_d^T(x_i - U_d y_i) = 0 </math>

Since the columns of <math>\ U_d </math> are orthonormal, <math>\ U_d^T U_d = I </math>, so this condition becomes:

<math>\ U_d^T x_i - U_d^T U_d y_i = 0 </math> or equivalently,

<math>\ y_i = U_d^T x_i </math>


<math>\ \min_{U_d} \sum_{i=1}^n || x_i - U_d U_d^T x_i||^2 </math>
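A minimal sketch of this objective for <math>d = 1</math> on made-up, already-centered 2-D points: it takes the top eigenvector of <math>S = XX^T</math> (closed form for 2×2) as <math>U_d</math>, reconstructs each point as <math>U_d U_d^T x_i</math>, and compares the squared reconstruction error with the discarded eigenvalue. The two agree because the total sum of squares is <math>Tr(S) = \lambda_1 + \lambda_2</math> and the projection retains <math>\lambda_1</math> of it.

```python
import math

def top_eigvec_2x2_sym(a, b, c):
    """Largest eigenvalue and unit eigenvector of [[a, b], [b, c]]."""
    lam = (a + c) / 2.0 + math.sqrt(((a - c) / 2.0) ** 2 + b * b)
    if b == 0.0:
        v = (1.0, 0.0) if a >= c else (0.0, 1.0)
    else:
        v = (lam - c, b)
    norm = math.hypot(v[0], v[1])
    return lam, (v[0] / norm, v[1] / norm)

points = [(2.0, 1.0), (0.0, -1.0), (-1.0, 0.5), (-1.0, -0.5)]  # centered toy data
a = sum(x * x for x, _ in points)
b = sum(x * y for x, y in points)
c = sum(y * y for _, y in points)
lam1, (u1, u2) = top_eigvec_2x2_sym(a, b, c)   # U_d with d = 1

error = 0.0
for x, y in points:
    y_i = u1 * x + u2 * y                       # encoding y_i = U_d^T x_i
    x_hat, y_hat = u1 * y_i, u2 * y_i           # reconstruction U_d y_i
    error += (x - x_hat) ** 2 + (y - y_hat) ** 2

lam2 = (a + c) - lam1                           # the discarded eigenvalue
print(error, lam2)  # equal up to rounding (about 1.59)
```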

====PCA Implementation Using Singular Value Decomposition====

A solution can be obtained by computing the [[Singular Value Decomposition(SVD) | Singular Value Decomposition (SVD)]] of <math>\ X </math>:


<math>\ X = U S V^T </math>

For each rank d, <math>\ U_d </math> consists of the first d columns of <math>\ U </math>. Also, the covariance matrix can be expressed as <math>\ S = \frac{1}{n-1}\sum_{i=1}^n (x_i - \mu)(x_i - \mu)^T </math>.

Simply put, by subtracting the mean from each feature of the data points and then applying SVD, one can find the principal components:


<math>\ \tilde{X} = U S V^T </math>

Here <math>\ X </math> is a d by n matrix of data points in which the features of each data point form a column. Also, <math>\ \mu </math> is a d by n matrix with identical columns, each equal to the mean of the <math>\ x_i</math>'s, i.e. <math>\mu_{:,j}=\frac{1}{n}\sum_{i=1}^n x_i </math>. Note that this arrangement of data points is a convention; in Matlab and in conventional statistics, the transposes of the matrices in the above formulae are used.

Since the <math>\ S </math> matrix from the SVD holds the singular values (the square roots of the eigenvalues of <math>\ \tilde{X}\tilde{X}^T </math>) arranged from largest to smallest, the columns of <math>\ U </math> are ordered accordingly: the first column of <math>\ U </math> is the first principal component, the second column is the second principal component, and so on.


==== Example 1 ====

Consider a matrix of data points <math>\ X </math> with dimensions 560 by 1965. Each column has 560 elements and is the vector representation of a 20x28 grayscale image of a face (see image below); there are 1965 different face images in total. Each of the images is corrupted by noise, but the noise can be reduced by projecting the data onto the first few principal components and mapping it back to the original space, keeping as many dimensions as one likes (e.g. 2, 3, 4 or 5). The corresponding Matlab commands are shown below:

[[File:FreyFaceExample.PNG|thumb|185px|An example of the face images used in [[#Example 1 | Example 1]] with noise removed. Source: <ref>S. Roweis (2011). ''Data for MATLAB.'' [Online]. Available: [http://cs.nyu.edu/~roweis/data.html http://cs.nyu.edu/~roweis/data.html.]</ref>]]

<pre style="align:left; width: 75%; padding: 2% 2%">
>> % start with a 560 by 1965 matrix X that contains the data points
>> load('noisy.mat');
>>
>> % perform SVD; if X is full rank, this yields 560 PCs
>> [U, S, V] = svd(X);
>>
>> % project X onto the first ten principal components
>> Y_pca = U(:, 1:10)'*X;
>>
>> % reconstruct X in the original space using only the first ten PCs
>> X_hat = U(:, 1:10)*Y_pca;
>>
>> % show image in column 10 of X_hat, which is now a 560 by 1965 matrix
>> imagesc(reshape(X_hat(:,10),20,28)')
</pre>

The noise is removed in the reconstructed image because it does not create a major variation in any single direction of the original data. Hence, the first ten PCs, taken from the <math>\ U </math> matrix, do not point in the direction of the noise, and reconstructing the image using only these ten PCs removes most of the noise.

==== Example 2 ====


==== Example 3 ====

(Not discussed in class) A recent news story captures some of the ideas behind PCA. Over the past two years, Scott Golder and Michael Macy, researchers from Cornell University, collected 509 million Twitter messages from 2.4 million users in 84 different countries. The data they used were words collected at various times of day, which they classified into two categories: positive emotion words and negative emotion words. They then used this data to evaluate subjects' moods at different times of day, while the subjects were in different parts of the world. They found that the subjects generally exhibited positive emotions in the mornings and late evenings, and negative emotions mid-day. They were able to "project their data onto a smaller dimensional space" using PCA. Their paper, "Diurnal and Seasonal Mood Vary with Work, Sleep, and Daylength Across Diverse Cultures," is available in the journal Science.<ref>http://www.pcworld.com/article/240831/twitter_analysis_reveals_global_human_moodiness.html</ref>

A discussion of the assumptions underlying principal component analysis is available online.<ref>http://support.sas.com/publishing/pubcat/chaps/55129.pdf</ref>

==== Example 4 ====

(Not discussed in class) A fairly well-known learning rule from the field of neural networks, called Oja's rule, can be used to train a linear neuron whose weight vector converges to the first principal component direction of a data set. <ref>A Simplified Neuron Model as a Principal Component Analyzer. Erkki Oja. 1982. Journal of Mathematical Biology. 15: 267-273</ref> The rule is formulated as follows:

<math>\,\Delta w = \eta yx -\eta y^2w </math>

where <math>\,\Delta w </math> is the change in the neuron's weight vector, <math>\,\eta</math> is the learning rate, <math>\,y</math> is the neuron output for the current input, <math>\,x</math> is the current input and <math>\,w</math> is the current weight vector. This learning rule shares some similarities with another method for calculating principal components: power iteration. The basic power iteration algorithm (taken from Wikipedia<ref>Wikipedia. http://en.wikipedia.org/wiki/Principal_component_analysis#Computing_principal_components_iteratively</ref>) is shown below:

:<math>\mathbf{p} =</math> a random vector
:do ''c'' times:
::<math>\mathbf{t} = 0</math> (a vector of length ''m'', the number of features)
::for each row <math>\mathbf{x} \in \mathbf{X^T}</math>:
:::<math>\mathbf{t} = \mathbf{t} + (\mathbf{x} \cdot \mathbf{p})\mathbf{x}</math>
::<math>\mathbf{p} = \frac{\mathbf{t}}{|\mathbf{t}|}</math>
:return <math>\mathbf{p}</math>

Comparing this with the neuron learning rule, we can see that the term <math>\, \eta y x </math> has the same form as the summand <math>\,(\mathbf{x} \cdot \mathbf{p})\mathbf{x}</math> in the <math>\,\mathbf{t}</math> update of the power iteration method, and is identical to it if the neuron model is assumed to be linear (<math>\,y(x)=\mathbf{x} \cdot \mathbf{p}</math>, i.e. the weight vector plays the role of <math>\,\mathbf{p}</math>) and the learning rate is set to 1. Additionally, the <math>\, -\eta y^2w </math> term keeps the length of the weight vector close to 1, which is the same role played by the normalization step <math>\,\mathbf{p} = \mathbf{t}/|\mathbf{t}|</math> in the power iteration method.
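The comparison can be tried numerically. The following sketch runs the power iteration pseudocode and Oja's rule on the same small, made-up, centered data set and measures how well the two resulting directions agree. Assumptions not in the notes: a fixed start vector instead of a random one, points presented in a fixed order, and the learning rate <math>\eta = 0.001</math> and number of passes chosen by hand.

```python
import math

def power_iteration(points, c=100):
    """Power iteration following the pseudocode above, with a fixed start
    vector instead of a random one."""
    p = [1.0, 0.0]
    for _ in range(c):
        t = [0.0, 0.0]
        for x in points:
            dot = x[0] * p[0] + x[1] * p[1]
            t = [t[0] + dot * x[0], t[1] + dot * x[1]]
        norm = math.hypot(t[0], t[1])
        p = [t[0] / norm, t[1] / norm]
    return p

def oja(points, eta=0.001, epochs=20000):
    """Oja's rule for one linear neuron y = w . x:
    Delta w = eta * y * x - eta * y^2 * w, presenting the points in a fixed order."""
    w = [1.0, 0.0]
    for _ in range(epochs):
        for x in points:
            y = w[0] * x[0] + w[1] * x[1]
            w = [w[k] + eta * y * x[k] - eta * y * y * w[k] for k in range(2)]
    return w

points = [(2.0, 1.0), (0.0, -1.0), (-1.0, 0.5), (-1.0, -0.5)]  # centered toy data
p = power_iteration(points)
w = oja(points)
w_norm = math.hypot(w[0], w[1])
alignment = abs(w[0] * p[0] + w[1] * p[1]) / w_norm  # |cos| of the angle between them
print(p, w, alignment)  # alignment should be very close to 1
```

With a small constant learning rate, Oja's rule only settles near the principal direction (and its weight vector near unit length), so the comparison uses a tolerance rather than exact equality.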

=== Observations ===

Some observations about PCA were brought up in class:

* '''PCA''' assumes that the data lie on a ''linear subspace'' or close to one. For non-linear dimensionality reduction, other techniques are used. Among the first proposed techniques for non-linear dimensionality reduction are '''Locally Linear Embedding (LLE)''' and '''Isomap'''. More recent techniques include '''Maximum Variance Unfolding (MVU)''' and '''t-Distributed Stochastic Neighbor Embedding (t-SNE)'''. '''Kernel PCA''' may also be used, but its results depend on the choice of kernel and it often does not work well in practice. (Kernels will be covered in more detail later in the course.)

* Finding the number of PCs to use is not straightforward. It requires knowledge about the ''intrinsic dimensionality of the data''. In practice, a heuristic approach is often adopted by looking at the eigenvalues ordered from largest to smallest. If there is a "dip" in the magnitude of the eigenvalues, the dip is used as a cut-off point and only the large eigenvalues before it are kept. Otherwise, one can add up the eigenvalues from largest to smallest until a certain percentage of their total is reached. This percentage represents the fraction of the variance that is preserved when projecting onto the PCs whose eigenvalues were added together.

* It is a good idea to normalize the variance of each feature of the data before applying PCA. This prevents PCA from finding PCs in certain directions merely because of the scaling of the data, rather than because of the real variance of the data.

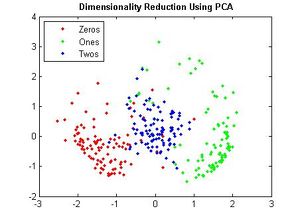

* PCA can be considered an unsupervised approach: it does not use class labels, so it is not known beforehand which direction of variation the first PC will capture, and the PCs found may not correspond to the desired labels for the data set. There are, however, alternative methods for performing supervised dimensionality reduction.

* (Not in class) The traditional PCA method does not work well on data sets that lie on a non-linear manifold. A revised PCA method, called c-PCA, has been introduced to improve the stability and convergence of intrinsic dimension estimation. The approach first finds a minimal cover of the data set (a cover of a set X is a collection of sets whose union contains X as a subset<ref>http://en.wikipedia.org/wiki/Cover_(topology)</ref>). Since set covering is an NP-hard problem, the approach only finds an approximation of the minimal cover, to reduce the run time. In each subset of the cover, it applies PCA and filters out the noise in the data. Finally, the global intrinsic dimension is determined from the variance results of all the subsets. The authors report that the algorithm produces robust results.<ref>Mingyu Fan, Nannan Gu, Hong Qiao, Bo Zhang, Intrinsic dimension estimation of data by principal component analysis, 2010. Available: http://arxiv.org/abs/1002.2050</ref>

* (Not in class) While PCA finds the mathematically optimal subspace (in the sense of minimizing the squared reconstruction error), it is sensitive to outliers in the data, because outliers produce large errors that PCA tries to avoid. It is therefore common practice to remove outliers before computing PCA. However, in some contexts outliers can be difficult to identify. For example, in data mining algorithms like correlation clustering, the assignment of points to clusters and outliers is not known beforehand. A recently proposed generalization of PCA, '''Weighted PCA''', increases robustness by assigning different weights to data objects based on their estimated relevancy.<ref>http://en.wikipedia.org/wiki/Principal_component_analysis</ref>

* (Not in class) Comparison between PCA and LDA: "Principal Component Analysis (PCA) and Linear Discriminant Analysis (LDA) are two commonly used techniques for data classification and dimensionality reduction. Linear Discriminant Analysis easily handles the case where the within-class frequencies are unequal and their performances has been examined on randomly generated test data. This method maximizes the ratio of between-class variance to the within-class variance in any particular data set thereby guaranteeing maximal separability. ... The prime difference between LDA and PCA is that PCA does more of feature classification and LDA does data classification. In PCA, the shape and location of the original data sets changes when transformed to a different space whereas LDA doesn’t change the location but only tries to provide more class separability and draw a decision region between the given classes. This method also helps to better understand the distribution of the feature data." <ref> Balakrishnama, S., Ganapathiraju, A. LINEAR DISCRIMINANT ANALYSIS - A BRIEF TUTORIAL. http://www.isip.piconepress.com/publications/reports/isip_internal/1998/linear_discrim_analysis/lda_theory.pdf </ref>
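Two of the observations above, normalizing the variance of each feature and choosing the number of PCs by accumulating eigenvalues until a target percentage of the variance is reached, are sketched below. The helper names, the thresholds and the example numbers are illustrative assumptions, not part of the lecture.

```python
import math

def standardize(points):
    """Shift each feature to zero mean and scale it to unit sample variance.
    Assumes no feature is constant (its standard deviation would be 0)."""
    n, dim = len(points), len(points[0])
    means = [sum(p[k] for p in points) / n for k in range(dim)]
    stds = [math.sqrt(sum((p[k] - means[k]) ** 2 for p in points) / (n - 1))
            for k in range(dim)]
    return [tuple((p[k] - means[k]) / stds[k] for k in range(dim))
            for p in points]

def choose_d(eigenvalues, threshold=0.9):
    """Smallest d whose d largest eigenvalues reach `threshold` of their total."""
    ordered = sorted(eigenvalues, reverse=True)
    total = sum(ordered)
    running = 0.0
    for d, lam in enumerate(ordered, start=1):
        running += lam
        if running / total >= threshold:
            return d
    return len(ordered)

# The same lengths recorded in millimetres (first feature) and metres (second):
z = standardize([(1000.0, 1.0), (2000.0, 2.0), (3000.0, 3.0)])
print(z)                                           # both columns become -1, 0, 1
print(choose_d([5.0, 3.0, 1.0, 0.5, 0.5], 0.85))  # 3, since 80% < 85% <= 90%
```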

=== Summary ===

The PCA algorithm can be summarized in the following steps:

# '''Recover basis'''
#: <math>\ \text{ Calculate } XX^T=\sum_{i=1}^{t}x_ix_{i}^{T} \text{ and let } U=\text{ eigenvectors of } XX^T \text{ corresponding to the largest } d \text{ eigenvalues.} </math>
# '''Encode training data'''
#: <math>\ \text{Let } Y=U^TX \text{, where } Y \text{ is a } d \times t \text{ matrix of encodings of the original data.} </math>
# '''Reconstruct training data'''
#: <math> \hat{X}=UY=UU^TX </math>.
# '''Encode test example'''
#: <math>\ y = U^Tx \text{ where } y \text{ is a } d\text{-dimensional encoding of } x </math>.
# '''Reconstruct test example'''
#: <math> \hat{x}=Uy=UU^Tx </math>.
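The five steps can be sketched in plain Python as follows. This is an illustrative sketch, not the course's implementation: it assumes the training points are already centered, stores them as the columns of <math>X</math> (a list of tuples), and finds the top <math>d</math> eigenvectors of <math>XX^T</math> by power iteration with deflation, a simple method chosen here only to avoid external libraries. The toy data and function names are hypothetical.

```python
import math

def mat_vec(A, v):
    """Return the matrix-vector product A v."""
    return [sum(a * b for a, b in zip(row, v)) for row in A]

def recover_basis(points, d, iters=500):
    """Step 1: top-d eigenvectors of X X^T (points are the centered columns
    of X), found by power iteration with deflation."""
    D = len(points[0])
    S = [[sum(x[r] * x[c] for x in points) for c in range(D)] for r in range(D)]
    U = []
    for j in range(d):
        v = [1.0 if k == j else 0.5 for k in range(D)]  # arbitrary start vector
        for _ in range(iters):
            v = mat_vec(S, v)
            norm = math.sqrt(sum(t * t for t in v))
            v = [t / norm for t in v]
        lam = sum(a * b for a, b in zip(v, mat_vec(S, v)))
        U.append(v)
        # Deflation: subtract lam * v v^T so the next pass finds the next PC.
        S = [[S[r][c] - lam * v[r] * v[c] for c in range(D)] for r in range(D)]
    return U  # U[j] is the j-th column of U

def encode(U, x):
    """Steps 2 and 4: y = U^T x."""
    return [sum(u[k] * x[k] for k in range(len(x))) for u in U]

def reconstruct(U, y):
    """Steps 3 and 5: x_hat = U y."""
    return [sum(U[j][k] * y[j] for j in range(len(U))) for k in range(len(U[0]))]

train = [(3.0, 0.0, 0.0), (-3.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, -1.0, 0.0)]
U = recover_basis(train, d=2)
Y = [encode(U, x) for x in train]       # encodings of the training data
x_test = (1.0, 2.0, 5.0)
y_test = encode(U, x_test)              # about [1, 2]
x_hat = reconstruct(U, y_test)          # about [1, 2, 0]: the third axis is dropped
print(y_test, x_hat)
```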

=== Dual PCA ===

Singular value decomposition allows us to formulate the principal components algorithm entirely in terms of dot products between data points and to limit the direct dependence on the original dimensionality ''d''. Now assume that the dimensionality ''d'' of the ''d × n'' data matrix X is large (i.e., ''d >> n''). In this case, the algorithm described in the previous sections becomes impractical. We would prefer a run time that depends only on the number of training examples ''n'', or that at least has a reduced dependence on ''d''.

Note that in the SVD factorization <math>\ X = U \Sigma V^T </math>, the eigenvectors in <math>\ U </math> corresponding to non-zero singular values in <math>\ \Sigma </math> (the square roots of the eigenvalues of <math>\ XX^T </math>) are in one-to-one correspondence with the eigenvectors in <math>\ V </math>.

After performing dimensionality reduction on <math>\ U </math> and keeping only the first ''l'' eigenvectors, corresponding to the top ''l'' non-zero singular values in <math>\ \Sigma </math>, these eigenvectors are still in one-to-one correspondence with the first ''l'' eigenvectors in <math>\ V </math>:

<math>\ X V = U \Sigma </math>

<math>\ \Sigma </math> is square and invertible, because its diagonal entries are non-zero. Thus, the following conversion between the top ''l'' eigenvectors can be derived:

<math>\ U = X V \Sigma^{-1} </math>

Replacing <math>\ U </math> with <math>\ X V \Sigma^{-1} </math> gives us the dual form of PCA.
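The dual form can be checked on a tiny example with <math>d > n</math>: the sketch below eigen-decomposes the small <math>n \times n</math> matrix <math>X^T X</math> instead of the <math>d \times d</math> matrix <math>XX^T</math>, forms the first column of <math>U = XV\Sigma^{-1}</math>, and confirms that it is a unit eigenvector of <math>XX^T</math>. The data are made up and not centered; the identity itself is pure linear algebra, while in PCA <math>X</math> would be the centered data.

```python
import math

def top_eigvec_2x2_sym(a, b, c):
    """Largest eigenvalue and unit eigenvector of [[a, b], [b, c]] (assumes b != 0)."""
    lam = (a + c) / 2.0 + math.sqrt(((a - c) / 2.0) ** 2 + b * b)
    v = (lam - c, b)
    norm = math.hypot(v[0], v[1])
    return lam, (v[0] / norm, v[1] / norm)

# Hypothetical data: d = 3 features, n = 2 points (the columns of X), so d > n.
x1, x2 = (1.0, 2.0, 2.0), (2.0, 0.0, 1.0)

# Work with the small n x n Gram matrix X^T X instead of the d x d matrix X X^T.
g11 = sum(p * p for p in x1)
g12 = sum(p * q for p, q in zip(x1, x2))
g22 = sum(q * q for q in x2)
lam, (v1, v2) = top_eigvec_2x2_sym(g11, g12, g22)
sigma = math.sqrt(lam)                      # top singular value of X

# u = X v / sigma, i.e. the first column of U = X V Sigma^{-1}
u = [(v1 * p + v2 * q) / sigma for p, q in zip(x1, x2)]

# Check: X X^T u = x1 (x1 . u) + x2 (x2 . u) should equal sigma^2 u = lam u.
d1 = sum(a * b for a, b in zip(x1, u))
d2 = sum(a * b for a, b in zip(x2, u))
XXt_u = [p * d1 + q * d2 for p, q in zip(x1, x2)]
print(u, XXt_u, [lam * t for t in u])
```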

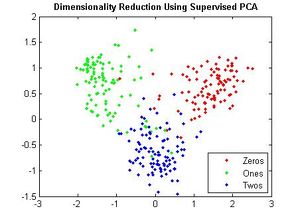

== Fisher Discriminant Analysis (FDA) (Lecture: Sep. 29, 2011 - Oct. 04, 2011) ==

'''Fisher Discriminant Analysis (FDA)''' is sometimes called ''Fisher Linear Discriminant Analysis (FLDA)'' or just ''Linear Discriminant Analysis (LDA)''. This causes confusion with the [[#LDA | ''Linear Discriminant Analysis (LDA)'']] technique covered earlier in the course, which has a normality assumption and is a boundary-finding technique. The FDA technique outlined here is a supervised feature extraction technique. FDA also differs from PCA: PCA does not use the class labels, <math>\ y_i</math>, of the data <math>\ (x_i,y_i)</math>, whereas FDA organizes the data into their ''classes'' by finding the direction of maximum separation between classes.

=== PCA ===

PCA finds a rank d subspace that minimizes the squared reconstruction error:

<math> \sum_{i=1}^{n} \|x_i - \hat{x}_i \|^2</math>

where <math>\hat{x}_i </math> is the projection of the original data point <math>x_i</math> onto the subspace.

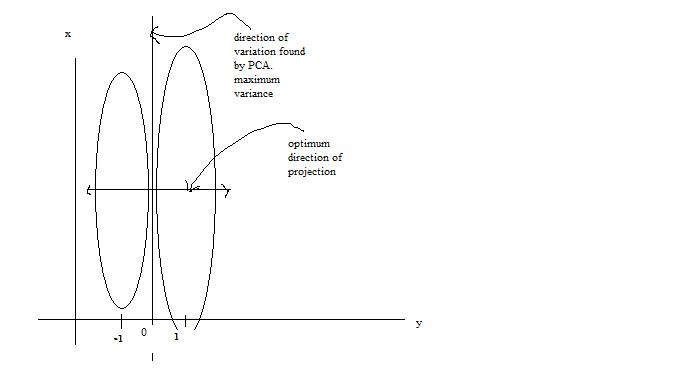

One main drawback of the PCA technique is that the direction of greatest variation may not produce the classification we desire. For example, imagine if the [[#Example 2 | data set]] above had a lighting filter applied to a random subset of the images. Then the greatest variation would be the brightness, and not the more important variations we wish to classify. As another example, imagine two cigar-like clusters in two dimensions, one with <math>y = 1</math> and the other with <math>y = -1</math>. The cigars are positioned in parallel and very close together, so that the variance in the total data set, ignoring the labels, is largest along the direction of the cigars. For classification, this would be a terrible projection, because the labels get evenly mixed and we destroy the useful information. A much more useful projection is orthogonal to the cigars, i.e. in the direction of least overall variance, which would perfectly separate the two classes (we would, of course, still need to perform classification in this 1-D space). See the figure below.<ref>www.ics.uci.edu/~welling/classnotes/papers_class/Fisher-LDA.pdf</ref> FDA circumvents this problem by using the labels, <math>\ y_i</math>, of the data <math>\ (x_i,y_i)</math>; i.e. FDA uses ''supervised learning''.

The main difference between FDA and PCA is that in PCA we transform the data to a new coordinate system such that the greatest variance of the data lies on the first coordinate, whereas in FDA we project the data so that the projected points of the different classes are as far apart from each other as possible. The FDA goal is achieved by projecting the data onto a suitably chosen line that minimizes the within-class variance and maximizes the distance between the two classes, i.e. it groups similar data together and spreads different data apart. This way, newly acquired data can be transformed and compared to these projections using some well-chosen metric.

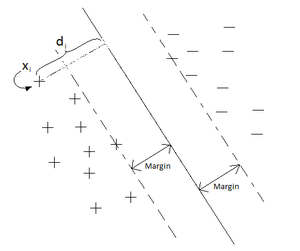

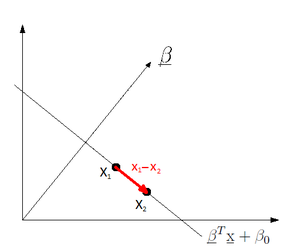

[[File:Classification.jpg | Two cigar distributions where the direction of greatest variance is not the most useful for classification]]
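The cigar example can be reproduced with a few made-up points. In this sketch the first PC of the pooled (unlabelled) data points along the cigars, where the two classes project onto exactly the same values, while the orthogonal least-variance direction separates them perfectly.

```python
import math

# Two hypothetical parallel "cigars" along the horizontal axis:
# class y = 1 at height +0.5 and class y = -1 at height -0.5.
ts = range(-5, 6)
cigar_pos = [(float(t), 0.5) for t in ts]
cigar_neg = [(float(t), -0.5) for t in ts]
points = cigar_pos + cigar_neg              # total mean is the origin

# Unnormalized covariance X X^T = [[a, b], [b, c]] of the pooled data.
a = sum(x * x for x, _ in points)
b = sum(x * y for x, y in points)
c = sum(y * y for _, y in points)

# First PC: eigenvector of the largest eigenvalue (closed form for 2 x 2).
lam = (a + c) / 2 + math.sqrt(((a - c) / 2) ** 2 + b * b)
if b == 0:
    pc1 = (1.0, 0.0) if a >= c else (0.0, 1.0)
else:
    norm = math.hypot(lam - c, b)
    pc1 = ((lam - c) / norm, b / norm)
least = (-pc1[1], pc1[0])                   # orthogonal direction: least variance

def project(ps, w):
    """Sorted 1-D projections w^T x of the points."""
    return sorted(w[0] * x + w[1] * y for x, y in ps)

# On the first PC the two classes land on exactly the same values (fully mixed);
# on the least-variance direction they are perfectly separated.
mixed = project(cigar_pos, pc1) == project(cigar_neg, pc1)
separated = min(project(cigar_pos, least)) > max(project(cigar_neg, least))
print(pc1, mixed, separated)  # (1.0, 0.0) True True
```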

We first consider the case of two classes. Denote the mean and covariance matrix of class <math>i=0,1</math> by <math>\mathbf{\mu}_i</math> and <math>\mathbf{\Sigma}_i</math> respectively. We transform the data so that it is projected into one dimension, i.e. a scalar value. To do this, we compute the inner product of our <math>d \times 1</math>-dimensional data, <math>\mathbf{x}</math>, with a to-be-determined <math>d \times 1</math>-dimensional vector <math>\mathbf{w}</math>. The new means and covariances of the transformed data are:


::<math> \Sigma'_i :\rightarrow \mathbf{w}^{T}\mathbf{\Sigma}_i \mathbf{w}</math>

The new means and variances are now scalar values, but we will use vector and matrix notation and arguments throughout the following derivation, as the multi-class case is then a straightforward extension.

===Goals of FDA===

As will be shown in the objective function, the goal of FDA is to maximize the separation of the classes (between-class variance) and minimize the scatter within each class (within-class variance). That is, ideally the individual classes are as far away from each other as possible and, at the same time, the data within each class are as close to each other as possible (collapsed to a single point in the most extreme case). Interestingly, R. A. Fisher, after whom FDA is named, used the FDA technique for taxonomy, in particular for categorizing different species of iris flowers.<ref name="RAFisher">R. A. Fisher, "The Use of Multiple Measurements in Taxonomic Problems," ''Annals of Eugenics'', 1936</ref> In that case it is very easy to visualize what is meant by within-class variance (i.e. the differences between iris flowers of the same species) and between-class variance (i.e. the differences between iris flowers of different species).

First, we reduce the dimensionality of the covariates to one dimension (in the two-class case) by projecting the data onto a line. That is, we take the <math>d</math>-dimensional input <math>\mathbf{x}</math> and project it to one dimension using <math>z=\mathbf{w}^T \mathbf{x}</math>, where <math>\mathbf{w}^T </math> is <math>1 \times d</math> and <math>\mathbf{x}</math> is <math>d \times 1</math>.

Goal: choose the vector <math>\mathbf{w}=[w_1,w_2,\dots,w_d]^T </math> that best separates the data, then perform classification with the projected data <math>z</math> instead of the original data <math>\mathbf{x}</math>.

The class means are estimated from the training data by

<math>\hat{\mathbf{\mu}}_0=\frac{1}{n_0}\sum_{i:y_i=0} \mathbf{x}_i</math>

<math>\hat{\mathbf{\mu}}_1=\frac{1}{n_1}\sum_{i:y_i=1} \mathbf{x}_i</math>

where <math>n_0</math> and <math>n_1</math> are the numbers of training points in classes 0 and 1. Under the projection:

<math>\mathbf{x}\rightarrow\mathbf{w}^{T}\mathbf{x}</math> <br />

<math>\mathbf{\mu}\rightarrow\mathbf{w}^{T}\mathbf{\mu}</math> <br />

<math>\mathbf{\Sigma}\rightarrow\mathbf{w}^{T}\mathbf{\Sigma}\mathbf{w}</math> <br />
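The estimators and the projection <math>z=\mathbf{w}^{T}\mathbf{x}</math> can be sketched in plain Python as follows. The labelled data set is made up. The direction <code>w</code> is an arbitrary illustrative choice; choosing it well is the subject of the rest of this section.

```python
# Estimate the class means mu_0, mu_1 from labelled data and project each
# point onto a direction w via z = w^T x. The data set is hypothetical.

X = [[1.0, 2.0], [2.0, 3.0], [3.0, 3.0], [6.0, 5.0], [7.0, 7.0], [8.0, 6.0]]
y = [0, 0, 0, 1, 1, 1]

def class_mean(X, y, label):
    # mu_hat = (1/n_label) * sum of the x_i whose y_i equals label
    members = [x for x, yi in zip(X, y) if yi == label]
    return [sum(col) / len(members) for col in zip(*members)]

def project(w, x):
    # z = w^T x turns a d-dimensional point into a single scalar
    return sum(wj * xj for wj, xj in zip(w, x))

mu0 = class_mean(X, y, 0)   # [2.0, 2.666...]
mu1 = class_mean(X, y, 1)   # [7.0, 6.0]

w = [1.0, 1.0]              # arbitrary direction, not yet optimised
z = [project(w, x) for x in X]
print(z)                    # [3.0, 5.0, 6.0, 11.0, 14.0, 14.0]

# The mean of the projected class-0 points equals w^T mu_0.
z0 = [zi for zi, yi in zip(z, y) if yi == 0]
print(sum(z0) / len(z0), project(w, mu0))
```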

'''1)''' Our '''first''' goal is to minimize the variance of each projected class. This collapses the data of each class together.

We have two minimization problems:

::<math>\min_{\mathbf{w}} \mathbf{w}^{T} \mathbf{\Sigma}_0 \mathbf{w}</math>

and

::<math>\min_{\mathbf{w}} \mathbf{w}^{T} \mathbf{\Sigma}_1 \mathbf{w}</math>.

These can be combined:

::<math> \min_{\mathbf{w}} \mathbf{w}^{T}\mathbf{\Sigma}_0 \mathbf{w} + \mathbf{w}^{T} \mathbf{\Sigma}_1 \mathbf{w}</math>

:: <math> = \min_{\mathbf{w}} \mathbf{w}^{T}( \mathbf{\Sigma}_0 + \mathbf{\Sigma}_1 ) \mathbf{w}</math>

Define <math> \mathbf{S}_W =\mathbf{\Sigma}_0 + \mathbf{\Sigma}_1 </math>, called the ''within class variance matrix''.
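The sketch below illustrates why <math>\mathbf{w}^{T}\mathbf{S}_W\mathbf{w}</math> measures within-class scatter: for any direction <math>\mathbf{w}</math> it equals the sum of the variances of the two projected classes. The two classes and the direction are made up. The covariances use the <math>1/n</math> normalization.

```python
# Check that w^T S_W w, with S_W = Sigma_0 + Sigma_1, equals the sum of the
# variances of the two projected classes. Data and w are hypothetical.

def mean(P):
    return [sum(col) / len(P) for col in zip(*P)]

def cov(P):
    # 1/n covariance matrix of the points in P
    mu, d = mean(P), len(P[0])
    return [[sum((p[a] - mu[a]) * (p[b] - mu[b]) for p in P) / len(P)
             for b in range(d)] for a in range(d)]

def mat_add(A, B):
    return [[a + b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]

def quad_form(w, S):
    d = len(w)
    return sum(w[a] * S[a][b] * w[b] for a in range(d) for b in range(d))

def projected_var(w, P):
    # variance of the scalars z = w^T p
    z = [sum(wj * pj for wj, pj in zip(w, p)) for p in P]
    m = sum(z) / len(z)
    return sum((zi - m) ** 2 for zi in z) / len(z)

class0 = [[1.0, 2.0], [2.0, 3.0], [3.0, 3.0]]
class1 = [[6.0, 5.0], [7.0, 7.0], [8.0, 6.0]]
S_W = mat_add(cov(class0), cov(class1))

w = [1.0, -1.0]
print(quad_form(w, S_W))                                    # about 0.889 (= 8/9)
print(projected_var(w, class0) + projected_var(w, class1))  # same value
```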

'''2)''' Our '''second''' goal is to move the classes as far away from each other as possible. One way to accomplish this is to maximize the squared distance between the means of the transformed data, i.e.

<math> \max_{\mathbf{w}} |\mathbf{w}^{T}\mathbf{\mu}_0 - \mathbf{w}^{T}\mathbf{\mu}_1|^2 </math>

Simplifying:

::<math> \max_{\mathbf{w}} \,(\mathbf{w}^{T}\mathbf{\mu}_0 - \mathbf{w}^{T}\mathbf{\mu}_1)^T (\mathbf{w}^{T}\mathbf{\mu}_0 - \mathbf{w}^{T}\mathbf{\mu}_1) </math> <br/>

::<math> = \max_{\mathbf{w}}\, (\mathbf{\mu}_0-\mathbf{\mu}_1)^{T}\mathbf{w} \mathbf{w}^{T} (\mathbf{\mu}_0-\mathbf{\mu}_1)</math> <br/>

::<math> = \max_{\mathbf{w}} \,\mathbf{w}^{T}(\mathbf{\mu}_0-\mathbf{\mu}_1)(\mathbf{\mu}_0-\mathbf{\mu}_1)^{T}\mathbf{w}</math>

| Line 663: | Line 800: | ||

This matrix, <math>\mathbf{S}_B = (\mathbf{\mu}_0-\mathbf{\mu}_1)(\mathbf{\mu}_0-\mathbf{\mu}_1)^{T}</math>, is called the ''between class variance matrix''. It has rank 1, so it has no inverse. Altogether, we have two optimization problems that we must solve simultaneously:

::1) <math> \min_{\mathbf{w}} \mathbf{w}^{T} \mathbf{S}_W \mathbf{w} </math><br/>

::2) <math> \max_{\mathbf{w}} \mathbf{w}^{T} \mathbf{S}_B \mathbf{w} </math>
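The following small example uses made-up class means to illustrate two facts stated above. First, the squared distance between the projected means equals <math>\mathbf{w}^{T}\mathbf{S}_B\mathbf{w}</math>. Second, <math>\mathbf{S}_B</math> has rank 1: its 2-by-2 determinant vanishes, and any <math>\mathbf{w}</math> orthogonal to <math>\mathbf{\mu}_0-\mathbf{\mu}_1</math> gives <math>\mathbf{w}^{T}\mathbf{S}_B\mathbf{w}=0</math>.

```python
# Between-class matrix S_B = (mu_0 - mu_1)(mu_0 - mu_1)^T for hypothetical means.

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def quad_form(w, S):
    d = len(w)
    return sum(w[a] * S[a][b] * w[b] for a in range(d) for b in range(d))

mu0 = [2.0, 3.0]
mu1 = [7.0, 6.0]
diff = [a - b for a, b in zip(mu0, mu1)]          # [-5.0, -3.0]
S_B = [[da * db for db in diff] for da in diff]   # outer product, rank 1

w = [0.6, 0.8]
gap_sq = (dot(w, mu0) - dot(w, mu1)) ** 2
print(gap_sq, quad_form(w, S_B))                  # both about 29.16

# Rank 1: the determinant is zero, so S_B has no inverse ...
det = S_B[0][0] * S_B[1][1] - S_B[0][1] * S_B[1][0]
print(det)                                        # 0.0
# ... and a direction orthogonal to mu_0 - mu_1 sees no separation at all.
w_perp = [3.0, -5.0]
print(quad_form(w_perp, S_B))                     # 0.0
```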

There are other metrics that both minimize the within-class variance and maximize the distance between classes, as well as other goals we could try to accomplish (see metric learning, below...one day). Fisher used this elegant method, hence his name on it, and we will follow his approach.

| Line 670: | Line 807: | ||

We can combine the two optimization problems into one by noting that minimizing a quantity is the same as maximizing its negative:

::<math> \max_{\mathbf{w}} \; \alpha \mathbf{w}^{T} \mathbf{S}_B \mathbf{w} - \mathbf{w}^{T} \mathbf{S}_W \mathbf{w} </math><br/>

The <math>\alpha</math> coefficient is a necessary scaling factor: if one term is on a much larger scale than the other, the larger term will dominate the optimization problem. This introduces another unknown, <math>\alpha</math>, to solve for. Instead, we can avoid the scaling problem by looking at the ratio of the two quantities, which is the solution Fisher originally proposed:

::<math> \max_{\mathbf{w}} \frac{\mathbf{w}^{T} \mathbf{S}_B \mathbf{w}}{\mathbf{w}^{T} \mathbf{S}_W \mathbf{w}} </math>

This optimization problem can be shown<ref>

</ref> to be equivalent to the following optimization problem:

:: <math> \max_{\mathbf{w}} \mathbf{w}^{T} \mathbf{S}_B \mathbf{w}</math> <br />

(objective function)

subject to:

:: <math> \mathbf{w}^{T} \mathbf{S}_W \mathbf{w} = 1 </math><br />

(constraint)

A heuristic understanding of this equivalence is that <math>\mathbf{w}</math> has two degrees of freedom: direction and magnitude. Only the direction matters, because multiplying <math>\mathbf{w}</math> by any nonzero constant leaves the ratio unchanged. We can therefore fix the magnitude by setting the denominator to a constant. We can use Lagrange multipliers to solve this optimization problem:

::<math>L( \mathbf{w}, \lambda) = \mathbf{w}^{T} \mathbf{S}_B \mathbf{w} - \lambda(\mathbf{w}^{T} \mathbf{S}_W \mathbf{w}-1)</math>

:: <math> \Rightarrow \frac{\partial L}{\partial \mathbf{w}} = 2 \mathbf{S}_B \mathbf{w} - 2\lambda \mathbf{S}_W\mathbf{w} </math>

Setting the derivative to zero gives

::<math> \mathbf{S}_B \mathbf{w} = \lambda \mathbf{S}_W \mathbf{w} </math>

:: <math> \Rightarrow \mathbf{S}_W^{-1} \mathbf{S}_B \mathbf{w} = \lambda \mathbf{w} </math>

This is a generalized eigenvalue problem, and <math>\mathbf{w}</math> can be computed as the eigenvector corresponding to the largest eigenvalue of

:: <math> \mathbf{S}_W^{-1} \mathbf{S}_B </math>

The largest eigenvalue is the right one because of the following step. Multiplying <math>\mathbf{S}_B \mathbf{w} = \lambda \mathbf{S}_W \mathbf{w}</math> on the left by <math>\mathbf{w}^{T}</math> and using the constraint gives <math>\mathbf{w}^{T} \mathbf{S}_B \mathbf{w} = \lambda</math>. The objective is therefore equal to the eigenvalue.

In practice <math> \mathbf{S}_W </math> is usually invertible when the number of training points is large relative to <math>d</math>. If it is not, the pseudo-inverse<ref>

</ref> can be used; in Matlab the pseudo-inverse function is named ''pinv''. Thus, we choose <math>\mathbf{w}</math> to be the eigenvector of the largest eigenvalue as our projection vector.
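For two dimensions the generalized eigenvalue problem can be solved in closed form, which allows a dependency-free sketch. Below, <code>S_W</code> and <code>S_B</code> are made-up matrices (with <math>\mathbf{\mu}_0-\mathbf{\mu}_1 = (1, 1)^T</math>). The 2-by-2 inverse and eigen-solver are hypothetical helpers written only for this example. The sketch also checks that the Fisher ratio at the top eigenvector equals the largest eigenvalue, beats other directions, and is unchanged by rescaling <math>\mathbf{w}</math>. In practice one would more likely call a linear-algebra library. For instance, SciPy's <code>scipy.linalg.eigh(S_B, S_W)</code> solves <math>\mathbf{S}_B\mathbf{w}=\lambda\mathbf{S}_W\mathbf{w}</math> directly when <math>\mathbf{S}_W</math> is positive definite.

```python
import math

# Solve S_W^{-1} S_B w = lambda w for 2x2 matrices and keep the eigenvector of
# the largest eigenvalue. S_W, S_B and the helpers are illustrative only.

def inv2(M):
    (a, b), (c, d) = M
    det = a * d - b * c
    return [[d / det, -b / det], [-c / det, a / det]]

def matmul2(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]

def top_eig2(M):
    # Largest eigenvalue and unit eigenvector of a 2x2 matrix with real
    # eigenvalues (true for S_W^{-1} S_B when S_W is positive definite).
    (a, b), (c, d) = M
    tr, det = a + d, a * d - b * c
    lam = tr / 2 + math.sqrt(max(tr * tr / 4 - det, 0.0))
    if abs(b) > 1e-12:
        v = [b, lam - a]              # solves the first row of (M - lam I) v = 0
    elif abs(c) > 1e-12:
        v = [lam - d, c]              # solves the second row instead
    else:
        v = [1.0, 0.0] if a >= d else [0.0, 1.0]  # M is already diagonal
    norm = math.hypot(v[0], v[1])
    return lam, [v[0] / norm, v[1] / norm]

def quad_form(w, S):
    return sum(w[i] * S[i][j] * w[j] for i in range(2) for j in range(2))

def fisher_ratio(w, S_B, S_W):
    return quad_form(w, S_B) / quad_form(w, S_W)

S_W = [[2.0, 0.0], [0.0, 0.5]]
S_B = [[1.0, 1.0], [1.0, 1.0]]    # (mu_0 - mu_1)(mu_0 - mu_1)^T, mu_0 - mu_1 = (1, 1)

lam, w = top_eig2(matmul2(inv2(S_W), S_B))
print(lam, w)                      # lam is about 2.5; w points along (1, 4)

# The Fisher ratio at w equals lam, beats other directions, and ignores scale.
print(fisher_ratio(w, S_B, S_W))
print(fisher_ratio([1.0, 0.0], S_B, S_W), fisher_ratio([0.0, 1.0], S_B, S_W))
print(fisher_ratio([3.0 * x for x in w], S_B, S_W))
```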

In fact, in the case of two classes we can simplify the eigenvalue equation <math>\mathbf{S}_W^{-1} \mathbf{S}_B \mathbf{w} = \lambda \mathbf{w}</math> further. Recall the definition <math>\mathbf{S}_B = (\mathbf{\mu}_0-\mathbf{\mu}_1)(\mathbf{\mu}_0-\mathbf{\mu}_1)^{T}</math>. Substituting this into our expression:

::<math> \mathbf{S}_W^{-1}(\mathbf{\mu}_0-\mathbf{\mu}_1)(\mathbf{\mu}_0-\mathbf{\mu}_1)^{T} \mathbf{w} = \lambda \mathbf{w} </math>

::<math> (\mathbf{S}_W^{-1}(\mathbf{\mu}_0-\mathbf{\mu}_1) ) ((\mathbf{\mu}_0-\mathbf{\mu}_1)^{T} \mathbf{w}) = \lambda \mathbf{w} </math>

The second factor is a scalar; let us denote it <math>\beta</math>. Then

::<math> \mathbf{S}_W^{-1}(\mathbf{\mu}_0-\mathbf{\mu}_1) = \frac{\lambda}{\beta} \mathbf{w} </math>

::<math> \Rightarrow \, \mathbf{S}_W^{-1}(\mathbf{\mu}_0-\mathbf{\mu}_1) \propto \mathbf{w} </math>

<br />

(this equation gives the direction of the separation).

Since we only care about the direction of <math>\mathbf{w}</math>, computing <math>\mathbf{S}_W^{-1}(\mathbf{\mu}_0-\mathbf{\mu}_1)</math> is sufficient to find our projection vector. This shortcut does not carry over to the multi-class case, where the projection is a matrix <math>\mathbf{W}</math> rather than a vector.
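The two-class shortcut can be sketched as follows. We estimate the class means and <math>\mathbf{S}_W=\mathbf{\Sigma}_0+\mathbf{\Sigma}_1</math> from a small made-up data set, then compute <math>\mathbf{S}_W^{-1}(\mathbf{\mu}_0-\mathbf{\mu}_1)</math>. Finally we confirm that the result is an eigenvector of <math>\mathbf{S}_W^{-1}\mathbf{S}_B</math> with eigenvalue <math>(\mathbf{\mu}_0-\mathbf{\mu}_1)^{T}\mathbf{S}_W^{-1}(\mathbf{\mu}_0-\mathbf{\mu}_1)</math>. The sketch assumes 2-D data, so a hand-written 2-by-2 inverse suffices, and uses <math>1/n</math> covariances. The data and helper names are illustrative.

```python
# Two-class Fisher direction w proportional to S_W^{-1} (mu_0 - mu_1),
# computed from hypothetical 2-D data.

def mean(P):
    return [sum(col) / len(P) for col in zip(*P)]

def cov(P):
    # 1/n covariance matrix of 2-D points
    mu = mean(P)
    return [[sum((p[a] - mu[a]) * (p[b] - mu[b]) for p in P) / len(P)
             for b in range(2)] for a in range(2)]

def inv2(M):
    (a, b), (c, d) = M
    det = a * d - b * c
    return [[d / det, -b / det], [-c / det, a / det]]

def matvec(M, v):
    return [sum(M[i][j] * v[j] for j in range(2)) for i in range(2)]

class0 = [[1.0, 2.0], [2.0, 3.0], [3.0, 3.0]]
class1 = [[6.0, 5.0], [7.0, 7.0], [8.0, 6.0]]

mu0, mu1 = mean(class0), mean(class1)
S0, S1 = cov(class0), cov(class1)
S_W = [[S0[i][j] + S1[i][j] for j in range(2)] for i in range(2)]
diff = [a - b for a, b in zip(mu0, mu1)]

w = matvec(inv2(S_W), diff)    # about [-3.0, -1.5]: direction (2, 1) up to sign
print(w)

# Check: S_W^{-1} S_B w = lam * w with S_B = diff diff^T and lam = diff^T w.
lam = sum(d * wi for d, wi in zip(diff, w))
S_B_w = [d * lam for d in diff]            # S_B w = diff * (diff^T w)
print(lam, matvec(inv2(S_W), S_B_w), [lam * wi for wi in w])
```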

=== Extensions to Multiclass Case ===

If we have <math>k</math> classes, we need <math>k-1</math> directions, i.e. we need to project <math>k</math> 'points' (one per class) onto a <math>(k-1)</math>-dimensional hyperplane. What does this change in the derivation above? The most significant difference is that the projection <math>\mathbf{w}</math> is no longer a vector but a matrix <math>\mathbf{W}</math>, which is <math>d \times (k-1)</math> if <math>\mathbf{x}</math> is <math>d</math>-dimensional. We transform the data as:

::<math> \mathbf{x}' :\rightarrow \mathbf{W}^{T} \mathbf{x}</math>

The first optimization problem becomes:

::<math>\min_{\mathbf{W}} \mathbf{W}^{T} \mathbf{S}_W \mathbf{W} </math>

Similarly, the second optimization problem is:

::<math>\max_{\mathbf{W}} \mathbf{W}^{T} \mathbf{S}_B \mathbf{W} </math>

What is <math>\mathbf{S}_B</math> in this case? It can be shown that <math>\mathbf{S}_T = \mathbf{S}_B + \mathbf{S}_W </math>, where <math> \mathbf{S}_T </math> is the covariance matrix of all the data. From this we can compute <math> \mathbf{S}_B = \mathbf{S}_T - \mathbf{S}_W </math>.
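The decomposition <math>\mathbf{S}_T=\mathbf{S}_B+\mathbf{S}_W</math> can be checked numerically. This sketch assumes the scatter-matrix convention, which the notes do not state:

* <math>\mathbf{S}_T=\sum_i(\mathbf{x}_i-\mathbf{m})(\mathbf{x}_i-\mathbf{m})^T</math> over all points, where <math>\mathbf{m}</math> is the overall mean;
* <math>\mathbf{S}_W=\sum_j\sum_{i \in j}(\mathbf{x}_i-\mathbf{m}_j)(\mathbf{x}_i-\mathbf{m}_j)^T</math>;
* <math>\mathbf{S}_B=\sum_j n_j(\mathbf{m}_j-\mathbf{m})(\mathbf{m}_j-\mathbf{m})^T</math>.

With this convention the identity is exact. With normalized per-class covariances, it holds once each class term is weighted by its share of the data. The three classes below are made up.

```python
# Verify S_T = S_B + S_W for k = 3 hypothetical classes using scatter matrices.

def mean(P):
    return [sum(col) / len(P) for col in zip(*P)]

def outer(u, v):
    return [[a * b for b in v] for a in u]

def add(A, B):
    return [[a + b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]

def scatter(P, center):
    # sum over p in P of (p - center)(p - center)^T
    d = len(center)
    S = [[0.0] * d for _ in range(d)]
    for p in P:
        dev = [pi - ci for pi, ci in zip(p, center)]
        S = add(S, outer(dev, dev))
    return S

classes = [
    [[1.0, 2.0], [2.0, 3.0], [3.0, 3.0]],
    [[6.0, 5.0], [7.0, 7.0], [8.0, 6.0]],
    [[2.0, 8.0], [3.0, 9.0], [1.0, 9.0]],
]
all_points = [p for P in classes for p in P]
m = mean(all_points)

S_T = scatter(all_points, m)
S_W = [[0.0, 0.0], [0.0, 0.0]]
S_B = [[0.0, 0.0], [0.0, 0.0]]
for P in classes:
    m_j = mean(P)
    S_W = add(S_W, scatter(P, m_j))                     # within-class scatter
    gap = [a - b for a, b in zip(m_j, m)]
    S_B = add(S_B, [[len(P) * x for x in row] for row in outer(gap, gap)])

print(S_T)
print(add(S_B, S_W))   # equal to S_T up to floating-point rounding
```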

Next, if we write <math> \mathbf{W} = ( \mathbf{w}_1 , \mathbf{w}_2 , \dots ,\mathbf{w}_{k-1} ) </math>, observe that, for <math> \mathbf{A} = \mathbf{S}_B </math> or <math> \mathbf{A} = \mathbf{S}_W </math>:

::<math> \mathrm{Tr}(\mathbf{W}^{T} \mathbf{A} \mathbf{W}) = \mathbf{w}_1^{T} \mathbf{A} \mathbf{w}_1 + \dots + \mathbf{w}_{k-1}^{T} \mathbf{A} \mathbf{w}_{k-1} </math>

where <math>\mathrm{Tr}(\cdot)</math> is the trace of a matrix. Thus, following the same steps as in the two-class case, we have the new optimization problem: