stat341f11: Difference between revisions

m (Conversion script moved page Stat341f11 to stat341f11: Converting page titles to lowercase) |

|||

| (762 intermediate revisions by 33 users not shown) | |||

| Line 1: | Line 1: | ||

Please contribute to the discussion of splitting up this page into multiple pages on the [[{{TALKPAGENAME}}|talk page]]. | |||

==[[signupformStat341F11| Editor Sign Up]]== | ==[[signupformStat341F11| Editor Sign Up]]== | ||

==Sampling - | ==Notation== | ||

The following guidelines on notation were posted on the Wiki Course Note page for [[Stat946f11|STAT 946]]. Add to them as necessary for consistent notation on this page. | |||

Capital letters will be used to denote random variables and lower case letters denote observations for those random variables: | |||

* <math>\{X_1,\ X_2,\ \dots,\ X_n\}</math> random variables | |||

* <math>\{x_1,\ x_2,\ \dots,\ x_n\}</math> observations of the random variables | |||

The joint ''probability mass function'' can be written as: | |||

<center><math> P( X_1 = x_1, X_2 = x_2, \dots, X_n = x_n )</math></center> | |||

or as shorthand, we can write this as <math>p( x_1, x_2, \dots, x_n )</math>. In these notes both types of notation will be used. | |||

We can also define a set of random variables <math>X_Q</math> where <math>Q</math> represents a set of subscripts. | |||

==Sampling - September 20, 2011== | |||

The meaning of sampling is to generate data points or numbers such that these data follow a certain distribution.<br /> | The meaning of sampling is to generate data points or numbers such that these data follow a certain distribution.<br /> | ||

i.e. From <math>x \sim~f(x)</math> sample <math>\,x_{1}, x_{2}, ..., x_{1000}</math> | i.e. From <math>x \sim~f(x)</math> sample <math>\,x_{1}, x_{2}, ..., x_{1000}</math> | ||

In practice, it may be difficult to find the joint distribution of random variables. | In practice, it may be difficult to find the joint distribution of random variables. We will explore different methods for simulating random variables, and how to draw conclusions using the simulated data. | ||

===Sampling from Uniform Distribution=== | |||

Computers cannot generate random numbers as they are deterministic; however they can produce pseudo random numbers using algorithms. Generated numbers mimic the properties of random numbers but they are never truly random. One famous algorithm that is considered highly reliable is the Mersenne twister[http://en.wikipedia.org/wiki/Mersenne_twister], which generates random numbers in an almost uniform distribution. | |||

====Multiplicative Congruential==== | ====Multiplicative Congruential==== | ||

*involves | *involves four parameters: integers <math>\,a, b, m</math>, and an initial value <math>\,x_0</math> which we call the seed | ||

*a sequence of integers is defined as | *a sequence of integers is defined as | ||

:<math>x_{k+1} \equiv (ax_{k} + b) \mod{m}</math> | :<math>x_{k+1} \equiv (ax_{k} + b) \mod{m}</math> | ||

| Line 21: | Line 39: | ||

<pre> | <pre> | ||

a=13; | a = 13; | ||

b=0; | b = 0; | ||

m=31; | m = 31; | ||

x(1)=1; | x(1) = 1; | ||

for ii = | for ii = 2:1000 | ||

x(ii | x(ii) = mod(a*x(ii-1)+b, m); | ||

end | end | ||

</pre> | </pre> | ||

| Line 42: | Line 60: | ||

hist(x) | hist(x) | ||

</pre> | </pre> | ||

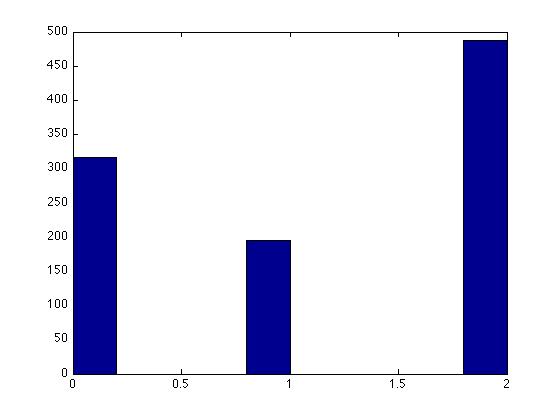

Histogram Output: | |||

[[File:uniform.jpg]] | |||

Facts about this algorithm: | Facts about this algorithm: | ||

*In this example, the first 30 terms in the sequence are a permutation of integers from 1 to 30 and then the sequence repeats itself. | *In this example, the first 30 terms in the sequence are a permutation of integers from 1 to 30 and then the sequence repeats itself. In general, with b = 0 the period is at most m-1; it equals m-1 when m is prime and a is a primitive root modulo m, as is the case here. | ||

*Values are between 0 and < | *Values are between <b>0</b> and <b>m-1</b>, inclusive. | ||

*Dividing the numbers by < | *Dividing the numbers by <b> m-1 </b> yields numbers in the interval <b>[0,1]</b>. | ||

*MATLAB's <code>rand</code> function once used this algorithm with < | *MATLAB's <code>rand</code> function once used this algorithm with <b>a= 7<sup>5</sup></b>, <b>b= 0</b>, <b>m= 2<sup>31</sup>-1</b>, for reasons described in Park and Miller's 1988 paper "Random Number Generators: Good Ones are Hard to Find" (available [http://www.firstpr.com.au/dsp/rand31/p1192-park.pdf online]). | ||

*Visual Basic's <code>RND</code> function also used this algorithm with < | *Visual Basic's <code>RND</code> function also used this algorithm with <b>a= 1140671485</b>, <b>b= 12820163</b>, <b>m= 2<sup>24</sup></b>. ([http://support.microsoft.com/kb/231847 Reference]) | ||
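
The facts above can be checked numerically for the small example. The following is a minimal sketch and not part of the original notes; the variable names <code>period</code>, <code>is_perm</code> and <code>u</code> are illustrative.

<pre>
% Sketch: check the period of x_{k+1} = (a*x_k + b) mod m for a = 13, b = 0, m = 31.
a = 13; b = 0; m = 31;
x = zeros(1, 61);
x(1) = 1;                                 % seed
for k = 2:61
    x(k) = mod(a*x(k-1) + b, m);
end
period  = find(x(2:end) == x(1), 1);      % steps until the seed reappears
is_perm = isequal(sort(x(1:30)), 1:30);   % first 30 terms cover 1..30 exactly once
u = x / (m - 1);                          % rescaled values, all within [0,1]
</pre>

For these parameters <code>period</code> is 30 and <code>is_perm</code> is true, which matches the first bullet above.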

===Inverse Transform Method=== | ===Inverse Transform Method=== | ||

This is a basic method for sampling. Theoretically using this method we can generate sample numbers at random from any probability distribution once we know its cumulative distribution function (cdf). | This is a basic method for sampling. Theoretically using this method we can generate sample numbers at random from any probability distribution once we know its cumulative distribution function (cdf). This method is very efficient computationally if the cdf can be analytically inverted. | ||

====Theorem==== | ====Theorem==== | ||

Take <math>U \sim~ \mathrm{Unif}[0, 1]</math> and let <math>X=F^{-1}(U)</math>. Then X has distribution function <math>F(\cdot)</math>, | Take <math>U \sim~ \mathrm{Unif}[0, 1]</math> and let <math>\ X = F^{-1}(U) </math>. Then <math>\ X</math> has a cumulative distribution function of <math>F(\cdot)</math>, i.e. <math>F(x)=P(X \leq x)</math>, where <math>F^{-1}(\cdot)</math> is the inverse of <math>F(\cdot)</math>. | ||

'''Proof''' | '''Proof''' | ||

| Line 81: | Line 101: | ||

*Step 1. Draw <math>U \sim~ \mathrm{Unif}[0, 1]</math> | *Step 1. Draw <math>U \sim~ \mathrm{Unif}[0, 1]</math> | ||

*Step 2. < | *Step 2. <b><i>X=F <sup>−1</sup>(U)</i></b> | ||

'''Example''' | '''Example''' | ||

| Line 87: | Line 107: | ||

Take the exponential distribution for example | Take the exponential distribution for example | ||

:<math>\,f(x)={\lambda}e^{-{\lambda}x}</math> | :<math>\,f(x)={\lambda}e^{-{\lambda}x}</math> | ||

:<math>\,F(x)=\int_0^x {\lambda}e^{-{\lambda}u} du=1-e^{-{\lambda}x}</math> | :<math>\,F(x)=\int_0^x {\lambda}e^{-{\lambda}u} du=[-e^{-{\lambda}u}]_0^x=1-e^{-{\lambda}x}</math> | ||

Let: <math>\,F(x)=y</math> | Let: <math>\,F(x)=y</math> | ||

| Line 98: | Line 118: | ||

*Step 1. Draw <math>U \sim~ \mathrm{Unif}[0, 1]</math> | *Step 1. Draw <math>U \sim~ \mathrm{Unif}[0, 1]</math> | ||

*Step 2. <math>x=\frac{-ln(1-U)}{\lambda}</math> | *Step 2. <math>x=\frac{-ln(1-U)}{\lambda}</math> | ||

Note: If U~Unif[0, 1], then (1 - U) and U have the same distribution. This allows us to slightly simplify step 2 into an alternate form: | |||

*Alternate Step 2. <math>x=\frac{-ln(U)}{\lambda}</math> | |||

'''MATLAB code''' | '''MATLAB code''' | ||

| Line 105: | Line 128: | ||

for ii = 1:1000 | for ii = 1:1000 | ||

u = rand; | u = rand; | ||

x(ii)=-log(1-u)/0.5 | x(ii) = -log(1-u)/0.5; | ||

end | end | ||

hist(x) | hist(x) | ||

</pre> | </pre> | ||

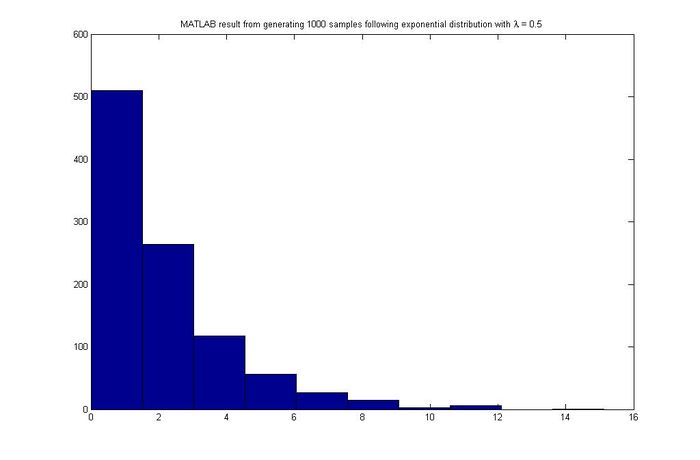

MATLAB result | '''MATLAB result''' | ||

[[File:MATLAB_Exp.jpg|center| | [[File:MATLAB_Exp.jpg|center|700px]] | ||

====Discrete Case==== | ====Discrete Case - September 22, 2011==== | ||

This same technique can be applied to the discrete case. Generate a discrete random variable <math>\,x</math> that has probability mass function <math>\,P(X=x_i)=P_i </math> where <math>\,x_0<x_1<x_2...</math> and <math>\,\sum_i P_i=1</math> | This same technique can be applied to the discrete case. Generate a discrete random variable <math>\,x</math> that has probability mass function <math>\,P(X=x_i)=P_i </math> where <math>\,x_0<x_1<x_2...</math> and <math>\,\sum_i P_i=1</math> | ||

*Step 1. Draw <math>u \sim~ \mathrm{Unif}[0, 1]</math> | *Step 1. Draw <math>u \sim~ \mathrm{Unif}[0, 1]</math> | ||

*Step 2. <math>\,x=x_i</math> if <math>\,F(x_{i-1})<u \leq F(x_i)</math> | *Step 2. <math>\,x=x_i</math> if <math>\,F(x_{i-1})<u \leq F(x_i)</math> | ||

'''Example''' | |||

Let x be a discrete random variable with the following probability mass function: | Let x be a discrete random variable with the following probability mass function: | ||

| Line 139: | Line 159: | ||

F(x) = \begin{cases} | F(x) = \begin{cases} | ||

0 & x < 0 \\ | 0 & x < 0 \\ | ||

0.3 & | 0.3 & 0 \leq x < 1 \\ | ||

0.5 & | 0.5 & 1 \leq x < 2 \\ | ||

1 & | 1 & x \geq 2 | ||

\end{cases}</math> | \end{cases}</math> | ||

| Line 149: | Line 169: | ||

<pre> | <pre> | ||

Draw U ~ Unif[0,1] | Draw U ~ Unif[0,1] | ||

if U <= 0.3 | |||

return 0 | |||

else if 0.3 < U <= 0.5 | |||

return 1 | |||

else if 0.5 < U <= 1 | |||

return 2 | |||

</pre> | </pre> | ||

| Line 163: | Line 181: | ||

<pre> | <pre> | ||

for ii = 1:1000 | for ii = 1:1000 | ||

u = rand; | |||

if u <= 0.3 | |||

x(ii) = 0; | |||

elseif u <= 0.5 | |||

x(ii) = 1; | |||

else | |||

x(ii) = 2; | |||

end | |||

end | end | ||

</pre> | </pre> | ||

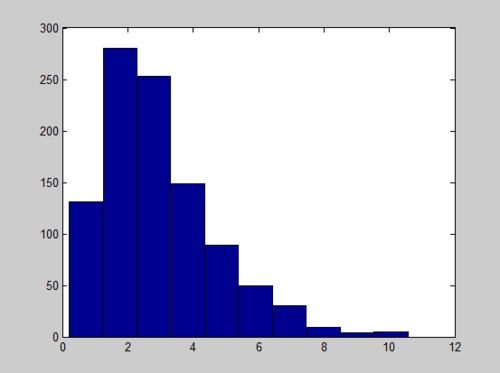

Matlab Output: | |||

[[File:Discreteinv.jpg]] | |||

'''Pseudo code for the Discrete Case:''' | '''Pseudo code for the Discrete Case:''' | ||

| Line 180: | Line 201: | ||

1. Draw U ~ Unif [0,1] | 1. Draw U ~ Unif [0,1] | ||

2. If <math> U \leq P_0 </math>, deliver < | 2. If <math> U \leq P_0 </math>, deliver <b><i>X= x<sub>0</sub></i></b> | ||

3. Else if <math> U \leq P_0 + P_1 </math>, deliver < | 3. Else if <math> U \leq P_0 + P_1 </math>, deliver <b><i>X= x<sub>1</sub></i></b> | ||

4. Else If <math> U \leq P_0 +....+ P_k </math>, deliver < | 4. Else If <math> U \leq P_0 +....+ P_k </math>, deliver <b><i>X= x<sub>k</sub></i></b> | ||
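
The pseudo code above can be sketched in MATLAB for an arbitrary finite support. This is an illustrative sketch only: the names <code>vals</code>, <code>p</code>, <code>F</code> and <code>freq</code> are not from the original notes, and the probabilities are taken from the example above (0.3, 0.2, 0.5).

<pre>
% Sketch of the general discrete inverse transform (names are illustrative).
vals = [0 1 2];            % support x_0 < x_1 < x_2
p    = [0.3 0.2 0.5];      % P_0, P_1, P_2 from the example above
F    = cumsum(p);          % cumulative sums P_0, P_0+P_1, ...
F(end) = 1;                % guard against round-off in the last sum
n = 1000;
x = zeros(n, 1);
for ii = 1:n
    u = rand;
    k = find(u <= F, 1);   % smallest k with u <= P_0 + ... + P_k
    x(ii) = vals(k);
end
freq = [mean(x == 0), mean(x == 1), mean(x == 2)];   % empirical frequencies
</pre>

The entries of <code>freq</code> should be close to <code>p</code>; the linear search with <code>find</code> mirrors the chain of "else if" comparisons.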

====Limitations==== | |||

This method is useful, but it's not practical in many cases because we can't always obtain <math>F</math> or <math> F^{-1} </math> (some functions are not integrable or invertible), and sometimes <math>f(x)</math> cannot be obtained in closed form. Let's look at some examples: | |||

*Continuous case | *Continuous case | ||

If we want to use this method to sample from the '''normal distribution''', we may find ourselves | If we want to use this method to sample from the '''normal distribution''', we may find ourselves getting stuck when trying to find its ''cdf''. | ||

The simplest case of '''normal distribution''' is <math>f(x)=\frac{1}{\sqrt{2\pi}}e^{-\frac{x^2}{2}}</math>, | The simplest case of '''normal distribution''' is <math>f(x)=\frac{1}{\sqrt{2\pi}}e^{-\frac{x^2}{2}}</math>, | ||

whose ''cdf'' is <math>F(x)=\frac{1}{\sqrt{2\pi}}\int_{-\infty}^{x}{e^{-\frac{u^2}{2}}}du</math>. This integral cannot be expressed in terms of elementary functions. So evaluating it and then finding the inverse is a very difficult task. | whose ''cdf'' is <math>F(x)=\frac{1}{\sqrt{2\pi}}\int_{-\infty}^{x}{e^{-\frac{u^2}{2}}}du</math>. This integral cannot be expressed in terms of elementary functions. So evaluating it and then finding the inverse is a very difficult task. | ||

| Line 197: | Line 219: | ||

But when n takes on values that are very large, say 50, it is hard to do so. | But when n takes on values that are very large, say 50, it is hard to do so. | ||

===Acceptance/Rejection | ===Acceptance/Rejection Method=== | ||

The aforementioned difficulties of the inverse transform method motivate a sampling method that does not require analytically calculating cdf's and their inverses, which is the acceptance/rejection sampling method. Here, <math> f(x)</math> is approximated by another function, say <math>g(x)</math>, with the idea being that <math>g(x)</math> is a "nicer" function to work with than <math>f(x)</math>. | The aforementioned difficulties of the inverse transform method motivate a sampling method that does not require analytically calculating cdf's and their inverses, which is the acceptance/rejection sampling method. Here, <math> \displaystyle f(x)</math> is approximated by another function, say <math>\displaystyle g(x)</math>, with the idea being that <math>\displaystyle g(x)</math> is a "nicer" function to work with than <math>\displaystyle f(x)</math>. | ||

Suppose we assume the following: | Suppose we assume the following: | ||

1. There exists another distribution <math>g(x)</math> that is easier to work with and that you know how to sample from, and | 1. There exists another distribution <math>\displaystyle g(x)</math> that is easier to work with and that you know how to sample from, and | ||

2. There exists a constant c such that <math>f(x) \leq c \cdot g(x)</math> for all x | 2. There exists a constant c such that <math>f(x) \leq c \cdot g(x)</math> for all x | ||

Under these assumptions, we can sample from <math>f(x)</math> by sampling from <math>g(x)</math> | Under these assumptions, we can sample from <math>\displaystyle f(x)</math> by sampling from <math>\displaystyle g(x)</math> | ||

====General Idea==== | ====General Idea==== | ||

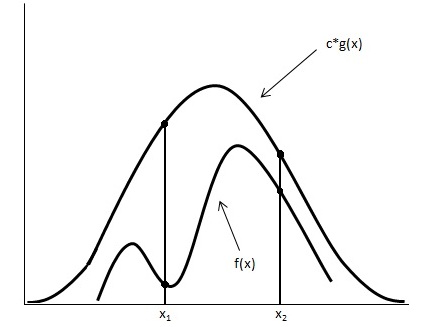

Looking at the image below we have graphed <math> c \cdot g(x) </math> and <math>f(x)</math>. | Looking at the image below we have graphed <math> c \cdot g(x) </math> and <math>\displaystyle f(x)</math>. | ||

[[File:Graph_updated.jpg]] | [[File:Graph_updated.jpg]] | ||

Using the acceptance/rejection method we will accept some of the points from <math>g(x)</math> and reject some of the points from <math> g(x)</math>. The points that will be accepted from <math>g(x)</math> will have a distribution similar to <math>f(x)</math>. We can see from the image that the values around <math>x_1</math> will be sampled more often under <math>c \cdot g(x)</math> than under <math>f(x)</math>, so we will have to reject more samples taken at x<sub>1</sub>. Around <math>x_2</math> the number of samples that are drawn and the number of samples we need are much closer, so we accept more samples that we get at <math>x_2</math> | Using the acceptance/rejection method we will accept some of the points from <math>\displaystyle g(x)</math> and reject some of the points from <math>\displaystyle g(x)</math>. The points that will be accepted from <math>\displaystyle g(x)</math> will have a distribution similar to <math>\displaystyle f(x)</math>. We can see from the image that the values around <math>\displaystyle x_1</math> will be sampled more often under <math>c \cdot g(x)</math> than under <math>\displaystyle f(x)</math>, so we will have to reject more samples taken at x<sub>1</sub>. Around <math>\displaystyle x_2</math> the number of samples that are drawn and the number of samples we need are much closer, so we accept more samples that we get at <math>\displaystyle x_2</math>. We should remember to take these considerations into account when evaluating the efficiency of a given acceptance-rejection method. Rejecting a high proportion of samples ultimately leaves us with a longer time until we retrieve our desired distribution. We should question whether a better function <math> g(x) </math> can be chosen in our situation. | ||

====Procedure==== | ====Procedure==== | ||

| Line 224: | Line 247: | ||

3. If <math> U \leq \frac{f(y)}{c \cdot g(y)}</math> then x=y; else return to 1 | 3. If <math> U \leq \frac{f(y)}{c \cdot g(y)}</math> then x=y; else return to 1 | ||

Note that the choice of <math> c </math> plays an important role in the efficiency of the algorithm. We want <math> c \cdot g(x) </math> to be "tightly fit" over <math> f(x) </math> to increase the probability of accepting points, and therefore reducing the number of sampling attempts. Mathematically, we want to minimize <math> c </math> such that <math>f(x) \leq c \cdot g(x) \ \forall x</math>. We do this by setting | |||

<math> \frac{d}{dx}(\frac{f(x)}{g(x)}) = 0 </math>, solving for a maximum point <math> x_0 </math> and setting <math> c = \frac{f(x_0)}{g(x_0)}. </math> | |||

====Proof==== | |||

Mathematically, we need to show that the sample points given that they are accepted have a distribution of f(x). | |||

<math>\begin{align} P(y|accepted) &= \frac{P(y, accepted)}{P(accepted)} \\ | |||

&= \frac{P(accepted|y) P(y)}{P(accepted)}\end{align} </math> (Bayes' Rule) | |||

<math>\displaystyle P(y) = g(y)</math> | |||

<math>P(accepted|y) =P(u\leq \frac{f(y)}{c \cdot g(y)}) =\frac{f(y)}{c \cdot g(y)} </math>,where u ~ Unif [0,1] | |||

<math>P(accepted) = \sum P(accepted|y)\cdot P(y)=\int^{}_y \frac{f(s)}{c \cdot g(s)}g(s) ds=\int^{}_y \frac{f(s)}{c} ds=\frac{1}{c} \cdot\int^{}_y f(s) ds=\frac{1}{c}</math> | |||

So, | |||

<math> P(y|accepted) = \frac{ \frac {f(y)}{c \cdot g(y)} \cdot g(y)}{\frac{1}{c}} =f(y) </math> | |||
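
The result <math>P(accepted) = \frac{1}{c}</math> can be checked by simulation. The sketch below assumes a specific target, <math>f(x) = 2x</math> on (0,1) (the Beta(2,1) density), with <math>g</math> uniform on (0,1) and <math>c = 2</math>; the variable names are illustrative and not from the original notes.

<pre>
% Sketch: empirical acceptance rate for f(x) = 2x on (0,1), g = Unif(0,1), c = 2.
c = 2;
n = 20000;
y = rand(n, 1);                  % proposals drawn from g
u = rand(n, 1);                  % uniforms for the accept/reject decision
accept = u <= (2*y) ./ (c*1);    % f(y) / (c*g(y)), with g(y) = 1
x = y(accept);                   % accepted points
rate = mean(accept);             % should be close to 1/c = 0.5
m1 = mean(x);                    % Beta(2,1) has mean 2/3
</pre>

Under these assumptions about half of the proposals are accepted, and the accepted points have sample mean near 2/3.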

====Continuous Case==== | |||

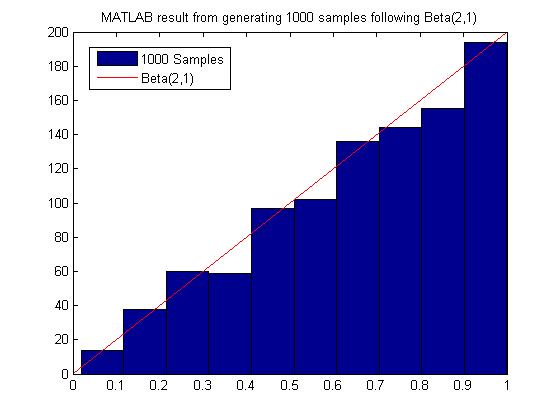

'''Example''' | '''Example''' | ||

| Line 233: | Line 280: | ||

In general: | In general: | ||

<math>Beta(\alpha, \beta) = \frac{\Gamma (\alpha + \beta)}{\Gamma(\alpha)\Gamma(\beta)}</math> <math>\displaystyle x^{\alpha-1}</math> <math>\displaystyle(1-x)^{\beta-1}</math>, <math>\displaystyle 0<x<1</math> | |||

Note: <math>\Gamma(n) = (n-1)!</math> if n is a positive integer | Note: <math>\!\Gamma(n) = (n-1)!</math> if n is a positive integer | ||

<math>\begin{align} f(x) &= Beta(2,1) \\ | <math>\begin{align} f(x) &= Beta(2,1) \\ | ||

| Line 242: | Line 289: | ||

&= 2x \end{align}</math> | &= 2x \end{align}</math> | ||

We want to choose <math>g(x)</math> that is easy to sample from. So we choose <math>g(x)</math> to be the uniform distribution. | We want to choose <math>\displaystyle g(x)</math> that is easy to sample from. So we choose <math>\displaystyle g(x)</math> to be the uniform distribution on <math>\ (0,1)</math> since that is the support of the Beta distribution. | ||

We now want a constant c such that <math>f(x) \leq c \cdot g(x) </math> for all x in (0,1) | We now want a constant c such that <math>f(x) \leq c \cdot g(x) </math> for all x in (0,1) | ||

So,<br /> | So,<br /> | ||

<math>c \geq \frac{f(x)}{g(x)}</math>, for all x from (0,1) | <math>c \geq \frac{f(x)}{g(x)}</math>, for all x from (0,1) | ||

| Line 293: | Line 340: | ||

[[File:MATLAB_Beta.jpg]] | [[File:MATLAB_Beta.jpg]] | ||

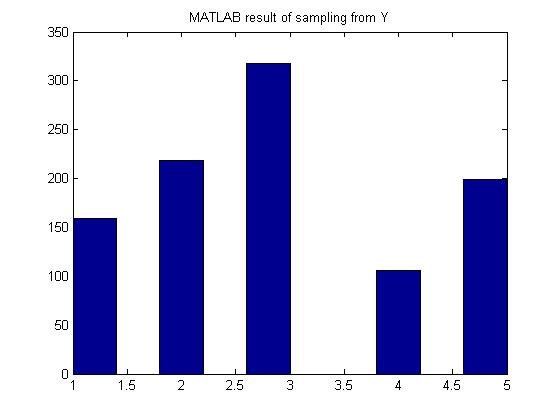

= | ====Discrete Example==== | ||

===Discrete Example=== | |||

Generate random variables according to the p.m.f: | Generate random variables according to the p.m.f: | ||

| Line 359: | Line 382: | ||

[[File:MATLAB_Y.jpg]] | [[File:MATLAB_Y.jpg]] | ||

== | ====Limitations==== | ||

===Sampling From Gamma=== | Most of the time we have to sample many more points from g(x) before we can obtain an acceptable amount of samples from f(x), hence this method may not be computationally efficient. It depends on our choice of g(x). For example, in the example above to sample from Beta(2,1), we need roughly 2000 samples from g(X) to get 1000 acceptable samples of f(x). | ||

In addition, in situations where a g(x) function is chosen and used, there can be a discrepancy between the functional behaviors of f(x) and g(x) that render this method unreliable. For example, given the normal distribution function as g(x) and a function of f(x) with a "fat" mid-section and "thin tails", this method becomes useless as more points near the two ends of f(x) will be rejected, resulting in a tedious and overwhelming number of sampling points having to be sampled due to the high rejection rate of such a method. | |||

===Sampling From Gamma and Normal Distribution - September 27, 2011=== | |||

====Sampling From Gamma==== | |||

'''Gamma Distribution''' | |||

The Gamma distribution is written as <math>X \sim~ Gamma (t, \lambda) </math> | The Gamma distribution is written as <math>X \sim~ Gamma (t, \lambda) </math> | ||

<math> F(x) = \int_{0}^{\lambda x} \frac{e^{-y}y^{t-1}}{(t-1)!} dy </math> | :<math> F(x) = \int_{0}^{\lambda x} \frac{e^{-y}y^{t-1}}{(t-1)!} dy </math> | ||

If you have t samples of the exponential distribution,<br> | If you have t samples of the exponential distribution,<br> | ||

<br> <math> \begin{align} | <br> <math> \begin{align} X_1 \sim~ Exp(\lambda)\\ \vdots \\ X_t \sim~ Exp(\lambda) \end{align} | ||

</math> | </math> | ||

The sum of these t samples has a gamma distribution, | The sum of these t samples has a gamma distribution, | ||

<math> | :<math> X_1+X_2+ ... + X_t \sim~ Gamma (t, \lambda) </math><br> | ||

<math> \sum_{i=1}^{t} | :<math> \sum_{i=1}^{t} X_i \sim~ Gamma (t, \lambda) </math> where <math>X_i \sim~Exp(\lambda)</math><br> | ||

We can sample the exponential distribution using the inverse transform method from previous class,<br> | '''Method''' <br> | ||

<math>\,f(x)={\lambda}e^{-{\lambda}x}</math><br> | Suppose we want to sample <math>\ k </math> points from <math>\ Gamma (t, \lambda) </math>. <br> | ||

<math>\,F^{-1}(u)=\frac{-ln(1-u)}{\lambda}</math><br> | We can sample the exponential distribution using the inverse transform method from the previous class,<br> | ||

<math>\,F^{-1}(u)=\frac{-ln(u)}{\lambda}</math> since 1 - u has the same distribution as u when <math>U \sim~ unif [0,1] </math><br> | :<math>\,f(x)={\lambda}e^{-{\lambda}x}</math><br> | ||

<math> \begin{align} \frac{-ln(u_1)}{\lambda} - \frac{ln(u_2)}{\lambda} - ... - \frac{ln(u_t)}{\lambda} = x_1\\ \vdots \\ \frac{-ln(u_1)}{\lambda} - \frac{ln(u_2)}{\lambda} - ... - \frac{ln(u_t)}{\lambda} = | :<math>\,F^{-1}(u)=\frac{-ln(1-u)}{\lambda}</math><br> | ||

</math><br> | :<math>\,F^{-1}(u)=\frac{-ln(u)}{\lambda}</math> | ||

<math> \frac {-\sum_{i=1}^{t} ln(u_i)}{\lambda} = x</math> | (1 - u) is the same as x since <math>U \sim~ unif [0,1] </math><br> | ||

:<math> \begin{align} \frac{-ln(u_1)}{\lambda} - \frac{ln(u_2)}{\lambda} - ... - \frac{ln(u_t)}{\lambda} = x_1\\ \vdots \\ \frac{-ln(u_1)}{\lambda} - \frac{ln(u_2)}{\lambda} - ... - \frac{ln(u_t)}{\lambda} = x_k \end{align} | |||

:</math><br> | |||

:<math> \frac {-\sum_{i=1}^{t} ln(u_i)}{\lambda} = x</math> | |||
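
The formula above can be sketched for a general rate <math>\lambda</math>. This is an illustrative sketch only: the values <code>t = 3</code>, <code>lambda = 2</code> and <code>k = 20000</code> are assumptions chosen for the check, not values from the original notes.

<pre>
% Sketch: k draws from Gamma(t, lambda) as sums of t exponentials.
t = 3; lambda = 2; k = 20000;    % assumed values for illustration
u = rand(k, t);                  % one row of t uniforms per sample
x = -sum(log(u), 2) / lambda;    % x = -(ln u_1 + ... + ln u_t) / lambda
sample_mean = mean(x);           % theory: t/lambda   = 1.5
sample_var  = var(x);            % theory: t/lambda^2 = 0.75
</pre>

Each row uses its own t uniforms, so the k samples are independent; the sample mean and variance should be close to <math>t/\lambda</math> and <math>t/\lambda^2</math>.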

MATLAB code for a Gamma(1 | '''MATLAB code''' for a Gamma(3,1) is | ||

<pre> | <pre> | ||

| Line 393: | Line 427: | ||

And the Histogram of X follows a Gamma distribution with long tail: | And the Histogram of X follows a Gamma distribution with long tail: | ||

[[File:Hist.PNG]] | [[File:Hist.PNG|center|500px]] | ||

We can improve the quality of histogram by adjusting the Matlab code to include the number of bins we want: hist(x, number_of_bins) | |||

<pre> | |||

x = sum(-log(rand(20000,3)),2); | |||

hist(x,40) | |||

</pre> | |||

[[File:untitled.jpg|center|500px]] | |||

''' R code''' for a Gamma(3,1) is | |||

<pre> | <pre> | ||

a<-apply(-log(matrix(runif(3000),nrow=1000)),1,sum); | a<-apply(-log(matrix(runif(3000),nrow=1000)),1,sum); | ||

hist(a); | hist(a); | ||

</pre> | </pre> | ||

Histogram: | |||

[[File:hist_gamma.png]] | [[File:hist_gamma.png|center|500px]] | ||

Here is another histogram of Gamma coding with R | Here is another histogram of Gamma coding with R | ||

| Line 412: | Line 454: | ||

rug(jitter(a)); | rug(jitter(a)); | ||

</pre> | </pre> | ||

[[File:hist_gamma_2.png]] | [[File:hist_gamma_2.png|center|500px]] | ||

===Box-Muller Transform=== | ====Sampling from Normal Distribution using Box-Muller Transform - September 29, 2011==== | ||

=====Procedure===== | |||

==== | # Generate <math>\displaystyle u_1</math> and <math>\displaystyle u_2</math>, two values sampled from a uniform distribution between 0 and 1. | ||

# Set <math>\displaystyle R^2 = -2log(u_1)</math> so that <math>\displaystyle R^2</math> is exponential with mean 2 <br> Set <math>\!\theta = 2*\pi*u_2</math> so that <math>\!\theta</math> ~ <math>\ Unif[0, 2\pi] </math> | |||

# Set <math>\displaystyle X = R cos(\theta)</math> <br> Set <math>\displaystyle Y = R sin(\theta)</math> | |||

=====Justification===== | |||

Suppose we have X ~ N(0, 1) and Y ~ N(0, 1) where X and Y are independent normal random variables. The joint probability density function of these two random variables using Cartesian coordinates is: | |||

<math> f(X, Y) dxdy= f(X) f(Y) dxdy= \frac{1}{\sqrt{2\pi}}e^{-x^2/2} \frac{1}{\sqrt{2\pi}}e^{-y^2/2} dxdy= \frac{1}{2\pi}e^{-(x^2+y^2)/2}dxdy </math> <br> | |||

In polar coordinates <math>\displaystyle R^2 = x^2 + y^2</math>, so the same density expressed in terms of polar coordinates is: | |||

<math> f(R, \theta) = \frac{1}{2\pi}e^{-R^2/2} </math> <br> | <math> f(R, \theta) = \frac{1}{2\pi}e^{-R^2/2} </math> <br> | ||

If we have <math>R^2 | If we have <math>\displaystyle R^2 \sim exp(1/2)</math> and <math>\!\theta \sim unif[0, 2\pi]</math> we get an equivalent probability density function. Notice that when changing variables from Cartesian to polar coordinates, the determinant of the Jacobian needs to be included in the change of variable formula where: | ||

<math> |J|=\left|\frac{\partial(x,y)}{\partial(R,\theta)}\right|= \left|\begin{matrix}\frac{\partial x}{\partial R}&\frac{\partial x}{\partial \theta}\\\frac{\partial y}{\partial R}&\frac{\partial y}{\partial \theta}\end{matrix}\right|=R </math> <br> | |||

<math> f( | <math> f(X, Y) dxdy = f(R, \theta)|J|dRd\theta = \frac{1}{2\pi}e^{-R^2/2}R dRd\theta= \frac{1}{4\pi}e^{-\frac{S}{2}} dSd\theta </math> <br>where <math> S=R^2. </math> <br> | ||

Therefore we can generate a point in polar coordinates using the uniform and exponential distributions, then convert the point to Cartesian coordinates and the resulting X and Y values will be equivalent to samples generated from N(0, 1). | Therefore we can generate a point in polar coordinates using the uniform and exponential distributions, then convert the point to Cartesian coordinates and the resulting X and Y values will be equivalent to samples generated from N(0, 1). | ||

'''MATLAB code''' | |||

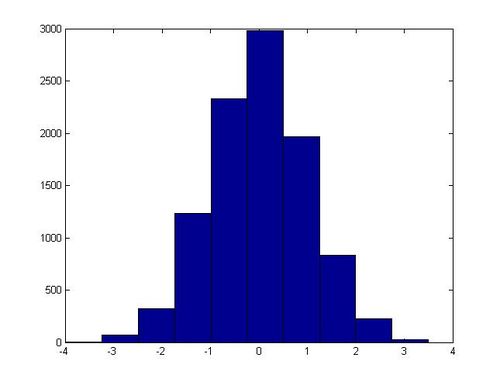

In MATLAB this algorithm can be implemented with the following code, which generates 20,000 samples from N(0, 1): | In MATLAB this algorithm can be implemented with the following code, which generates 20,000 samples from N(0, 1): | ||

| Line 486: | Line 501: | ||

In one execution of this script, the following histogram for x was generated: | In one execution of this script, the following histogram for x was generated: | ||

[[File:Hist standard normal.jpg]] | [[File:Hist standard normal.jpg|center|500px]] | ||

===Non-Standard Normal Distributions=== | =====Non-Standard Normal Distributions===== | ||

'''Example 1: Single-variate Normal''' | |||

If X ~ Norm(0, 1) then (a + bX) has a normal distribution with a mean of <math>\displaystyle a</math> and a standard deviation of <math>\displaystyle b</math> (which is equivalent to a variance of <math>\displaystyle b^2</math>). Using this information with the Box-Muller transform, we can generate values sampled from some random variable <math>\displaystyle Y\sim N(a,b^2) </math> for arbitrary values of <math>\displaystyle a,b</math>. | |||

# Generate a sample u from Norm(0, 1) using the Box-Muller transform. | |||

# Set v = a + bu. | |||

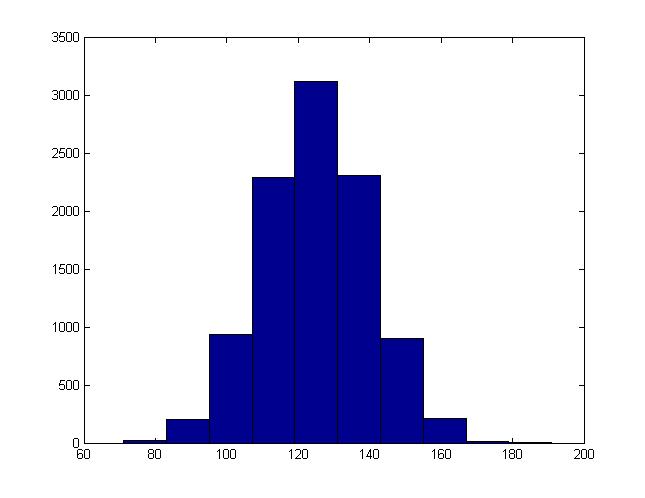

The values for v generated in this way will be equivalent to | The values for v generated in this way will be equivalent to samples from a <math>\displaystyle N(a, b^2)</math> distribution. We can modify the MATLAB code used in the last section to demonstrate this. We just need to add one line before we generate the histogram: | ||

<pre> | |||

v = a + b * x; | |||

</pre> | |||

For instance, this is the histogram generated when b = 15, a = 125: | For instance, this is the histogram generated when b = 15, a = 125: | ||

[[File:Hist normal.jpg]] | [[File:Hist normal.jpg|center|500px]] | ||

'''Example 2: Multi-variate Normal''' | |||

// In | The Box-Muller method can be extended to higher dimensions to generate multivariate normals. The objects generated will be nx1 vectors, and their variance will be described by nxn covariance matrices. | ||

<math>\mathbf{z} \sim N(\mathbf{u}, \Sigma)</math> defines the n by 1 vector <math>\mathbf{z}</math> such that: | |||

* <math>\displaystyle u_i</math> is the average of <math>\displaystyle z_i</math> | |||

* <math>\!\Sigma_{ii}</math> is the variance of <math>\displaystyle z_i</math> | |||

* <math>\!\Sigma_{ij}</math> is the co-variance of <math>\displaystyle z_i</math> and <math>\displaystyle z_j</math> | |||

If <math>\displaystyle z_1, z_2, ..., z_d</math> are independent normal variables with mean 0 and variance 1, then the vector <math>\displaystyle (z_1, z_2,..., z_d) </math> has mean 0 and covariance matrix <math>\!I</math>, where 0 is the zero vector and <math>\!I</math> is the identity matrix. This fact suggests that the method for generating a multivariate normal is to generate each component individually as single normal variables. | |||

The mean and the covariance matrix of a multivariate normal distribution can be adjusted in ways analogous to the single variable case. If <math>\mathbf{z} \sim N(0,I)</math>, then <math>\Sigma^{1/2}\mathbf{z}+\mu \sim N(\mu,\Sigma)</math>. Note here that the covariance matrix is symmetric and positive semi-definite, so its square root always exists. | |||

We can compute <math>\mathbf{z}</math> in the following way: | |||

# Generate an n by 1 vector <math>\mathbf{x} = \begin{bmatrix}x_{1} & x_{2} & ... & x_{n}\end{bmatrix}^T</math> where <math>x_{i}</math> ~ Norm(0, 1) using the Box-Muller transform. | |||

# Calculate <math>\!\Sigma^{1/2}</math> using singular value decomposition. | |||

# Set <math>\mathbf{z} = \Sigma^{1/2} \mathbf{x} + \mathbf{u}</math>. | |||

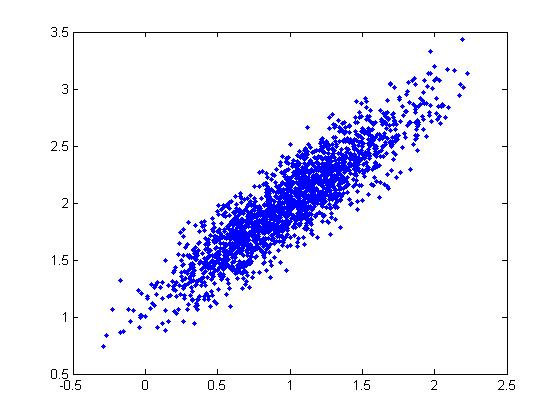

The following MATLAB code provides an example, where a scatter plot of 10000 random points is generated. In this case x and y have a co-variance of 0.9 - a very strong positive correlation. | |||

<pre> | <pre> | ||

| Line 524: | Line 560: | ||

root_E = u * (s ^ (1 / 2)); | root_E = u * (s ^ (1 / 2)); | ||

z = (root_E * [x y]') | z = (root_E * [x y]'); | ||

z(: | z(1,:) = z(1,:) + 0; | ||

z(: | z(2,:) = z(2,:) + -3; | ||

scatter(z(: | scatter(z(1,:), z(2,:)) | ||

</pre> | </pre> | ||

This code generated the following scatter plot: | This code generated the following scatter plot: | ||

[[File:scatter covar.jpg]] | [[File:scatter covar.jpg|center|500px]] | ||

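In Matlab, we can also use the function "sqrtm()" or "chol()" (Cholesky Decomposition) to calculate a square root of a matrix directly. Note that the resulting root matrices may be different, but this does not materially affect the simulation. Also note that "chol()" returns an upper triangular matrix R with R'*R = E, so R' takes the place of <math>\!\Sigma^{1/2}</math>. | |||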

Here is an example: | |||

<pre> | |||

E = [1, 0.9; 0.9, 1]; | |||

r1 = sqrtm(E); | |||

r2 = chol(E); | |||

</pre> | |||
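
As a quick check, the sketch below verifies that both roots reproduce <code>E</code> and that samples built from the Cholesky factor have roughly the intended covariance. It is illustrative only: the names <code>err1</code>, <code>err2</code> and <code>C</code> are not from the original notes, and <code>randn</code> is used in place of the Box-Muller code for brevity.

<pre>
% Sketch: compare the two matrix roots of E and use one to simulate.
E  = [1, 0.9; 0.9, 1];
r1 = sqrtm(E);               % symmetric root:   r1*r1  = E
r2 = chol(E);                % upper triangular: r2'*r2 = E
err1 = norm(r1*r1 - E);      % both errors should be near machine precision
err2 = norm(r2'*r2 - E);
x = randn(2, 10000);         % columns are independent N(0, I) vectors
z = r2' * x;                 % columns now have covariance r2'*r2 = E
C = cov(z');                 % sample covariance, close to E
</pre>

The two roots differ entry by entry, but either one gives samples with covariance <code>E</code>.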

R code for a multivariate normal distribution: | |||

<pre> | |||

n=10000; | |||

r2<- -2*log(runif(n)); | |||

theta<-2*pi*(runif(n)); | |||

x<-sqrt(r2)*cos(theta); | |||

y<-sqrt(r2)*sin(theta); | |||

a<-matrix(c(x,y),nrow=n,byrow=F); | |||

e<-matrix(c(1,.9,.9,1),nrow=2,byrow=T); | |||

svde<-svd(e); | |||

root_e<-svde$u %*% diag(sqrt(svde$d)); | |||

z<-t(root_e %*%t(a)); | |||

z[,1]=z[,1]+5; | |||

z[,2]=z[,2]+ -8; | |||

par(pch=19); | |||

plot(z,col=rgb(1,0,0,alpha=0.06)) | |||

</pre> | |||

[[File:m_normal.png|center|500px]] | |||

=====Remarks===== | |||

MATLAB's randn function uses the ziggurat method to generate normally distributed samples. It is an efficient rejection method based on covering the probability density function with a set of horizontal rectangles so as to obtain points within each rectangle. It is reported that an 800 MHz Pentium III laptop can generate over 10 million random numbers from a normal distribution in less than one second. ([http://www.mathworks.com/company/newsletters/news_notes/clevescorner/spring01_cleve.html Reference]) | |||

===Sampling From Binomial Distributions=== | ===Sampling From Binomial Distributions=== | ||

In order to generate a sample x from <math>\displaystyle X \sim Bin(n, p)</math>, we can use the following procedure:

1. Generate n uniform random numbers sampled from <math>\displaystyle Unif [0, 1] </math>: <math>\displaystyle u_1, u_2, ..., u_n</math>.

2. Set x to be the number of indices <math>\displaystyle 1 \leq i \leq n</math> for which <math>\displaystyle u_i \leq p</math>.

In MATLAB this can be coded in a single line. The following generates a sample from <math>\displaystyle X \sim Bin(n, p)</math>:

sum(rand(n, 1) <= p, 1) | |||
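As a quick sanity check of this procedure, the following sketch draws many binomial samples at once and compares the sample mean and variance with the theoretical values np and np(1-p). The values of n, p and the number of replications N are arbitrary choices made for illustration.

<pre>
n = 20; p = 0.3; N = 100000;       % illustrative values, not from the lecture
x = sum(rand(n, N) <= p, 1);       % each column of rand(n, N) yields one Bin(n,p) sample
sample_mean = mean(x)              % should be close to n*p = 6
sample_var  = var(x)               % should be close to n*p*(1-p) = 4.2
</pre>

Generating all N samples through a single n-by-N matrix avoids an explicit loop, at the cost of storing n*N uniform numbers.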

==Bayesian Inference and Frequentist Inference - October 4, 2011== | |||

===Bayesian inference vs Frequentist inference=== | |||

The Bayesian method has become popular in the last few decades as simulation and computer technology makes it more applicable. For more information about its history and application, please refer to http://en.wikipedia.org/wiki/Bayesian_inference. | |||

As for frequentist inference, please refer to http://en.wikipedia.org/wiki/Frequentist_inference. | |||

====Example==== | |||

Consider the 'probability' that a person drinks a cup of coffee on a specific day. A frequentist and a Bayesian interpret this as follows:

<br><br>

Frequentist: This expression has no interpretation. The event occurs only once, so there is no long-run relative frequency to refer to, and it is therefore not a probability.

<br>

Bayesian: Probability captures not only the frequency of occurrences but also one's degree of belief about the random component of a proposition. Therefore it is a valid probability.

====Example of face identification==== | |||

Consider a picture of a face that is associated with an identity (person). Take the face as input x and the person as output y. The person can be either Ali or Tom. We have y=1 if it is Ali and y=0 if it is Tom. We can divide the picture into 100*100 pixels and insert them into a 10,000*1 column vector, which captures x. | |||

If you are a frequentist, you would compare <math>\Pr(X=x|y=1)</math> with <math>\Pr(X=x|y=0)</math> and see which one is higher.<br /> If you are Bayesian, you would compare <math>\Pr(y=1|X=x)</math> with <math>\Pr(y=0|X=x)</math>. | |||

====Summary of differences between two schools==== | |||

*Frequentist: Probability refers to limiting relative frequency. (objective) | |||

*Bayesian: Probability describes degree of belief not frequency. (subjective) | |||

e.g. The probability that you drank a cup of tea on May 20, 2001 is 0.62 does not refer to any frequency. | |||

---- | |||

*Frequentist: Parameters are fixed, unknown constants. | |||

*Bayesian: Parameters are random variables and we can make probabilistic statements about them.

---- | |||

*Frequentist: Statistical procedures should be designed to have long run frequency properties. | |||

e.g. a 95% confidence interval should trap the true value of the parameter with limiting frequency at least 95%.

*Bayesian: It makes inferences about <math>\theta</math> by producing a probability distribution for <math>\theta</math>. Inference (e.g. point estimates and interval estimates) will be extracted from this distribution :<math>f(\theta|X) = \frac{f(X | \theta)\, f(\theta)}{f(X)}.</math> | |||

====Bayesian inference==== | |||

Bayesian inference is usually carried out in the following way: | |||

1. Choose a prior probability density function of <math>\!\theta</math> which is <math>f(\!\theta)</math>. This is our belief about <math>\theta</math> before we see any data. | |||

2. Choose a statistical model <math>\displaystyle f(x|\theta)</math> that reflects our beliefs about X. | |||

3. After observing data <math>\displaystyle x_1,...,x_n</math>, we update our beliefs and calculate the posterior probability. | |||

<math>f(\theta|x) = \frac{f(\theta,x)}{f(x)}=\frac{f(x|\theta) \cdot f(\theta)}{f(x)}=\frac{f(x|\theta) \cdot f(\theta)}{\int^{}_\theta f(x|\theta) \cdot f(\theta) d\theta}</math>, where <math>\displaystyle f(\theta|x)</math> is the posterior probability, <math>\displaystyle f(\theta)</math> is the prior probability, <math>\displaystyle f(x|\theta)</math> is the likelihood of observing X=x given <math>\!\theta</math> and f(x) is the marginal probability of X=x. | |||

If we have i.i.d. observations <math>\displaystyle x_1,...,x_n</math>, we can replace <math>\displaystyle f(x|\theta)</math> with <math>f({x_1,...,x_n}|\theta)=\prod_{i=1}^n f(x_i|\theta)</math> because of independence.

We denote <math>\displaystyle f({x_1,...,x_n}|\theta)</math> as <math>\displaystyle L_n(\theta)</math> which is called likelihood. And we use <math>\displaystyle x^n</math> to denote <math>\displaystyle (x_1,...,x_n)</math>. | |||

<math>f(\theta|x^n) = \frac{f(x^n|\theta) \cdot f(\theta)}{f(x^n)}=\frac{f(x^n|\theta) \cdot f(\theta)}{\int^{}_\theta f(x^n|\theta) \cdot f(\theta) d\theta}</math> , where <math>\int^{}_\theta f(x^n|\theta) \cdot f(\theta) d\theta</math> is a constant <math>\displaystyle c_n</math> which does not depend on <math>\displaystyle \theta </math>. So <math>f(\theta|x^n) \propto f(x^n|\theta) \cdot f(\theta)</math>. The posterior probability is proportional to the likelihood times prior probability. | |||

Note that it does not matter if we throw away <math>\displaystyle c_n</math>, since we can always recover it by requiring that the posterior density integrate to 1.

What do we do with the posterior distribution?

*Point estimate | |||

<math> \bar{\theta}=\int\theta \cdot f(\theta|x^n) d\theta=\frac{\int\theta \cdot L_n(\theta)\cdot f(\theta) d(\theta)}{c_n}</math> | |||

*Bayesian interval estimate

Choose a and b such that <math> \int^{a}_{-\infty} f(\theta|x^n) d\theta=\int^{\infty}_{b} f(\theta|x^n) d\theta=\frac{\alpha}{2} </math>

Let C=(a,b); | |||

Then <math> P(\theta\in C|x^n)=\int^{b}_{a} f(\theta|x^n)d(\theta)=1-\alpha </math>. | |||

C is a <math>\displaystyle 1-\alpha</math> posterior interval. | |||

For a vector of parameters, let <math>\tilde{\theta}=(\theta_1,...,\theta_d)^T</math>. Then the marginal posterior of <math>\theta_1</math> is <math>f(\theta_1|x^n) = \int^{} \int^{} \dots \int^{}f(\tilde{\theta}|x^n)d\theta_2d\theta_3 \dots d\theta_d </math> and its posterior mean is <math>E(\theta_1|x^n)=\int^{}\theta_1 \cdot f(\theta_1|x^n) d\theta_1</math>

====Example 1: Estimating parameters of a univariate Gaussian distribution==== | |||

Suppose X follows a univariate Gaussian distribution (i.e. a Normal distribution) with parameters <math>\!\mu</math> and <math>\displaystyle {\sigma^2}</math>.

(a) For Frequentists: | |||

<math>f(x|\theta)= \frac{1}{\sqrt{2\pi}\sigma} \cdot e^{-\frac{1}{2}(\frac{x-\mu}{\sigma})^2}</math> | |||

<math>L_n(\theta)= \prod_{i=1}^n \frac{1}{\sqrt{2\pi}\sigma} \cdot e^{-\frac{1}{2}(\frac{x_i-\mu}{\sigma})^2}</math> | |||

<math>\ln L_n(\theta) = l(\theta) = \sum_{i=1}^n[ -\frac{1}{2}\ln 2\pi-\ln \sigma-\frac{1}{2}(\frac{x_i-\mu}{\sigma})^2]</math> | |||

To get the maximum likelihood estimator of <math>\!\mu</math> (mle), we find the <math>\hat{\mu}</math> which maximizes <math>\displaystyle L_n(\theta)</math>: | |||

<math>\frac{\partial l(\theta)}{\partial \mu}= \sum_{i=1}^n \frac{1}{\sigma}(\frac{x_i-\mu}{\sigma})=0 \Rightarrow \sum_{i=1}^n x_i = n\mu \Rightarrow \hat{\mu}_{mle}=\bar{x}</math> | |||

(b) For Bayesians: | |||

<math>f(\theta|x) \propto f(x|\theta) \cdot f(\theta)</math> | |||

We assume that the mean of the above normal distribution is itself normally distributed with mean <math>\!\mu_0</math> and variance <math>\!\Gamma^2</math>, and that <math>\!\sigma^2</math> is known.

Suppose <math>\!\mu\sim N(\mu_0, \!\Gamma^2)</math>,

so <math>f(\mu) = \frac{1}{\sqrt{2\pi}\Gamma} \cdot e^{-\frac{1}{2}(\frac{\mu-\mu_0}{\Gamma})^2}</math>

<math>f(x|\mu) = \frac{1}{\sqrt{2\pi}\sigma} \cdot e^{-\frac{1}{2}(\frac{x-\mu}{\sigma})^2}</math>

Multiplying the likelihood of the n observations by the prior and completing the square in <math>\!\mu</math> shows that the posterior is again normal, with mean

<math>\tilde{\mu} = \frac{\frac{n}{\sigma^2}}{\frac{n}{\sigma^2}+\frac{1}{\Gamma^2}}\bar{x}+\frac{\frac{1}{\Gamma^2}}{\frac{n}{\sigma^2}+\frac{1}{\Gamma^2}}\mu_0</math>, where <math>\tilde{\mu}</math> is the Bayesian estimator of <math>\!\mu</math>.

* If the prior belief about <math>\!\mu</math> is strong, then <math>\!\Gamma</math> is small and <math>\frac{1}{\Gamma^2}</math> is large, so <math>\tilde{\mu}</math> is close to <math>\!\mu_0</math> and the observations have little effect. Conversely, if the prior belief is weak, <math>\!\Gamma</math> is large and <math>\frac{1}{\Gamma^2}</math> is small, so <math>\tilde{\mu}</math> depends more on the observations. (This is intuitive: when our original belief is reliable, the sample adds little; when it is not, we rely heavily on the sample.)

* When the sample is large (i.e. n <math>\to \infty</math>), <math>\tilde{\mu} \to \bar{x}</math> and the impact of prior belief about <math>\!\mu</math> is weakened. | |||
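The weighting between the sample mean and the prior mean can be seen numerically. The following is a minimal sketch on simulated data; the true mean, the known standard deviation, the prior parameters and the sample size are all hypothetical values chosen for illustration.

<pre>
mu_true = 2; sigma = 1;            % data model N(mu, sigma^2), sigma assumed known
mu0 = 0; Gamma = 0.5;              % prior N(mu0, Gamma^2), hypothetical values
n = 50;
x = mu_true + sigma*randn(n,1);    % simulated observations
xbar = mean(x);                    % frequentist estimate (mle)
w = (n/sigma^2) / (n/sigma^2 + 1/Gamma^2);   % weight placed on the sample mean
mu_tilde = w*xbar + (1-w)*mu0      % Bayesian estimate, lies between mu0 and xbar
</pre>

With these values w = 50/54, so the estimate is already dominated by the data; decreasing Gamma or n moves it back toward the prior mean.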

=='''Basic Monte Carlo Integration - October 6th, 2011'''== | |||

Three integration methods will be covered in this course:

*Basic Monte Carlo Integration | |||

*Importance Sampling | |||

*Markov Chain Monte Carlo (MCMC) | |||

The first, and most basic, method of numerical integration we will see is Monte Carlo Integration. We use this to solve an integral of the form: <math> I = \int_{a}^{b} h(x) dx </math> | |||

Note the following derivation: | |||

<math>\begin{align} | |||

\displaystyle I & = \int_{a}^{b} h(x)dx \\ | |||

& = \int_{a}^{b} h(x)((b-a)/(b-a))dx \\ | |||

& = \int_{a}^{b} (h(x)(b-a))(1/(b-a))dx \\ | |||

& = \int_{a}^{b} w(x)f(x)dx \\ | |||

& = E[w(x)] \\ | |||

\end{align} | |||

</math> | |||

<math> \approx \frac{1}{n} \sum_{i=1}^{n} w(x_i) </math>

where <math>w(x) = h(x)(b-a)</math> and <math>f(x) = \frac{1}{b-a}</math> is the probability density function of a uniform random variable on the interval [a,b]. The expectation of w with respect to f is approximated by the average of w over n samples of x.

===='''General Procedure'''==== | |||

i) Draw n samples <math> x_i \sim~ Unif[a,b] </math> | |||

ii) Compute <math> \ w(x_i) </math> for every sample | |||

iii) Obtain an estimate of the integral, <math> \hat{I} </math>, as follows: | |||

<math> \hat{I} = \frac{1}{n} \sum_{i=1}^{n} w(x_i)</math> . Clearly, this is just the average of the simulation results. | |||

By the strong law of large numbers <math> \hat{I} </math> converges to <math> \ I </math> as <math> \ n \rightarrow \infty </math>. Because of this, we can compute all sorts of useful information, such as variance, standard error, and confidence intervals. | |||

Variance: <math> V = \frac{\sum_{i=1}^{n} (w(x_i)-\hat{I})^2}{n-1} </math>

Standard Error: <math> SE = \sqrt{\frac{V}{n}} </math>

Confidence Interval: <math> \hat{I} \pm t_{(\alpha/2)} SE </math>
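The procedure and the error measures can be put together in a few lines. The sketch below uses the integrand <math>h(x) = x^3</math> on [0,1], whose exact integral is 0.25, purely as an illustration. It also uses the normal quantile 1.96 in place of the t quantile, which is an approximation that is reasonable only because n is large.

<pre>
h = @(x) x.^3; a = 0; b = 1;       % illustrative integrand and limits
n = 10000;
x = a + (b-a)*rand(n,1);           % i) samples from Unif[a,b]
w = h(x)*(b-a);                    % ii) w(x_i) = h(x_i)(b-a)
I_hat = mean(w);                   % iii) Monte Carlo estimate of the integral
SE = std(w)/sqrt(n);               % std uses the n-1 denominator, as in V
CI = [I_hat - 1.96*SE, I_hat + 1.96*SE]   % approximate 95% confidence interval
</pre>

Since SE shrinks like <math>1/\sqrt{n}</math>, halving the width of the interval requires roughly four times as many samples.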

==='''Example: Uniform Distribution'''=== | |||

Consider the integral, <math> \int_{0}^{1} x^3dx </math>, which is easily solved through standard analytical integration methods, and is equal to .25. Now, let us check this answer with a numerical approximation using Monte Carlo Integration. | |||

We generate a 1 by 10000 vector of uniform (on the interval [0,1]) random variables and call that vector 'u'. We see that our 'w' in this case is <math> x^3 </math>, so we set <math> w = u^3 </math>. Our <math>\hat{I}</math> is equal to the mean of w. | |||

In Matlab, we can solve this integration problem with the following code: | |||

<pre> | |||

u = rand(1,10000); | |||

w = u.^3; | |||

mean(w) | |||

ans = 0.2475 | |||

</pre> | |||

Note the '.' after 'u' in the second line of code, indicating that each entry in the matrix is cubed. Also, our approximation is close to the actual value of .25. Now let's try to get an even better approximation by generating more sample points. | |||

<pre> | |||

u= rand(1,100000); | |||

w= u.^3; | |||

mean(w) | |||

ans = .2503 | |||

</pre> | |||

We see that when the number of sample points is increased, our approximation improves, as one would expect. | |||

==='''Generalization'''=== | |||

Up to this point we have seen how to numerically approximate an integral when the distribution of f is uniform. Now we will see how to generalize this to other distributions. | |||

<math> I = \int h(x)f(x)dx </math> | |||

If f is a probability density function (pdf), then <math> I = E_f[h(x)] </math>, the expectation of h with respect to the distribution f, and it can be estimated by a sample average. Our previous example is the special case where f is the uniform distribution on [a,b].

'''Procedure for the General Case''' | |||

i) Draw n samples from f | |||

ii) Compute h(x<sub>i</sub>) | |||

iii) <math>\hat{I} = \frac{1}{n} \sum_{i=1}^{n} h(x_i)</math>

==='''Example: Exponential Distribution'''=== | |||

Find <math> E[\sqrt{x}] </math> for <math> \displaystyle f = e^{-x} </math>, which is the exponential distribution with mean 1. | |||

<math> I = \int_{0}^{\infty} \sqrt{x} e^{-x}dx </math> | |||

We can see that we must draw samples from f, the exponential distribution. | |||

To find a numerical solution using Monte Carlo Integration we see that: | |||

u = rand(1,10000)<br />

x = -log(u)<br />

h = <math> \sqrt{x} </math> <br />

I = mean(h)

To implement this procedure in Matlab, use the following code: | |||

<pre> | |||

u = rand(1,10000); | |||

x = -log(u);   % inverse transform: x follows the exponential distribution with mean 1

h = x.^.5;

mean(h) | |||

ans = .8841 | |||

</pre> | |||

An easy way to check whether your approximation is correct is to use the built in Matlab function 'quadl' which takes a function and bounds for the integral and returns a solution for the definite integral of that function. For this specific example, we can enter: | |||

<pre> | |||

f = @(x) sqrt(x).*exp(-x); | |||

% quadl runs into computational problems when the upper bound is "inf" or an extremely large number, | |||

% so choose just a moderately large number. | |||

quadl(f,0,100) | |||

ans = | |||

0.8862 | |||

</pre> | |||

From the above result, we see that our approximation was quite close. | |||
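For reference, this particular integral also has a closed form in terms of the Gamma function, which agrees with the value returned by quadl:

<math> I = \int_{0}^{\infty} x^{1/2} e^{-x}dx = \Gamma\left(\tfrac{3}{2}\right) = \tfrac{1}{2}\Gamma\left(\tfrac{1}{2}\right) = \frac{\sqrt{\pi}}{2} \approx 0.8862 </math>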

==='''Example: Normal Distribution'''=== | |||

Let <math> f(x) = (1/(2 \pi)^{1/2}) e^{(-x^2)/2} </math>. Compute the cumulative distribution function at some point x. | |||

<math> F(x)= \int_{-\infty}^{x} f(s)ds = \int_{-\infty}^{x}(1)(1/(2 \pi)^{1/2}) e^{(-s^2)/2}ds </math>. The (1) is inserted to illustrate that, within the range of integration, h is the constant function 1 and f is the standard normal density. To take the upper bound of integration, x, into account, h is set to zero for any sampled value greater than x, so h is the indicator of the event that the sample is at most x.

This is the Matlab code for solving F(2): | |||

<pre> | |||

u = randn(1,10000);

h = u < 2; | |||

mean(h) | |||

ans = .9756 | |||

</pre> | |||

We generate a 1 by 10000 vector of standard normal random variables and we return a value of 1 if u is less than 2, and 0 otherwise. | |||

We can also build the function F(x) in matlab in the following way: | |||

<pre> | |||

function p = F(x)

% Monte Carlo estimate of the standard normal cdf at x

u=randn(1,1000000);

h=u<x;

p=mean(h);

</pre> | |||

==='''Example: Binomial Distribution'''=== | |||

In this example we will see Bayesian inference for two binomial distributions.

Let <math> X \sim Bin(n,p) </math> and <math> Y \sim Bin(m,q) </math>, and let <math> \!\delta = p-q </math>.

The frequentist approach estimates this by <math> \displaystyle \hat{\delta} = x/n - y/m </math>.

The Bayesian approach requires the posterior <math> \displaystyle f(p,q|x,y) = f(x,y|p,q)f(p,q)/f(x,y) </math>, where <math> f(x,y)=\iint f(x,y|p,q)f(p,q)\,dp\,dq</math> is a constant that does not depend on p or q.

Thus, <math> \displaystyle f(p,q|x,y)\propto f(x,y|p,q)f(p,q) </math>. Now we assume that p and q are independent with uniform priors on [0,1], so that <math>\displaystyle f(p,q) = f(p)f(q) = 1 </math>.

Therefore, <math> \displaystyle f(p,q|x,y)\propto p^x(1-p)^{n-x}q^y(1-q)^{m-y} </math>. | |||

<math> E[\delta|x,y] = \int_{0}^{1} \int_{0}^{1} (p-q)f(p,q|x,y)dpdq </math>. | |||

As you can see this is much tougher than the frequentist approach. | |||
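Under the uniform priors assumed above, the posterior happens to factorize into two Beta densities, so this particular expectation can still be worked out by hand. The following derivation is a sketch that relies on that assumption:

<math> p \mid x \sim Beta(x+1,\ n-x+1), \qquad q \mid y \sim Beta(y+1,\ m-y+1) </math>

<math> E[\delta|x,y] = E[p|x] - E[q|y] = \frac{x+1}{n+2} - \frac{y+1}{m+2} </math>

For other priors, or for more complicated functions of p and q, such a closed form may not be available, and the integral has to be approximated by simulation.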

=='''Importance Sampling and Basic Monte Carlo Integration - October 11th, 2011'''== | |||

==='''Example: Binomial Distribution (Continued)'''=== | |||

Suppose we are given two independent binomial random variables <math>\displaystyle X \sim Bin(n, p_1)</math>, <math>\displaystyle Y \sim Bin(m, p_2)</math>. We would like to give a Monte Carlo estimate of <math>\displaystyle \delta = p_1 - p_2</math><br>

Frequentist approach: <br><br><math>\displaystyle \hat{p_1} = \frac{X}{n}</math> ; <math>\displaystyle \hat{p_2} = \frac{Y}{m}</math><br><br><math>\displaystyle \hat{\delta} = \hat{p_1} - \hat{p_2} = \frac{X}{n} - \frac{Y}{m}</math><br><br> | |||

Bayesian approach to compute the expected value of <math>\displaystyle \delta</math>:<br><br> | |||

<math>\displaystyle E(\delta|X,Y) = \int\int(p_1-p_2) f(p_1,p_2|X,Y)\,dp_1dp_2</math><br><br> | |||

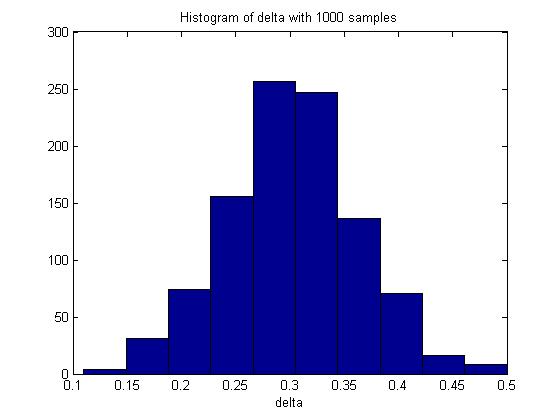

Assume that <math>\displaystyle n = 100, m = 100, p_1 = 0.5, p_2 = 0.8</math> and the sample size is 1000.<br> | |||

MATLAB code of the above example: | |||

<pre> | |||

n = 100; | |||

m = 100; | |||

p_1 = 0.5; | |||

p_2 = 0.8; | |||

p1 = mean(rand(n,1000)<p_1,1); | |||

p2 = mean(rand(m,1000)<p_2,1); | |||

delta = p2 - p1; | |||

hist(delta) | |||

mean(delta) | |||

</pre> | |||

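In one execution of the code, the mean of delta was 0.3017. Note that the code computes p2 - p1, the negative of <math>\displaystyle \delta = p_1 - p_2</math> as defined above, which is why the result is close to 0.8 - 0.5 = 0.3. The histogram of delta generated was: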

[[File:Hist delta.jpg|center|]] | |||

Through Monte Carlo simulation, we can obtain an empirical distribution of delta and carry out inference on the data obtained, such as computing the mean, maximum, variance, standard deviation and the standard error of delta. | |||

==='''Importance Sampling'''=== | |||

====Motivation==== | |||

Consider the integral <math>\displaystyle I = \int h(x)f(x)\,dx</math><br><br> | |||

According to basic Monte Carlo Integration, if we can sample from the probability density function <math>\displaystyle f(x)</math> and feed the samples of <math>\displaystyle f(x)</math> back to <math>\displaystyle h(x)</math>, <math>\displaystyle I</math> can be estimated as an average of <math>\displaystyle h(x)</math> ( i.e. <math>\hat{I} = \frac{1}{n} \sum_{i=1}^{n} h(x_i)</math> )<br> | |||

However, the basic Monte Carlo method works only when we know how to sample from <math>\displaystyle f(x)</math>. In the case where it is difficult to sample from <math>\displaystyle f(x)</math>, importance sampling is a technique that we can apply. Importance sampling relies on another density function <math>\displaystyle g(x)</math> which we know how to sample from and which is positive wherever <math>\displaystyle h(x)f(x)</math> is nonzero.

The above integral can be rewritten as follows:<br>

<math>\begin{align} | |||

\displaystyle I & = \int h(x)f(x)\,dx \\ | |||

& = \int h(x)f(x)\frac{g(x)}{g(x)}\,dx \\ | |||

& = \int \frac{h(x)f(x)}{g(x)}g(x)\,dx \\ | |||

& = \int y(x)g(x)\,dx \\ | |||

& = E_g(y(x)) \\ | |||

\end{align} | |||

</math><br> | |||

where <math>y(x) = \frac{h(x)f(x)}{g(x)}</math><br>

The integral can thus be estimated as <math>\displaystyle \hat{I} = \frac{1}{n} \sum_{i=1}^{n} Y_i</math>, where <math>\displaystyle Y_i = \frac{h(x_i)f(x_i)}{g(x_i)}</math><br>

====Procedure==== | |||

Suppose we know how to sample from <math>\displaystyle g(x)</math><br> | |||

#Choose a suitable <math>\displaystyle g(x)</math> and draw n samples <math>x_1,x_2....,x_n \sim~ g(x)</math> | |||

#Set <math>Y_i =\frac{h(x_i)f(x_i)}{g(x_i)}</math> | |||

#Compute <math> \hat{I} = \frac{1}{n}\sum_{i=1}^{n} Y_i </math><br> | |||

By the law of large numbers, <math>\displaystyle \hat{I} \rightarrow I </math> as the sample size n tends to infinity.<br><br>

'''Remarks:''' One can think of <math>\frac{f(x)}{g(x)}</math> as a weight to <math>\displaystyle h(x)</math> in the computation of <math>\hat{I}</math><br><br> | |||

<math>\displaystyle i.e. \ \hat{I} = \frac{1}{n}\sum_{i=1}^{n} Y_i = \frac{1}{n}\sum_{i=1}^{n} (\frac{f(x_i)}{g(x_i)})h(x_i)</math><br><br> | |||

Therefore, <math>\displaystyle \hat{I} </math> is a weighted average of <math>\displaystyle h(x_i)</math><br><br> | |||

====Problem==== | |||

If <math>\displaystyle g(x)</math> is not chosen appropriately, then the variance of the estimate <math>\hat{I}</math> may be very large. The problem here is similar to what we encounter with the Acceptance-Rejection method. Consider the second moment of <math>y(x)</math>:<br><br>

<math>\begin{align}

E_g((y(x))^2) & = \int (\frac{h(x)f(x)}{g(x)})^2 g(x) dx \\

& = \int \frac{h^2(x) f^2(x)}{g^2(x)} g(x) dx \\ | |||

& = \int \frac{h^2(x)f^2(x)}{g(x)} dx \\ | |||

\end{align} | |||

</math><br><br> | |||

Where <math>\displaystyle g(x)</math> is very small relative to <math>\displaystyle h^2(x)f^2(x)</math>, the integrand above is very large, so the integral, and hence the variance, can be very large when g is not chosen appropriately. This occurs when <math>\displaystyle g(x)</math> has a thinner tail than <math>\displaystyle f(x)</math>, so that the quantity <math>\displaystyle \frac{h^2(x)f^2(x)}{g(x)}</math> is large in the tails.

'''Remarks:''' | |||

1. We can actually compute the form of <math>\displaystyle g(x)</math> that gives the smallest variance. <br>Mathematically, we look for the density <math>\displaystyle g(x)</math> that solves <math>\displaystyle \min_g [\ E_g([y(x)]^2) - (E_g[y(x)])^2\ ]</math><br>

It can be shown that the optimal <math>\displaystyle g(x)</math> is proportional to <math>\displaystyle {|h(x)|f(x)}</math>, that is, <math>\displaystyle g(x) = \frac{|h(x)|f(x)}{\int |h(s)|f(s)\,ds}</math>. Using the optimal <math>\displaystyle g(x)</math> will minimize the variance of estimation in importance sampling. This is of theoretical interest but not useful in practice: to write down g(x) explicitly we need its normalizing constant, which is an integral of the same kind as the one we set out to compute in the first place.

2. In practice, we shall choose <math>\displaystyle g(x)</math> which has similar shape as <math>\displaystyle f(x)</math> but with a thicker tail than <math>\displaystyle f(x)</math> in order to avoid the problem mentioned above.<br> | |||

====Example==== | |||

Estimate <math>\displaystyle I = Pr(Z>3)</math>, where <math>\displaystyle Z \sim N(0,1)</math><br><br>

'''Method 1: Basic Monte Carlo''' | |||

<math>\begin{align} Pr(Z>3) & = \int^\infty_3 f(x)\,dx \\ | |||

& = \int^\infty_{-\infty} h(x)f(x)\,dx \end{align}</math><br /> | |||

where <math>
h(x) = \begin{cases}
0, & \text{if } x \le 3 \\
1, & \text{if } x > 3
\end{cases}</math> and <math>f(x) = \frac{1}{\sqrt{2\pi}}e^{-\frac{1}{2}x^2}</math>

MATLAB code to compute <math>\displaystyle I</math> from 100 samples of standard normal distribution: | |||

<pre> | |||

h = randn(100,1) > 3; | |||

I = mean(h) | |||

</pre> | |||

In one execution of the code, it returns a value of 0 for <math>\displaystyle I</math>, which differs significantly from the true value of <math>\displaystyle I \approx 0.0013 </math>. The problem of using Basic Monte Carlo in this example is that <math>\displaystyle Pr(Z>3)</math> has a small value, and hence many points sampled from the standard normal distribution will be wasted. Therefore, although Basic Monte Carlo is a feasible method to compute <math>\displaystyle I</math>, it gives a poor estimation. | |||

'''Method 2: Importance Sampling''' | |||

<math>\displaystyle I = Pr(Z>3)= \int^\infty_3 f(x)\,dx </math><br> | |||

To apply importance sampling, we have to choose a <math>\displaystyle g(x)</math> which we will sample from. In this example, we can choose <math>\displaystyle g(x)</math> to be the probability density function of an exponential distribution, a normal distribution with mean 0 and variance greater than 1, a normal distribution with mean greater than 0 and variance 1, etc. The goal is to place more of the samples in the region x > 3, which is the only region that contributes to the integral, in order to produce a more accurate result. For the following, we take <math>\displaystyle g(x)</math> to be the pdf of <math>\displaystyle N(4,1)</math>.<br>

Procedure: | |||

#Draw n samples <math>x_1,x_2....,x_n \sim~ g(x)</math> | |||

#Calculate <math>\begin{align} \frac{f(x)}{g(x)} & = \frac{ \frac{1}{\sqrt{2\pi}}e^{-\frac{1}{2}x^2} | |||

}{ \frac{1}{\sqrt{2\pi}}e^{-\frac{1}{2}(x-4)^2} } \\ | |||

& = e^{8-4x} \end{align} </math><br> | |||

#Set <math> Y_i = h(x_i)e^{8-4x_i}</math> with <math>h(x) = \begin{cases} 0, & \text{if } x \le 3 \\ 1, & \text{if } x > 3 \end{cases}</math><br>

#Compute <math> \hat{I} = \frac{1}{n}\sum_{i=1}^{n} Y_i </math><br>

The above procedure, using 100 samples from <math>\displaystyle g(x)</math>, can be implemented in MATLAB as follows:

<pre> | |||

for ii = 1:100 | |||

x = randn + 4 ; | |||

h = x > 3 ; | |||

y(ii) = h * exp(8-4*x) ; | |||

end | |||

mean(y) | |||

</pre> | |||

In one execution of the code, it returns a value of 0.001271 for <math> \hat{I} </math>, which is much closer to the true value of <math>\displaystyle I \approx 0.0013 </math>. Over many executions of the code, the variance of basic Monte Carlo is approximately 150 times that of importance sampling. This demonstrates that importance sampling can provide a better estimate than the basic Monte Carlo method.
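The variance comparison can be reproduced with a short experiment. The sketch below repeats both estimators N times with n = 100 samples each and compares the spread of the resulting estimates. The number of repetitions N is an arbitrary choice, and the exact value of Pr(Z>3) is computed from the complementary error function only as a reference.

<pre>
N = 1000; n = 100;                 % N repetitions of each estimator, n samples each
I_mc = zeros(N,1); I_is = zeros(N,1);
for k = 1:N
    z = randn(n,1);                % basic Monte Carlo: sample from f = N(0,1)
    I_mc(k) = mean(z > 3);
    x = randn(n,1) + 4;            % importance sampling: sample from g = N(4,1)
    I_is(k) = mean((x > 3) .* exp(8 - 4*x));
end
I_true = 0.5*erfc(3/sqrt(2))       % exact Pr(Z>3), about 0.00135
avg_estimates = [mean(I_mc), mean(I_is)]
var_ratio = var(I_mc) / var(I_is)  % how many times larger the basic MC variance is
</pre>

The ratio itself is a random quantity, so it will vary from run to run, but it should be of the same order as the figure quoted above.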

==''' Importance Sampling with Normalized Weight and Markov Chain Monte Carlo - October 13th, 2011'''== | |||

==='''Importance Sampling with Normalized Weight'''=== | |||

Recall that we can think of <math>\displaystyle b(x) = \frac{f(x)}{g(x)}</math> as a weight applied to the samples <math>\displaystyle h(x)</math>. If the form of <math>\displaystyle f(x)</math> is known only up to a constant, we can use an alternate, normalized form of the weight, <math>\displaystyle b^*(x)</math>. (This situation arises in Bayesian inference.) Importance sampling with normalized or standard weight is also called indirect importance sampling. | |||

We derive the normalized weight as follows:<br> | |||

<math>\begin{align} | |||

\displaystyle I & = \int h(x)f(x)\,dx \\ | |||

&= \int h(x)\frac{f(x)}{g(x)}g(x)\,dx \\ | |||

&= \frac{\int h(x)\frac{f(x)}{g(x)}g(x)\,dx}{\int f(x) dx} \\ | |||

&= \frac{\int h(x)\frac{f(x)}{g(x)}g(x)\,dx}{\int \frac{f(x)}{g(x)}g(x) dx} \\ | |||

&= \frac{\int h(x)b(x)g(x)\,dx}{\int\ b(x)g(x) dx} | |||

\end{align}</math> | |||

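Replacing both integrals by sample averages over <math>x_1,...,x_n \sim g(x)</math> gives the estimate <math>\hat{I}= \frac{\sum_{i=1}^{n} h(x_i)b(x_i)}{\sum_{i=1}^{n} b(x_i)} </math>

Then, the normalized weight is <math>b^*(x_i) = \displaystyle \frac{b(x_i)}{\sum_{j=1}^{n} b(x_j)}</math>

Note that <math> \int f(x) dx = \int b(x)g(x) dx = 1 </math>, which justifies dividing by this integral in the third line of the derivation. Any unknown constant factor in <math>\displaystyle f(x)</math> cancels between the numerator and the denominator of <math>\hat{I}</math>.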

We can also determine the associated Monte Carlo variance of this estimate by | |||

<math> Var(\hat{I})= \frac{\sum_{i=1}^{n} b(x_i)(h(x_i) - \hat{I})^2}{\sum_{i=1}^{n} b(x_i)} </math> | |||
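To illustrate the normalized weights, the sketch below estimates <math>E_f[X^2]</math> when f is a standard normal density known only up to its constant. The target, the proposal N(0, 2^2) and the sample size are all assumptions made for this illustration; the exact answer is 1.

<pre>
n = 100000;
s = 2;                                     % proposal g = N(0, s^2), wider than the target
x = s*randn(n,1);                          % samples from g
f_un = exp(-x.^2/2);                       % target density without its constant 1/sqrt(2*pi)
g = exp(-x.^2/(2*s^2)) / (s*sqrt(2*pi));   % proposal density evaluated at the samples
b = f_un ./ g;                             % unnormalized weights
b_star = b / sum(b);                       % normalized weights, which sum to 1
h = x.^2;
I_hat = sum(b_star .* h)                   % estimate of E_f[X^2]
</pre>

Multiplying f_un by any positive constant leaves b_star, and therefore I_hat, unchanged, which is the point of normalizing the weights.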

==='''Markov Chain Monte Carlo'''=== | |||

We still want to solve <math> I = \displaystyle\int h(x)f(x)\,dx </math>

====Stochastic Process==== | |||

A stochastic process <math> \{ x_t : t \in T \}</math> is a collection of random variables. Variables <math>\displaystyle x_t</math> take values in some set <math>\displaystyle X</math> called the '''state space.''' The set <math>\displaystyle T</math> is called the '''index set.''' | |||

===='''Markov Chain'''==== | |||

A Markov Chain is a stochastic process for which the distribution of <math>\displaystyle x_t</math> depends only on <math>\displaystyle x_{t-1}</math>. It is a random process characterized as being memoryless: the next state depends only on the current state, not on the sequence of states that preceded it.

Formal Definition: The process <math> \{ x_t : t \in T \}</math> is a Markov Chain if <math>\displaystyle Pr(x_t|x_0, x_1,..., x_{t-1})= Pr(x_t|x_{t-1})</math> for all <math> t \in T </math> and for all <math> x_0, ..., x_t \in X </math>

For a Markov Chain, <math>\displaystyle f(x_1,...x_n)= f(x_1)f(x_2|x_1)f(x_3|x_2)...f(x_n|x_{n-1})</math> | |||

<br><br>'''Real Life Example:''' | |||

<br>When going for an interview, the employer only looks at the highest level of education you have achieved. The employer does not look at earlier education (elementary school, high school etc.) because the highest level achieved is believed to summarize everything before it. Therefore, anything before your most recent education is irrelevant. In other words, <math>\! x_t </math> is regarded as a summary of <math>\!x_{t-1},...,x_2,x_1</math>, so when we need to determine <math>\!x_{t+1}</math>, we only need to pay attention to <math>\!x_{t}</math>.

====Transition Probabilities==== | |||

The <b>transition probability</b> is the probability of jumping from one state to another state in a Markov Chain. | |||

Formally, let us define <math>\displaystyle P_{ij} = Pr(x_{t+1}=j|x_t=i)</math> to be the transition probability. | |||

That is, <math>\displaystyle P_{ij}</math> is the probability of transitioning from state i to state j in a single step. Then the matrix <math>\displaystyle P</math> whose (i,j) element is <math>\displaystyle P_{ij}</math> is called the <b>transition matrix</b>. | |||

Properties of P: | |||

:1) <math>\displaystyle P_{ij} \geq 0</math> (The probability of going to another state cannot be negative)

:2) <math>\displaystyle \sum_{\forall j}P_{ij} = 1</math> (The probability of transitioning to some state from state i (including remaining in state i) is one) | |||

====Random Walk==== | |||

Example: Start at one point and flip a coin where <math>\displaystyle Pr(H)=p</math> and <math>\displaystyle Pr(T)=1-p=q</math>. Take one step right if heads and one step left if tails. If at an endpoint, stay there. | |||

The transition matrix is | |||

<math>P=\left(\begin{matrix}1&0&0&\dots&\dots&0\\ | |||

q&0&p&0&\dots&0\\ | |||

0&q&0&p&\dots&0\\ | |||

\vdots&\vdots&\vdots&\ddots&\vdots&\vdots\\ | |||

\vdots&\vdots&\vdots&\vdots&\ddots&\vdots\\ | |||

0&0&\dots&\dots&\dots&1 | |||

\end{matrix}\right)</math> | |||

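Let <math>\displaystyle P_{ij}(n) = Pr(x_{m+n}=j|x_m=i)</math> be the probability of going from state i to state j in exactly n steps, and let <math>\displaystyle P_n</math> be the matrix whose (i,j) element is <math>\displaystyle P_{ij}(n)</math>. <math>\displaystyle P_{ij}(n)</math> is called the n-step transition probability.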

:<math>\displaystyle P_n = P^n</math> | |||

:<math>\displaystyle P_1 = P</math> | |||

:<math>\displaystyle P_2 = P^2</math> | |||
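The random walk matrix and its powers are easy to build numerically. The sketch below assumes a walk on 5 states with p = 0.6; both values are arbitrary choices for illustration.

<pre>
N = 5; p = 0.6; q = 1 - p;          % illustrative number of states and heads probability
P = zeros(N);
P(1,1) = 1; P(N,N) = 1;             % at an endpoint the walk stays there
for i = 2:N-1
    P(i,i-1) = q;                   % one step left on tails
    P(i,i+1) = p;                   % one step right on heads
end
P2 = P^2                            % two-step transition probabilities
P50 = P^50;                         % after many steps most of the mass is at the endpoints
row_sums = sum(P50, 2)              % each row of P^n still sums to 1
</pre>

For example, the (2,2) entry of P2 equals pq, the probability of stepping right and then left, since the only other way back to state 2 would pass through the endpoint state 1, which the walk cannot leave.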

==''' Markov Chain Properties and Page Rank - October 18th, 2011'''== | |||

===Summary of Terminology=== | |||

====Transition Matrix==== | |||

A matrix <math>\!P</math> that defines a Markov Chain has the form: | |||

<math>P = \begin{bmatrix} | |||

P_{11} & \cdots & P_{1N} \\ | |||

\vdots & \ddots & \vdots \\ | |||

P_{N1} & \cdots & P_{NN} | |||

\end{bmatrix}</math> | |||

where <math>\!P(i,j) = P_{ij} = Pr(x_{t+1} = j | x_t = i) </math> is the probability of transitioning from state i to state j in the Markov Chain in a single step. Note that this implies that all rows add up to one. | |||

====n-step Transition matrix==== | |||

A matrix <math>\!P_n</math> whose (i,j)<sup>th</sup> entry is the probability of moving from state i to state j after n transitions: | |||

<math>\!P_n(i,j) = Pr(x_{m+n}=j|x_m = i)</math> | |||

This probability is called the n-step transition probability. A nice property of this matrix is that | |||

<math>\!P_n = P^n</math> | |||

for all <math>n \geq 0</math>, where P is the transition matrix. Note that the rows of <math>\!P_n</math> still add up to one.

====Marginal distribution of a Markov Chain==== | |||

We represent the distribution of the state at time t as a row vector, where <math>\mu_t(i) = Pr(x_t = i)</math> and N is the number of states.

<math>\mu_t = (\mu_t(1) \; \mu_t(2) \; ... \; \mu_t(N))</math>

Consider this Markov Chain: | |||

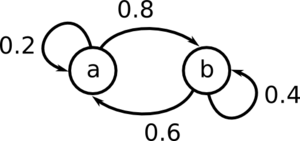

[[File:MarkovSample.png|300px]] | |||

<math>\mu_t = (A \; B)</math>, where A is the probability of being in state a at time t, and B is the probability of being in state b at time t. | |||

For example if <math>\mu_t = (0.1 \; 0.9)</math>, we have a 10% chance of being in state a at time t, and a 90% chance of being in state b at time t. | |||

Suppose we run this Markov chain many times, and record the state at each step. | |||

In this example, we run 4 trials, up until t=5. | |||

{| class="wikitable"
|-
! t
! Trial 1
! Trial 2
! Trial 3
! Trial 4
! Observed <math>\mu</math>
|-
| 1
| a
| b
| b
| a
| (0.5, 0.5)
|-
| 2
| b
| a
| a
| a
| (0.75, 0.25)
|-
| 3
| a
| a
| b
| a
| (0.75, 0.25)
|-
| 4
| b
| b
| a
| b
| (0.25, 0.75)
|-
| 5
| b
| b
| b
| a
| (0.25, 0.75)
|}

Imagine simulating the chain many times. If we collect all the outcomes at time t from all the chains, the histogram of this data would look like <math>\!\mu_t</math>.

We can find the marginal probabilities as <math>\!\mu_n = \mu_0 P^n</math>
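The histogram interpretation can be sketched in Matlab as follows. This is only an illustration: it assumes the two-state matrix and the starting distribution used in the example later in these notes, and the number of chains <code>M</code> and the time <code>T</code> are arbitrary choices.

```matlab
P   = [0.2 0.8; 0.6 0.4];    % assumed transition matrix (state 1 = a, state 2 = b)
mu0 = [0.1 0.9];             % assumed distribution at time 0
T   = 5;                     % time at which the marginal is compared
M   = 20000;                 % number of independent chains (arbitrary)

% draw the initial state of every chain: state 1 with probability mu0(1)
x = 1 + (rand(M, 1) >= mu0(1));

for t = 1:T
    u = rand(M, 1);
    % P(x,1) is the probability that each chain moves to state 1 next
    x = 1 + (u >= P(x, 1));
end

mu_hat   = [mean(x == 1) mean(x == 2)];   % observed proportions at time T
mu_exact = mu0 * P^T;                     % marginal from the formula mu_0 * P^T
disp([mu_hat; mu_exact]);
```

With many chains the two displayed rows should be close, up to simulation noise.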

====Stationary Distribution====

Let <math>\pi = (\pi_i \mid i \in \chi)</math> be a vector of non-negative numbers that sum to 1. (i.e. <math>\!\pi</math> is a pmf)

If <math>\!\pi = \pi P</math>, then <math>\!\pi</math> is a stationary distribution, also known as an invariant distribution.

====Limiting Distribution====

A Markov chain has limiting distribution <math>\!\pi </math> if <math>\lim_{n \to \infty} P^n = \begin{bmatrix} \pi \\ \vdots \\ \pi \end{bmatrix}</math>

That is, <math>\!\pi_j = \lim_{n \to \infty}\left [ P^n \right ]_{ij}</math> exists and is independent of i.

Here is an example:

Suppose we want to find the stationary distribution of

<math>P=\left(\begin{matrix}
1/3&1/3&1/3\\
1/4&3/4&0\\
1/2&0&1/2
\end{matrix}\right)</math>

We want to solve <math>\pi=\pi P</math> and we want <math>\displaystyle \pi_0 + \pi_1 + \pi_2 = 1</math><br />
<math>\displaystyle \pi_0 = \frac{1}{3}\pi_0 + \frac{1}{4}\pi_1 + \frac{1}{2}\pi_2</math><br />
<math>\displaystyle \pi_1 = \frac{1}{3}\pi_0 + \frac{3}{4}\pi_1</math><br />
<math>\displaystyle \pi_2 = \frac{1}{3}\pi_0 + \frac{1}{2}\pi_2</math><br />

Solving the system of equations, we get <br />
<math>\displaystyle \pi_1 = \frac{4}{3}\pi_0</math><br />
<math>\displaystyle \pi_2 = \frac{2}{3}\pi_0</math><br />

So using our condition above, we have <math>\displaystyle \pi_0 + \frac{4}{3}\pi_0 + \frac{2}{3}\pi_0 = 1</math> and by solving we get <math>\displaystyle \pi_0 = \frac{1}{3}</math>

Using this in our system of equations, we obtain: <br />
<math>\displaystyle \pi_1 = \frac{4}{9}</math><br />
<math>\displaystyle \pi_2 = \frac{2}{9}</math>

Thus, the stationary distribution is <math>\displaystyle \pi = (\frac{1}{3}, \frac{4}{9}, \frac{2}{9})</math>
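The same answer can be checked numerically. The following Matlab sketch is one possible way to do it, not necessarily the method used in lecture: it stacks the equations <math>\!\pi = \pi P</math> and <math>\sum_i \pi_i = 1</math> into one linear system and compares the result with a high power of P. The names <code>A</code>, <code>b</code>, <code>pi_hat</code> and <code>Pn</code> are illustrative, and the power 100 is an arbitrary choice.

```matlab
P = [1/3 1/3 1/3; 1/4 3/4 0; 1/2 0 1/2];   % states 0, 1, 2 of the text are rows 1, 2, 3
N = size(P, 1);

% pi*(P - I) = 0 together with sum(pi) = 1, written as a column system A*pi' = b
A = [P' - eye(N); ones(1, N)];
b = [zeros(N, 1); 1];
pi_hat = (A \ b)';           % least-squares solve of the consistent overdetermined system
disp(pi_hat);

Pn = P^100;                  % each row should be close to pi_hat for this chain
disp(Pn);
```

For this particular chain the rows of <code>Pn</code> agree with <code>pi_hat</code>, so the stationary distribution found above is also the limiting distribution.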

====Detailed Balance====

<math>\!\pi</math> has the detailed balance property if <math>\!\pi_iP_{ij} = P_{ji}\pi_j</math> for all states i and j.

'''Theorem'''

If <math>\!\pi</math> satisfies detailed balance, then <math>\!\pi</math> is a stationary distribution.

In other words, if <math>\!\pi_iP_{ij} = P_{ji}\pi_j</math> for all i and j, then <math>\!\pi = \pi P</math>

'''Proof:'''

<math>\!\pi P = \begin{bmatrix}\pi_1 & \pi_2 & \cdots & \pi_N\end{bmatrix} \begin{bmatrix}P_{11} & \cdots & P_{1N} \\ \vdots & \ddots & \vdots \\ P_{N1} & \cdots & P_{NN}\end{bmatrix}</math>

Observe that the j<sup>th</sup> element of <math>\!\pi P</math> is

<math>\!\left [ \pi P \right ]_j = \pi_1 P_{1j} + \pi_2 P_{2j} + \dots + \pi_N P_{Nj}</math>

::<math>\! = \sum_{i=1}^N \pi_i P_{ij}</math>

::<math>\! = \sum_{i=1}^N P_{ji} \pi_j</math>, by the definition of detailed balance.

::<math>\! = \pi_j \sum_{i=1}^N P_{ji}</math>

::<math>\! = \pi_j</math>, as the entries in a row of P must sum to 1.

So <math>\!\pi = \pi P</math>.
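The theorem only goes one way: a stationary distribution need not satisfy detailed balance. The Matlab sketch below illustrates this with two chains. The first is the three-state matrix from the worked example above; the second is a deterministic three-state cycle with the uniform distribution, chosen here as an assumed illustration. The check uses the fact that detailed balance holds exactly when the matrix with entries <math>\!\pi_i P_{ij}</math> is symmetric; the variable names and the tolerance are illustrative.

```matlab
% chain 1: three-state matrix from the stationary distribution example
P1  = [1/3 1/3 1/3; 1/4 3/4 0; 1/2 0 1/2];
pi1 = [1/3 4/9 2/9];
% chain 2: deterministic 3-cycle with the uniform distribution (assumed example)
P2  = [0 0 1; 1 0 0; 0 1 0];
pi2 = [1/3 1/3 1/3];

tol = 1e-12;                 % tolerance for floating-point comparisons

% F(i,j) = pi_i * P_ij; detailed balance holds exactly when F is symmetric
F1 = diag(pi1) * P1;
F2 = diag(pi2) * P2;
db1 = max(max(abs(F1 - F1'))) < tol;
db2 = max(max(abs(F2 - F2'))) < tol;

% stationarity check: pi = pi * P
st1 = norm(pi1 * P1 - pi1) < tol;
st2 = norm(pi2 * P2 - pi2) < tol;

disp([db1 st1; db2 st2]);    % rows: chain 1, chain 2; columns: detailed balance, stationary
```

Chain 1 satisfies detailed balance and is therefore stationary, while chain 2 is stationary without satisfying detailed balance.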

'''Example'''

Find the marginal distribution of

[[File:MarkovSample.png|300px]]

Start by writing down the transition matrix P.

<math>\!P = \begin{pmatrix} 0.2 & 0.8 \\ 0.6 & 0.4 \end{pmatrix}</math>

We must assume some starting value for <math>\mu_0</math>

<math>\!\mu_0 = \begin{pmatrix} 0.1 & 0.9 \end{pmatrix}</math>

For t = 1, the marginal distribution is

<math>\!\mu_1 = \mu_0 P = \begin{pmatrix} 0.56 & 0.44 \end{pmatrix}</math>

Notice that this <math>\mu</math> converges: if we repeatedly apply

<math>\!\mu_{i+1} = \mu_i P</math>

it converges to <math>\mu = \begin{pmatrix} 0.4286 & 0.5714 \end{pmatrix}</math>
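As a worked check, and assuming the limit is the stationary distribution <math>\pi = (\pi_a \; \pi_b)</math> of this chain, the same numbers can be obtained by hand from <math>\!\pi = \pi P</math> and <math>\!\pi_a + \pi_b = 1</math>:

<math>\pi_a = 0.2\pi_a + 0.6\pi_b \Rightarrow 0.8\pi_a = 0.6\pi_b \Rightarrow \pi_b = \frac{4}{3}\pi_a</math>

<math>\pi_a + \frac{4}{3}\pi_a = 1 \Rightarrow \pi_a = \frac{3}{7} \approx 0.4286, \quad \pi_b = \frac{4}{7} \approx 0.5714</math>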

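The convergence can be seen by running the following Matlab code:

 P = [0.2 0.8; 0.6 0.4];   % transition matrix
 mu = [0.1 0.9];           % initial distribution mu_0
 while true
     mu_old = mu;
     mu = mu * P;          % one step: mu_{i+1} = mu_i * P
     % stop once successive iterates agree to within a tolerance;
     % exact equality of floating-point vectors is not guaranteed
     if norm(mu - mu_old) < 1e-10
         disp(mu);
         break;
     end
 end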

Another way of looking at this simple question is that we can see whether the ultimate pmf converges: | |||

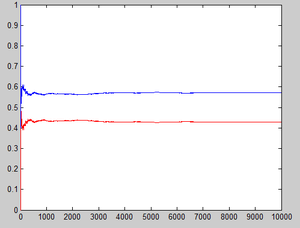

Let <math>\hat{p_n}(1)=\frac{1}{n}\sum_{k=1}^n I(X_k=1)</math> denote the estimator of the stationary probability of state 1,<math>\hat{p_n}(2)=\frac{1}{n}\sum_{k=1}^n I(X_k=2)</math> denote the estimator of the stationary probability of state 2, where <math>\displaystyle I(X_k=1)</math> and <math>\displaystyle I(X_k=2)</math> are indicator variables which equal 1 if <math>X_k=1</math>(or <math>X_k=2</math> for the latter one). | |||

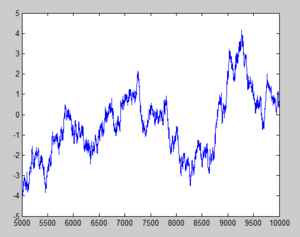

Matlab codes for this explanation is | |||

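 % Simulate one long run of the chain.
 % x(i) = 1 codes state 1 (state a), x(i) = 0 codes state 2 (state b).
 n = 10000;
 x = zeros(1,n);
 x(1) = rand < 0.1;            % initial distribution mu_0 = (0.1 0.9)
 for i = 2:n
     if x(i-1) == 1
         x(i) = rand < 0.2;    % from state 1, stay in state 1 with probability 0.2
     else
         x(i) = rand < 0.6;    % from state 2, move to state 1 with probability 0.6
     end
 end
 p1 = cumsum(x) ./ (1:n);      % running estimate of the probability of state 1
 p2 = 1 - p1;                  % running estimate of the probability of state 2
 plot(p1,'red');
 hold on;
 plot(p2)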

The results can be easily seen from the graph below:

[[File:Stationary distribution.png|300px]]

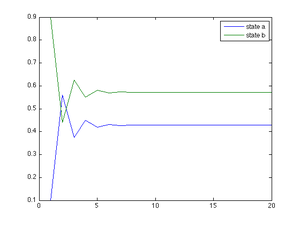

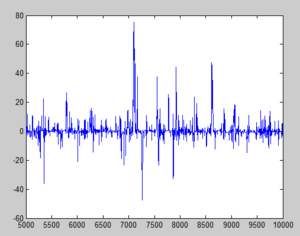

Additionally, we can plot the marginal distribution as it converges without estimating it. The following Matlab code shows this:

 % transition matrix
 P = [0.2 0.8; 0.6 0.4];
 % mu at time 0
 mu = [0.1 0.9];
 % number of time points to plot
 n = 20;
 mu_a = zeros(1,n);
 mu_b = zeros(1,n);
 for i = 1:n
     mu_a(i) = mu(1);    % entry i holds the marginal at time i-1
     mu_b(i) = mu(2);
     mu = mu * P;
 end
 t = 1:n;
 plot(t, mu_a, t, mu_b);
 legend('state a', 'state b');

[[File:Marginal distribution convergence.png|300px]]

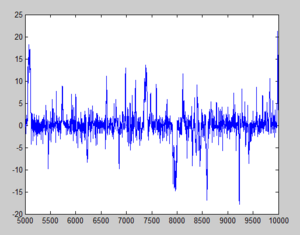

Note that a chain can have a stationary distribution without having a limiting distribution: started from another distribution, the marginal distribution need not converge to the stationary one. An example of this is:

<math>P = \begin{pmatrix} 0 & 0 & 1 \\ 1 & 0 & 0 \\ 0 & 1 & 0 \end{pmatrix}, \mu_0 = \begin{pmatrix} 1/3 & 1/3 & 1/3 \end{pmatrix}</math>

<math>\!\mu_0</math> is a stationary distribution, so <math>\!\mu_0 P^n = \mu_0</math> for every n.

But, since <math>\!P^3 = I</math> and 1000 = 3 &times; 333 + 1,

<math>P^{1000} = P = \begin{pmatrix} 0 & 0 & 1 \\ 1 & 0 & 0 \\ 0 & 1 & 0 \end{pmatrix} \ne \begin{pmatrix} \mu_0 \\ \mu_0 \\ \mu_0 \end{pmatrix}</math>

So <math>\!\mu_0</math> is not a limiting distribution. Also, if

<math>\mu = \begin{pmatrix} 0.2 & 0.1 & 0.7 \end{pmatrix}</math>

then <math>\!\mu \neq \mu P</math>.

This can be observed through the following Matlab code.

 P = [0 0 1; 1 0 0; 0 1 0];
 mu = [0.2 0.1 0.7];
 for i = 1:4
     mu = mu * P;
     disp(mu);
 end

Output:

 0.1000 0.7000 0.2000
 0.7000 0.2000 0.1000
 0.2000 0.1000 0.7000
 0.1000 0.7000 0.2000

Note that <math>\!\mu_1 = \!\mu_4</math>, which indicates that <math>\!\mu</math> will cycle forever.

This means that this chain has a stationary distribution but no limiting distribution. An irreducible chain has a limiting distribution if it is ergodic, that is, aperiodic and positive recurrent; the chain above is periodic with period 3. Cycling also breaks detailed balance. Although a limiting distribution on the whole state space does not exist, the cycling behaviour itself describes the long-run behaviour of the chain. Also, when a chain has several closed classes, each closed class has its own limiting behaviour, which is useful in analyzing the long-term behaviour of the chain within that class.
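The cycling can be checked directly. The sketch below assumes the permutation matrix and the starting vector from the Matlab code above; it verifies that three steps return every state to itself and averages the marginals over one full cycle. The names <code>is_period_3</code> and <code>mu_avg</code> are illustrative.

```matlab
P = [0 0 1; 1 0 0; 0 1 0];            % permutation matrix from the code above
is_period_3 = isequal(P*P*P, eye(3)); % three steps bring every state back to itself

mu = [0.2 0.1 0.7];
mu_sum = zeros(1, 3);
for t = 1:3                           % one full cycle
    mu = mu * P;
    mu_sum = mu_sum + mu;
end
mu_avg = mu_sum / 3;                  % average of the marginals over one cycle

disp(is_period_3);
disp(mu_avg);
```

Although the marginals themselves keep cycling, their average over one cycle equals the stationary distribution (1/3, 1/3, 1/3).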

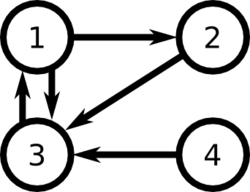

===Page Rank===

Page Rank was the original ranking algorithm used by Google's search engine to rank web pages.<ref>http://ilpubs.stanford.edu:8090/422/</ref> The algorithm was created by the founders of Google, Larry Page and Sergey Brin, as part of Page's PhD research. When a query is entered in a search engine, there is a set of web pages which are matched by this query, but this set of pages must be ordered by their "importance" in order to identify the most meaningful results first. Page Rank is an algorithm which assigns importance to every web page based on the links in each page.

==== Intuition ====

We can represent web pages by a set of nodes, where web links are represented as edges connecting these nodes. Based on our intuition, there are three main factors in deciding whether a web page is important or not.

# A web page is important if many other pages point to it.
# The more important a webpage is, the more weight is placed on its links.
# The more links a webpage has, the less weight is placed on its links.

====Modelling====

We can model the set of links as an N-by-N matrix L, where N is the number of web pages we are interested in:

<math>L_{ij} = \begin{cases}
1 & \text{if page j points to page i}\\
0 & \text{otherwise}
\end{cases}</math>

The number of outgoing links from page j is

<math>c_j = \sum_{i=1}^N L_{ij}</math>

For example, consider the following set of links between web pages:

[[File:PageRank.png|250px]]

According to the factors relating to importance of links, we can consider two possible rankings:

<math>\displaystyle 3 > 2 > 1 > 4 </math>

or

<math>\displaystyle 3>1>2>4 </math>

if we consider that the high importance of the link from page 3 to page 1 carries more weight than the fact that there are two outgoing links from page 1 and only one from page 2.

We have <math>L = \begin{bmatrix}
0 & 0 & 1 & 0 \\
1 & 0 & 0 & 0 \\
1 & 1 & 0 & 1 \\
0 & 0 & 0 & 0
\end{bmatrix}</math>, and <math>c = \begin{pmatrix}2 & 1 & 1 & 1\end{pmatrix} </math>
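The vector c is simply the vector of column sums of L, which can be checked with a short Matlab sketch. The matrix <code>M</code>, in which each column of L is divided by its number of outgoing links, is an illustrative name for the weighting <math>L_{ij}/c_j</math> used in the rank formula that follows.

```matlab
L = [0 0 1 0; 1 0 0 0; 1 1 0 1; 0 0 0 0];   % L(i,j) = 1 if page j points to page i
c = sum(L, 1);                              % column sums: outgoing links of each page

% divide column j by c_j, so that each page spreads a total weight of 1 over its links
M = L ./ repmat(c, size(L, 1), 1);

disp(c);
disp(M);
```

This works here because every page in the example has at least one outgoing link, so no <math>c_j</math> is zero.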

We can represent the ranks of web pages as the vector P, where the i<sup>th</sup> element is the rank of page i:

<math>P_i = (1-d) + d\sum_j \frac{L_{ij}}{c_j} P_j</math>

where d is a constant between 0 and 1, often called the damping factor. Here we take the sum of the weights of the incoming links, where a link is reduced in weight if the linking page has a lot of outgoing links (division by <math>c_j</math>) and increased in weight if the linking page is itself highly ranked (multiplication by <math>P_j</math>).

We don't want to completely ignore pages with no incoming links, which is why we add the constant (1 - d).

If

<math>L = \begin{bmatrix} L_{11} & \cdots & L_{1N} \\
\vdots & \ddots & \vdots \\
L_{N1} & \cdots & L_{NN} \end{bmatrix}</math>

<math>D = \begin{bmatrix} c_1 & \cdots & 0 \\
\vdots & \ddots & \vdots \\
0 & \cdots & c_N \end{bmatrix}</math>

Then <math>D^{-1} = \begin{bmatrix} c_1^{-1} & \cdots & 0 \\
\vdots & \ddots & \vdots \\
0 & \cdots & c_N^{-1} \end{bmatrix}</math>

<math>\!P = (1-d)e + dLD^{-1}P</math>

where <math>\!e = \begin{pmatrix} 1 \\ \vdots \\ 1 \end{pmatrix}</math> is the vector with all 1's

To simplify the problem, we let <math>\!e^T P = N \Rightarrow \frac{e^T P}{N} = 1</math>. This means that the average importance of all pages on the internet is 1.

Then

<math>\!P = (1-d)\frac{ee^TP}{N} + dLD^{-1}P</math>

::<math>\! = \left [ (1-d)\frac{ee^T}{N} + dLD^{-1} \right ] P</math>

::<math>\! = \left [ \left ( \frac{1-d}{N} \right ) E + dLD^{-1} \right ] P</math>, where <math> E </math> is an NxN matrix filled with ones.

Let <math>\!A = \left [ \left ( \frac{1-d}{N} \right ) E + dLD^{-1} \right ]</math>

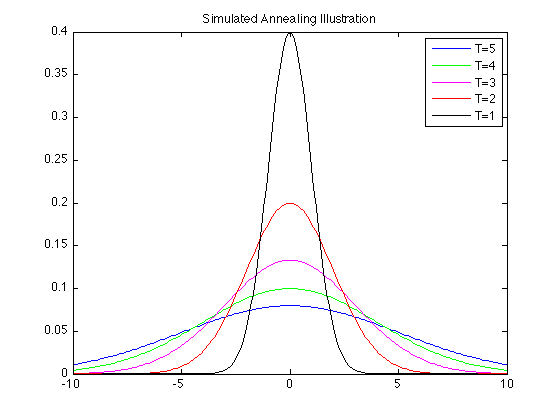

Then <math>\!P = AP</math>, so the rank vector P is an eigenvector of A with eigenvalue 1.
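One way to find a vector satisfying <math>\!P = AP</math> is to apply A repeatedly to a starting vector. The Matlab sketch below does this for the four-page example. It is only an illustration under stated assumptions: the value d = 0.85 is not given in these notes and is assumed here, and the fixed number of iterations is an arbitrary choice.

```matlab
L = [0 0 1 0; 1 0 0 0; 1 1 0 1; 0 0 0 0];   % link matrix of the four-page example
N = size(L, 1);
d = 0.85;                                   % assumed value of d, not specified in the notes
c = sum(L, 1);                              % outgoing links per page (all nonzero here)

% A = ((1-d)/N) * E + d * L * D^{-1}, with E the all-ones matrix
A = ((1 - d) / N) * ones(N) + d * L * diag(1 ./ c);

P = ones(N, 1);                             % start with every rank equal to 1, so e'*P = N
for k = 1:200                               % fixed iteration count chosen for illustration
    P = A * P;                              % repeated application of P <- A*P
end

[~, order] = sort(P, 'descend');            % pages listed from most to least important
disp(P');
disp(order');
```

Because every column of A sums to one, the iteration keeps <math>\!e^T P = N</math>. With the assumed d = 0.85 the resulting order is 3 > 1 > 2 > 4, the second of the two rankings suggested earlier.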