probabilistic PCA with GPLVM: Difference between revisions

m (Conversion script moved page Probabilistic PCA with GPLVM to probabilistic PCA with GPLVM: Converting page titles to lowercase) |

|||

| (57 intermediate revisions by 4 users not shown) | |||

| Line 1: | Line 1: | ||

= Overview = | = Overview = | ||

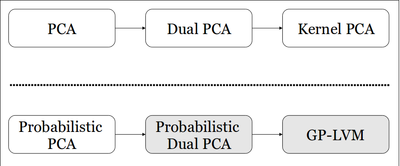

The development of principal component analysis (PCA) (a tutorial of it by Lindsay I. Smith can be found [http://users.ecs.soton.ac.uk/hbr03r/pa037042.pdf here]), a statistical tool which may be used for dimensionality reduction, spans from the initial formulation of PCA 100 years ago to the more recent formulation of the dual problem (Dual PCA) and subsequent kernelization of the PCA algorithm (Kernel PCA). | The development of [http://en.wikipedia.org/wiki/Principal_component_analysis principal component analysis] (PCA) (a tutorial of it by Lindsay I. Smith can be found [http://users.ecs.soton.ac.uk/hbr03r/pa037042.pdf here]), a statistical tool which may be used for dimensionality reduction, spans from the initial formulation of PCA 100 years ago to the more recent formulation of the dual problem (Dual PCA) and subsequent kernelization of the PCA algorithm (Kernel PCA). | ||

Inspired by this progression of the deterministic formulation of PCA, Neil Lawrence builds on a probabilistic PCA model (PPCA) developed by Tipping and Bishop, and proposes a novel dual formulation of PPCA and subsequently the Gaussian Process Latent Variable Model ( | Inspired by this progression of the deterministic formulation of PCA, Neil Lawrence builds on a probabilistic PCA model (PPCA) developed by Tipping and Bishop, and proposes a novel dual formulation of PPCA and subsequently the Gaussian Process Latent Variable Model (GP-LVM). | ||

A graphical representation of this can be see in Figure 1. | A graphical representation of this can be see in Figure 1. A probabilistic approach to the PCA problem is appealing as it provides several advantages such as enabling the application of Bayesian methods as well as dealing with missing data values. | ||

[[File:GplvmOverview.png|400px|thumb|right|Fig. 1: Overview of the | [[File:GplvmOverview.png|400px|thumb|right|Fig. 1: Overview of the GP-LVM Formulation]] | ||

While the probabilistic approach to dimensionality reduction - latent variable model (Jeroen K. Vermunt gives details regarding this type of model [http://spitswww.uvt.nl/~vermunt/esbs2005d.pdf here]) - accomplishes the same task of dimensionality reduction, the paradigm is not entirely the same. | While the probabilistic approach to dimensionality reduction - latent variable model (Jeroen K. Vermunt gives details regarding this type of model [http://spitswww.uvt.nl/~vermunt/esbs2005d.pdf here]) - accomplishes the same task of dimensionality reduction, the paradigm is not entirely the same. | ||

| Line 10: | Line 10: | ||

With latent variable models, however, we specify a functional form <math>\,y = g(x) + \epsilon</math>, where <math>\,y</math> is a high-dimensional original data point, <math>\,x</math> is the low-dimensional mapping of <math>\,y</math>, and <math>\,\epsilon</math> is a noise model. We then estimate the parameters using [http://en.wikipedia.org/wiki/Sampling_%28statistics%29 sampling techniques] or expectation maximization (Sean Borman provides a tutorial of it [http://www.isi.edu/natural-language/teaching/cs562/2009/readings/B06.pdf here]). | With latent variable models, however, we specify a functional form <math>\,y = g(x) + \epsilon</math>, where <math>\,y</math> is a high-dimensional original data point, <math>\,x</math> is the low-dimensional mapping of <math>\,y</math>, and <math>\,\epsilon</math> is a noise model. We then estimate the parameters using [http://en.wikipedia.org/wiki/Sampling_%28statistics%29 sampling techniques] or expectation maximization (Sean Borman provides a tutorial of it [http://www.isi.edu/natural-language/teaching/cs562/2009/readings/B06.pdf here]). | ||

Similar to the deterministic case, the probabilistic formulation has a linear form when <math>\,g</math> is linear, and a nonlinear form when <math>\,g</math> is nonlinear. | Similar to the deterministic case, the probabilistic formulation has a linear form when <math>\,g</math> is linear, and a nonlinear form when <math>\,g</math> is nonlinear. | ||

Examples of probabilistic linear latent variable | Examples of probabilistic linear latent variable modeling include Probabilistic PCA, which will be discussed next, and nonlinear techniques include [http://en.wikipedia.org/wiki/Generative_topographic_map Generative Topographic Modeling] (GTM). | ||

It is important to note, however, that the | It is important to note, however, that the GP-LVM does not define <math>\,g</math> directly; instead, <math>\,g</math> is defined via the kernel trick (Carlos C. Rodriguez gives details regarding it [http://omega.albany.edu:8008/machine-learning-dir/notes-dir/ker1/ker1.pdf here]). | ||

= Probabilistic PCA = | = Probabilistic PCA = | ||

In order to understand the | In order to understand the GP-LVM, we must first consider the previous work done by Tipping and Bishop in developing the PPCA. | ||

Consider a set of centered data with <math>\,n</math> observations and <math>\,d</math> dimensions, <math>\mathbf{Y} = [y_1,...,y_n]^T</math>. | Consider a set of centered data with <math>\,n</math> observations and <math>\,d</math> dimensions, <math>\mathbf{Y} = [y_1,...,y_n]^T</math>. | ||

| Line 22: | Line 22: | ||

Thus, we define a [http://en.wikipedia.org/wiki/Conditional_probability conditional (marginal) probability] model for the high dimensional data and, in doing so, specify a linear relationship between the latent space and data space, along with some Gaussian noise. Marginalizing both hidden variables together (<math>\,x_n</math> and <math>\,W</math>) is intractable, so we then take the standard approach of marginalizing <math>\,x_n</math>, putting a Gaussian prior on <math>\mathbf{W}</math> and solving using [http://en.wikipedia.org/wiki/Maximum_likelihood maximum likelihood]. | Thus, we define a [http://en.wikipedia.org/wiki/Conditional_probability conditional (marginal) probability] model for the high dimensional data and, in doing so, specify a linear relationship between the latent space and data space, along with some Gaussian noise. Marginalizing both hidden variables together (<math>\,x_n</math> and <math>\,W</math>) is intractable, so we then take the standard approach of marginalizing <math>\,x_n</math>, putting a Gaussian prior on <math>\mathbf{W}</math> and solving using [http://en.wikipedia.org/wiki/Maximum_likelihood maximum likelihood]. | ||

Then the marginal likelihood of <math>\,y_n</math> (after marginalizing <math>\,x_n</math>) is described as <math>p(\mathbf{y_n | W},\beta) = N(\mathbf{y_n |0, WW^{T}} +\beta^{-1}\mathbf{I})</math>. Assuming that the data is [http://en.wikipedia.org/wiki/Independent_identically_distributed i.i.d.], the likelihood of the full set is the product of the individual probabilities, i.e. it is <math>p(\mathbf{Y | W},\beta) = \prod^{N}_{n=1} p(\mathbf{y_n | W},\beta)</math>. | To obtain the marginal likelihood we integrate over the latent variables: | ||

<center><math>p(\mathbf{y}_n|\mathbf{W},b) = \int p(\mathbf{y}_n|x_n,\mathbf{W},b) p(x_n)dx_n</math></center> | |||

which requires us to specify a prior distribution over <math>x_n</math>. For probabilistic PCA the appropriate prior <math>p(x_n)</math> | |||

is a unit covariance, zero mean Gaussian distribution, Then the marginal likelihood of <math>\,y_n</math> (after marginalizing <math>\,x_n</math>) is described as <math>p(\mathbf{y_n | W},\beta) = N(\mathbf{y_n |0, WW^{T}} +\beta^{-1}\mathbf{I})</math>. Assuming that the data is [http://en.wikipedia.org/wiki/Independent_identically_distributed i.i.d.], the likelihood of the full set is the product of the individual probabilities, i.e. it is <math>p(\mathbf{Y | W},\beta) = \prod^{N}_{n=1} p(\mathbf{y_n | W},\beta)</math>. | |||

We can then find a solution for <math>\,W</math> by maximizing this probability. Tipping and Bishop did so in 1999; they found that the closed-form solution for <math>\,W</math> is achieved when <math>\,W</math> spans the principal sub-space of the data. This is the same answer that we arrive at with PCA, where we project the data onto the subspace which maximizes the variance of the data; consequently, this model can be interpreted as a ''probabilistic'' version of PCA, since the solution is obtained by maximizing a probability. | We can then find a solution for <math>\,W</math> by maximizing this probability. Tipping and Bishop did so in 1999<ref name="TB199">Michael E. Tipping and Christopher M. Bishop. Probabilistic principal component analysis. ''Journal of the Royal Statistical Society, B'', 6(3):611-622, 1999.</ref> ; they found that the closed-form solution for <math>\,W</math> is achieved when <math>\,W</math> spans the principal sub-space of the data. This is the same answer that we arrive at with PCA, where we project the data onto the subspace which maximizes the variance of the data; consequently, this model can be interpreted as a ''probabilistic'' version of PCA, since the solution is obtained by maximizing a probability. | ||

This is an acceptable formulation; however, it is only a linear model. Can it be extended to capture non-linear features? | This is an acceptable formulation; however, it is only a linear model. Can it be extended to capture non-linear features? | ||

The following re-formulation as a dual and the subsequent presentation of the | The following re-formulation as a dual and the subsequent presentation of the GP-LVM will show that this is in fact possible. | ||

= Dual PPCA = | = Dual PPCA = | ||

Dual PPCA is formulated by optimizing the latent variable <math>\,x_n</math>, and marginalizing <math>\mathbf{W}</math>. | Dual PPCA is formulated by optimizing the latent variable <math>\,x_n</math>, and marginalizing <math>\mathbf{W}</math>. | ||

It, in essence, follows the same steps as the work done by Bishop and Tipping. | It, in essence, follows the same steps as the work done by Bishop and Tipping. | ||

In this case, we start by defining a [http://en.wikipedia.org/wiki/Conjugate_prior conjugate prior], which is <math>P(\mathbf{W}) = \prod^{D}_{d=1} N(\mathbf{ | In this case, we start by defining a [http://en.wikipedia.org/wiki/Conjugate_prior conjugate prior], which is <math>P(\mathbf{W}) = \prod^{D}_{d=1} N(w_d \mathbf{|0,I})</math>, where <math>\,w_d</math> is the <math>\,d</math>th row of <math>\,\mathbf{W}</math> (i.e. it is <math>\,w_{d,:}</math>). | ||

Because it is straightforward due to using a conjugate prior, we marginalise <math>\mathbf{W}</math> rather than marginalise <math>\,X = [x_1 \dots x_N]^T</math>. By doing so, the resulting marginalised likelihood is <math>P(Y|X,\beta) = \prod^{D}_{d=1} p(y_{:,d}|X,\beta)</math>, where <math>\,y_{:,d}</math> is the <math>\,d</math>th column of <math>\,Y</math> and | Because it is straightforward due to using a conjugate prior, we marginalise <math>\mathbf{W}</math> rather than marginalise <math>\,\mathbf{X} = [x_1 \dots x_N]^T</math>. By doing so, the resulting marginalised likelihood is <math>P(Y|X,\beta) = \prod^{D}_{d=1} p(y_{:,d}|X,\beta)</math>, where <math>\,y_{:,d}</math> is the <math>\,d</math>th column of <math>\,\mathbf{Y}</math> and | ||

<math>p(\mathbf{y_{:,d} | X},\beta) = N(y_{:,d} | 0 , | <math>p(\mathbf{y_{:,d} | X},\beta) = N(y_{:,d} | 0 , \mathbf{X}\mathbf{X}^T + \beta^{-1}\mathbf{I})</math>. | ||

This is very similar to what we saw previously, with PPCA. | This is very similar to what we saw previously, with PPCA. | ||

Here, each dimension is a multivariate normal with a covariance specified by the dot product-matrix. | Here, each dimension is a multivariate normal with a covariance specified by the dot product-matrix. | ||

Assuming each dimension is independent, we can then obtain the joint likelihood of the data matrix <math>\,Y</math> as a product of each dimension, and the log-likelihood objective function is then <math>L = -\frac{DN}{2}\text{ln}2\pi - \frac{D}{2}\text{ln}|\mathbf{K}| - \frac{1}{2}\text{tr}(\mathbf{K^{-1}YY^T})</math>, where the linear kernel <math>\,K</math> is <math>\mathbf{K = XX^T} + \beta^{-1}\mathbf{I}</math>. | Assuming each dimension is independent, we can then obtain the joint likelihood of the data matrix <math>\,\mathbf{Y}</math> as a product of each dimension, and the log-likelihood objective function is then <math>L = -\frac{DN}{2}\text{ln}2\pi - \frac{D}{2}\text{ln}|\mathbf{K}| - \frac{1}{2}\text{tr}(\mathbf{K^{-1}YY^T})</math>, where the linear kernel <math>\,\mathbf{K}</math> is <math>\mathbf{K = XX^T} + \beta^{-1}\mathbf{I}</math>. | ||

A gradient descent algorithm is then applied to this objective function <math>\,L</math>. Magnus and Neudecker <ref name = "MN1999"> Jan R. Magnus and Heinz Neudecker. ''Matrix Differential Calculus with Application in Statistics and Econometrics.'' John Wiley and Sons, Chichester, West Sussex, 2nd edition, 1999.</ref>, in 1999, found that the gradients of <math>\,L</math> with respect to <math>\,\mathbf{X}</math> are <math>\,\frac{\delta L}{\delta \mathbf{X}} = \mathbf{K}^{-1}\mathbf{Y}\mathbf{Y}^T\mathbf{K}^{-1}\mathbf{X} - D\mathbf{K}^{-1}\mathbf{X}</math>. A point where the gradients are all zero can be found by setting <math>\,\frac{1}{D}\mathbf{Y}\mathbf{Y}^T\mathbf{K}^{-1}\mathbf{X} = \mathbf{X}</math>. Solving this, a solution is obtained in the form of an eigenvalue decomposition problem <math>\mathbf{X = ULV^T}</math>. Here, <math>\mathbf{U}</math> is an <math>\,N \times q</math> matrix, with the columns being the first <math>\,q</math> eigenvectors of the matrix <math>\mathbf{YY^T}</math>, <math>\,\mathbf{L}</math> is a <math>\,q \times q</math> diagonal matrix whose <math>\,j</math>th element is <math>\,l_j = (\lambda_j - \frac{1}{\beta})^{-\frac{1}{2}}</math>, where <math>\,\lambda_j</math> is the eigenvalue associated with the <math>\,j</math>th eigenvector of <math>\,D^{-1}\mathbf{Y}\mathbf{Y}^T</math> and <math>\,\mathbf{V}</math> is a <math>\,q \times q</math> rotation matrix that is chosen arbitrarily. Thus, the solution of this [http://en.wikipedia.org/wiki/Likelihood_function likelihood function] will then be the projection of the data points onto their principal subspace. | |||

Note that in this formulation, each dimension of the marginal distribution can be interpreted as a Gaussian process, and each dimension of the joint marginal is a product of Gaussian processes. | Note that in this formulation, each dimension of the marginal distribution can be interpreted as a [http://en.wikipedia.org/wiki/Gaussian_process Gaussian process], and each dimension of the joint marginal is a product of Gaussian processes. | ||

Thus, the objective function is expressed in terms of a linear kernel covariance. | Thus, the objective function is expressed in terms of a [http://en.wikipedia.org/wiki/Kernel_(linear_operator) linear kernel] covariance. | ||

The advantage of this dual formulation is two-fold: | The advantage of this dual formulation is two-fold: | ||

*The [http://en.wikipedia.org/wiki/Eigendecomposition_of_a_matrix eigendecomposition] is done on an <math>\,N \times q</math> matrix instead of a <math>\,d \times q</math> matrix, where <math>\,N << d</math>. | *The [http://en.wikipedia.org/wiki/Eigendecomposition_of_a_matrix eigendecomposition] is done on an <math>\,N \times q</math> matrix instead of a <math>\,d \times q</math> matrix, where <math>\,N << d</math>. Although this is not always the case particularly with microarray data. | ||

*<math>\,U</math> is now an inner product matrix, which can be [http://en.wikipedia.org/wiki/Kernel_trick kernelized]. | *<math>\,U</math> is now an inner product matrix, which can be [http://en.wikipedia.org/wiki/Kernel_trick kernelized]. | ||

Through the selection of an appropriate kernel, this technique can now be used to solve a much broader range of dimensionality reduction problems instead of being restricted to those with linear features. | Through the selection of an appropriate kernel, this technique can now be used to solve a much broader range of dimensionality reduction problems instead of being restricted to those with linear features. | ||

= GP-LVM = | = Gaussian Process Latent Variable Models (GP-LVM) = | ||

GP-LVM can be accomplished by replacing the inner product kernel with one that allows for non-linear functions. We use Gaussian processes to map from a latent space, <math>\,X</math>, to the observed-data space, <math>\,Y</math>. | GP-LVM can be accomplished by replacing the inner product kernel with one that allows for non-linear functions. We use Gaussian processes to map from a latent space, <math>\,\mathbf{X}</math>, to the observed-data space, <math>\,\mathbf{Y}</math>. | ||

In the above, we used a Gaussian prior over the space of functions that are fundamentally linear (but are corrupted by Gaussian noise having variance <math>\,\beta^{ | In the above, we used a Gaussian prior over the space of functions that are fundamentally linear (but are corrupted by Gaussian noise having variance <math>\,\beta^{-1}\mathbf{I}</math>. This Gaussian prior's covariance function, or kernel, is <math>k(x_i,x_j) = x_i^Tx_j + \beta^{-1} \delta_{i,j}</math>, where <math>\,x_i</math> and <math>\,x_j</math> are input vectors and <math>\,\delta_{i,j}</math> is the [http://en.wikipedia.org/wiki/Kronecker_delta Kronecker delta] function. Indeed, if the inputs are from <math>\,\mathbf{X}</math> and the [http://en.wikipedia.org/wiki/Covariance covariance] function is evaluated at each data point, then we would recover the [http://en.wikipedia.org/wiki/Covariance covariance] matrix <math>\,\mathbf{K} = \mathbf{X}\mathbf{X}^T + \beta^{-1}\mathbf{I}</math> whose <math>\,(i,j)</math>-th element is <math>\,k(x_i,x_j)</math> as given above. | ||

Instead of using this linear kernel, we can consider arbitrary non-linear kernels such as the [http://en.wikipedia.org/wiki/Radial_basis_function Radial Basis Function (RBF)] kernel <math>k(\mathbf{x_i,x_j}) = \theta_{\mathrm{rbf}} e^{\frac{-\gamma}{2}(\mathbf{x_i - x_j)}^{T}(\mathbf{x_i - x_j)}} + \theta_{\mathrm{bias}} + \theta_{\mathrm{white}}\delta_{i,j}</math>. | Instead of using this linear kernel, we can consider arbitrary non-linear kernels such as the [http://en.wikipedia.org/wiki/Radial_basis_function Radial Basis Function (RBF)] kernel <math>k(\mathbf{x_i,x_j}) = \theta_{\mathrm{rbf}} e^{\frac{-\gamma}{2}(\mathbf{x_i - x_j)}^{T}(\mathbf{x_i - x_j)}} + \theta_{\mathrm{bias}} + \theta_{\mathrm{white}}\delta_{i,j}</math>, where <math>\,\theta_{\mathrm{white}}</math>, <math>\,\theta_{\mathrm{bias}}</math>, <math>\,\theta_{\mathrm{rbf}}</math>, and <math>\,\gamma</math> are the kernel parameters. | ||

In order to use a particular kernel in the GP-LVM, we note that the gradients of the log-likelihood function <math>\,L</math> above with respect to the latent points <math>\,X</math> can be found by using the [http://en.wikipedia.org/wiki/Chain_rule chain rule]. First, we can find the gradients of <math>\,L</math> with respect to the kernel as <math>\,\frac{\delta L}{\delta \mathbf{K}} = \mathbf{K}^{-1}\mathbf{Y}\mathbf{Y}^T\mathbf{K}^{-1} - D\mathbf{K}^{-1}</math>, and then we can combine <math>\,\frac{\delta L}{\delta \mathbf{K}}</math> with <math>\,\frac{\delta \mathbf{K}}{\delta x_{n,j}}</math> to find <math>\,\frac{\delta L}{\delta \mathbf{X}}</math>. <math>\,\frac{\delta L}{\delta \mathbf{K}}</math> is in fact very straightforward to find, because it is independent of the choice of the kernel. Hence, we only need to make sure that we can compute <math>\,\frac{\delta \mathbf{K}}{\delta x_{n,j}}</math>. Ultimately, <math>\,L</math> is a highly non-linear function of the embeddings and the parameters. The author used scaled conjugate gradient (SCG) (more details regarding it are available [http://www.eie.polyu.edu.hk/~enzheru/snns/SNNSinfo/UserManual/node241.html here]) in their experiments for optimisation of <math>\,L</math>. | |||

However, with these non-linear kernels, we no longer have a closed-form solution. | |||

The use of any non-linear kernel leads to a non-linear probabilistic latent variable model - the GP-LVM. | |||

However, with these non-linear kernels, we no longer have a closed-form solution (up to an arbitrary rotation matrix) and, in addition, there would likely be multiple local optima. | |||

== Unifying Objective Function == | == Unifying Objective Function == | ||

We must now solve a non-convex objective function. | We must now solve a non-convex objective function. | ||

This | This is based on minimizing the following [http://en.wikipedia.org/wiki/Kullback%E2%80%93Leibler_divergence Kullback-Leibler (KL) divergence] between the two Gaussians which are induced by kernel matrices on the latent and data spaces: | ||

<center><math>\mathrm{KL}(N(\mathbf{z|0,S)} || N(\mathbf{z|0,K})) = -\int N(\mathbf{z|0,S)}\;ln\frac{N(\mathbf{z|0,K})}{N(\mathbf{z|0,S)}}\;d\mathbf{z} = \frac{1}{2} ln |\mathbf{K}| - \frac{1}{2} ln |\mathbf{S}| + \frac{1}{2} tr(\mathbf{SK^{-1}}) - \frac{N}{2},</math></center> | |||

where <math>\,\mathbf{S}</math> is a matrix of similarities like those used in methods such as [http://en.wikipedia.org/wiki/Multidimensional_scaling MDS] and [http://en.wikipedia.org/wiki/Kernel_PCA kernel PCA]. | |||

This KL divergence provides a unifying objective function that is valid for PCA, kernel PCA, PPCA and the GP-LVM, when the values for <math>\,\mathbf{S}</math> and <math>\,\mathbf{K}</math> are appropriately chosen. In the case of kernel PCA, <math>\,\mathbf{S}</math> is a non-linear kernel and <math>\,\mathbf{K}</math> is the linear kernel and, in the case of the GP-LVM, it is the other way around. The GP-LVM formulation is harder to optimize as compared to kernel PCA, because solving an eigenvalue problem is no longer sufficient for finding the solution. However, the GP-LVM formulation provides a probabilistic interpretation that kernel PCA does not provide. | |||

, | To solve it, the author used gradient-based optimization (gradient-based methods are described by Antony Jameson [http://aero-comlab.stanford.edu/Papers/jameson.gbom.pdf here]), and used deterministic [http://en.wikipedia.org/wiki/Dimension_reduction dimensionality reduction] to generate initial values. | ||

So, not only is this objective function non-convex, but each evaluation of it is very costly, requiring the inversion of the kernel matrix, which is an operation of the order <math>\,O(N^3)</math>. | |||

To speed up the optimization process, the author presented sparsification techniques (a relevant paper by Garg ''et al.'' on gradient descent with sparsification is available [http://www.cse.iitd.ernet.in/~rohitk/research/sparse.pdf here]) to infer which subset of the data is deemed to contain the most information. Specifically, the author based his approach on the informative vector machine algorithm<ref name="L2003">Neil D. Lawrence, Matthias Seeger and Ralf Herbrich. Fast sparse Gaussian process methods: The informative vector machine. In Sue Becker, Sebation Thrun and Kllaus Obermayer, editors, ''Advances in Neural Information Processing System,'' volume 15, pages 625-632, Cambridge, MA, 2003. MIT Press.</ref>. | |||

In sparsification, kernel methods are sped up by representing the data set by a subset <math>\,I</math> which is known as the ''active set''. <math>\,I</math> has <math>\,d < N</math> points, and the other points in the full set are denoted by <math>\,J</math>. The points in <math>\,I</math> can be selected using the informative vector machine (IVM) algorithm (implementation details for it are given in Lawrence ''et al.''<ref name="L2003"/>). The likelihood of the active set is <math>\,p(\mathbf{Y}_I) = \frac{1}{(2\pi)^{\frac{D}{2}}|\mathbf{K}_{I,I}|^{\frac{1}{2}}}\exp(-\frac{1}{2}\textrm{tr}(\mathbf{K}_{I,I}^{-1}\mathbf{Y}_I\mathbf{Y}_I^T))</math>, which can be optimized with respect to the kernel’s parameters and <math>\,X_I</math> using gradient evaluations at a cost of <math>\,O(d^3)</math>, which is much less than the prohibitive cost of <math>\,O(N^3)</math> in the case of using the full model. | |||

The following pseudo-code (taken from the author's paper listed in Reference) summarizes the GP-LVM algorithm for visualization data: | |||

[[File:c2f1.jpg]] | |||

The following figure (also taken from the author's paper listed in Reference) provides a simplified view of GP-LVM: | |||

[[File:c2f2.jpg]] | |||

= Results = | = Results = | ||

The author empirically evaluates | The author empirically evaluates GP-LVM on several datasets. | ||

He begins with an experiment using simulated oil data, where each point is 12 dimensional, and the points can be classified into one of three flow rates---stratified, annular and homogeneous. | He begins with an experiment using simulated oil data, where each point is 12 dimensional, and the points can be classified into one of three flow rates---stratified, annular and homogeneous. | ||

However, the author uses only a reduced dataset of 100 points in order to make the problem computationally tractable. | However, the author uses only a reduced dataset of 100 points in order to make the problem computationally tractable. | ||

If we evaluate the performance of | If we evaluate the performance of GP-LVM against PCA through classification error, using a nearest-neighbor classifier that classified each data point to the class of its nearest neighbor in the two dimensional latent-space <math>\,X</math>, PCA had 20 errors, kernel PCA had 13 errors, and the GP-LVM performed the best with 4 errors. Furthermore, due to the above formulation of the GP-LVM, the level of uncertainty is shared across all <math>\,D</math> dimensions and so can be visualized in the latent-space <math>\,X</math>. | ||

The following figures (taken from the author's paper) provide visualizations of the oil data. Figures a), b), and f) show the two-dimensional latent-spaces obtained by PCA (a linear GP-LVM), a GP-LVM that uses an RBF kernel, and kernel PCA, respectively. | |||

[[File:a.jpg]] [[File:b.jpg]] [[File:f.jpg]] | |||

From the above figures, one can clearly see that GP-LVM with an RBF kernel provides the best demarcation between the three flow rates. | |||

Thus, while there is some improvement in classification, it is not remarkable. | Thus, while there is some improvement in classification, it is not remarkable. | ||

This is likely due, in part, to the fact that PCA is used to initialize the | This is likely due, in part, to the fact that PCA is used to initialize the GP-LVM algorithm. | ||

The author then applies the sparsified | The author then applies the sparsified GP-LVM to all 1000 oil data points; he compares it with GTM and PCA. | ||

We see that the sparsification process required to make the | We see that the sparsification process required to make the GP-LVM tractable degrades the performance considerably. | ||

However, while the paper shows that the | However, while the paper shows that the GP-LVM does not provide a significantly better method for classification, other domains have already put the model to use. | ||

For example, immediately after this paper was published, other researchers in the field of animation ([http://grail.cs.washington.edu/projects/styleik/ style-based inverse kinematics]) used the | For example, immediately after this paper was published, other researchers in the field of animation ([http://grail.cs.washington.edu/projects/styleik/ style-based inverse kinematics]) used the GP-LVM on motion capture data to interpolate natural poses. | ||

= Conclusion = | = Conclusion = | ||

The | The GP-LVM is a novel approach to probabilistic latent [http://en.wikipedia.org/wiki/Embedding embedding], which is distinct from other approaches. | ||

It is probabilistic and can | It is probabilistic and therefore it can be used to evaluate likelihoods, handle missing data and illustrate areas of confidence in the latent embedding. | ||

Further, it is | Further, it is non-linear, and can consider different types of noise and kernel types. | ||

However, solving the optimization problem is difficult. | However, solving the optimization problem is difficult. | ||

It does not learn manifolds effectively, and it is computationally expensive so it does not scale well. | It does not learn manifolds effectively, and it is computationally expensive so it does not scale well. | ||

Ultimately, however, the paper has already proved useful, particularly for missing data problems. | Ultimately, however, the paper has already proved useful, particularly for missing data problems. | ||

= Code = | |||

Software for implementing GP-LVM can be found at the author's home page [http://staffwww.dcs.shef.ac.uk/people/N.Lawrence/software.html]. | |||

= Reference = | |||

Neil Lawrence. Probabilistic Non-linear Principal Component Analysis with Gaussian Process Latent Variable Models. Journal of Machine Learning Research 6 (2005) 1783–1816. November, 2005. | |||

<references /> | |||

Latest revision as of 08:45, 30 August 2017

Overview

The development of principal component analysis (PCA) (a tutorial of it by Lindsay I. Smith can be found here), a statistical tool which may be used for dimensionality reduction, spans from the initial formulation of PCA 100 years ago to the more recent formulation of the dual problem (Dual PCA) and subsequent kernelization of the PCA algorithm (Kernel PCA). Inspired by this progression of the deterministic formulation of PCA, Neil Lawrence builds on a probabilistic PCA model (PPCA) developed by Tipping and Bishop, and proposes a novel dual formulation of PPCA and subsequently the Gaussian Process Latent Variable Model (GP-LVM). A graphical representation of this can be see in Figure 1. A probabilistic approach to the PCA problem is appealing as it provides several advantages such as enabling the application of Bayesian methods as well as dealing with missing data values.

While the probabilistic approach to dimensionality reduction - latent variable model (Jeroen K. Vermunt gives details regarding this type of model here) - accomplishes the same task of dimensionality reduction, the paradigm is not entirely the same. With dimensionality reduction, we have some high-dimensional observations, and we wish to learn a functional mapping which will transform the data from high-dimensional space into a lower-dimensional, embedded space. With latent variable models, however, we specify a functional form [math]\displaystyle{ \,y = g(x) + \epsilon }[/math], where [math]\displaystyle{ \,y }[/math] is a high-dimensional original data point, [math]\displaystyle{ \,x }[/math] is the low-dimensional mapping of [math]\displaystyle{ \,y }[/math], and [math]\displaystyle{ \,\epsilon }[/math] is a noise model. We then estimate the parameters using sampling techniques or expectation maximization (Sean Borman provides a tutorial of it here). Similar to the deterministic case, the probabilistic formulation has a linear form when [math]\displaystyle{ \,g }[/math] is linear, and a nonlinear form when [math]\displaystyle{ \,g }[/math] is nonlinear. Examples of probabilistic linear latent variable modeling include Probabilistic PCA, which will be discussed next, and nonlinear techniques include Generative Topographic Modeling (GTM). It is important to note, however, that the GP-LVM does not define [math]\displaystyle{ \,g }[/math] directly; instead, [math]\displaystyle{ \,g }[/math] is defined via the kernel trick (Carlos C. Rodriguez gives details regarding it here).

Probabilistic PCA

In order to understand the GP-LVM, we must first consider the previous work done by Tipping and Bishop in developing the PPCA. Consider a set of centered data with [math]\displaystyle{ \,n }[/math] observations and [math]\displaystyle{ \,d }[/math] dimensions, [math]\displaystyle{ \mathbf{Y} = [y_1,...,y_n]^T }[/math].

We can view this data as having a linear relationship with some embedded latent-space data [math]\displaystyle{ \mathbf{x_n} }[/math], assuming Gaussian noise, as [math]\displaystyle{ \mathbf{y_n} = \mathbf{Wx_n} + \mathbf{n} }[/math], where [math]\displaystyle{ \,x_n }[/math] is the [math]\displaystyle{ \,q }[/math]-dimensional latent variable associated with each observation, and [math]\displaystyle{ W \in \mathbb{R}^{D\times q} }[/math] is the transformation matrix which relates the observed space to the latent space. Assuming a spherical Gaussian distribution for the noise with a mean of zero and a covariance of [math]\displaystyle{ \beta^{-1}\mathbf{I} }[/math], the likelihood for a particular observation [math]\displaystyle{ \,y_n }[/math] can be expressed as [math]\displaystyle{ p(\mathbf{y_n | x_n, W},\beta) = N(\mathbf{y_n | Wx_n},\beta^{-1}\mathbf{I}) }[/math], where [math]\displaystyle{ \,N }[/math] denotes the distribution function of a multivariate normal distribution for [math]\displaystyle{ \,y_n }[/math] with, in this case, mean [math]\displaystyle{ \,Wx_n }[/math] and covariance matrix [math]\displaystyle{ \beta^{-1}\mathbf{I} }[/math].

Thus, we define a conditional (marginal) probability model for the high dimensional data and, in doing so, specify a linear relationship between the latent space and data space, along with some Gaussian noise. Marginalizing both hidden variables together ([math]\displaystyle{ \,x_n }[/math] and [math]\displaystyle{ \,W }[/math]) is intractable, so we then take the standard approach of marginalizing [math]\displaystyle{ \,x_n }[/math], putting a Gaussian prior on [math]\displaystyle{ \mathbf{W} }[/math] and solving using maximum likelihood.

To obtain the marginal likelihood we integrate over the latent variables:

which requires us to specify a prior distribution over [math]\displaystyle{ x_n }[/math]. For probabilistic PCA the appropriate prior [math]\displaystyle{ p(x_n) }[/math] is a unit covariance, zero mean Gaussian distribution, Then the marginal likelihood of [math]\displaystyle{ \,y_n }[/math] (after marginalizing [math]\displaystyle{ \,x_n }[/math]) is described as [math]\displaystyle{ p(\mathbf{y_n | W},\beta) = N(\mathbf{y_n |0, WW^{T}} +\beta^{-1}\mathbf{I}) }[/math]. Assuming that the data is i.i.d., the likelihood of the full set is the product of the individual probabilities, i.e. it is [math]\displaystyle{ p(\mathbf{Y | W},\beta) = \prod^{N}_{n=1} p(\mathbf{y_n | W},\beta) }[/math].

We can then find a solution for [math]\displaystyle{ \,W }[/math] by maximizing this probability. Tipping and Bishop did so in 1999<ref name="TB199">Michael E. Tipping and Christopher M. Bishop. Probabilistic principal component analysis. Journal of the Royal Statistical Society, B, 6(3):611-622, 1999.</ref> ; they found that the closed-form solution for [math]\displaystyle{ \,W }[/math] is achieved when [math]\displaystyle{ \,W }[/math] spans the principal sub-space of the data. This is the same answer that we arrive at with PCA, where we project the data onto the subspace which maximizes the variance of the data; consequently, this model can be interpreted as a probabilistic version of PCA, since the solution is obtained by maximizing a probability.

This is an acceptable formulation; however, it is only a linear model. Can it be extended to capture non-linear features? The following re-formulation as a dual and the subsequent presentation of the GP-LVM will show that this is in fact possible.

Dual PPCA

Dual PPCA is formulated by optimizing the latent variable [math]\displaystyle{ \,x_n }[/math], and marginalizing [math]\displaystyle{ \mathbf{W} }[/math]. It, in essence, follows the same steps as the work done by Bishop and Tipping. In this case, we start by defining a conjugate prior, which is [math]\displaystyle{ P(\mathbf{W}) = \prod^{D}_{d=1} N(w_d \mathbf{|0,I}) }[/math], where [math]\displaystyle{ \,w_d }[/math] is the [math]\displaystyle{ \,d }[/math]th row of [math]\displaystyle{ \,\mathbf{W} }[/math] (i.e. it is [math]\displaystyle{ \,w_{d,:} }[/math]).

Because it is straightforward due to using a conjugate prior, we marginalise [math]\displaystyle{ \mathbf{W} }[/math] rather than marginalise [math]\displaystyle{ \,\mathbf{X} = [x_1 \dots x_N]^T }[/math]. By doing so, the resulting marginalised likelihood is [math]\displaystyle{ P(Y|X,\beta) = \prod^{D}_{d=1} p(y_{:,d}|X,\beta) }[/math], where [math]\displaystyle{ \,y_{:,d} }[/math] is the [math]\displaystyle{ \,d }[/math]th column of [math]\displaystyle{ \,\mathbf{Y} }[/math] and [math]\displaystyle{ p(\mathbf{y_{:,d} | X},\beta) = N(y_{:,d} | 0 , \mathbf{X}\mathbf{X}^T + \beta^{-1}\mathbf{I}) }[/math].

This is very similar to what we saw previously, with PPCA. Here, each dimension is a multivariate normal with a covariance specified by the dot product-matrix. Assuming each dimension is independent, we can then obtain the joint likelihood of the data matrix [math]\displaystyle{ \,\mathbf{Y} }[/math] as a product of each dimension, and the log-likelihood objective function is then [math]\displaystyle{ L = -\frac{DN}{2}\text{ln}2\pi - \frac{D}{2}\text{ln}|\mathbf{K}| - \frac{1}{2}\text{tr}(\mathbf{K^{-1}YY^T}) }[/math], where the linear kernel [math]\displaystyle{ \,\mathbf{K} }[/math] is [math]\displaystyle{ \mathbf{K = XX^T} + \beta^{-1}\mathbf{I} }[/math].

A gradient descent algorithm is then applied to this objective function [math]\displaystyle{ \,L }[/math]. Magnus and Neudecker <ref name = "MN1999"> Jan R. Magnus and Heinz Neudecker. Matrix Differential Calculus with Application in Statistics and Econometrics. John Wiley and Sons, Chichester, West Sussex, 2nd edition, 1999.</ref>, in 1999, found that the gradients of [math]\displaystyle{ \,L }[/math] with respect to [math]\displaystyle{ \,\mathbf{X} }[/math] are [math]\displaystyle{ \,\frac{\delta L}{\delta \mathbf{X}} = \mathbf{K}^{-1}\mathbf{Y}\mathbf{Y}^T\mathbf{K}^{-1}\mathbf{X} - D\mathbf{K}^{-1}\mathbf{X} }[/math]. A point where the gradients are all zero can be found by setting [math]\displaystyle{ \,\frac{1}{D}\mathbf{Y}\mathbf{Y}^T\mathbf{K}^{-1}\mathbf{X} = \mathbf{X} }[/math]. Solving this, a solution is obtained in the form of an eigenvalue decomposition problem [math]\displaystyle{ \mathbf{X = ULV^T} }[/math]. Here, [math]\displaystyle{ \mathbf{U} }[/math] is an [math]\displaystyle{ \,N \times q }[/math] matrix, with the columns being the first [math]\displaystyle{ \,q }[/math] eigenvectors of the matrix [math]\displaystyle{ \mathbf{YY^T} }[/math], [math]\displaystyle{ \,\mathbf{L} }[/math] is a [math]\displaystyle{ \,q \times q }[/math] diagonal matrix whose [math]\displaystyle{ \,j }[/math]th element is [math]\displaystyle{ \,l_j = (\lambda_j - \frac{1}{\beta})^{-\frac{1}{2}} }[/math], where [math]\displaystyle{ \,\lambda_j }[/math] is the eigenvalue associated with the [math]\displaystyle{ \,j }[/math]th eigenvector of [math]\displaystyle{ \,D^{-1}\mathbf{Y}\mathbf{Y}^T }[/math] and [math]\displaystyle{ \,\mathbf{V} }[/math] is a [math]\displaystyle{ \,q \times q }[/math] rotation matrix that is chosen arbitrarily. Thus, the solution of this likelihood function will then be the projection of the data points onto their principal subspace.

Note that in this formulation, each dimension of the marginal distribution can be interpreted as a Gaussian process, and each dimension of the joint marginal is a product of Gaussian processes.

Thus, the objective function is expressed in terms of a linear kernel covariance.

The advantage of this dual formulation is two-fold:

- The eigendecomposition is done on an [math]\displaystyle{ \,N \times q }[/math] matrix instead of a [math]\displaystyle{ \,d \times q }[/math] matrix, where [math]\displaystyle{ \,N \lt \lt d }[/math]. Although this is not always the case particularly with microarray data.

- [math]\displaystyle{ \,U }[/math] is now an inner product matrix, which can be kernelized.

Through the selection of an appropriate kernel, this technique can now be used to solve a much broader range of dimensionality reduction problems instead of being restricted to those with linear features.

Gaussian Process Latent Variable Models (GP-LVM)

GP-LVM can be accomplished by replacing the inner product kernel with one that allows for non-linear functions. We use Gaussian processes to map from a latent space, [math]\displaystyle{ \,\mathbf{X} }[/math], to the observed-data space, [math]\displaystyle{ \,\mathbf{Y} }[/math].

In the above, we used a Gaussian prior over the space of functions that are fundamentally linear (but are corrupted by Gaussian noise having variance [math]\displaystyle{ \,\beta^{-1}\mathbf{I} }[/math]. This Gaussian prior's covariance function, or kernel, is [math]\displaystyle{ k(x_i,x_j) = x_i^Tx_j + \beta^{-1} \delta_{i,j} }[/math], where [math]\displaystyle{ \,x_i }[/math] and [math]\displaystyle{ \,x_j }[/math] are input vectors and [math]\displaystyle{ \,\delta_{i,j} }[/math] is the Kronecker delta function. Indeed, if the inputs are from [math]\displaystyle{ \,\mathbf{X} }[/math] and the covariance function is evaluated at each data point, then we would recover the covariance matrix [math]\displaystyle{ \,\mathbf{K} = \mathbf{X}\mathbf{X}^T + \beta^{-1}\mathbf{I} }[/math] whose [math]\displaystyle{ \,(i,j) }[/math]-th element is [math]\displaystyle{ \,k(x_i,x_j) }[/math] as given above.

Instead of using this linear kernel, we can consider arbitrary non-linear kernels such as the Radial Basis Function (RBF) kernel [math]\displaystyle{ k(\mathbf{x_i,x_j}) = \theta_{\mathrm{rbf}} e^{\frac{-\gamma}{2}(\mathbf{x_i - x_j)}^{T}(\mathbf{x_i - x_j)}} + \theta_{\mathrm{bias}} + \theta_{\mathrm{white}}\delta_{i,j} }[/math], where [math]\displaystyle{ \,\theta_{\mathrm{white}} }[/math], [math]\displaystyle{ \,\theta_{\mathrm{bias}} }[/math], [math]\displaystyle{ \,\theta_{\mathrm{rbf}} }[/math], and [math]\displaystyle{ \,\gamma }[/math] are the kernel parameters.

In order to use a particular kernel in the GP-LVM, we note that the gradients of the log-likelihood function [math]\displaystyle{ \,L }[/math] above with respect to the latent points [math]\displaystyle{ \,X }[/math] can be found by using the chain rule. First, we can find the gradients of [math]\displaystyle{ \,L }[/math] with respect to the kernel as [math]\displaystyle{ \,\frac{\delta L}{\delta \mathbf{K}} = \mathbf{K}^{-1}\mathbf{Y}\mathbf{Y}^T\mathbf{K}^{-1} - D\mathbf{K}^{-1} }[/math], and then we can combine [math]\displaystyle{ \,\frac{\delta L}{\delta \mathbf{K}} }[/math] with [math]\displaystyle{ \,\frac{\delta \mathbf{K}}{\delta x_{n,j}} }[/math] to find [math]\displaystyle{ \,\frac{\delta L}{\delta \mathbf{X}} }[/math]. [math]\displaystyle{ \,\frac{\delta L}{\delta \mathbf{K}} }[/math] is in fact very straightforward to find, because it is independent of the choice of the kernel. Hence, we only need to make sure that we can compute [math]\displaystyle{ \,\frac{\delta \mathbf{K}}{\delta x_{n,j}} }[/math]. Ultimately, [math]\displaystyle{ \,L }[/math] is a highly non-linear function of the embeddings and the parameters. The author used scaled conjugate gradient (SCG) (more details regarding it are available here) in their experiments for optimisation of [math]\displaystyle{ \,L }[/math].

The use of any non-linear kernel leads to a non-linear probabilistic latent variable model - the GP-LVM. However, with these non-linear kernels, we no longer have a closed-form solution (up to an arbitrary rotation matrix) and, in addition, there would likely be multiple local optima.

Unifying Objective Function

We must now solve a non-convex objective function. This is based on minimizing the following Kullback-Leibler (KL) divergence between the two Gaussians which are induced by kernel matrices on the latent and data spaces:

where [math]\displaystyle{ \,\mathbf{S} }[/math] is a matrix of similarities like those used in methods such as MDS and kernel PCA.

This KL divergence provides a unifying objective function that is valid for PCA, kernel PCA, PPCA and the GP-LVM, when the values for [math]\displaystyle{ \,\mathbf{S} }[/math] and [math]\displaystyle{ \,\mathbf{K} }[/math] are appropriately chosen. In the case of kernel PCA, [math]\displaystyle{ \,\mathbf{S} }[/math] is a non-linear kernel and [math]\displaystyle{ \,\mathbf{K} }[/math] is the linear kernel and, in the case of the GP-LVM, it is the other way around. The GP-LVM formulation is harder to optimize as compared to kernel PCA, because solving an eigenvalue problem is no longer sufficient for finding the solution. However, the GP-LVM formulation provides a probabilistic interpretation that kernel PCA does not provide.

To solve it, the author used gradient-based optimization (gradient-based methods are described by Antony Jameson here), and used deterministic dimensionality reduction to generate initial values. So, not only is this objective function non-convex, but each evaluation of it is very costly, requiring the inversion of the kernel matrix, which is an operation of the order [math]\displaystyle{ \,O(N^3) }[/math].

To speed up the optimization process, the author presented sparsification techniques (a relevant paper by Garg et al. on gradient descent with sparsification is available here) to infer which subset of the data is deemed to contain the most information. Specifically, the author based his approach on the informative vector machine algorithm<ref name="L2003">Neil D. Lawrence, Matthias Seeger and Ralf Herbrich. Fast sparse Gaussian process methods: The informative vector machine. In Sue Becker, Sebation Thrun and Kllaus Obermayer, editors, Advances in Neural Information Processing System, volume 15, pages 625-632, Cambridge, MA, 2003. MIT Press.</ref>.

In sparsification, kernel methods are sped up by representing the data set by a subset [math]\displaystyle{ \,I }[/math] which is known as the active set. [math]\displaystyle{ \,I }[/math] has [math]\displaystyle{ \,d \lt N }[/math] points, and the other points in the full set are denoted by [math]\displaystyle{ \,J }[/math]. The points in [math]\displaystyle{ \,I }[/math] can be selected using the informative vector machine (IVM) algorithm (implementation details for it are given in Lawrence et al.<ref name="L2003"/>). The likelihood of the active set is [math]\displaystyle{ \,p(\mathbf{Y}_I) = \frac{1}{(2\pi)^{\frac{D}{2}}|\mathbf{K}_{I,I}|^{\frac{1}{2}}}\exp(-\frac{1}{2}\textrm{tr}(\mathbf{K}_{I,I}^{-1}\mathbf{Y}_I\mathbf{Y}_I^T)) }[/math], which can be optimized with respect to the kernel’s parameters and [math]\displaystyle{ \,X_I }[/math] using gradient evaluations at a cost of [math]\displaystyle{ \,O(d^3) }[/math], which is much less than the prohibitive cost of [math]\displaystyle{ \,O(N^3) }[/math] in the case of using the full model.

The following pseudo-code (taken from the author's paper listed in Reference) summarizes the GP-LVM algorithm for visualization data:

The following figure (also taken from the author's paper listed in Reference) provides a simplified view of GP-LVM:

Results

The author empirically evaluates GP-LVM on several datasets. He begins with an experiment using simulated oil data, where each point is 12 dimensional, and the points can be classified into one of three flow rates---stratified, annular and homogeneous. However, the author uses only a reduced dataset of 100 points in order to make the problem computationally tractable. If we evaluate the performance of GP-LVM against PCA through classification error, using a nearest-neighbor classifier that classified each data point to the class of its nearest neighbor in the two dimensional latent-space [math]\displaystyle{ \,X }[/math], PCA had 20 errors, kernel PCA had 13 errors, and the GP-LVM performed the best with 4 errors. Furthermore, due to the above formulation of the GP-LVM, the level of uncertainty is shared across all [math]\displaystyle{ \,D }[/math] dimensions and so can be visualized in the latent-space [math]\displaystyle{ \,X }[/math].

The following figures (taken from the author's paper) provide visualizations of the oil data. Figures a), b), and f) show the two-dimensional latent-spaces obtained by PCA (a linear GP-LVM), a GP-LVM that uses an RBF kernel, and kernel PCA, respectively.

File:a.jpg File:b.jpg File:f.jpg

From the above figures, one can clearly see that GP-LVM with an RBF kernel provides the best demarcation between the three flow rates.

Thus, while there is some improvement in classification, it is not remarkable. This is likely due, in part, to the fact that PCA is used to initialize the GP-LVM algorithm.

The author then applies the sparsified GP-LVM to all 1000 oil data points; he compares it with GTM and PCA. We see that the sparsification process required to make the GP-LVM tractable degrades the performance considerably.

However, while the paper shows that the GP-LVM does not provide a significantly better method for classification, other domains have already put the model to use. For example, immediately after this paper was published, other researchers in the field of animation (style-based inverse kinematics) used the GP-LVM on motion capture data to interpolate natural poses.

Conclusion

The GP-LVM is a novel approach to probabilistic latent embedding, which is distinct from other approaches. It is probabilistic and therefore it can be used to evaluate likelihoods, handle missing data and illustrate areas of confidence in the latent embedding. Further, it is non-linear, and can consider different types of noise and kernel types. However, solving the optimization problem is difficult. It does not learn manifolds effectively, and it is computationally expensive so it does not scale well. Ultimately, however, the paper has already proved useful, particularly for missing data problems.

Code

Software for implementing GP-LVM can be found at the author's home page [1].

Reference

Neil Lawrence. Probabilistic Non-linear Principal Component Analysis with Gaussian Process Latent Variable Models. Journal of Machine Learning Research 6 (2005) 1783–1816. November, 2005. <references />