consistency of Trace Norm Minimization: Difference between revisions

m (Conversion script moved page Consistency of Trace Norm Minimization to consistency of Trace Norm Minimization: Converting page titles to lowercase) |

|||

| (106 intermediate revisions by 6 users not shown) | |||

| Line 1: | Line 1: | ||

==Introduction== | ==Introduction== | ||

In many applications, including collaborative filtering | In many applications, including [http://en.wikipedia.org/wiki/Collaborative_filtering collaborative filtering], [http://en.wikipedia.org/wiki/Multi-task_learning multi-task learning], and [http://en.wikipedia.org/wiki/Document_classification classification], the main objective is to estimate a low-[http://en.wikipedia.org/wiki/Rank_%28linear_algebra%29 rank] rectangular matrix. More formally, this problem can be written as follows: | ||

<center><math>\min_{W\in \mathbb{R}^{p \times q}} \frac{1}{2n} \sum_{i=1}^n (z_i - \textrm{tr}W^TM_i)^2 \;\; \textrm{subject} \; \textrm{to} \;\; \textrm{rank}(W) \leq \delta, | <center><math>\min_{W\in \mathbb{R}^{p \times q}} \frac{1}{2n} \sum_{i=1}^n (z_i - \textrm{tr}(W^TM_i))^2 \;\; \textrm{subject} \; \textrm{to} \;\; \textrm{rank}(W) \leq \delta, | ||

</math></center> | </math></center> | ||

where <math>\,(z_i,M_i),</math> <math>i = 1, \ldots, n,</math> are given observations. Unfortunately, this is a difficult problem to solve because of the rank constraint. If the rank function can be replaced by a convex function then the optimization problem becomes much easier to solve. However, this convex function must still generate low rank solutions. Given these two objectives, many authors have proposed using the trace norm as an alternative to the rank function. The trace norm, or nuclear norm, of a matrix, is the sum of its singular values and it is the convex envelope (the largest lower bounding convex function) of the rank function over the unit ball of the spectral norm. Since the trace norm is the convex envelope of the rank function, adding the trace norm as a regularization term to the matrix approximation problem should still give low rank solutions. It is then natural to explore what happens when the data is actually generated by a low rank matrix. Under what conditions will the trace norm regularization problem accurately approximate the underlying matrix and under what conditions will the rank be accurately approximated? The author of this paper<ref name="B2008a">F. Bach. Consistency of Trace Norm Minimization. ''Journal of Machine Learning Research'', 8:1019-1048, 2008.</ref> explores these questions and provides necessary and sufficient conditions for rank consistency. | where <math>\,(z_i,M_i),</math> <math>i = 1, \ldots, n,</math> are given observations. Unfortunately, this is a difficult problem to solve because of the rank constraint. | ||

In fact, the rank-minimization problem is generally NP-hard<ref name="fazel2004">Fazel, M. and Hindi, H. and Boyd, S. Rank minimization and applications in system theory. Proceedings of the 2004 IEEE American Control Conference (4):3273--3278, 2004.</ref>. | |||

If the rank function can be replaced by a [http://en.wikipedia.org/wiki/Convex_function convex function] then the optimization problem becomes much easier to solve. However, this convex function must still generate low rank solutions. Given these two objectives, many authors have proposed using the [http://en.wikipedia.org/wiki/Singular_Value_Decomposition#Ky_Fan_norms trace norm] as an alternative to the rank function. | |||

In other words, because using the rank-function results in an NP-hard problem, we resort to the trace norm as a proxy measure for the rank. | |||

The trace norm, or nuclear norm, of a matrix, is the sum of its [http://en.wikipedia.org/wiki/Singular_value singular values] and it is the convex envelope (the largest lower bounding convex function) of the rank function over the unit ball of the spectral norm. Since the trace norm is the convex envelope of the rank function, adding the trace norm as a regularization term to the matrix approximation problem should still give low rank solutions. It is then natural to explore what happens when the data is actually generated by a low rank matrix. Under what conditions will the trace norm regularization problem accurately approximate the underlying matrix and under what conditions will the rank be accurately approximated? The author of this paper<ref name="B2008a">F. Bach. Consistency of Trace Norm Minimization. ''Journal of Machine Learning Research'', 8:1019-1048, 2008.</ref> explores these questions and provides necessary and sufficient conditions for rank consistency. | |||

<br /><br/> | <br /><br/> | ||

The use of the trace norm regularization term in place of the rank function in approximating low rank matrices is similar to the use of the <math>l_1</math>-norm regularization term in place of the <math>l_0</math>-norm in approximating sparse vectors. In this case, the main objective is to estimate vectors with low cardinality. However, optimization problems with <math>l_0</math>-norm constraints are hard to solve. Instead the <math>l_1</math>-norm is often used as a regularization term to induce sparse loadings since the <math>l_1</math>-norm is the convex | The use of the trace norm regularization term in place of the rank function in approximating low-rank matrices is similar to the use of the <math>\,l_1</math>-norm (more details [http://mathworld.wolfram.com/L1-Norm.html here]) regularization term in place of the <math>\,l_0</math>-norm (more details [http://en.wikipedia.org/wiki/L0_norm#Zero_norm here]) in approximating [http://www.cs.umd.edu/Outreach/hsContest99/questions/node3.html sparse vectors]. In this case, the main objective is to estimate vectors with low [http://en.wikipedia.org/wiki/Dimension_%28vector_space%29 cardinality]. However, optimization problems with <math>\,l_0</math>-norm constraints are hard to solve. Instead the <math>\,l_1</math>-norm is often used as a regularization term to induce sparse loadings (vector coefficients), since the <math>\,l_1</math>-norm is the convex envelope of the <math>\,l_0</math>-norm on the unit ball. For least squares regression, this regularization scheme is known as the [http://en.wikipedia.org/wiki/Least_squares#LASSO_method LASSO]<ref name="T1994">R. Tibshirani. Regression shrinkage and selection via the lasso. ''Journal Royal Statistics'', 58(1): 267-299, 1994.</ref>. Once again we are interested in whether this procedure can consistently estimate sparse vectors and their sparsity patterns. Recent work by Yuan and Lin<ref name="YL2007">M. Yuan and Y. Lin. On the non-negative garrotte estimator. ''Journal of The Royal Statistical Society Series B'', 69(2): 143-161, 2007.</ref>, Zhao and You<ref name="ZY2006">P. Zhao and B. Yu. On model selection consistency of lasso. ''Journal of Machine Learning Research'', 7:2541-2563, 2006.</ref> and Zou<ref name="Z2006">H. Zou. The adaptive lasso and its oracle properties. ''Journal of the American Statistical Association'', 101:1418-1429, December 2006.</ref> looked at this question for the LASSO procedure. Similar work by Bach<ref name="B2008b">F.R. Bach. Consistency of the group lasso and multiple kernel learning. ''Journal of Machine Learning Research, 9:1179-1225, 2009.</ref> looked at this question for the group Lasso procedure (more details regarding it can be found in Friedman ''et al.'' | ||

's [http://www-stat.stanford.edu/~tibs/ftp/sparse-grlasso.pdf paper]). Given the similarities between the low rank matrix approximation problem and the sparse vector approximation problem (one such similarity is detailed in Shen ''et al.'''s [http://webdocs.cs.ualberta.ca/~mahdavif/ReadingGroup/Papers/sparsePCA.pdf paper] titled ''Sparse principal component analysis via regularized low rank matrix approximation''), the author compared the consistency results that he obtained with the corresponding results for the LASSO and the group LASSO. In fact, he showed that the LASSO and the group LASSO procedures are in fact embedded in the trace norm regularization procedure itself, and he found that the consistency conditions are merely extensions of the results obtained for the LASSO and the group LASSO. The author also considered simulated data to test the consistency results. In addition, an adaptive version is developed by the authors that is capable of providing <math> n^{-\frac{1}{2}}</math>-consistency and rank consistency when the necessary conditions are not satisfied. | |||

==Notation== | ==Notation== | ||

Before outlining the major results of this paper, we must first introduce some notation. Throughout the remainder of this summary, all vectors will be denoted by lowercase letters | Before outlining the major results of this paper, we must first introduce some notation. Throughout the remainder of this summary, all vectors will be denoted by lowercase letters and all matrices by uppercase letters. For vectors <math>x \in \mathbb{R}^d</math>, let <math>\|\cdot\|</math> denote the Euclidean norm, that is <math>\|x\| = \|x\|_2 = (x^Tx)^{1/2}</math>. On rectangular matrices <math>M \in \mathbb{R}^{p \times q}</math>, consider the following three norms, where <math>\,\sigma</math> is the vector of singular values. | ||

<br /><br /> | <br /><br /> | ||

*The spectral norm: The largest singular value, <math>\| M \|_2 = \|\mathbf{\sigma}\|_\infty</math>. | *The spectral norm: The largest singular value, <math>\| M \|_2 = \|\mathbf{\sigma}\|_\infty</math>. | ||

*The Frobenius norm: The <math>l_2</math>-norm of singular values, <math>\| M \|_F = \|\mathbf{\sigma}\|_2 = (\textrm{tr}(M^TM))^{1/2}</math>. | *The Frobenius norm: The <math>\,l_2</math>-norm of singular values, <math>\| M \|_F = \|\mathbf{\sigma}\|_2 = (\textrm{tr}(M^TM))^{1/2}</math>. | ||

*The trace norm: The sum of singular values, <math>\| M \|_* = \|\mathbf{\sigma}\|_1</math>. | *The trace norm: The sum of singular values, <math>\| M \|_* = \|\mathbf{\sigma}\|_1</math>. | ||

<br/> | <br/> | ||

Next, we define several other operators on the matrix <math>\,M</math>. Given a matrix <math>M \in \mathbb{R}^{p \times q}</math>, let <math>\,\textrm{vec}(M)</math> denote the vector in <math>\mathbb{R}^{pq}</math> obtained by stacking its columns into a single vector; and <math>A \otimes B</math> denote the Kronecker product between matrices <math>A \in \mathbb{R}^{p_1 \times q_1}</math> and <math>B \in \mathbb{R}^{p_2 \times q_2}</math> where the Kronecker product is defined as <center><math>A \otimes B = \begin{bmatrix} | Next, we define several other operators on the matrix <math>\,M</math>. Given a matrix <math>M \in \mathbb{R}^{p \times q}</math>, let <math>\,\textrm{vec}(M)</math> denote the vector in <math>\mathbb{R}^{pq}</math> obtained by stacking its columns into a single vector; and <math>A \otimes B</math> denote the [http://en.wikipedia.org/wiki/Kronecker_product Kronecker product] between matrices <math>A \in \mathbb{R}^{p_1 \times q_1}</math> and <math>B \in \mathbb{R}^{p_2 \times q_2}</math> where the Kronecker product is defined as <center><math>A \otimes B = \begin{bmatrix} | ||

a_{11}B & a_{12}B & \ldots & a_{1q_1}B \\ | a_{11}B & a_{12}B & \ldots & a_{1q_1}B \\ | ||

a_{21}B & a_{22}B & \ldots & a_{2q_1}B \\ | a_{21}B & a_{22}B & \ldots & a_{2q_1}B \\ | ||

| Line 26: | Line 29: | ||

</math></center> | </math></center> | ||

<br /><br /> | <br /><br /> | ||

Finally, we introduce the following standard asymptotic notation. A random variable <math>\,Z_n</math> is said to be of order <math>\,O_p(a_n)</math> if for any <math>\,\eta > 0</math>, there exists a <math>\,M > 0</math> such that <math>\sup_nP(|Z_n| > Ma_n) < \eta</math>. <math>\,Z_n</math> is said to be | Finally, we introduce the following standard asymptotic notation. A random variable <math>\,Z_n</math> is said to be of order <math>\,O_p(a_n)</math> if for any <math>\,\eta > 0</math>, there exists a <math>\,M > 0</math> such that <math>\sup_nP(|Z_n| > Ma_n) < \eta</math>. <math>\,Z_n</math> is said to be of order <math>\,o_p(a_n)</math> if <math>\,\frac{Z_n}{a_n}</math> converges to zero in probability. In other words, <math>\,Z_n</math> is of order <math>\,o_p(a_n)</math> if <math>P(|Z_n| \geq \eta a_n) \rightarrow 0</math> as <math>n \rightarrow \infty</math> for any <math>\,\eta > 0</math>. | ||

==Problem Formulation== | ==Problem Formulation== | ||

Suppose we are given <math>\,n</math> observations <math>\,(M_i,z_i)</math>, <math>i = 1,\ldots, n</math> and we want to predict each <math>\,z_i</math> as a linear function of <math>M_i \in \mathbb{R}^{p \times q}</math> using as few entries of <math>\,M_i</math> as possible. This optimization problem, which we will refer to as the trace norm matrix approximation problem, can be written formally as | Suppose we are given <math>\,n</math> observations <math>\,(M_i,z_i)</math>, <math>i = 1,\ldots, n</math> and we want to predict each <math>\,z_i</math> as a linear function of <math>M_i \in \mathbb{R}^{p \times q}</math> using as few entries of <math>\,M_i</math> as possible. This optimization problem, which we will refer to as the trace norm matrix approximation problem, can be written formally as | ||

<center><math>\min_{W \in \mathbb{R}^{p \times q}} \frac{1}{2n} \sum_{i=1}^n (z_i -\textrm{tr}W^TM_i)^2 + \lambda_n\|W\|_* | <center><math>\min_{W \in \mathbb{R}^{p \times q}} \frac{1}{2n} \sum_{i=1}^n (z_i -\textrm{tr}W^TM_i)^2 + \lambda_n\|W\|_* \;\;\; (1) | ||

</math></center><br /> | </math></center><br /> | ||

where the trace norm regularization term is used as a heuristic to minimize the rank of <math>\,W</math>. Note that, if <math>M_i = \textrm{Diag}(x_i) \in \mathbb{R}^{m \times m}</math> then the solution, <math>\,\hat{W}</math>, will be a diagonal matrix and in this case <math>\,\|W\|_*</math> reduces to the <math>l_1</math> norm of the diagonal. The optimization can be rewritten as | , where the trace norm regularization term is used as a heuristic to minimize the rank of <math>\,W</math>. Note that, if <math>M_i = \textrm{Diag}(x_i) \in \mathbb{R}^{m \times m}</math> then the solution, <math>\,\hat{W}</math>, will be a diagonal matrix and in this case <math>\,\|W\|_*</math> reduces to the <math>\,l_1</math> norm of the diagonal. The optimization can be rewritten as | ||

<center><math>\min_{W \in \mathbb{R}^{p \times q}} \frac{1}{2n} \sum_{i=1}^n (z_i -w^Tx_i)^2 + \lambda_n\|w\|_1, | <center><math>\min_{W \in \mathbb{R}^{p \times q}} \frac{1}{2n} \sum_{i=1}^n (z_i -w^Tx_i)^2 + \lambda_n\|w\|_1, | ||

</math></center> | </math></center> | ||

which is the | which is the LASSO formulation. So, the consistency results for the trace norm estimate should be extensions of the LASSO consistency results. In fact, the author showed that the trace norm consistency results are the same as the LASSO consistency results when the <math>\,M_i</math>'s are diagonal. The group LASSO is another special case embedded in the trace norm matrix approximation problem. If <math>x_{ij} \in \mathbb{R}^{d_j}</math> for <math>j = 1,\ldots,m,i = 1, \ldots, n</math> and each <math>M_{i} \in \mathbb{R}^{\sum_{j=1}^md_j \times m}</math> is a block diagonal matrix, then the solution will have the same structure. The resulting simplification of the trace norm matrix approximation problem is the group LASSO formulation. Once again, the author was able to show how his consistency results matched the previous group LASSO consistency results. | ||

==Assumptions== | ==Assumptions== | ||

Before we can derive the consistency results for <math>\hat{W}</math>, the solution to the matrix approximation problem, we must first make several assumptions about the sampling distributions of <math>M_i \in \mathbb{R}^{p \times q}</math>. Let <math>\hat\Sigma_{mm} = \frac{1}{n}\textrm{vec}(M_i)\textrm{vec}({M_i})^T \in \mathbb{R}^{pq \times pq}</math> then<br/> | Before we can derive the consistency results for <math>\hat{W}</math>, the solution to the matrix approximation problem, we must first make several assumptions about the [http://en.wikipedia.org/wiki/Sampling_distribution sampling distributions] of each <math>M_i \in \mathbb{R}^{p \times q}</math>. Let <math>\hat\Sigma_{mm} = \frac{1}{n}\textrm{vec}(M_i)\textrm{vec}({M_i})^T \in \mathbb{R}^{pq \times pq}</math>, then<br/> | ||

#Suppose the <math>n</math> values of <math>z_i</math> are <math>i.i.d.</math>, the <math>\,M_i</math>, <math>i = 1,\ldots,n</math> are given and there exists a <math>\textbf{W} \in \mathbb{R}^{p \times q}</math> such that for all <math>\,i</math>, <math>\mathbb{E}(z_i | M_1,\ldots, M_n) = \textrm{tr}\textbf{W}^TM_i</math> and <math>\textrm{var}(z_i | M_1, \ldots, M_n) = \sigma^2</math> where <math>\,\sigma^2</math> is a strictly positive constant. <math>\textbf{W}</math> is not equal to zero and does not have full rank. So, | #Suppose the <math>\,n</math> values of <math>\,z_i</math> are [http://en.wikipedia.org/wiki/Independent_and_identically_distributed_random_variables <math>i.i.d.</math>], the <math>\,M_i</math>, <math>i = 1,\ldots,n</math> are given and there exists a <math>\textbf{W} \in \mathbb{R}^{p \times q}</math> such that for all <math>\,i</math>, <math>\mathbb{E}(z_i | M_1,\ldots, M_n) = \textrm{tr}\textbf{W}^TM_i</math> and <math>\textrm{var}(z_i | M_1, \ldots, M_n) = \sigma^2</math> where <math>\,\sigma^2</math> is a strictly positive constant. <math>\textbf{W}</math> is not equal to zero and does not have full rank. So, <math>\,rank(\textbf{W}) = \textbf{r} < \min\{p,q\}</math>.<br /><br /> | ||

#There exists an invertible matrix <math>\Sigma_{mm} \in \mathbb{R}^{pq \times pq}</math> such that <math>\mathbb{E}\|\hat{\Sigma}_{mm}-\Sigma_{mm}\|_F^2 = O(\xi_n^2)</math> for a certain sequence <math>\,\xi_n</math> that tends to zero. In other words, the expected difference between <math>\hat{\Sigma}_{mm}</math> and <math>\,\Sigma_{mm}</math> should go to zero as <math>n \rightarrow \infty</math> | #There exists an invertible matrix <math>\Sigma_{mm} \in \mathbb{R}^{pq \times pq}</math> such that <math>\mathbb{E}\|\hat{\Sigma}_{mm}-\Sigma_{mm}\|_F^2 = O(\xi_n^2)</math> for a certain sequence <math>\,\xi_n</math> that tends to zero. In other words, the expected difference between <math>\hat{\Sigma}_{mm}</math> and <math>\,\Sigma_{mm}</math> should go to zero as <math>n \rightarrow \infty</math> and <math>\hat{\Sigma}_{mm}=\frac{1}{n}\sum_{i=1}^n Vec(M_i)Vec(M_i)^T</math> <br /><br /> | ||

#The random variable <math>n^{-1/2}\sum_{i=1}^n\epsilon_i \textrm{vec}(M_i)</math> is converging in distribution to a normal distribution with mean zero and covariance matrix <math>\,\sigma^2\Sigma_{mm}</math>, where <math>\epsilon_i = z_i - \textrm{tr}\textbf{W}^TM_i</math>. | #The random variable <math>n^{-1/2}\sum_{i=1}^n\epsilon_i \textrm{vec}(M_i)</math> is converging in distribution to a normal distribution with mean zero and covariance matrix <math>\,\sigma^2\Sigma_{mm}</math>, where <math>\epsilon_i = z_i - \textrm{tr}\textbf{W}^TM_i</math>. | ||

<br/> | <br/> | ||

If the first assumption holds then, given the input matrices <math>M_i</math>, <math>i = 1,\ldots, n,</math> we have a linear prediction model | If the first assumption holds then, given the input matrices <math>\,M_i</math>, <math>i = 1,\ldots, n,</math>, we would have a linear prediction model in which the loading matrix (matrix of the coefficients) <math>\textbf{W}</math> is non-trivial and rank-deficient (more details regarding it are available in Bindel's [http://www.cs.cornell.edu/~bindel/class/cs6210-f09/lec22.pdf lecture notes]). This is the matrix we are interested in estimating. Although the second and third conditions seem restrictive, there are two cases where they are naturally satisfied. Assume the first condition holds in both cases. | ||

*Case 1: If the matrices <math>M_i</math> are sampled i.i.d., <math>z</math> and <math>M</math> have finite fourth order moments | *Case 1: If the matrices <math>\,M_i</math> are sampled i.i.d., <math>\,z</math> and <math>\,M</math> have finite fourth order [http://en.wikipedia.org/wiki/Moment_%28mathematics%29 moments] and <math>\Sigma_{mm}^{-1}</math> exists, then conditions 2 and 3 will be satisfied with <math>\,\xi_n = n^{-1/2}</math>. | ||

*Case 2: Suppose for each <math>i</math>, <math>M_i = x_iy_i^T</math> where <math>x_i</math> and <math>y_i</math> are independent | *Case 2: This situation is representative of collaborative filtering. Suppose for each <math>\,i</math>, <math>M_i = x_iy_i^T</math> where <math>\,x_i</math> and <math>\,y_i</math> are [http://en.wikipedia.org/wiki/Independence_%28probability_theory%29 independent]. If the values of <math>\,x_i</math> and <math>\,y_i</math> are both sampled <math>i.i.d</math> from [http://en.wikipedia.org/wiki/Probability_distribution distributions] with finite fourth order moments and invertible second order moment matrices ( <math>\,\Sigma_{xx}</math> and <math>\,\Sigma_{yy}</math> ) and if the <math>\,n</math> values of <math>\,M_i</math> are chosen [http://en.wikipedia.org/wiki/Uniform_distribution_%28continuous%29 uniformly] from the <math>\,n_x n_y</math> possible pairs of <math>\,x_i</math> and <math>\,y_i</math>, then conditions 2 and 3 are also satisfied with <math>\xi_n = n^{-1/2}+n_x^{-1/2}+n_y^{-1/2}</math>. | ||

== Optimality Conditions == | |||

From the expression of the subdifferential (details regarding it are available in Kusraev ''et al.'''s [http://www.math.nsc.ru/LBRT/g2/english/ssk/sub_calculus_e.pdf paper] ) of the trace norm in Proposition 21 (Appendix B) of the author's paper, the author identified the optimality condition for the original trace norm matrix approximation problem ( which is <math>\,(1)</math> above ), which the author used constantly in his paper. This optimality condition is given as follows: | |||

''Proposition'': The matrix <math>W</math> with singular value decomposition <math>W = U Diag(s)V^T</math> (with strictly | |||

positive singular values <math>s</math>) is optimal for the problem in Eq. (1) if and only if: | |||

<center><math>\hat{\Sigma}_{mm}W-\hat{\Sigma}_{mz}+\lambda_nUV^T+N=0</math></center> | |||

with <math>U^TN=0,NV=0</math> and <math>\|N\|_2\leq\lambda_n</math>. | |||

This implies notably that <math>\hat{\Sigma}_{mm}W-\hat{\Sigma}_{mz}</math> and <math>W</math> have simultaneous singular value decompositions, and the largest singular values are less than <math>\lambda</math>It should be noted that, in the LASSO case mentioned above, the usual optimality conditions would be obtained. | |||

==Consistency Results== | ==Consistency Results== | ||

The author | The author considered two types of [http://en.wikipedia.org/wiki/Consistency consistency] for <math>\hat{W}</math> defined as: | ||

*Regular consistency: The estimate <math>\hat{W}</math> is consistent if <math>P(\|\hat{W}-\textbf{W}\| \geq \epsilon) \rightarrow 0</math> as <math>n \rightarrow \infty</math> for all <math>\,\epsilon > 0</math>. | *Regular [http://en.wikipedia.org/wiki/Consistency consistency]: The estimate <math>\hat{W}</math> is consistent if <math>P(\|\hat{W}-\textbf{W}\| \geq \epsilon) \rightarrow 0</math> as <math>n \rightarrow \infty</math> for all <math>\,\epsilon > 0</math>. | ||

*Rank consistency: The estimate <math>\hat{W}</math> is rank consistent if <math>P(\textrm{rank}(\hat{W})\neq \textrm{rank}(\textbf{W})) \rightarrow 0</math> as <math>n \rightarrow \infty</math>. | *Rank [http://en.wikipedia.org/wiki/Consistency consistency]: The estimate <math>\hat{W}</math> is rank consistent if <math>P(\textrm{rank}(\hat{W})\neq \textrm{rank}(\textbf{W})) \rightarrow 0</math> as <math>n \rightarrow \infty</math>. | ||

Assuming all three conditions from the previous section hold the author | Assuming all three conditions from the previous section hold, the author obtained the following results for the consistency of <math>\hat{W}</math>. | ||

#If <math>\lambda_n</math> does not tend to zero, then the trace norm estimate <math>\hat{W}</math> is not consistent. | #If <math>\,\lambda_n</math> does not tend to zero, then the [http://en.wikipedia.org/wiki/Trace_norm trace norm] estimate <math>\hat{W}</math> is not consistent. | ||

#*<math>P(\|\hat{W}-\textbf{W}\| \geq \epsilon) \rightarrow l \neq 0</math> as <math>n \rightarrow \infty</math>. | #*<math>P(\|\hat{W}-\textbf{W}\| \geq \epsilon) \rightarrow l \neq 0</math> as <math>n \rightarrow \infty</math>. | ||

#If <math>\lambda_n</math> tends to zero faster than <math>n^{-1/2}</math>, then the estimate is consistent and its error is <math>O_p(n^{-1/2})</math> while it is not rank-consistent with probability tending to one. | #If <math>\,\lambda_n</math> tends to zero faster than <math>\,n^{-1/2}</math>, then the estimate is consistent and its error is <math>\,O_p(n^{-1/2})</math> while it is not rank-consistent with probability tending to one. | ||

#*<math>P(\|\hat{W}-\textbf{W}\| \geq \epsilon) \rightarrow 0</math> as <math>n \rightarrow \infty</math>. | #*<math>P(\|\hat{W}-\textbf{W}\| \geq \epsilon) \rightarrow 0</math> as <math>n \rightarrow \infty</math>. | ||

#*<math>P(\textrm{rank}(\hat{W})\neq \textrm{rank}(\textbf{W})) \rightarrow 1</math> as <math>n \rightarrow \infty</math> | #*<math>P(\textrm{rank}(\hat{W})\neq \textrm{rank}(\textbf{W})) \rightarrow 1</math> as <math>n \rightarrow \infty</math> | ||

#If <math>\lambda_n</math> tends to zero exactly at rate <math>n^{-1/2}</math>, then the estimate is consistent and its error is <math>O_p(n^{-1/2})</math> but the probability of estimating the correct rank is converging to a limit in (0,1). | #If <math>\,\lambda_n</math> tends to zero exactly at rate <math>\,n^{-1/2}</math>, then the estimate is consistent and its error is <math>\,O_p(n^{-1/2})</math> but the probability of estimating the correct rank is converging to a limit in <math>\,(0,1)</math>. | ||

#*<math>P(\|\hat{W}-\textbf{W}\| \geq \epsilon) \rightarrow 0</math> as <math>n \rightarrow \infty</math>. | #*<math>P(\|\hat{W}-\textbf{W}\| \geq \epsilon) \rightarrow 0</math> as <math>n \rightarrow \infty</math>. | ||

#*<math>P(\textrm{rank}(\hat{W})\neq \textrm{rank}(\textbf{W})) \rightarrow l \in (0,1)</math> as <math>n \rightarrow \infty</math> | #*<math>P(\textrm{rank}(\hat{W})\neq \textrm{rank}(\textbf{W})) \rightarrow l \in (0,1)</math> as <math>n \rightarrow \infty</math> | ||

#If <math>\lambda_n</math> tends to zero more slowly than <math>n^{-1/2}</math>, then the estimate is consistent and its rank-consistency depends on specific consistency conditions. | #If <math>\,\lambda_n</math> tends to zero more slowly than <math>\,n^{-1/2}</math>, then the estimate is consistent and its rank-consistency depends on specific consistency conditions. | ||

#*<math>P(\|\hat{W}-\textbf{W}\| \geq \epsilon) \rightarrow 0</math> as <math>n \rightarrow \infty</math>. | #*<math>P(\|\hat{W}-\textbf{W}\| \geq \epsilon) \rightarrow 0</math> as <math>n \rightarrow \infty</math>. | ||

#*<math>P(\textrm{rank}(\hat{W})\neq \textrm{rank}(\textbf{W})) \rightarrow \; 0</math> as <math>n \rightarrow \infty</math> if certain conditions hold. | #*<math>P(\textrm{rank}(\hat{W})\neq \textrm{rank}(\textbf{W})) \rightarrow \; 0</math> as <math>n \rightarrow \infty</math> if certain conditions hold. | ||

So, if <math>\lambda_n</math> tends to zero more slowly than <math>n^{-1/2}</math> there | So, if <math>\,\lambda_n</math> tends to zero more slowly than <math>\,n^{-1/2}</math>, then there would be certain conditions under which <math>\hat{W}</math> will be rank consistent. We can take a closer look at what these conditions are. First we must introduce some new notation. Let <math>\textbf{W} = \textbf{U}\textrm{Diag}(\textbf{s})\textbf{V}^T</math> be the [http://en.wikipedia.org/wiki/Singular_value_decomposition singular value decomposition] of <math>\textbf{W}</math> where <math>\textbf{U} \in \mathbb{R}^{p \times \textbf{r}}</math>, <math>\textbf{V} \in \mathbb{R}^{q \times \textbf{r}}</math> and <math>\textbf{r} < \min\{p,q\}</math> is the rank of <math>\textbf{W}</math>. Also, let <math>\textbf{U}_\perp \in \mathbb{R}^{p \times (p-\textbf{r})}</math>, and <math>\textbf{V}_\perp \in \mathbb{R}^{q \times (q-\textbf{r})}</math> be the [http://en.wikipedia.org/wiki/Orthogonal_complement orthogonal complements] of <math>\textbf{U}</math> and <math>\textbf{V}</math>. Finally, defining the matrix <math>\Lambda \in \mathbb{R}^{(p-r) \times (q-r)}</math> as | ||

<br /><br /> | <br /><br /> | ||

<center><math>\textrm{vec}(\Lambda) = ((\textbf{V}_\perp \otimes \textbf{U}_\perp)^T \Sigma_{mm}^{-1} (\textbf{V}_\perp \otimes \textbf{U}_\perp))^{-1}((\textbf{V}_\perp \otimes \textbf{U}_\perp)^T \Sigma_{mm}^{-1} (\textbf{V}_\perp \otimes \textbf{U}_\perp)\textrm{vec}(\textbf{I})) | <center><math>\textrm{vec}(\Lambda) = ((\textbf{V}_\perp \otimes \textbf{U}_\perp)^T \Sigma_{mm}^{-1} (\textbf{V}_\perp \otimes \textbf{U}_\perp))^{-1}((\textbf{V}_\perp \otimes \textbf{U}_\perp)^T \Sigma_{mm}^{-1} (\textbf{V}_\perp \otimes \textbf{U}_\perp)\textrm{vec}(\textbf{I})) | ||

</math></center><br />then | </math></center><br />, we then have the following necessary and sufficient conditions for the rank consistency of <math>\hat{W}:</math><br /><br /> | ||

*Necessary condition: <math>\|\Lambda\|_2 \leq 1</math>.<br /><br /> | *Necessary condition: <math>\|\Lambda\|_2 \leq 1</math>.<br /><br /> | ||

*Sufficient condition: <math>\|\Lambda\|_2 < 1</math>. | *Sufficient condition: <math>\|\Lambda\|_2 < 1</math>. | ||

Note that if <math>\Sigma_{mm}</math> is proportional to identity, they are always satisfied because then <math>\Lambda=0</math>. | |||

==Adaptive Version== | ==Adaptive Version== | ||

Suppose we would like a rank consistent estimator, <math>\hat{W}</math>, without any conditions such as those derived in the previous section (i.e. <math>\|\Lambda\|_2 \leq 1</math>). We can get such an estimator by modifying the trace norm matrix approximation problem. We begin by considering the least-squares estimate <math>\textrm{vec}(\hat{W}_{LS}) = \hat{\Sigma}_{mm}^{-1}\textrm{vec}(\hat{\Sigma}_{Mz})</math> where <math>\hat{\Sigma}_{Mz} = \frac{1}{n}\sum_iz_iM_i</math>. Consider the full singular value decomposition of <math>\hat{W}_{LS} = U_{LS}\textrm{Diag}(s_{LS})V_{LS}^T</math>, where <math>U_{LS}</math> and <math>V_{LS}</math> are orthogonal square matrices and the matrix <math>\textrm{Diag}(s_{LS})</math> is completed by setting the <math>r - \min\{p,q\}</math> singular values in <math>s_{LS} \in \mathbb{R}^{\min\{p,q\}}</math> to <math>n^{-1/2}</math>. For <math>\gamma \in (0,1]</math>, define | Suppose we would like a rank-consistent estimator, <math>\hat{W}</math>, without any conditions such as those derived in the previous section (i.e. <math>\|\Lambda\|_2 \leq 1</math>). We can get such an estimator by modifying the trace norm matrix approximation problem. We begin by considering the least-squares estimate <math>\textrm{vec}(\hat{W}_{LS}) = \hat{\Sigma}_{mm}^{-1}\textrm{vec}(\hat{\Sigma}_{Mz})</math> where <math>\hat{\Sigma}_{Mz} = \frac{1}{n}\sum_iz_iM_i</math>. Consider the full singular value decomposition of <math>\hat{W}_{LS} = U_{LS}\textrm{Diag}(s_{LS})V_{LS}^T</math>, where <math>\,U_{LS}</math> and <math>\,V_{LS}</math> are [http://en.wikipedia.org/wiki/Orthogonal_matrix orthogonal] square matrices and the matrix <math>\,\textrm{Diag}(s_{LS})</math> is completed by setting the <math>\,r - \min\{p,q\}</math> singular values in <math>\,s_{LS} \in \mathbb{R}^{\min\{p,q\}}</math> to <math>\,n^{-1/2}</math>. For <math>\gamma \in (0,1]</math>, define | ||

<center><math>A = U_{LS}\textrm{Diag}(s_{LS})^{-\gamma}U_{LS}^T \in \mathbb{R}^{p \times p} | <center><math>A = U_{LS}\textrm{Diag}(s_{LS})^{-\gamma}U_{LS}^T \in \mathbb{R}^{p \times p} | ||

</math></center>and | </math></center>and | ||

<center><math>B = V_{LS}\textrm{Diag}(s_{LS})^{-\gamma}V_{LS}^T \in \mathbb{R}^{q \times q}. | <center><math>B = V_{LS}\textrm{Diag}(s_{LS})^{-\gamma}V_{LS}^T \in \mathbb{R}^{q \times q}. | ||

</math></center><br /> | </math></center><br /> | ||

Then we can replace <math>\| W \|_*</math> by <math>\|AWB\|_*</math> in the trace norm matrix approximation problem to get the adaptive trace norm matrix approximation problem. This same methodology is applied in Zou | Then, we can replace <math>\| W \|_*</math> by <math>\|AWB\|_*</math> in the trace norm matrix approximation problem to get the adaptive trace norm matrix approximation problem. This same methodology is applied in Zou<ref name="Z2006"/> to derive an adaptive version of the LASSO. Our new optimization problem is | ||

<center><math>\min_{W \in \mathbb{R}^{p \times q}} \frac{1}{2n} \sum_{i=1}^n (z_i -\textrm{tr}W^TM_i)^2 + \lambda_n\|AWB\|_* | <center><math>\min_{W \in \mathbb{R}^{p \times q}} \frac{1}{2n} \sum_{i=1}^n (z_i -\textrm{tr}W^TM_i)^2 + \lambda_n\|AWB\|_*</math></center><br /> | ||

</math></center><br /> | , and the global minimum of this problem, <math>\hat{W}</math>, is both consistent and rank consistent provided that <math>\gamma \in (0,1]</math>, <math>n^{1/2}\lambda_n \rightarrow 0</math>, <math>\lambda_nn^{1/2+\gamma/2} \rightarrow \infty </math> and there is no longer any dependency on the matrix <math>\,\Lambda_2</math> for rank consistency.<br/> | ||

and the global | |||

==Simulations== | ==Simulations== | ||

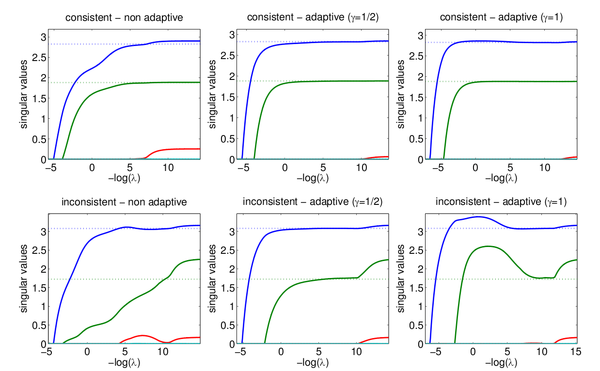

The author | The author performed some simple experiments to demonstrate the consistency results. In these experiments, he let <math>\,X</math> and <math>\,Y</math> be i.i.d data from a normal distribution and let <math>\textbf{W}</math> be a low rank matrix selected at random. He then generated <math>Z = \textrm{diag}(X^T\textbf{W}Y) + \epsilon</math>, where <math>\,\epsilon \sim N(0, \sigma)</math>. For all of the experiments, <math>\textbf{r} = 2</math> and <math>\,p = q = 4</math>, while the sample size <math>\,n</math> varied. The following figure (taken from the author's paper) shows the singular values for <math>\hat{W}</math> plotted against the true singular values indicated by the dotted lines. Both the consistent case | ||

The following figure from the paper shows the singular values for <math>\hat{W}</math> plotted against the true singular values indicated by the dotted lines. Both the consistent (<math>\|\Lambda\|_2 = 0.49 < 1</math>) and inconsistent case (<math>\|\Lambda\|_2 = 4.78 > 1</math>) | (<math>\|\Lambda\|_2 = 0.49 < 1</math>) and the inconsistent case (<math>\|\Lambda\|_2 = 4.78 > 1</math>) were considered and both the regular and adaptive trace norm penalization terms were used, where <math>\,\gamma = 1/2</math> and <math>\,\gamma = 1</math> were considered for the adaptive version. The value of <math>\,n</math> was <math>\,10^3</math>. The estimate was consistent if it was able to correctly estimate the singular values and it was rank consistent if the rank was correct, which was determined by the cardinality of the singular values. In the consistent case, in all three versions, the singular values and the rank were correctly estimated for a range of <math>\,\lambda</math> values, as was expected from the consistency results. From the diagram we can see that the range of <math>\,\lambda</math> values for which the rank was correctly estimated was much narrower for the regularized version versus the adaptive versions. The results from the inconsistent case were also reflective of the consistency results. | ||

[[File:CTNM1.png|600px|center|thumbnail]] | [[File:CTNM1.png|600px|center|thumbnail]] | ||

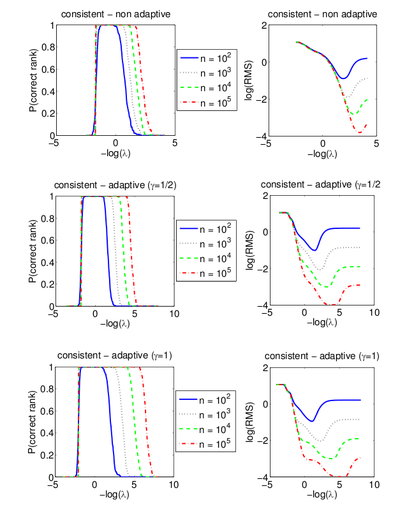

As another test to illustrate the consistency results, the author | As another test to illustrate the consistency results, the author used the rank-consistent case from the first experiment and for <math>\,n = 10^2, 10^3, 10^4</math> and <math>\,10^5</math> computed the paths from <math>\,200</math> replications. The following figure, also taken from the author's paper, shows the results, where the plots on the left are the proportion of estimates that correctly estimated the rank while the plots on the right are the logarithms of the average root mean squared estimation errors. | ||

[[File:CTNM2.png|400px|center|thumbnail]] | [[File:CTNM2.png|400px|center|thumbnail]] | ||

These simulations can be reproduced using MATLAB code available from http://www.di.ens.fr/~fbach/tracenorm. | |||

==Further Reading== | |||

Searching for low-rank representations of data, (the field of [http://en.wikipedia.org/wiki/Dimension_reduction dimensionality reduction]), has broad applications. | |||

The trace norm is commonly used as proxy for the rank operator in dimensionality reduction, since the rank operator results in an NP-hard problem; so, the trace norm, too, has broad applications. | |||

But how can we be sure that switching the rank operator with the trace norm will guarantee that we will get the results that we wish (ie rank minimization)? | |||

For more information about the conditions for when the trace norm is an appropriate proxy, see <ref name="recht2010">Null Space Conditions and Thresholds for Rank Minimization (full and updated version of "Necessary and Sufficient Conditions for Success of the Nuclear Norm Heuristic for Rank Minimization"). Benjamin Recht, Weiyu Xu, and Babak Hassibi. To appear in Mathematical Programming Revised, 2010.</ref> | |||

==References== | ==References== | ||

<references /> | <references /> | ||

Latest revision as of 09:45, 30 August 2017

Introduction

In many applications, including collaborative filtering, multi-task learning, and classification, the main objective is to estimate a low-rank rectangular matrix. More formally, this problem can be written as follows:

where [math]\displaystyle{ \,(z_i,M_i), }[/math] [math]\displaystyle{ i = 1, \ldots, n, }[/math] are given observations. Unfortunately, this is a difficult problem to solve because of the rank constraint.

In fact, the rank-minimization problem is generally NP-hard<ref name="fazel2004">Fazel, M. and Hindi, H. and Boyd, S. Rank minimization and applications in system theory. Proceedings of the 2004 IEEE American Control Conference (4):3273--3278, 2004.</ref>.

If the rank function can be replaced by a convex function then the optimization problem becomes much easier to solve. However, this convex function must still generate low rank solutions. Given these two objectives, many authors have proposed using the trace norm as an alternative to the rank function.

In other words, because using the rank-function results in an NP-hard problem, we resort to the trace norm as a proxy measure for the rank.

The trace norm, or nuclear norm, of a matrix, is the sum of its singular values and it is the convex envelope (the largest lower bounding convex function) of the rank function over the unit ball of the spectral norm. Since the trace norm is the convex envelope of the rank function, adding the trace norm as a regularization term to the matrix approximation problem should still give low rank solutions. It is then natural to explore what happens when the data is actually generated by a low rank matrix. Under what conditions will the trace norm regularization problem accurately approximate the underlying matrix and under what conditions will the rank be accurately approximated? The author of this paper<ref name="B2008a">F. Bach. Consistency of Trace Norm Minimization. Journal of Machine Learning Research, 8:1019-1048, 2008.</ref> explores these questions and provides necessary and sufficient conditions for rank consistency.

The use of the trace norm regularization term in place of the rank function in approximating low-rank matrices is similar to the use of the [math]\displaystyle{ \,l_1 }[/math]-norm (more details here) regularization term in place of the [math]\displaystyle{ \,l_0 }[/math]-norm (more details here) in approximating sparse vectors. In this case, the main objective is to estimate vectors with low cardinality. However, optimization problems with [math]\displaystyle{ \,l_0 }[/math]-norm constraints are hard to solve. Instead the [math]\displaystyle{ \,l_1 }[/math]-norm is often used as a regularization term to induce sparse loadings (vector coefficients), since the [math]\displaystyle{ \,l_1 }[/math]-norm is the convex envelope of the [math]\displaystyle{ \,l_0 }[/math]-norm on the unit ball. For least squares regression, this regularization scheme is known as the LASSO<ref name="T1994">R. Tibshirani. Regression shrinkage and selection via the lasso. Journal Royal Statistics, 58(1): 267-299, 1994.</ref>. Once again we are interested in whether this procedure can consistently estimate sparse vectors and their sparsity patterns. Recent work by Yuan and Lin<ref name="YL2007">M. Yuan and Y. Lin. On the non-negative garrotte estimator. Journal of The Royal Statistical Society Series B, 69(2): 143-161, 2007.</ref>, Zhao and You<ref name="ZY2006">P. Zhao and B. Yu. On model selection consistency of lasso. Journal of Machine Learning Research, 7:2541-2563, 2006.</ref> and Zou<ref name="Z2006">H. Zou. The adaptive lasso and its oracle properties. Journal of the American Statistical Association, 101:1418-1429, December 2006.</ref> looked at this question for the LASSO procedure. Similar work by Bach<ref name="B2008b">F.R. Bach. Consistency of the group lasso and multiple kernel learning. Journal of Machine Learning Research, 9:1179-1225, 2009.</ref> looked at this question for the group Lasso procedure (more details regarding it can be found in Friedman et al.

's paper). Given the similarities between the low rank matrix approximation problem and the sparse vector approximation problem (one such similarity is detailed in Shen et al.'s paper titled Sparse principal component analysis via regularized low rank matrix approximation), the author compared the consistency results that he obtained with the corresponding results for the LASSO and the group LASSO. In fact, he showed that the LASSO and the group LASSO procedures are in fact embedded in the trace norm regularization procedure itself, and he found that the consistency conditions are merely extensions of the results obtained for the LASSO and the group LASSO. The author also considered simulated data to test the consistency results. In addition, an adaptive version is developed by the authors that is capable of providing [math]\displaystyle{ n^{-\frac{1}{2}} }[/math]-consistency and rank consistency when the necessary conditions are not satisfied.

Notation

Before outlining the major results of this paper, we must first introduce some notation. Throughout the remainder of this summary, all vectors will be denoted by lowercase letters and all matrices by uppercase letters. For vectors [math]\displaystyle{ x \in \mathbb{R}^d }[/math], let [math]\displaystyle{ \|\cdot\| }[/math] denote the Euclidean norm, that is [math]\displaystyle{ \|x\| = \|x\|_2 = (x^Tx)^{1/2} }[/math]. On rectangular matrices [math]\displaystyle{ M \in \mathbb{R}^{p \times q} }[/math], consider the following three norms, where [math]\displaystyle{ \,\sigma }[/math] is the vector of singular values.

- The spectral norm: The largest singular value, [math]\displaystyle{ \| M \|_2 = \|\mathbf{\sigma}\|_\infty }[/math].

- The Frobenius norm: The [math]\displaystyle{ \,l_2 }[/math]-norm of singular values, [math]\displaystyle{ \| M \|_F = \|\mathbf{\sigma}\|_2 = (\textrm{tr}(M^TM))^{1/2} }[/math].

- The trace norm: The sum of singular values, [math]\displaystyle{ \| M \|_* = \|\mathbf{\sigma}\|_1 }[/math].

Next, we define several other operators on the matrix [math]\displaystyle{ \,M }[/math]. Given a matrix [math]\displaystyle{ M \in \mathbb{R}^{p \times q} }[/math], let [math]\displaystyle{ \,\textrm{vec}(M) }[/math] denote the vector in [math]\displaystyle{ \mathbb{R}^{pq} }[/math] obtained by stacking its columns into a single vector; and [math]\displaystyle{ A \otimes B }[/math] denote the Kronecker product between matrices [math]\displaystyle{ A \in \mathbb{R}^{p_1 \times q_1} }[/math] and [math]\displaystyle{ B \in \mathbb{R}^{p_2 \times q_2} }[/math] where the Kronecker product is defined as

Finally, we introduce the following standard asymptotic notation. A random variable [math]\displaystyle{ \,Z_n }[/math] is said to be of order [math]\displaystyle{ \,O_p(a_n) }[/math] if for any [math]\displaystyle{ \,\eta \gt 0 }[/math], there exists a [math]\displaystyle{ \,M \gt 0 }[/math] such that [math]\displaystyle{ \sup_nP(|Z_n| \gt Ma_n) \lt \eta }[/math]. [math]\displaystyle{ \,Z_n }[/math] is said to be of order [math]\displaystyle{ \,o_p(a_n) }[/math] if [math]\displaystyle{ \,\frac{Z_n}{a_n} }[/math] converges to zero in probability. In other words, [math]\displaystyle{ \,Z_n }[/math] is of order [math]\displaystyle{ \,o_p(a_n) }[/math] if [math]\displaystyle{ P(|Z_n| \geq \eta a_n) \rightarrow 0 }[/math] as [math]\displaystyle{ n \rightarrow \infty }[/math] for any [math]\displaystyle{ \,\eta \gt 0 }[/math].

Problem Formulation

Suppose we are given [math]\displaystyle{ \,n }[/math] observations [math]\displaystyle{ \,(M_i,z_i) }[/math], [math]\displaystyle{ i = 1,\ldots, n }[/math] and we want to predict each [math]\displaystyle{ \,z_i }[/math] as a linear function of [math]\displaystyle{ M_i \in \mathbb{R}^{p \times q} }[/math] using as few entries of [math]\displaystyle{ \,M_i }[/math] as possible. This optimization problem, which we will refer to as the trace norm matrix approximation problem, can be written formally as

, where the trace norm regularization term is used as a heuristic to minimize the rank of [math]\displaystyle{ \,W }[/math]. Note that, if [math]\displaystyle{ M_i = \textrm{Diag}(x_i) \in \mathbb{R}^{m \times m} }[/math] then the solution, [math]\displaystyle{ \,\hat{W} }[/math], will be a diagonal matrix and in this case [math]\displaystyle{ \,\|W\|_* }[/math] reduces to the [math]\displaystyle{ \,l_1 }[/math] norm of the diagonal. The optimization can be rewritten as

which is the LASSO formulation. So, the consistency results for the trace norm estimate should be extensions of the LASSO consistency results. In fact, the author showed that the trace norm consistency results are the same as the LASSO consistency results when the [math]\displaystyle{ \,M_i }[/math]'s are diagonal. The group LASSO is another special case embedded in the trace norm matrix approximation problem. If [math]\displaystyle{ x_{ij} \in \mathbb{R}^{d_j} }[/math] for [math]\displaystyle{ j = 1,\ldots,m,i = 1, \ldots, n }[/math] and each [math]\displaystyle{ M_{i} \in \mathbb{R}^{\sum_{j=1}^md_j \times m} }[/math] is a block diagonal matrix, then the solution will have the same structure. The resulting simplification of the trace norm matrix approximation problem is the group LASSO formulation. Once again, the author was able to show how his consistency results matched the previous group LASSO consistency results.

Assumptions

Before we can derive the consistency results for [math]\displaystyle{ \hat{W} }[/math], the solution to the matrix approximation problem, we must first make several assumptions about the sampling distributions of each [math]\displaystyle{ M_i \in \mathbb{R}^{p \times q} }[/math]. Let [math]\displaystyle{ \hat\Sigma_{mm} = \frac{1}{n}\textrm{vec}(M_i)\textrm{vec}({M_i})^T \in \mathbb{R}^{pq \times pq} }[/math], then

- Suppose the [math]\displaystyle{ \,n }[/math] values of [math]\displaystyle{ \,z_i }[/math] are [math]\displaystyle{ i.i.d. }[/math], the [math]\displaystyle{ \,M_i }[/math], [math]\displaystyle{ i = 1,\ldots,n }[/math] are given and there exists a [math]\displaystyle{ \textbf{W} \in \mathbb{R}^{p \times q} }[/math] such that for all [math]\displaystyle{ \,i }[/math], [math]\displaystyle{ \mathbb{E}(z_i | M_1,\ldots, M_n) = \textrm{tr}\textbf{W}^TM_i }[/math] and [math]\displaystyle{ \textrm{var}(z_i | M_1, \ldots, M_n) = \sigma^2 }[/math] where [math]\displaystyle{ \,\sigma^2 }[/math] is a strictly positive constant. [math]\displaystyle{ \textbf{W} }[/math] is not equal to zero and does not have full rank. So, [math]\displaystyle{ \,rank(\textbf{W}) = \textbf{r} \lt \min\{p,q\} }[/math].

- There exists an invertible matrix [math]\displaystyle{ \Sigma_{mm} \in \mathbb{R}^{pq \times pq} }[/math] such that [math]\displaystyle{ \mathbb{E}\|\hat{\Sigma}_{mm}-\Sigma_{mm}\|_F^2 = O(\xi_n^2) }[/math] for a certain sequence [math]\displaystyle{ \,\xi_n }[/math] that tends to zero. In other words, the expected difference between [math]\displaystyle{ \hat{\Sigma}_{mm} }[/math] and [math]\displaystyle{ \,\Sigma_{mm} }[/math] should go to zero as [math]\displaystyle{ n \rightarrow \infty }[/math] and [math]\displaystyle{ \hat{\Sigma}_{mm}=\frac{1}{n}\sum_{i=1}^n Vec(M_i)Vec(M_i)^T }[/math]

- The random variable [math]\displaystyle{ n^{-1/2}\sum_{i=1}^n\epsilon_i \textrm{vec}(M_i) }[/math] is converging in distribution to a normal distribution with mean zero and covariance matrix [math]\displaystyle{ \,\sigma^2\Sigma_{mm} }[/math], where [math]\displaystyle{ \epsilon_i = z_i - \textrm{tr}\textbf{W}^TM_i }[/math].

If the first assumption holds then, given the input matrices [math]\displaystyle{ \,M_i }[/math], [math]\displaystyle{ i = 1,\ldots, n, }[/math], we would have a linear prediction model in which the loading matrix (matrix of the coefficients) [math]\displaystyle{ \textbf{W} }[/math] is non-trivial and rank-deficient (more details regarding it are available in Bindel's lecture notes). This is the matrix we are interested in estimating. Although the second and third conditions seem restrictive, there are two cases where they are naturally satisfied. Assume the first condition holds in both cases.

- Case 1: If the matrices [math]\displaystyle{ \,M_i }[/math] are sampled i.i.d., [math]\displaystyle{ \,z }[/math] and [math]\displaystyle{ \,M }[/math] have finite fourth order moments and [math]\displaystyle{ \Sigma_{mm}^{-1} }[/math] exists, then conditions 2 and 3 will be satisfied with [math]\displaystyle{ \,\xi_n = n^{-1/2} }[/math].

- Case 2: This situation is representative of collaborative filtering. Suppose for each [math]\displaystyle{ \,i }[/math], [math]\displaystyle{ M_i = x_iy_i^T }[/math] where [math]\displaystyle{ \,x_i }[/math] and [math]\displaystyle{ \,y_i }[/math] are independent. If the values of [math]\displaystyle{ \,x_i }[/math] and [math]\displaystyle{ \,y_i }[/math] are both sampled [math]\displaystyle{ i.i.d }[/math] from distributions with finite fourth order moments and invertible second order moment matrices ( [math]\displaystyle{ \,\Sigma_{xx} }[/math] and [math]\displaystyle{ \,\Sigma_{yy} }[/math] ) and if the [math]\displaystyle{ \,n }[/math] values of [math]\displaystyle{ \,M_i }[/math] are chosen uniformly from the [math]\displaystyle{ \,n_x n_y }[/math] possible pairs of [math]\displaystyle{ \,x_i }[/math] and [math]\displaystyle{ \,y_i }[/math], then conditions 2 and 3 are also satisfied with [math]\displaystyle{ \xi_n = n^{-1/2}+n_x^{-1/2}+n_y^{-1/2} }[/math].

Optimality Conditions

From the expression of the subdifferential (details regarding it are available in Kusraev et al.'s paper ) of the trace norm in Proposition 21 (Appendix B) of the author's paper, the author identified the optimality condition for the original trace norm matrix approximation problem ( which is [math]\displaystyle{ \,(1) }[/math] above ), which the author used constantly in his paper. This optimality condition is given as follows:

Proposition: The matrix [math]\displaystyle{ W }[/math] with singular value decomposition [math]\displaystyle{ W = U Diag(s)V^T }[/math] (with strictly

positive singular values [math]\displaystyle{ s }[/math]) is optimal for the problem in Eq. (1) if and only if:

with [math]\displaystyle{ U^TN=0,NV=0 }[/math] and [math]\displaystyle{ \|N\|_2\leq\lambda_n }[/math].

This implies notably that [math]\displaystyle{ \hat{\Sigma}_{mm}W-\hat{\Sigma}_{mz} }[/math] and [math]\displaystyle{ W }[/math] have simultaneous singular value decompositions, and the largest singular values are less than [math]\displaystyle{ \lambda }[/math]It should be noted that, in the LASSO case mentioned above, the usual optimality conditions would be obtained.

Consistency Results

The author considered two types of consistency for [math]\displaystyle{ \hat{W} }[/math] defined as:

- Regular consistency: The estimate [math]\displaystyle{ \hat{W} }[/math] is consistent if [math]\displaystyle{ P(\|\hat{W}-\textbf{W}\| \geq \epsilon) \rightarrow 0 }[/math] as [math]\displaystyle{ n \rightarrow \infty }[/math] for all [math]\displaystyle{ \,\epsilon \gt 0 }[/math].

- Rank consistency: The estimate [math]\displaystyle{ \hat{W} }[/math] is rank consistent if [math]\displaystyle{ P(\textrm{rank}(\hat{W})\neq \textrm{rank}(\textbf{W})) \rightarrow 0 }[/math] as [math]\displaystyle{ n \rightarrow \infty }[/math].

Assuming all three conditions from the previous section hold, the author obtained the following results for the consistency of [math]\displaystyle{ \hat{W} }[/math].

- If [math]\displaystyle{ \,\lambda_n }[/math] does not tend to zero, then the trace norm estimate [math]\displaystyle{ \hat{W} }[/math] is not consistent.

- [math]\displaystyle{ P(\|\hat{W}-\textbf{W}\| \geq \epsilon) \rightarrow l \neq 0 }[/math] as [math]\displaystyle{ n \rightarrow \infty }[/math].

- If [math]\displaystyle{ \,\lambda_n }[/math] tends to zero faster than [math]\displaystyle{ \,n^{-1/2} }[/math], then the estimate is consistent and its error is [math]\displaystyle{ \,O_p(n^{-1/2}) }[/math] while it is not rank-consistent with probability tending to one.

- [math]\displaystyle{ P(\|\hat{W}-\textbf{W}\| \geq \epsilon) \rightarrow 0 }[/math] as [math]\displaystyle{ n \rightarrow \infty }[/math].

- [math]\displaystyle{ P(\textrm{rank}(\hat{W})\neq \textrm{rank}(\textbf{W})) \rightarrow 1 }[/math] as [math]\displaystyle{ n \rightarrow \infty }[/math]

- If [math]\displaystyle{ \,\lambda_n }[/math] tends to zero exactly at rate [math]\displaystyle{ \,n^{-1/2} }[/math], then the estimate is consistent and its error is [math]\displaystyle{ \,O_p(n^{-1/2}) }[/math] but the probability of estimating the correct rank is converging to a limit in [math]\displaystyle{ \,(0,1) }[/math].

- [math]\displaystyle{ P(\|\hat{W}-\textbf{W}\| \geq \epsilon) \rightarrow 0 }[/math] as [math]\displaystyle{ n \rightarrow \infty }[/math].

- [math]\displaystyle{ P(\textrm{rank}(\hat{W})\neq \textrm{rank}(\textbf{W})) \rightarrow l \in (0,1) }[/math] as [math]\displaystyle{ n \rightarrow \infty }[/math]

- If [math]\displaystyle{ \,\lambda_n }[/math] tends to zero more slowly than [math]\displaystyle{ \,n^{-1/2} }[/math], then the estimate is consistent and its rank-consistency depends on specific consistency conditions.

- [math]\displaystyle{ P(\|\hat{W}-\textbf{W}\| \geq \epsilon) \rightarrow 0 }[/math] as [math]\displaystyle{ n \rightarrow \infty }[/math].

- [math]\displaystyle{ P(\textrm{rank}(\hat{W})\neq \textrm{rank}(\textbf{W})) \rightarrow \; 0 }[/math] as [math]\displaystyle{ n \rightarrow \infty }[/math] if certain conditions hold.

So, if [math]\displaystyle{ \,\lambda_n }[/math] tends to zero more slowly than [math]\displaystyle{ \,n^{-1/2} }[/math], then there would be certain conditions under which [math]\displaystyle{ \hat{W} }[/math] will be rank consistent. We can take a closer look at what these conditions are. First we must introduce some new notation. Let [math]\displaystyle{ \textbf{W} = \textbf{U}\textrm{Diag}(\textbf{s})\textbf{V}^T }[/math] be the singular value decomposition of [math]\displaystyle{ \textbf{W} }[/math] where [math]\displaystyle{ \textbf{U} \in \mathbb{R}^{p \times \textbf{r}} }[/math], [math]\displaystyle{ \textbf{V} \in \mathbb{R}^{q \times \textbf{r}} }[/math] and [math]\displaystyle{ \textbf{r} \lt \min\{p,q\} }[/math] is the rank of [math]\displaystyle{ \textbf{W} }[/math]. Also, let [math]\displaystyle{ \textbf{U}_\perp \in \mathbb{R}^{p \times (p-\textbf{r})} }[/math], and [math]\displaystyle{ \textbf{V}_\perp \in \mathbb{R}^{q \times (q-\textbf{r})} }[/math] be the orthogonal complements of [math]\displaystyle{ \textbf{U} }[/math] and [math]\displaystyle{ \textbf{V} }[/math]. Finally, defining the matrix [math]\displaystyle{ \Lambda \in \mathbb{R}^{(p-r) \times (q-r)} }[/math] as

, we then have the following necessary and sufficient conditions for the rank consistency of [math]\displaystyle{ \hat{W}: }[/math]

- Necessary condition: [math]\displaystyle{ \|\Lambda\|_2 \leq 1 }[/math].

- Sufficient condition: [math]\displaystyle{ \|\Lambda\|_2 \lt 1 }[/math].

Note that if [math]\displaystyle{ \Sigma_{mm} }[/math] is proportional to identity, they are always satisfied because then [math]\displaystyle{ \Lambda=0 }[/math].

Adaptive Version

Suppose we would like a rank-consistent estimator, [math]\displaystyle{ \hat{W} }[/math], without any conditions such as those derived in the previous section (i.e. [math]\displaystyle{ \|\Lambda\|_2 \leq 1 }[/math]). We can get such an estimator by modifying the trace norm matrix approximation problem. We begin by considering the least-squares estimate [math]\displaystyle{ \textrm{vec}(\hat{W}_{LS}) = \hat{\Sigma}_{mm}^{-1}\textrm{vec}(\hat{\Sigma}_{Mz}) }[/math] where [math]\displaystyle{ \hat{\Sigma}_{Mz} = \frac{1}{n}\sum_iz_iM_i }[/math]. Consider the full singular value decomposition of [math]\displaystyle{ \hat{W}_{LS} = U_{LS}\textrm{Diag}(s_{LS})V_{LS}^T }[/math], where [math]\displaystyle{ \,U_{LS} }[/math] and [math]\displaystyle{ \,V_{LS} }[/math] are orthogonal square matrices and the matrix [math]\displaystyle{ \,\textrm{Diag}(s_{LS}) }[/math] is completed by setting the [math]\displaystyle{ \,r - \min\{p,q\} }[/math] singular values in [math]\displaystyle{ \,s_{LS} \in \mathbb{R}^{\min\{p,q\}} }[/math] to [math]\displaystyle{ \,n^{-1/2} }[/math]. For [math]\displaystyle{ \gamma \in (0,1] }[/math], define

and

Then, we can replace [math]\displaystyle{ \| W \|_* }[/math] by [math]\displaystyle{ \|AWB\|_* }[/math] in the trace norm matrix approximation problem to get the adaptive trace norm matrix approximation problem. This same methodology is applied in Zou<ref name="Z2006"/> to derive an adaptive version of the LASSO. Our new optimization problem is

, and the global minimum of this problem, [math]\displaystyle{ \hat{W} }[/math], is both consistent and rank consistent provided that [math]\displaystyle{ \gamma \in (0,1] }[/math], [math]\displaystyle{ n^{1/2}\lambda_n \rightarrow 0 }[/math], [math]\displaystyle{ \lambda_nn^{1/2+\gamma/2} \rightarrow \infty }[/math] and there is no longer any dependency on the matrix [math]\displaystyle{ \,\Lambda_2 }[/math] for rank consistency.

Simulations

The author performed some simple experiments to demonstrate the consistency results. In these experiments, he let [math]\displaystyle{ \,X }[/math] and [math]\displaystyle{ \,Y }[/math] be i.i.d data from a normal distribution and let [math]\displaystyle{ \textbf{W} }[/math] be a low rank matrix selected at random. He then generated [math]\displaystyle{ Z = \textrm{diag}(X^T\textbf{W}Y) + \epsilon }[/math], where [math]\displaystyle{ \,\epsilon \sim N(0, \sigma) }[/math]. For all of the experiments, [math]\displaystyle{ \textbf{r} = 2 }[/math] and [math]\displaystyle{ \,p = q = 4 }[/math], while the sample size [math]\displaystyle{ \,n }[/math] varied. The following figure (taken from the author's paper) shows the singular values for [math]\displaystyle{ \hat{W} }[/math] plotted against the true singular values indicated by the dotted lines. Both the consistent case ([math]\displaystyle{ \|\Lambda\|_2 = 0.49 \lt 1 }[/math]) and the inconsistent case ([math]\displaystyle{ \|\Lambda\|_2 = 4.78 \gt 1 }[/math]) were considered and both the regular and adaptive trace norm penalization terms were used, where [math]\displaystyle{ \,\gamma = 1/2 }[/math] and [math]\displaystyle{ \,\gamma = 1 }[/math] were considered for the adaptive version. The value of [math]\displaystyle{ \,n }[/math] was [math]\displaystyle{ \,10^3 }[/math]. The estimate was consistent if it was able to correctly estimate the singular values and it was rank consistent if the rank was correct, which was determined by the cardinality of the singular values. In the consistent case, in all three versions, the singular values and the rank were correctly estimated for a range of [math]\displaystyle{ \,\lambda }[/math] values, as was expected from the consistency results. From the diagram we can see that the range of [math]\displaystyle{ \,\lambda }[/math] values for which the rank was correctly estimated was much narrower for the regularized version versus the adaptive versions. The results from the inconsistent case were also reflective of the consistency results.

As another test to illustrate the consistency results, the author used the rank-consistent case from the first experiment and for [math]\displaystyle{ \,n = 10^2, 10^3, 10^4 }[/math] and [math]\displaystyle{ \,10^5 }[/math] computed the paths from [math]\displaystyle{ \,200 }[/math] replications. The following figure, also taken from the author's paper, shows the results, where the plots on the left are the proportion of estimates that correctly estimated the rank while the plots on the right are the logarithms of the average root mean squared estimation errors.

These simulations can be reproduced using MATLAB code available from http://www.di.ens.fr/~fbach/tracenorm.

Further Reading

Searching for low-rank representations of data, (the field of dimensionality reduction), has broad applications. The trace norm is commonly used as proxy for the rank operator in dimensionality reduction, since the rank operator results in an NP-hard problem; so, the trace norm, too, has broad applications. But how can we be sure that switching the rank operator with the trace norm will guarantee that we will get the results that we wish (ie rank minimization)? For more information about the conditions for when the trace norm is an appropriate proxy, see <ref name="recht2010">Null Space Conditions and Thresholds for Rank Minimization (full and updated version of "Necessary and Sufficient Conditions for Success of the Nuclear Norm Heuristic for Rank Minimization"). Benjamin Recht, Weiyu Xu, and Babak Hassibi. To appear in Mathematical Programming Revised, 2010.</ref>

References

<references />