Poison Frogs Neural Networks


== Presented by ==

Eric Anderson, Chengzhi Wang, YiJing Zhou, Kai Zhong

== Introduction ==

''Poison Frogs! Targeted Clean-Label Poisoning Attacks on Neural Networks'' (NeurIPS 2018) was written by Ali Shafahi, W. Ronny Huang, Mahyar Najibi, Octavian Suciu, Christoph Studer, Tudor Dumitras, and Tom Goldstein.

In a data poisoning attack, an attacker adds examples (poisons) to a training set to manipulate the model’s behavior at test time. Deep learning models must be able to tolerate such poisons if they are to be deployed in high-stakes, security-critical situations. ''Targeted'' attacks aim to manipulate classifier behavior only with respect to a specific test instance, and ''clean-label'' attacks do not require the attacker to have control over the poison’s labeling.

This paper presents a method for crafting poisons that carry out ''targeted'', ''clean-label'' poisoning attacks on neural networks, along with techniques to boost lethality. The proposed poisoning technique achieves a 100% success rate on a pretrained InceptionV3 network and up to a 70% success rate on an end-to-end trained, scaled-down AlexNet architecture when using watermarks and multiple poison instances.

== Previous Work ==

Comprehensive studies on ''classical'' poisoning attacks, which aim to degrade classifier accuracy indiscriminately, have been conducted for support vector machines [1] and Bayesian classifiers [2]. The authors highlight Chen et al.’s 2017 study [3] for its advancements in targeted poisoning attacks at relatively low cost (~50 training examples needed), but note that its backdoor attack method still requires instances to be modified at test time. Finally, prior work exists on targeted attacks on neural networks [4], but the method it proposes requires large doses (>12.5% per mini-batch) to succeed.

== Motivation ==

The previous section shows that while there are studies related to poisoning attacks on SVMs and Bayesian classifiers, poisons for deep neural networks (DNNs) have rarely been studied, with the few existing studies indicating that DNNs are extremely susceptible to poisoning attacks [5].

Furthermore, poisons studied prior to this paper fall into at least one of the following categories:

*Focus on classical attacks that degrade model accuracy indiscriminately

*Require test-time instances to be manually modified

*Require the attacker to have some degree of control over the labelling of the training set

*Only achieve acceptable success rates with high poison doses

The proposal of targeted, clean-label poisons in this paper thus opens the door to more efficient, deadly poisons that future neural networks will have to find ways to tolerate.

== Basic Concept ==

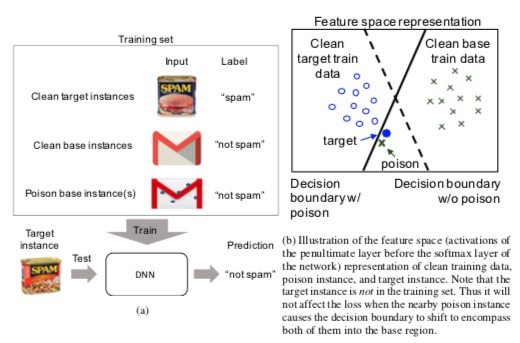

Crafting the basic concoction assumes the attacker has no knowledge of the training data but does have knowledge of the model they intend to poison. A base instance '''b''' is chosen from the training set and a target instance '''t''' is chosen from the test set. The goal is for the poisoned model to misclassify '''t''' as the class of '''b''' at test time, while the poison '''p''' remains indistinguishable from '''b''' to a human data labeller.

[[File:Picture1.jpg | center]]

<div align="center">Figure 1 (a) Schematic of the clean-label poisoning attack. (b) Schematic of how a successful attack might work by shifting the decision boundary.</div>

This leads to:

<math>
\mathbf{p} = \underset{\mathbf{x}}{\operatorname{argmin}} \; \|f(\mathbf{x}) - f(\mathbf{t})\|_2^2 + \beta \|\mathbf{x}-\mathbf{b}\|_2^2
</math>

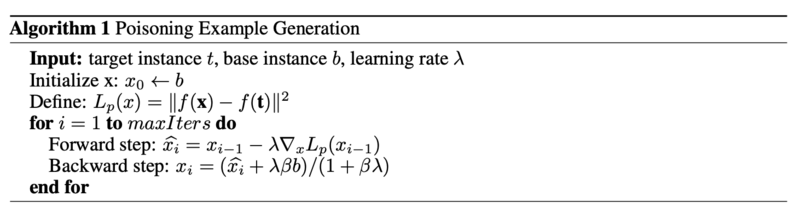

Here ''f'' is the function that propagates an input '''x''' through the network to the penultimate layer. The first term minimizes the difference between the poison and the target instance '''t''' in the eyes of the model, while the second term minimizes the difference between the poison and the base instance '''b''' to a human. The authors then use the following algorithm for optimization:

[[File:Algorithm1.png | center]]

Human assistance is needed to tune β so that the poison still resembles the base instance to someone who labels the data.
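As a rough illustration of the optimization above, the sketch below runs a forward-backward-splitting loop: a gradient step on the feature-collision term, followed by a closed-form step that pulls the iterate back toward the base. It is a minimal sketch under stated assumptions, not the authors' implementation. The network is replaced by a hypothetical random linear feature map <code>W</code> so that the gradient can be written by hand, and the names <code>craft_poison</code> and <code>grad_feature_loss</code> as well as the values of <code>beta</code>, <code>lr</code>, and <code>steps</code> are illustrative choices.

```python
import numpy as np


def craft_poison(grad_feature_loss, target, base, beta=0.25, lr=0.01, steps=500):
    """Sketch of a forward-backward-splitting loop for poison crafting.

    Trades off ||f(x) - f(target)||^2 against beta * ||x - base||^2.
    """
    x = base.copy()
    for _ in range(steps):
        # Forward step: gradient descent on the feature-collision term.
        x_hat = x - lr * grad_feature_loss(x, target)
        # Backward step: closed-form pull of the iterate toward the base.
        x = (x_hat + lr * beta * base) / (1.0 + lr * beta)
    return x


# Assumption: a fixed random linear map stands in for the network up to
# its penultimate layer. A real attack would use the actual model.
rng = np.random.default_rng(0)
W = rng.normal(size=(8, 32)) / np.sqrt(32)


def f(x):
    """Toy feature extractor (hypothetical)."""
    return W @ x


def grad_feature_loss(x, target):
    """Gradient of ||W x - W target||^2 with respect to x."""
    return 2.0 * W.T @ (W @ x - W @ target)


base = rng.uniform(size=32)    # stand-in for a flattened base image
target = rng.uniform(size=32)  # stand-in for a flattened target image
poison = craft_poison(grad_feature_loss, target, base)

print("feature distance, base to target:  ", np.linalg.norm(f(base) - f(target)))
print("feature distance, poison to target:", np.linalg.norm(f(poison) - f(target)))
print("input distance, poison to base:    ", np.linalg.norm(poison - base))
```

With a real network, the gradient would come from automatic differentiation rather than a hand-written expression, and β would still need to be tuned by eye as described above.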

== Boosting Poison Effectiveness ==

With the basic concoction in place, the authors introduce several methods to further boost lethality. As we will see in the results section, while the basic concoction is sufficiently deadly for a model trained by transfer learning, successful attacks on end-to-end trained models require the help of the following techniques:

*Watermarking: A low-opacity copy of the target instance (the watermark) is blended into the poison to “allow for some inseparable feature[s] [to] overlap while remaining visually distinct” (P7)

*Multiple poison instance attacks: Multiple poison instances derived from different base instances are introduced into the training set

*Targeting outliers: By targeting instances farther away from the other instances in their training class (as in Figure 1b), the authors reason that the class label should be easier to flip
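The watermarking step in the list above can be sketched as a per-pixel blend. This is a minimal sketch assuming the watermark is a convex combination of the target and base images controlled by a single opacity value. The function name <code>add_watermark</code> and the tiny all-black and all-white images are hypothetical, chosen only to make the arithmetic easy to check.

```python
import numpy as np


def add_watermark(base, target, opacity=0.3):
    """Blend a faint copy of the target image into the base image.

    Assumes both images share a shape and hold values in [0, 1].
    """
    if not 0.0 <= opacity <= 1.0:
        raise ValueError("opacity must lie in [0, 1]")
    return opacity * target + (1.0 - opacity) * base


base = np.zeros((4, 4, 3))   # hypothetical all-black base image
target = np.ones((4, 4, 3))  # hypothetical all-white target image
watermarked = add_watermark(base, target, opacity=0.3)
```

A higher opacity mixes in more of the target's features but also makes the watermark easier for a human labeller to notice.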

== Results ==

'''Attacks on Transfer Learning Trained Models'''

For this section, the model is a pretrained InceptionV3 network where all layers except the last one are frozen [6] (Footnote). Adding only one poison instance to the training set is enough to cause the target to be misclassified. The experiment was performed 1099 times, each time with a different test instance as the target, and the attack succeeded in 100% of the trials. Overall test accuracy was barely affected by the poisoning: from an original accuracy of 99.5%, it dropped by 0.2% on average and by 0.4% in the worst case.

'''Attacks on End-to-End Trained Models'''

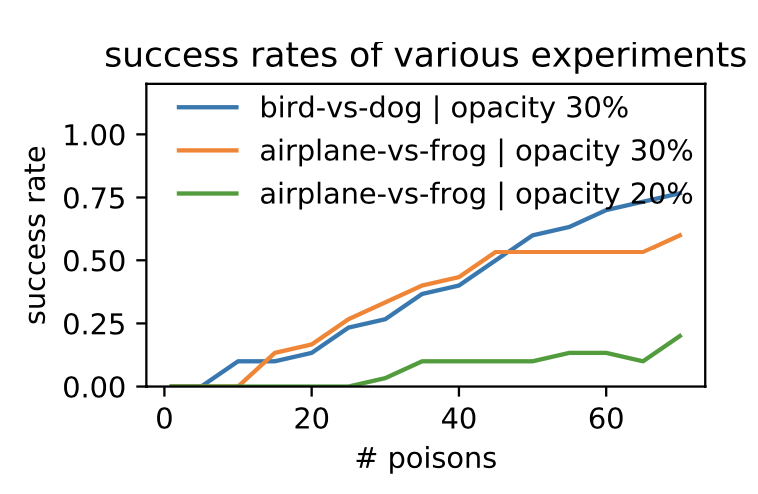

For this section, an AlexNet architecture modified for the CIFAR-10 dataset [7] was used. The target and the poison instance overlap in feature space, so the poison-crafting optimization procedure itself works. However, the decision boundary remains the same after retraining on the poisoned dataset. When retraining on the poisoned dataset, “the lower-level feature extraction kernels in the shallow layers are modified so that the poison instance is returned to the base class distribution in the deep layers” (P6). In other words, the poison exploits imperfections in the feature extraction kernels of the earlier layers to place itself alongside the target, and retraining corrects those imperfections. For the attack to succeed, the target and the poison instances must remain inseparable in the feature space during retraining. When watermarking is used to boost the attack, the success rate is over 70% for a single attack. For multiple attacks, the success rate increases with the number of poisons, and it is higher with the watermark opacity set at 30% than at 20%.

[[File:result.png | center]]

<div align="center">Figure 2: Success rate of attacks on different targets from different bases as a function of the number of poison instances used and the opacity of the target watermark added to the base instances</div>

The success rate can be increased further by targeting data outliers: because they lie far from the other training samples in their class, their class label should be easier to flip. Clean-label attacks in the end-to-end scenario required the combination of optimization via Algorithm 1, a diverse mix of poison instances, and watermarking in order to reach a decent success rate.

== Conclusion ==

The paper studied targeted clean-label poisoning methods. These attacks are difficult to detect because they involve non-suspicious (correctly labeled) training data and do not degrade performance on non-targeted examples. Poisoned images collide with a target image in the feature space, making it hard for the network to distinguish them. Multiple poisoned images and a watermarking trick make the attack more powerful. Training on the poisoned dataset makes the network more robust to base-class adversarial examples designed to be misclassified as the target. This also has the effect of causing the unaltered target instance to be misclassified as a base. Finally, the authors hope that the paper will draw attention to the important issues of data reliability and data sourcing.

== Critiques ==

Does the article exaggerate the severity and possibility of data poisoning?

*How often does the assumption that the attacker has knowledge of the model and its parameters hold? In high-risk scenarios this would also be confidential information.

*How frequently are people with malicious intent able to retrain targeted models using poisoned data sets?

*How often do attackers have access to data sources / training data to add poisoned data points?

How susceptible are different types of models? The article only talks about deep neural networks, but surely these concepts apply to other types of classifiers.

The researchers performed honest testing for both transfer learning and end-to-end trained models, but at the same time they also boosted their odds of success by selecting outliers as targets (which isn’t always feasible) and by attacking overfitted models in the case of the InceptionV3 model.

Is it possible in most scenarios to obtain a poison that can’t be discerned manually? If not, can we quantify how often such an attack is feasible?

== References ==

[1] Battista Biggio, Blaine Nelson, and Pavel Laskov. Poisoning attacks against support vector machines. ''arXiv preprint arXiv:1206.6389'', 2012.

[2] Blaine Nelson, Marco Barreno, Fuching Jack Chi, Anthony D. Joseph, Benjamin I. P. Rubinstein, Udam Saini, Charles Sutton, J. D. Tygar, and Kai Xia. Exploiting machine learning to subvert your spam filter. In ''Proceedings of the 1st Usenix Workshop on Large-Scale Exploits and Emergent Threats'', pages 7:1–7:9, Berkeley, CA, USA, 2008.

[3] Xinyun Chen, Chang Liu, Bo Li, Kimberly Lu, and Dawn Song. Targeted Backdoor Attacks on Deep Learning Systems Using Data Poisoning. ''arXiv preprint arXiv:1712.05526'', 2017. URL http://arxiv.org/abs/1712.05526.

[4] Octavian Suciu, Radu Mărginean, Yiǧitcan Kaya, Hal Daumé III, and Tudor Dumitraş. When does machine learning fail? Generalized transferability for evasion and poisoning attacks. ''arXiv preprint arXiv:1803.06975'', 2018.

[5] Jacob Steinhardt, Pang Wei Koh, and Percy Liang. Certified Defenses for Data Poisoning Attacks. ''arXiv preprint arXiv:1706.03691'', 2017. URL http://arxiv.org/abs/1706.03691.

[6] Christian Szegedy, Vincent Vanhoucke, Sergey Ioffe, Jon Shlens, and Zbigniew Wojna. Rethinking the inception architecture for computer vision. In ''Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition'', pages 2818–2826, 2016.

[7] Alex Krizhevsky and Geoffrey Hinton. Learning multiple layers of features from tiny images. 2009. URL https://www.cs.toronto.edu/~kriz/learning-features-2009-TR.pdf.

Footnote: Researchers' code for attacking a transfer learning trained model: https://github.com/ashafahi/inceptionv3-transferLearn-poison

Latest revision as of 18:29, 26 November 2021
