Evaluating Machine Accuracy on ImageNet: Difference between revisions

| (73 intermediate revisions by 42 users not shown) | |||

| Line 3: | Line 3: | ||

== Introduction == | == Introduction == | ||

ImageNet is the most influential | ImageNet is one of the most influential datasets in machine learning, with a million human-annotated images and corresponding labels for over 1000 classes. This paper intends to explore the causes of the performance differences between human experts and machine learning models, more specifically CNNs, on ImageNet. | ||

Firstly, some images | Firstly, some images could belong to multiple classes. As a result, it is possible to underestimate the performance if we assign each image with only one label, which is what is being done in the top-1 metric. On the other hand, the top-5 metric looks at the top five predictions by the model for an image and checks if the target label is within those five predictions (Krizhevsky, Sutskever, & Hinton). Therefore, we adopt both top-1 and top-5 metrics where the performances of models, unlike human labelers, are linearly correlated in both cases. | ||

Secondly, in contrast to the uniform performance of models | Secondly, in contrast to the uniform performance of models across classes, humans tend to achieve better performance on inanimate objects. Human labelers achieve overall accuracies similar to those of the models, which indicates room for improvement on specific classes for machines. | ||

Lastly, the setup of drawing training and test sets from the same distribution may | Lastly, the setup of drawing training and test sets from the same distribution may favor models over human labelers. That is, the accuracy of multi-class prediction from models drops when the test set is drawn from a different distribution than the training set, as is the case for ImageNetV2. This shift in distribution does not cause a problem for human labelers. | ||

== Experiment Setup == | == Experiment Setup == | ||

| Line 16: | Line 16: | ||

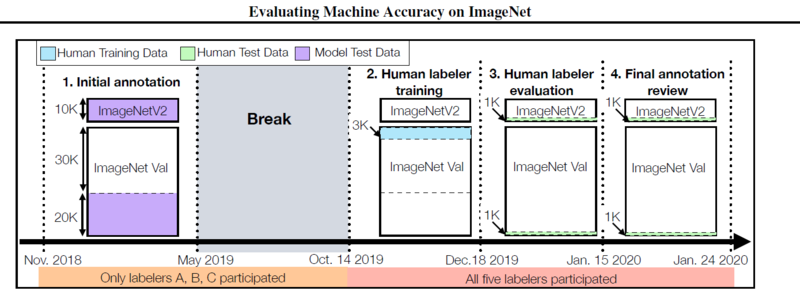

A brief overview of the four phases is as follows: | A brief overview of the four phases is as follows: | ||

[[File:Experiment Set Up.png | center]] | [[File:Experiment Set Up.png |800px| center]] | ||

=== Initial multi-label annotation === | === Initial multi-label annotation === | ||

Three labelers A, B, and C provided multi-label annotations for a subset from the ImageNet validation set, and all images from the ImageNetV2 test sets. This annotation work gave A, B, and C extensive experience with the ImageNet dataset. | Three labelers A, B, and C provided multi-label annotations for a subset of size 20,000 from the ImageNet validation set, and all 20,683 images from the ImageNetV2 test sets. This annotation work gave A, B, and C extensive experience with the ImageNet dataset. | ||

=== Human Labeler Training === | === Human Labeler Training === | ||

All five labelers trained on labeling a subset of the remaining ImageNet images. "Training" the human labelers consisted of teaching them the distinctions between very similar classes in the training set. For example, there are 118 classes of "dog" within ImageNet, and typical human participants will not have working knowledge of the name of each breed of dog, even if they can recognize and distinguish that breed from others. Local members of the American Kennel Club were even contacted to help with dog breed classification. To do this, labelers were trained on class-specific tasks for groups such as dogs, insects, monkeys, and beavers. They were also given immediate feedback on whether they were correct and were then asked where they thought they needed more training to improve. Unlike the two annotators in (Russakovsky et al., 2015), who had insufficient training data, the labelers in this experiment had up to 100 training images per class while labeling. This allowed the labelers to understand the finer details of each class. | |||

=== Human Labeler Evaluation === | === Human Labeler Evaluation === | ||

Class-balanced random samples are generated from both the ImageNet validation set and ImageNetV2. Five participants labeled these images over 28 days. | Class-balanced random samples, which contain 1,000 images from the 20,000 annotated images, are generated from both the ImageNet validation set and ImageNetV2. Five participants labeled these images over 28 days. | ||

=== Final annotation Review === | === Final annotation Review === | ||

| Line 31: | Line 31: | ||

== Multi-label annotations== | == Multi-label annotations== | ||

[[File:Categories Multilabel.png|800px|center]] | |||

<div align="center">Figure 3</div> | |||

===Top-1 accuracy=== | ===Top-1 accuracy=== | ||

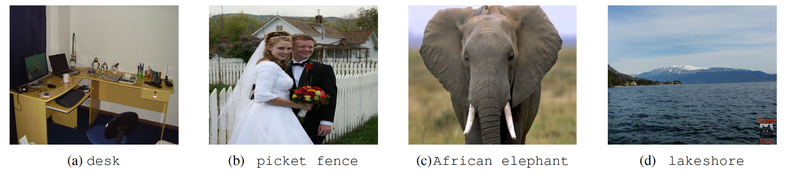

Top-1 accuracy, the standard accuracy measure used in classification studies, measures the proportion of examples for which the predicted label matches the single target label. However, many images contain more than one object; for example, Figure 3a contains a desk, laptop, keyboard, space bar, and more. Figure 3b shows a centered, prominent subject that is nevertheless labeled otherwise (people vs picket fence). A single target label is therefore overly stringent for this task: it punishes predictions that identify the main object in the image but do not match the label. | Top-1 accuracy, the standard accuracy measure used in classification studies, measures the proportion of examples for which the predicted label matches the single target label. However, many images contain more than one object; for example, Figure 3a contains a desk, laptop, keyboard, space bar, and more. Figure 3b shows a centered, prominent subject that is nevertheless labeled otherwise (people vs picket fence). A single target label is therefore overly stringent for this task: it punishes predictions that identify the main object in the image but do not match the label. | ||

===Top-5 accuracy=== | ===Top-5 accuracy=== | ||

Top-5 accuracy considers a classification correct if the object label is in the top 5 predicted labels | Top-5 accuracy considers a classification correct if the object label is in the top 5 predicted labels. Although it partially resolves the problem with Top-1 labeling, it is still not ideal since it can trivialize class distinctions. For instance, the dataset contains five turtle classes that are difficult to tell apart, so a model can list all five among its top 5 predictions without actually distinguishing them. | ||

===Multi-label accuracy=== | ===Multi-label accuracy=== | ||

The paper then proposes that for every image, the image shall have a set of target labels and a prediction; if such prediction matches one of the labels, it will be considered as correct labeling. Due to the above-discussed limitations of Top-1 and Top-5 metrics, the paper claims it is necessary for rigorous accuracy evaluation on the dataset. | The paper then proposes that for every image, the image shall have a set of target labels and a prediction; if such prediction matches one of the labels, it will be considered as correct labeling. Due to the above-discussed limitations of Top-1 and Top-5 metrics, the paper claims it is necessary for rigorous accuracy evaluation on the dataset. | ||
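The three metrics discussed above can be contrasted with a small sketch. It is an illustration under stated assumptions, not the authors' evaluation code: the function names, the toy predictions, and the label sets are all hypothetical, and each model prediction is assumed to be a list of class names ranked from most to least confident.

```python
from typing import Sequence, Set


def top1_accuracy(ranked_preds: Sequence[Sequence[str]], targets: Sequence[str]) -> float:
    """Fraction of images whose first prediction equals the single target label."""
    hits = sum(1 for preds, t in zip(ranked_preds, targets) if preds[0] == t)
    return hits / len(targets)


def top5_accuracy(ranked_preds: Sequence[Sequence[str]], targets: Sequence[str]) -> float:
    """Fraction of images whose target label appears among the first five predictions."""
    hits = sum(1 for preds, t in zip(ranked_preds, targets) if t in preds[:5])
    return hits / len(targets)


def multilabel_accuracy(ranked_preds: Sequence[Sequence[str]], label_sets: Sequence[Set[str]]) -> float:
    """Fraction of images whose first prediction is in the image's set of correct labels."""
    hits = sum(1 for preds, labels in zip(ranked_preds, label_sets) if preds[0] in labels)
    return hits / len(label_sets)


# Hypothetical toy data: four images, five ranked predictions each.
ranked = [
    ["laptop", "desk", "keyboard", "mouse", "monitor"],
    ["picket fence", "person", "lawn", "house", "gate"],
    ["tusker", "African elephant", "Indian elephant", "warthog", "hippo"],
    ["seashore", "lakeside", "pier", "boat", "dock"],
]
targets = ["desk", "picket fence", "African elephant", "lakeside"]
label_sets = [
    {"desk", "laptop", "keyboard"},
    {"picket fence"},
    {"African elephant", "tusker"},
    {"lakeside"},
]

top1 = top1_accuracy(ranked, targets)
top5 = top5_accuracy(ranked, targets)
multi = multilabel_accuracy(ranked, label_sets)
print(top1, top5, multi)
```

On this toy data the single-label top-1 score is the lowest, top-5 accepts every image, and the multi-label score sits in between because it still requires the single prediction to be one of the correct labels.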

| Line 44: | Line 48: | ||

For similar classes, the paper considers them as under the same bigger class; that is, for two similarly labeled images, classification is considered correct if the produced label matches either one of the labels. For instance, warthog, African elephant, and Indian elephant all have prominent tusks, so they are considered subclasses of tusker. Figure 3c shows a modification of labels to contain tusker as a correct label. | For similar classes, the paper considers them as under the same bigger class; that is, for two similarly labeled images, classification is considered correct if the produced label matches either one of the labels. For instance, warthog, African elephant, and Indian elephant all have prominent tusks, so they are considered subclasses of tusker. Figure 3c shows a modification of labels to contain tusker as a correct label. | ||

====Unclear Image==== | ====Unclear Image==== | ||

In certain cases such as Figure 3d, there is a distinctive difficulty to determine whether a label was correct due to ambiguities in the class hierarchy. | In certain cases such as Figure 3d, there is a distinctive difficulty to determine whether a label was correct due to ambiguities in the class hierarchy, such as differentiating between a lakeshore or a seashore. | ||

===Collecting multi-label annotations=== | ===Collecting multi-label annotations=== | ||

Participants reviewed all predictions made by the models on the dataset ImageNet and ImageNet-V2, the participants then categorized every unique prediction made by the models on the dataset into correct and incorrect labels in order to allow all images to have multiple correct labels to satisfy the above-listed method. | Participants reviewed all predictions made by the models on the dataset ImageNet and ImageNet-V2, the participants then categorized every unique prediction made by the models on the dataset into correct and incorrect labels in order to allow all images to have multiple correct labels to satisfy the above-listed method. | ||

| Line 51: | Line 56: | ||

== Human Accuracy Measurement Process == | == Human Accuracy Measurement Process == | ||

=== Bias Control === | |||

Since three participants took part in the initial round of annotation, they did not look at the data for six months in order to forget any information that they might have unintentionally memorized. In addition, two additional annotators were introduced in the final evaluation phase to ensure the fairness of the experiment. | |||

=== Human Labeler Training === | |||

The three main difficulties encountered during human labeler training are fine-grained distinctions, class unawareness, and insufficient training images. Three training regimens are therefore provided to address these problems, respectively. First, labelers are assigned extra training tasks on similar classes, with immediate feedback. Second, labelers are given the ability to search for specific classes during labeling. Finally, the training set contains a reasonable number of images for each class. Fine-grained class distinctions are especially difficult for humans. To help humans perform well on these distinctions, the training tasks contained only images from certain animal families. | |||

=== Labeling Guide === | |||

A labeling guide is constructed to distill class analysis learned during training into discriminative traits that could be used as a reference during the final labeling evaluation. | |||

=== Final Evaluation and Review === | |||

Two samples, each containing 1000 images, are drawn from ImageNet and ImageNetV2, respectively. They are sampled in a class-balanced manner and shuffled together. Over 28 days, all five participants labeled all images, spending a median of 26 seconds per image. After labeling was completed, an additional multi-label annotation session was conducted, in which human predictions for all images were manually reviewed. Compared to the initial round of labeling, 37% of the labels changed due to participants' greater familiarity with the classes. | |||
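A class-balanced sample of the kind described above can be sketched as follows. This is a minimal illustration, not the authors' sampling script: the function name `class_balanced_sample`, the `(image_id, label)` input format, and the fixed seed are assumptions made here.

```python
import random
from collections import defaultdict
from typing import Iterable, List, Tuple


def class_balanced_sample(
    image_labels: Iterable[Tuple[str, str]], per_class: int = 1, seed: int = 0
) -> List[str]:
    """Draw the same number of images from every class, then shuffle the result.

    Raises ValueError (from random.sample) if a class has fewer than
    `per_class` images.
    """
    rng = random.Random(seed)

    # Group image ids by their class label.
    by_class = defaultdict(list)
    for image_id, label in image_labels:
        by_class[label].append(image_id)

    # Sample without replacement inside each class.
    sample: List[str] = []
    for label in sorted(by_class):
        sample.extend(rng.sample(by_class[label], per_class))

    # Shuffle so that images are not presented grouped by class.
    rng.shuffle(sample)
    return sample


# Hypothetical pool: three classes with four images each.
pool = [(f"{c}_{i}", c) for c in ("cat", "dog", "fox") for i in range(4)]
print(class_balanced_sample(pool, per_class=2))
```

With 1000 classes and `per_class=1`, this would give a 1000-image sample with one image per class; samples drawn from two datasets could then be concatenated and shuffled together before labeling.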

== Main Results == | == Main Results == | ||

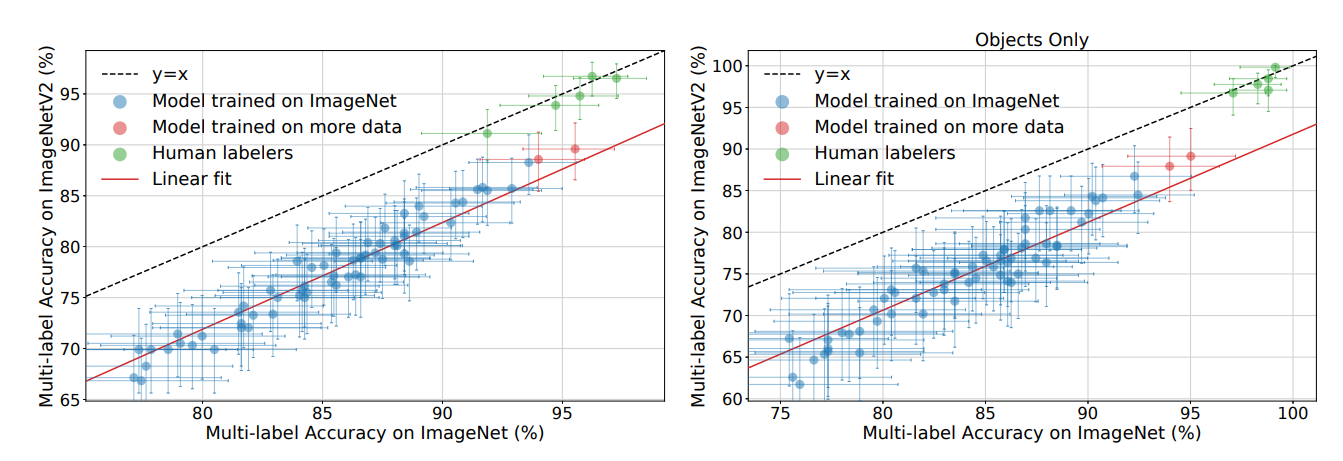

[[File:Evaluating Machine Accuracy on ImageNet Figure 1.png | center]] | |||

<div align="center">Figure 1</div> | |||

===Comparison of Human and Machine Accuracies on Image Net=== | |||

From Figure 1, we can see that the difference in accuracies between the datasets is within 1% for all human participants. As hypothesized, human testers indeed performed better than the automated models on both datasets. It's worth noticing that labelers D and E, who did not participate in the initial annotation period, actually performed better than the best automated model. | |||

===Comparison of Human and Machine Accuracies on Image Net=== | |||

Based on the results shown in Figure 1, we can see that the confidence intervals of the best 4 human participants and the 4 best models overlap; however, McNemar's paired test yields a p-value of 0.037, which rejects the hypothesis that the FixResNeXt model and human labeler E have the same accuracy on the ImageNet validation dataset. Figure 1 also shows that the confidence intervals of the labeling accuracies for human labelers C, D, and E do not overlap with the confidence interval of the best model on ImageNet-V2. With McNemar's test yielding a p-value of <math>2\times 10^{-4}</math>, the hypothesis that humans and machine models have the same robustness to distribution shifts ought to be rejected. | |||
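For readers unfamiliar with McNemar's paired test, the sketch below shows the exact (binomial) two-sided variant on per-image correctness flags. It is an assumption that this variant is comparable to what the paper used, since the summary does not say which form of the test was applied; the function name and the toy data are hypothetical.

```python
from math import comb
from typing import Sequence


def mcnemar_exact(correct_a: Sequence[bool], correct_b: Sequence[bool]) -> float:
    """Two-sided exact McNemar p-value for two labelers scored on the same images.

    Only discordant images matter: those where exactly one of the two is correct.
    Under the null hypothesis of equal accuracy, each discordant image is equally
    likely to favor either labeler.
    """
    b = sum(1 for a, m in zip(correct_a, correct_b) if a and not m)  # A right, B wrong
    c = sum(1 for a, m in zip(correct_a, correct_b) if not a and m)  # A wrong, B right
    n = b + c
    if n == 0:
        return 1.0  # no disagreement, so no evidence of a difference
    k = min(b, c)
    # One-sided binomial tail P(X <= k) with X ~ Binomial(n, 0.5), then doubled.
    tail = sum(comb(n, i) for i in range(k + 1)) / 2 ** n
    return min(1.0, 2 * tail)


# Hypothetical example: 8 images favor the human, 1 favors the model, 91 are ties.
human = [True] * 8 + [False] * 1 + [True] * 91
model = [False] * 8 + [True] * 1 + [True] * 91
print(mcnemar_exact(human, model))
```

The test uses the pairing between the two labelers on each image, which is why it can detect a difference even when the separately computed confidence intervals overlap.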

== Other Observations == | == Other Observations == | ||

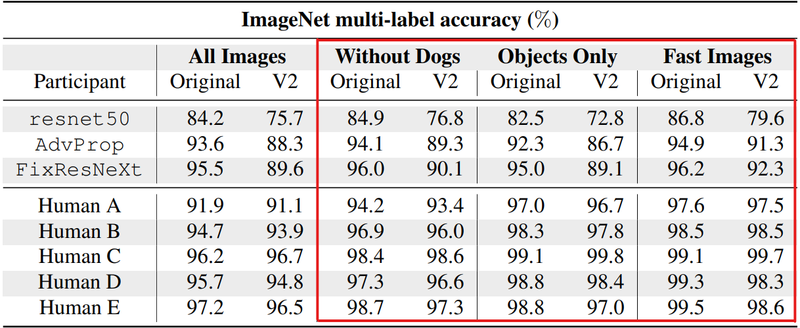

[[File: Results_Summary_Table.png| center]] | [[File: Results_Summary_Table.png| 800px|center]] | ||

=== Difficult Images === | === Difficult Images === | ||

| Line 64: | Line 88: | ||

=== Accuracies without dogs === | === Accuracies without dogs === | ||

As previously discussed in the paper, machine learning models tend to outperform human labelers when classifying the 118 dog classes. To better understand to what extent | As previously discussed in the paper, machine learning models tend to outperform human labelers when classifying the 118 dog classes. To better understand to what extent models outperform human labelers, researchers computed the accuracies again by excluding all the dog classes. Results showed a 0.6% increase in accuracy on the ImageNet images using the best model and a 1.1% increase on the ImageNet V2 images. In comparison, the mean increases in accuracy for human labelers are 1.9% and 1.8% on the ImageNet and ImageNet V2 images respectively. Researchers also conducted a simulation to demonstrate that the increase in human labeling accuracy on non-dog images is significant. This simulation was done by bootstrapping to estimate the changes in accuracy when only using data for the non-dog classes, and simulation results show smaller increases than in the experiment. | ||

In conclusion, it's more difficult for human labelers to classify images with dogs than it is for machine learning models. | In conclusion, it's more difficult for human labelers to classify images with dogs than it is for machine learning models. | ||
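The summary does not describe the bootstrap simulation in detail, so the following is only one plausible sketch of how bootstrapping can quantify the change in accuracy when dog classes are excluded. It is not the authors' procedure: the function names, the percentile interval, and the toy data are all assumptions made for illustration.

```python
import random
from typing import List, Sequence, Tuple


def accuracy(flags: Sequence[bool]) -> float:
    """Fraction of True entries."""
    return sum(flags) / len(flags)


def bootstrap_gain(
    correct: Sequence[bool], is_dog: Sequence[bool], n_boot: int = 1000, seed: int = 0
) -> Tuple[float, float]:
    """Percentile 95% interval for (non-dog accuracy - overall accuracy).

    Each bootstrap replicate resamples images with replacement and recomputes
    the gain obtained by dropping the dog images from that replicate.
    """
    rng = random.Random(seed)
    n = len(correct)
    gains: List[float] = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]
        all_flags = [correct[i] for i in idx]
        non_dog = [correct[i] for i in idx if not is_dog[i]]
        if non_dog:  # skip the rare replicate that contains only dog images
            gains.append(accuracy(non_dog) - accuracy(all_flags))
    if not gains:
        raise ValueError("no replicate contained a non-dog image")
    gains.sort()
    lo = gains[int(0.025 * len(gains))]
    hi = gains[int(0.975 * len(gains)) - 1]
    return lo, hi


# Hypothetical labeler: 85 of 90 non-dog images correct, 2 of 10 dog images correct.
correct = [True] * 85 + [False] * 5 + [True] * 2 + [False] * 8
is_dog = [False] * 90 + [True] * 10
print(bootstrap_gain(correct, is_dog))
```

An interval that stays above zero would suggest that the gain from excluding dogs is not just sampling noise for that labeler.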

| Line 88: | Line 112: | ||

=== Multi-label annotations === | === Multi-label annotations === | ||

Stock & Cissé (2017) also studied ImageNet's multi-label nature, which aligns with the researchers' study in this paper. According to Stock & Cissé (2017), the top-1 accuracy measure could underestimate multi-label by up to 13.2%. | Stock & Cissé (2017) also studied ImageNet's multi-label nature, which aligns with the researchers' study in this paper. According to Stock & Cissé (2017), the top-1 accuracy measure could underestimate multi-label by up to 13.2%. The authors suggest that releasing these labelled data to the public will allow for more robust models in the future. | ||

=== ImageNet inconsistencies and label error === | === ImageNet inconsistencies and label error === | ||

| Line 94: | Line 118: | ||

=== Distribution shift === | === Distribution shift === | ||

There has been an increasing amount of studies in this area. One focus of the studies is distributionally robust optimization (DRO), which finds the model that has the smallest worst-case expected error over a set of probability distributions. Another focus is on finding the model with the lowest error rates on adversarial examples. Work in both areas has been productive, but none was shown to resolve the drop in accuracies between ImageNet and ImageNet V2. | There has been an increasing amount of studies in this area. One focus of the studies is distributionally robust optimization (DRO), which finds the model that has the smallest worst-case expected error over a set of probability distributions. Another focus is on finding the model with the lowest error rates on adversarial examples. Work in both areas has been productive, but none was shown to resolve the drop in accuracies between ImageNet and ImageNet V2. A recent [https://papers.nips.cc/paper/2019/file/8558cb408c1d76621371888657d2eb1d-Paper.pdf paper] also discusses quantifying uncertainty under a distribution shift, in other words whether the output of probabilistic deep learning models should or should not be trusted. | ||
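As a notational aid, the DRO objective described above is commonly written as the following min-max problem. The symbols are assumptions introduced here for illustration and do not come from the paper: <math>\theta</math> denotes the model parameters, <math>\ell</math> a loss function, and <math>\mathcal{U}</math> the set of candidate probability distributions.

<math>\min_{\theta} \; \sup_{Q \in \mathcal{U}} \; \mathbb{E}_{(x, y) \sim Q}\left[\ell(\theta; x, y)\right]</math>

The inner supremum picks the worst-case distribution in <math>\mathcal{U}</math>, and the outer minimization selects the parameters whose worst-case expected error is smallest.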

== Conclusion and Future Work == | == Conclusion and Future Work == | ||

=== Conclusion === | === Conclusion === | ||

Researchers noted that in order to achieve truly reliable machine learning, | Researchers noted that in order to achieve truly reliable machine learning, they need a deeper understanding of the range of parameters where the model still remains robust. Techniques from combinatorics and sensitivity analysis, in particular, might yield fruitful results. This study has provided valuable insights into the desired robustness properties by comparing model performance to human performance. This is especially evident given the results of the experiment, which show humans drastically outperforming machine learning in many cases and raise the question of how much accuracy one is willing to give up in exchange for efficiency. The results have shown that current performance benchmarks do not address robustness to small and natural distribution shifts, which are easily handled by humans. | ||

=== Future work === | === Future work === | ||

Other than improving the robustness of models, researchers should consider investigating if less-trained human labelers can achieve a similar level of robustness to distributional shifts. In addition, researchers can study the robustness to temporal changes, which is another form of natural distribution shift (Gu et al., 2019; Shankar et al., 2019). | Other than improving the robustness of models, researchers should consider investigating if less-trained human labelers can achieve a similar level of robustness to distributional shifts. Since the accuracy of untrained labeling machines may be lower in the system, this will further illustrate that human robustness is a more stable attribute than direct accuracy measurement. In addition, researchers can study the robustness to temporal changes, which is another form of natural distribution shift (Gu et al., 2019; Shankar et al., 2019). Also, Convolutional Neural Network can be a candidate to improve the accuracy of classifying images. | ||

== Critiques == | == Critiques == | ||

# The method of using humans to classify ImageNet is fully circular, since the labels of ImageNet were themselves originally annotated by human beings. In fact, the classification scheme itself is intrinsically a human construction. It is not logical to test human performance with human performance. This circular construction completely violates scientific principles. | |||

# Table 1 simply showed a difference in ImageNet multi-label accuracy yet does not give an explicit reason as to why such a difference is present. Although the paper suggested the distribution shift has caused the difference, it does not give other factors to concretely explain why the distribution shift was the cause. | # Table 1 simply showed a difference in ImageNet multi-label accuracy yet does not give an explicit reason as to why such a difference is present. Although the paper suggested the distribution shift has caused the difference, it does not give other factors to concretely explain why the distribution shift was the cause. | ||

# With the recommendation to future machine evaluations, the paper proposed to "Report performances on dogs, other animals, and inanimate objects separately.". Despite its intentions, it is narrowly specific and requires further generalization for it to be convincing. | # With the recommendation to future machine evaluations, the paper proposed to "Report performances on dogs, other animals, and inanimate objects separately.". Despite its intentions, it is narrowly specific and requires further generalization for it to be convincing. | ||

# Regarding the choice of human subjects, no information was given as to how they were chosen, nor was any background information provided. As this is a classification problem involving many classes as specific as species, a biology student would give far more accurate results than a computer science student or a | # Regarding the choice of human subjects, no information was given as to how they were chosen, nor was any background information provided. As this is a classification problem involving many classes as specific as species, a biology student would give far more accurate results than a computer science student or a math student. To make this study more plausible, more human labelers should be sampled. | ||

# As explaining the importance of multi-label metrics using comparison to Top-5 metric, the turtle example falls within the overall similarity (simony) classification of the multi-label evaluation metric, as such, if the Top-5 evaluation suggests any one of the turtle species were selected, the algorithm is considered to produce a correct prediction which is the intention. The example does not convey the necessity of changing to the proposed metric over the Top-5 metric. | # As explaining the importance of multi-label metrics using comparison to Top-5 metric, the turtle example falls within the overall similarity (simony) classification of the multi-label evaluation metric, as such, if the Top-5 evaluation suggests any one of the turtle species were selected, the algorithm is considered to produce a correct prediction which is the intention. The example does not convey the necessity of changing to the proposed metric over the Top-5 metric. | ||

# With the definition in the paper regarding multi-label metrics, it is hard to see why expanding the label set is different from a traditional Top-5 metric or rather necessary, ergo does not yield the claim which the proposed metric is necessary for rigorous accuracy evaluation on ImageNet. | # With the definition in the paper regarding multi-label metrics, it is hard to see why expanding the label set is different from a traditional Top-5 metric or rather necessary, ergo does not yield the claim which the proposed metric is necessary for rigorous accuracy evaluation on ImageNet. | ||

# When discussing the main results, the paper discusses the hypothesis on distribution shift having no effects on human and machine model accuracies; the presentation is poor at best with no clear centric to what they are trying to convey to how (in detail) they resulted in such claims. | # When discussing the main results, the paper discusses the hypothesis on distribution shift having no effects on human and machine model accuracies; the presentation is poor at best with no clear centric to what they are trying to convey to how (in detail) they resulted in such claims. | ||

# In the experiment setup of the presentation, there are a lot of key terms without detailed description. For example, Human labeler training using a subset of the remaining 30,000 unannotated images in the ImageNet validation set, labelers A, B, C, D, and E underwent extensive training to understand the intricacies of fine-grained class distinctions in the ImageNet class hierarchy. The authors should clarify each key term in the presentation; otherwise, it is hard for readers to follow. | |||

# Not sure how the human samplers were determined and simply picking several people will have really high bias because the sample is too small and they have different background which will definitely affect the results a lot. Also, it will be better if there are more comparisons between the model introduced and other models. | |||

# Given the low amount of human participants, it is hard to take the results seriously (there is too much variance). Also it's not exactly clear how the authors determined that the multi-label accuracy metric measures a semantically more meaningful notion of accuracy compared to its counterparts. For example, one of the issues with top-5 accuracy that they mention is: "For instance, within the dataset, five turtle classes are given which is difficult to distinguish under such classification evaluations." But it's not clear how multi-label accuracy would be better in this instance. | |||

# It is unclear how well the human labeler can perform labeling after training. So the final result is not that trust-worthy. | |||

# In this experiment set up, label annotators are the same as participants of the experiments. Even if there's a break between the annotating and evaluating human labeler evaluation, the impact of the break in reducing bias is not clear. One potential human labeling data is google's "I'm not a robot" verification test. One variation of the verification test asks users to select all the photos from 9 images that are related to a certain keyword. This allows for a more accurate measurement of human performance vs ImageNet performance. In addition, it's going to reduce the biases from the small number of experiment participants. | |||

# Following Table 2, the authors appear to try and claim that the model is better than the human labelers, simply because the model experienced a better increase in classification following the removal of dog photos than the human labelers did. However, a quick look at the table shows that most human labelers still performed better than the best model. The authors should be making the claim that the model is better at labeling dogs than the human labelers, but that the human labelers are still better overall after removing the dog classes. | |||

# The reason why human labeler outperforms CNN could be human had much more training. It would be more convincing if the paper could provide a metric in order to measure human labelers' training data set size. | |||

# Actually, in the multi-label case, it is vague to determine whether the machine learning model or the human labellers were giving the correct label. The structure of the dataset is pretty essential in training a network, in which data with uncertain label (even determined by human) should be avoided. | |||

# The authors mentioned that untrained labelers will likely be in lower accuracy, they can give a standard or definition about a well-trained labeler. | |||

# I believe the authors needed to include more information about how they determined the samples such as human samplers, and also more details on how to define unclear images. | |||

# It would be more convincing if the author could provide the criteria of being human samplers and unclear images, and the accuracy of the human labeler. | |||

# The summary only explains some model components but does not thoroughly go through the big picture of the model: data preprocessing, training, and prediction procedures. It would be nice to know the details as well. | |||

# It seems the core problem is more about the dataset itself and not the evaluation procedure. We would not have issues with top 1 and top 5 if Imagenet contained discernable classes with good labels. Of course, this is very expensive, and imagenet is an _excellent_ dataset given these constraints. It does not seem like their proposed solution, multiple labels per image, addresses their concerns properly, as other critiques have already mentioned. Furthermore, having multiple labels per image does not translate to real-life value the same way that the top 5 or top 1 metric does, as in the common case, there is one right answer for a classification problem. | |||

# The paper could provide details on ways to improve the accuracy and robustness of the model. Since the paper mentions CNN, it could provide details of the model and why CNN is a good candidate. | |||

# The accuracy of the model is directly correlated with how the images are labelled. In all multi-label annotations, the authors describe a predicted label as correct if it is within a set of "correct labels" where each image has a different number of correct labels. Perhaps it would yield better results if the model were to first identify the number of objects in the image first and then by using some form of criteria, it labels those identified objects in order of importance (i.e. objects that are closer are labelled first). The authors also never specified what criteria the model uses to "pick out" which object it will label in the image. | |||

# The paper mentions difficult images and fast images. It would be better if the paper had generalized the type of images that constitute difficult images (i.e., the paper mentions 118 dog classes, what are some general characteristics of difficult images?) In addition, it would be interesting to compare the performance between human and machine accuracy on non-fast images. | |||

# The paper meaningfully and correctly points out that the current evaluation of ML algorithms, which uses only the accuracy on ImageNet as the benchmark, is simplistic and problematic. However, the idea of comparing human performance with ML models is problematic, since it is hard or even impossible to control the various variables that can drastically change human performance: training time, domain knowledge, cognitive function, amount of workload, and various environmental factors. In order to compare different experimental methods, the most important step is to carefully control the confounding variables to reach any meaningful conclusion. | |||

# The original question would be how ImageNet was created? The images can only be labeled by human labelers. There might be some mistakes and missing details. The combination of human evaluation and machine evaluation might be helpful to resolve this problem. | |||

# Regarding the difficult problems, CatsandDogs is a very popular dataset that these days is trivial for a non-deep CNN to classify with high accuracy. The authors would do well to test this CNN with other classes, since it is inconceivable that a CNN cannot learn the characteristics of a dog in relation to the other classes in ImageNet. Why would it be able to differentiate the breeds of dogs, but not other organisms? | |||

# The model is limited by the representativeness of small samples and human participants. For the evaluation of the human group, the randomness of the sampling of participants should be confirmed first. Alternatively, the authors need to formulate a rule for comparing the accuracy of the human group and the machine group under this framework, to make sure the result is robust. | |||

# This is rather late, but I just want to point out that the relationship between top-1 accuracy and multi-label accuracy(same with top-5 accuracy and multi-label accuracy) are not illustrated very clear. Also, with ImageNet test for all the models, it should matter how multi-label accuracy is highly correlated with top-1 and top-5. How they change with respect to others can be explained a little more. | |||

== Reference == | |||

[1] Shankar, V., Roelofs, R., Mania, H., Fang, A., Recht, B., & Schmidt, L. (2020). Evaluating Machine Accuracy on ImageNet. ICML 2020. | |||

[2] Krizhevsky, A., Sutskever, I., & Hinton, G. E. ImageNet Classification with Deep Convolutional Neural Networks. 2. Retrieved from http://www.cs.toronto.edu/~fritz/absps/imagenet.pdf | |||

Latest revision as of 00:08, 15 December 2020

Presented by

Siyuan Xia, Jiaxiang Liu, Jiabao Dong, Yipeng Du

Introduction

ImageNet is one of the most influential datasets in machine learning, with a million human-annotated images and corresponding labels for over 1000 classes. This paper intends to explore the causes of the performance differences between human experts and machine learning models, more specifically CNNs, on ImageNet.

Firstly, some images could belong to multiple classes. As a result, assigning each image only one label, as the top-1 metric does, can underestimate performance. The top-5 metric, on the other hand, looks at the model's top five predictions for an image and checks whether the target label is among them (Krizhevsky, Sutskever, & Hinton). Therefore, both top-1 and top-5 metrics are adopted; the performances of models, unlike those of human labelers, are linearly correlated under both.

Secondly, in contrast to the uniform performance of models across classes, humans tend to perform better on inanimate objects. Human labelers achieve overall accuracies similar to those of the models, which indicates room for improvement for machines on specific classes.

Lastly, drawing the training and test sets from the same distribution may favor models over human labelers. That is, the accuracy of multi-class prediction from models drops when the test set, such as ImageNetV2, is drawn from a different distribution than the training set. This shift in distribution does not cause a problem for human labelers.

Experiment Setup

Overview

There are four main phases to the experiment: (i) initial multi-label annotation, (ii) human labeler training, (iii) human labeler evaluation, and (iv) final annotation review. The five authors of the paper are the participants in the experiments.

A brief overview of the four phases is as follows:

Initial multi-label annotation

Three labelers, A, B, and C, provided multi-label annotations for a subset of 20,000 images from the ImageNet validation set and for all 20,683 images from the ImageNetV2 test sets. This work gave A, B, and C extensive experience with the ImageNet dataset.

Human Labeler Training

All five labelers trained on labeling a subset of the remaining ImageNet images. "Training" the human labelers consisted of teaching them the distinctions between very similar classes in the training set. For example, there are 118 classes of "dog" within ImageNet, and typical human participants will not have working knowledge of the name of each breed even if they can recognize that breed and distinguish it from others. Local members of the American Kennel Club were even contacted to help with dog breed classification. To learn these distinctions, labelers were trained on class-specific tasks for groups such as dogs, insects, monkeys, beavers, and others. They were given immediate feedback on whether they were correct and were then asked where they thought they needed more training to improve. Unlike the two annotators in (Russakovsky et al., 2015), who had insufficient training data, the labelers in this experiment had up to 100 training images per class while labeling. This allowed the labelers to understand the finer details of each class.

Human Labeler Evaluation

Class-balanced random samples, each containing 1,000 of the annotated images, are generated from both the ImageNet validation set and ImageNetV2. The five participants labeled these images over 28 days.
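
The class-balanced sampling described above can be sketched as follows. This is only an illustration, assuming one image is drawn per class; the function name class_balanced_sample, the seed, and the toy image pool are hypothetical and not taken from the paper.

```python
import random
from collections import defaultdict

def class_balanced_sample(image_labels, per_class=1, seed=0):
    """Draw the same number of images from every class, then shuffle.

    image_labels maps an image id to its class label (hypothetical format).
    """
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for image_id, label in image_labels.items():
        by_class[label].append(image_id)

    sample = []
    for label in sorted(by_class):
        candidates = sorted(by_class[label])
        # Never ask for more images than the class actually has.
        sample.extend(rng.sample(candidates, min(per_class, len(candidates))))
    rng.shuffle(sample)
    return sample

# Toy pool: 4 classes with 5 images each (made-up ids).
pool = {f"img_{c}_{i}": f"class_{c}" for c in range(4) for i in range(5)}
sample = class_balanced_sample(pool)
print(sample)
```

With 1,000 classes and per_class=1, this kind of procedure would yield a 1,000-image sample in which every class appears exactly once.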

Final annotation Review

All labelers reviewed the additional annotations generated in the human labeler evaluation phase.

Multi-label annotations

Top-1 accuracy

Top-1 accuracy is the standard accuracy measure used in classification studies. It measures the proportion of examples for which the predicted label matches the single target label. However, many images contain more than one object that could be classified; for example, Figure 3a contains a desk, laptop, keyboard, space bar, and more. Figure 3b shows a centered, prominent subject that is nonetheless labeled otherwise (people vs. picket fence). A single target label is therefore inadequate for such a task: the metric is overly stringent and punishes predictions that identify the main object in the image but do not match its label.

Top-5 accuracy

Top-5 accuracy considers a classification correct if the target label is among the top five predicted labels. Although this partially resolves the problem with Top-1 labeling, it is still not ideal, since it can trivialize class distinctions. For instance, the dataset contains five turtle classes, which are difficult to tell apart, and Top-5 evaluation does not require a model to distinguish between them.
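
As a minimal sketch of the two metrics just described, the snippet below computes Top-1 and Top-5 accuracy from ranked predictions. The function name top_k_accuracy and the example labels are hypothetical and chosen only to mirror the figures discussed in this summary.

```python
from typing import Sequence

def top_k_accuracy(ranked_predictions: Sequence[Sequence[str]],
                   targets: Sequence[str], k: int) -> float:
    """Fraction of examples whose target label is among the first k predictions."""
    hits = sum(
        target in ranked[:k]
        for ranked, target in zip(ranked_predictions, targets)
    )
    return hits / len(targets)

# Made-up ranked outputs (most confident first) for three images.
ranked = [
    ["laptop", "desk", "keyboard", "mouse", "monitor"],
    ["groom", "suit", "bow tie", "gown", "picket fence"],
    ["box turtle", "mud turtle", "terrapin", "loggerhead", "leatherback turtle"],
]
targets = ["desk", "picket fence", "terrapin"]

top1 = top_k_accuracy(ranked, targets, k=1)
top5 = top_k_accuracy(ranked, targets, k=5)
print(top1, top5)
```

In this toy case every first guess misses the single target, yet every target appears within the first five guesses, which illustrates both the stringency of Top-1 and the leniency of Top-5.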

Multi-label accuracy

The paper then proposes that every image have a set of target labels and a single prediction; if the prediction matches any one of the labels, it is considered correct. Given the limitations of the Top-1 and Top-5 metrics discussed above, the paper claims that this multi-label metric is necessary for rigorous accuracy evaluation on the dataset.
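
The multi-label rule above can be sketched in a few lines. The function name multi_label_accuracy and the label sets are hypothetical illustrations, not the paper's annotations.

```python
def multi_label_accuracy(predictions, correct_label_sets):
    """Fraction of images whose single prediction is in that image's set of correct labels."""
    hits = sum(
        prediction in labels
        for prediction, labels in zip(predictions, correct_label_sets)
    )
    return hits / len(predictions)

# One prediction per image (made-up values).
predictions = ["groom", "laptop", "seashore"]

# Each image has a set of acceptable labels instead of one target.
correct_label_sets = [
    {"picket fence", "groom", "bow tie", "suit", "gown", "hoopskirt"},
    {"desk", "laptop", "keyboard", "space bar"},
    {"lakeshore"},
]

# The original single targets, for comparison with Top-1.
single_targets = ["picket fence", "desk", "lakeshore"]

multi = multi_label_accuracy(predictions, correct_label_sets)
top1 = sum(p == t for p, t in zip(predictions, single_targets)) / len(predictions)
print(multi, top1)
```

Unlike Top-5, the model still makes only one prediction per image; the leniency comes from the reviewed label set rather than from extra guesses.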

Types of Multi-label annotations

Multiple objects or organisms

For images containing more than one object or organism that corresponds to an ImageNet class, the paper proposes adding a target label for each entity in the image. In the image in Figure 3b, the classes groom, bow tie, suit, gown, and hoopskirt are all present in the foreground and are therefore added to the set of labels.

Synonym or subset relations

For similar classes, the paper treats them as belonging to the same broader class; that is, when two classes are synonyms or one is a subset of the other, a prediction is considered correct if it matches either label. For instance, warthog, African elephant, and Indian elephant all have prominent tusks, so they are considered subclasses of tusker. Figure 3c shows labels modified to include tusker as a correct label.

Unclear Image

In certain cases, such as Figure 3d, it is difficult to determine whether a label is correct because of ambiguities in the class hierarchy, such as the distinction between a lakeshore and a seashore.

Collecting multi-label annotations

Participants reviewed all predictions made by the models on ImageNet and ImageNetV2. They then categorized every unique prediction made by the models as either correct or incorrect, so that every image could have multiple correct labels, as the method above requires.

The multi-label accuracy metric

A prediction is correct if and only if it was marked correct by the expert reviewers during the annotation stage. As discussed in the experiment setup section, a second annotation stage is conducted after the human labelers have completed labeling. In Figure 4, a comparison of Top-1, Top-5, and multi-label accuracies shows that higher Top-1 and Top-5 accuracy corresponds to higher multi-label accuracy, as expected. Multi-label accuracy is consistently higher than Top-1 accuracy yet lower than Top-5 accuracy, and the three metrics are highly correlated. The paper concludes that the multi-label metric measures a semantically more meaningful notion of accuracy than its counterparts.

Human Accuracy Measurement Process

Bias Control

Since three participants took part in the initial round of annotation, they did not look at the data for six months in order to forget information they might have unintentionally memorized. In addition, two additional annotators were introduced in the final evaluation phase to ensure the fairness of the experiment.

Human Labeler Training

The three main difficulties encountered during human labeler training are fine-grained distinctions, class unawareness, and insufficient training images. Three training regimens are therefore provided to address these problems, respectively. First, labelers are assigned extra training tasks with immediate feedback on similar classes. Second, labelers are given the ability to search for specific classes during labeling. Finally, the training set contains a reasonable number of images for each class. Fine-grained distinctions are especially difficult for humans; to help labelers perform well on them, the training tasks contained only images from certain animal families.

Labeling Guide

A labeling guide is constructed to distill class analysis learned during training into discriminative traits that could be used as a reference during the final labeling evaluation.

Final Evaluation and Review

Two samples, each containing 1000 images, are drawn from ImageNet and ImageNetV2, respectively. They are sampled in a class-balanced manner and shuffled together. Over 28 days, all five participants labeled all images, spending a median of 26 seconds per image. After labeling was completed, an additional multi-label annotation session was conducted, in which the human predictions for all images were manually reviewed. Compared to the initial round of labeling, 37% of the labels changed due to the participants' greater familiarity with the classes.

Main Results

Comparison of Human and Machine Accuracies on ImageNet

From Figure 1, we can see that the difference in accuracies between the datasets is within 1% for all human participants. As hypothesized, human testers indeed performed better than the automated models on both datasets. It's worth noticing that labelers D and E, who did not participate in the initial annotation period, actually performed better than the best automated model.

Comparison of Human and Machine Accuracies on ImageNet

Based on the results shown in Figure 1, the confidence intervals of the four best human participants and the four best models overlap; however, McNemar's paired test yields a p-value of 0.037, which rejects the hypothesis that the FixResNeXt model and human labeler E have the same accuracy on the ImageNet validation dataset. Figure 1 also shows that, on ImageNet-V2, the confidence intervals of the labeling accuracies of human labelers C, D, and E do not overlap with the confidence interval of the best model. With McNemar's test yielding a p-value of [math]\displaystyle{ 2\times 10^{-4} }[/math], the hypothesis that humans and machine models have the same robustness to distribution shifts ought to be rejected.
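
For readers unfamiliar with McNemar's paired test, the sketch below shows one common form of it, the exact two-sided binomial version, applied to per-image correctness of two labelers on the same images. Which variant the paper used is not stated in this summary, so the choice of the exact version, the function name mcnemar_exact, and the toy data are all assumptions.

```python
from math import comb

def mcnemar_exact(a_correct, b_correct):
    """Exact two-sided McNemar p-value for paired correct/incorrect outcomes."""
    # Only discordant pairs (one right, the other wrong) carry information.
    only_a = sum(a and not b for a, b in zip(a_correct, b_correct))
    only_b = sum(b and not a for a, b in zip(a_correct, b_correct))
    n = only_a + only_b
    if n == 0:
        return 1.0
    k = min(only_a, only_b)
    # Under the null hypothesis each discordant pair is a fair coin flip.
    tail = sum(comb(n, i) for i in range(k + 1)) / 2 ** n
    return min(1.0, 2 * tail)

# Toy data: the human is right on 8 images the model gets wrong; both are right on 2.
human = [True] * 10
model = [False] * 8 + [True] * 2

p_value = mcnemar_exact(human, model)
print(p_value)
```

The test is paired because both labelers see the same images, so images on which they agree do not affect the result.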

Other Observations

Difficult Images

The experiment also shed some light on images that are difficult to label. 10 images were misclassified by all of the human labelers. Among those 10 images, there was 1 image of a monkey and 9 of dogs. In addition, 27 images, with 19 in object classes and 8 in organism classes, were misclassified by all 72 machine learning models in this experiment. Only 2 images were labeled wrong by all human labelers and models. Both images contained dogs. Researchers also noted that the images that are difficult for models are mostly images of objects, whereas the images that are difficult for human labelers are exclusively images of animals.

Accuracies without dogs

As previously discussed in the paper, machine learning models tend to outperform human labelers when classifying the 118 dog classes. To better understand to what extent models outperform human labelers, researchers computed the accuracies again by excluding all the dog classes. Results showed a 0.6% increase in accuracy on the ImageNet images using the best model and a 1.1% increase on the ImageNet V2 images. In comparison, the mean increases in accuracy for human labelers are 1.9% and 1.8% on the ImageNet and ImageNet V2 images respectively. Researchers also conducted a simulation to demonstrate that the increase in human labeling accuracy on non-dog images is significant. This simulation was done by bootstrapping to estimate the changes in accuracy when only using data for the non-dog classes, and simulation results show smaller increases than in the experiment.

In conclusion, it's more difficult for human labelers to classify images with dogs than it is for machine learning models.
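
The bootstrap simulation mentioned above is not described in detail in this summary. One way such a check could be set up, offered purely as an assumed sketch, is to resample subsets of the same size as the non-dog subset from all images and compare the resulting accuracy changes with the change actually observed when dogs are removed. All names and the toy outcomes below are hypothetical.

```python
import random

def accuracy(correct):
    """Fraction of True entries in a list of per-image outcomes."""
    return sum(correct) / len(correct)

def bootstrap_gains(correct, subset_size, n_rounds=2000, seed=0):
    """Accuracy changes from random resamples that ignore class membership."""
    rng = random.Random(seed)
    full_accuracy = accuracy(correct)
    gains = []
    for _ in range(n_rounds):
        resample = rng.choices(correct, k=subset_size)  # sampling with replacement
        gains.append(accuracy(resample) - full_accuracy)
    return gains

# Made-up outcomes for one labeler on 1,000 images: the first 120 are dog
# images (half of them wrong), the other 880 are non-dog images (1 in 20 wrong).
is_dog = [i < 120 for i in range(1000)]
correct = [(i % 2 == 1) if dog else (i % 20 != 0) for i, dog in enumerate(is_dog)]

non_dog = [c for c, dog in zip(correct, is_dog) if not dog]
observed_gain = accuracy(non_dog) - accuracy(correct)

gains = bootstrap_gains(correct, subset_size=len(non_dog))
share_as_large = sum(g >= observed_gain for g in gains) / len(gains)
print(f"observed gain: {observed_gain:.3f}, share of resamples as large: {share_as_large:.3f}")
```

If random subsets rarely produce a gain as large as the one seen after removing dogs, the gain is unlikely to be an artifact of simply evaluating on fewer images.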

Accuracies on objects

Researchers also computed machine and human labelers' accuracies on a subset of data with only objects, as opposed to organisms, to better illustrate the differences in performance. This test involved 590 object classes. As shown in the table above, there is a 3.3% and 3.4% increase in mean accuracies for human labelers on the ImageNet and ImageNet V2 images. In contrast, there is a 0.5% decrease in accuracy for the best model on both ImageNet and ImageNet V2. This indicates that human labelers are much better at classifying objects than these models are.

Accuracies on fast images

Unlike the CNN models, human labelers spent different amounts of time on different images, spanning from several seconds to 40 minutes. To further analyze the images that take human labelers less time to classify, researchers took a subset of images with median labeling time spent by human labelers of at most 60 seconds. These images were referred to as "fast images". There are 756 and 714 fast images from ImageNet and ImageNet V2 respectively, out of the total 2000 images used for evaluation. Accuracies of models and humans on the fast images increased significantly, especially for humans.

This result suggests that human labelers know when an image is difficult to label and would spend more time on it. It also shows that the models are more likely to correctly label images that human labelers can label relatively quickly.
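
Selecting the "fast images" amounts to a filter on the median labeling time per image. The sketch below assumes timings are stored as a mapping from image id to the list of seconds each labeler spent; that format, the function name, and the numbers are hypothetical.

```python
from statistics import median

def fast_image_ids(times_by_image, threshold_seconds=60.0):
    """Ids of images whose median labeling time is at most the threshold."""
    return [
        image_id
        for image_id, times in times_by_image.items()
        if median(times) <= threshold_seconds
    ]

# Made-up labeling times in seconds for five labelers per image.
times_by_image = {
    "img_a": [12, 20, 26, 31, 400],
    "img_b": [45, 70, 90, 120, 2400],
    "img_c": [60, 60, 10, 300, 15],
}

fast = fast_image_ids(times_by_image)
print(fast)
```

Using the median rather than the mean keeps a single very slow labeler (for example, one 40-minute outlier) from excluding an image that most labelers handled quickly.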

Related Work

Human accuracy on ImageNet

Russakovsky et al. (2015) studied two trained human labelers' accuracies on 1500 and 258 images in the context of the ImageNet challenge. The top-5 accuracy of the labeler who labeled 1500 images was the well-known human baseline on ImageNet.

As introduced before, the researchers went further by using multi-label accuracy, using more labelers, and focusing on robustness to small distribution shifts. Although the researchers had some different findings, some results are consistent with those of (Russakovsky et al., 2015). For example, both experiments indicated that it takes human labelers around one minute to label an image. The time distribution also has a long tail, due to the difficult images mentioned before.

Human performance in computer vision broadly

There are many recent studies about humans in the area of computer vision, such as investigating human robustness to synthetic distribution change (Geirhos et al., 2017) and studying which characteristics humans use to recognize objects (Geirhos et al., 2018). Other examples include constructing adversarial examples that fool both machines and time-limited humans (Elsayed et al., 2018) and illustrating the effects of foreground and background objects on human and machine performance (Zhu et al., 2016).

Multi-label annotations

Stock & Cissé (2017) also studied ImageNet's multi-label nature, which aligns with the researchers' study in this paper. According to Stock & Cissé (2017), the top-1 accuracy measure could underestimate multi-label accuracy by up to 13.2%. The authors suggest that releasing these labelled data to the public will allow for more robust models in the future.

ImageNet inconsistencies and label error

Researchers found and recorded some incorrectly labeled images from ImageNet and ImageNet V2 during this study. An earlier study (Van Horn et al., 2015) also showed that at least 4% of the birds in ImageNet are misclassified. That work also noted that the inconsistent taxonomic structure of the bird classes could lead to weak class boundaries. Researchers noted that the majority of the fine-grained organism classes had similar taxonomic issues.

Distribution shift

There has been an increasing number of studies in this area. One focus of the studies is distributionally robust optimization (DRO), which finds the model that has the smallest worst-case expected error over a set of probability distributions. Another focus is on finding the model with the lowest error rates on adversarial examples. Work in both areas has been productive, but none has been shown to resolve the drop in accuracies between ImageNet and ImageNet V2. A recent paper also discusses quantifying uncertainty under a distribution shift, in other words, whether the output of probabilistic deep learning models should or should not be trusted.
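
In symbols, the DRO objective described above is commonly written as follows. The notation is assumed here rather than taken from the paper: [math]\displaystyle{ \theta }[/math] denotes the model parameters, [math]\displaystyle{ \ell }[/math] a loss function, and [math]\displaystyle{ \mathcal{P} }[/math] the set of probability distributions considered.

[math]\displaystyle{ \min_{\theta} \; \sup_{Q \in \mathcal{P}} \; \mathbb{E}_{(x, y) \sim Q} \left[ \ell(\theta; x, y) \right] }[/math]

Ordinary training corresponds to the special case in which [math]\displaystyle{ \mathcal{P} }[/math] contains only the training distribution.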

Conclusion and Future Work

Conclusion

Researchers noted that in order to achieve truly reliable machine learning, a deeper understanding is needed of the range of parameters over which a model remains robust. Techniques from combinatorics and sensitivity analysis, in particular, might yield fruitful results. This study has provided valuable insights into the desired robustness properties by comparing model performance to human performance. This is especially evident given the results of the experiment, which show humans drastically outperforming machine learning models in many cases and raise the question of how much accuracy one is willing to give up in exchange for efficiency. The results show that current performance benchmarks do not address robustness to small, natural distribution shifts, which are easily handled by humans.

Future work

Other than improving the robustness of models, researchers should consider investigating whether less-trained human labelers can achieve a similar level of robustness to distribution shifts. Since less-trained labelers may have lower accuracy, such a result would further illustrate that human robustness is a more stable attribute than accuracy itself. In addition, researchers can study robustness to temporal changes, which is another form of natural distribution shift (Gu et al., 2019; Shankar et al., 2019). Also, convolutional neural networks can be a candidate for improving the accuracy of image classification.

Critiques

- The method of using humans to classify ImageNet is fully circular, since the labels of ImageNet were themselves originally annotated by human beings. In fact, the classification scheme itself is intrinsically a human construction. It is not logical to test human performance against human performance. This circular construction completely violates scientific principles.

- Table 1 simply showed a difference in ImageNet multi-label accuracy yet does not give an explicit reason as to why such a difference is present. Although the paper suggested the distribution shift has caused the difference, it does not give other factors to concretely explain why the distribution shift was the cause.

- Among its recommendations for future machine evaluations, the paper proposes to "Report performances on dogs, other animals, and inanimate objects separately." Despite its intentions, this recommendation is narrowly specific and requires further generalization to be convincing.

- Regarding the choice of human subjects as labelers, no information was given as to how they were chosen, nor was any background information provided. As this is a classification problem involving many classes as specific as species, a biology student would give far more accurate results than a computer science student or a math student. To make this study more plausible, more human labelers should be sampled.

- In explaining the importance of the multi-label metric by comparison with the Top-5 metric, the turtle example falls within the overall similarity (synonym) category of the multi-label evaluation metric. As such, if the Top-5 evaluation counts the selection of any one of the turtle species as correct, the algorithm is considered to have produced a correct prediction, which is the intention. The example does not convey the necessity of changing from the Top-5 metric to the proposed metric.

- Given the paper's definition of the multi-label metric, it is hard to see how expanding the label set differs from the traditional Top-5 metric or why it is necessary; the definition therefore does not support the claim that the proposed metric is necessary for rigorous accuracy evaluation on ImageNet.

- When discussing the main results, the paper addresses the hypothesis that distribution shift has no effect on human and machine model accuracies; the presentation is poor at best, with no clear focus on what the authors are trying to convey or on how, in detail, they arrived at such claims.

- In the experiment setup of the presentation, many key terms lack a detailed description. For example, in human labeler training, labelers A, B, C, D, and E used a subset of the remaining 30,000 unannotated images in the ImageNet validation set and underwent extensive training to understand the intricacies of fine-grained class distinctions in the ImageNet class hierarchy. The authors should clarify each key term in the presentation; otherwise, it is hard for readers to follow.

- It is not clear how the human labelers were selected. Simply picking several people introduces high bias, because the sample is too small and the participants have different backgrounds, which will affect the results considerably. It would also be better to include more comparisons between the model introduced and other models.

- Given the small number of human participants, it is hard to take the results seriously (there is too much variance). It is also not clear how the authors determined that the multi-label accuracy metric measures a semantically more meaningful notion of accuracy compared to its counterparts. For example, one of the issues with top-5 accuracy that they mention is: "For instance, within the dataset, five turtle classes are given which is difficult to distinguish under such classification evaluations." But it is not clear how multi-label accuracy would be better in this instance.

- It is unclear how well the human labelers can perform labeling after training, so the final result is not that trustworthy.

- In this experiment setup, the label annotators are the same people as the participants of the experiments. Even though there is a break between the annotation phase and the human labeler evaluation, the impact of the break in reducing bias is not clear. One potential source of human labeling data is Google's "I'm not a robot" verification test. One variation of the verification test asks users to select all the photos from 9 images that are related to a certain keyword. This would allow for a more accurate measurement of human performance vs ImageNet performance. In addition, it would reduce the biases that come from the small number of experiment participants.

- Following Table 2, the authors appear to claim that the model is better than the human labelers, simply because the human labelers' accuracy increased more than the model's after the removal of dog photos. However, a quick look at the table shows that most human labelers still performed better than the best model. The authors should instead claim that the model is better at labeling dogs than the human labelers, but that the human labelers are still better overall after the dog classes are removed.

- The reason why human labelers outperform CNNs could be that the humans had much more training. It would be more convincing if the paper provided a metric to measure the size of the human labelers' training data set.

- In the multi-label case, it is hard to determine whether the machine learning model or the human labelers gave the correct label. The structure of the dataset is essential in training a network, so data with uncertain labels (even when determined by humans) should be avoided.

- The authors mention that untrained labelers will likely have lower accuracy; they could give a standard or definition of a well-trained labeler.

- I believe the authors needed to include more information about how they determined the samples such as human samplers, and also more details on how to define unclear images.

- It would be more convincing if the authors provided the criteria for selecting human labelers and for identifying unclear images, as well as the accuracy of the human labelers.

- The summary only explains some model components but does not thoroughly go through the big picture of the model: the data pre-processing, training, and prediction procedures. It would be nice to know these details as well.

- It seems the core problem is more about the dataset itself and not the evaluation procedure. We would not have issues with top 1 and top 5 if Imagenet contained discernable classes with good labels. Of course, this is very expensive, and imagenet is an _excellent_ dataset given these constraints. It does not seem like their proposed solution, multiple labels per image, addresses their concerns properly, as other critiques have already mentioned. Furthermore, having multiple labels per image does not translate to real-life value the same way that the top 5 or top 1 metric does, as in the common case, there is one right answer for a classification problem.

- The paper could provide details on ways to improve the accuracy and robustness of the model. Since the paper mentions CNN, it could provide details of the model and why CNN is a good candidate.

- The accuracy of the model is directly correlated with how the images are labelled. In all multi-label annotations, the authors describe a predicted label as correct if it is within a set of "correct labels", where each image has a different number of correct labels. Perhaps it would yield better results if the model were to first identify the number of objects in the image and then, using some form of criteria, label those identified objects in order of importance (i.e. objects that are closer are labelled first). The authors also never specified what criteria the model uses to "pick out" which object it will label in the image.

- The paper mentions difficult images and fast images. It would be better if the paper had generalized the type of images that constitute difficult images (i.e., the paper mentions 118 dog classes, what are some general characteristics of difficult images?) In addition, it would be interesting to compare the performance between human and machine accuracy on non-fast images.

- The paper meaningfully and correctly points out that the current practice of evaluating ML algorithms using only accuracy on ImageNet as the benchmark is simplistic and problematic. However, the idea of comparing human performance with ML models is also problematic, since it is hard or even impossible to control the various variables that can drastically change human performance: training time, domain knowledge, cognitive function, amount of workload, and various environmental factors. In order to compare different experimental methods, the most important step is to carefully control the confounding variables so that a meaningful conclusion can be reached.

- The original question is how ImageNet was created. The images can only be labeled by human labelers, so there might be some mistakes and missing details. A combination of human evaluation and machine evaluation might help to resolve this problem.

- Regarding the difficult problems, CatsandDogs is a very popular dataset, and these days it is trivial for a non-deep CNN to classify it with high accuracy. The authors would do well to test this CNN with other classes, since it's inconceivable that a CNN cannot learn the characteristics of a dog in relation to the other classes in ImageNet. Why would it be able to differentiate the breeds of dogs, but not other organisms?

- The model is limited by the representativeness of small samples and human participants. For the evaluation of the human group's performance, the randomness of the sampling of participants should be confirmed first. Alternatively, the authors need to formulate a rule for comparing the accuracy of the human group and the machine group under this framework, to make sure the result is robust.

- This is rather late, but I just want to point out that the relationship between top-1 accuracy and multi-label accuracy (and likewise between top-5 accuracy and multi-label accuracy) is not illustrated very clearly. Also, with the ImageNet test for all the models, it should matter how highly multi-label accuracy is correlated with top-1 and top-5. How they change with respect to one another could be explained a little more.

Reference

[1] Shankar, V., Roelofs, R., Mania, H., Fang, A., Recht, B., & Schmidt, L. (2020). Evaluating Machine Accuracy on ImageNet. ICML 2020.

[2] Krizhevsky, A., Sutskever, I., & Hinton, G. E. ImageNet Classification with Deep Convolutional Neural Networks. 2. Retrieved from http://www.cs.toronto.edu/~fritz/absps/imagenet.pdf