Adversarial Attacks on Copyright Detection Systems

Presented by

Luwen Chang, Qingyang Yu, Tao Kong, Tianrong Sun

== Introduction ==

The copyright detection system is one of the most commonly used machine learning systems. Important real-world applications include tools such as Google Jigsaw, which can identify and remove videos promoting terrorism, and platforms like YouTube, where a machine learning system is used to flag content that infringes copyrights. Failure to flag such content can result in legal consequences. However, adversarial attacks on these systems have not been widely addressed and remain largely unexplored. Adversarial attacks are instances where people intentionally design inputs to cause misclassification in the model.

Copyright detection systems are vulnerable to attacks for three reasons:

1. Unlike physical-world attacks, where adversarial samples need to survive under different conditions such as resolutions and viewing angles, digital files can be uploaded directly to the web without going through a camera or microphone.

2. The detection system is an open-set problem, which means that uploaded files may not correspond to any existing class. Most files uploaded nowadays are not protected, so the system must recognize protected content without preventing users from uploading unprotected audio/video.

3. The detection system needs to handle a vast amount of content with different labels but similar features. For example, in the ImageNet classification task, the system is easily attacked when two cats/dogs/birds are highly similar but belong to different classes.

The goal of this paper is to raise awareness of the security threats faced by copyright detection systems. Different types of copyright detection systems are introduced first. For example, a widely used detection model from Shazam, a popular application for recognizing music, is discussed. As a proof of concept, the paper generates audio fingerprints using convolutional neural networks and formulates an adversarial loss function that can be minimized with standard gradient methods. An example of remixing music is given to show how adversarial examples can be created. Then, the adversarial attacks are applied to industrial systems such as AudioTag and YouTube Content ID to evaluate the systems' effectiveness.

== Types of copyright detection systems ==

Fingerprinting algorithms work by extracting the features of a source file as a hash and then using a matching algorithm to compare that hash against the copyrighted material in a database. If enough matches are found between the source and existing data, the two samples are considered identical, and the copyright detection system can reject the copyright declaration of the source. Most audio, image, and video fingerprinting algorithms either train a neural network to output features or extract hand-crafted features.

In terms of video fingerprinting, a useful algorithm is to detect the entering/leaving time of the objects in the video (Saviaga & Toxtli, 2018). The final hash consists of the entering/leaving times of different objects and a unique relationship between the objects. However, most of these video fingerprinting algorithms train their neural networks only on simple distortions, such as adding noise or flipping the video, rather than on adversarial perturbations. This leads to algorithms that are strong against pre-defined distortions but not against adversarial attacks.

Moreover, some plagiarism detection systems also depend on neural networks to generate a fingerprint of the input document. Although using deep feature representations as a fingerprint is efficient for detecting plagiarism, it may still be weak against adversarial attacks.

Audio fingerprinting may perform better than the algorithms above because the hash is usually generated by extracting hand-crafted features rather than by training a neural network. That being said, it is still easy to attack.

== Case study: evading audio fingerprinting ==

=== Audio Fingerprinting Model ===

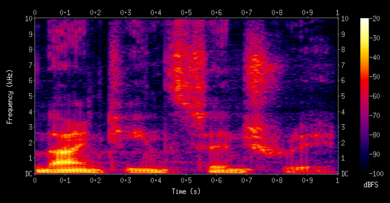

The audio fingerprinting model plays an important role in copyright detection. It is useful for quickly locating or finding similar samples inside an audio database. Shazam is a popular music recognition application that uses one of the most well-known fingerprinting models. Because of three properties (temporal locality, translation invariance, and robustness), Shazam's algorithm is considered a good fingerprinting algorithm. It remains robust even in the presence of noise by using local maxima in the spectrogram to form hashes. Spectrograms are two-dimensional representations of audio frequency spectra over time. An example is shown below.

<div style="text-align:center;">[[File:Spectrogram-19thC.png|Spectrogram-19thC|390px]]</div>

<div align="center"><span style="font-size:80%">Source:https://commons.wikimedia.org/wiki/File:Spectrogram-19thC.png</span></div>

=== Interpreting the fingerprint extractor as a CNN ===

The intention of this section is to build a differentiable neural network whose function resembles that of an audio fingerprinting algorithm, which is well-known for its ability to identify metadata (i.e., song names, artists, and albums) independently of the audio format (Group et al., 2005). The generic neural network model is then used as a surrogate for black-box attacks on popular real-world systems, in this case YouTube and AudioTag.

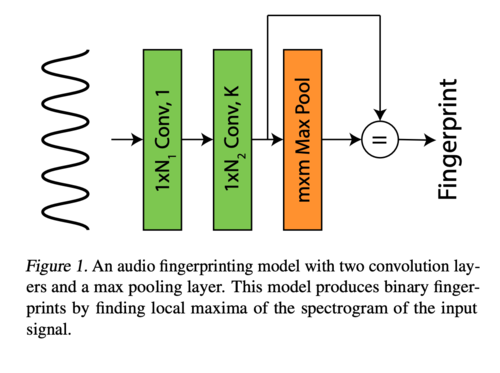

The generic neural network model consists of two convolutional layers and a max-pooling layer, which is used for dimension reduction. This is depicted in the figure below. As mentioned above, the convolutional neural network is well-known for its properties of temporal locality and translation invariance. In computer architecture, temporal locality refers to a program accessing the same memory location within a small time frame, in contrast to spatial locality, where the program accesses nearby memory locations within a small time frame (Pingali, 2011). In the fingerprinting setting, the term instead means that each extracted feature depends only on a short time window of the signal. The purpose of this network is to generate audio fingerprints, that is, features that uniquely identify a signal regardless of the starting and ending times of the inputs.

[[File:cov network.png | thumb | center | 500px ]]

When an audio sample enters the neural network, it is first transformed by the initial network layer, which can be described as a normalized Hann function. The form of the function is shown below, with N being the width of the kernel.

$$ f_{1}(n)=\frac {\sin^2(\frac{\pi n} {N})} {\sum_{i=0}^N \sin^2(\frac{\pi i}{N})} $$

The intention of the normalized Hann function is to smooth the adversarial perturbation of the input audio signal, which removes discontinuities as well as bad spectral properties. This transformation enhances the efficiency of the black-box attacks that are implemented later.
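The normalized Hann kernel can be tabulated directly from the formula for <math>f_1</math>. The following is a minimal sketch in plain Python; the function name <code>normalized_hann</code> and the choice N = 8 are illustrative assumptions, not part of the paper.

```python
import math

def normalized_hann(N):
    """Return [f_1(0), ..., f_1(N)] for the normalized Hann kernel of width N."""
    # Numerator of f_1: sin^2(pi * n / N) for n = 0..N
    raw = [math.sin(math.pi * n / N) ** 2 for n in range(N + 1)]
    # Denominator of f_1: sum over i = 0..N of sin^2(pi * i / N)
    total = sum(raw)
    return [v / total for v in raw]

# Hypothetical kernel width chosen only for illustration
kernel = normalized_hann(8)
```

Because of the normalization, the coefficients sum to 1, so convolving with this kernel acts as a weighted moving average that tapers to zero at both ends.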

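The next convolutional layer applies a short-time Fourier transform to the input signal by computing the spectrogram of the waveform, which converts the input into a feature representation. Once the input signal enters this network layer, it is transformed by the convolutional function below.

$$f_{2}(k,n)=e^{-i 2 \pi k n / N} $$

where <math>k \in \{0,1,...,N-1\}</math> is the output channel index and <math>n \in \{0,1,...,N-1\}</math> is the index of the filter coefficient.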

The output of this layer is described as φ(x) (x being the input signal), a feature representation of the audio signal sample.

However, this representation is vulnerable to noise and perturbation, and it is difficult to store and inspect. Therefore, a max-pooling layer is applied to φ(x): the network computes local maxima with a max-pooling function, which makes the result robust to small changes in the position of a feature. This network layer outputs a binary fingerprint ψ(x) (x being the input signal) that is used later to search for a signal in a database of previously processed signals.
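To illustrate how max-pooling turns a feature map into a binary fingerprint, the sketch below marks every position whose value equals the maximum of its neighborhood. It is a simplification under stated assumptions: the feature map is one-dimensional rather than a two-dimensional spectrogram, and the names <code>max_pool</code> and <code>binary_fingerprint</code>, the radius, and the sample values are all hypothetical.

```python
def max_pool(values, radius):
    """Sliding-window maximum; the window is clipped at the array edges."""
    n = len(values)
    return [max(values[max(0, i - radius):min(n, i + radius + 1)])
            for i in range(n)]

def binary_fingerprint(phi, radius):
    """psi[i] is 1 when phi[i] is the maximum of its neighborhood, else 0."""
    pooled = max_pool(phi, radius)
    return [1 if value == peak else 0 for value, peak in zip(phi, pooled)]

# Toy feature values standing in for phi(x)
phi = [0.1, 0.9, 0.3, 0.2, 0.8, 0.4, 0.1]
psi = binary_fingerprint(phi, radius=1)  # [0, 1, 0, 0, 1, 0, 0]
```

Note that in this simple version every sample of a perfectly flat region would be marked as a peak; a practical system would need a tie-breaking rule.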

=== Formulating the adversarial loss function ===

In the previous section, local maxima of the spectrogram are used by the CNN to generate fingerprints, but no loss has yet been defined to quantify how similar two fingerprints are. Once such a loss is defined, standard gradient methods can be used to find a perturbation <math>{\delta}</math> that can be added to a signal so that the copyright detection system is tricked. A bound is also set to ensure that the perturbed signal stays close to the original audio signal.

$$\text{bound:}\ ||\delta||_p\le\epsilon$$

To compare how similar two binary fingerprints are, Hamming distance is employed. The Hamming distance between two strings of equal length is the number of positions at which the digits differ (Hamming distance, 2020). For example, the Hamming distance between 101100 and 100110 is 2.
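The Hamming distance example can be checked with a few lines of Python. This is only an illustrative helper (the name <code>hamming_distance</code> is an assumption), not code from the paper.

```python
def hamming_distance(a, b):
    """Count the positions at which two equal-length sequences differ."""
    if len(a) != len(b):
        raise ValueError("Hamming distance needs sequences of equal length")
    return sum(x != y for x, y in zip(a, b))

# The two fingerprints from the text differ in the third and fifth digits
distance = hamming_distance("101100", "100110")  # 2
```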

Let <math>{\psi(x)}</math> and <math>{\psi(y)}</math> be two binary fingerprints output by the model. The number of peaks shared by <math>{x}</math> and <math>{y}</math> can be found through <math>{|\psi(x)\cdot\psi(y)|}</math>. To obtain a differentiable loss function, the following is used:

$$J(x,y)=|\phi(x)\cdot\psi(x)\cdot\psi(y)|$$

This is effective for white-box attacks, where the fingerprinting system is known. However, the loss can be easily minimized by moving the location of the peaks by one pixel, which does not transfer reliably to black-box industrial systems. To make the attack more transferable, a new loss function that forces larger movements of the local maxima of the spectrogram is proposed. The idea is to move the locations of peaks in <math>{\psi(x)}</math> outside of the neighborhood of the peaks of <math>{\psi(y)}</math>. To implement the model more efficiently, two max-pooling layers are used. One of the layers has a bigger width <math>{w_1}</math> while the other one has a smaller width <math>{w_2}</math>. For any location, if the output of <math>{w_1}</math> pooling is strictly greater than the output of <math>{w_2}</math> pooling, then it can be concluded that there is no peak within radius <math>{w_2}</math> of that location.

The loss function is as follows:

$$J(x,y) = \sum_i\bigg(\text{ReLU}\bigg(c-\bigg(\underset{|j| \leq w_1}{\max}\phi(i+j;x)-\underset{|j| \leq w_2}{\max}\phi(i+j;x)\bigg)\bigg)\cdot\psi(i;y)\bigg)$$

The equation above penalizes the peaks of <math>{x}</math> that lie within radius <math>{w_2}</math> of the peaks of <math>{y}</math>. The activation function is <math>{ReLU}</math>, and <math>{c}</math> is the margin required between the outputs of the two max-pooling layers.
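The transferable loss can be sketched on a toy example to show what it rewards. The code below is an illustration under stated assumptions: it works on a one-dimensional feature map, uses a hard maximum instead of a smoothed (differentiable) one, and the names <code>transfer_loss</code>, <code>phi_same</code>, and <code>phi_moved</code> as well as all numeric values are hypothetical.

```python
def max_pool(values, radius):
    """Sliding-window maximum; the window is clipped at the array edges."""
    n = len(values)
    return [max(values[max(0, i - radius):min(n, i + radius + 1)])
            for i in range(n)]

def transfer_loss(phi_x, psi_y, w1, w2, c):
    """J(x, y): penalize peaks of x that lie within radius w2 of a peak of y."""
    wide = max_pool(phi_x, w1)    # max over |j| <= w1
    narrow = max_pool(phi_x, w2)  # max over |j| <= w2
    # ReLU(c - (wide - narrow)), counted only where y has a peak
    return sum(max(0.0, c - (wide[i] - narrow[i])) * psi_y[i]
               for i in range(len(phi_x)))

psi_y = [0, 0, 0, 1, 0, 0, 0]                      # y has one peak at index 3
phi_same = [0.1, 0.2, 0.3, 0.9, 0.3, 0.2, 0.1]     # x still peaks at index 3
phi_moved = [0.1, 0.9, 0.1, 0.1, 0.1, 0.1, 0.1]    # peak pushed to index 1

loss_same = transfer_loss(phi_same, psi_y, w1=2, w2=1, c=0.1)
loss_moved = transfer_loss(phi_moved, psi_y, w1=2, w2=1, c=0.1)
```

In this toy case the loss equals the margin c while the peak of x coincides with the peak of y, and it drops to zero once the peak sits outside radius <math>w_2</math> but inside radius <math>w_1</math>.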

The resulting optimization problem is

$$
\underset{\delta}{\min}J(x+\delta,x)\\
s.t.||\delta||_{\infty}\le\epsilon
$$

where <math>{x}</math> is the input signal and <math>{J}</math> is the loss function with the smoothed max function. Note that <math>||\delta||_\infty = \max_k |\delta_k|</math> (the largest absolute component of <math>\delta</math>), which makes it relatively easy to satisfy the above constraint by clipping each component of <math>\delta</math> to have absolute value at most <math>\epsilon</math> at every iteration. However, since <math>||\delta||_\infty \leq \dots \leq ||\delta||_2 \leq ||\delta||_1</math>, this choice of norm results in the loosest bound, and therefore admits a larger selection of perturbations <math>\delta</math> than might be permitted by other choices of norms.
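The clipping step described above can be sketched as one iteration of projected gradient descent. This is a minimal illustration, assuming the gradient of the loss with respect to <math>\delta</math> is already available; the names <code>clip_linf</code> and <code>projected_gradient_step</code>, the step size, and the gradient values are hypothetical.

```python
def clip_linf(delta, eps):
    """Project delta onto the L-infinity ball of radius eps, component-wise."""
    return [max(-eps, min(eps, d)) for d in delta]

def projected_gradient_step(delta, grad, step, eps):
    """Take one gradient descent step on delta, then clip to the constraint."""
    updated = [d - step * g for d, g in zip(delta, grad)]
    return clip_linf(updated, eps)

def norm_linf(v):
    return max(abs(c) for c in v)

def norm_l2(v):
    return sum(c * c for c in v) ** 0.5

def norm_l1(v):
    return sum(abs(c) for c in v)

# Made-up gradient; a real attack would obtain it by backpropagation
delta = projected_gradient_step([0.0, 0.0, 0.0], [1.0, -4.0, 0.2],
                                step=0.1, eps=0.05)
```

The three norm helpers make the ordering <math>||\delta||_\infty \leq ||\delta||_2 \leq ||\delta||_1</math> easy to check numerically for any perturbation.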

=== Remix adversarial examples ===

Solving the optimization problem above yields an example that can fool the copyright detection system, but the perturbations may make it sound unnatural.

Instead, the perturbation can be made to sound more natural by making it resemble a different audio signal.

The remix loss function is obtained by switching the order of the max-pooling layers in the smooth maximum components of the loss function. Its purpose is to make two signals x and y look as similar as possible.

$$J_{remix}(x,y) = \sum_i\bigg(\text{ReLU}\bigg(c-\bigg(\underset{|j| \leq w_2}{\max}\phi(i+j;x)-\underset{|j| \leq w_1}{\max}\phi(i+j;x)\bigg)\bigg)\cdot\psi(i;y)\bigg)$$

By adding this new loss function, a new optimization problem can be defined.

$$
\underset{\delta}{\min}J(x+\delta,x) + \lambda J_{remix}(x+\delta,y)\\
s.t.||\delta||_{p}\le\epsilon
$$

where <math>{\lambda}</math> is a scalar parameter that controls the similarity of <math>{x+\delta}</math> and <math>{y}</math>.

This optimization problem generates an adversarial example from the selected source while also forcing the adversarial example to be similar to another signal. The resulting adversarial example is called a remix adversarial example because it draws on both its source signal and another signal.
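The combined objective can be evaluated on a toy example to see which candidate signals it prefers. As before, this is a sketch under stated assumptions: one-dimensional feature maps, a hard maximum instead of a smoothed one, and hypothetical names (<code>evade_loss</code>, <code>remix_loss</code>, <code>remix_objective</code>) and values throughout.

```python
def max_pool(values, radius):
    """Sliding-window maximum; the window is clipped at the array edges."""
    n = len(values)
    return [max(values[max(0, i - radius):min(n, i + radius + 1)])
            for i in range(n)]

def evade_loss(phi, psi_source, w1, w2, c):
    """J: penalize peaks that stay within radius w2 of the source's peaks."""
    wide, narrow = max_pool(phi, w1), max_pool(phi, w2)
    return sum(max(0.0, c - (wide[i] - narrow[i])) * psi_source[i]
               for i in range(len(phi)))

def remix_loss(phi, psi_target, w1, w2, c):
    """J_remix: same form with the two pooled terms swapped."""
    wide, narrow = max_pool(phi, w1), max_pool(phi, w2)
    return sum(max(0.0, c - (narrow[i] - wide[i])) * psi_target[i]
               for i in range(len(phi)))

def remix_objective(phi, psi_source, psi_target, lam, w1=2, w2=1, c=0.1):
    """J(x + delta, x) + lambda * J_remix(x + delta, y) on precomputed features."""
    return (evade_loss(phi, psi_source, w1, w2, c)
            + lam * remix_loss(phi, psi_target, w1, w2, c))

psi_source = [0, 1, 0, 0, 0, 0, 0]   # source x has a peak at index 1
psi_target = [0, 0, 0, 0, 0, 1, 0]   # target y has a peak at index 5

phi_unchanged = [0.1, 0.9, 0.1, 0.1, 0.1, 0.1, 0.1]  # peak still at index 1
phi_remixed = [0.1, 0.1, 0.1, 0.9, 0.1, 0.9, 0.1]    # peaks at indices 3 and 5

unchanged_total = remix_objective(phi_unchanged, psi_source, psi_target, lam=1.0)
remixed_total = remix_objective(phi_remixed, psi_source, psi_target, lam=1.0)
```

The second candidate scores lower because its peak has left the neighborhood of the source peak while a new peak coincides with the target peak.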

== Evaluating transfer attacks on industrial systems ==

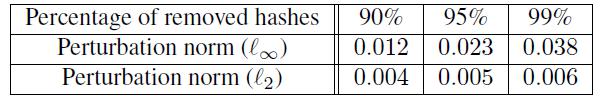

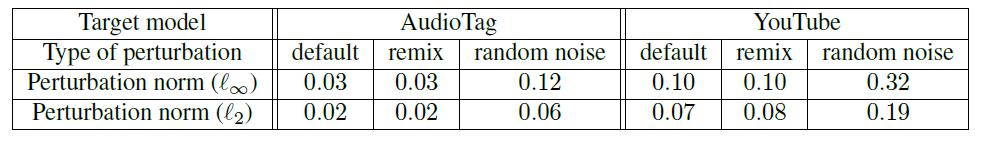

The effectiveness of default and remix adversarial examples is tested through white-box attacks on the proposed model and black-box attacks on two real-world audio copyright detection systems: AudioTag and the YouTube “Content ID” system. The <math>{l_{\infty}}</math> norm and <math>{l_{2}}</math> norm of the perturbations are the two measures of modification. Both are calculated after normalizing the signals so that the samples lie between 0 and 1.

Before evaluating black-box attacks against real-world systems, white-box attacks against the proposed model are used to provide a baseline for the effectiveness of the adversarial examples. The loss function <math>{J(x,y)=|\phi(x)\cdot\psi(x)\cdot\psi(y)|}</math> is used to generate the white-box attacks. By optimizing this loss, the fingerprints of the audio can be changed or removed with noise that is barely noticeable.

[[File:Table_1_White-box.jpg |center ]]

<div align="center">Table 1: Norms of the perturbations for white-box attacks</div>

In the black-box attacks, AudioTag’s claim of being robust to input distortions was first verified by applying random perturbations to the audio recordings. However, the AudioTag system is found to be relatively sensitive to the attacks: it can detect the songs with a benign signal, but it fails to detect both default and remix adversarial examples. Based on the experimental observations, the architecture of the AudioTag fingerprint model is guessed to be similar to that of the surrogate CNN model.

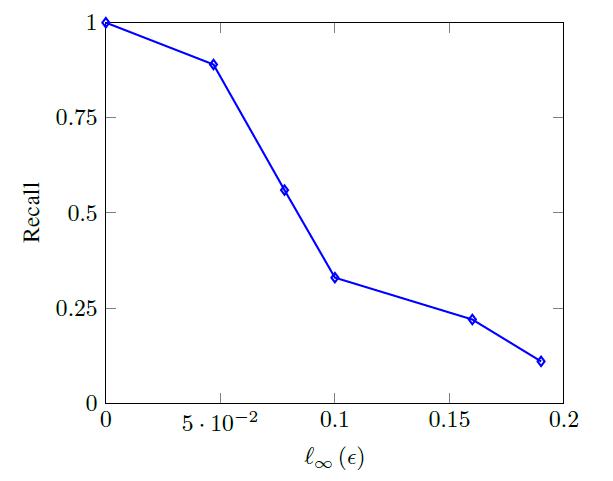

Similar to AudioTag, the YouTube “Content ID” system successfully identified benign songs but failed to detect adversarial examples. However, fooling the YouTube Content ID system requires a larger value of the parameter <math>{\epsilon}</math>, which makes the perturbations obvious, although the songs can still be recognized by humans. This suggests that the YouTube Content ID system has a more robust fingerprint model.

[[File:Table_2_Black-box.jpg |center]]

<div align="center">Table 2: Norms of the perturbations for black-box attacks</div>

[[File:YouTube_Figure.jpg |center]]

<div align="center">Figure 2: YouTube’s copyright detection recall against the magnitude of noise</div>

== Conclusion ==

This paper shows that many industrial copyright detection systems used by popular video and music websites, such as YouTube and AudioTag, are significantly vulnerable to adversarial attacks established in the existing literature. By building a simple music identification system resembling that of Shazam as a neural network and attacking it with well-known gradient methods, the paper demonstrates the lack of robustness of current online detectors.

Although the method used in the paper is not optimal, the attacks can be strengthened using sharper techniques. Moreover, the attacks transferred even though they were built on rudimentary surrogate models that rely on hand-crafted features, while commercial systems likely rely on trainable neural nets.

The goal of the paper is not to facilitate copyright evasion but to raise awareness of the vulnerability of current online systems to adversarial attacks and to emphasize the significance of copyright detection systems. A number of mitigating approaches already exist, such as adversarial training, but they need to be further developed and examined in order to robustly protect against the threat of adversarial copyright attacks.

== Appendix ==

=== Feature Extraction Process in Audio-Fingerprinting System ===

1. Preprocessing. The audio signal is first digitized and quantized. Then, it is converted to a mono signal by averaging the two channels if necessary. Finally, it is resampled if the sampling rate differs from the target rate.

2. Framing. Framing means dividing the audio signal into frames of equal length by a window function.

3. Transformation. This step transforms the set of frames into a new set of features in order to reduce redundancy.

4. Feature Extraction. After transformation, the final acoustic features are extracted from the time-frequency representation. The main purpose is to reduce the dimensionality and increase the robustness to distortions.

5. Post-processing. To capture the temporal variations of the audio signal, higher-order time derivatives are sometimes required.
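The first four steps above can be sketched end to end in plain Python. This is a simplified illustration, not the pipeline of any particular system: it assumes the signal is already sampled at the target rate, uses a Hann window for framing, a naive discrete Fourier transform for the transformation, and the strongest frequency bin per frame as the extracted feature. All function names and the toy signal are assumptions.

```python
import cmath
import math

def to_mono(left, right):
    """Preprocessing: average the two channels into a mono signal."""
    return [(l + r) / 2 for l, r in zip(left, right)]

def frame_signal(signal, frame_len, hop):
    """Framing: cut the signal into equal-length frames shaped by a Hann window."""
    window = [math.sin(math.pi * n / frame_len) ** 2 for n in range(frame_len)]
    return [[signal[start + n] * window[n] for n in range(frame_len)]
            for start in range(0, len(signal) - frame_len + 1, hop)]

def dft_magnitudes(frame):
    """Transformation: magnitude of each non-negative frequency bin (naive DFT)."""
    size = len(frame)
    return [abs(sum(frame[n] * cmath.exp(-2j * math.pi * k * n / size)
                    for n in range(size)))
            for k in range(size // 2 + 1)]

def peak_bin(magnitudes):
    """Feature extraction: keep only the index of the strongest bin."""
    return max(range(len(magnitudes)), key=lambda k: magnitudes[k])

# Toy stereo signal: a sine wave completing 2 cycles per 8 samples on both channels
left = [math.sin(2 * math.pi * 2 * n / 8) for n in range(16)]
right = list(left)

mono = to_mono(left, right)
frames = frame_signal(mono, frame_len=8, hop=4)
features = [peak_bin(dft_magnitudes(frame)) for frame in frames]
```

Each frame of the toy signal yields the same peak bin, which illustrates why such features are insensitive to where in the recording a frame starts.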

== Critiques ==

- The experiments in this paper appear to be a proof of concept rather than a serious evaluation of a model. One problem is that the norm is used to evaluate the perturbation. Unlike the norm in image domains, which can be visualized and easily understood, perturbations in the audio domain are more difficult to comprehend. A cognitive study or a user study might need to be conducted in order to understand this. A related question is whether random noise that is 2x or 3x bigger in terms of the norm makes a huge difference when listening to it. Are these two perturbations both very obvious or both unnoticeable? In addition, it seems that a dataset is built but its statistics are missing. Third, no baseline methods are compared in this paper, and there is not even an ablation study. The two proposed methods (default and remix) seem to perform similarly.

- There could be an improvement in terms of how to find the threshold in general. The paper mentions how to measure the similarity of two pieces of content but does not discuss what threshold should be set for this model. In fact, it is always a challenge to determine the boundary between "Copyright Issue" and "Not Copyright Issue", and this is important information that could be discussed in the paper.

- The fingerprinting technique used in this paper seems rather elementary, which is a downfall in this context because the focus of this paper is adversarial attacks on these methods. A recent 2019 work (https://arxiv.org/pdf/1907.12956.pdf) proposed a deep fingerprinting algorithm along with some novel framing of the problem. There are several other older works in this area that also give useful insights that would have improved the algorithm in this paper.

- Figure 1 clearly indicates the structure of an audio fingerprinting model with 2 convolution layers and a max-pooling layer. The following paragraphs show how and why the authors chose to use each layer and what is obtained at the output layer. This gives a general idea of how this type of neural network is used to deal with data.

- In the experiment section, the authors could provide more background information on the dataset used, for example, the number of songs in the dataset and a brief introduction of the different features.

- Since the paper did not go into detail about how features are extracted in an audio-fingerprinting system, the details are listed above in "Feature Extraction Process in Audio-Fingerprinting System".

- For the Shazam algorithm, the authors should explore further when the system will detect a copyright issue. For example, should a copyright issue be raised if only 5 seconds of melody or lyrics are similar to other music?

- In addition to audio files, can this system detect copyright attacks on mixed data, for example, audio files embedded in text? It would be interesting to see if it can. Nowadays, a lot of data comes in mixed form (e.g., text+video, audio+text), so a system that detects adversarial attacks on copyright detection systems for mixed data would be a useful development.

- When introducing the types of copyright detection systems, the explanation of fingerprinting algorithms for audio and images lacks clarity. Besides a brief, one-line explanation, no justification was provided for why audio fingerprinting algorithms are better than video fingerprinting algorithms. Moreover, a discussion of image fingerprinting algorithms was missing. Hence, it would be beneficial to add these two sections to enhance the readers' understanding of this summary.

- The summary says: "Fingerprinting algorithms work by extracting the features of a source file as a hash", but more information should have been included so that readers could understand how feature extraction works in this case.

- It would be better if explanations of all algorithms and terminology were included, perhaps by pointing to a source that contains such information in order to keep this summary short and clear.

- The fingerprinting algorithm in this article is the only algorithm discussed in the paper. The authors should discuss the evolution of the algorithm and add some comparisons with other algorithms that currently exist.

== References ==

Group, P., Cano, P., Group, M., Group, E., Batlle, E., Ton Kalker Philips Research Laboratories Eindhoven, . . . Authors: Pedro Cano Music Technology Group. (2005, November 01). A Review of Audio Fingerprinting. Retrieved November 13, 2020, from https://dl.acm.org/doi/10.1007/s11265-005-4151-3

Hamming distance. (2020, November 1). In ''Wikipedia''. https://en.wikipedia.org/wiki/Hamming_distance

Jovanovic. (2015, February 2). ''How does Shazam work? Music Recognition Algorithms, Fingerprinting, and Processing''. Toptal Engineering Blog. https://www.toptal.com/algorithms/shazam-it-music-processing-fingerprinting-and-recognition

Saadatpanah, P., Shafahi, A., & Goldstein, T. (2019, June 17). ''Adversarial attacks on copyright detection systems''. Retrieved November 13, 2020, from https://arxiv.org/abs/1906.07153.

Saviaga, C. and Toxtli, C. ''Deepiracy: Video piracy detection system by using longest common subsequence and deep learning'', 2018. https://medium.com/hciwvu/piracy-detection-using-longestcommon-subsequence-and-neuralnetworks-a6f689a541a6

Wang, A. et al. ''An industrial strength audio search algorithm''. In Ismir, volume 2003, pp. 7–13. Washington, DC, 2003.

Pingali, K. (2011). Locality of Reference and Parallel Processing. Encyclopedia of Parallel Computing, 21. doi:https://doi.org/10.1007/978-0-387-09766-4_206

Latest revision as of 15:03, 7 December 2020

Presented by

Luwen Chang, Qingyang Yu, Tao Kong, Tianrong Sun

Introduction

The copyright detection system is one of the most commonly used machine learning systems. Important real-world applications include tools such as Google Jigsaw, which can identify and remove video promoting terrorism, or applications like YouTube, where a machine learning system is used to flag content that infringes copyrights. Failure to do so will result in legal consequences. However, the public's adversarial attacks have not been widely addressed and remain largely unexplored. Adversarial attacks are instances where people intentionally design inputs to cause misclassification in the model.

Copyright detection systems are vulnerable to attacks for three reasons:

1., Unlike physical-world attacks where adversarial samples need to survive under different conditions like resolutions and viewing angles, any digital files can be uploaded directly to the web without going through a camera or microphone.

2. The detection system is an open-set problem, which means that the uploaded files may not correspond to an existing class. This case prevents users from uploading unprotected audio/video, whereas most of the uploaded files nowadays are not protected.

3. The detection system needs to handle a vast majority of content with different labels but similar features. For example, in the ImageNet classification task, the system is easily attacked when there are two cats/dogs/birds with high similarities but different classes.

The goal of this paper is to raise awareness of the security threats faced by copyright detection systems. In this paper, different types of copyright detection systems will be introduced. For example, a widely used detection model from Shazam, a popular application used for recognizing music, will be discussed in the paper. As a proof-of-concept, the paper generates audio fingerprints using convolutional neural networks and formulates the adversarial loss function using standard gradient methods. An example of remixing music is given to show how adversarial examples can be created. Then, the adversarial attacks are applied to the industrial systems like AudioTag and YouTube Content ID to evaluate the systems' effectiveness.

Types of copyright detection systems

Fingerprinting algorithms work by extracting the features of a source file as a hash and then utilizing a matching algorithm to compare that to the materials protected by copyright in the database. If enough matches are found between the source and existing data, then the two samples are considered identical. Thus, the copyright detection system is able to reject the copyright declaration of the source. Most audio, image, and video fingerprinting algorithms work by training a neural network to output features or extracting hand-crafted features.

In terms of video fingerprinting, a useful algorithm is to detect the entering/leaving time of the objects in the video (Saviaga & Toxtli, 2018). The final hash consists of the entering/leaving of different objects and a unique relationship of the objects. However, most of these video fingerprinting algorithms only train their neural networks by using simple distortions such as adding noise or flipping the video rather than adversarial perturbations. This leads to algorithms that are strong against pre-defined distortions, but not adversarial attacks.

Moreover, some plagiarism detection systems also depend on neural networks to generate a fingerprint of the input document. Though using deep feature representations as a fingerprint is efficient in detecting plagiarism, it still might be weak to adversarial attacks.

Audio fingerprinting may perform better than the algorithms above. This is because the hash is usually generated by extracting hand-crafted features rather than training a neural network. That being said, it still is easy to attack.

Case study: evading audio fingerprinting

Audio Fingerprinting Model

The audio fingerprinting model plays an important role in copyright detection. It is useful for quickly locating or finding similar samples inside an audio database. Shazam is a popular music recognition application, which uses one of the most well-known fingerprinting models. Because of the three properties: temporal locality, translation invariance, and robustness, Shazam's algorithm is treated as a good fingerprinting algorithm. It shows strong robustness even in presence of noise by using local maximum in spectrogram to form hashes. Spectrograms are two-dimensional representations of audio frequency spectra over time. An example is shown below.

Interpreting the fingerprint extractor as a CNN

The intention of this section is to build a differentiable neural network whose function resembles that of an audio fingerprinting algorithm, which is well-known for its ability to identify the meta-data, i.e. song names, artists, and albums, while independent of an audio format (Group et al., 2005). The generic neural network model will then be used as an example of black-box attacks on many popular real-world systems, in this case, YouTube and audio tag.

The generic neural network model consists of two convolutional layers and a max-pooling layer, which is used for dimension reduction. This is depicted in the figure below. As mentioned above, a good fingerprinting algorithm has the properties of temporal locality and translation invariance, and a convolutional neural network shares them. Here, temporal locality means that each fingerprint hash is computed from audio samples near one point in time, so distant events do not affect it. This is distinct from the computer-architecture sense of the term, where a program accesses the same memory location within a small time frame, in contrast to spatial locality, where the program accesses nearby memory locations within a small time frame (Pingali, 2011). The purpose of this network is to generate audio fingerprints, that is, features that uniquely identify a signal regardless of the starting and ending times of the input.

When an audio sample enters the neural network, it is first transformed by the initial network layer, which can be described as a normalized Hann function. The form of the function is shown below, with N being the width of the kernel.

$$ f_{1}(n)=\frac {\sin^2(\frac{\pi n} {N})} {\sum_{i=0}^N \sin^2(\frac{\pi i}{N})} $$

The normalized Hann function smooths the adversarial perturbation of the input audio signal, which removes discontinuities and the poor spectral properties they cause. This transformation enhances the efficiency of the black-box attacks that are implemented later.
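As a rough illustration of this first layer, the sketch below builds the kernel from the formula above and applies it as a one-dimensional convolution. The function names `normalized_hann` and `smooth` are hypothetical, and dropping the partially overlapping edge positions is an assumption made for brevity, not something the paper specifies.

```python
import math

def normalized_hann(N):
    """Coefficients f1(n) for n = 0..N, scaled so they sum to 1."""
    raw = [math.sin(math.pi * n / N) ** 2 for n in range(N + 1)]
    total = sum(raw)
    return [value / total for value in raw]

def smooth(signal, kernel):
    """Slide the kernel over the signal, keeping only fully overlapping positions."""
    width = len(kernel)
    return [
        sum(signal[start + j] * kernel[j] for j in range(width))
        for start in range(len(signal) - width + 1)
    ]

kernel = normalized_hann(8)
smoothed = smooth([1.0] * 20, kernel)  # a constant signal stays constant
```

Because the coefficients sum to one, the layer leaves the overall level of the signal unchanged while damping abrupt sample-to-sample jumps.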

The next convolutional layer applies a short-time Fourier transform (STFT) to the input signal, computing the spectrogram of the waveform and converting the input into a feature representation. Once the input signal enters this network layer, it is transformed by the convolutional function below.

$$f_{2}(k,n)=e^{-i 2 \pi k n / N} $$ where k [math]\displaystyle{ {\in} }[/math] 0,1,...,N-1 (output channel index) and n [math]\displaystyle{ {\in} }[/math] 0,1,...,N-1 (index of filter coefficient)
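The filter bank above can be sketched in a few lines. This is a minimal illustration, not the authors' implementation: the names `dft_filters` and `spectrogram`, the `hop` parameter, and taking the magnitude of each filter response are all assumptions introduced here.

```python
import cmath
import math

def dft_filters(N):
    """filters[k][n] = exp(-i*2*pi*k*n/N), one filter per output channel k."""
    return [
        [cmath.exp(-2j * math.pi * k * n / N) for n in range(N)]
        for k in range(N)
    ]

def spectrogram(signal, N, hop):
    """Magnitude of each filter response on frames of length N taken every hop samples."""
    filters = dft_filters(N)
    starts = range(0, len(signal) - N + 1, hop)
    return [
        [abs(sum(f[n] * signal[s + n] for n in range(N))) for f in filters]
        for s in starts
    ]

# A pure tone with 3 cycles per 16 samples concentrates its energy in channel k = 3.
tone = [math.cos(2 * math.pi * 3 * t / 16) for t in range(64)]
phi = spectrogram(tone, N=16, hop=8)
```

Each row of `phi` is one time frame and each column one frequency channel, which is the two-dimensional layout the later pooling layer operates on.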

The output of this layer is denoted φ(x) (x being the input signal), a feature representation of the audio signal sample. However, this representation is vulnerable to noise and perturbation, and it is difficult to store and inspect. Therefore, a max-pooling layer is applied to φ(x): the network computes local maxima, which makes the representation robust to changes in the position of a feature. This network layer outputs a binary fingerprint ψ(x) (x being the input signal) that is later used to search for a signal against a database of previously processed signals.
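One way to picture the step from φ(x) to ψ(x) is to mark every spectrogram cell that equals the maximum of its neighborhood. The sketch below does this with a square window. The names `max_pool` and `fingerprint`, the `radius` parameter, and the rule that zero-valued cells never count as peaks are assumptions made for illustration.

```python
def max_pool(phi, radius):
    """Maximum of phi over a (2*radius+1)-wide square window around each cell."""
    rows, cols = len(phi), len(phi[0])
    return [
        [
            max(
                phi[a][b]
                for a in range(max(0, t - radius), min(rows, t + radius + 1))
                for b in range(max(0, f - radius), min(cols, f + radius + 1))
            )
            for f in range(cols)
        ]
        for t in range(rows)
    ]

def fingerprint(phi, radius):
    """Binary map psi: 1 where a positive cell equals its neighborhood maximum."""
    pooled = max_pool(phi, radius)
    return [
        [1 if value > 0 and value == peak else 0 for value, peak in zip(row, pooled_row)]
        for row, pooled_row in zip(phi, pooled)
    ]

phi = [
    [0, 1, 0, 0],
    [1, 5, 1, 0],
    [0, 1, 0, 3],
    [0, 0, 0, 0],
]
psi = fingerprint(phi, radius=1)
```

Only the two local maxima (the cells holding 5 and 3) survive in `psi`, so small changes elsewhere in the spectrogram leave the fingerprint untouched.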

Formulating the adversarial loss function

In the previous section, the CNN generates fingerprints from the local maxima of the spectrogram, but no loss has yet been defined to quantify how similar two fingerprints are. Once such a loss is defined, standard gradient methods can be used to find a perturbation [math]\displaystyle{ {\delta} }[/math] that, when added to a signal, tricks the copyright detection system. A bound is also set to make sure the perturbed signal stays close to the original audio signal. $$\text{bound:}\ ||\delta||_p\le\epsilon$$

where [math]\displaystyle{ {||\delta||_p} }[/math] is the [math]\displaystyle{ {l_p} }[/math]-norm of the perturbation and [math]\displaystyle{ {\epsilon} }[/math] is the bound on the difference between the original file and the adversarial example.

To compare how similar two binary fingerprints are, the Hamming distance is employed. The Hamming distance between two equal-length strings is the number of positions at which they differ (Hamming distance, 2020). For example, the Hamming distance between 101100 and 100110 is 2.
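The example above can be checked with a few lines of code. The helper name `hamming` is hypothetical; it works on any two equal-length sequences, including bit strings.

```python
def hamming(a, b):
    """Number of positions at which two equal-length sequences differ."""
    if len(a) != len(b):
        raise ValueError("sequences must have the same length")
    return sum(x != y for x, y in zip(a, b))

distance = hamming("101100", "100110")  # positions 3 and 5 differ
```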

Let [math]\displaystyle{ {\psi(x)} }[/math] and [math]\displaystyle{ {\psi(y)} }[/math] be two binary fingerprints output by the model. The number of peaks shared by [math]\displaystyle{ {x} }[/math] and [math]\displaystyle{ {y} }[/math] can be found through [math]\displaystyle{ {|\psi(x)\cdot\psi(y)|} }[/math]. To obtain a differentiable loss function, the following is used:

$$J(x,y)=|\phi(x)\cdot\psi(x)\cdot\psi(y)|$$
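To make the two quantities concrete, the sketch below evaluates them on flattened toy fingerprints. It reads the products as element-wise and |·| as the sum of absolute values, which is an interpretation assumed here; the names `shared_peaks` and `whitebox_loss` are hypothetical.

```python
def shared_peaks(psi_x, psi_y):
    """Count positions where both binary fingerprints have a peak."""
    return sum(a * b for a, b in zip(psi_x, psi_y))

def whitebox_loss(phi_x, psi_x, psi_y):
    """Sum of spectrogram magnitudes of x at the peaks shared with y."""
    return sum(abs(v * a * b) for v, a, b in zip(phi_x, psi_x, psi_y))

phi_x = [0.1, 2.0, 0.5, 3.0, 0.2]  # spectrogram values of x (toy data)
psi_x = [0, 1, 0, 1, 0]            # peaks of x
psi_y = [0, 1, 1, 0, 0]            # peaks of y
count = shared_peaks(psi_x, psi_y)
loss = whitebox_loss(phi_x, psi_x, psi_y)
```

Unlike the peak count, the loss depends on the real-valued φ(x), so lowering the spectrogram magnitude at a shared peak lowers the loss smoothly.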

This is effective for white-box attacks, where the fingerprinting system is known. However, the loss can be easily minimized by moving the peaks by just one pixel, and such a perturbation would not transfer reliably to black-box industrial systems. To make the attack more transferable, a new loss function that moves the local maxima of the spectrogram further is proposed. The idea is to move the locations of the peaks in [math]\displaystyle{ {\psi(x)} }[/math] outside the neighborhood of the peaks of [math]\displaystyle{ {\psi(y)} }[/math]. In order to implement the model more efficiently, two max-pooling layers are used. One of the layers has a bigger width [math]\displaystyle{ {w_1} }[/math] while the other one has a smaller width [math]\displaystyle{ {w_2} }[/math]. For any location, if the output of [math]\displaystyle{ {w_1} }[/math] pooling is strictly greater than the output of [math]\displaystyle{ {w_2} }[/math] pooling, then it can be concluded that no peak is in that location with radius [math]\displaystyle{ {w_2} }[/math].

The loss function is as follows:

$$J(x,y) = \sum_i\bigg(\text{ReLU}\bigg(c-\bigg(\underset{|j| \leq w_1}{\max}\phi(i+j;x)-\underset{|j| \leq w_2}{\max}\phi(i+j;x)\bigg)\bigg)\cdot\psi(i;y)\bigg)$$ The equation above penalizes the peaks of [math]\displaystyle{ {x} }[/math] that lie within radius [math]\displaystyle{ {w_2} }[/math] of the peaks of [math]\displaystyle{ {y} }[/math]. [math]\displaystyle{ {ReLU} }[/math] is used as the activation function, and [math]\displaystyle{ {c} }[/math] acts as a margin: the term for a peak of [math]\displaystyle{ {y} }[/math] vanishes only when the outputs of the two max-pooling layers differ by at least [math]\displaystyle{ {c} }[/math].
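The behaviour of this loss is easiest to see in one dimension. The sketch below is a simplified illustration under that assumption (the paper works on a two-dimensional spectrogram), it uses the exact maximum rather than a smoothed one, and the names `window_max` and `peak_loss` are hypothetical.

```python
def window_max(phi, i, w):
    """Maximum of phi over indices i-w..i+w, truncated at the array edges."""
    return max(phi[max(0, i - w):min(len(phi), i + w + 1)])

def relu(v):
    return max(0.0, v)

def peak_loss(phi_x, psi_y, w1, w2, c):
    """Sum over peaks of y of ReLU(c - (wide max - narrow max)) on phi_x."""
    return sum(
        relu(c - (window_max(phi_x, i, w1) - window_max(phi_x, i, w2))) * psi_y[i]
        for i in range(len(phi_x))
    )

psi_y = [0, 0, 0, 1, 0, 0, 0, 0, 0]                 # y has a peak at index 3
same_place = [0, 0, 0, 5.0, 0, 0, 0, 0, 0]          # x peak on top of y's peak
one_step = [0, 0, 0, 0, 5.0, 0, 0, 0, 0]            # x peak moved by one position
far_away = [0, 0, 0, 0, 0, 0, 5.0, 0, 0]            # x peak moved outside radius w2
losses = [peak_loss(p, psi_y, w1=3, w2=1, c=1.0) for p in (same_place, one_step, far_away)]
```

With these toy values, moving the peak by a single position leaves the loss unchanged, while moving it beyond radius w2 drives the loss to zero, which is the larger displacement the transferable attack aims for.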

Lastly, instead of the maximum operator, a smoothed max function is used:

$$S_\alpha(x_1,x_2,...,x_n) = \frac{\sum_{i=1}^{n}x_ie^{\alpha x_i}}{\sum_{i=1}^{n}e^{\alpha x_i}}$$

where [math]\displaystyle{ {\alpha} }[/math] is a smoothing hyperparameter. As [math]\displaystyle{ {\alpha} }[/math] approaches positive infinity, [math]\displaystyle{ {S_\alpha} }[/math] approaches the actual max function.
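A small sketch of the smoothed max follows. The name `smooth_max` is hypothetical, and shifting the exponents by the largest input is a numerical-stability choice assumed here for non-negative α; it does not change the value of the ratio.

```python
import math

def smooth_max(xs, alpha):
    """Weighted average of xs with weights exp(alpha * x); assumes alpha >= 0."""
    top = max(xs)
    # Subtracting the largest value keeps every exponent <= 0 and avoids overflow.
    weights = [math.exp(alpha * (x - top)) for x in xs]
    return sum(x * w for x, w in zip(xs, weights)) / sum(weights)

values = [1.0, 2.0, 3.0]
mean_like = smooth_max(values, 0.0)   # alpha = 0 gives the plain average
max_like = smooth_max(values, 50.0)   # large alpha is very close to max(values)
```

At α = 0 all weights are equal and the function returns the mean; increasing α shifts the weight toward the largest entry, so the output moves from the mean toward the true maximum while staying differentiable.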

To summarize, the optimization problem can be formulated as the following:

$$ \underset{\delta}{\min}J(x+\delta,x)\\ s.t.||\delta||_{\infty}\le\epsilon $$ where [math]\displaystyle{ {x} }[/math] is the input signal and [math]\displaystyle{ {J} }[/math] is the loss function with the smoothed max function. Note that [math]\displaystyle{ ||\delta||_\infty = \max_k |\delta_k| }[/math] (the largest absolute component of [math]\displaystyle{ \delta }[/math]), which makes the constraint relatively easy to satisfy: at each iteration, every component of [math]\displaystyle{ \delta }[/math] is clipped so that its absolute value is at most [math]\displaystyle{ \epsilon }[/math]. However, since [math]\displaystyle{ ||\delta||_\infty \leq \dots \leq ||\delta||_2 \leq ||\delta||_1 }[/math], this choice of norm gives the loosest bound for a fixed [math]\displaystyle{ \epsilon }[/math] and therefore admits a larger set of perturbations [math]\displaystyle{ \delta }[/math] than other choices of norm would permit.
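The clipping step and the norm ordering can be sketched as follows. The update rule shown, a signed-gradient step followed by projection, is one common choice and is an assumption here since the paper only refers to standard gradient methods; the names `project_linf`, `signed_gradient_step`, and `lp_norm` are hypothetical, and the gradient is supplied by hand rather than computed from the loss.

```python
def project_linf(delta, eps):
    """Clip every component of delta into [-eps, eps]."""
    return [max(-eps, min(eps, d)) for d in delta]

def sign(v):
    return (v > 0) - (v < 0)

def signed_gradient_step(delta, grad, step, eps):
    """Move against the gradient sign, then project back onto the l-infinity ball."""
    moved = [d - step * sign(g) for d, g in zip(delta, grad)]
    return project_linf(moved, eps)

def lp_norm(delta, p):
    """l_p norm of delta; p may be float('inf')."""
    if p == float("inf"):
        return max(abs(d) for d in delta)
    return sum(abs(d) ** p for d in delta) ** (1.0 / p)

delta = [0.0, 0.0]
for _ in range(3):
    # Toy gradient held fixed for illustration; a real attack recomputes it each step.
    delta = signed_gradient_step(delta, grad=[2.0, -3.0], step=0.05, eps=0.1)
```

After the third step the perturbation would leave the ball, so the projection holds each component at magnitude ε.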

Remix adversarial examples

Solving the optimization problem above yields an example that can fool the copyright detection system, but the perturbation may make it sound unnatural.

Instead, the perturbation can be made to sound more natural by making it resemble a different audio signal.

This is done with a modified loss function that swaps the order of the two max-pooling layers in the smooth maximum components. The resulting remix loss function makes the two signals x and y look as similar as possible.

$$J_{remix}(x,y) = \sum_i\bigg(\text{ReLU}\bigg(c-\bigg(\underset{|j| \leq w_2}{\max}\phi(i+j;x)-\underset{|j| \leq w_1}{\max}\phi(i+j;x)\bigg)\bigg)\cdot\psi(i;y)\bigg)$$

Adding this new loss function to the original one defines a new optimization problem.

$$ \underset{\delta}{\min}J(x+\delta,x) + \lambda J_{remix}(x+\delta,y)\\ s.t.||\delta||_{p}\le\epsilon $$

where [math]\displaystyle{ {\lambda} }[/math] is a scalar parameter that controls the similarity of [math]\displaystyle{ {x+\delta} }[/math] and [math]\displaystyle{ {y} }[/math].

This optimization problem generates an adversarial example from the selected source while also forcing the adversarial example to be similar to another signal. The result is called a remix adversarial example because it draws on both its source signal and another signal.
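The combined objective can be illustrated with a one-dimensional toy version of the two losses. This is a sketch under simplifying assumptions: exact maxima instead of the smoothed max, a one-dimensional φ, hand-picked spectrogram values, and hypothetical names (`evade_loss`, `remix_loss`, `remix_objective`).

```python
def window_max(phi, i, w):
    """Maximum of phi over indices i-w..i+w, truncated at the array edges."""
    return max(phi[max(0, i - w):min(len(phi), i + w + 1)])

def relu(v):
    return max(0.0, v)

def evade_loss(phi, psi_ref, w1, w2, c):
    """J: penalize peaks of phi within radius w2 of the peaks in psi_ref."""
    return sum(
        relu(c - (window_max(phi, i, w1) - window_max(phi, i, w2))) * psi_ref[i]
        for i in range(len(phi))
    )

def remix_loss(phi, psi_target, w1, w2, c):
    """J_remix: same form with the two pooling widths swapped."""
    return sum(
        relu(c - (window_max(phi, i, w2) - window_max(phi, i, w1))) * psi_target[i]
        for i in range(len(phi))
    )

def remix_objective(phi_adv, psi_x, psi_y, w1, w2, c, lam):
    """J(x + delta, x) + lambda * J_remix(x + delta, y), evaluated on phi(x + delta)."""
    return evade_loss(phi_adv, psi_x, w1, w2, c) + lam * remix_loss(phi_adv, psi_y, w1, w2, c)

psi_x = [0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0]    # source x has a peak at index 2
psi_y = [0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0]    # target y has a peak at index 8
original = [0, 0, 5.0, 0, 0, 0, 0, 0, 0, 0, 0, 0]
remixed = [0, 0, 0, 0, 5.0, 0, 0, 0, 5.0, 0, 0, 0]
scores = [remix_objective(p, psi_x, psi_y, w1=3, w2=1, c=1.0, lam=1.0) for p in (original, remixed)]
```

The remixed spectrogram scores lower than the original: its peak near index 2 has moved out of the small window around the source peak, and it now has a peak where y does. Because the wide window contains the narrow one, each term of the remix loss is at least c, so the loss is smallest when the largest value near a peak of y sits inside the narrow window.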

Evaluating transfer attacks on industrial systems

The effectiveness of default and remix adversarial examples is tested through white-box attacks on the proposed model and black-box attacks on two real-world audio copyright detection systems: AudioTag and the YouTube “Content ID” system. The [math]\displaystyle{ {l_{\infty}} }[/math] norm and [math]\displaystyle{ {l_{2}} }[/math] norm of the perturbations are used as two measures of modification. Both are calculated after normalizing the signals so that the samples lie between 0 and 1.

Before evaluating black-box attacks against real-world systems, white-box attacks against the proposed model are used to provide a baseline for the adversarial examples’ effectiveness. The loss function [math]\displaystyle{ {J(x,y)=|\phi(x)\cdot\psi(x)\cdot\psi(y)|} }[/math] is used to generate the white-box attacks. By optimizing this loss, the fingerprints of the audio can be changed or removed using noise that is unnoticeable.

In the black-box attacks, AudioTag’s claim of being robust to input distortions was first verified by applying random perturbations to the audio recordings. However, the AudioTag system is found to be relatively sensitive to the attacks: it detects songs with a benign signal but fails to detect both default and remix adversarial examples. Based on these experimental observations, the architecture of the AudioTag fingerprint model is guessed to be similar to that of the surrogate CNN model.

Similar to AudioTag, the YouTube “Content ID” system successfully identified benign songs but failed to detect adversarial examples. However, fooling the YouTube Content ID system requires a larger value of the parameter [math]\displaystyle{ {\epsilon} }[/math], which makes the perturbations obvious, although the songs can still be recognized by humans. This suggests that the YouTube Content ID system has a more robust fingerprint model.

Conclusion

In this paper, we find that many industrial copyright detection systems used on popular video and music websites, such as YouTube and AudioTag, are significantly vulnerable to adversarial attacks established in the existing literature. By building a simple music identification system resembling that of Shazam using a neural network, and attacking it with well-known gradient methods, this paper demonstrates the lack of robustness of current online detectors.

Although the method used in the paper isn't optimal, the attacks can be strengthened using sharper technical tools. Moreover, the attacks transferred even though they were built on rudimentary surrogate models that rely on hand-crafted features, while commercial systems likely rely on trainable neural nets.

Our goal here is not to facilitate copyright evasion. Rather, the intention of this paper is to raise awareness of the vulnerability of current online systems to adversarial attacks and to emphasize the significance of copyright detection systems. A number of mitigating approaches already exist, such as adversarial training, but they need to be further developed and examined in order to robustly protect against the threat of adversarial copyright attacks.

Appendix

Feature Extraction Process in Audio-Fingerprinting System

1. Preprocessing. In this step, the audio signal is first digitized and quantized. Then, it is converted to a mono signal by averaging the two channels if necessary. Finally, it is resampled if the sampling rate is different from the target rate.

2. Framing. Framing means dividing the audio signal into frames of equal length by a window function.

3. Transformation. This step is designed to transform the set of frames into a new set of features, in order to reduce the redundancy.

4. Feature Extraction. After transformation, final acoustic features are extracted from the time-frequency representation. The main purpose is to reduce the dimensionality and increase the robustness to distortions.

5. Post-processing. To capture the temporal variations of the audio signal, higher-order time derivatives are sometimes required.
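The first two steps of the list above can be sketched briefly. This is only an illustration: the names `to_mono` and `frame_signal` are hypothetical, the Hann window is one possible choice of window function, and resampling and the later steps are omitted.

```python
import math

def to_mono(left, right):
    """Average two channels sample by sample (preprocessing step)."""
    return [(l + r) / 2 for l, r in zip(left, right)]

def frame_signal(signal, frame_len, hop):
    """Cut the signal into equal-length frames and apply a Hann window (framing step)."""
    window = [
        0.5 - 0.5 * math.cos(2 * math.pi * n / (frame_len - 1))
        for n in range(frame_len)
    ]
    return [
        [signal[start + n] * window[n] for n in range(frame_len)]
        for start in range(0, len(signal) - frame_len + 1, hop)
    ]

mono = to_mono([1.0, 3.0, 5.0, 7.0, 9.0, 1.0, 3.0, 5.0, 7.0, 9.0],
               [3.0, 5.0, 7.0, 9.0, 1.0, 3.0, 5.0, 7.0, 9.0, 1.0])
frames = frame_signal(mono, frame_len=4, hop=2)
```

Each frame would then be passed to the transformation step (for example a Fourier transform) before features are extracted.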

Critiques

- The experiments in this paper appear to be a proof-of-concept rather than a serious evaluation of a model. One problem is that a norm is used to evaluate the perturbation. Unlike the norm in image domains, which can be visualized and easily understood, perturbations in the audio domain are more difficult to comprehend. A cognitive study or a user study might need to be conducted in order to understand this. A related question is whether random noise that is 2x or 3x bigger in terms of the norm makes a huge difference when listening to it: are these two perturbations both very obvious or both unnoticeable? In addition, it seems that a dataset is built but its statistics are missing. Finally, no baseline methods are compared against in this paper, and there is not even an ablation study. The proposed two methods (default and remix) seem to perform similarly.

- There could be an improvement in terms of how to find the threshold in general. The paper describes how to measure the similarity of two pieces of content but does not discuss what threshold should be set for this model. In fact, it is always a challenge to determine the boundary between "Copyright Issue" and "Not Copyright Issue", and this is important information that could be discussed in the paper.

- The fingerprinting technique used in this paper seems rather elementary, which is a downfall in this context because the focus of this paper is adversarial attacks on these methods. A recent 2019 work (https://arxiv.org/pdf/1907.12956.pdf) proposed a deep fingerprinting algorithm along with some novel framing of the problem. There are several other older works in this area that also give useful insights that would have improved the algorithm in this paper.

- Figure 1 clearly indicates the structure of an audio fingerprinting model with 2 convolution layers and a max-pooling layer. The following paragraphs show how and why the authors chose to use each layer and what is obtained at the output layer. This gives a general idea of how this type of neural network is used to deal with data.

- In the experiment section, authors could provide more background information on the dataset used. For example, the number of songs in the dataset and a brief introduction of different features.

- Since the paper didn't go into details of how features are extracted in an audio-fingerprinting system, the details are listed out above in "Feature Extraction Process in Audio-Fingerprinting System"

- For the Shazam algorithm, the authors should explore further when the system flags a copyright issue. For example, should a copyright issue be raised if only 5 seconds of melody or lyrics are similar to other music?

- In addition to Audio files, can this system detect copyright attacks on mixed data such as say, embedded audio files in the text? It will be interesting to see if it can do it. Nowadays, a lot of data is coming in mixed form, e.g. text+video, audio+text etc and therefore having a system to detect adversarial attacks on copyright detection systems for mixed data will be a useful development.

- When introducing the types of copyright detection systems, there is a lack of clarity in explaining fingerprinting algorithms for audio and images. Besides a brief, one-line explanation, justifications regarding why audio fingerprinting algorithms are better compared to video fingerprinting algorithms were not provided. Moreover, discussions regarding image fingerprinting algorithms were missing. Hence, it would be beneficial to add these two sections to enhance the readers' understanding of this summary.

- It says: "Fingerprinting algorithms work by extracting the features of a source file as a hash", but it seems like they should have included more information about it so that readers could understand how feature extraction works in this case.

- It would be better if explanations of all algorithms and terminology were included, or at least pointers to where such information can be found, to keep this summary short and clear.

- The fingerprinting algorithm in this article is the only algorithm discussed in the paper. The authors should discuss the evolution of the algorithm and add some comparisons with other algorithms that currently exist.

References

Cano, P., Batlle, E., Kalker, T., & Haitsma, J. (2005, November 01). A Review of Audio Fingerprinting. Retrieved November 13, 2020, from https://dl.acm.org/doi/10.1007/s11265-005-4151-3

Hamming distance. (2020, November 1). In Wikipedia. https://en.wikipedia.org/wiki/Hamming_distance

Jovanovic. (2015, February 2). How does Shazam work? Music Recognition Algorithms, Fingerprinting, and Processing. Toptal Engineering Blog. https://www.toptal.com/algorithms/shazam-it-music-processing-fingerprinting-and-recognition

Saadatpanah, P., Shafahi, A., & Goldstein, T. (2019, June 17). Adversarial attacks on copyright detection systems. Retrieved November 13, 2020, from https://arxiv.org/abs/1906.07153.

Saviaga, C. and Toxtli, C. Deepiracy: Video piracy detection system by using longest common subsequence and deep learning, 2018. https://medium.com/hciwvu/piracy-detection-using-longestcommon-subsequence-and-neuralnetworks-a6f689a541a6

Wang, A. et al. An industrial strength audio search algorithm. In Ismir, volume 2003, pp. 7–13. Washington, DC, 2003.

Pingali, K. (2011). Locality of Reference and Parallel Processing. Encyclopedia of Parallel Computing, 21. doi:https://doi.org/10.1007/978-0-387-09766-4_206