policy optimization with demonstrations: Difference between revisions


| (60 intermediate revisions by 24 users not shown) | |||

| Line 1: | Line 1: | ||

This is a summary of the paper titled: "Policy Optimization with Demonstrations", authored by Bingyi Kang, Zequn Jie, and Jiashi Feng. Available online at URL http://proceedings.mlr.press/v80/kang18a.html | |||

= Introduction = | = Introduction = | ||

Reinforcement learning (RL) methods have led to state-of-the-art results in a variety of applications. However, problems that require exploring novel policies to improve long-term performance still pose challenges, especially in environments where reward signals are sparse. There are currently two ways to address such exploration problems in RL: | |||

1) Guide the agent to explore states that have never been seen. | |||

2) Guide the agent to imitate a demonstration trajectory sampled from an expert policy to learn. | |||

When guiding the agent to imitate expert behavior, there are again two approaches: putting the demonstrations directly into the replay memory [1] [2] [3], or using the demonstration trajectories to pre-train the policy in a supervised manner [4]. However, neither approach takes full advantage of the demonstration data. Both treat the demonstration data identically to self-generated data, and therefore require a tremendous number of difficult-to-collect examples to learn effectively. To address this problem, the authors propose Policy Optimization from Demonstration (POfD), which takes full advantage of the demonstrations and does not require the expert policy to be optimal. To summarize, the authors realize this idea through the following techniques: | |||

1) A demonstration-guided exploration term, which measures the divergence between the current policy and the expert policy, is added to the policy optimization objective to encourage expert-like exploration. | |||

2) To learn better from demonstrations and to obtain an optimization-friendly lower bound, the proposed objective is defined on occupancy measures, as in [14]. | |||

3) Finally, they show that the objective can be optimized by optimizing the derived lower bound through generative adversarial training. | |||

The authors also evaluate POfD on MuJoCo [5] in sparse-reward environments. The experimental results show that POfD greatly outperforms several strong baselines and is even comparable to the policy gradient method trained in dense-reward environments. | |||

==Intuition== | ==Intuition== | ||

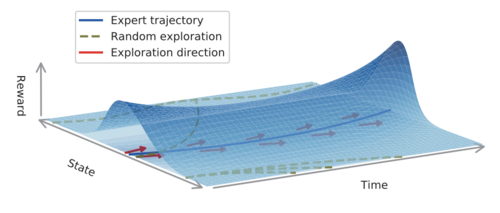

The agent should imitate the demonstrated behavior when rewards are sparse and then explore new states on its own after acquiring sufficient skills, which is a dynamic intrinsic reward mechanism that can be reshaped the native rewards in RL. | The agent should imitate the demonstrated behavior when rewards are sparse and then explore new states on its own after acquiring sufficient skills, which is a dynamic intrinsic reward mechanism that can be reshaped in terms of the native rewards in RL. At present, a widely used exploration strategy in reinforcement learning is epsilon-greedy, which simply takes a random action a small fraction of the time in order to try unexplored moves. This is naive and is one of the main reasons for the high sample complexity of RL. On the other hand, if there is an expert demonstrator who can guide exploration, the agent can make more guided and accurate exploratory moves. | ||

=Related Work = | =Related Work = | ||

There are some | There are some related works in overcoming exploration difficulties by learning from demonstration [6] and imitation learning in RL. | ||

For | For learning from demonstration (LfD), | ||

# Most LfD methods adopt value-based RL algorithms, such as DQfD | # Most LfD methods adopt value-based RL algorithms, such as DQfD (Deep Q-learning from Demonstrations) [2], which applies to discrete action spaces, and DDPGfD (Deep Deterministic Policy Gradient from Demonstrations) [3], which extends this idea to continuous action spaces. However, both under-utilize the demonstration data. | ||

#There are some methods based on policy iteration, which shapes the value function by using demonstration data. But they get the bad performance when demonstration data is imperfect. | # Some methods are based on policy iteration [7] [8] and shape the value function using demonstration data. However, they perform poorly when the demonstration data is imperfect. | ||

# A hybrid framework that learns the policy in which the probability of taking demonstrated actions is maximized is proposed, which considers | # A hybrid framework [9] that learns the policy in which the probability of taking demonstrated actions is maximized is proposed, which considers fewer demonstration data. | ||

# A reward reshaping mechanism that encourages taking actions close to the demonstrated ones is proposed. It is similar | # A reward reshaping mechanism [10] that encourages taking actions close to the demonstrated ones is proposed. It is similar to the method in this paper, but there exist some differences as it is defined as a potential function based on multi-variate Gaussian to model the distribution of state-actions. | ||

All of the above methods require a lot of perfect | All of the above methods require a lot of perfect demonstrations to get satisfactory performance, which is different from POfD in this paper. | ||

For imitation learning, | For imitation learning, | ||

# Inverse Reinforce Learning problems are solved by alternating between fitting the reward function and selecting the policy. But it cannot be extended to big-scale problems. | # Inverse Reinforcement Learning [11] problems are solved by alternating between fitting the reward function and selecting the policy [12] [13]. However, this approach does not scale to large problems. | ||

# Generative Adversarial Imitation Learning (GAIL) uses a discriminator to distinguish whether a state-action pair is from the expert or the learned policy and it can be applied into the high-dimensional continuous control problems. | # Generative Adversarial Imitation Learning (GAIL) [14] uses a discriminator to distinguish whether a state-action pair is from the expert or the learned policy, and it can be applied to high-dimensional continuous control problems. | ||

# An alternative imitation learning [26] is that an agent explores the environment without any expert supervision and distills this exploration data into goal-directed skills. These skills can then be used to imitate the visual demonstration provided by the expert. | |||

The above methods are effective for imitation learning, but they cannot leverage the valuable feedback given by the environment and usually perform poorly when the expert data is imperfect. This is where POfD differs. | |||

Another line of work trains an agent with a hybrid of imitation learning and reinforcement learning rewards [23, 24]. However, unlike this paper, those works offer only intuitive explanations and no theoretical support for their methods. | |||

=Background= | =Background= | ||

==Preliminaries== | ==Preliminaries== | ||

Markov Decision Process (MDP) is defined by a tuple <math> | Markov Decision Process (MDP) [15] is defined by a tuple <math>⟨\mathcal{S}, \mathcal{A}, \mathcal{P}, r, \gamma⟩ </math>, where <math>\mathcal{S}</math> is the state space, <math>\mathcal{A} </math> is the action space, <math>\mathcal{P}(s'|s,a)</math> is the transition distribution of taking action <math> a </math> at state <math>s </math>, <math> r(s,a) </math>is the reward function, and <math> \gamma </math> is the discount factor between 0 and 1. Policy <math> \pi(a|s) </math> is a mapping from state to action probabilities, the performance of <math> \pi </math> is usually evaluated by its expected discounted reward <math> \eta(\pi) </math>: | ||

\[\eta(\pi)=\mathbb{E}_{\pi}[r(s,a)]=\mathbb{E}_{(s_0,a_0,s_1,...)}[\sum_{t=0}^\infty\gamma^{t}r(s_t,a_t)] \] | \[\eta(\pi)=\mathbb{E}_{\pi}[r(s,a)]=\mathbb{E}_{(s_0,a_0,s_1,...)}[\sum_{t=0}^\infty\gamma^{t}r(s_t,a_t)] \] | ||

The value function is <math> V_{\pi}(s) =\mathbb{E}_{\pi}[r(·,·)|s_0=s] </math>, the action value function is <math> Q_{\pi}(s,a) =\mathbb{E}_{\pi}[r(·,·)|s_0=s,a_0=a] </math>, and the advantage function that reflects the expected additional reward after taking action a at state s is <math> A_{\pi}(s,a)=Q_{\pi}(s,a)-V_{\pi}(s)</math>. | The value function is <math> V_{\pi}(s) =\mathbb{E}_{\pi}[r(·,·)|s_0=s] </math>, the action value function is <math> Q_{\pi}(s,a) =\mathbb{E}_{\pi}[r(·,·)|s_0=s,a_0=a] </math>, and the advantage function that reflects the expected additional reward after taking action a at state s is <math> A_{\pi}(s,a)=Q_{\pi}(s,a)-V_{\pi}(s)</math>. | ||
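As a small illustration of the expected discounted reward defined above, the following sketch computes the discounted sum for one finite trajectory. The function name `discounted_return` and the toy reward sequence are illustrative assumptions, not part of the paper.

```python
from typing import Sequence


def discounted_return(rewards: Sequence[float], gamma: float) -> float:
    """Return sum_t gamma^t * r_t for one finite trajectory (hypothetical helper)."""
    total = 0.0
    # Accumulate from the last step backwards: G_t = r_t + gamma * G_{t+1}.
    for r in reversed(rewards):
        total = r + gamma * total
    return total


# A sparse-reward trajectory: only the final step is rewarded.
sparse_rewards = [0.0, 0.0, 0.0, 1.0]
print(discounted_return(sparse_rewards, gamma=0.5))  # 0.125
```

Averaging this quantity over many trajectories sampled from a policy gives a Monte Carlo estimate of its expected discounted reward.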

| Line 36: | Line 55: | ||

==Problem Definition== | ==Problem Definition== | ||

In this paper, the authors aim to develop a method that can boost exploration by leveraging effectively the demonstrations <math>D^E </math>from the expert policy <math> \pi_E </math> and maximize <math> \eta(\pi) </math> in the sparse-reward environment. The authors define the demonstrations <math>D^E=\{\tau_1,\tau_2,...,\tau_N\} </math>, where <math>\tau_i=\{(s_0^i,a_0^i),(s_1^i,a_1^i),...,(s_T^i,a_T^i)\} </math> is generated from the expert policy. In addition, there is an assumption on the quality of the expert policy: | Generally, RL tasks and environments do not provide a comprehensive reward and instead rely on sparse feedback indicating whether the goal is reached. | ||

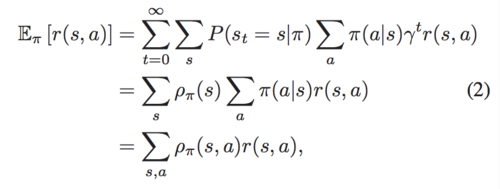

In this paper, the authors aim to develop a method that can boost exploration by leveraging effectively the demonstrations <math>D^E </math>from the expert policy <math> \pi_E </math> and maximize <math> \eta(\pi) </math> in the sparse-reward environment. The authors define the demonstrations <math>D^E=\{\tau_1,\tau_2,...,\tau_N\} </math>, where the i-th trajectory <math>\tau_i=\{(s_0^i,a_0^i),(s_1^i,a_1^i),...,(s_T^i,a_T^i)\} </math> is generated from the unknown expert policy <math>\pi_E </math>. In addition, there is an assumption on the quality of the expert policy: | |||

[[File:asp1.png|500px|center]] | [[File:asp1.png|500px|center]] | ||


Throughout the paper, <math>\pi_E </math> denotes the expert policy, which achieves a relatively good <math>\eta(\pi) </math>, and <math>\hat{\mathbb{E}}_D </math> denotes the empirical expectation estimated from the demonstrated trajectories <math>D^E </math>. The assumption above on the quality of the expert policy <math>\pi_E </math> is reasonable and necessary. | |||

Moreover, it is not necessary to ensure that the expert policy is advantageous over all the policies. This is because POfD can learn a better policy than the expert policy by exploring on its own in later learning stages. | |||

=Method= | =Method= | ||

==Policy Optimization with Demonstration (POfD)== | ==Policy Optimization with Demonstration (POfD)== | ||

[[File:ff1.png|500px|center]] | |||

This method optimizes the policy by forcing the policy to explore in the nearby region of the expert policy that is specified by several demonstrated trajectories <math>D^E </math> (as shown in Fig.1) in order to avoid causing slow convergence or failure when the environment feedback is sparse. In addition, the authors encourage the policy π to explore by "following" the demonstrations | [[File:ff1.png|thumb|500px|center |Figure 1: Demonstrations (the blue curve) enable POfD to explore in the high-reward regions (red arrows). In contrast, random explorations (olive green dashed curves) occur in sparse-reward environments.]] | ||

This method optimizes the policy by forcing the policy to explore in the nearby region of the expert policy that is specified by several demonstrated trajectories <math>D^E </math> (as shown in Fig.1) in order to avoid causing slow convergence or failure when the environment feedback is sparse. In addition, the authors encourage the policy π to explore by "following" the demonstrations <math>D^E </math>. Thus, a new learning objective is given: | |||

\[ \mathcal{L}(\pi_{\theta})=-\eta(\pi_{\theta})+\lambda_{1}D_{JS}(\pi_{\theta},\pi_{E})\] | \[ \mathcal{L}(\pi_{\theta})=-\eta(\pi_{\theta})+\lambda_{1}D_{JS}(\pi_{\theta},\pi_{E})\] | ||

where <math>D_{JS}(\pi_{\theta},\pi_{E})</math> is the Jensen-Shannon divergence between the current policy <math>\pi_{\theta}</math> and the expert policy <math>\pi_{E}</math>, <math>\lambda_1</math> is a trade-off parameter, and <math>\theta</math> is the policy parameter. According to Lemma 1, the authors use <math>D_{JS}(\rho_{\theta},\rho_{E})</math>, the divergence between the corresponding occupancy measures, instead of <math>D_{JS}(\pi_{\theta},\pi_{E})</math>, because it is easier to optimize through adversarial training on demonstrations. The learning objective is: | where <math>D_{JS}(\pi_{\theta},\pi_{E})</math> is the Jensen-Shannon divergence between the current policy <math>\pi_{\theta}</math> and the expert policy <math>\pi_{E}</math>, <math>\lambda_1</math> is a trade-off parameter, and <math>\theta</math> is the policy parameter. According to Lemma 1, the authors use <math>D_{JS}(\rho_{\theta},\rho_{E})</math>, the divergence between the corresponding occupancy measures, instead of <math>D_{JS}(\pi_{\theta},\pi_{E})</math>, because it is easier to optimize through adversarial training on demonstrations. The learning objective is: | ||
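To make the divergence term concrete, here is a minimal sketch of the Jensen-Shannon divergence between two discrete distributions, which could stand in for normalized occupancy measures over a small state-action space. The helper names `kl_divergence` and `js_divergence` and the toy vectors are assumptions for illustration; POfD itself does not compute this quantity in closed form but optimizes a lower bound adversarially.

```python
import numpy as np


def kl_divergence(p: np.ndarray, q: np.ndarray) -> float:
    """KL(p || q) for discrete distributions; terms with p = 0 contribute 0."""
    mask = p > 0
    return float(np.sum(p[mask] * np.log(p[mask] / q[mask])))


def js_divergence(p, q) -> float:
    """Jensen-Shannon divergence: average KL of p and q to their mixture."""
    p = np.asarray(p, dtype=float)
    q = np.asarray(q, dtype=float)
    m = 0.5 * (p + q)  # the mixture is positive wherever p or q is positive
    return 0.5 * kl_divergence(p, m) + 0.5 * kl_divergence(q, m)


# Toy distributions over four state-action pairs (hypothetical values).
rho_theta = [0.4, 0.3, 0.2, 0.1]
rho_expert = [0.1, 0.2, 0.3, 0.4]
print(js_divergence(rho_theta, rho_expert))
```

The divergence is zero when the two distributions coincide and reaches its maximum, log 2, when their supports are disjoint.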

| Line 50: | Line 78: | ||

==Benefits of Exploration with Demonstrations== | ==Benefits of Exploration with Demonstrations== | ||

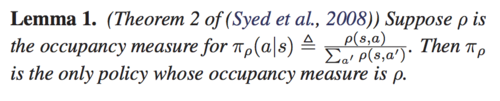

The authors introduce the benefits of POfD. Firstly, we consider the expression of expected return in policy gradient methods. | The authors introduce the benefits of POfD. Firstly, we consider the expression of expected return in policy gradient methods [16]. | ||

\[ \eta(\pi)=\eta(\pi_{old})+\mathbb{E}_{\tau\sim\pi}[\sum_{t=0}^\infty\gamma^{t}A_{\pi_{old}}( | \[ \eta(\pi)=\eta(\pi_{old})+\mathbb{E}_{\tau\sim\pi}[\sum_{t=0}^\infty\gamma^{t}A_{\pi_{old}}(s_t,a_t)]\] | ||

<math>\eta(\pi)</math>is the advantage over the policy | The second term is the expected advantage of <math>\pi</math> over the policy <math>\pi_{old}</math> from the previous iteration, so the expression can be rewritten as | ||

\[ \eta(\pi)=\eta(\pi_{old})+\sum_{s}\rho_{\pi}(s)\sum_{a}\pi(a|s)A_{\pi_{old}}(s,a)\] | \[ \eta(\pi)=\eta(\pi_{old})+\sum_{s}\rho_{\pi}(s)\sum_{a}\pi(a|s)A_{\pi_{old}}(s,a)\] | ||

The local approximation to <math>\eta(\pi)</math> up to first order is usually used as the surrogate learning objective to be optimized by policy gradient methods, because the dependency of <math>\rho_{\pi}(s)</math> on <math> \pi </math> is complex: | The local approximation to <math>\eta(\pi)</math> up to first order is usually used as the surrogate learning objective to be optimized by policy gradient methods, because the dependency of <math>\rho_{\pi}(s)</math> on <math> \pi </math> is complex: | ||

\[ J_{\pi_{old}}(\pi)=\eta(\pi_{old})+\sum_{s}\rho_{\pi_{old}}(s)\sum_{a}\pi(a|s)A_{\pi_{old}}(s,a)\] | \[ J_{\pi_{old}}(\pi)=\eta(\pi_{old})+\sum_{s}\rho_{\pi_{old}}(s)\sum_{a}\pi(a|s)A_{\pi_{old}}(s,a)\] | ||
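The surrogate objective above can be evaluated directly in a small tabular setting. The sketch below is only an illustration under assumed toy values: the function name `surrogate_objective`, the two-state, two-action tables, and the state weights are all hypothetical.

```python
import numpy as np


def surrogate_objective(eta_old, rho_old, pi_new, adv_old) -> float:
    """J_old(pi) = eta(pi_old) + sum_s rho_old(s) * sum_a pi(a|s) * A_old(s, a)."""
    rho_old = np.asarray(rho_old, dtype=float)  # shape (S,)
    pi_new = np.asarray(pi_new, dtype=float)    # shape (S, A)
    adv_old = np.asarray(adv_old, dtype=float)  # shape (S, A)
    return float(eta_old + np.sum(rho_old[:, None] * pi_new * adv_old))


# Toy tables (assumed values): action values under the old policy.
q_old = np.array([[1.0, 0.0], [0.0, 2.0]])
pi_old = np.array([[0.5, 0.5], [0.5, 0.5]])
v_old = np.sum(pi_old * q_old, axis=1, keepdims=True)  # V(s) = sum_a pi_old(a|s) Q(s, a)
adv_old = q_old - v_old                                 # A(s, a) = Q(s, a) - V(s)
rho_old = np.array([0.6, 0.4])                          # state weights under pi_old
greedy = np.array([[1.0, 0.0], [0.0, 1.0]])             # candidate new policy

print(surrogate_objective(1.0, rho_old, pi_old, adv_old))  # equals eta(pi_old)
print(surrogate_objective(1.0, rho_old, greedy, adv_old))  # larger than eta(pi_old)
```

Evaluating the surrogate at the old policy returns the old performance, because the advantage averages to zero under the policy that defines it; any gain comes from shifting probability toward actions with positive advantage.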

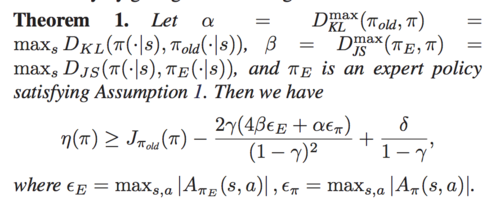

The policy gradient methods improve <math>\eta(\pi)</math> monotonically by optimizing the above <math>J_{\pi_{old}}(\pi)</math> with a sufficiently small update step from <math>\pi_{old}</math> to <math>\pi</math> such that <math>D_{KL}^{max}(\pi, \pi_{old})</math> is bounded. | The policy gradient methods improve <math>\eta(\pi)</math> monotonically by optimizing the above <math>J_{\pi_{old}}(\pi)</math> with a sufficiently small update step from <math>\pi_{old}</math> to <math>\pi</math> such that <math>D_{KL}^{max}(\pi, \pi_{old})</math> is bounded [16] [17] [18]. POfD imposes an additional regularization <math>D_{JS}(\pi_{\theta}, \pi_{E})</math> between <math>\pi_\theta</math> and <math>\pi_{E}</math> in order to encourage explorations around regions demonstrated by the expert policy. Theorem 1 shows such benefits, | ||

[[File:them1.png|500px|center]] | [[File:them1.png|500px|center]] | ||

In fact, POfD brings another factor, <math>D_{J S}^{max}(\pi_{i}, \pi_{E})</math>, that fully uses the advantage <math>{\hat \delta}</math> and adds improvements with a margin over pure policy gradient methods. | |||

==Optimization== | ==Optimization== | ||

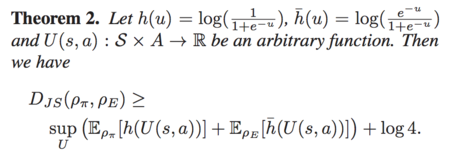

For POfD, the authors choose to optimize the lower bound of | For POfD, the authors choose to optimize the lower bound of the Jensen-Shannon divergence instead of directly optimizing the difficult Jensen-Shannon divergence. This optimization method is compatible with any policy gradient methods. Theorem 2 gives the lower bound of <math>D_{JS}(\rho_{\theta}, \rho_{E})</math>: | ||

[[File:them2.png| | [[File:them2.png|450px|center]] | ||

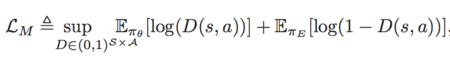

Thus, the | Thus, the occupancy measure matching objective can be written as: | ||

[[File:eqnlm.png| | [[File:eqnlm.png|450px|center]] | ||

where <math> D(s,a)=\frac{1}{1+e^{-U(s,a)}}: S\times A \rightarrow (0,1)</math>, and its supremum ranging is like a discriminator for distinguishing whether the state-action pair is a current policy or an expert policy. | where <math> D(s,a)=\frac{1}{1+e^{-U(s,a)}}: \mathcal{S}\times \mathcal{A} \rightarrow (0,1)</math> is an arbitrary mapping function <math>U</math> followed by a sigmoid activation used for scaling. The <math>D</math> that attains the supremum acts like a discriminator that distinguishes whether a state-action pair comes from the current policy or from the expert policy. | ||

To avoid overfitting, the authors add causal entropy <math>−H (\pi_{\theta}) </math>as the regularization term. Thus, the learning objective is: | To avoid overfitting, the authors add causal entropy <math>−H (\pi_{\theta}) </math> as the regularization term. Thus, the learning objective is: | ||

\[\min_{\theta}\mathcal{L}=-\eta(\pi_{\theta})-\lambda_{2}H(\pi_{\theta})+\lambda_{1} \sup_{D\in(0,1)^{\mathcal{S}\times \mathcal{A}}} \left(\mathbb{E}_{\pi_{\theta}}[\log(D(s,a))]+\mathbb{E}_{\pi_{E}}[\log(1-D(s,a))]\right)\] | \[\min_{\theta}\mathcal{L}=-\eta(\pi_{\theta})-\lambda_{2}H(\pi_{\theta})+\lambda_{1} \sup_{D\in(0,1)^{\mathcal{S}\times \mathcal{A}}} \left(\mathbb{E}_{\pi_{\theta}}[\log(D(s,a))]+\mathbb{E}_{\pi_{E}}[\log(1-D(s,a))]\right)\] | ||

At this point, the problem | At this point, the problem closely resembles the minimax problem of Generative Adversarial Networks (GANs) [19]. The difference is that the discriminative model D of GANs is well-trained but the expert policy of POfD is not optimal. Suppose D is parameterized by w. With the objective written as below, maximizing over w pushes <math>D_w</math> toward 1 on state-action pairs from the current policy and toward 0 on pairs from the expert policy. Thus, the minimax learning objective is: | ||

\[\min_{\theta}\max_{w}\mathcal{L}=-\eta(\pi_{\theta})-\lambda_{2}H (\pi_{\theta})+\lambda_{1}( \mathbb{E}_{\pi_{\theta}}[\log(D_{w}(s,a))]+\mathbb{E}_{\pi_{E}}[\log(1-D_{w}(s,a))])\] | \[\min_{\theta}\max_{w}\mathcal{L}=-\eta(\pi_{\theta})-\lambda_{2}H (\pi_{\theta})+\lambda_{1}( \mathbb{E}_{\pi_{\theta}}[\log(D_{w}(s,a))]+\mathbb{E}_{\pi_{E}}[\log(1-D_{w}(s,a))])\] | ||
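The adversarial part of the minimax objective above can be estimated from samples. The following is a minimal sketch, assuming the discriminator exposes raw scores U(s,a) for a batch of policy samples and a batch of expert samples; the names `sigmoid` and `adversarial_term` and the toy scores are illustrative, not the authors' implementation.

```python
import numpy as np


def sigmoid(u: np.ndarray) -> np.ndarray:
    """D = 1 / (1 + exp(-U)), mapping raw scores into (0, 1)."""
    return 1.0 / (1.0 + np.exp(-u))


def adversarial_term(u_policy, u_expert) -> float:
    """Sample estimate of E_pi[log D(s,a)] + E_expert[log(1 - D(s,a))]."""
    d_policy = sigmoid(np.asarray(u_policy, dtype=float))
    d_expert = sigmoid(np.asarray(u_expert, dtype=float))
    return float(np.mean(np.log(d_policy)) + np.mean(np.log(1.0 - d_expert)))


# An uninformative discriminator (all scores 0, so D = 0.5 everywhere).
print(adversarial_term([0.0, 0.0], [0.0, 0.0]))
# A discriminator that separates the two batches scores higher.
print(adversarial_term([5.0, 5.0], [-5.0, -5.0]))
```

The discriminator parameters w are updated to increase this term, while the policy parameters are updated to decrease it, which pulls the policy's state-action distribution toward the expert's.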

The minimax learning objective can be rewritten by substituting the expression of <math> \eta(\pi) </math>: | The minimax learning objective can be rewritten by substituting the expression of <math> \eta(\pi) </math>: | ||

| Line 78: | Line 108: | ||

\[\nabla_{\theta}\mathbb{E}_{\pi_{\theta}}[r'(s,a)]=\mathbb{E}_{\pi_{\theta}}[\nabla_{\theta}\log\pi_{\theta}(a|s)Q'(s,a)]\] | \[\nabla_{\theta}\mathbb{E}_{\pi_{\theta}}[r'(s,a)]=\mathbb{E}_{\pi_{\theta}}[\nabla_{\theta}\log\pi_{\theta}(a|s)Q'(s,a)]\] | ||

where <math>Q'(\bar{s},\bar{a})=\mathbb{E}_{\pi_{\theta}}[r'(s,a)|s_0=\bar{s},a_0=\bar{a}]</math>. | where <math>Q'(\bar{s},\bar{a})=\mathbb{E}_{\pi_{\theta}}[r'(s,a)|s_0=\bar{s},a_0=\bar{a}]</math>. | ||
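The reshaped reward r'(s,a) is not written out in the text shown here. The sketch below assumes the form r'(s,a) = r(s,a) - λ1 log D_w(s,a), which is what one obtains by folding the term λ1 E[log D_w(s,a)] of the minimax objective into the expected return; the function name `reshaped_reward`, the clipping constant, and the toy values are hypothetical.

```python
import numpy as np


def reshaped_reward(env_reward, d_values, lambda_1: float) -> np.ndarray:
    """Assumed form r'(s,a) = r(s,a) - lambda_1 * log D_w(s,a)."""
    env_reward = np.asarray(env_reward, dtype=float)
    # Clip to keep the logarithm finite when the discriminator saturates.
    d_values = np.clip(np.asarray(d_values, dtype=float), 1e-8, 1.0 - 1e-8)
    return env_reward - lambda_1 * np.log(d_values)


# Two steps with zero environment reward (sparse setting, toy values).
# A low D means the pair looks expert-like under the objective above.
rewards = reshaped_reward([0.0, 0.0], [0.5, 0.9], lambda_1=1.0)
print(rewards)
```

Under this assumed form, state-action pairs that the discriminator attributes to the expert receive a larger bonus, so the agent is rewarded for expert-like behavior even when the environment reward is zero.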

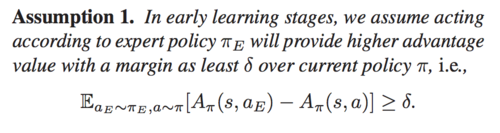

At the end, Algorithm 1 gives the detailed process. | At the end, Algorithm 1 gives the detailed process. | ||

[[File:pofd.png| | [[File:pofd.png|450px|center]] | ||

=Discussion on Existing LfD Methods= | =Discussion on Existing LfD Methods= | ||

To connect with the proposed POfD method, interpretation of the existing methods DQfD and DDPGfD through occupancy measure matching is provided. Both of the existing methods leverage demonstrations to aid exploration in RL. | |||

==DQfD== | ==DQfD== | ||

DQFD puts the demonstrations into a replay memory D and keeps them throughout the Q-learning process. The objective for DQFD is: | DQfD [2] puts the demonstrations into a replay memory D and keeps them throughout the Q-learning process. The objective for DQfD is: | ||

\[J_{DQfD}={\hat{\mathbb{E}}}_{D}[(R_t(n)-Q_w(s_t,a_t))^2]+\alpha{\hat{\mathbb{E}}}_{D^E}[(R_t(n)-Q_w(s_t,a_t))^2]\] | \[J_{DQfD}={\hat{\mathbb{E}}}_{D}[(R_t(n)-Q_w(s_t,a_t))^2]+\alpha{\hat{\mathbb{E}}}_{D^E}[(R_t(n)-Q_w(s_t,a_t))^2]\] | ||

The second term can be rewritten as <math> {\hat{\mathbb{E}}}_{D^E}[(R_t(n)-Q_w(s_t,a_t))^2]={\hat{\mathbb{E}}}_{D^E}[(\hat{\rho}_E(s,a)-\rho_{\pi}(s,a))^{2}r^2(s,a)]</math>, which can be regarded as a regularization forcing current policy's occupancy measure to match the expert's empirical occupancy measure, weighted by the potential reward. | The second term can be rewritten as <math> {\hat{\mathbb{E}}}_{D^E}[(R_t(n)-Q_w(s_t,a_t))^2]={\hat{\mathbb{E}}}_{D^E}[(\hat{\rho}_E(s,a)-\rho_{\pi}(s,a))^{2}r^2(s,a)]</math>, which can be regarded as a regularization forcing current policy's occupancy measure to match the expert's empirical occupancy measure, weighted by the potential reward. Thus minimizing the objective | ||

with expert demonstration and self-generated off-policy data is actually equivalent to imposing an occupancy measure matching regularization on the original DQN objective. | |||
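The DQfD objective above is a sum of two squared-error terms, one over self-generated data and one over demonstrations weighted by α. A minimal sketch, assuming the n-step returns and Q-values have already been computed for each batch (the function name `dqfd_objective` and the toy numbers are illustrative):

```python
import numpy as np


def dqfd_objective(returns_self, q_self, returns_demo, q_demo, alpha: float) -> float:
    """Mean squared error on self-generated data plus alpha times the same on demonstrations."""
    returns_self = np.asarray(returns_self, dtype=float)
    q_self = np.asarray(q_self, dtype=float)
    returns_demo = np.asarray(returns_demo, dtype=float)
    q_demo = np.asarray(q_demo, dtype=float)
    self_term = np.mean((returns_self - q_self) ** 2)
    demo_term = np.mean((returns_demo - q_demo) ** 2)
    return float(self_term + alpha * demo_term)


# Toy batches: targets R_t(n) and current estimates Q_w(s_t, a_t).
print(dqfd_objective([1.0, 1.0], [0.0, 0.0], [2.0], [0.0], alpha=0.5))  # 3.0
```

Note that the demonstration term requires a reward-based target for every demonstrated transition, which is one of the practical limitations of replay-memory-based LfD methods.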

==DDPGfD== | ==DDPGfD== | ||

DDPGfD also puts the demonstrations into a replay memory D, but it is based on an actor-critic framework. The objective for DDPGfD is the same as DQFD. Its policy gradient is: | DDPGfD [3] also puts the demonstrations into a replay memory D, but it is based on an actor-critic framework [21]. The objective for DDPGfD is the same as DQFD. Its policy gradient is: | ||

\[\nabla_{\theta}J_{DDPGfD}\approx \mathbb{E}_{s,a}[\nabla_{a}Q_w(s,a)\nabla_{\theta}\pi_{\theta}(s)], a=\pi_{\theta}(s) \] | \[\nabla_{\theta}J_{DDPGfD}\approx \mathbb{E}_{s,a}[\nabla_{a}Q_w(s,a)\nabla_{\theta}\pi_{\theta}(s)], a=\pi_{\theta}(s) \] | ||

From this equation, policy is updated relying on learned Q-network <math>Q_w </math>rather than the demonstrations <math>D^{E} </math>. DDPGfD shares the same objective function for <math>Q_w </math> as DQfD, thus they have the same way of leveraging demonstrations, that is the demonstrations in DQfD and DDPGfD induce an occupancy measure matching regularization. | From this equation, policy is updated relying on learned Q-network <math>Q_w </math>rather than the demonstrations <math>D^{E} </math>. DDPGfD shares the same objective function for <math>Q_w </math> as DQfD, thus they have the same way of leveraging demonstrations, that is the demonstrations in DQfD and DDPGfD induce an occupancy measure matching regularization. | ||

Although the above replay-memory-based LfD methods can benefit RL algorithms to some extent in sparse-reward environments, they have some limitations in fully exploiting the demonstration data. First, such a paradigm utilizes expert trajectories only by treating them as a learning reference, whose effect may be significantly underexploited when demonstrations are few, as indicated by the authors' experiments. Second, to be compatible with the data collected during training, the demonstrated trajectories are required to be associated with rewards for each state transition. However, the rewards in demonstrations may differ from the ones used for learning the policy in the current environment [25], or they may be unavailable. | |||

=Experiments= | =Experiments= | ||

| Line 99: | Line 135: | ||

==Settings== | ==Settings== | ||

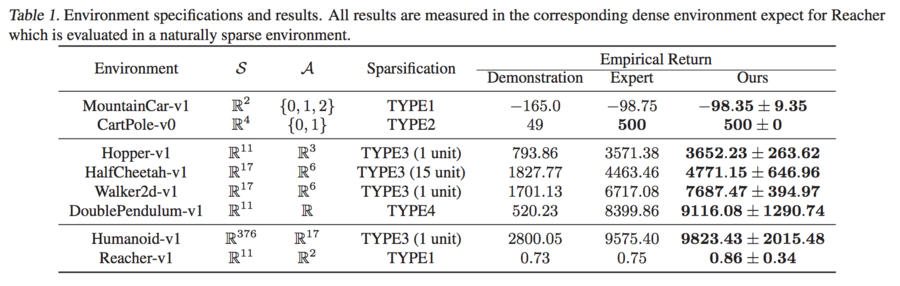

The authors conduct the experiments on 8 physical control tasks, ranging from low-dimensional spaces to high-dimensional spaces and naturally sparse environments based on OpenAI Gym and Mujoco. Due to the uniqueness of the environments, the authors introduce 4 ways to | The authors conduct the experiments on 8 physical control tasks, ranging from low-dimensional spaces to high-dimensional spaces and naturally sparse environments based on OpenAI Gym [20] and Mujoco (Multi-Joint dynamics with Contact) [5] (Gym is a toolkit for developing and comparing reinforcement learning algorithms. It supports teaching agents everything from walking to playing games like Pong or Pinball. MuJoCo is a physics engine aiming to facilitate research and development in robotics, biomechanics, graphics and animation, and other areas where fast and accurate simulation is needed. In order to get familiar with OpenAI Gym and Mujoco environment, you can watch these videos, respectively: [http://www.mujoco.org/image/home/mujocodemo.mp4 Mujoco], [https://gym.openai.com/v2018-02-21/videos/SpaceInvaders-v0-4184afb3-1223-4ac6-b52b-8e863cbe24a5/original.mp4 OpenAI Gym]). Due to the uniqueness of the environments, the authors introduce 4 ways to sparsify their built-in dense rewards. TYPE1: a reward of +1 is given when the agent reaches the terminal state, and otherwise 0. TYPE2: a reward of +1 is given when the agent survives for a while. TYPE3: a reward of +1 is given for every time the agent moves forward over a specific number of units in Mujoco environments. TYPE4: specially designed for InvertedDoublePendulum, a reward +1 is given when the second pole stays above a specific height of 0.89. The details are shown in Table 1. Moreover, only one single imperfect trajectory is used as the demonstrations in this paper. The authors collect the demonstrations by training an agent insufficiently by running TRPO (Trust Region Policy Optimization) in the corresponding dense environment. | ||
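The sparsification schemes described above can be sketched as small reward functions. This is an illustration only: the function names, the argument names, and the interpretation of TYPE3 as "one reward per unit boundary crossed while moving forward" are assumptions, and none of this uses the actual OpenAI Gym or MuJoCo interfaces.

```python
import math


def type1_reward(reached_terminal: bool) -> float:
    """TYPE1: +1 when the agent reaches the terminal state, otherwise 0."""
    return 1.0 if reached_terminal else 0.0


def type3_reward(prev_x: float, x: float, unit: float) -> float:
    """TYPE3 (assumed reading): +1 for each multiple of `unit` crossed moving forward."""
    crossed = math.floor(x / unit) - math.floor(prev_x / unit)
    return float(max(0, crossed))


def type4_reward(second_pole_height: float, threshold: float = 0.89) -> float:
    """TYPE4: +1 while the second pole is above the height threshold."""
    return 1.0 if second_pole_height > threshold else 0.0


print(type1_reward(False))           # 0.0
print(type3_reward(0.9, 2.1, 1.0))   # 2.0
print(type4_reward(0.95))            # 1.0
```

In each case the dense built-in reward is discarded and replaced by an occasional +1, so most transitions carry no learning signal.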

[[File:pofdt1.png|900px|center]] | [[File:pofdt1.png|900px|center]] | ||

==Baselines== | ==Baselines== | ||

The authors compare POfD against 5 strong baselines: | The authors compare POfD against 5 strong baselines: | ||

* training the policy with TRPO in dense environments, which is called expert | * training the policy with TRPO [17] in dense environments, which is called expert | ||

* training the policy with TRPO in sparse environments | * training the policy with TRPO [17] in sparse environments | ||

* applying GAIL to learn the policy from demonstrations | * applying GAIL [14] to learn the policy from demonstrations | ||

* DQfD | * DQfD [2] | ||

* DDPGfD | * DDPGfD [3] | ||

1. Trust Region Policy Optimization (TRPO) is an iterative procedure for optimizing policies, with guaranteed monotonic improvement. By making several approximations to the theoretically-justified procedure, a practical algorithm such as this can be developed. This algorithm is similar to natural policy gradient methods and is effective for optimizing neural networks. | |||

2. Generative Adversarial Imitation Learning (GAIL) is a method that directly extracts a policy from data, as if the policy were obtained by reinforcement learning following inverse reinforcement learning. | |||

3. Deep Q-learning from Demonstrations (DQfD) is a method that leverages relatively small sets of demonstration data to speed up the learning process, and it is able to automatically assess the necessary ratio of demonstration data while learning thanks to a prioritized replay mechanism. | |||

4. DDPGfD (Deep Deterministic Policy Gradients From Demonstrations) uses prioritized replay to enable efficient propagation of the reward information, which is essential in problems with sparse rewards. | |||

==Results== | ==Results== | ||

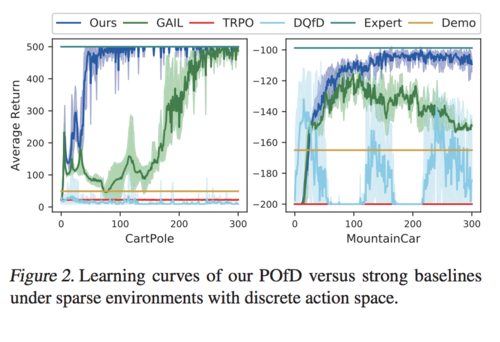

Firstly, the authors test the performance of POfD in sparse control environments with discrete actions. From Table 1, POfD achieves performance comparable with the policy learned under dense environments. From Figure 2, only POfD successes to explore sufficiently and achieves great performance in both sparse environments. TRPO and DQFD fail to explore and GAIL | First, the authors test the performance of POfD in sparse control environments with discrete actions. From Table 1, POfD achieves performance comparable with the policy learned under dense environments. From Figure 2, only POfD succeeds in exploring sufficiently and achieves great performance in both sparse environments. TRPO [17] and DQfD [2] fail to explore, and GAIL [14] converges to the imperfect demonstration in MountainCar [22]. | ||

[[File:pofdf2.png|500px|center]] | [[File:pofdf2.png|500px|center]] | ||

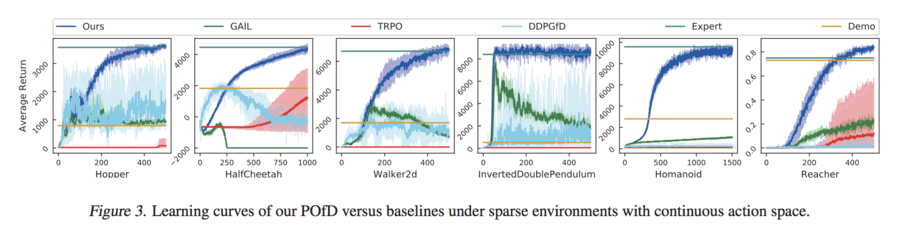

Then, the authors test the performance of POfD under spares environments with continuous actions space. From Figure 3, POfD achieves expert-level performance in terms of | |||

Then, the authors test the performance of POfD in sparse environments with continuous action spaces. From Figure 3, POfD achieves expert-level performance in terms of accumulated rewards and surpasses the other strong baselines, including TRPO. By watching the learning process of the different methods, we can see that TRPO consistently fails to explore the environments when the feedback is sparse, except for HalfCheetah. This may be because there is no terminal state in HalfCheetah, so a random agent can perform reasonably well as long as the time horizon is sufficiently long. This is shown in Figure 3, where the improvement of TRPO begins to show after 400 iterations. DDPGfD and GAIL have a common drawback: during training, they both converge to the imperfect demonstration data. For HalfCheetah, GAIL fails to converge and DDPGfD converges to an even worse point. This is expected, because the policy and value networks tend to over-fit when data is scarce, so the training of GAIL and DDPGfD is severely biased by the imperfect data. Finally, the proposed method can effectively explore the environment with the help of demonstration-based intrinsic reward reshaping, and it succeeds consistently across different tasks in terms of both learning stability and convergence speed. | |||

[[File:pofdf3.png|900px|center]] | [[File:pofdf3.png|900px|center]] | ||

The authors also implement a locomotion task, <math>Humanoid</math>, which teaches a human-like robot to walk. The dimension of the state space is 376, which is very hard to render. Nevertheless, POfD outperformed all three baseline methods, which failed to learn policies in such a sparse-reward environment. | |||

The Reacher environment is a task in which the goal is to control a robot arm to touch an object. The location of the object is random for each instantiation. The environment reward is sparse: every time the arm reaches the ball and holds for a while (e.g., 5 time steps), it receives a reward of +1; otherwise, it gets zero reward. The authors select 15 random trajectories as demonstration data, and the performance of POfD is much better than that of the expert, while all other baseline methods fail. | |||

=Conclusion= | =Conclusion= | ||

In this paper, POfD is proposed that acquires knowledge from a limited amount of imperfect demonstration data to aid exploration in environments with sparse feedback. It is compatible with any policy gradient method. POfD induces implicit dynamic reward shaping and brings provable benefits for policy improvement. Moreover, the results of the experiments have shown the validity and effectiveness of POfD in encouraging the agent to explore around the nearby region of the expert policy and learn better policies. The key contribution is that POfD helps the agent work with few and imperfect demonstrations in an environment with sparse rewards. | |||

=Critique= | =Critique= | ||

# A novel demonstration-based policy optimization method is proposed. In the process of policy optimization, POfD reshapes the reward function. This new reward function can guide the agent to imitate the expert behavior when the reward is sparse and explore on its own when the reward value can be obtained, which can take full advantage of the demonstration data and there is no need to ensure that the expert policy is the optimal policy. | # A novel demonstration-based policy optimization method is proposed. In the process of policy optimization, POfD reshapes the reward function. This new reward function can guide the agent to imitate the expert behavior when the reward is sparse and explore on its own when the reward value can be obtained, which can take full advantage of the demonstration data and there is no need to ensure that the expert policy is the optimal policy. | ||

# POfD can be combined with any policy gradient methods. Its performance surpasses five strong baselines and can be comparable to the agents | # POfD can be combined with any policy gradient methods. Its performance surpasses five strong baselines and can be comparable to the agents trained in the dense-reward environment. | ||

# The paper is structured and the flow of ideas is easy to follow. For related work, the authors clearly explain similarities and differences among these related works. | # The paper is structured and the flow of ideas is easy to follow. For related work, the authors clearly explain similarities and differences among these related works. | ||

# This paper's scalability is demonstrated. The experiments environments are ranging from low-dimensional spaces to high-dimensional spaces and from discrete action spaces to continuous actions spaces. For future work, can it be realized in the real world? | # This paper's scalability is demonstrated. The experiments environments are ranging from low-dimensional spaces to high-dimensional spaces and from discrete action spaces to continuous actions spaces. For future work, can it be realized in the real world? | ||

# There is a doubt that whether it is a correct method to use the trajectory that was insufficiently learned in dense-reward environment as the imperfect demonstration. | # There is a doubt that whether it is a correct method to use the trajectory that was insufficiently learned in a dense-reward environment as the imperfect demonstration. | ||

# In this paper, the performance only is judged by the cumulative reward, can other evaluation terms be considered? For example, the convergence rate. | # In this paper, the performance only is judged by the cumulative reward, can other evaluation terms be considered? For example, the convergence rate. | ||

# The performance of this algorithm hinges on the assumption that expert demonstrations are near optimal in the action space. As seen in figure 3, there appears to be an upper bound to performance near (or just above) the expert accuracy -- this may be an indication of a performance ceiling. In games where near-optimal policies can differ greatly (e.g.; offensive or defensive strategies in chess), the success of the model will depend on the selection of expert demonstrations that are closest to a truly optimal policy (i.e.; just because a policy is the current expert, it does not mean it resembles the true optimal policy). | |||

=References=

[1] Nair, A., McGrew, B., Andrychowicz, M., Zaremba, W., and Abbeel, P. Overcoming exploration in reinforcement learning with demonstrations. arXiv preprint arXiv:1709.10089, 2017.

[2] Hester, T., Vecerik, M., Pietquin, O., Lanctot, M., Schaul, T., Piot, B., Sendonaris, A., Dulac-Arnold, G., Osband, I., Agapiou, J., et al. Learning from demonstrations for real world reinforcement learning. arXiv preprint arXiv:1704.03732, 2017.

[3] Večerík, M., Hester, T., Scholz, J., Wang, F., Pietquin, O., Piot, B., Heess, N., Rothörl, T., Lampe, T., and Riedmiller, M. Leveraging demonstrations for deep reinforcement learning on robotics problems with sparse rewards. arXiv preprint arXiv:1707.08817, 2017.

[4] Silver, D., Huang, A., Maddison, C. J., Guez, A., Sifre, L., Van Den Driessche, G., Schrittwieser, J., Antonoglou, I., Panneershelvam, V., Lanctot, M., et al. Mastering the game of Go with deep neural networks and tree search. Nature, 529(7587):484–489, 2016.

[5] Todorov, E., Erez, T., and Tassa, Y. MuJoCo: A physics engine for model-based control. In Intelligent Robots and Systems (IROS), 2012 IEEE/RSJ International Conference on, pp. 5026–5033. IEEE, 2012.

[6] Schaal, S. Learning from demonstration. In Advances in Neural Information Processing Systems, pp. 1040–1046, 1997.

[7] Kim, B., Farahmand, A.-m., Pineau, J., and Precup, D. Learning from limited demonstrations. In Advances in Neural Information Processing Systems, pp. 2859–2867, 2013.

[8] Piot, B., Geist, M., and Pietquin, O. Boosted Bellman residual minimization handling expert demonstrations. In Joint European Conference on Machine Learning and Knowledge Discovery in Databases, pp. 549–564. Springer, 2014.

[9] Aravind S. Lakshminarayanan, Sherjil Ozair, Y. B. Reinforcement learning with few expert demonstrations. In NIPS workshop, 2016.

[10] Brys, T., Harutyunyan, A., Suay, H. B., Chernova, S., Taylor, M. E., and Nowé, A. Reinforcement learning from demonstration through shaping. In IJCAI, pp. 3352–3358, 2015.

[11] Ng, A. Y., Russell, S. J., et al. Algorithms for inverse reinforcement learning. In ICML, pp. 663–670, 2000.

[12] Syed, U. and Schapire, R. E. A game-theoretic approach to apprenticeship learning. In Advances in Neural Information Processing Systems, pp. 1449–1456, 2008.

[13] Syed, U., Bowling, M., and Schapire, R. E. Apprenticeship learning using linear programming. In Proceedings of the 25th International Conference on Machine Learning, pp. 1032–1039. ACM, 2008.

[14] Ho, J. and Ermon, S. Generative adversarial imitation learning. In Advances in Neural Information Processing Systems, pp. 4565–4573, 2016.

[15] Sutton, R. S. and Barto, A. G. Reinforcement learning: An introduction, volume 1. MIT Press Cambridge, 1998.

[16] Kakade, S. M. A natural policy gradient. In Advances in Neural Information Processing Systems, pp. 1531–1538, 2002.

[17] Schulman, J., Levine, S., Abbeel, P., Jordan, M., and Moritz, P. Trust region policy optimization. In Proceedings of the 32nd International Conference on Machine Learning (ICML-15), pp. 1889–1897, 2015.

[18] Schulman, J., Wolski, F., Dhariwal, P., Radford, A., and Klimov, O. Proximal policy optimization algorithms. arXiv preprint arXiv:1707.06347, 2017.

[19] Goodfellow, I., Pouget-Abadie, J., Mirza, M., Xu, B., Warde-Farley, D., Ozair, S., Courville, A., and Bengio, Y. Generative adversarial nets. In Advances in Neural Information Processing Systems, pp. 2672–2680, 2014.

[20] Brockman, G., Cheung, V., Pettersson, L., Schneider, J., Schulman, J., Tang, J., and Zaremba, W. OpenAI Gym, 2016.

[21] Lillicrap, T. P., Hunt, J. J., Pritzel, A., Heess, N., Erez, T., Tassa, Y., Silver, D., and Wierstra, D. Continuous control with deep reinforcement learning. arXiv preprint arXiv:1509.02971, 2015.

[22] Moore, A. W. Efficient memory-based learning for robot control. 1990.

[23] Zhu, Y., Wang, Z., Merel, J., Rusu, A., Erez, T., Cabi, S., Tunyasuvunakool, S., Kramar, J., Hadsell, R., de Freitas, N., et al. Reinforcement and imitation learning for diverse visuomotor skills. arXiv preprint arXiv:1802.09564, 2018.

[24] Li, Y., Song, J., and Ermon, S. InfoGAIL: Interpretable imitation learning from visual demonstrations. In Advances in Neural Information Processing Systems, pp. 3815–3825, 2017.

[25] Ziebart, B. D., Maas, A. L., Bagnell, J. A., and Dey, A. K. Maximum entropy inverse reinforcement learning. In AAAI, volume 8, pp. 1433–1438. Chicago, IL, USA, 2008.

[26] Pathak, D., Mahmoudieh, P., Luo, G., Agrawal, P., Chen, D., Shentu, Y., Shelhamer, E., Malik, J., Efros, A. A., and Darrell, T. Zero-Shot Visual Imitation. In International Conference on Learning Representations (ICLR), 2018.

Latest revision as of 00:32, 17 December 2018

This is a summary of the paper titled: "Policy Optimization with Demonstrations", authored by Bingyi Kang, Zequn Jie, and Jiashi Feng. Available online at URL http://proceedings.mlr.press/v80/kang18a.html

Introduction

Reinforcement learning (RL) methods have led to state-of-the-art results in a variety of applications. However, problems that require exploring novel behavior to improve long-term performance still pose challenges, especially in environments where reward signals are sparse. There are currently two ways to address such exploration problems in RL:

1) Guide the agent to explore states that have never been seen.

2) Guide the agent to imitate a demonstration trajectory sampled from an expert policy to learn.

When guiding the agent to imitate expert behavior, there are again two common approaches: putting the demonstrations directly into the replay memory [1] [2] [3], or using the demonstration trajectories to pre-train the policy in a supervised manner [4]. However, neither approach takes full advantage of the demonstration data. Both treat the demonstration data identically to self-generated data, and therefore require a very large number of difficult-to-collect examples to learn effectively. To address this problem, the authors propose a novel policy optimization method from demonstrations (POfD), which takes full advantage of the demonstrations and does not require the expert policy to be optimal. To summarize, the authors realize this idea through the following techniques:

1) A demonstration-guided exploration term measuring the divergence between the current policy and the expert policy is added to the policy optimization objective, which encourages expert-like exploration.

2) To learn better from demonstrations and to obtain an optimization-friendly lower bound, the proposed objective is defined on occupancy measures, as in [14].

3) Finally, they show that the resulting objective can be optimized by optimizing the derived lower bound through generative adversarial training.

The authors also evaluate the performance of POfD on MuJoCo [5] in sparse-reward environments. The experimental results show that POfD greatly outperforms several strong baselines and is even competitive with the policy gradient method trained in dense-reward environments.

Intuition

The agent should imitate the demonstrated behavior when rewards are sparse and then explore new states on its own after acquiring sufficient skills. This amounts to a dynamic intrinsic reward mechanism that reshapes the native rewards in RL. A common exploration strategy in reinforcement learning is epsilon-greedy, which simply takes a random action a small fraction of the time in order to try unexplored moves. This is naive and is one of the main reasons for the high sample complexity of RL. If, on the other hand, an expert demonstrator is available to guide exploration, the agent can make more guided and accurate exploratory moves.
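For reference, the epsilon-greedy rule mentioned above can be sketched in a few lines. This is only an illustration of the baseline exploration strategy, not code from the paper; the function name `epsilon_greedy` and its arguments are hypothetical.

```python
import random


def epsilon_greedy(q_values, epsilon, rng=random):
    """Return a random action with probability epsilon, else the greedy action.

    q_values: list of estimated action values for the current state.
    rng: any object with random() and randrange(), e.g. random.Random(seed).
    """
    if rng.random() < epsilon:
        # Undirected exploration: every action is equally likely.
        return rng.randrange(len(q_values))
    # Exploitation: pick the action with the highest estimated value.
    return max(range(len(q_values)), key=lambda a: q_values[a])


greedy_action = epsilon_greedy([0.1, 0.9, 0.3], epsilon=0.0)
random_action = epsilon_greedy([0.1, 0.9, 0.3], epsilon=1.0, rng=random.Random(0))
```

The random branch ignores everything the agent knows about the task, which is why such exploration rarely reaches a reward when feedback is sparse.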

Related Work

Related work on overcoming exploration difficulties falls into two groups: learning from demonstration [6] and imitation learning in RL.

For learning from demonstration (LfD),

- Most LfD methods adopt value-based RL algorithms, such as DQfD (Deep Q-learning from Demonstrations) [2], which applies to discrete action spaces, and DDPGfD (Deep Deterministic Policy Gradient from Demonstrations) [3], which extends the idea to continuous action spaces. However, both under-utilize the demonstration data.

- Some methods are based on policy iteration [7] [8] and shape the value function using demonstration data. However, they perform poorly when the demonstration data is imperfect.

- A hybrid framework [9] learns a policy that maximizes the probability of taking the demonstrated actions, and works with less demonstration data.

- A reward reshaping mechanism [10] encourages taking actions close to the demonstrated ones. It is similar to the method in this paper, but differs in that the shaping is defined as a potential function based on a multivariate Gaussian that models the distribution of state-action pairs.

All of the above methods require a lot of perfect demonstrations to get satisfactory performance, which is different from POfD in this paper.

For imitation learning,

- Inverse Reinforcement Learning [11] problems are solved by alternating between fitting the reward function and selecting the policy [12] [13]. However, this approach does not scale to large problems.

- Generative Adversarial Imitation Learning (GAIL) [14] uses a discriminator to distinguish whether a state-action pair comes from the expert or from the learned policy, and it can be applied to high-dimensional continuous control problems.

- An alternative form of imitation learning [26] lets an agent explore the environment without any expert supervision and distill this exploration data into goal-directed skills. These skills can then be used to imitate the visual demonstration provided by the expert.

The above methods are effective for imitation learning, but they cannot leverage the valuable feedback given by the environment and usually perform poorly when the expert data is imperfect. POfD differs in both respects.

There is also another line of work in which an agent learns from a hybrid of imitation learning and reinforcement learning rewards [23, 24]. However, unlike this paper, those works give only intuitive explanations and no theoretical support for their method.

Background

Preliminaries

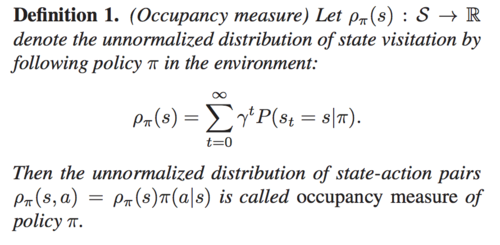

A Markov Decision Process (MDP) [15] is defined by a tuple [math]\displaystyle{ \langle\mathcal{S}, \mathcal{A}, \mathcal{P}, r, \gamma\rangle }[/math], where [math]\displaystyle{ \mathcal{S} }[/math] is the state space, [math]\displaystyle{ \mathcal{A} }[/math] is the action space, [math]\displaystyle{ \mathcal{P}(s'|s,a) }[/math] is the transition distribution of taking action [math]\displaystyle{ a }[/math] at state [math]\displaystyle{ s }[/math], [math]\displaystyle{ r(s,a) }[/math] is the reward function, and [math]\displaystyle{ \gamma }[/math] is the discount factor between 0 and 1. A policy [math]\displaystyle{ \pi(a|s) }[/math] is a mapping from states to action probabilities. The performance of [math]\displaystyle{ \pi }[/math] is usually evaluated by its expected discounted reward [math]\displaystyle{ \eta(\pi) }[/math]: \[\eta(\pi)=\mathbb{E}_{\pi}[r(s,a)]=\mathbb{E}_{(s_0,a_0,s_1,...)}[\sum_{t=0}^\infty\gamma^{t}r(s_t,a_t)] \] The value function is [math]\displaystyle{ V_{\pi}(s) =\mathbb{E}_{\pi}[r(\cdot,\cdot)|s_0=s] }[/math], the action value function is [math]\displaystyle{ Q_{\pi}(s,a) =\mathbb{E}_{\pi}[r(\cdot,\cdot)|s_0=s,a_0=a] }[/math], and the advantage function, which reflects the expected additional reward after taking action [math]\displaystyle{ a }[/math] at state [math]\displaystyle{ s }[/math], is [math]\displaystyle{ A_{\pi}(s,a)=Q_{\pi}(s,a)-V_{\pi}(s) }[/math]. The authors then define the occupancy measure, which describes how often a state [math]\displaystyle{ s }[/math] and a state-action pair [math]\displaystyle{ (s,a) }[/math] are visited when executing a given policy.

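Then, writing [math]\displaystyle{ \rho_{\pi}(s,a) }[/math] for the occupancy measure of [math]\displaystyle{ \pi }[/math], the performance of [math]\displaystyle{ \pi }[/math] can be rewritten as: \[\eta(\pi)=\sum_{s,a}\rho_{\pi}(s,a)r(s,a)\]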
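As an illustration only (not taken from the paper), the sketch below computes a discounted occupancy measure for a made-up two-state MDP and checks numerically that the reward-weighted sum over it matches the expected discounted reward. It assumes the common convention rho(s, a) = pi(a|s) * sum_t gamma^t Pr(s_t = s); the MDP numbers and all function names are hypothetical.

```python
# Toy MDP; every number here is invented for illustration.
GAMMA = 0.9
P = [  # P[s][a][s2] = probability of moving from s to s2 under action a
    [[0.8, 0.2], [0.1, 0.9]],
    [[0.5, 0.5], [0.0, 1.0]],
]
R = [[0.0, 0.0], [0.0, 1.0]]   # r(s, a)
PI = [[0.5, 0.5], [0.2, 0.8]]  # pi(a | s)
D0 = [1.0, 0.0]                # initial state distribution


def state_distributions(P, pi, d0, horizon):
    """Yield the state distribution at t = 0, 1, ..., horizon - 1."""
    n_s, n_a = len(P), len(P[0])
    d = list(d0)
    for _ in range(horizon):
        yield d
        d = [
            sum(d[s] * pi[s][a] * P[s][a][s2] for s in range(n_s) for a in range(n_a))
            for s2 in range(n_s)
        ]


def occupancy_measure(P, pi, d0, gamma, horizon=2000):
    """rho(s, a) = pi(a | s) * sum_t gamma^t Pr(s_t = s), truncated at `horizon`."""
    n_s, n_a = len(P), len(P[0])
    rho = [[0.0] * n_a for _ in range(n_s)]
    for t, d in enumerate(state_distributions(P, pi, d0, horizon)):
        for s in range(n_s):
            for a in range(n_a):
                rho[s][a] += (gamma ** t) * d[s] * pi[s][a]
    return rho


def expected_return(P, R, pi, d0, gamma, horizon=2000):
    """eta(pi) computed directly as the expected discounted sum of rewards."""
    n_s, n_a = len(P), len(P[0])
    return sum(
        (gamma ** t)
        * sum(d[s] * pi[s][a] * R[s][a] for s in range(n_s) for a in range(n_a))
        for t, d in enumerate(state_distributions(P, pi, d0, horizon))
    )


rho = occupancy_measure(P, PI, D0, GAMMA)
eta_from_rho = sum(rho[s][a] * R[s][a] for s in range(2) for a in range(2))
eta_direct = expected_return(P, R, PI, D0, GAMMA)
# The policy can be read back from rho by normalising over actions.
recovered_pi = [[rho[s][a] / sum(rho[s]) for a in range(2)] for s in range(2)]
```

Under this convention the entries of `rho` sum to 1/(1 - gamma), and normalising each row of `rho` returns the policy that generated it, which is the kind of policy/occupancy correspondence the method relies on later.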

At the same time, the authors propose a lemma:

Problem Definition

Generally, RL tasks and environments do not provide a comprehensive reward and instead rely on sparse feedback indicating whether the goal is reached.

In this paper, the authors aim to develop a method that boosts exploration by effectively leveraging the demonstrations [math]\displaystyle{ D^E }[/math] from the expert policy [math]\displaystyle{ \pi_E }[/math] and maximizes [math]\displaystyle{ \eta(\pi) }[/math] in the sparse-reward environment. The authors define the demonstrations [math]\displaystyle{ D^E=\{\tau_1,\tau_2,...,\tau_N\} }[/math], where the i-th trajectory [math]\displaystyle{ \tau_i=\{(s_0^i,a_0^i),(s_1^i,a_1^i),...,(s_T^i,a_T^i)\} }[/math] is generated from the unknown expert policy [math]\displaystyle{ \pi_E }[/math].

Throughout the paper, they use [math]\displaystyle{ \pi_E }[/math] to denote the expert policy that gives a relatively good [math]\displaystyle{ \eta(\pi) }[/math], and use [math]\displaystyle{ \hat{\mathbb{E}}_D }[/math] to denote the empirical expectation estimated from the demonstrated trajectories [math]\displaystyle{ D^E }[/math]. The authors make a reasonable and necessary assumption on the quality of the expert policy [math]\displaystyle{ \pi_E }[/math].

Moreover, it is not necessary to ensure that the expert policy is advantageous over all policies. This is because POfD can learn a better policy than the expert policy by exploring on its own in later learning stages.

Method

Policy Optimization with Demonstration (POfD)

This method optimizes the policy by forcing it to explore in the nearby region of the expert policy, which is specified by several demonstrated trajectories [math]\displaystyle{ D^E }[/math] (as shown in Fig. 1), in order to avoid slow convergence or failure when the environment feedback is sparse. In other words, the authors encourage the policy [math]\displaystyle{ \pi }[/math] to explore by "following" the demonstrations [math]\displaystyle{ D^E }[/math]. Thus, a new learning objective is given: \[ \mathcal{L}(\pi_{\theta})=-\eta(\pi_{\theta})+\lambda_{1}D_{JS}(\pi_{\theta},\pi_{E})\] where [math]\displaystyle{ D_{JS}(\pi_{\theta},\pi_{E}) }[/math] is the Jensen-Shannon divergence between the current policy [math]\displaystyle{ \pi_{\theta} }[/math] and the expert policy [math]\displaystyle{ \pi_{E} }[/math], [math]\displaystyle{ \lambda_1 }[/math] is a trade-off parameter, and [math]\displaystyle{ \theta }[/math] is the policy parameter. According to Lemma 1, the authors use [math]\displaystyle{ D_{JS}(\rho_{\theta},\rho_{E}) }[/math] instead of [math]\displaystyle{ D_{JS}(\pi_{\theta},\pi_{E}) }[/math], because it is easier to optimize through adversarial training on demonstrations. The learning objective becomes: \[ \mathcal{L}(\pi_{\theta})=-\eta(\pi_{\theta})+\lambda_{1}D_{JS}(\rho_{\theta},\rho_{E})\]
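To make the objective concrete, here is a minimal sketch that evaluates the Jensen-Shannon divergence between two discrete distributions and plugs it into the loss above. It assumes the occupancy measures have been flattened over state-action pairs and normalised to sum to 1; the names `js_divergence` and `pofd_loss` are hypothetical, and the paper optimizes a lower bound rather than computing the divergence directly.

```python
import math


def kl_divergence(p, q):
    """KL(p || q) for discrete distributions; terms with p_i = 0 contribute 0."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def js_divergence(p, q):
    """Jensen-Shannon divergence: average KL of p and q to their mixture."""
    m = [(pi + qi) / 2.0 for pi, qi in zip(p, q)]
    return 0.5 * kl_divergence(p, m) + 0.5 * kl_divergence(q, m)


def pofd_loss(eta, rho_theta, rho_expert, lambda1):
    """L(pi_theta) = -eta(pi_theta) + lambda1 * D_JS(rho_theta, rho_E)."""
    return -eta + lambda1 * js_divergence(rho_theta, rho_expert)


same = js_divergence([0.25, 0.75], [0.25, 0.75])
disjoint = js_divergence([1.0, 0.0], [0.0, 1.0])
loss = pofd_loss(eta=1.0, rho_theta=[0.5, 0.5], rho_expert=[0.5, 0.5], lambda1=0.1)
```

The divergence is zero when the two distributions coincide and reaches its maximum of log 2 when their supports are disjoint, so the penalty term is bounded regardless of how far the policy strays from the expert.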

Benefits of Exploration with Demonstrations

The authors then analyze the benefits of POfD. First, consider the expression of the expected return in policy gradient methods [16]: \[ \eta(\pi)=\eta(\pi_{old})+\mathbb{E}_{\tau\sim\pi}[\sum_{t=0}^\infty\gamma^{t}A_{\pi_{old}}(s_t,a_t)]\] This expresses [math]\displaystyle{ \eta(\pi) }[/math] through the advantage over the policy [math]\displaystyle{ \pi_{old} }[/math] from the previous iteration, and it can be rewritten as \[ \eta(\pi)=\eta(\pi_{old})+\sum_{s}\rho_{\pi}(s)\sum_{a}\pi(a|s)A_{\pi_{old}}(s,a)\] Because of the complex dependency of [math]\displaystyle{ \rho_{\pi}(s) }[/math] on [math]\displaystyle{ \pi }[/math], policy gradient methods usually optimize the local first-order approximation to [math]\displaystyle{ \eta(\pi) }[/math] as a surrogate learning objective: \[ J_{\pi_{old}}(\pi)=\eta(\pi_{old})+\sum_{s}\rho_{\pi_{old}}(s)\sum_{a}\pi(a|s)A_{\pi_{old}}(s,a)\] Policy gradient methods improve [math]\displaystyle{ \eta(\pi) }[/math] monotonically by optimizing the above [math]\displaystyle{ J_{\pi_{old}}(\pi) }[/math] with a sufficiently small update step from [math]\displaystyle{ \pi_{old} }[/math] to [math]\displaystyle{ \pi }[/math] such that [math]\displaystyle{ D_{KL}^{max}(\pi, \pi_{old}) }[/math] is bounded [16] [17] [18]. POfD imposes an additional regularization [math]\displaystyle{ D_{JS}(\pi_{\theta}, \pi_{E}) }[/math] between [math]\displaystyle{ \pi_\theta }[/math] and [math]\displaystyle{ \pi_{E} }[/math] in order to encourage exploration around the regions demonstrated by the expert policy. Theorem 1 shows the benefit of this regularization.

In fact, POfD brings in another factor, [math]\displaystyle{ D_{JS}^{max}(\pi_{i}, \pi_{E}) }[/math], which makes full use of the advantage [math]\displaystyle{ {\hat \delta} }[/math] and adds improvements with a margin over pure policy gradient methods.

Optimization

For POfD, the authors choose to optimize a lower bound of the Jensen-Shannon divergence instead of optimizing the divergence directly, which is difficult. This optimization method is compatible with any policy gradient method. Theorem 2 gives the lower bound of [math]\displaystyle{ D_{JS}(\rho_{\theta}, \rho_{E}) }[/math]:

Thus, the occupancy measure matching objective can be written as: \[\sup_{D\in(0,1)^{\mathcal{S}\times \mathcal{A}}} \mathbb{E}_{\pi_{\theta}}[\log(D(s,a))]+\mathbb{E}_{\pi_{E}}[\log(1-D(s,a))]\]

where [math]\displaystyle{ D(s,a)=\frac{1}{1+e^{-U(s,a)}}: \mathcal{S}\times \mathcal{A} \rightarrow (0,1) }[/math] is an arbitrary mapping function followed by a sigmoid activation used for scaling; the [math]\displaystyle{ D }[/math] attaining the supremum acts like a discriminator that distinguishes whether a state-action pair comes from the current policy or from the expert policy. To avoid overfitting, the authors add the causal entropy [math]\displaystyle{ -H(\pi_{\theta}) }[/math] as a regularization term. Thus, the learning objective is: \[\min_{\theta}\mathcal{L}=-\eta(\pi_{\theta})-\lambda_{2}H(\pi_{\theta})+\lambda_{1} \sup_{D\in(0,1)^{\mathcal{S}\times \mathcal{A}}} \left(\mathbb{E}_{\pi_{\theta}}[\log(D(s,a))]+\mathbb{E}_{\pi_{E}}[\log(1-D(s,a))]\right)\] At this point, the problem closely resembles the minimax problem of Generative Adversarial Networks (GANs) [19]. The difference is that the discriminative model D of GANs is well-trained, whereas the expert policy of POfD is not optimal. Now suppose D is parameterized by w. With the objective as written, maximizing over w pushes [math]\displaystyle{ D_w }[/math] toward 1 on state-action pairs from the current policy and toward 0 on pairs from the expert. Thus, the minimax learning objective is: \[\min_{\theta}\max_{w}\mathcal{L}=-\eta(\pi_{\theta})-\lambda_{2}H (\pi_{\theta})+\lambda_{1}( \mathbb{E}_{\pi_{\theta}}[\log(D_{w}(s,a))]+\mathbb{E}_{\pi_{E}}[\log(1-D_{w}(s,a))])\] The minimax learning objective can be rewritten by substituting the expression of [math]\displaystyle{ \eta(\pi) }[/math]: \[\min_{\theta}\max_{w}-\mathbb{E}_{\pi_{\theta}}[r'(s,a)]-\lambda_{2}H (\pi_{\theta})+\lambda_{1}\mathbb{E}_{\pi_{E}}[\log(1-D_{w}(s,a))]\] where [math]\displaystyle{ r'(s,a)=r(s,a)-\lambda_{1}\log(D_{w}(s,a)) }[/math] is the reshaped reward function.
The above objective can be optimized efficiently by alternately updating the policy parameters θ and the discriminator parameters w. With the policy fixed, the gradient used to update the discriminator is: \[\mathbb{E}_{\pi_{\theta}}[\nabla_{w}\log(D_{w}(s,a))]+\mathbb{E}_{\pi_{E}}[\nabla_{w}\log(1-D_{w}(s,a))]\] Then, with the discriminator [math]\displaystyle{ D_w }[/math] fixed, the reshaped policy gradient is: \[\nabla_{\theta}\mathbb{E}_{\pi_{\theta}}[r'(s,a)]=\mathbb{E}_{\pi_{\theta}}[\nabla_{\theta}\log\pi_{\theta}(a|s)Q'(s,a)]\] where [math]\displaystyle{ Q'(\bar{s},\bar{a})=\mathbb{E}_{\pi_{\theta}}[r'(s,a)|s_0=\bar{s},a_0=\bar{a}] }[/math].
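The reshaped reward and the discriminator objective above can be sketched as plain functions. This is a minimal illustration under the sign convention of the equations in this section (the discriminator output is large on policy samples, so expert-like pairs with small output receive a bonus); the function names are hypothetical and the discriminator outputs are passed in as plain numbers rather than produced by a trained network.

```python
import math


def reshaped_reward(r, d, lambda1):
    """r'(s, a) = r(s, a) - lambda1 * log D_w(s, a), with d = D_w(s, a) in (0, 1)."""
    return r - lambda1 * math.log(d)


def discriminator_objective(d_policy, d_expert):
    """Sample estimate of E_pi[log D_w] + E_piE[log(1 - D_w)], maximised over w.

    d_policy: discriminator outputs on pairs sampled from the current policy.
    d_expert: discriminator outputs on pairs from the expert demonstrations.
    """
    policy_term = sum(math.log(d) for d in d_policy) / len(d_policy)
    expert_term = sum(math.log(1.0 - d) for d in d_expert) / len(d_expert)
    return policy_term + expert_term


# With zero environment reward, a pair that looks expert-like (small D_w)
# earns a larger reshaped reward than one that looks policy-like (large D_w).
bonus_expert_like = reshaped_reward(r=0.0, d=0.1, lambda1=1.0)
bonus_policy_like = reshaped_reward(r=0.0, d=0.9, lambda1=1.0)
```

Because the bonus depends on the current discriminator, the reshaped reward changes during training, which is what makes the shaping dynamic rather than a fixed potential.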

At the end, Algorithm 1 gives the detailed process.

Discussion on Existing LfD Methods

To connect with the proposed POfD method, interpretation of the existing methods DQfD and DDPGfD through occupancy measure matching is provided. Both of the existing methods leverage demonstrations to aid exploration in RL.

DQfD

DQfD [2] puts the demonstrations into a replay memory D and keeps them throughout the Q-learning process. The objective for DQfD is: \[J_{DQfD}={\hat{\mathbb{E}}}_{D}[(R_t(n)-Q_w(s_t,a_t))^2]+\alpha{\hat{\mathbb{E}}}_{D^E}[(R_t(n)-Q_w(s_t,a_t))^2]\] The second term can be rewritten as [math]\displaystyle{ {\hat{\mathbb{E}}}_{D^E}[(R_t(n)-Q_w(s_t,a_t))^2]={\hat{\mathbb{E}}}_{D^E}[(\hat{\rho}_E(s,a)-\rho_{\pi}(s,a))^{2}r^2(s,a)] }[/math], which can be regarded as a regularization forcing the current policy's occupancy measure to match the expert's empirical occupancy measure, weighted by the potential reward. Thus, minimizing the objective with expert demonstrations and self-generated off-policy data is equivalent to imposing an occupancy measure matching regularization on the original DQN objective.

DDPGfD

DDPGfD [3] also puts the demonstrations into a replay memory D, but it is based on an actor-critic framework [21]. The objective for DDPGfD is the same as for DQfD. Its policy gradient is: \[\nabla_{\theta}J_{DDPGfD}\approx \mathbb{E}_{s,a}[\nabla_{a}Q_w(s,a)\nabla_{\theta}\pi_{\theta}(s)], a=\pi_{\theta}(s) \] As this equation shows, the policy is updated using the learned Q-network [math]\displaystyle{ Q_w }[/math] rather than the demonstrations [math]\displaystyle{ D^{E} }[/math] directly. DDPGfD shares the same objective function for [math]\displaystyle{ Q_w }[/math] as DQfD, so the two methods leverage demonstrations in the same way: the demonstrations in DQfD and DDPGfD induce an occupancy measure matching regularization.

Although the above replay-memory-based LfD methods can benefit RL algorithms to some extent in sparse-reward environments, they are limited in how fully they exploit the demonstration data. First, such a paradigm uses expert trajectories only as learning references, so their effect may be significantly underexploited when demonstrations are few, as indicated by the authors' experiments. Second, to be compatible with the data collected during training, the demonstrated trajectories must be associated with rewards for each state transition. However, the rewards in demonstrations may differ from the ones used for learning the policy in the current environment [25], or they may be unavailable.

Experiments

Goal

The authors aim to investigate 1) whether POfD can aid exploration by leveraging a few demonstrations, even when the demonstrations are imperfect, and 2) whether POfD can succeed and achieve high empirical return, especially in environments where reward signals are sparse and rare.

Settings

The authors conduct the experiments on 8 physical control tasks, ranging from low-dimensional to high-dimensional spaces and including naturally sparse environments, based on OpenAI Gym [20] and MuJoCo (Multi-Joint dynamics with Contact) [5]. Gym is a toolkit for developing and comparing reinforcement learning algorithms; it supports teaching agents everything from walking to playing games like Pong or Pinball. MuJoCo is a physics engine aiming to facilitate research and development in robotics, biomechanics, graphics and animation, and other areas where fast and accurate simulation is needed. Because the environments differ from one another, the authors introduce 4 ways to sparsify their built-in dense rewards. TYPE1: a reward of +1 is given when the agent reaches the terminal state, and 0 otherwise. TYPE2: a reward of +1 is given when the agent survives for a while. TYPE3: a reward of +1 is given every time the agent moves forward over a specific number of units in MuJoCo environments. TYPE4: specially designed for InvertedDoublePendulum, a reward of +1 is given when the second pole stays above a specific height of 0.89. The details are shown in Table 1. Moreover, only a single imperfect trajectory is used as the demonstration in this paper. The authors collect the demonstrations by insufficiently training an agent with TRPO (Trust Region Policy Optimization) in the corresponding dense environment.
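The four sparsification schemes can be sketched as small reward functions. This is a hedged illustration rather than the authors' code: the argument names, the `survive_steps` and `unit` thresholds, and the choice to measure TYPE3 progress from the last rewarded position are all assumptions, since the summary does not specify them. Only the 0.89 height for TYPE4 comes from the text.

```python
def type1_reward(reached_terminal):
    """TYPE1: +1 when the agent reaches the terminal (goal) state, else 0."""
    return 1.0 if reached_terminal else 0.0


def type2_reward(step, survive_steps):
    """TYPE2 (assumed form): +1 each time the agent has survived another
    `survive_steps` time steps; `survive_steps` is a hypothetical threshold."""
    return 1.0 if step > 0 and step % survive_steps == 0 else 0.0


class Type3Reward:
    """TYPE3 (assumed form): +1 every time the agent moves forward by `unit`
    from the position at which it was last rewarded."""

    def __init__(self, unit, start_x=0.0):
        self.unit = unit
        self.next_mark = start_x + unit

    def __call__(self, x):
        if x >= self.next_mark:
            self.next_mark = x + self.unit
            return 1.0
        return 0.0


def type4_reward(second_pole_height, threshold=0.89):
    """TYPE4: +1 while the second pole of InvertedDoublePendulum is above 0.89."""
    return 1.0 if second_pole_height > threshold else 0.0


forward = Type3Reward(unit=1.0)
type3_trace = [forward(x) for x in (0.5, 1.25, 2.0, 2.25)]
```

In practice such functions would replace the dense reward returned by the environment at each step, while the rest of the environment is left unchanged.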

Baselines

The authors compare POfD against 5 strong baselines:

- training the policy with TRPO [17] in dense environments, which is called expert

- training the policy with TRPO [17] in sparse environments

- applying GAIL [14] to learn the policy from demonstrations

- DQfD [2]

- DDPGfD [3]

1. Trust Region Policy Optimization (TRPO) is an iterative procedure for optimizing policies with guaranteed monotonic improvement. The practical algorithm is obtained by making several approximations to the theoretically justified procedure. It is similar to natural policy gradient methods and is effective for optimizing neural network policies.

2. Generative Adversarial Imitation Learning (GAIL) is a method that directly extracts a policy from data, as if the policy were obtained by reinforcement learning following inverse reinforcement learning.

3. Deep Q-learning from Demonstrations (DQfD) is a method that leverages relatively small amounts of demonstration data to speed up the learning process, and is able to automatically assess the necessary ratio of demonstration data while learning thanks to a prioritized replay mechanism.

4. DDPGfD (Deep Deterministic Policy Gradients from Demonstrations) uses prioritized replay to enable efficient propagation of the reward information, which is essential in problems with sparse rewards.

Results

First, the authors test the performance of POfD in sparse control environments with discrete actions. From Table 1, POfD achieves performance comparable to the policy learned in dense environments. From Figure 2, only POfD succeeds in exploring sufficiently and achieves strong performance in both sparse environments. TRPO [17] and DQfD [2] fail to explore, and GAIL [14] converges to the imperfect demonstration in MountainCar [22].

Then, the authors test the performance of POfD in sparse environments with continuous action spaces. From Figure 3, POfD achieves expert-level performance in terms of accumulated rewards and surpasses the other strong baselines, including the policy trained with TRPO. Watching the learning process of the different methods, TRPO consistently fails to explore the environments when the feedback is sparse, except for HalfCheetah. This may be because there is no terminal state in HalfCheetah, so a random agent can perform reasonably well as long as the time horizon is sufficiently long. This is shown in Figure 3, where the improvement of TRPO begins to show after 400 iterations. DDPGfD and GAIL share a common drawback: during training, they both converge to the imperfect demonstration data. For HalfCheetah, GAIL fails to converge and DDPGfD converges to an even worse point. This is expected because the policy and value networks tend to over-fit when data is scarce, so the training of GAIL and DDPGfD is severely biased by the imperfect data. Finally, POfD can effectively explore the environment with the help of demonstration-based intrinsic reward reshaping, and succeeds consistently across different tasks in terms of both learning stability and convergence speed.

The authors also run a locomotion task, Humanoid, which teaches a human-like robot to walk. The state space has 376 dimensions, which makes the task very hard. Even so, POfD still outperforms all three baseline methods, as they fail to learn policies in such a sparse-reward environment.

In the Reacher environment, the goal is to control a robot arm to touch an object. The location of the object is random for each instantiation. The environment reward is sparse: every time the arm reaches the ball and holds for a while (e.g., 5 time steps), it receives a reward of +1; otherwise, it gets zero reward. The authors select 15 random trajectories as demonstration data, and the performance of POfD is much better than that of the expert, while all other baseline methods fail.

Conclusion

This paper proposes POfD, which acquires knowledge from a limited amount of imperfect demonstration data to aid exploration in environments with sparse feedback. It is compatible with any policy gradient method. POfD induces implicit dynamic reward shaping and brings provable benefits for policy improvement. Moreover, the experimental results show the validity and effectiveness of POfD in encouraging the agent to explore around the nearby region of the expert policy and learn better policies. The key contribution is that POfD helps the agent work with few and imperfect demonstrations in an environment with sparse rewards.

Critique

- The paper proposes a novel demonstration-based policy optimization method. During policy optimization, POfD reshapes the reward function. The reshaped reward guides the agent to imitate the expert behavior when the environment reward is sparse and to explore on its own when an environment reward is available. This takes full advantage of the demonstration data, and it does not require the expert policy to be optimal.

- POfD can be combined with any policy gradient method. Its performance surpasses five strong baselines and is comparable to that of agents trained in the dense-reward environment.

- The paper is well structured and the flow of ideas is easy to follow. In the related work section, the authors clearly explain the similarities and differences between POfD and prior approaches.

- The scalability of the method is demonstrated. The experimental environments range from low-dimensional to high-dimensional spaces and from discrete to continuous action spaces. For future work, can the method be applied in the real world?

- It is doubtful whether a trajectory from a policy that was insufficiently trained in a dense-reward environment is an appropriate choice of imperfect demonstration.

- In this paper, performance is judged only by the cumulative reward. Could other evaluation criteria be considered, for example the convergence rate?

- The performance of this algorithm hinges on the assumption that expert demonstrations are near optimal in the action space. As seen in Figure 3, there appears to be an upper bound on performance near (or just above) the expert's performance, which may indicate a performance ceiling. In games where near-optimal policies can differ greatly (e.g., offensive or defensive strategies in chess), the success of the model will depend on selecting expert demonstrations that are closest to a truly optimal policy (i.e., just because a policy is the current expert, it does not mean it resembles the true optimal policy).

References

[1] Nair, A., McGrew, B., Andrychowicz, M., Zaremba, W., and Abbeel, P. Overcoming exploration in reinforcement learning with demonstrations. arXiv preprint arXiv:1709.10089, 2017.

[2] Hester, T., Vecerik, M., Pietquin, O., Lanctot, M., Schaul, T., Piot, B., Sendonaris, A., Dulac-Arnold, G., Osband, I., Agapiou, J., et al. Learning from demonstrations for real world reinforcement learning. arXiv preprint arXiv:1704.03732, 2017.

[3] Večerík, M., Hester, T., Scholz, J., Wang, F., Pietquin, O., Piot, B., Heess, N., Rothörl, T., Lampe, T., and Riedmiller, M. Leveraging demonstrations for deep reinforcement learning on robotics problems with sparse rewards. arXiv preprint arXiv:1707.08817, 2017.

[4] Silver, D., Huang, A., Maddison, C. J., Guez, A., Sifre, L., Van Den Driessche, G., Schrittwieser, J., Antonoglou, I., Panneershelvam, V., Lanctot, M., et al. Mastering the game of Go with deep neural networks and tree search. Nature, 529(7587):484–489, 2016.

[5] Todorov, E., Erez, T., and Tassa, Y. MuJoCo: A physics engine for model-based control. In Intelligent Robots and Systems (IROS), 2012 IEEE/RSJ International Conference on, pp. 5026–5033. IEEE, 2012.

[6] Schaal, S. Learning from demonstration. In Advances in neural information processing systems, pp. 1040–1046, 1997.

[7] Kim, B., Farahmand, A.-m., Pineau, J., and Precup, D. Learning from limited demonstrations. In Advances in Neural Information Processing Systems, pp. 2859–2867, 2013.

[8] Piot, B., Geist, M., and Pietquin, O. Boosted Bellman residual minimization handling expert demonstrations. In Joint European Conference on Machine Learning and Knowledge Discovery in Databases, pp. 549–564. Springer, 2014.

[9] Lakshminarayanan, A. S., Ozair, S., and Bengio, Y. Reinforcement learning with few expert demonstrations. In NIPS workshop, 2016.

[10] Brys, T., Harutyunyan, A., Suay, H. B., Chernova, S., Taylor, M. E., and Nowé, A. Reinforcement learning from demonstration through shaping. In IJCAI, pp. 3352–3358, 2015.

[11] Ng, A. Y., Russell, S. J., et al. Algorithms for inverse reinforcement learning. In ICML, pp. 663–670, 2000.

[12] Syed, U. and Schapire, R. E. A game-theoretic approach to apprenticeship learning. In Advances in neural information processing systems, pp. 1449–1456, 2008.

[13] Syed, U., Bowling, M., and Schapire, R. E. Apprenticeship learning using linear programming. In Proceedings of the 25th international conference on Machine learning, pp. 1032–1039. ACM, 2008.

[14] Ho, J. and Ermon, S. Generative adversarial imitation learning. In Advances in Neural Information Processing Systems, pp. 4565–4573, 2016.

[15] Sutton, R. S. and Barto, A. G. Reinforcement learning: An introduction, volume 1. MIT Press, Cambridge, 1998.

[16] Kakade, S. M. A natural policy gradient. In Advances in neural information processing systems, pp. 1531–1538, 2002.

[17] Schulman, J., Levine, S., Abbeel, P., Jordan, M., and Moritz, P. Trust region policy optimization. In Proceedings of the 32nd International Conference on Machine Learning (ICML-15), pp. 1889–1897, 2015.

[18] Schulman, J., Wolski, F., Dhariwal, P., Radford, A., and Klimov, O. Proximal policy optimization algorithms. arXiv preprint arXiv:1707.06347, 2017.

[19] Goodfellow, I., Pouget-Abadie, J., Mirza, M., Xu, B., Warde-Farley, D., Ozair, S., Courville, A., and Bengio, Y. Generative adversarial nets. In Advances in neural information processing systems, pp. 2672–2680, 2014.

[20] Brockman, G., Cheung, V., Pettersson, L., Schneider, J., Schulman, J., Tang, J., and Zaremba, W. OpenAI Gym, 2016.

[21] Lillicrap, T. P., Hunt, J. J., Pritzel, A., Heess, N., Erez, T., Tassa, Y., Silver, D., and Wierstra, D. Continuous control with deep reinforcement learning. arXiv preprint arXiv:1509.02971, 2015.

[22] Moore, A. W. Efficient memory-based learning for robot control. 1990.

[23] Zhu, Y., Wang, Z., Merel, J., Rusu, A., Erez, T., Cabi, S., Tunyasuvunakool, S., Kramar, J., Hadsell, R., de Freitas, N., et al. Reinforcement and imitation learning for diverse visuomotor skills. arXiv preprint arXiv:1802.09564, 2018.

[24] Li, Y., Song, J., and Ermon, S. Infogail: Interpretable imitation learning from visual demonstrations. In Advances in Neural Information Processing Systems, pp. 3815–3825, 2017.

[25] Ziebart, B. D., Maas, A. L., Bagnell, J. A., and Dey, A. K. Maximum entropy inverse reinforcement learning. In AAAI, volume 8, pp. 1433–1438. Chicago, IL, USA, 2008.

[26] Pathak, D., Mahmoudieh, P., Luo, G., Agrawal, P., Chen, D., Shentu, Y., Shelhamer, E., Malik, J., Efros, A. A., and Darrell, T. Zero-Shot Visual Imitation. In International Conference on Learning Representations (ICLR), 2018.