Co-teaching: Robust Training Deep Neural Networks with Extremely Noisy Labels | Co-teaching: Robust Training Deep Neural Networks with Extremely Noisy Labels | ||

==Contributions== | ==Contributions== | ||

The paper proposes a novel approach to training deep neural networks on data with noisy labels. The proposed architecture, named ‘co-teaching’, maintains two networks simultaneously. The experiments are conducted on noisy versions of MNIST, CIFAR-10 and CIFAR-100 datasets. Empirical results demonstrate that, under extremely noisy circumstances (i.e., 45% of noisy labels), the robustness | The paper proposes a novel approach to training deep neural networks on data with noisy labels. The proposed architecture, named ‘co-teaching’, maintains two networks simultaneously, which focuses on training on selected clean instances and avoids estimating the noise transition matrix. In addition, using stochastic optimization with momentum to train the deep networks and clean data can be memorized by nonlinear deep networks, which becomes robust. The experiments are conducted on noisy versions of MNIST, CIFAR-10 and CIFAR-100 datasets. Empirical results demonstrate that, under extremely noisy circumstances (i.e., 45% of noisy labels), the robustness of deep learning models trained by the co-teaching approach is much superior to state-of-the-art baselines | ||

of deep learning models trained by the | |||

baselines | |||

==Terminology== | ==Terminology== | ||

Ground-Truth Labels: The proper objective labels (i.e. the real, or ‘true’, labels) of the data. | Ground-Truth Labels: The proper objective labels (i.e. the real, or ‘true’, labels) of the data. | ||

Noisy Labels: Labels that are corrupted (either manually or through the data collection process) from ground-truth labels. | Noisy Labels: Labels that are corrupted (either manually or through the data collection process) from ground-truth labels. This can result in false positives. | ||

=Intuition= | =Intuition= | ||

The Co-teaching architecture maintains two networks with different learning abilities simultaneously. The reason why Co-teaching is more robust can be explained as follows. Usually | The Co-teaching architecture maintains two networks with different learning abilities simultaneously. The reason why Co-teaching is more robust can be explained as follows. Usually when learning on a batch of noisy data, only the error from the network itself is transferred back to facilitate learning. But in the case of Co-teaching, the two networks are able to filter different type of errors, and flow back to itself and the other network. As a result, the two models learn together, from the network itself and the partner network. | ||

=Motivation= | =Motivation= | ||

| Line 22: | Line 20: | ||

=Related Works= | =Related Works= | ||

Some approaches use statistical learning methods for the problem of learning from extremely noisy labels. These approaches can be divided into 3 strands: surrogate loss, noise estimation, and probabilistic | 1. Statistical learning methods: Some approaches use statistical learning methods for the problem of learning from extremely noisy labels. These approaches can be divided into 3 strands: surrogate loss, noise estimation, and probabilistic modelling. In the surrogate loss category, one work proposes an unbiased estimator to provide the noise corrected loss approach. Another work presented a robust non-convex loss, which is the special case in a family of robust losses. In the noise rate estimation category, some authors propose a class-probability estimator using order statistics on the range of scores. Another work presented the same estimator using the slope of ROC curve. In the probabilistic modelling category, there is a two coin model proposed to handle noise labels from multiple annotators. | ||

2. Deep learning methods: There are also deep learning approaches that can be used to approach data with noisy labels. One work proposed a unified framework to distill knowledge from clean labels and knowledge graphs. Another work trained a label cleaning network by a small set of clean labels and used it to reduce the noise in large-scale noisy labels. There is also a proposed joint optimization framework to learn parameters and estimate true labels simultaneously. | |||

Another work leverages an additional validation set to adaptively assign weights to training examples in every iteration. One particular paper ads a crowd layer after the output layer for noisy labels from multiple annotators. | |||

3. Learning to teach methods: It is another approach to this problem. The methods are made up by the teacher and student networks. The teacher network selects more informative instances for better training of student networks. Most works did not account for noisy labels, with exception to MentorNet, which applied the idea on data with noisy labels. | |||

=Co-Teaching Algorithm= | |||

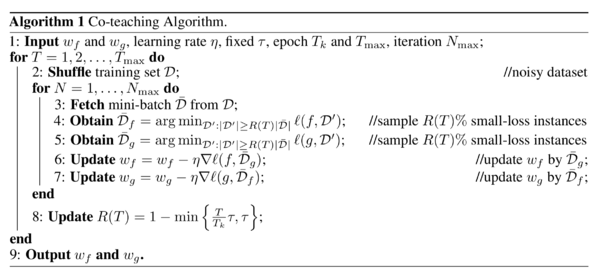

[[File:Co-Teaching_Algorithm.png|600px|center]] | |||

The idea as shown in the algorithm above is to train two deep networks simultaneously. In each mini-batch using mini-batch gradient descent, each network selects its small-loss instances as useful knowledge and then teaches these useful instances to the peer network. <math>R(T)</math> governs the percentage of small-loss instances to be used in updating the parameters of each network. | |||

=Summary of Experiment= | =Summary of Experiment= | ||

==Proposed Method== | ==Proposed Method== | ||

The proposed co-teaching method | The proposed co-teaching method trains two networks simultaneously, and samples instances with small loss at each mini batch as useful knowledge. The sample of small-loss instances is then taught to the peer network for updating the parameters. | ||

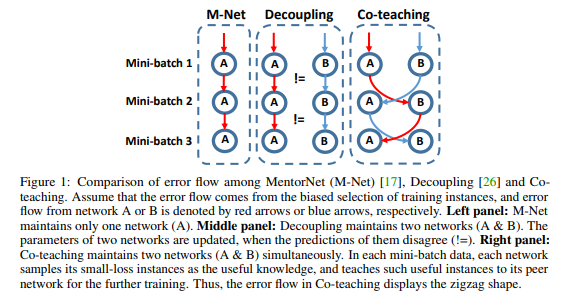

[[File:Co-Teaching Fig 1.png|600px|center]] | [[File:Co-Teaching Fig 1.png|600px|center]] | ||

The co-teaching method relies on research that suggests deep networks learn clean and easy patterns in initial epochs, but are susceptible to overfitting noisy labels as the number of epochs grows. To counteract this, the co-teaching method reduces the mini-batch size by gradually increasing a drop rate (i.e., noisy instances with higher loss will be dropped at an increasing rate). | The co-teaching method relies on research that suggests deep networks learn clean and easy patterns in initial epochs, but are susceptible to overfitting noisy labels as the number of epochs grows. To counteract this, the co-teaching method reduces the mini-batch size by gradually increasing a drop rate (i.e., noisy instances with higher loss will be dropped at an increasing rate). | ||

The mini-batches are swapped between peer networks due to the underlying intuition that different classifiers will generate different decision boundaries. Swapping the mini-batches constitutes a sort of ‘peer-reviewing’ that promotes noise reduction since the error from a network is not directly transferred back to itself. | The mini-batches are swapped between peer networks due to the underlying intuition that different classifiers will generate different decision boundaries. Swapping the mini-batches constitutes a sort of ‘peer-reviewing’ that promotes noise reduction since the error from a network is not directly transferred back to itself. To summarize, as error from one network will not be directly transferred back itself, the authors expect that the Co-teaching method will be able to deal with heavier noise compared with the self-evolving one. | ||

==Dataset Corruption== | ==Dataset Corruption== | ||

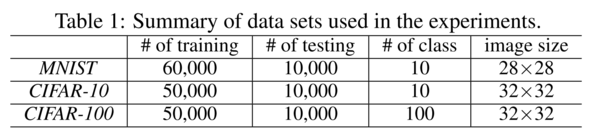

To simulate learning with noisy labels, the datasets (which are clean by default) are manually corrupted by applying a noise transformation matrix. Two methods are used for generating such noise transformation matrices: pair flipping and symmetry. | The datasets incorporated by this paper include MNIST, CIFAR-10 and CIFAR-100. A summary of these datasets are shown as below. | ||

[[File:co_teaching_data.png|600px|center]] | |||

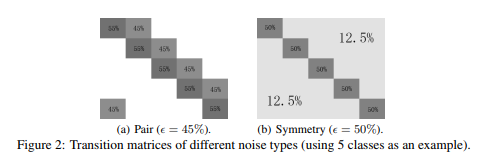

To simulate learning with noisy labels, the datasets (which are clean by default) are manually corrupted by applying a noise transformation matrix<math>Q</math>, where where <math>Q_{ij} = Pr(\widetilde{y} = j|y = i)</math> given that noisy <math>\widetilde{y}</math> is flipped from clean <math>y</math>. Two methods are used for generating such noise transformation matrices: pair flipping and symmetry. | |||

[[File:Co-Teaching Fig 2.png|600px|center]] | [[File:Co-Teaching Fig 2.png|600px|center]] | ||

Three noise conditions are simulated for comparing co-teaching with baseline methods. | Three noise conditions are simulated for comparing co-teaching with baseline methods. | ||

Note: Corruption of Dataset here means randomly choosing a wrong label instead of the target label by applying noise. | |||

{| class="wikitable" | {| class="wikitable" | ||

{| border="1" cellpadding="3" | {| border="1" cellpadding="3" | ||

| Line 50: | Line 60: | ||

| Pair Flipping || 45% || Almost half of the instances have noisy labels. Simulates erroneous labels which are similar to true labels. | | Pair Flipping || 45% || Almost half of the instances have noisy labels. Simulates erroneous labels which are similar to true labels. | ||

|- | |- | ||

| Symmetry || 50% || Half of the instances have noisy labels. Further rationale can be found at [1]. | | Symmetry || 50% || Half of the instances have noisy labels. Labels have a constant probability of being corrupted. Further rationale can be found at [1]. | ||

|- | |- | ||

| Symmetry || 20% || Verify the robustness of co-teaching in a low-level noise scenario. | | Symmetry || 20% || Verify the robustness of co-teaching in a low-level noise scenario. | ||

|} | |} | ||

|} | |} | ||

==Baseline Comparisons== | ==Baseline Comparisons== | ||

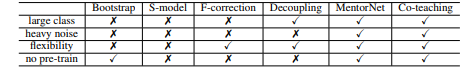

The co-teaching method is compared with several baseline approaches, which have varying: | The co-teaching method is compared with several baseline approaches, which have varying: | ||

| Line 64: | Line 75: | ||

[[File:Co-Teaching Fig 3.png|600px|center]] | [[File:Co-Teaching Fig 3.png|600px|center]] | ||

===Bootstrap=== | ===Bootstrap=== | ||

The general idea behind bootstrapping is to dynamically change (correct) noisy labels during training. The idea is to take a value derived from the original and predicted class. The final label is some convex combination of the two. It should be noted that the weighting of the prediction is increased over time to account for the model itself improving. Of course, this procedure needs to be finely tuned to prevent it from rampantly changing correct labels before it becomes accurate. [2]. | |||

===S-Model=== | ===S-Model=== | ||

Using an additional softmax layer to model the noise transition matrix [3]. | Using an additional softmax layer to model the noise transition matrix [3]. | ||

| Line 72: | Line 84: | ||

Two separate classifiers are used in this technique. Parameters are updated using only the samples that are classified differently between the two models [5]. | Two separate classifiers are used in this technique. Parameters are updated using only the samples that are classified differently between the two models [5]. | ||

===MentorNet=== | ===MentorNet=== | ||

A | A mentor network weights the probability of data instances being clean/noisy in order to train the student network on cleaner instances [6]. | ||

As shown in the above table - few of the advantages of Co-teaaching method include - Co-teaching | |||

method does not rely on any specific network architectures, which can also deal with a large number of classes and is more robust to noise. Besides, it can be trained from scratch. This makes teaching more appealing for practical usage. | |||

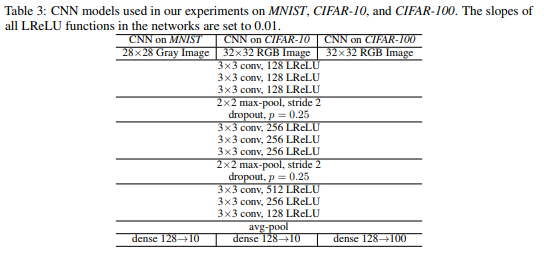

==Implementation Details== | ==Implementation Details== | ||

Two CNN models using the same architecture (shown below) are used as the peer networks for the co-teaching method. They are initialized with different parameters in order to be significantly different from one another (different initial parameters can lead to different local minima). An Adam optimizer (momentum=0.9), a learning rate of 0.001, a batch size of 128, and 200 epochs are used for each dataset. | Two CNN models using the same architecture (shown below) are used as the peer networks for the co-teaching method. They are initialized with different parameters in order to be significantly different from one another (different initial parameters can lead to different local minima). An Adam optimizer (momentum=0.9), a learning rate of 0.001, a batch size of 128, and 200 epochs are used for each dataset. The networks also utilize dropout and batch normalization. | ||

[[File: Co-Teaching Table 3.png|center]] | [[File: Co-Teaching Table 3.png|center]] | ||

=Results and Discussion= | =Results and Discussion= | ||

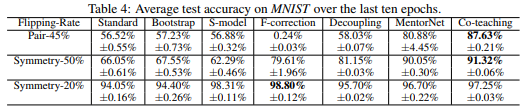

The co-teaching algorithm is compared to the baseline approaches under the noise conditions previously described. The results are as follows. | The co-teaching algorithm is compared to the baseline approaches under the noise conditions previously described. The results are as follows. | ||

==MNIST== | ==MNIST== | ||

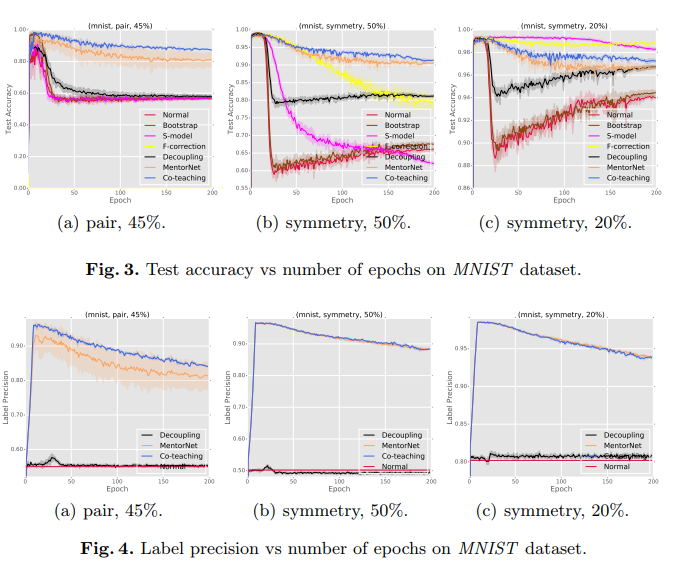

The results of testing on the MNIST dataset are shown below. The Symmetry-20% case can be taken as a near-baseline; all methods perform well. However, under the Symmetry-50% case, all methods except MentorNet and Co-Teaching drop below 90% accuracy. Under the Pair-45% case, all methods except MentorNet and Co-Teaching drop below 60%. Under both high-noise conditions, the Co-Teaching method produces the highest accuracy. Similar patterns can be seen in the two additional sets of test results, though the specific accuracy values are different. Co-Teaching performs best under the high-noise situations | |||

The images labelled 'Figure 3' show test accuracy with respect to epoch of the various algorithms. Many algorithms show evidence of over-fitting or being influenced by noisy data, after reaching initial high accuracy. MentorNet and Co-Teaching experience this less than other methods, and Co-Teaching generally achieves higher accuracy than MentorNet. | |||

Robustness of the proposed method to noise which plays an important rule in the evaluation, is evident in the plots which is better or comparable to the other methods. | |||

[[File:Co-Teaching Table 4.png|550px|center]] | [[File:Co-Teaching Table 4.png|550px|center]] | ||

[[File:Co-Teaching Graphs MNIST.PNG|center]] | [[File:Co-Teaching Graphs MNIST.PNG|center]] | ||

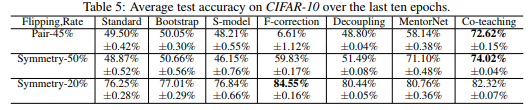

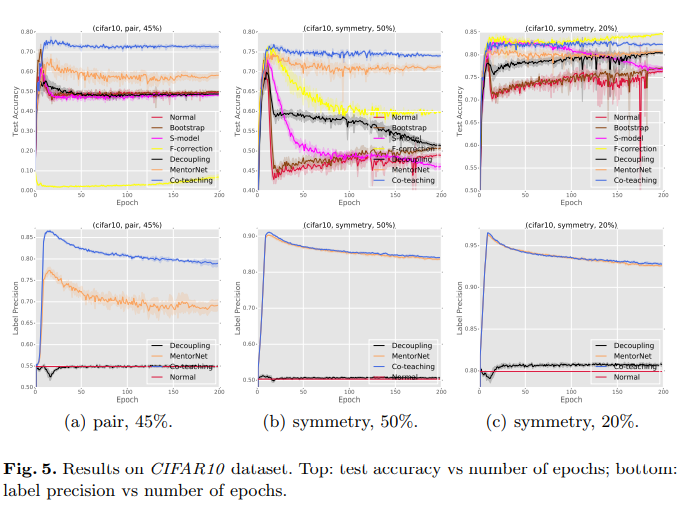

==CIFAR10== | ==CIFAR10== | ||

The observations here are consistently the same as these for MNIST dataset. | |||

[[File:Co-Teaching Table 5.png|550px|center]] | [[File:Co-Teaching Table 5.png|550px|center]] | ||

| Line 90: | Line 115: | ||

[[File: Co-Teaching Graphs CIFAR100.PNG|center]] | [[File: Co-Teaching Graphs CIFAR100.PNG|center]] | ||

==Choice of R(T) and <math> \tau</math>== | |||

There were some principles they followed when it came to choosing R(T) and <math> \tau</math>. R(T)=1, there was no instance needed at the beginning. They could safely update parameters in the early stage using the whole noise data since the deep neural networks would not memorize the noisy data. However, they need to drop more instances at the later stage. Because the model would eventually try to fit noisy data. | |||

R(T)=1-<math> \tau </math> *min{<math>T^{c}/T_{k},1 </math>} with <math> \tau=\epsilon </math>, where <math> \epsilon </math> is noise level. | |||

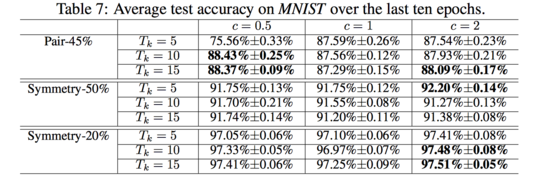

In this case, we consider c={0.5,1,2}. From Table 7, the test accuracy is stable. | |||

[[File: Co-Teaching Table 7.png|550px|center]] | |||

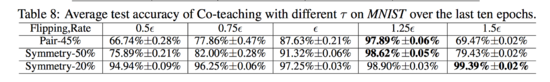

For <math> \tau</math>, we consider <math> \tau={0.5,0.75,1,1.25,1.5}\epsilon</math>. From Table 8, the performance can be improved with dropping more instances. | |||

[[File: Co-Teaching Table 8.png|550px|center]] | |||

=Conclusions= | =Conclusions= | ||

The main goal of the paper is to introduce “Co-teaching” learning paradigm that uses two deep neural networks learning | The main goal of the paper is to introduce the “Co-teaching” learning paradigm that uses two deep neural networks learning simultaneously to avoid noisy labels. Experiments are performed on several datasets such as MNIST, CIFAR-10, and CIFAR-100. The performance varied depending on the noise level in different scenarios. In the simulated ‘extreme noise’ scenarios, (pair-45% and symmetry-50%), the co-teaching methods outperforms baseline methods in terms of accuracy. This suggests that the co-teaching method is superior to the baseline methods in scenarios of extreme noise. The co-teaching method also performs competitively in the low-noise scenario (symmetry-20%). | ||

=Future Work= | |||

For future work, the paper can be extended in following ways: First , the the Co-teaching program can be adapted to train deep models under weak supervisions , e.g positive and unlabeled data. Second theoretical guarantees for Co-teaching can be investigated. The current approach seems to be have potential application in eliminating noisy labels/data from biomedical signals for example in the case of EEG data. This is important as EEG data are generally collected based on an experimental protocol and under controlled lab conditions. When data is collected in this way, even though the underlying brain process does not correspond to the EEG signals being collected, they can be labelled incorrectly based on the experimental protocol. Such cases of wrong labeling/data need to be eliminated from the training process and this is one scenario where co-teaching could possibly be applied. Also, this method seems to have potential application in data collected via crowd-sourcing or same data being labelled by multiple human subjects. Further, there is no analysis for generalization performance on deep learning with noisy labels which can also be studied in future. | |||

Critique

The paper evaluates performance with the complexity of computing and implementing the algorithms in mind. Co-teaching is an interesting idea, but it may be tricky to implement, and such complexity can negatively affect the performance of the algorithm.

Lack of Task Diversity

The datasets used in this experiment are all image classification tasks; these results may not generalize to other deep learning applications, such as classification of data with lower or higher dimensionality.

Adaptation of the co-teaching method to train under other weak supervision (e.g. positive and unlabeled data) could expand the applicability of the paradigm.

Lack of Theoretical Development (Mentioned in conclusion)

This paper lacks any theoretical guarantees for co-teaching. Proving that the results shown in this study are generalizable would bolster the findings significantly.

References

[1] B. Van Rooyen, A. Menon, and B. Williamson. Learning with symmetric label noise: The importance of being unhinged. In NIPS, 2015.

[2] S. Reed, H. Lee, D. Anguelov, C. Szegedy, D. Erhan, and A. Rabinovich. Training deep neural networks on noisy labels with bootstrapping. In ICLR, 2015.

[3] J. Goldberger and E. Ben-Reuven. Training deep neural-networks using a noise adaptation layer. In ICLR, 2017.

[4] G. Patrini, A. Rozza, A. Menon, R. Nock, and L. Qu. Making deep neural networks robust to label noise: A loss correction approach. In CVPR, 2017.

[5] E. Malach and S. Shalev-Shwartz. Decoupling "when to update" from "how to update". In NIPS, 2017.

[6] L. Jiang, Z. Zhou, T. Leung, L. Li, and L. Fei-Fei. Mentornet: Learning data-driven curriculum for very deep neural networks on corrupted labels. In ICML, 2018.

Latest revision as of 21:02, 11 December 2018

Introduction

Title of Paper

Co-teaching: Robust Training Deep Neural Networks with Extremely Noisy Labels

Contributions

The paper proposes a novel approach to training deep neural networks on data with noisy labels. The proposed approach, named ‘co-teaching’, maintains two networks simultaneously, trains them on selected clean instances, and avoids estimating the noise transition matrix. In addition, the deep networks are trained using stochastic optimization with momentum; because nonlinear deep networks can memorize clean data, the resulting models become robust. The experiments are conducted on noisy versions of the MNIST, CIFAR-10 and CIFAR-100 datasets. Empirical results demonstrate that, under extremely noisy circumstances (i.e., 45% of noisy labels), the robustness of deep learning models trained by the co-teaching approach is much superior to state-of-the-art baselines.

Terminology

Ground-Truth Labels: The proper objective labels (i.e. the real, or ‘true’, labels) of the data.

Noisy Labels: Labels that are corrupted (either manually or through the data collection process) from ground-truth labels. This can result in false positives.

Intuition

The Co-teaching architecture maintains two networks with different learning abilities simultaneously. The reason why Co-teaching is more robust can be explained as follows. Usually, when learning on a batch of noisy data, only the error from the network itself is transferred back to facilitate learning. In Co-teaching, by contrast, the two networks are able to filter different types of errors, which then flow back both to the network itself and to the other network. As a result, the two models learn together, each from itself and from its partner network.

Motivation

The paper draws motivation from two key facts:

• That many data collection processes yield noisy labels.

• That deep neural networks have a high capacity to overfit to noisy labels.

Because of these facts, it is challenging to train deep networks that are robust to noisy labels.

Related Works

1. Statistical learning methods: Some approaches use statistical learning methods for the problem of learning from extremely noisy labels. These approaches can be divided into three strands: surrogate loss, noise rate estimation, and probabilistic modelling. In the surrogate loss category, one work proposes an unbiased estimator that yields a noise-corrected loss. Another work presents a robust non-convex loss, which is a special case in a family of robust losses. In the noise rate estimation category, some authors propose a class-probability estimator using order statistics on the range of scores. Another work presents the same estimator using the slope of the ROC curve. In the probabilistic modelling category, a two-coin model is proposed to handle noisy labels from multiple annotators.

2. Deep learning methods: There are also deep learning approaches for handling data with noisy labels. One work proposed a unified framework to distill knowledge from clean labels and knowledge graphs. Another work trained a label cleaning network on a small set of clean labels and used it to reduce the noise in large-scale noisy labels. There is also a proposed joint optimization framework to learn parameters and estimate true labels simultaneously. Another work leverages an additional validation set to adaptively assign weights to training examples in every iteration. One particular paper adds a crowd layer after the output layer for noisy labels from multiple annotators.

3. Learning to teach methods: These methods are another approach to the problem. They consist of a teacher network and a student network. The teacher network selects more informative instances for better training of the student network. Most works did not account for noisy labels, with the exception of MentorNet, which applied the idea to data with noisy labels.

Co-Teaching Algorithm

The idea, as shown in the algorithm, is to train two deep networks simultaneously. In each mini-batch of mini-batch gradient descent, each network selects its small-loss instances as useful knowledge and then teaches these instances to the peer network, which uses them to update its parameters. [math]\displaystyle{ R(T) }[/math] governs the percentage of small-loss instances to be used in updating the parameters of each network.
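The selection-and-exchange step described above can be sketched in a few lines. This is only an illustrative sketch, not the authors' implementation: the function names `small_loss_indices` and `co_teaching_exchange` are hypothetical, the per-instance losses are assumed to have been computed already by the two networks, and the actual gradient update is left out.

```python
def small_loss_indices(losses, keep_rate):
    """Return indices of the keep_rate fraction of instances with the smallest loss."""
    num_keep = int(keep_rate * len(losses))
    order = sorted(range(len(losses)), key=lambda i: losses[i])
    return order[:num_keep]


def co_teaching_exchange(losses_f, losses_g, keep_rate):
    """Return (indices used to update network f, indices used to update network g).

    losses_f and losses_g are the per-instance losses of the two networks on
    the same mini-batch. Each network picks its own small-loss instances, and
    the selections are swapped so that each network is updated on the
    instances chosen by its peer.
    """
    picked_by_f = small_loss_indices(losses_f, keep_rate)
    picked_by_g = small_loss_indices(losses_g, keep_rate)
    return picked_by_g, picked_by_f


# Example: a mini-batch of four instances with half of them kept.
update_f, update_g = co_teaching_exchange(
    [0.1, 2.0, 0.3, 1.5], [1.0, 0.2, 0.1, 3.0], keep_rate=0.5
)
```

In a real training loop, each network would then take a gradient step on the loss restricted to the indices it received from its peer.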

Summary of Experiment

Proposed Method

The proposed co-teaching method trains two networks simultaneously, and samples instances with small loss at each mini batch as useful knowledge. The sample of small-loss instances is then taught to the peer network for updating the parameters.

The co-teaching method relies on research that suggests deep networks learn clean and easy patterns in initial epochs, but are susceptible to overfitting noisy labels as the number of epochs grows. To counteract this, the co-teaching method reduces the effective mini-batch size by gradually increasing a drop rate (i.e., noisy instances with higher loss will be dropped at an increasing rate). The mini-batches are swapped between peer networks due to the underlying intuition that different classifiers will generate different decision boundaries. Swapping the mini-batches constitutes a sort of ‘peer-reviewing’ that promotes noise reduction. To summarize, because the error from one network is not directly transferred back to itself, the authors expect the Co-teaching method to be able to deal with heavier noise than a self-evolving approach.

Dataset Corruption

The datasets used in this paper are MNIST, CIFAR-10 and CIFAR-100. A summary of these datasets is shown below.

To simulate learning with noisy labels, the datasets (which are clean by default) are manually corrupted by applying a noise transformation matrix [math]\displaystyle{ Q }[/math], where [math]\displaystyle{ Q_{ij} = Pr(\widetilde{y} = j|y = i) }[/math] given that noisy [math]\displaystyle{ \widetilde{y} }[/math] is flipped from clean [math]\displaystyle{ y }[/math]. Two methods are used for generating such noise transformation matrices: pair flipping and symmetry.
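As a rough illustration of how such matrices could be built and applied, the sketch below constructs Q for both schemes and samples noisy labels row by row. The exact matrix layouts are an assumption on my part, since the figure that defines them is not reproduced here: pair flipping is taken to move a fraction of each class to the next class (wrapping around), and symmetry is taken to spread the noise evenly over all other classes. All function names are hypothetical.

```python
import random


def pair_flip_matrix(num_classes, noise_rate):
    """Q[i][i] = 1 - noise_rate and Q[i][i+1] = noise_rate (assumed layout)."""
    q = [[0.0] * num_classes for _ in range(num_classes)]
    for i in range(num_classes):
        q[i][i] = 1.0 - noise_rate
        q[i][(i + 1) % num_classes] = noise_rate
    return q


def symmetric_matrix(num_classes, noise_rate):
    """Q[i][i] = 1 - noise_rate; the rest is shared equally (assumed layout)."""
    off_diagonal = noise_rate / (num_classes - 1)
    return [
        [1.0 - noise_rate if i == j else off_diagonal for j in range(num_classes)]
        for i in range(num_classes)
    ]


def corrupt_labels(labels, q, seed=0):
    """Sample a noisy label for each clean label y from row Q[y]."""
    rng = random.Random(seed)
    classes = list(range(len(q)))
    return [rng.choices(classes, weights=q[y])[0] for y in labels]


# Example: 45% pair flipping on a toy 3-class label list.
noisy = corrupt_labels([0, 1, 2, 0, 1, 2], pair_flip_matrix(3, 0.45))
```

Each row of Q is a probability distribution over noisy labels, which is why `corrupt_labels` can sample directly from the row indexed by the clean label.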

Three noise conditions are simulated for comparing co-teaching with baseline methods.

Note: Corrupting a dataset here means randomly replacing the target label of an instance with a wrong label by applying noise.

| Method | Noise Rate | Rationale |

| Pair Flipping | 45% | Almost half of the instances have noisy labels. Simulates erroneous labels which are similar to true labels. |

| Symmetry | 50% | Half of the instances have noisy labels. Labels have a constant probability of being corrupted. Further rationale can be found at [1]. |

| Symmetry | 20% | Verify the robustness of co-teaching in a low-level noise scenario. |

Baseline Comparisons

The co-teaching method is compared with several baseline approaches, which have varying:

• proficiency in dealing with a large number of classes,

• ability to resist heavy noise,

• need to combine with specific network architectures, and

• need to be pretrained.

Bootstrap

The general idea behind bootstrapping is to dynamically change (correct) noisy labels during training. The training target is a convex combination of the original (possibly noisy) label and the class predicted by the model. The weighting of the prediction is increased over time to account for the model itself improving. This procedure needs to be finely tuned to prevent it from rampantly changing correct labels before the model becomes accurate [2].
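The convex combination mentioned above can be written down directly. The sketch below is a minimal illustration under my own assumptions, not the formulation from [2]: the function name `bootstrap_target` and the parameter `label_weight` are hypothetical, and labels are assumed to be one-hot vectors combined with predicted class probabilities.

```python
def bootstrap_target(noisy_one_hot, predicted_probs, label_weight):
    """Blend the given label with the model's prediction.

    label_weight is the weight on the original label; 1 - label_weight is the
    weight on the prediction. Lowering label_weight over time corresponds to
    trusting the model more as it improves.
    """
    if not 0.0 <= label_weight <= 1.0:
        raise ValueError("label_weight must lie in [0, 1]")
    return [
        label_weight * y + (1.0 - label_weight) * p
        for y, p in zip(noisy_one_hot, predicted_probs)
    ]


# Example: the label says class 0, the model leans towards class 1.
target = bootstrap_target([1.0, 0.0], [0.3, 0.7], label_weight=0.8)
```

Because both inputs sum to one, the blended target is again a valid probability distribution.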

S-Model

Uses an additional softmax layer to model the noise transition matrix [3].

F-Correction

Corrects the prediction by using a noise transition matrix that is estimated by a standard network [4].

Decoupling

Two separate classifiers are used in this technique. Parameters are updated using only the samples that are classified differently between the two models [5].
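The selection rule for Decoupling can be illustrated as follows. This is a sketch of the rule as summarized above, not code from [5]; the function name `disagreement_indices` is hypothetical and the predictions are assumed to be class indices from the two classifiers on the same mini-batch.

```python
def disagreement_indices(preds_a, preds_b):
    """Return indices of instances on which the two classifiers disagree."""
    return [i for i, (a, b) in enumerate(zip(preds_a, preds_b)) if a != b]


# Example: the classifiers disagree on the second and fourth instance,
# so only those two would be used for the parameter update.
update_on = disagreement_indices([0, 1, 2, 1], [0, 2, 2, 0])
```

Note the contrast with co-teaching, which selects by small loss and exchanges the selection between the networks rather than selecting by disagreement.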

MentorNet

A mentor network weights the probability of data instances being clean/noisy in order to train the student network on cleaner instances [6].

As shown in the above table, the Co-teaching method has several advantages: it does not rely on any specific network architecture, it can deal with a large number of classes, and it is more robust to noise. In addition, it can be trained from scratch. This makes co-teaching more appealing for practical usage.

Implementation Details

Two CNN models using the same architecture (shown below) are used as the peer networks for the co-teaching method. They are initialized with different parameters in order to be significantly different from one another (different initial parameters can lead to different local minima). An Adam optimizer (momentum=0.9), a learning rate of 0.001, a batch size of 128, and 200 epochs are used for each dataset. The networks also utilize dropout and batch normalization.

Results and Discussion

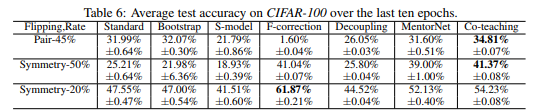

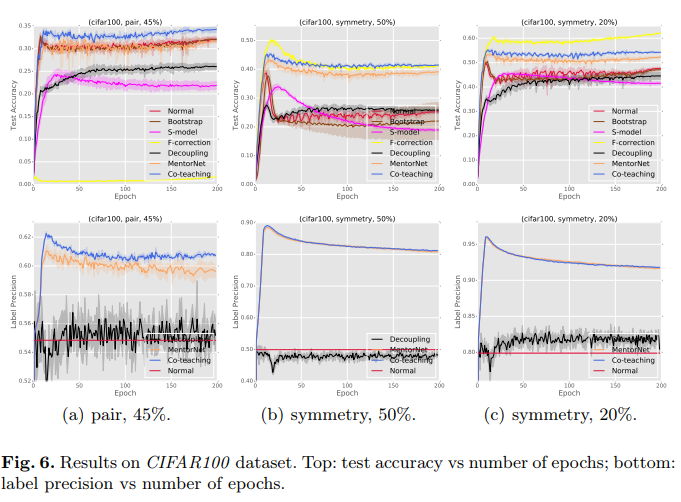

The co-teaching algorithm is compared to the baseline approaches under the noise conditions previously described. The results are as follows.

MNIST

The results of testing on the MNIST dataset are shown below. The Symmetry-20% case can be taken as a near-baseline; all methods perform well. However, under the Symmetry-50% case, all methods except MentorNet and Co-Teaching drop below 90% accuracy. Under the Pair-45% case, all methods except MentorNet and Co-Teaching drop below 60%. Under both high-noise conditions, the Co-Teaching method produces the highest accuracy. Similar patterns can be seen in the two additional sets of test results, though the specific accuracy values are different. Co-Teaching performs best under the high-noise situations.

The images labelled 'Figure 3' show test accuracy with respect to epoch of the various algorithms. Many algorithms show evidence of over-fitting or being influenced by noisy data, after reaching initial high accuracy. MentorNet and Co-Teaching experience this less than other methods, and Co-Teaching generally achieves higher accuracy than MentorNet.

Robustness to noise plays an important role in the evaluation, and the plots show that the proposed method is better than or comparable to the other methods in this respect.

CIFAR10

The observations here are consistent with those for the MNIST dataset.

CIFAR100

Choice of R(T) and [math]\displaystyle{ \tau }[/math]

The authors followed some principles when choosing R(T) and [math]\displaystyle{ \tau }[/math]. At the beginning, R(T)=1, meaning that no instances need to be dropped. Parameters can safely be updated in the early stage using the whole noisy dataset, since deep neural networks do not memorize the noisy data at first. However, more instances need to be dropped at the later stage, because the model would eventually try to fit the noisy data.

[math]\displaystyle{ R(T) = 1 - \tau \cdot \min\{T^{c}/T_{k}, 1\} }[/math] with [math]\displaystyle{ \tau=\epsilon }[/math], where [math]\displaystyle{ \epsilon }[/math] is the noise level. In this case, the authors consider [math]\displaystyle{ c \in \{0.5, 1, 2\} }[/math]. From Table 7, the test accuracy is stable across these choices.
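The schedule above translates directly into code. The following is a minimal sketch of the formula as written in this summary; the function name `keep_rate` and the argument names are my own, with `t_k` standing for the constant T_k and `epoch` for T.

```python
def keep_rate(epoch, tau, t_k, c=1.0):
    """R(T) = 1 - tau * min(T^c / T_k, 1).

    Starts at 1 (keep every instance) and decreases until it reaches
    1 - tau, after which it stays constant.
    """
    return 1.0 - tau * min(epoch ** c / t_k, 1.0)


# Example: tau = 0.5, T_k = 10, c = 1.
schedule = [keep_rate(t, tau=0.5, t_k=10) for t in (0, 5, 10, 20)]
```

With these example values the kept fraction falls linearly from 1 to 0.5 over the first ten epochs and then stays there, which matches the principle of dropping nothing at first and more instances later.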

For [math]\displaystyle{ \tau }[/math], the authors consider [math]\displaystyle{ \tau\in\{0.5,0.75,1,1.25,1.5\}\epsilon }[/math]. Table 8 shows that performance can be improved by dropping more instances.
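The schedule R(T) described above can be sketched in a few lines of Python. This is only an illustrative sketch and not the authors' implementation: the function name `keep_rate` and the argument names `epoch`, `tau`, `t_k` and `c` are assumptions chosen to mirror the symbols T, [math]\displaystyle{ \tau }[/math], [math]\displaystyle{ T_{k} }[/math] and c.

```python
def keep_rate(epoch, tau, t_k, c=1.0):
    """Fraction of instances kept at a given epoch.

    Sketch of R(T) = 1 - tau * min(T**c / T_k, 1).
    All names are hypothetical; they only mirror the symbols in the text.
    """
    if t_k <= 0:
        raise ValueError("t_k must be positive")
    # Early on, T**c / T_k is small, so almost every instance is kept.
    # Once T**c >= T_k, the rate stays fixed at 1 - tau.
    return 1.0 - tau * min(epoch ** c / t_k, 1.0)


if __name__ == "__main__":
    noise_level = 0.45  # epsilon, assumed known; here tau = epsilon
    for epoch in (0, 5, 10, 20):
        rate = keep_rate(epoch, tau=noise_level, t_k=10)
        print(f"epoch {epoch}: keep fraction {rate:.3f}")
```

Setting `tau` to a multiple of the noise level, or changing `c`, corresponds to the sweeps reported in Tables 7 and 8.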

Conclusions

The main goal of the paper is to introduce the “Co-teaching” learning paradigm, which trains two deep neural networks simultaneously to cope with noisy labels. Experiments are performed on MNIST, CIFAR-10, and CIFAR-100. Performance varies with the noise level across scenarios. In the simulated ‘extreme noise’ scenarios (pair-45% and symmetry-50%), the co-teaching method outperforms the baseline methods in terms of accuracy, which suggests that it is superior to the baselines under extreme noise. The co-teaching method also performs competitively in the low-noise scenario (symmetry-20%).

Future Work

For future work, the paper can be extended in the following ways. First, the Co-teaching paradigm can be adapted to train deep models under other forms of weak supervision, e.g. positive and unlabeled data. Second, theoretical guarantees for Co-teaching can be investigated. The current approach also seems to have potential application in eliminating noisy labels/data from biomedical signals, for example EEG data. This is important because EEG data are generally collected according to an experimental protocol under controlled lab conditions. Signals collected in this way are labelled according to the protocol, so they can be labelled incorrectly when the underlying brain process does not correspond to the assigned label. Such mislabelled data need to be eliminated from the training process, and this is one scenario where co-teaching could possibly be applied. The method also seems to have potential application to data collected via crowd-sourcing, or to data labelled by multiple human subjects. Finally, there is no analysis of generalization performance for deep learning with noisy labels, which could also be studied in the future.

Critique

The paper evaluates performance while taking into account the computational and implementation complexity of the algorithms. Co-teaching is an interesting idea, but it could be tricky to implement, and such complexity could negatively affect the performance of the algorithm.

Lack of Task Diversity

The datasets used in this experiment are all image classification tasks – these results may not generalize to other deep learning applications, such as classifications from data with lower or higher dimensionality.

Needs to be expanded to other weak supervisions (Mentioned in conclusion)

Adaptation of the co-teaching method to train under other weak supervision (e.g. positive and unlabeled data) could expand the applicability of the paradigm.

Lack of Theoretical Development (Mentioned in conclusion)

This paper lacks any theoretical guarantees for co-teaching. Proving that the results shown in this study are generalizable would bolster the findings significantly.

References

[1] B. Van Rooyen, A. Menon, and B. Williamson. Learning with symmetric label noise: The importance of being unhinged. In NIPS, 2015.

[2] S. Reed, H. Lee, D. Anguelov, C. Szegedy, D. Erhan, and A. Rabinovich. Training deep neural networks on noisy labels with bootstrapping. In ICLR, 2015.

[3] J. Goldberger and E. Ben-Reuven. Training deep neural-networks using a noise adaptation layer. In ICLR, 2017.

[4] G. Patrini, A. Rozza, A. Menon, R. Nock, and L. Qu. Making deep neural networks robust to label noise: A loss correction approach. In CVPR, 2017.

[5] E. Malach and S. Shalev-Shwartz. Decoupling "when to update" from "how to update". In NIPS, 2017.

[6] L. Jiang, Z. Zhou, T. Leung, L. Li, and L. Fei-Fei. MentorNet: Learning data-driven curriculum for very deep neural networks on corrupted labels. In ICML, 2018.