Convolutional Neural Networks for Sentence Classification

No edit summary |

No edit summary |

||

| (9 intermediate revisions by the same user not shown) | |||

| Line 1: | Line 1: | ||

This is a summary | This is a summary of the paper, Convolutional Neural Networks for Sentence Classification by Yoon Kim <sup>[[#References|[1]]]</sup>. | ||

= Presented by = | = Presented by = | ||

*M.Bayati | *M.Bayati | ||

*S. | *S.Malekmohammadi | ||

*V.Luong | *V.Luong | ||

= Introduction = | = Introduction = | ||

In this paper, sentence classification using convolutioanl neural networks is studied. Each sentence is encoded by concatenation of the word vectors of its words and the encoded representation is fed to a model consisting of a convolutional layer followed by a dense layer for doing the classification task. Different variants of this model have been introduced by the authors, two of which try to learn task-specific word vectors for words. It is observed that learning task-specific vectors (instead of using the pre-trained vectors without any change) offers further gains in performance. | |||

= The Used | = The Used Models = | ||

Using neural models to learn vector representation for words is one of the most important contributions within natural language processing. The vector representations are obtained from projecting 1-hot representation of words (a sparse representation) onto a lower dimensional space. Continuous Bag of Words (COBW) neural language model, trained by Mikolov et al. (2013), is one of the unsupervised algorithms providing such a low dimensional vector representation in which semantic features of words are encoded. Having the low-dimensional vector representations, one can feed them to different models for doing different tasks. For instance, they can be fed to CNNs for document or sentence classification. The vector representations used in this paper are obtained from the CBOW. | Using neural models to learn vector representation for words is one of the most important contributions within natural language processing. The vector representations are obtained from projecting 1-hot representation of words (a sparse representation) onto a lower dimensional space. Continuous Bag of Words (COBW) neural language model, trained by Mikolov et al. (2013), is one of the unsupervised algorithms providing such a low dimensional vector representation in which semantic features of words are encoded. Having the low-dimensional vector representations, one can feed them to different models for doing different tasks. For instance, they can be fed to CNNs for document or sentence classification. The vector representations used in this paper are obtained from the CBOW. | ||

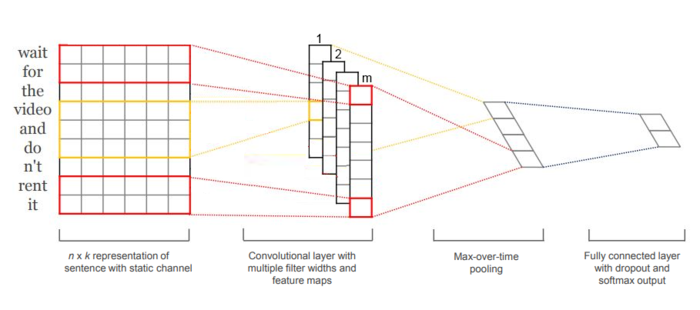

The model that the authors use constitutes of a convolutional layer followed by a dense layer. In the convolutional layer, there are | The model that the authors use constitutes of a convolutional layer followed by a dense layer. In the convolutional layer, there are m different filters (kernels) each resulting in one feature map. The resulting m feature maps form the penultimate layer which is passed to a fully connected softmax layer, as shown in the following: | ||

[[File:one.PNG|700px|thumb|center]] | |||

The authors introduce several variants of the model that are briefly explained in the following: \newline | |||

They first consider a baseline model, which is called CNN-rand. In this variant, they do not use any pretrained vector representation for words. All words are assigned a random vector representation and the assigned vectors are fed to the model. The random vectors get modified during training. | |||

In the second variant of the model, which is called CNN-static, they use the pretrained word2vec vectors. They keep the pretrained vectors static; i.e., during training, the vectors do not change and only the other parameters of the model (edge weights and kernels) get learned. They observed that this simple model achieves excellent results on multiple benchmarks. Note that the used word vectors are pre-trained (regardless of the given classification task and its data set) and the model achieves excellent results when using them, while when feeding another set of publicly available word vectors (trained by Collobert et al. (2011) on Wikipedia) to the same model, the performance of the model is not as good as when word2vec word vectors are used. Based on this observation, the authors stated that the pre-trained word2vec vectors are good encoded representation for words and they can be utilized for different classification tasks. | |||

The third variant that the authors consider is called CNN-non-static. Here is the first time that the authors try to learn task-specific word vectors for words. In the model, they use the pre-trained word2vec vectors to initialize the task-specific vectors, but after getting initialized, the vectors get fine-tuned during training via backpropagation. | |||

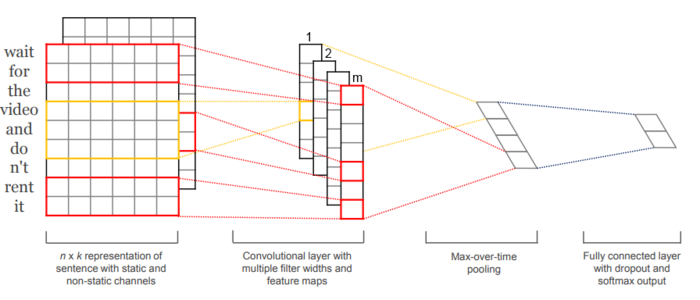

In the | In the fourth variant introduced for the model (CNN-multichannel), the authors add a simple modification to the structure of the model to make it capable of using both the pre-trained and the task-specific vectors. What they have done, is adding another channel of inputs to the model structure as shown in the following: | ||

[[File:two.PNG|700px|thumb|center]] | |||

In this model, as shown, there are two sets (channels) of word vectors. The first one is the pre-trained word2vec vectors that are static and do not change during training. The other channel is initialized with the pretrained word2vec vectors, but it gets fine-tuned during training via backpropagation. | |||

= Results = | |||

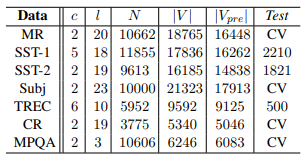

The authors have experimented the model on various benchmarks. The following figure shows a summary of the used data sets: | |||

[[File:data.PNG|700px|thumb|center]] | |||

The columns after the first data column show number of target classes, average sentence length, data set size, vocabulary size, number of words present in the set of pre-trained word2vec vectors and test size respectively. | |||

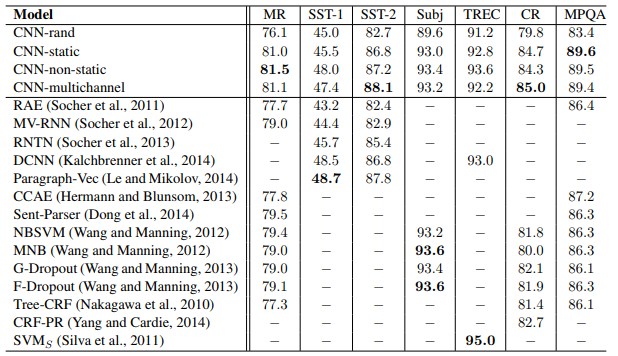

The results obtained from the models introduced above and other methods in the literature are shown in the following figure: | |||

[[File:results.PNG|700px|thumb|center]] | |||

The obtained results show that the baseline model with randomly initialized word vectors does not perform well on its own. Althoug it is surprising that the accuracy rate obtained from the baseline model is not that far from the CNN-static which uses the pre-trained word vectors. Also, we can observe the gain obtained from using CNN: even a simple model using CNN with static word2vec vectors performs remarkably well such that its results are competitive against those of more sophisticated deep learning models. Also, CNN-nonstatic which tunes the word vectors for each task gives more improvement. Despite of our expectation to have a better performance for the CNN-multichannel (compared to the CNN-non-static and CNN-static) the obtained results are mixed. The authors have claimed that by regularizing the fine tuning process of task specific vectors the performance of CNN-multichannel can get improved. | |||

= Conclusion = | =Conclusion= | ||

In both the CNN-non-static and the CNN-multichannel, they observed that the models are able to fine-tune the word vectors to make them more specific for each given task. For example, they observed that in word2vec, the most similar word to “good” is “great”, while “nice” seems closer to that as long as the goal is expressing sentiment. This can be observed in the learned vectors reflected by CNN-non-static and CNN-multichannel: for the word vectors in CNN-non-static and those in the second channel of CNN-multichannel, the most similar word to “good” is “nice”. So fine-tuning allows the model to learn more meaningful representation for words depending on the task in hand. This can be counted as the most important contribution of the paper, which adds improvement to the performance of the model compared to when it uses the pre-trained word2vec vectors in a static way and regardless of the given task. | In both the CNN-non-static and the CNN-multichannel, they observed that the models are able to fine-tune the word vectors to make them more specific for each given task. For example, they observed that in word2vec, the most similar word to “good” is “great”, while “nice” seems closer to that as long as the goal is expressing sentiment. This can be observed in the learned vectors reflected by CNN-non-static and CNN-multichannel: for the word vectors in CNN-non-static and those in the second channel of CNN-multichannel, the most similar word to “good” is “nice”. So fine-tuning allows the model to learn more meaningful representation for words depending on the task in hand. This can be counted as the most important contribution of the paper, which adds improvement to the performance of the model compared to when it uses the pre-trained word2vec vectors in a static way and regardless of the given task. | ||

=References= | |||

* <sup>[1]</sup>Kim, Y. (2014). Convolutional Neural Networks for Sentence Classification. Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP). doi:10.3115/v1/d14-1181. | * <sup>[1]</sup>Kim, Y. (2014). Convolutional Neural Networks for Sentence Classification. Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP). doi:10.3115/v1/d14-1181. | ||

Latest revision as of 22:49, 13 November 2018

This is a summary of the paper, Convolutional Neural Networks for Sentence Classification by Yoon Kim [1].

Presented by

- M.Bayati

- S.Malekmohammadi

- V.Luong

Introduction

This paper studies sentence classification using convolutional neural networks (CNNs). Each sentence is encoded by concatenating the word vectors of its words, and the encoded representation is fed to a model consisting of a convolutional layer followed by a dense layer that performs the classification. The authors introduce several variants of this model, two of which learn task-specific word vectors. Learning task-specific vectors, rather than using the pre-trained vectors unchanged, offers further gains in performance.

The Used Models

Learning vector representations of words with neural models is one of the most important contributions to natural language processing. The representations are obtained by projecting the one-hot representation of each word (a sparse representation) onto a lower-dimensional space. The Continuous Bag of Words (CBOW) neural language model of Mikolov et al. (2013) is one of the unsupervised algorithms that provide such low-dimensional vectors, in which semantic features of words are encoded. These vectors can then be fed to different models for different tasks; for instance, they can be fed to CNNs for document or sentence classification. The word vectors used in this paper are obtained from CBOW.
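The projection of a one-hot vector onto a lower-dimensional space can be illustrated with a small sketch. The vocabulary, the embedding values, and the helper names `one_hot` and `project` are made up for illustration and are not taken from the paper; the point is only that multiplying a one-hot vector by an embedding matrix selects one row of that matrix.

```python
def one_hot(index, vocab_size):
    """Sparse representation: all zeros except a single 1 at the word's index."""
    vec = [0.0] * vocab_size
    vec[index] = 1.0
    return vec


def project(one_hot_vec, embedding):
    """Multiply a 1 x |V| one-hot vector by a |V| x k embedding matrix."""
    k = len(embedding[0])
    return [
        sum(one_hot_vec[i] * embedding[i][j] for i in range(len(embedding)))
        for j in range(k)
    ]


# Hypothetical 4-word vocabulary with 3-dimensional word vectors.
vocab = {"the": 0, "movie": 1, "was": 2, "good": 3}
embedding = [
    [0.1, 0.2, 0.3],
    [0.4, 0.5, 0.6],
    [0.7, 0.8, 0.9],
    [1.0, 1.1, 1.2],
]

vec = project(one_hot(vocab["good"], len(vocab)), embedding)
# The projection is the same as looking up row 3 of the embedding matrix.
print(vec == embedding[vocab["good"]])
```

In practice the multiplication is never carried out; the row is looked up directly, which is why word vectors are usually stored as a table indexed by word.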

The model that the authors use consists of a convolutional layer followed by a dense layer. The convolutional layer has m different filters (kernels), each producing one feature map. Max-over-time pooling reduces each feature map to a single value, and the resulting m features form the penultimate layer, which is passed to a fully connected softmax layer, as shown in the following figure:

[[File:one.PNG|700px|thumb|center]]
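Separately from the figure, the forward pass of such a model can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the tanh non-linearity, the tiny dimensions, all numeric values, and the function names are chosen only for the example. Each filter spans h consecutive words, produces one feature map, and max-over-time pooling (as in Kim's paper [1]) keeps the largest value of each map before the softmax layer.

```python
import math


def conv_feature_map(sentence, filt, bias):
    """Slide one h x k filter over an n x k sentence matrix (one row per word)."""
    h = len(filt)
    feature_map = []
    for i in range(len(sentence) - h + 1):
        window = sentence[i:i + h]
        total = bias + sum(
            w * x
            for filt_row, word_vec in zip(filt, window)
            for w, x in zip(filt_row, word_vec)
        )
        feature_map.append(math.tanh(total))  # assumed non-linearity
    return feature_map


def softmax(scores):
    """Numerically stable softmax over a list of class scores."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def forward(sentence, filters, biases, dense_w, dense_b):
    """m filters -> m feature maps -> m pooled features -> class probabilities."""
    pooled = [max(conv_feature_map(sentence, f, b)) for f, b in zip(filters, biases)]
    scores = [
        sum(w * p for w, p in zip(row, pooled)) + b
        for row, b in zip(dense_w, dense_b)
    ]
    return softmax(scores)


# Hypothetical sentence of n = 4 words with k = 2 dimensional word vectors.
sentence = [[0.1, 0.2], [0.3, -0.1], [0.0, 0.5], [-0.2, 0.4]]
filters = [
    [[0.5, -0.5], [0.2, 0.1]],              # window of h = 2 words
    [[0.1, 0.1], [0.1, 0.1], [0.1, 0.1]],   # window of h = 3 words
]
biases = [0.0, 0.1]
dense_w = [[1.0, -1.0], [-0.5, 0.5]]        # 2 classes x m = 2 pooled features
dense_b = [0.0, 0.0]

probs = forward(sentence, filters, biases, dense_w, dense_b)
print(probs)
```

A filter of height h over a sentence of n words yields a feature map of length n - h + 1, so pooling is what makes the penultimate layer independent of sentence length.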

The authors introduce several variants of the model, briefly explained below.

The first is a baseline model called CNN-rand. This variant does not use any pre-trained word vectors: every word is assigned a randomly initialized vector, and these vectors are modified during training.

The second variant, CNN-static, uses the pre-trained word2vec vectors and keeps them static: during training the vectors do not change, and only the other parameters of the model (the kernels and the dense-layer weights) are learned. The authors observed that this simple model achieves excellent results on multiple benchmarks, even though the word vectors are pre-trained independently of the given classification task and its data set. When another set of publicly available word vectors (trained by Collobert et al. (2011) on Wikipedia) is fed to the same model, the performance is not as good as with the word2vec vectors. Based on this observation, the authors state that the pre-trained word2vec vectors are a good encoded representation of words and can be used across different classification tasks.

The third variant is called CNN-non-static. This is the first variant in which the authors learn task-specific word vectors. The vectors are initialized with the pre-trained word2vec vectors and are then fine-tuned during training via backpropagation.

The fourth variant, CNN-multichannel, adds a simple modification to the structure of the model so that it can use both the pre-trained and the task-specific vectors. The authors add a second channel of inputs to the model, as shown in the following figure:

[[File:two.PNG|700px|thumb|center]]

In this model, as shown, there are two sets (channels) of word vectors. The first channel holds the pre-trained word2vec vectors, which are static and do not change during training. The second channel is also initialized with the pre-trained word2vec vectors, but it is fine-tuned during training via backpropagation.
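The four variants differ only in how the word-vector tables are initialized and whether they are updated. The toy sketch below makes that distinction explicit. The helper names `init_channels` and `sgd_step`, the uniform initialization range, the learning rate, and the gradient values are all assumptions made for illustration; in a real model each channel would receive its own gradient from backpropagation.

```python
import copy
import random


def init_channels(variant, pretrained, seed=0):
    """Return a list of (word_vector_table, trainable) pairs for a variant."""
    if variant == "rand":
        rng = random.Random(seed)
        dim = len(next(iter(pretrained.values())))
        # Assumed range for the random initialization.
        table = {w: [rng.uniform(-0.25, 0.25) for _ in range(dim)] for w in pretrained}
        return [(table, True)]
    if variant == "static":
        return [(copy.deepcopy(pretrained), False)]
    if variant == "non-static":
        return [(copy.deepcopy(pretrained), True)]
    if variant == "multichannel":
        # One frozen copy and one fine-tuned copy of the same vectors.
        return [(copy.deepcopy(pretrained), False), (copy.deepcopy(pretrained), True)]
    raise ValueError(f"unknown variant: {variant}")


def sgd_step(channels, word, grad, lr=0.1):
    """Apply one gradient step to the word's vector in trainable channels only."""
    for table, trainable in channels:
        if trainable:
            table[word] = [v - lr * g for v, g in zip(table[word], grad)]


# Hypothetical 2-dimensional "pre-trained" vector for a single word.
pretrained = {"good": [1.0, 0.0]}

channels = init_channels("multichannel", pretrained)
sgd_step(channels, "good", grad=[1.0, 1.0])

static_table, _ = channels[0]
tuned_table, _ = channels[1]
print(static_table["good"], tuned_table["good"])
```

After the step, the static channel still holds the pre-trained vector while the second channel has moved, which is the behaviour the multichannel description above relies on.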

Results

The authors evaluated the model on various benchmarks. The following figure summarizes the data sets used:

[[File:data.PNG|700px|thumb|center]]

The columns after the data set name show, respectively, the number of target classes, the average sentence length, the data set size, the vocabulary size, the number of words present in the set of pre-trained word2vec vectors, and the test set size.
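As a rough illustration of what these columns measure, the statistics can be computed from a tokenized corpus as follows. The function name `dataset_stats`, the dictionary keys, and the three-sentence corpus are hypothetical; the sketch also assumes that the data set size counts every example passed in and that the test size is supplied separately.

```python
def dataset_stats(examples, pretrained_vocab, test_size):
    """Summarize a corpus given as a list of (tokens, label) pairs."""
    labels = {label for _, label in examples}
    vocab = {tok for tokens, _ in examples for tok in tokens}
    total_tokens = sum(len(tokens) for tokens, _ in examples)
    return {
        "classes": len(labels),                       # number of target classes
        "avg_len": total_tokens / len(examples),      # average sentence length
        "size": len(examples),                        # data set size
        "vocab": len(vocab),                          # vocabulary size
        "vocab_pre": len(vocab & set(pretrained_vocab)),  # words with pre-trained vectors
        "test": test_size,                            # test set size
    }


# Hypothetical toy corpus; real benchmarks contain thousands of sentences.
examples = [
    (["a", "good", "movie"], "pos"),
    (["a", "dull", "plot"], "neg"),
    (["nice", "acting"], "pos"),
]
stats = dataset_stats(examples, {"a", "good", "movie", "nice"}, test_size=1)
print(stats)
```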

The results obtained from the models introduced above and from other methods in the literature are shown in the following figure:

[[File:results.PNG|700px|thumb|center]]

The results show that the baseline model with randomly initialized word vectors (CNN-rand) does not perform well on its own, although, surprisingly, its accuracy is not far from that of CNN-static, which uses the pre-trained word vectors. The results also show the benefit of the CNN: even a simple CNN with static word2vec vectors performs remarkably well, with results competitive with those of more sophisticated deep learning models. CNN-non-static, which tunes the word vectors for each task, gives a further improvement. Contrary to the expectation that CNN-multichannel would outperform both CNN-static and CNN-non-static, its results are mixed. The authors suggest that regularizing the fine-tuning of the task-specific vectors could improve the performance of CNN-multichannel.

Conclusion

In both CNN-non-static and CNN-multichannel, the authors observed that the model is able to fine-tune the word vectors to make them more specific to the given task. For example, in word2vec the most similar word to “good” is “great”, whereas “nice” is arguably closer when the goal is to express sentiment. The learned vectors reflect this: for the word vectors in CNN-non-static and those in the second channel of CNN-multichannel, the most similar word to “good” is “nice”. Fine-tuning therefore allows the model to learn more meaningful word representations for the task at hand. This can be counted as the most important contribution of the paper: it improves the performance of the model compared with using the pre-trained word2vec vectors statically, regardless of the given task.
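The notion of "most similar word" is commonly measured with cosine similarity between word vectors; the sketch below assumes that measure. The two-dimensional vectors are invented purely to reproduce the qualitative effect described above (the nearest neighbour of "good" changing from "great" to "nice" after fine-tuning) and are not values from the paper.

```python
import math


def cosine(u, v):
    """Cosine similarity between two vectors of equal length."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def most_similar(word, table):
    """Return the other word in the table whose vector is closest to `word`."""
    others = [w for w in table if w != word]
    return max(others, key=lambda w: cosine(table[word], table[w]))


# Made-up vectors standing in for the static (pre-trained) channel.
static_vectors = {"good": [1.0, 0.0], "great": [0.9, 0.1], "nice": [0.7, 0.7]}

# Made-up vectors standing in for the fine-tuned channel: only "good" has moved.
tuned_vectors = {"good": [0.8, 0.6], "great": [0.9, 0.1], "nice": [0.7, 0.7]}

before = most_similar("good", static_vectors)
after = most_similar("good", tuned_vectors)
print(before, after)  # prints "great nice" for these toy vectors
```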

References

- [1] Kim, Y. (2014). Convolutional Neural Networks for Sentence Classification. Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP). doi:10.3115/v1/d14-1181.