Deep Convolutional Neural Networks For LVCSR

Latest revision as of 09:46, 30 August 2017

Introduction

Deep Neural Networks (DNNs) have been explored in the area of speech recognition, where they outperformed state-of-the-art Gaussian Mixture Model-Hidden Markov Model (GMM-HMM) systems on both small and large speech recognition tasks <ref name=firstDBN>A. Mohamed, G. Dahl, and G. Hinton, “Deep belief networks for phone recognition,” in Proc. NIPS Workshop Deep Learning for Speech Recognition and Related Applications, 2009.</ref> <ref name=tuning_fb_DBN>A. Mohamed, G. Dahl, and G. Hinton, “Acoustic modeling using deep belief networks,” IEEE Trans. Audio Speech Lang. Processing, vol. 20, no. 1, pp. 14–22, Jan. 2012.</ref> <ref name=finetuningDNN>A. Mohamed, D. Yu, and L. Deng, “Investigation of full-sequence training of deep belief networks for speech recognition,” in Proc. Interspeech, 2010, pp. 2846–2849.</ref> <ref name=bing>G. Dahl, D. Yu, L. Deng, and A. Acero, “Context-dependent pretrained deep neural networks for large-vocabulary speech recognition,” IEEE Trans. Audio Speech Lang. Processing, vol. 20, no. 1, pp. 30–42, Jan. 2012.</ref> <ref name=scrf>N. Jaitly, P. Nguyen, A. Senior, and V. Vanhoucke, “An application of pretrained deep neural networks to large vocabulary speech recognition,” submitted for publication.</ref>. Convolutional Neural Networks (CNNs) can model temporal and spatial variations while reducing translational variance. CNNs are attractive for speech recognition for two reasons. First, they are translation invariant, which makes them an alternative to various speaker adaptation techniques. Second, spectral representations of speech have strong local correlations, which CNNs capture naturally.

CNNs have been explored in speech recognition before <ref name=convDNN> O. Abdel-Hamid, A. Mohamed, H. Jiang, and G. Penn, “Applying convolutional neural networks concepts to hybrid NN-HMM model for speech recognition,” in Proc. ICASSP, 2012, pp. 4277–4280. </ref>, but with only one convolutional layer. This paper explores using multiple convolutional layers and evaluates the system on one small dataset and two large datasets. The results show that CNNs outperform DNNs on all of these tasks.

CNN Architecture

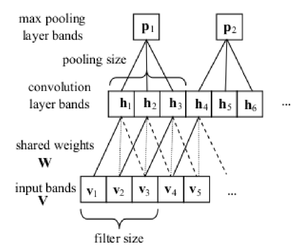

A typical CNN, as shown in Fig 1, consists of a convolutional layer, whose weights are shared across the input space, followed by a max-pooling layer.
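The weight sharing in the convolutional layer of Fig 1 can be illustrated with a minimal pure-Python sketch of a single feature map computed over a time x frequency input, such as frames of log mel filter-bank coefficients. The function name `conv2d_shared` and the toy input are illustrative assumptions. Real systems use many filters, a nonlinearity and an optimized library, and the max-pooling stage is not shown here.

```python
def conv2d_shared(x, w, bias=0.0):
    """Apply one shared filter to a time x frequency input ('valid' positions only).

    x: list of frames, each a list of filter-bank values (time x frequency).
    w: filter weights (kernel_time x kernel_freq), reused at every position.
    Returns one feature map of size (T - kt + 1) x (F - kf + 1).
    Like most CNN toolkits, this computes a cross-correlation (no filter flip).
    """
    T, F = len(x), len(x[0])
    kt, kf = len(w), len(w[0])
    feature_map = []
    for t in range(T - kt + 1):
        row = []
        for f in range(F - kf + 1):
            # The same weights w and bias are used at every (t, f) position.
            s = bias
            for i in range(kt):
                for j in range(kf):
                    s += w[i][j] * x[t + i][f + j]
            row.append(s)
        feature_map.append(row)
    return feature_map


# Toy input: 3 frames x 4 frequency bins, and a 2 x 2 filter.
x = [[1, 2, 3, 4],
     [5, 6, 7, 8],
     [9, 10, 11, 12]]
w = [[1, 0],
     [0, 1]]
print(conv2d_shared(x, w))  # [[7.0, 9.0, 11.0], [15.0, 17.0, 19.0]]
```

However large the input is, this layer has only kt x kf + 1 parameters. A layer with many filters produces one such feature map per filter.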

Experimental Setup

A small 40-hour dataset is used to study the behaviour of CNNs on speech tasks, and results are reported on the EARS dev04f set. The input features are 40-dimensional log mel filter-bank coefficients. The hidden fully connected layer size is 1024, and the softmax layer has 512 units. During fine-tuning, the learning rate is halved after each iteration in which the objective function does not improve sufficiently on a held-out validation set. Training stops after the learning rate has been halved 5 times.
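The fine-tuning schedule can be sketched as follows. This is a minimal illustration that assumes the held-out objective is a loss (lower is better). It also assumes that "improves sufficiently" means a relative gain of at least `min_rel_gain`. The function name `halving_schedule` and the 0.5% threshold are hypothetical, because the summary specifies neither.

```python
def halving_schedule(initial_lr, heldout_losses, min_rel_gain=0.005, max_halvings=5):
    """Return the learning rate used in each epoch until training stops.

    heldout_losses[i] is the held-out loss (lower is better) measured after
    epoch i. If it does not improve on the best loss so far by at least
    min_rel_gain (relative), the rate is halved for the next epoch; training
    stops once the rate has been halved max_halvings times.
    """
    lr = initial_lr
    best = None
    halvings = 0
    used = []
    for loss in heldout_losses:
        used.append(lr)
        improved = best is None or (best - loss) / best >= min_rel_gain
        best = loss if best is None else min(best, loss)
        if not improved:
            lr /= 2.0
            halvings += 1
            if halvings == max_halvings:
                break  # stop training
    return used


# Hypothetical held-out losses: two good epochs, then only tiny gains.
losses = [10.0, 9.0, 8.99, 8.98, 8.97, 8.96, 8.95, 8.0]
print(halving_schedule(0.1, losses))
```

With these losses, the rate stays at 0.1 for three epochs and is then halved after every epoch. Training stops after the seventh epoch, so the final loss of 8.0 is never reached.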

Number of Convolutional vs. Fully Connected Layers

In image recognition, a few convolutional layers are typically used before the fully connected layers. The convolutional layers tend to reduce spectral variation, while the fully connected layers use the local information learned by the convolutional layers to perform classification. Unlike previous work on speech recognition <ref name=convDNN></ref>, this work uses multiple convolutional layers followed by fully connected layers, similar to the image recognition framework. The following table shows the word error rate (WER) for different numbers of convolutional and fully connected layers, with the total number of hidden layers kept at six.

| Number of convolutional and fully connected layers | WER (%) |
|---|---|
| No conv, 6 full | 24.8 |
| 1 conv, 5 full | 23.5 |
| 2 conv, 4 full | 22.1 |
| 3 conv, 3 full | 22.4 |

Number of Hidden Units

Speech differs from images in that different frequency regions have different characteristics. For this reason, Abdel-Hamid et al. <ref name=convDNN></ref> proposed sharing weights only across nearby frequencies (limited weight sharing). Although this addresses the problem, it makes it difficult to stack multiple convolutional layers. In this work, weights are shared across the entire feature space, and more filters are used than is typical in vision in order to capture the differences between low and high frequencies. The following table shows the WER for different numbers of hidden units in the convolutional layers of the 2-convolutional, 4-fully-connected configuration. The total number of network parameters is kept constant for a fair comparison.

| Hidden units per convolutional layer | WER (%) |
|---|---|
| 64 | 24.1 |
| 128 | 23.0 |
| 220 | 22.1 |
| 128/256 | 21.9 |

A slight further improvement is obtained by using 128 hidden units in the first convolutional layer and 256 in the second. Many hidden units are needed in the convolutional layers to capture the differences in local structure between frequency regions of speech.
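Counting convolutional weights makes the trade-off between full weight sharing (used in this work) and limited weight sharing concrete. The helper `conv_weights` below is hypothetical, and the 9 x 9 filters with 3 input maps are example values, not the paper's configuration. Under full sharing, the cost of a layer does not depend on how many frequency sections the input is divided into. Limited weight sharing pays for a separate filter bank for every section.

```python
def conv_weights(n_filters, kernel_time, kernel_freq, in_maps, n_sections=1):
    """Number of trainable weights (biases included) in one convolutional layer.

    n_sections=1 models full weight sharing: a single bank of filters is
    applied at every frequency position. n_sections>1 models limited weight
    sharing, where the frequency axis is split into n_sections bands and each
    band has its own bank of n_filters filters.
    """
    per_bank = n_filters * (kernel_time * kernel_freq * in_maps + 1)
    return n_sections * per_bank


# Hypothetical example values: 9 x 9 filters over 3 input maps.
full_128 = conv_weights(128, 9, 9, 3)               # one shared bank of 128
limited_2x64 = conv_weights(64, 9, 9, 3, n_sections=2)
print(full_128, limited_2x64)
```

Both layers cost the same number of weights. With full sharing, the budget buys 128 distinct filters that can be applied anywhere in frequency. With limited sharing, it buys two banks of 64, each tied to its own band.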

Optimal Feature Set

Note that Linear Discriminant Analysis (LDA) cannot be used with CNNs because it removes local correlation in frequency. The authors therefore use mel filter-bank (FB) features, which exhibit this locality property.

The following features were used to build the table below, and WER was used to select the best feature set.

- Vocal Tract Length Normalization (VTLN) warping, which helps map features into a canonical space.

- Feature-space Maximum Likelihood Linear Regression (fMLLR).

- Delta (d) features, the difference between features in consecutive frames, and double-delta (dd) features, the deltas of the deltas.

- Energy feature.

| Feature | WER (%) |
|---|---|
| Mel FB | 21.9 |
| VTLN-warped mel FB | 21.3 |
| VTLN-warped mel FB + fMLLR | 21.2 |
| VTLN-warped mel FB + d + dd | 20.7 |
| VTLN-warped mel FB + d + dd + energy | 21.0 |
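There are several ways to compute delta and double-delta features. The sketch below uses the common regression formula found in HTK-style front ends, applied over a window of ±N frames. The window size N = 2 and the edge handling (replicating the first and last frame) are assumptions, not details from the paper. The three streams are returned as separate time x frequency maps, which preserves each stream's frequency layout for a CNN.

```python
def deltas(frames, window=2):
    """Regression-based deltas for a list of feature vectors (T x F).

    d_t = sum_{n=1..N} n * (c_{t+n} - c_{t-n}) / (2 * sum_{n=1..N} n^2)
    Frames outside the utterance are replaced by the nearest edge frame.
    """
    T = len(frames)
    denom = 2 * sum(n * n for n in range(1, window + 1))
    out = []
    for t in range(T):
        vec = []
        for k in range(len(frames[t])):
            acc = 0.0
            for n in range(1, window + 1):
                ahead = frames[min(t + n, T - 1)][k]   # clamp at last frame
                behind = frames[max(t - n, 0)][k]      # clamp at first frame
                acc += n * (ahead - behind)
            vec.append(acc / denom)
        out.append(vec)
    return out


def static_delta_ddelta(frames, window=2):
    """Return (static, delta, double-delta) as three separate T x F maps."""
    d = deltas(frames, window)
    return frames, d, deltas(d, window)


# Toy "filter-bank" input: 10 frames, 2 bins, each bin rising linearly in time.
fbank = [[float(t), 2.0 * t] for t in range(10)]
static, d, dd = static_delta_ddelta(fbank)
print(d[4], dd[4])  # [1.0, 2.0] [0.0, 0.0]
```

Away from the edges, a linear ramp has constant deltas equal to its slope and zero double deltas, as expected.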

Pooling Experiments

Pooling helps reduce spectral variance in the input features. It is performed only along the frequency axis, which was shown to work better for speech <ref name=convDNN></ref>. WER was measured on two datasets with different sampling rates: 8 kHz Switchboard (SWB) telephone conversations and 16 kHz English Broadcast News (BN). A pooling size of 3 was found to be optimal.

| Pooling size | WER (%), SWB | WER (%), BN |
|---|---|---|
| No pooling | 23.7 | - |
| pool=2 | 23.4 | 20.7 |
| pool=3 | 22.9 | 20.7 |
| pool=4 | 22.9 | 21.4 |
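Frequency-only max-pooling can be sketched as below. The sketch assumes non-overlapping pools and drops any trailing bins that do not fill a complete pool. The function name is hypothetical. The example shows the kind of small spectral shift that pooling is meant to absorb, such as one caused by speaker differences.

```python
def max_pool_frequency(feature_map, pool_size=3):
    """Non-overlapping max-pooling along the frequency axis only.

    feature_map is a list of frames (time), each a list of activations over
    frequency. Time resolution is left untouched; each group of pool_size
    adjacent frequency bins is replaced by its maximum. Trailing bins that
    do not fill a complete pool are dropped.
    """
    pooled = []
    for frame in feature_map:
        n_pools = len(frame) // pool_size
        pooled.append([max(frame[k * pool_size:(k + 1) * pool_size])
                       for k in range(n_pools)])
    return pooled


# One activation peak, and the same peak shifted up by one frequency bin.
a = [[0, 5, 0, 0, 0, 0]]
b = [[0, 0, 5, 0, 0, 0]]
print(max_pool_frequency(a, 3), max_pool_frequency(b, 3))
print(max_pool_frequency(a, 2), max_pool_frequency(b, 2))
```

With pool=3, the one-bin shift disappears after pooling, but with pool=2 it remains. A shift that crosses a pool boundary is not absorbed either, which is one reason the pooling size has to be tuned.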

Results with Proposed Architecture

The optimal architecture described in the previous sections is used in these experiments. A 50-hour English Broadcast News (BN) dataset is used for training, and the EARS dev04f and rt04 sets are used for testing. Five systems are compared, as shown in the following table. In the hybrid approach, the DNN or CNN produces the likelihoods used by the HMM. In the CNN/DNN-based feature approach, the CNN or DNN produces features that are then used by a GMM/HMM system. The hybrid CNN offers a 15% relative improvement over the GMM/HMM system and a 3-5% relative improvement over the hybrid DNN. The CNN-based features offer a 5-6% relative improvement over the DNN-based features.

| Model | WER (%), dev04f | WER (%), rt04 |
|---|---|---|
| Baseline GMM/HMM | 18.8 | 18.1 |
| Hybrid DNN | 16.3 | 15.8 |
| DNN-based features | 16.7 | 16.0 |
| Hybrid CNN | 15.8 | 15.0 |
| CNN-based features | 15.2 | 15.0 |
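In a hybrid NN/HMM system, the network outputs state posteriors p(s|x). The standard way to obtain the likelihoods the HMM needs is to divide these posteriors by the state priors p(s). The summary does not spell out this step for this paper, so the sketch below shows the generic recipe, not the authors' code. The function name, the probability floor and the example numbers are hypothetical.

```python
import math


def scaled_log_likelihoods(posteriors, priors, floor=1e-10):
    """Turn one frame's state posteriors p(s|x) into scaled log-likelihoods.

    Bayes' rule gives p(x|s) = p(s|x) * p(x) / p(s). Because p(x) is the same
    for every state s in a given frame, log p(s|x) - log p(s) can replace
    log p(x|s) in HMM decoding. `floor` guards against log(0).
    """
    if len(posteriors) != len(priors):
        raise ValueError("posteriors and priors must have the same length")
    return [math.log(max(p, floor)) - math.log(max(q, floor))
            for p, q in zip(posteriors, priors)]


# Hypothetical 3-state example: softmax outputs for one frame and state priors
# (priors are typically estimated from state frequencies in training alignments).
frame_posteriors = [0.7, 0.2, 0.1]
state_priors = [0.5, 0.25, 0.25]
print(scaled_log_likelihoods(frame_posteriors, state_priors))
```

A state that the network favours more than its prior would suggest gets a positive score, and a state it favours less gets a negative one.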

Results on Large Tasks

After tuning the CNN configuration on a small dataset, the CNN-based features system is tested on two larger datasets.

Broadcast News

The 400-hour Broadcast News dataset is used for training, and the DARPA EARS dev04f and rt04 sets are used for testing. The following table shows that CNN-based features offer a 13-18% relative improvement over the GMM/HMM system and a 10-12% relative improvement over DNN-based features.

| Model | WER (%), dev04f | WER (%), rt04 |
|---|---|---|
| Baseline GMM/HMM | 16.0 | 13.8 |
| Hybrid DNN | 15.1 | 13.4 |
| DNN-based features | 14.9 | 13.4 |
| CNN-based features | 13.1 | 12.0 |

Switchboard

The Switchboard dataset consists of 300 hours of conversational American English telephone speech. The Hub5'00 set is used for validation, and the rt03 set is used for testing. Results are reported separately for the Switchboard (SWB) and Fisher (FSH) portions of the evaluation data. Three systems are compared, as shown in the following table. CNN-based features offer a 13-33% relative improvement over the GMM/HMM system and a 4-7% relative improvement over the hybrid DNN system. These results show that CNNs are superior to both GMMs and DNNs.

| Model | WER (%), Hub5’00 SWB | WER (%), rt03 FSH | WER (%), rt03 SWB |
|---|---|---|---|
| Baseline GMM/HMM | 14.5 | 17.0 | 25.2 |
| Hybrid DNN | 12.2 | 14.9 | 23.5 |
| CNN-based features | 11.5 | 14.3 | 21.9 |
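Every relative improvement quoted in the results sections is computed as (baseline WER - new WER) / baseline WER. A small helper applies this formula to the Switchboard table; the function name is illustrative.

```python
def relative_improvement(baseline_wer, new_wer):
    """Relative WER reduction, in percent, of new_wer with respect to baseline_wer."""
    return 100.0 * (baseline_wer - new_wer) / baseline_wer


# Switchboard, 300 hrs: WERs for (Hub5'00 SWB, rt03 FSH, rt03 SWB).
gmm = (14.5, 17.0, 25.2)
hybrid_dnn = (12.2, 14.9, 23.5)
cnn_features = (11.5, 14.3, 21.9)

for name, baseline in [("GMM/HMM", gmm), ("Hybrid DNN", hybrid_dnn)]:
    gains = [relative_improvement(b, c) for b, c in zip(baseline, cnn_features)]
    print(name, [round(g, 1) for g in gains])
```

For the three test sets, this prints about 20.7%, 15.9% and 13.1% over the GMM/HMM baseline, and 5.7%, 4.0% and 6.8% over the hybrid DNN. The same formula applied to the 400-hour Broadcast News table gives 18.1% and 13.0% over the GMM/HMM baseline, and 12.1% and 10.4% over DNN-based features.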

Conclusions and Discussions

This paper demonstrates that CNNs perform well for LVCSR and that multiple convolutional layers give further improvement when the convolutional layers have a large number of feature maps. CNNs were shown to be superior to both GMMs and DNNs on a small speech recognition task. On larger datasets, a GMM/HMM system using CNN-produced features outperformed both the GMM- and DNN-based systems. The mel filter bank is a suitable input for the CNN because it preserves locality in frequency. CNNs can capture translational invariance across speakers by replicating weights in time and frequency, and they can model local correlations in speech.

The authors conclude that 2 convolutional and 4 fully connected layers is the optimal configuration for CNNs. However, the table comparing numbers of convolutional and fully connected layers shows that this configuration (22.1% WER) is close to 3 convolutional and 3 fully connected layers (22.4% WER). More experiments, ideally with statistical significance testing, may be needed to support this conclusion.

The authors set up the experiments without clarifying the following:

- The hybrid CNN was not tested on the larger datasets. The authors do not give a reason; it might be due to scalability issues.

- They did not compare against the CNN system proposed by Abdel-Hamid et al. <ref name=convDNN></ref>.

References

<references />