importance Sampling and Monte Carlo Simulation: Difference between revisions

No edit summary |

m (Conversion script moved page Importance Sampling and Monte Carlo Simulation to importance Sampling and Monte Carlo Simulation: Converting page titles to lowercase) |

||

| (4 intermediate revisions by 2 users not shown) | |||

| Line 2: | Line 2: | ||

<!-- UNDER CONSTRUCTION! --> | <!-- UNDER CONSTRUCTION! --> | ||

During this lecture we covered two more examples of Monte Carlo simulation, finishing that topic, and | During this lecture we covered two more examples of Monte Carlo simulation, finishing that topic, and began talking about Importance Sampling. | ||

====Binomial Probability Monte Carlo Simulations==== | ====Binomial Probability Monte Carlo Simulations==== | ||

| Line 74: | Line 74: | ||

====Importance Sampling==== | ====Importance Sampling==== | ||

In <math>I = \displaystyle\int h(x)f(x)\,dx</math>, Monte Carlo simulation can be used only if it easy to sample from f(x). Otherwise, another method must be applied. If sampling from f(x) is difficult but there exists a probability distribution function g(x) | In <math>I = \displaystyle\int h(x)f(x)\,dx</math>, Monte Carlo simulation can be used only if it easy to sample from f(x). Otherwise, another method must be applied. If sampling from f(x) is difficult but there exists a probability distribution function g(x) that is easy to sample from, then <math>I</math> can be written as<br> | ||

:: <math>I = \displaystyle\int h(x)f(x)\,dx </math> | :: <math>I = \displaystyle\int h(x)f(x)\,dx </math> | ||

:: <math>= \displaystyle\int \frac{h(x)f(x)}{g(x)}g(x)\,dx</math> | :: <math>= \displaystyle\int \frac{h(x)f(x)}{g(x)}g(x)\,dx</math> | ||

| Line 81: | Line 81: | ||

'''Process'''<br> | '''Process'''<br> | ||

# Choose <math>\displaystyle g(x)</math> | # Choose <math>\displaystyle g(x)</math> from which it's easy to sample from. | ||

# Compute <math>\displaystyle w(x)=\frac{h(x)f(x)}{g(x)}</math> | # Compute <math>\displaystyle w(x)=\frac{h(x)f(x)}{g(x)}</math> | ||

# <math>\displaystyle \hat{I} = \frac{\displaystyle\sum_{i=1}^{N} w(x_i)}{N}</math><br><br> | # <math>\displaystyle \hat{I} = \frac{\displaystyle\sum_{i=1}^{N} w(x_i)}{N}</math><br><br> | ||

'''"Weighted" average'''<br> | '''"Weighted" average'''<br> | ||

:The term "importance sampling" is used to describe this method because a higher 'importance' or 'weighting' is given to the values sampled from <math>\displaystyle g(x)</math> that are closer to <math>\displaystyle f(x)</math>, | :The term "importance sampling" is used to describe this method because a higher 'importance' or 'weighting' is given to the values sampled from <math>\displaystyle g(x)</math> that are closer to the original distribution <math>\displaystyle f(x)</math>, which we would ideally like to sample from (but cannot because it is too difficult).<br> | ||

:<math>\displaystyle I = \int\frac{h(x)f(x)}{g(x)}g(x)\,dx</math> | :<math>\displaystyle I = \int\frac{h(x)f(x)}{g(x)}g(x)\,dx</math> | ||

:<math>=\displaystyle \int \frac{f(x)}{g(x)}h(x)g(x)\,dx</math> | :<math>=\displaystyle \int \frac{f(x)}{g(x)}h(x)g(x)\,dx</math> | ||

Latest revision as of 09:45, 30 August 2017

Importance Sampling and Monte Carlo Simulation - May 28, 2009

During this lecture we covered two more examples of Monte Carlo simulation, finishing that topic, and began talking about Importance Sampling.

Binomial Probability Monte Carlo Simulations

Example 1:

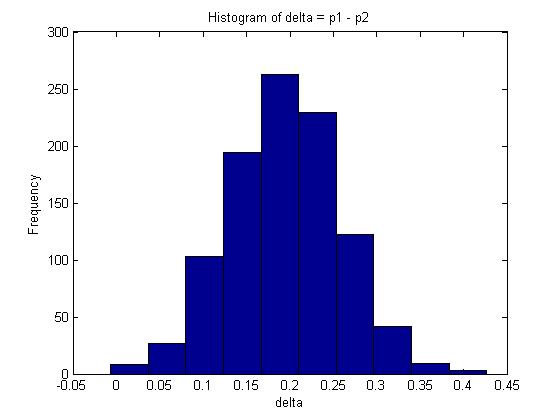

You are given two independent Binomial distributions with probabilities [math]\displaystyle{ \displaystyle p_1\text{, }p_2 }[/math]. Using a Monte Carlo simulation, approximate the value of [math]\displaystyle{ \displaystyle \delta }[/math], where [math]\displaystyle{ \displaystyle \delta = p_1 - p_2 }[/math].

- [math]\displaystyle{ \displaystyle X \sim BIN(n, p_1) }[/math]; [math]\displaystyle{ \displaystyle Y \sim BIN(n, p_2) }[/math]; [math]\displaystyle{ \displaystyle \delta = p_1 - p_2 }[/math]

So [math]\displaystyle{ \displaystyle f(p_1, p_2 | x,y) = \frac{f(x, y|p_1, p_2)*f(p_1,p_2)}{f(x,y)} }[/math] where [math]\displaystyle{ \displaystyle f(x,y) }[/math] is a flat distribution and the expected value of [math]\displaystyle{ \displaystyle \delta }[/math] is as follows:

- [math]\displaystyle{ \displaystyle \hat{\delta} = \int\int\delta f(p_1,p_2|X,Y)\,dp_1dp_2 }[/math]

Since X, Y are independent, we can split the conditional probability distribution:

- [math]\displaystyle{ \displaystyle f(p_1,p_2|X,Y) \propto f(p_1|X)f(p_2|Y) }[/math]

We need to find conditional distribution functions for [math]\displaystyle{ \displaystyle p_1, p_2 }[/math] to draw samples from. In order to get a distribution for the probability 'p' of a Binomial, we have to divide the Binomial distribution by n. This new distribution has the same shape as the original, but is scaled. A Beta distribution is a suitable approximation. Let

- [math]\displaystyle{ \displaystyle f(p_1 | X) \sim \text{Beta}(x+1, n-x+1) }[/math] and [math]\displaystyle{ \displaystyle f(p_2 | Y) \sim \text{Beta}(y+1, n-y+1) }[/math], where

- [math]\displaystyle{ \displaystyle \text{Beta}(\alpha,\beta) = \frac{\Gamma(\alpha+\beta)}{\Gamma(\alpha)\Gamma(\beta)}p^{\alpha-1}(1-p)^{\beta-1} }[/math]

Process:

- Draw samples for [math]\displaystyle{ \displaystyle p_1 }[/math] and [math]\displaystyle{ \displaystyle p_2 }[/math]: [math]\displaystyle{ \displaystyle (p_1,p_2)^{(1)} }[/math], [math]\displaystyle{ \displaystyle (p_1,p_2)^{(2)} }[/math], ..., [math]\displaystyle{ \displaystyle (p_1,p_2)^{(n)} }[/math];

- Compute [math]\displaystyle{ \displaystyle \delta = p_1 - p_2 }[/math] in order to get n values for [math]\displaystyle{ \displaystyle \delta }[/math];

- [math]\displaystyle{ \displaystyle \hat{\delta}=\frac{\displaystyle\sum_{\forall i}\delta^{(i)}}{N} }[/math].

Matlab Code:

- The Matlab code for recreating the above example is as follows:

n=100; %number of trials for X m=100; %number of trials for Y x=80; %number of successes for X trials y=60; %number of successes for y trials p1=betarnd(x+1, n-x+1, 1, 1000); p2=betarnd(y+1, m-y+1, 1, 1000); delta=p1-p2; mean(delta);

The mean in this example is given by 0.1938.

A 95% confidence interval for [math]\displaystyle{ \delta }[/math] is represented by the interval between the 2.5% and 97.5% quantiles which covers 95% of the probability distribution. In Matlab, this can be calculated as follows:

q1=quantile(delta,0.025); q2=quantile(delta,0.975);

The interval is approximately [math]\displaystyle{ 95% CI \approx (0.06606, 0.32204) }[/math]

Note: In this case, we can also find [math]\displaystyle{ E(\delta) }[/math] analytically since [math]\displaystyle{ E(\delta) = E(p_1 - p_2) = E(p_1) - E(p_2) = \frac{x+1}{n+2} - \frac{y+1}{m+2} \approx 0.1961 }[/math]. Compare this with the maximum likelihood estimate for [math]\displaystyle{ \delta }[/math]: [math]\displaystyle{ \frac{x}{n} - \frac{y}{m} = 0.2 }[/math].

Example 2:

We conduct an experiment by giving rats one of ten possible doses of a drug, where each subsequent dose is more lethal than the previous one:

- [math]\displaystyle{ \displaystyle x_1\lt x_2\lt ...\lt x_{10} }[/math]

For each dose [math]\displaystyle{ \displaystyle x_i }[/math] we test n rats and observe [math]\displaystyle{ \displaystyle Y_i }[/math], the number of rats that survive. Therefore,

- [math]\displaystyle{ \displaystyle Y_i \sim~ BIN(n, p_i) }[/math]

.

We can assume that the probability of death grows with the concentration of drug given, i.e. [math]\displaystyle{ \displaystyle p_1\lt p_2\lt ...\lt p_{10} }[/math]. Estimate the dose at which the animals have at least 50% chance of dying.

- Let [math]\displaystyle{ \displaystyle \delta=x_j }[/math] where [math]\displaystyle{ \displaystyle j=min\{i|p_i\geq0.5\} }[/math]

- We are interested in [math]\displaystyle{ \displaystyle \delta }[/math] since any higher concentrations are known to have a higher death rate.

Solving this analytically is difficult:

- [math]\displaystyle{ \displaystyle \delta = g(p_1, p_2, ..., p_{10}) }[/math] where g is an unknown function

- [math]\displaystyle{ \displaystyle \hat{\delta} = \int \int..\int_A \delta f(p_1,p_2,...,p_{10}|Y_1,Y_2,...,Y_{10})\,dp_1dp_2...dp_{10} }[/math]

- where [math]\displaystyle{ \displaystyle A=\{(p_1,p_2,...,p_{10})|p_1\leq p_2\leq ...\leq p_{10} \} }[/math]

- where [math]\displaystyle{ \displaystyle A=\{(p_1,p_2,...,p_{10})|p_1\leq p_2\leq ...\leq p_{10} \} }[/math]

Process: Monte Carlo

We assume that

- Draw [math]\displaystyle{ \displaystyle p_i \sim~ BETA(y_i+1, n-y_i+1) }[/math]

- Keep sample only if it satisfies [math]\displaystyle{ \displaystyle p_1\leq p_2\leq ...\leq p_{10} }[/math], otherwise discard and try again.

- Compute [math]\displaystyle{ \displaystyle \delta }[/math] by finding the first [math]\displaystyle{ \displaystyle p_i }[/math] sample with over 50% deaths.

- Repeat process n times to get n estimates for [math]\displaystyle{ \displaystyle \delta_1, \delta_2, ..., \delta_N }[/math].

- [math]\displaystyle{ \displaystyle \bar{\delta} = \frac{\displaystyle\sum_{\forall i} \delta_i}{N} }[/math].

Importance Sampling

In [math]\displaystyle{ I = \displaystyle\int h(x)f(x)\,dx }[/math], Monte Carlo simulation can be used only if it easy to sample from f(x). Otherwise, another method must be applied. If sampling from f(x) is difficult but there exists a probability distribution function g(x) that is easy to sample from, then [math]\displaystyle{ I }[/math] can be written as

- [math]\displaystyle{ I = \displaystyle\int h(x)f(x)\,dx }[/math]

- [math]\displaystyle{ = \displaystyle\int \frac{h(x)f(x)}{g(x)}g(x)\,dx }[/math]

- [math]\displaystyle{ = \displaystyle E_g(w(x)) \rightarrow }[/math]the expectation of w(x) with respect to g(x)

- [math]\displaystyle{ = \frac{\displaystyle\sum_{i=1}^{N} w(x_i)}{N} }[/math] where [math]\displaystyle{ \displaystyle w(x) = \frac{h(x)f(x)}{g(x)} }[/math]

Process

- Choose [math]\displaystyle{ \displaystyle g(x) }[/math] from which it's easy to sample from.

- Compute [math]\displaystyle{ \displaystyle w(x)=\frac{h(x)f(x)}{g(x)} }[/math]

- [math]\displaystyle{ \displaystyle \hat{I} = \frac{\displaystyle\sum_{i=1}^{N} w(x_i)}{N} }[/math]

"Weighted" average

- The term "importance sampling" is used to describe this method because a higher 'importance' or 'weighting' is given to the values sampled from [math]\displaystyle{ \displaystyle g(x) }[/math] that are closer to the original distribution [math]\displaystyle{ \displaystyle f(x) }[/math], which we would ideally like to sample from (but cannot because it is too difficult).

- [math]\displaystyle{ \displaystyle I = \int\frac{h(x)f(x)}{g(x)}g(x)\,dx }[/math]

- [math]\displaystyle{ =\displaystyle \int \frac{f(x)}{g(x)}h(x)g(x)\,dx }[/math]

- [math]\displaystyle{ =\displaystyle \int \frac{f(x)}{g(x)}E_g(h(x))\,dx }[/math] which is the same thing as saying that we are applying a regular Monte Carlo Simulation method to [math]\displaystyle{ \displaystyle\int h(x)g(x)\,dx }[/math], taking each result from this process and weighting the more accurate ones (i.e. the ones for which [math]\displaystyle{ \displaystyle \frac{f(x)}{g(x)} }[/math] is high) higher.

One can view [math]\displaystyle{ \frac{f(x)}{g(x)}\ = B(x) }[/math] as a weight.

Then [math]\displaystyle{ \displaystyle \hat{I} = \frac{\displaystyle\sum_{i=1}^{N} w(x_i)}{N} = \frac{\displaystyle\sum_{i=1}^{N} B(x_i)*h(x_i)}{N} }[/math]

i.e. we are computing a weighted sum of [math]\displaystyle{ h(x_i) }[/math] instead of a sum