Measuring and Testing Dependence by Correlation of Distances

Background

Distance covariance (dCov) is a measure of dependence between two random vectors. For all random vectors with finite first moments, the dCov coefficient generalizes the idea of correlation in two ways. First, dCov can be applied to two random vectors of arbitrary dimensions, and the two dimensions need not be equal. Second, dCov is equal to zero if and only if the two vectors are independent.

Definition

The dCov coefficient is defined as a weighted L2 distance between the joint characteristic function of the random vectors and the product of their marginal characteristic functions. The choice of the weights is crucial and ensures the independence property. The dCov coefficient can also be written in terms of expectations of Euclidean distances, a form that is easier to interpret:

[math]\displaystyle{ \mathcal{V}^2=E[|X_1-X_2||Y_1-Y_2|]+E[|X_1-X_2|]E[|Y_1-Y_2|]-2E[|X_1-X_2||Y_1-Y_3|] }[/math] in which [math]\displaystyle{ (X_1,Y_1),(X_2,Y_2),(X_3,Y_3) }[/math] are independent copies of the pair of random vectors (X, Y), and [math]\displaystyle{ |X_1-X_2| }[/math] is the Euclidean distance between [math]\displaystyle{ X_1 }[/math] and [math]\displaystyle{ X_2 }[/math]. A straightforward empirical estimate [math]\displaystyle{ \mathcal{V}_n^2 }[/math] is known as [math]\displaystyle{ dCov_n^2(X,Y) }[/math]:

[math]\displaystyle{ dCov_n^2(X,Y)=\frac{1}{n^2}\sum_{i,j=1}^n d_{ij}^Xd_{ij}^Y+d_{..}^Xd_{..}^Y-2\frac{1}{n}\sum_{i=1}^n d_{i.}^Xd_{i.}^Y }[/math]

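[math]\displaystyle{ =\frac{1}{n^2}\sum_{i,j=1}^n (d_{ij}^X-d_{i.}^X-d_{.j}^X+d_{..}^X)(d_{ij}^Y-d_{i.}^Y-d_{.j}^Y+d_{..}^Y) }[/math]

Here [math]\displaystyle{ d_{ij}^X=|X_i-X_j| }[/math] is the Euclidean distance between observations i and j, [math]\displaystyle{ d_{i.}^X=\frac{1}{n}\sum_{j=1}^n d_{ij}^X }[/math] and [math]\displaystyle{ d_{.j}^X=\frac{1}{n}\sum_{i=1}^n d_{ij}^X }[/math] are the row and column means, and [math]\displaystyle{ d_{..}^X=\frac{1}{n^2}\sum_{i,j=1}^n d_{ij}^X }[/math] is the grand mean; the quantities for Y are defined in the same way.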
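As a rough illustration, the two forms of the empirical estimate can be sketched in Python with NumPy. The function names (`pairwise_dist`, `dcov2_expanded`, `dcov2_centered`) and the simulated data are assumptions made for this sketch and do not come from [1].

```python
import numpy as np

def pairwise_dist(z):
    """Return the n x n matrix of Euclidean distances between the rows of z."""
    z = np.asarray(z, dtype=float)
    if z.ndim == 1:
        z = z[:, None]          # treat a 1-D sample as n points in dimension 1
    diff = z[:, None, :] - z[None, :, :]
    return np.sqrt((diff ** 2).sum(axis=-1))

def dcov2_expanded(x, y):
    """First form: three-term expansion of dCov_n^2."""
    dx, dy = pairwise_dist(x), pairwise_dist(y)
    term1 = (dx * dy).mean()                            # (1/n^2) sum d_ij^X d_ij^Y
    term2 = dx.mean() * dy.mean()                       # d_..^X d_..^Y
    term3 = (dx.mean(axis=1) * dy.mean(axis=1)).mean()  # (1/n) sum d_i.^X d_i.^Y
    return term1 + term2 - 2.0 * term3

def dcov2_centered(x, y):
    """Second form: mean of products of double-centered distances."""
    dx, dy = pairwise_dist(x), pairwise_dist(y)
    a = dx - dx.mean(axis=1, keepdims=True) - dx.mean(axis=0, keepdims=True) + dx.mean()
    b = dy - dy.mean(axis=1, keepdims=True) - dy.mean(axis=0, keepdims=True) + dy.mean()
    return (a * b).mean()

# Illustrative data only: X in dimension 5, Y in dimension 3.
rng = np.random.default_rng(0)
x = rng.standard_normal((100, 5))
y = rng.standard_normal((100, 3))
print(dcov2_expanded(x, y), dcov2_centered(x, y))
```

The two functions should agree up to floating-point error, and the inputs may have different numbers of columns because only the pairwise distance matrices enter the computation.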

Once [math]\displaystyle{ dCov_n^2(X,Y) }[/math] is defined, the distance correlation coefficient (dCor) can be defined:

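[math]\displaystyle{ dCor_n^2(X,Y)=\frac{\langle C\Delta_XC,C\Delta_YC\rangle }{\|C\Delta_XC\| \|C\Delta_YC\|} }[/math]

where [math]\displaystyle{ \Delta_X }[/math] and [math]\displaystyle{ \Delta_Y }[/math] are the n×n matrices of pairwise Euclidean distances, [math]\displaystyle{ C=I-\frac{1}{n}\mathbf{1}\mathbf{1}^T }[/math] is the centering matrix, and the inner product and norm are the Frobenius inner product and norm.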

Because dCor is based on the Euclidean distance rather than the squared Euclidean distance, it is able to detect non-linear dependence, not only linear correlation.
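The contrast with Pearson correlation can be illustrated with a minimal NumPy sketch of the centering-matrix form of dCor. The function name `dcor2` and the deterministic parabola example are assumptions chosen for illustration; they are not taken from [1].

```python
import numpy as np

def dcor2(x, y):
    """Squared sample distance correlation via double-centered distance matrices."""
    x = np.asarray(x, dtype=float).reshape(len(x), -1)
    y = np.asarray(y, dtype=float).reshape(len(y), -1)
    n = x.shape[0]
    # Pairwise Euclidean distance matrices (Delta_X and Delta_Y).
    delta_x = np.linalg.norm(x[:, None, :] - x[None, :, :], axis=-1)
    delta_y = np.linalg.norm(y[:, None, :] - y[None, :, :], axis=-1)
    # Centering matrix C = I - (1/n) 1 1^T.
    c = np.eye(n) - np.ones((n, n)) / n
    a = c @ delta_x @ c
    b = c @ delta_y @ c
    # Frobenius inner product divided by the product of Frobenius norms.
    denom = np.linalg.norm(a) * np.linalg.norm(b)
    return float((a * b).sum() / denom) if denom > 0 else 0.0

# A symmetric parabola: Y is a function of X, yet the Pearson correlation vanishes.
x = np.linspace(-1.0, 1.0, 201)
y = x ** 2
pearson = np.corrcoef(x, y)[0, 1]
print("Pearson:", pearson)
print("dCor^2 :", dcor2(x, y))
```

In this example the Pearson correlation is zero up to rounding, while the squared distance correlation is clearly positive.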

Experiment

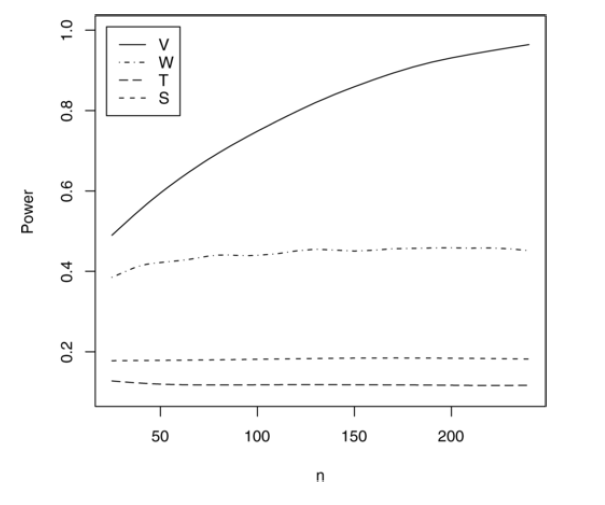

Example 1. X has a standard multivariate normal distribution of dimension 5, and [math]\displaystyle{ Y_{kj}=X_{kj}\epsilon_{kj},j=1,\dots,5 }[/math], where [math]\displaystyle{ \epsilon_{kj} }[/math] are independent standard normal variables, independent of X. Comparisons are based on 10,000 tests for each sample size. Each example compares the empirical power of the dCov test (labeled V) with the Wilks Lambda statistic (W), the Puri–Sen rank correlation statistic (S) and the Puri–Sen sign statistic (T). The dCov test achieves higher empirical power than the alternatives.

Fig. 1. Example 1: Empirical power at 0.1 significance and sample size n against the alternative [math]\displaystyle{ Y = X\epsilon }[/math].
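A single test on data drawn from the alternative of Example 1 can be sketched as follows. Note that this sketch swaps in a permutation test for calibration, which is an assumption made here for simplicity and is not necessarily the test procedure used in [1]; the function names, sample size and number of permutations are likewise illustrative.

```python
import numpy as np

def _centered_dist(z):
    """Double-centered matrix of pairwise Euclidean distances."""
    z = np.asarray(z, dtype=float).reshape(len(z), -1)
    d = np.linalg.norm(z[:, None, :] - z[None, :, :], axis=-1)
    return d - d.mean(axis=0, keepdims=True) - d.mean(axis=1, keepdims=True) + d.mean()

def dcov_permutation_pvalue(x, y, n_perm=199, seed=0):
    """Permutation p-value for independence, using dCov_n^2 as the statistic."""
    rng = np.random.default_rng(seed)
    a, b = _centered_dist(x), _centered_dist(y)
    observed = (a * b).mean()
    count = 0
    for _ in range(n_perm):
        perm = rng.permutation(len(b))
        # Permuting the observations of Y permutes rows and columns of b together.
        if (a * b[np.ix_(perm, perm)]).mean() >= observed:
            count += 1
    return (count + 1) / (n_perm + 1)

# Data from the alternative Y = X * epsilon of Example 1 (one sample of size n).
rng = np.random.default_rng(1)
n = 50
x = rng.standard_normal((n, 5))
y = x * rng.standard_normal((n, 5))
p_value = dcov_permutation_pvalue(x, y)
print("permutation p-value:", p_value)
```

Empirical power at level 0.1, as plotted in the figure, would correspond to repeating this over many simulated samples and recording the fraction of p-values below 0.1.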

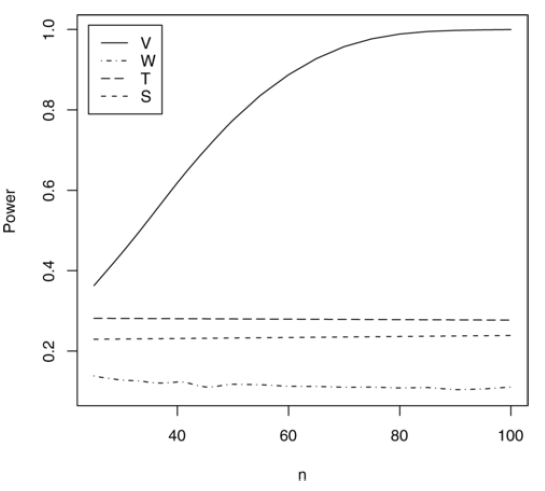

Example 2. The same setting as Example 1, but with [math]\displaystyle{ Y=\log(X^2) }[/math]. For this non-linear relation, the dCov test achieves very good power, while none of the other tests performs well.

Fig. 2. Example 2: Empirical power at 0.1 significance and sample size n against the alternative [math]\displaystyle{ Y=\log(X^2) }[/math].

Summary

These features are very similar to those of HSIC (the Hilbert-Schmidt Independence Criterion). The relationship between the two coefficients had been an open question. Recent work [2] shows that HSIC is an extension of dCov that allows different kernels to be chosen. A limitation of dCor is that it can only be used for data in Euclidean spaces and does not apply to non-Euclidean domains such as text, graphs and groups. In those cases a generalized version (such as HSIC) is needed.
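The link between the two statistics can be checked numerically in a small sketch. It assumes the distance-induced kernel [math]\displaystyle{ k(z,z')=\tfrac{1}{2}(|z|+|z'|-|z-z'|) }[/math] centered at the origin, and a biased HSIC estimate normalized by [math]\displaystyle{ 1/n^2 }[/math] (some references use [math]\displaystyle{ 1/(n-1)^2 }[/math] instead). Under these assumptions the sample dCov squared equals four times the HSIC estimate. All function names and the simulated data are illustrative.

```python
import numpy as np

def dist_matrix(z):
    """Pairwise Euclidean distances between the rows of z."""
    z = np.asarray(z, dtype=float).reshape(len(z), -1)
    return np.linalg.norm(z[:, None, :] - z[None, :, :], axis=-1)

def centering(n):
    """Centering matrix C = I - (1/n) 1 1^T."""
    return np.eye(n) - np.ones((n, n)) / n

def dcov2(x, y):
    """Sample dCov^2 from double-centered distance matrices."""
    c = centering(len(x))
    a = c @ dist_matrix(x) @ c
    b = c @ dist_matrix(y) @ c
    return (a * b).mean()

def distance_kernel(z):
    """Distance-induced kernel with the origin as base point (an assumption)."""
    z = np.asarray(z, dtype=float).reshape(len(z), -1)
    norms = np.linalg.norm(z, axis=1)
    return 0.5 * (norms[:, None] + norms[None, :] - dist_matrix(z))

def hsic_biased(kx, ky):
    """Biased HSIC estimate trace(Kx C Ky C) / n^2."""
    n = kx.shape[0]
    c = centering(n)
    return np.trace(kx @ c @ ky @ c) / n ** 2

# Illustrative data: Y depends non-linearly on the first two coordinates of X.
rng = np.random.default_rng(0)
x = rng.standard_normal((40, 3))
y = x[:, :2] ** 2 + 0.5 * rng.standard_normal((40, 2))
lhs = dcov2(x, y)
rhs = 4.0 * hsic_biased(distance_kernel(x), distance_kernel(y))
print(lhs, rhs)
```

Replacing `distance_kernel` with another positive definite kernel (for example a Gaussian kernel) gives an HSIC statistic that no longer coincides with dCov, which is the sense in which HSIC is the more general family.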

References

[1] Székely, Gábor J., Maria L. Rizzo, and Nail K. Bakirov. "Measuring and testing dependence by correlation of distances." The Annals of Statistics 35.6 (2007): 2769-2794.

[2] Sejdinovic, Dino, et al. "Equivalence of distance-based and RKHS-based statistics in hypothesis testing." arXiv preprint arXiv:1207.6076 (2012).

[3] Fukumizu, Kenji, Francis R. Bach, and Michael I. Jordan. "Kernel dimension reduction in regression." The Annals of Statistics 37.4 (2009): 1871-1905.