Incremental Boosting Convolutional Neural Network for Facial Action Unit Recognition

Introduction

Facial expression is one of the most natural ways in which human beings express emotions. The Facial Action Coding System (FACS) attempts to systematically categorize each facial expression by specifying a basic set of muscle contractions or relaxations, formally called Action Units (AUs). FACS as we know it today consists of five major categories: (i) main, (ii) head movement, (iii) eye movement, (iv) visibility, and (v) gross behavior. For example, "AU 1" stands for the inner portion of the brows being raised, and "AU 6" stands for the cheeks being raised. Such a framework makes it possible to describe any facial expression or emotion as a combination of different AUs. The complete list of AUs in FACS can be found in [3]. However, during the course of an average day, most human beings do not experience drastically varying emotions, so their facial expressions often change only subtly, which makes AUs hard to recognize. Manual coding may also involve considerable subjectivity. These issues make it important to automate the task. In addition to applications in human behavior analysis, automated AU recognition has potential applications in human-computer interaction (HCI), online education, and interactive gaming, among other domains.

As pointed out by Ekman et al. and Zeng et al., many techniques exist for capturing facial features from videos or images that characterize the appearance or geometric changes caused by AUs. However, these hand-crafted features are not tailored or optimized for facial AU recognition. Given the recent advances of CNNs in object detection and categorization, CNNs are an appealing choice for facial AU recognition. Compared to the datasets available for those tasks, however, the training sets available for AU recognition are not very large, so the learned CNNs tend to suffer from overfitting. To overcome this problem, this work integrates boosting into the CNN. Boosting is a technique in which multiple weak learners are combined to yield a strong learner; it attempts to produce new classifiers that better predict the examples on which the current ensemble performs poorly. This work also modifies how a CNN is learned when a large dataset is split into mini-batches. Typically, in a batch strategy, each iteration uses only its own batch to update the parameters, and the information learned in that iteration (the selected features and their weights) is discarded in subsequent iterations. Here the authors incorporate incremental learning by building a classifier that learns incrementally from all iterations/batches, hence the name Incremental Boosting Convolutional Neural Network (IB-CNN). The proposed IB-CNN outperforms state-of-the-art CNNs on four standard AU-coded databases.

Related Work

Many papers use manually designed features for AU recognition, unlike CNN-based methods, which integrate feature learning. Examples include Gabor wavelets, Local Binary Patterns (LBP), Histograms of Oriented Gradients (HOG), Scale Invariant Feature Transform (SIFT) features, Discriminant Non-negative Matrix Factorization (DNMF), Histograms of Local Phase Quantization (LPQ), and their spatiotemporal extensions. Newer approaches include the one proposed by Tong, Liao and Ji [9], which uses a dynamic Bayesian network (DBN) to model the relationships among different AUs. Automated methodologies include the Automatic Face Analysis (AFA) system, which recognizes AUs using both permanent and transient facial features and reports high recognition rates [6]. Hand-crafted descriptors such as LBP are powerful tools for facial analysis: they are robust and have no learned parameters, so the descriptor itself cannot overfit the training data. LBP, in particular, provides a dense yet compact descriptor of facial features (a histogram of local binary codes) that can be computed without a GPU. In essence, LBP feature vectors are small, yet discriminative enough that their accuracy can be comparable to that of a trained CNN. One of the major breakthroughs in reducing overfitting in CNNs was dropout, introduced by Srivastava, Hinton et al. [5]. Dropout can be regarded as a special form of bagging over "different" networks that share the same weights. This work can be seen as a refinement of random dropout, in the sense that only neurons that do not contribute to classification are dropped, while the more discriminative neurons are retained. Moreover, adopting a batch strategy with incremental learning also helps circumvent the issue of limited dataset size.
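To make the idea of a histogram descriptor concrete, here is a minimal sketch of the basic 3 × 3 LBP operator in plain Python. It is the textbook variant, which thresholds the 8 neighbours against the centre pixel, and is assumed here only for illustration. It is not necessarily the LBP configuration used in the works cited above, and the function names are hypothetical. Note that nothing in it is learned from data.

```python
from typing import List

# Offsets of the 8 neighbours, clockwise from the top-left pixel.
# Neighbour k contributes bit k to the code when its value >= the centre pixel.
NEIGHBOURS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]


def lbp_codes(img: List[List[int]]) -> List[int]:
    """Basic 3x3 LBP codes (0..255) for every interior pixel of a grayscale image."""
    codes = []
    for r in range(1, len(img) - 1):
        for c in range(1, len(img[0]) - 1):
            centre = img[r][c]
            code = 0
            for k, (dr, dc) in enumerate(NEIGHBOURS):
                if img[r + dr][c + dc] >= centre:
                    code |= 1 << k
            codes.append(code)
    return codes


def lbp_histogram(img: List[List[int]]) -> List[float]:
    """Normalised 256-bin histogram of LBP codes: the compact descriptor."""
    codes = lbp_codes(img)
    hist = [0.0] * 256
    for code in codes:
        hist[code] += 1.0
    return [h / len(codes) for h in hist]
```

In face analysis, such histograms are commonly computed per cell of a grid laid over the face and then concatenated, which preserves some of the spatial layout.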

Methodology

CNNs

Convolutional Neural Networks are neural networks that contain at least one convolutional layer. Typically, a convolutional layer is accompanied by a pooling layer. A convolutional layer, as the name suggests, convolves the input matrix (or tensor) with a filter (or kernel). This operation produces a feature map which, roughly speaking, indicates where in the input the pattern encoded by the filter is present. Note that the kernel parameters are not pre-defined but are learned during training. A pooling layer reduces the dimensionality of the data by summarizing local patches of the activation map, for example by their maximum or average. The resulting matrix (or tensor) is then flattened into a vector and fed to a fully connected (FC) layer, whose neurons are connected to all activations in the previous layer. Finally, a decision layer with as many neurons as there are classes outputs a class label based on a score function of the FC-layer activations. Generally, an inner-product score function is used; the current paper replaces it with a boosting score function.
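The layer types described above can be sketched in plain Python for a single-channel input. This is an illustrative assumption, not the paper's implementation. Like most CNN libraries, `conv2d` computes cross-correlation (the kernel is not flipped), and no padding is applied. `inner_product_score` stands for the conventional decision-layer score that IB-CNN replaces. All names are hypothetical.

```python
from typing import List

Matrix = List[List[float]]


def conv2d(x: Matrix, k: Matrix, stride: int = 1) -> Matrix:
    """'Valid' 2-D convolution as implemented in most CNN libraries
    (i.e. cross-correlation: the kernel is not flipped)."""
    kh, kw = len(k), len(k[0])
    out_h = (len(x) - kh) // stride + 1
    out_w = (len(x[0]) - kw) // stride + 1
    return [[sum(x[i * stride + a][j * stride + b] * k[a][b]
                 for a in range(kh) for b in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]


def relu(x: Matrix) -> Matrix:
    return [[max(0.0, v) for v in row] for row in x]


def pool2d(x: Matrix, size: int, stride: int, mode: str = "max") -> Matrix:
    """Summarise each size x size patch by its maximum ("max") or its average (any other mode)."""
    out_h = (len(x) - size) // stride + 1
    out_w = (len(x[0]) - size) // stride + 1
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            patch = [x[i * stride + a][j * stride + b] for a in range(size) for b in range(size)]
            row.append(max(patch) if mode == "max" else sum(patch) / len(patch))
        out.append(row)
    return out


def flatten(x: Matrix) -> List[float]:
    return [v for row in x for v in row]


def inner_product_score(features: List[float], weights: List[float], bias: float) -> float:
    """Conventional decision-layer score that IB-CNN replaces with a boosting score."""
    return sum(f * w for f, w in zip(features, weights)) + bias
```

Chaining `conv2d`, `relu`, `pool2d` and `flatten` yields the vector that the decision layer scores. In IB-CNN, that vector plays the role of the FC activations $x_i$ defined below.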

Boosting

Since boosting was first introduced in the 1990s, it has become one of the most powerful learning methods for improving model performance. A particularly effective boosting algorithm, AdaBoost, was introduced by Freund and Schapire in 1995 [11], and it has since inspired many more sophisticated boosting methods. Boosting has also been combined with CNNs for images in other ways: for counting tasks, Walach and Wolf combine CNN boosting with selective sampling and report both higher accuracy and shorter processing time [7]. The curse of dimensionality is a major bottleneck in many machine learning tasks, since evaluating a large number of features can slow down classification and reduce predictive power. When each weak learner acts on a single feature, AdaBoost selects only those features that improve the predictive power of the model, which also reduces the effective dimensionality. The essential idea of boosting is to combine a sequence of weak classifiers, through a weighted vote, into a strong one. The key point is that after each round, the boosting algorithm reweights the training observations, placing more weight on those that were misclassified. Subsequent "weak" classifiers can therefore focus on the observations that are hard to predict (Hastie, Tibshirani, & Friedman, 2017). This procedure can be summarized by the following formula:

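[math]\displaystyle{ G(x) = \operatorname{sign}\left(\sum\limits_{l=1}^L \alpha_l G_l(x)\right) }[/math] where the weights [math]\displaystyle{ \alpha_l }[/math] are computed by the boosting algorithm and [math]\displaystyle{ G_l(x) }[/math] denotes the [math]\displaystyle{ l }[/math]-th of [math]\displaystyle{ L }[/math] weak classifiers.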

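One advantage of AdaBoost is that it is often observed to be relatively resistant to overfitting. This is commonly explained by its tendency to increase the margins of the training examples, much like a maximum-margin classifier. It therefore tends to generalize well even when training data are limited.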
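A minimal sketch of discrete AdaBoost with one-dimensional decision stumps illustrates the reweighting step and the weighted vote in the formula above. This is the textbook algorithm on a single scalar feature, not the IB-CNN variant. The helper names are hypothetical.

```python
import math
from typing import List, Tuple

Stump = Tuple[float, float, int]  # (alpha, threshold, polarity)


def stump_predict(x: float, thr: float, pol: int) -> int:
    return pol if x > thr else -pol


def fit_stump(xs: List[float], ys: List[int], w: List[float]) -> Tuple[float, float, int]:
    """Return (weighted error, threshold, polarity) of the best 1-D decision stump."""
    vals = sorted(set(xs))
    thresholds = [vals[0] - 1.0] + [(a + b) / 2 for a, b in zip(vals, vals[1:])]
    best = (float("inf"), 0.0, 1)
    for thr in thresholds:
        for pol in (1, -1):
            err = sum(wi for xi, yi, wi in zip(xs, ys, w) if stump_predict(xi, thr, pol) != yi)
            if err < best[0]:
                best = (err, thr, pol)
    return best


def adaboost(xs: List[float], ys: List[int], rounds: int) -> List[Stump]:
    n = len(xs)
    w = [1.0 / n] * n  # start with uniform sample weights
    ensemble = []
    for _ in range(rounds):
        err, thr, pol = fit_stump(xs, ys, w)
        err = min(max(err, 1e-10), 1 - 1e-10)  # avoid log(0) and division by zero
        alpha = 0.5 * math.log((1 - err) / err)
        ensemble.append((alpha, thr, pol))
        # Up-weight misclassified samples, down-weight correct ones, then renormalise.
        w = [wi * math.exp(-alpha * yi * stump_predict(xi, thr, pol))
             for xi, yi, wi in zip(xs, ys, w)]
        z = sum(w)
        w = [wi / z for wi in w]
    return ensemble


def predict(ensemble: List[Stump], x: float) -> int:
    """G(x) = sign(sum_l alpha_l G_l(x)); ties are broken towards +1."""
    score = sum(alpha * stump_predict(x, thr, pol) for alpha, thr, pol in ensemble)
    return 1 if score >= 0 else -1
```

Consider `xs = [1, 2, 3, 4, 5, 6]` with labels `[1, 1, -1, -1, 1, 1]`. No single stump classifies all six points correctly, but after three rounds the weighted vote labels all of them correctly.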

Boosting CNN

Let $X = [x_1, \dots, x_M]$ denote the activation features of a training mini-batch of size $M$, where each $x_i$ has $K$ activation features. Let $Y = [y_1, \dots, y_M]$ denote the corresponding labels, with each $y_i \in \{-1, +1\}$. In other words, the vector $x_i$ contains all the activations of the FC layer, which would typically be multiplied by the weights of the connections between the FC layer and the decision layer. As mentioned earlier, however, this work performs the same scoring task via boosting instead of an inner product. Denoting the weak classifiers by $h(\cdot)$, the strong classifier is: \begin{equation} \label{boosting} H(x_i) = \sum\limits_{j = 1}^K \alpha_j h(x_{ij}; \lambda_j) \end{equation} where $x_{ij}$ is the $j^{th}$ activation feature calculated on the $i^{th}$ image, $\alpha_j \geq 0$ denotes the weight of the $j^{th}$ weak classifier, and $\sum\limits_{j=1}^K\alpha_j = 1$. Here $h(\cdot)$ is defined as a differentiable surrogate for the sign function: \begin{equation} h(x_{ij}; \lambda_j) = \dfrac {f(x_{ij}; \lambda_j)}{\sqrt{f(x_{ij}; \lambda_j)^2 + \eta^2}} \end{equation} where $f(x_{ij}; \lambda_j)$ denotes a decision stump (one-level decision tree) with threshold $\lambda_j$. The parameter $\eta$ controls the slope of $h(\cdot)$: the smaller $\eta$ is, the more closely $h(\cdot)$ approximates $\operatorname{sign}(f(\cdot))$.
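The boosting score can be sketched as follows. The summary only says that $f(\cdot)$ is a decision stump with threshold $\lambda_j$. This sketch assumes the simple form $f(x_{ij}; \lambda_j) = x_{ij} - \lambda_j$, and the function names are hypothetical.

```python
import math
from typing import Sequence


def soft_sign(f: float, eta: float) -> float:
    """Differentiable surrogate of sign(f): f / sqrt(f^2 + eta^2), with values in (-1, 1)."""
    return f / math.sqrt(f * f + eta * eta)


def weak_classifier(x_ij: float, lam_j: float, eta: float) -> float:
    """h(x_ij; lambda_j), assuming the stump output f(x_ij; lambda_j) = x_ij - lambda_j."""
    return soft_sign(x_ij - lam_j, eta)


def strong_classifier(x_i: Sequence[float], alphas: Sequence[float],
                      lambdas: Sequence[float], eta: float) -> float:
    """Boosting score H(x_i) = sum_j alpha_j * h(x_ij; lambda_j) over the K FC activations."""
    if any(a < 0 for a in alphas) or abs(sum(alphas) - 1.0) > 1e-9:
        raise ValueError("alphas must be non-negative and sum to 1")
    return sum(a * weak_classifier(x, lam, eta)
               for x, a, lam in zip(x_i, alphas, lambdas))
```

A neuron with $\alpha_j = 0$ contributes nothing to $H$. For example, `strong_classifier([3.0, 100.0], [1.0, 0.0], [0.0, 0.0], 4.0)` depends only on the first activation.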

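Although the definition of $h(\cdot)$ is inspired by decision stumps, the weak classifiers in IB-CNN are not conventional decision trees trained on fixed features. Each $h(x_{ij}; \lambda_j)$ is a soft threshold on a single FC-layer activation. That activation is produced by the CNN layers below it, which all weak classifiers share and which are learned jointly. Because neurons with zero weight are ignored, the boosted ensemble behaves like a version of the CNN with certain nodes dropped from the last fully connected layer.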

The authors set the slope parameter according to the distribution of $f(\cdot)$ as $\eta = \frac{\sigma}{c}$, where $\sigma$ is the standard deviation of $f(\cdot)$ and $c$ is a constant. This choice is examined extensively in Section 4.4 of the paper. Since $\eta$ is inversely proportional to $c$, a larger $c$ yields a closer approximation of the true $\operatorname{sign}(\cdot)$. The price is that the derivative of $h(\cdot)$ is then close to zero for most inputs away from the threshold, which slows training and convergence. A smaller $c$, in contrast, yields a smoother, less sign-like $h(\cdot)$. Empirically, values of $c$ below $0.5$ lead to noticeably different $F1$ scores, whereas for $c \in [0.5, 16]$ the $F1$ score is robust to the choice of $c$. Based on this analysis, the authors chose $c = 2$.
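To see why $c$ trades approximation quality against trainability, one can differentiate $h$ with respect to the stump output. The following short worked derivation writes $f$ for $f(x_{ij}; \lambda_j)$ and uses only the definition of $h$ above: \begin{align} \frac{\partial h}{\partial f} = \frac{\sqrt{f^2+\eta^2} - f\cdot\frac{f}{\sqrt{f^2+\eta^2}}}{f^2+\eta^2} = \frac{\eta^2}{\left(f^2+\eta^2\right)^{3/2}}, \qquad \left.\frac{\partial h}{\partial f}\right|_{f=0} = \frac{1}{\eta} = \frac{c}{\sigma}, \qquad \frac{\partial h}{\partial f} \approx \frac{\eta^2}{|f|^3} \text{ for } |f| \gg \eta. \end{align} A larger $c$ (smaller $\eta$) therefore makes $h$ steeper at the threshold and closer to $\operatorname{sign}(f)$. However, its gradient decays quickly for samples far from the threshold, so fewer samples provide a useful learning signal.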

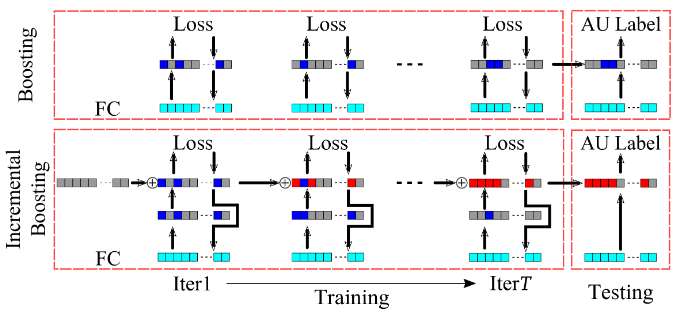

Note that if a weight $\alpha_j = 0$ in the strong classifier $H$, then the $j^{th}$ activation feature $x_{ij}$ does not contribute to the output of $H$ for any image $i$. In other words, the corresponding neuron can be considered inactive. (Refer to the figure above for the schematic diagram of B-CNN.)

Incremental Boosting

In vanilla B-CNN, the information learned from a given batch, i.e. the weights and thresholds of the active neurons, is discarded for subsequent batches. To address this issue, this work incorporates incremental learning. Formally, the incremental strong classifier at the $t^{th}$ iteration, $H_I^t$, is given as: \begin{equation} \label{incr} H_I^t(x_i^t) = \frac{(t-1)H_I^{t-1}(x_i^t) + H^t(x_i^t)}{t} \end{equation} where $H_I^{t-1}$ is the incremental strong classifier obtained at the $(t-1)^{th}$ iteration and $H^t$ is the boosted strong classifier at the $t^{th}$ iteration. Substituting the boosted form of $H^t$, and expressing $H_I^{t-1}$ through the current weak classifiers $h^t$, we obtain: \begin{equation} H_I^t(x_i^t) = \sum\limits_{j=1}^K \alpha_j^t h^t(x_{ij}^t; \lambda_j^t);\quad \alpha_j^t = \frac{(t-1)\alpha_j^{t-1}+\hat{\alpha}_j^t}{t} \end{equation} where $\hat{\alpha}_j^t$ is the weight of the $j^{th}$ weak classifier calculated by boosting in the $t^{th}$ iteration, and $\alpha_j^t$ is the cumulative weight that accounts for previous iterations. (Refer to the Figure for a schematic diagram of IB-CNN.) Typically, boosting algorithms minimize an objective function that captures the loss of the strong classifier. However, this loss may be dominated by a few weak classifiers with large weights, which can lead to overfitting. To exercise better control, the loss at iteration $t$, $\epsilon^{IB}$, is therefore expressed as a convex combination (with $0 \leq \beta \leq 1$) of the loss of the incremental strong classifier and the loss of the active weak classifiers at iteration $t$: \begin{equation} \epsilon^{IB} = \beta\epsilon_{strong}^{IB} + (1-\beta)\epsilon_{weak} \end{equation} where \begin{equation} \epsilon_{strong}^{IB} = \frac{1}{M}\sum\limits_{i=1}^M\left[H_I^t(x_i^t)-y_i^t\right]^2;\quad \epsilon_{weak} = \frac{1}{MN}\sum\limits_{i=1}^M\sum\limits_{\substack{1 \leq j \leq K \\ \alpha_j^t > 0}}\left[h^t(x_{ij}^t; \lambda_j^t)-y_i^t\right]^2 \end{equation} and $N$ is the number of active weak classifiers, i.e. those with $\alpha_j^t > 0$.
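The incremental weight update and the combined loss can be sketched in plain Python. Under the update rule above, $\alpha_j^t$ is simply the running mean of $\hat{\alpha}_j^1, \dots, \hat{\alpha}_j^t$. The sketch makes three assumptions: the weak-classifier outputs and $H_I^t$ have already been computed for the mini-batch, a weak classifier counts as active when its cumulative weight is positive, and the function names are hypothetical.

```python
from typing import List, Sequence


def update_alpha(prev_alpha: Sequence[float], batch_alpha: Sequence[float], t: int) -> List[float]:
    """alpha_j^t = ((t-1) * alpha_j^{t-1} + hat_alpha_j^t) / t, i.e. a running mean over batches."""
    return [((t - 1) * a_prev + a_hat) / t for a_prev, a_hat in zip(prev_alpha, batch_alpha)]


def ib_loss(H_I: Sequence[float], h: Sequence[Sequence[float]], y: Sequence[int],
            alpha: Sequence[float], beta: float) -> float:
    """epsilon^IB = beta * eps_strong + (1 - beta) * eps_weak for one mini-batch.

    H_I   : M incremental strong-classifier outputs H_I^t(x_i^t)
    h     : M x K weak-classifier outputs h^t(x_ij^t; lambda_j^t)
    y     : M labels in {-1, +1}
    alpha : K cumulative weights alpha_j^t; only alpha_j^t > 0 counts as active
    """
    M = len(y)
    active = [j for j, a in enumerate(alpha) if a > 0]
    N = len(active)  # number of active weak classifiers
    eps_strong = sum((H_I[i] - y[i]) ** 2 for i in range(M)) / M
    eps_weak = (sum((h[i][j] - y[i]) ** 2 for i in range(M) for j in active) / (M * N)) if N else 0.0
    return beta * eps_strong + (1 - beta) * eps_weak
```

Because $H_I^t$ is linear in the weights, averaging the weights gives the same result as averaging the strong classifiers. The weak-classifier outputs of inactive neurons are ignored by the loss.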

To learn the parameters of the IB-CNN, stochastic gradient descent is used. The descent directions are obtained by differentiating the loss above with respect to the activation features $x_{ij}^t$, whose gradients are then backpropagated to the lower layers, and with respect to the thresholds $\lambda_j^t$: \begin{align} \dfrac{\partial \epsilon^{IB}}{\partial x_{ij}^t} &= \beta\dfrac{\partial \epsilon_{strong}^{IB}}{\partial H_I^t(x_i^t)}\dfrac{\partial H_I^t(x_i^t)}{\partial x_{ij}^t} + (1-\beta)\dfrac{\partial \epsilon_{weak}}{\partial h^t(x_{ij}^t;\lambda_j^t)}\dfrac{\partial h^t(x_{ij}^t;\lambda_j^t)}{\partial x_{ij}^t} \\ \dfrac{\partial \epsilon^{IB}}{\partial \lambda_j^t} &= \beta\sum\limits_{i = 1}^M\dfrac{\partial \epsilon_{strong}^{IB}}{\partial H_I^t(x_i^t)}\dfrac{\partial H_I^t(x_i^t)}{\partial \lambda_j^t} + (1-\beta)\sum\limits_{i = 1}^M\dfrac{\partial \epsilon_{weak}}{\partial h^t(x_{ij}^t;\lambda_j^t)}\dfrac{\partial h^t(x_{ij}^t;\lambda_j^t)}{\partial \lambda_j^t} \end{align} where $\frac{\partial \epsilon^{IB}}{\partial x_{ij}^t}$ and $\frac{\partial \epsilon^{IB}}{\partial \lambda_j^t}$ only need to be calculated for the active neurons. For an inactive neuron, $\alpha_j^t = 0$ and its weak classifier is excluded from $\epsilon_{weak}$, so both gradients vanish. Overall, the pseudocode for the incremental boosting algorithm for the IB-CNN is as follows.
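The gradient expressions can be checked numerically. This sketch assumes $f(x_{ij}; \lambda_j) = x_{ij} - \lambda_j$, so that $\partial h/\partial x_{ij} = \eta^2/(f^2+\eta^2)^{3/2}$ and $\partial h/\partial \lambda_j = -\partial h/\partial x_{ij}$. It computes the loss for a single mini-batch with the cumulative weights held fixed, and the names are hypothetical. Comparing `ib_grads` with central finite differences of `ib_loss` confirms the chain-rule terms, including the zero gradient for inactive neurons.

```python
import math
from typing import List, Tuple


def h(x: float, lam: float, eta: float) -> float:
    f = x - lam  # assumed stump output f(x; lambda) = x - lambda
    return f / math.sqrt(f * f + eta * eta)


def dh_df(x: float, lam: float, eta: float) -> float:
    f = x - lam
    return eta * eta / (f * f + eta * eta) ** 1.5


def ib_loss(X, y, alpha, lam, eta, beta) -> float:
    """epsilon^IB for one mini-batch with fixed cumulative weights alpha."""
    M, K = len(X), len(X[0])
    active = [j for j in range(K) if alpha[j] > 0]
    strong = weak = 0.0
    for i in range(M):
        H = sum(alpha[j] * h(X[i][j], lam[j], eta) for j in range(K))
        strong += (H - y[i]) ** 2
        weak += sum((h(X[i][j], lam[j], eta) - y[i]) ** 2 for j in active)
    return beta * strong / M + (1 - beta) * weak / (M * len(active))


def ib_grads(X, y, alpha, lam, eta, beta) -> Tuple[List[List[float]], List[float]]:
    """Analytic d(loss)/d(x_ij) and d(loss)/d(lambda_j); zero for inactive neurons."""
    M, K = len(X), len(X[0])
    N = sum(1 for a in alpha if a > 0)
    g_x = [[0.0] * K for _ in range(M)]
    g_lam = [0.0] * K
    for i in range(M):
        H = sum(alpha[j] * h(X[i][j], lam[j], eta) for j in range(K))
        for j in range(K):
            if alpha[j] <= 0:
                continue  # inactive neuron: no contribution to either loss term
            d = dh_df(X[i][j], lam[j], eta)
            g = (beta * 2 * (H - y[i]) / M * alpha[j] * d  # strong-classifier term
                 + (1 - beta) * 2 * (h(X[i][j], lam[j], eta) - y[i]) / (M * N) * d)  # weak term
            g_x[i][j] = g
            g_lam[j] -= g  # chain rule with df/dlambda = -1, summed over the batch
    return g_x, g_lam
```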

Input: The number of iterations (mini-batches) $T$ and activation features $X$ of size $M \times K$, where $M$ is the number of images in a mini-batch and $K$ is the dimension of the activation feature vector of one image.

$\quad$ 1: for each input activation $j$ from $1$ to $K$ do

$\quad$ 2: $\quad \alpha_j^1 = 0$

$\quad$ 3: end for

$\quad$ 4: for each mini-batch $t$ from $1$ to $T$ do

$\quad$ 5: $\quad$ Feed-forward to the fully connected layer;

$\quad$ 6: $\quad$ Select active features by boosting and calculate their weights $\hat{\alpha}^t$ using standard AdaBoost;

$\quad$ 7: $\quad$ Update the incremental strong classifier;

$\quad$ 8: $\quad$ Calculate the overall loss of IB-CNN;

$\quad$ 9: $\quad$ Backpropagate the loss using the gradient equations above;

$\quad$ 10: $\quad$ Continue backpropagation to lower layers;

$\quad$ 11: end for
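The loop above can be sketched end to end on toy data under several stated assumptions. First, the mini-batch activations `X` are given, standing in for a frozen network, so steps 5 and 10 are skipped. Second, step 6 is assumed to run a few rounds of discrete AdaBoost over the $K$ hard stumps $\operatorname{sign}(x_{ij} - \lambda_j)$ and to normalize the resulting weights to sum to one. Third, $f(x_{ij}; \lambda_j) = x_{ij} - \lambda_j$. Fourth, only the thresholds $\lambda_j$ are updated by SGD. All names and the synthetic data generator are hypothetical.

```python
import math
import random
from typing import List, Sequence, Tuple

Batch = Tuple[List[List[float]], List[int]]  # (M x K activations, M labels in {-1, +1})


def soft_sign(f: float, eta: float) -> float:
    return f / math.sqrt(f * f + eta * eta)


def boost_weights(X: List[List[float]], y: List[int], lam: List[float], rounds: int) -> List[float]:
    """Assumed step 6: discrete AdaBoost over the K hard stumps sign(x_ij - lam_j).
    Returns hat_alpha >= 0 normalised to sum to 1; features never selected get 0."""
    M, K = len(X), len(X[0])
    w = [1.0 / M] * M
    alpha_hat = [0.0] * K
    preds = [[1 if X[i][j] > lam[j] else -1 for i in range(M)] for j in range(K)]
    for _ in range(rounds):
        errs = [sum(w[i] for i in range(M) if preds[j][i] != y[i]) for j in range(K)]
        best = min(range(K), key=lambda k: errs[k])
        err = min(max(errs[best], 1e-10), 1 - 1e-10)
        if err >= 0.5:
            break  # no stump beats chance; stop so every weight stays >= 0
        a = 0.5 * math.log((1 - err) / err)
        alpha_hat[best] += a
        w = [w[i] * math.exp(-a * y[i] * preds[best][i]) for i in range(M)]
        z = sum(w)
        w = [wi / z for wi in w]
    total = sum(alpha_hat)
    return [a / total for a in alpha_hat] if total > 0 else alpha_hat


def train_ib(batches: Sequence[Batch], eta: float = 1.0, beta: float = 0.5,
             lr: float = 0.01, rounds: int = 3) -> Tuple[List[float], List[float]]:
    K = len(batches[0][0][0])
    alpha = [0.0] * K  # steps 1-3: cumulative weights start at zero
    lam = [0.0] * K    # stump thresholds lambda_j (assumed initial value)
    for t, (X, y) in enumerate(batches, start=1):  # step 4
        M = len(X)
        alpha_hat = boost_weights(X, y, lam, rounds)                          # step 6
        alpha = [((t - 1) * a + ah) / t for a, ah in zip(alpha, alpha_hat)]  # step 7
        active = [j for j in range(K) if alpha[j] > 0]
        N = max(len(active), 1)
        grad = [0.0] * K
        for i in range(M):  # steps 8-9: gradient of epsilon^IB w.r.t. lambda
            H = sum(alpha[j] * soft_sign(X[i][j] - lam[j], eta) for j in active)
            for j in active:
                f = X[i][j] - lam[j]
                d = eta * eta / (f * f + eta * eta) ** 1.5  # dh/df
                g = (beta * 2 * (H - y[i]) / M * alpha[j] * d
                     + (1 - beta) * 2 * (soft_sign(f, eta) - y[i]) / (M * N) * d)
                grad[j] -= g  # df/dlambda = -1
        lam = [lj - lr * gj for lj, gj in zip(lam, grad)]  # SGD step on the thresholds
    return alpha, lam


def predict(x: Sequence[float], alpha: Sequence[float], lam: Sequence[float], eta: float = 1.0) -> int:
    H = sum(a * soft_sign(xj - lj, eta) for xj, a, lj in zip(x, alpha, lam))
    return 1 if H >= 0 else -1


def make_batches(T: int, M: int, seed: int = 0) -> List[Batch]:
    """Hypothetical toy data: feature 0 = label + noise (informative), feature 1 = pure noise."""
    rng = random.Random(seed)
    batches = []
    for _ in range(T):
        y = [rng.choice((-1, 1)) for _ in range(M)]
        X = [[yi + rng.uniform(-0.5, 0.5), rng.uniform(-1.0, 1.0)] for yi in y]
        batches.append((X, y))
    return batches
```

On this synthetic data, feature 0 separates the classes and feature 1 is noise. Boosting therefore selects only feature 0 in every batch: its cumulative weight stays at 1, and the noise feature remains inactive.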

Experiments

Experiments have been conducted on four AU-coded databases whose details are as follows.

1. CK database: contains 486 image sequences from 97 subjects, and 14 AUs.

2. FERA2015 SEMAINE database: contains 31 subjects with 93,000 images and 6 AUs.

3. FERA2015 BP4D database: contains 41 subjects with 146,847 images and 11 AUs.

4. DISFA database: contains 27 subjects with 130,814 images and 12 AUs.

Data Pre-Processing

The images are first preprocessed to scale and align the face regions and to compensate for out-of-plane rotations. Face regions are aligned based on three fiducial points (the centers of the two eyes and the mouth) and scaled to 128 × 96 pixels. Face images are warped to a frontal view based on landmarks: a total of 23 facial landmarks that are less affected by facial movements serve as control points for the warping.

Additionally, each image is normalized using the mean and standard deviation computed over a short video clip (800 frames at 30 frames per second). This temporal normalization is intended to reduce "identity related information and highlight appearance and geometrical changes due to AU activation." It improves the model's ability to generalize by removing variation that is specific to individual subjects.
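Here is a minimal sketch of this clip-level normalization. It assumes per-pixel statistics over non-overlapping 800-frame clips of aligned, flattened face images. The summary does not specify whether the statistics are per pixel or per image, nor how clips are formed. `eps` is an added guard against division by zero, and the names are hypothetical.

```python
import math
from typing import List

Frame = List[float]  # a flattened, aligned face image


def split_into_clips(frames: List[Frame], clip_len: int = 800) -> List[List[Frame]]:
    """Cut a video into consecutive, non-overlapping clips (the last one may be shorter)."""
    return [frames[s:s + clip_len] for s in range(0, len(frames), clip_len)]


def normalize_clip(clip: List[Frame], eps: float = 1e-8) -> List[Frame]:
    """Per-pixel z-score: subtract the clip mean and divide by the clip standard deviation."""
    T, P = len(clip), len(clip[0])
    mean = [sum(f[p] for f in clip) / T for p in range(P)]
    std = [math.sqrt(sum((f[p] - mean[p]) ** 2 for f in clip) / T) for p in range(P)]
    return [[(f[p] - mean[p]) / (std[p] + eps) for p in range(P)] for f in clip]


def normalize_video(frames: List[Frame], clip_len: int = 800) -> List[Frame]:
    return [f for clip in split_into_clips(frames, clip_len) for f in normalize_clip(clip)]
```

Pixels that stay constant over a clip, such as those dominated by a subject's static appearance, map to zero. Pixels that change, for example because of AU activation, keep a non-zero signal.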

IB-CNN Architecture

The IB-CNN architecture is as follows. The authors built the network by modifying a CNN model originally designed for the CIFAR-10 dataset (the original Caffe implementation is cifar10_quick). The first two layers are convolutional layers, each with 32 filters of size 5 × 5 and a stride of 1. Their activation maps are passed through a rectified linear (ReLU) layer, followed by an average pooling layer with a stride of 3. Next comes a third convolutional layer with 64 filters of size 5 × 5, whose activation maps are fed into an FC layer with 128 nodes. The FC layer, in turn, feeds into the decision layer via the boosting mechanism.

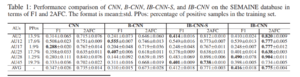

The following table compares the performance of the IB-CNN with other state-of-the-art methods that include CNN-based methods.

Lastly, the paper provides brief arguments supporting the robustness of IB-CNN to variations in the slope parameter $\eta$, the number of input neurons, and the learning rate $\gamma$. A smaller $\eta$ approximates the sign function more accurately and thus strengthens the weak classifier $h(x_{ij}; \lambda_j)$, but slows down the convergence of the learning process, so $\eta$ should balance these two effects. The authors set $\eta = \sigma / 2$. As for the learning rate $\gamma$, the authors show via F1 scores that IB-CNN is less sensitive to $\gamma$ than a traditional CNN.

Conclusion

To deal with the relatively small size of datasets in facial AU recognition, the authors incorporate boosting and incremental learning into CNNs. Boosting helps the model generalize well by preventing overfitting. Incremental learning exploits information from all mini-batches by retaining what was learned from earlier ones instead of discarding it. Moreover, the loss function is modified to give more control over fine-tuning the model. The proposed IB-CNN improves over existing methods on four standard databases, with a pronounced improvement in recognizing infrequent AUs.

There are two immediate extensions to this work. First, IB-CNN may be applied to other problems in which data are limited. Second, the model may be modified to go beyond binary classification and achieve multiclass boosted classification. A related consideration concerns the sign of the weak-classifier weights. In discrete AdaBoost, the weight of a weak classifier with weighted error $\epsilon$ is $\alpha = \frac{1}{2}\ln\frac{1-\epsilon}{\epsilon}$. This weight becomes negative when $\epsilon > 0.5$, and a negative weight means "do exactly the opposite of what this classifier says". In IB-CNN, the authors restrict the boosting weights of the inputs (the FC units) to be $\geq 0$. Since IB-CNN is intended for settings where some classes have few training examples, allowing negative weights could help the CNN identify FC-layer activation units that consistently contribute to wrong predictions. Their consistency could then be exploited to make correct predictions. Under the AdaBoost weight formula, $\alpha = 0$ exactly when $\epsilon = 0.5$. The classifiers that should be considered inactive are therefore those that cannot be relied upon in either direction, i.e. those that produce a nearly equal share of correct and incorrect predictions.
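The standard discrete AdaBoost formula [11] makes the link between a weak classifier's error and its weight explicit. This small sketch uses a hypothetical function name. It shows that $\alpha$ is positive below an error of $0.5$, zero at $0.5$, and negative above it, with $\alpha(1-\epsilon) = -\alpha(\epsilon)$. This symmetry is exactly the "do the opposite" interpretation.

```python
import math


def adaboost_alpha(err: float) -> float:
    """Discrete AdaBoost weight 0.5 * ln((1 - err) / err) for a weighted error err in (0, 1)."""
    if not 0.0 < err < 1.0:
        raise ValueError("err must lie strictly between 0 and 1")
    return 0.5 * math.log((1.0 - err) / err)


if __name__ == "__main__":
    for err in (0.1, 0.3, 0.5, 0.7, 0.9):
        # Flipping a classifier with error err gives error 1 - err and weight -alpha.
        print(f"err={err:.1f}  alpha={adaboost_alpha(err):+.3f}")
```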

Another possible direction is to explore whether gradient boosting could be used in place of AdaBoost.

Criticism

The authors claim that the model both produces more complex decision boundaries and avoids overfitting. This seems contradictory, since overfitting essentially amounts to an overly complex decision boundary. The authors also motivate the incremental strategy by the need to retain information across mini-batches, but do not provide any comparison with non-batched strategies.

References

[1] Han, S., Meng, Z., Khan, A. S., & Tong, Y. (2016). Incremental Boosting Convolutional Neural Network for Facial Action Unit Recognition. Advances in Neural Information Processing Systems (NIPS).

[2] Tian, Y., Kanade, T., & Cohn, J. F. (2001). Recognizing Action Units for Facial Expression Analysis. IEEE Transactions on Pattern Analysis and Machine Intelligence, 23(2).

[3] Facial Action Coding System. Wikipedia. https://en.wikipedia.org/wiki/Facial_Action_Coding_System

[4] YouTube explanation of the paper: https://www.youtube.com/watch?v=LZer5dW8h5c

[5] Srivastava, N., Hinton, G. E., Krizhevsky, A., Sutskever, I., & Salakhutdinov, R. (2014). Dropout: A simple way to prevent neural networks from overfitting. Journal of Machine Learning Research, 15(1), 1929-1958.

[6] Tian, Y., Kanade, T., & Cohn, J. F. (2001). Recognizing Action Units for Facial Expression Analysis. IEEE Transactions on Pattern Analysis and Machine Intelligence, 23(2), 97-115. doi:10.1109/34.908962.

[7] Walach, E., & Wolf, L. (2016). Learning to Count with CNN Boosting. European Conference on Computer Vision (ECCV). The Blavatnik School of Computer Science, Tel Aviv University.

[8] Schapire, R. E. (1999). A Brief Introduction to Boosting. Proceedings of the Sixteenth International Joint Conference on Artificial Intelligence (IJCAI).

[9] Tong, Y., Liao, W., & Ji, Q. (2007). Facial action unit recognition by exploiting their dynamic and semantic relationships. IEEE Transactions on Pattern Analysis and Machine Intelligence, 29(10).

[10] Moghimi, M., et al. (2016). Boosted Convolutional Neural Networks. British Machine Vision Conference (BMVC). http://www.bmva.org/bmvc/2016/papers/paper024/paper024.pdf

[11] Freund, Y., & Schapire, R. E. (1997). A decision-theoretic generalization of on-line learning and an application to boosting. Journal of Computer and System Sciences, 55(1), 119-139.