a Direct Formulation For Sparse PCA Using Semidefinite Programming

Introduction

Principle Component Analysis is a popular technique for dimensionality reduction. It has been effectively and widely used in the areas of data analysis, data compression, and data visualization, and it is applicable in problems throughout science and engineering. For a given set of data points, PCA finds a linear combination of a subset of the variables that are uncorrelated with each other; these variables are called principal components, and they correspond to the directions of maximal variance in the data. The principal components can be representative of the whole data with minimum information loss. PCA commonly uses singular value decomposition (SVD) to obtain the principal components of a data set. Not only is PCA useful as a tool for dimensionality reduction, it also makes subsequent interpretations and analysis of the resulting data much simpler to do, because the variables (principal components) in the resulting data are all uncorrelated with each other. Though PCA has been found to be a very useful technique in many areas, it has a major disadvantage in that each principal component is usually a linear combination of every variable in the data, i.e. usually every weight (loading) in each principal component's linear combination of variables is non-zero. This disadvantage makes PCA difficult to work with in certain areas; especially, in areas where each variable (axis) has a physical meaning. One such area is gene analysis, where each variable (axis) might represent a specific gene. When PCA is applied data of this type, the interpretation of the principal components can be made much easier if each principal component is a linear combination of only a few variables. Furthermore, this disadvantage of PCA, makes it inefficient for financial asset trading data, in that, for financial asset trading strategies based on principal component techniques, fewer non-zero loadings directly leads to fewer transaction costs. This disadvantage of PCA is what led to the formulation of sparse PCA, whose aim is to find the sparse principal components. Sparse principal components, like principal components, are vectors that span a lower-dimensional space that explain most of variance in the original data. However, in order to find the sparse principal components using sparse PCA, it is necessary to make some sacrifices such as:

- There is a reduction in the explained variance in the original data captured by the sparse principal components as compared to PCA.

- There is a reduction in the orthogonality (independence or correlation) between the resulting variables (sparse principal components) as compared to PCA.

Semidefinite programs<ref name="VB1996">L. Vandenberghe and S. Boyd, Semidefinite programming, SIAM Review, 38 (1996), pp. 49–

95.</ref> are convex optimization problems. They optimize a linear function by constraining it to an affine combination of symmetric matrices which are positive semidefinite. Semidefinite programs can be solved in polynomial time by interior-point solvers like SEDUMI or SDPT3. Unfortunately, they are not viable nor practical for high dimensional data sets.

This paper suggests a direct approach to the convex formulation of the sparse PCA via semidefinite programming. Since interior-point solvers cannot handle large data sets, Nesterov’s smoothing technique <ref name='N2004'>Smoothing technique and its application in semidefinite optimization, CORE Discussion Paper No. 2004/73, (2004).</ref> is used efficiently to help solving large dimensional problems. First order methods require less memory which is an important issue in interior-point solvers. On the other hand, their convergence is slower but this is not a major concern in certain cases. So, the optimal first-order minimization algorithm is going to be applied for solving the optimization problem.

The content of the paper could be summarized as below:

First, the authors tried to maximize the variance projection by limiting the number of non-zero elements via semidefinite programming. Then, they show how this method can be used for decomposing a matrix into a limited number of variables. As their problem size is large and can not be solved by common techniques which are used for solving small convex optimization problems, they show how Nesterov's smoothing method <ref name="N2004"/> helps achieving the solution.

Semidefinite relaxation

Sparse variance maximization

Let [math]\displaystyle{ \textbf{S}^n }[/math] be the set of symmetric matrices of size [math]\displaystyle{ \, n }[/math]. Let [math]\displaystyle{ A \in \textbf{S}^n }[/math], then WLOG, assume [math]\displaystyle{ \,A }[/math] is a covariance matrix. The, we want to find the vector [math]\displaystyle{ x \in \textbf{R}^n }[/math] with maximum variance while constraining it to be sparse. Let [math]\displaystyle{ \, k }[/math] be an integer where [math]\displaystyle{ 1 \leq k \leq n }[/math] then the problem can be written formally as

[math]\displaystyle{ \textrm{ subject } \; \textrm{ to }\;\|x\|_2=1, }[/math]

Unfortunately, this problem is NP-hard, because of the cardinality constraint. Therefore, in order to solve the problem, we should look for a convex relaxation.

Semidefinite relaxation

Rewriting the above formulas, we get:

[math]\displaystyle{ \textrm{ subject } \; \textrm{ to } \; \textbf{Tr}({X})=1 }[/math],

[math]\displaystyle{ \textbf{Card}({X})\leq k^2, }[/math]

[math]\displaystyle{ {X}\succeq 0, }[/math]

where [math]\displaystyle{ X \in \textbf{S}^n. }[/math]

If [math]\displaystyle{ \, X }[/math] is a solution to the above problem then the last two constraints imply that [math]\displaystyle{ \,X=xx^T }[/math]. Finally, by replacing the only non-convex constraint,

[math]\displaystyle{ \textbf{Card}({X})\leq k^2 }[/math], by a weaker but convex one, we get a semidefinite relaxation of the sparse PCA problem for square matrices.

[math]\displaystyle{ \textrm{ subject } \; \textrm{ to } \; \textbf{Tr}({X})=1, }[/math]

[math]\displaystyle{ \textbf{1}^{T}|{X}|\textbf{1}\leq k, }[/math]

Note that if [math]\displaystyle{ \,X=xx^T }[/math] then [math]\displaystyle{ \textbf{1}^{T}|{X}|\textbf{1}\leq k }[/math] is equivalent to the constraint [math]\displaystyle{ \,(\sum|x_i|)^2 \leq k }[/math] or [math]\displaystyle{ \,\|x\|^2_1 \leq k }[/math].

The above formulation can be extended to the case of non-square matrices. In this case, the sparse variance maximization problem can be written as follows:

[math]\displaystyle{ \textrm{ subject } \; \textrm{ to } \; \|u\|_{2}=\|v\|_{2}=1, }[/math]

Changing the constraint to the convex one, in the same way as we did for the square case, gives the following formulation:

[math]\displaystyle{ \textrm{ subject } \; \textrm{ to } \; {X}\leq0, \textbf{Tr}({X}^{ii})=1, }[/math]

[math]\displaystyle{ \textbf{1}^{T}|{X}^{ii}|\textbf{1}\leq k_{i}, i=1,2, }[/math]

Sparse decomposition

To obtain the sparse equivalent to PCA we begin by solving the semidefinite relaxation problem. So, given the matrix [math]\displaystyle{ A_1 \in \textbf{S}^n }[/math], we solve

[math]\displaystyle{ \textrm{subject} \; \textrm{to} \;\textbf{Tr}({X})=1, }[/math]

[math]\displaystyle{ \textbf{1}^{T}|{X}|\textbf{1}\leq k, }[/math]

[math]\displaystyle{ {X}\succeq 0. }[/math]From the solution, [math]\displaystyle{ \,X }[/math], we keep only the dominant eigenvector [math]\displaystyle{ \,x_1 }[/math]. Then [math]\displaystyle{ \,x_1 }[/math] is the first sparse loading vector in our decomposition. To obtain the next component define

We can then use [math]\displaystyle{ \,A_2 }[/math] as our new data matrix and repeat the optimization problem. We can continue this process iteratively to obtain more components. But the issue is when should we stop?

When the values in [math]\displaystyle{ \,A_i }[/math] are all less than a constant [math]\displaystyle{ \,\rho }[/math], we must stop. [math]\displaystyle{ \,\rho }[/math] can be interpreted as the noise level and when the matrix can not be distinguished from noise, we should terminate the iterations.

Algorithm

SEDUMI and SDPT3 can be used to solve small semidefinite programming problems. But for larger problems, these methods are not practical since the second order information, necessary in interior-point methods, is impossible to store once the problem grows to a particular size. In this case, first order methods are more appropriate. These methods have a smaller memory cost per iteration but their convergence time is [math]\displaystyle{ O(1/\epsilon) }[/math] while interior-point methods will converge in [math]\displaystyle{ O(log(1/\epsilon)) }[/math]. Fortunately, convergence time is not important for our applications.

Smoothing technique

There are two important issues to consider when solving large scale semidefinite programming problems. First, we must consider the memory restrictions. To solve this problem we 'll consider first-order techniques. The other issue is smoothness. As [math]\displaystyle{ X\succeq 0 }[/math] is not smooth, the number of iterations required for first-order methods to reach an accuracy of [math]\displaystyle{ \epsilon }[/math] is given by [math]\displaystyle{ O(1/\epsilon ^2) }[/math]. This bound is tight without any further structural information about the structure. Since we have additional information available this complexity bound will be brought down to [math]\displaystyle{ O(1/\epsilon) }[/math].

As we have in the previous sections the optimization problem is:

[math]\displaystyle{ \textrm{subject} \; \textrm{to} \; \|x\|_2=1, }[/math]

[math]\displaystyle{ \textbf{Card}(x)\leq k. }[/math]We can rewrite the optimization problem by replacing the cardinality constraint with a penalty term in the objective function:

[math]\displaystyle{ \textrm{subject} \; \textrm{to} \; \|x\|_2=1 }[/math]

which is equivalent to:

[math]\displaystyle{ \textrm{subject} \; \textrm{to} \; \textbf{Tr}({X})=1, }[/math]

[math]\displaystyle{ {X}\succeq 0, }[/math]

[math]\displaystyle{ \textbf{Rank}({X})=1. }[/math]By changing the problem to a semidefinite programming problem have:

[math]\displaystyle{ \textrm{subject} \; \textrm{to} \; \textbf{Tr}({X})=1, }[/math]

[math]\displaystyle{ {X}\succeq 0, }[/math]which we can rewrite as:

which can be written in saddle-function format as:

where [math]\displaystyle{ \,f }[/math] is defined over a compact convex set [math]\displaystyle{ \,Q_1 }[/math] and [math]\displaystyle{ \,\hat{f} }[/math] is convex and differentiable. The function [math]\displaystyle{ \hat{\phi} (U) }[/math] is a continuous convex function over [math]\displaystyle{ \,Q_2 }[/math]. When a function can be written in a saddle-function format, then http://en.wikipedia.org/wiki/Smooth_function smoothing] techniques will help reduce the complexity of solving

from [math]\displaystyle{ O(1/\epsilon ^2) }[/math] to [math]\displaystyle{ O(1/\epsilon) }[/math].

This process can be done in two steps.

- Regularization: Regularization adds a strongly convex penalty to the saddle function and then estimate its [math]\displaystyle{ \epsilon- }[/math]approximation of [math]\displaystyle{ f }[/math] with Lipschitz continuous gradient.

- Optimal first-order minimization: It applies the optimal first-order scheme for Lipschitz continuous functions.

Application of sparse PCA

We consider the problem which is discussed in the previous section:

[math]\displaystyle{ \textrm{subject} \; \textrm{to} \; \textbf{Tr}({X})=1, }[/math]

[math]\displaystyle{ {X}\succeq 0, }[/math]where [math]\displaystyle{ \,\rho }[/math] is assumed to be one without loss of generality. Duality allows us to rewrite this in saddle-function format:

where

and

Exactly as in Nesterov's <ref name='N2004'/> paper, we define the Frobenius norm and prox-functions as follows:

where [math]\displaystyle{ U \in Q_1 }[/math] and [math]\displaystyle{ \,U_0 }[/math] is its center which satisfies [math]\displaystyle{ \,d_1(U_0)=0 }[/math] and is defined as

In addition we have:

The following can be concluded for [math]\displaystyle{ Q_2 }[/math] as well:

Next we estimate the norm [math]\displaystyle{ (1,2) }[/math] norm of operator [math]\displaystyle{ \,T }[/math], which is defined as:

[math]\displaystyle{ =\max_X\|X\|_2\;:\;\|X\|_F\leq 1 }[/math]

[math]\displaystyle{ \,=1. }[/math]So the parameter are set to the following values:

Regularization

As we discussed earlier and as is discussed in Nesterov's <ref name="N2004"/> paper, the regularization parameter which is [math]\displaystyle{ \,\mu }[/math],

changes the non-smooth function [math]\displaystyle{ \,f(X) }[/math] of the original problem to

where [math]\displaystyle{ \,f_{\mu} }[/math] is the penalized function with prox-function [math]\displaystyle{ d_2 }[/math]:

Now [math]\displaystyle{ \,f_\mu }[/math] is a smooth approximation of [math]\displaystyle{ \,f }[/math] on [math]\displaystyle{ \,Q_2 }[/math] and also [math]\displaystyle{ \,f_\mu }[/math] is a Lipschitz-continuous function with constant Lipshictz constant, [math]\displaystyle{ \,L }[/math], which is:

In our problem, the function can be computed explicitly as:

First order minimization

The input of this section is the smooth convex function [math]\displaystyle{ \,f_\mu }[/math] which is defined and computed in the previous section. Now the algorithm proceeds as follows <ref name="book2003">Introductory Lectures on Convex Optimization, Springer, 2003.</ref>:

Repeat

- Compute [math]\displaystyle{ \,f_\mu(U_k) }[/math] and [math]\displaystyle{ \,\nabla f_\mu (U_k). }[/math]

- Find [math]\displaystyle{ Y_k={\arg\min}_{Y\in Q_1}\lt \nabla f_\mu (U), Y\gt +\frac{1}{2}L\|U-Y\|_F^2. }[/math]

- Find [math]\displaystyle{ Z_k={\arg\min}_X\{\frac{L}{\sigma _1}d_1 (U)+\sum_{i=0}^{k}\frac{i+1}{2}\lt \nabla f_\mu (U_i), U-U_i\gt : U \in Q_1\}. }[/math]

- set [math]\displaystyle{ U_k= \frac{2}{k+3}Z_k+\frac{k+1}{k+3}Y_k. }[/math]

Until [math]\displaystyle{ \textrm{gap} \; \leq \epsilon }[/math]

The first step calculates the smooth function and its gradient. The second step estimates the gradient mapping and in the third and forth steps the sequence of [math]\displaystyle{ \,f_\mu }[/math] whose minimum could be calculated explicitly will be updated. For more details about these steps you can see <ref name='N1983'>Y. Nesterov, A method of solving a convex programming problem with convergence rate O(1/k2), Soviet Mathematics Doklady, 27 (1983), pp. 372–376</ref> and <ref name="N2003">Smoothing technique and its application in semidefinite optimization, CORE Discussion Paper No. 2004/73, (2004).</ref>.

An Experiment Comparing SPCA To PCA

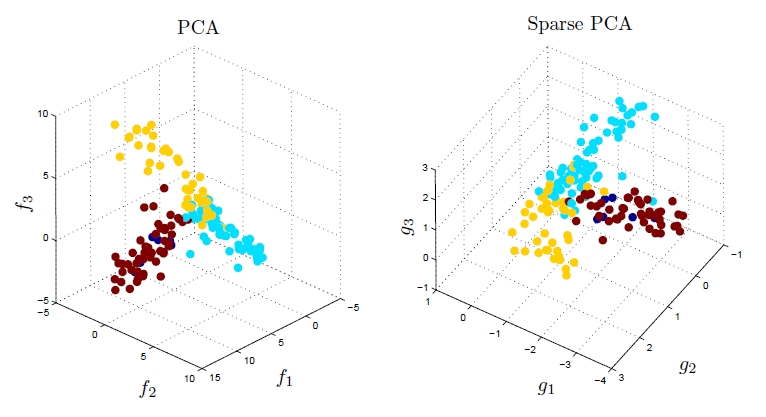

As mentioned in the Introduction section, in the area of gene expression analysis, PCA does not appear to be very suitable because the weights (loadings) of the resulting principal components are dense, i.e. the vast majority of them are non-zero; this makes subsequent interpretation and analysis difficult, because one could hardly tell which genes are highly expressed and which are not. The authors of the original paper of sparse PCA (a link to which can be found here) conducted an experiment by comparing what they referred to as DSPCA (Direct Approach Sparse PCA) to PCA on gene expression data. Using both PCA and DSPCA, the authors reduced the dimensionality of the gene data to only three, and then they looked for distinct clusters in the resulting low-dimensionality data using a simple assignment of color coding to each labeled data point that represents its known class. As expected by the authors, the sparsity in the weights (loadings) of the sparse principal components found by DSPCA made interpretation of the lower-dimensional gene expression data much easier as compared to PCA. According to the authors, there were only 14 nonzero gene coefficients (weights or loadings of the sparse principal components) in the case where DSPCA was used, whereas the nonzero gene coefficients (weights or loadings of the principal components) in the case where PCA was used were dense and they numbered about 1,500.

Fig. 1 (taken from <ref name="R6">Alexandre d’Aspremont, Laurent El Ghaoui, Michael I. Jordan, and Gert R. G. Lanckriet. A Direct Formulation For Sparse PCA Using Semidefinite Programming. SIAM Review, 49 (2007), pp. 434-448.</ref>) illustrates the results of this experiment.

Code

The numerical codes used in this paper are provided by A. d'Aspremont and can be found here.

References

<references />