stat841f10: Difference between revisions

m (Conversion script moved page Stat841f10 to stat841f10: Converting page titles to lowercase) |

|||

| Line 1: | Line 1: | ||

==[[Schedule of Project Presentations]] == | |||

==[[Proposal Fall 2010]] == | |||

==[[Mark your contribution here]]== | |||

==[[statf10841Scribe|Editor sign up]] == | ==[[statf10841Scribe|Editor sign up]] == | ||

== | {{Cleanup|date=October 8 2010|reason=Provide a summary for each topic here.}} | ||

==[[f10_Stat841_digest |Digest ]] == | |||

== | |||

== ''' Reference Textbook''' == | |||

The Elements of Statistical Learning: Data Mining, Inference, and Prediction, Second Edition, February 2009 Trevor Hastie, Robert Tibshirani, Jerome Friedman [http://www-stat.stanford.edu/~tibs/ElemStatLearn/ (3rd Edition is available)] | |||

== ''' Classification - September 21, 2010''' == | |||

=== Classification === | === Classification === | ||

| Line 18: | Line 17: | ||

To classify new data, a classifier first uses labeled (classes are known) [http://en.wikipedia.org/wiki/Training_set training data] to [http://en.wikipedia.org/wiki/Mathematical_model#Training train] a model, and then it uses a function known as its [http://en.wikipedia.org/wiki/Decision_rule classification rule] to assign a label to each new data input after feeding the input's known feature values into the model to determine how much the input belongs to each class. | To classify new data, a classifier first uses labeled (classes are known) [http://en.wikipedia.org/wiki/Training_set training data] to [http://en.wikipedia.org/wiki/Mathematical_model#Training train] a model, and then it uses a function known as its [http://en.wikipedia.org/wiki/Decision_rule classification rule] to assign a label to each new data input after feeding the input's known feature values into the model to determine how much the input belongs to each class. | ||

Classification has been an important task for people and society since the beginning of history. According to [http://www.schools.utah.gov/curr/science/sciber00/7th/classify/sciber/history.htm this link], the earliest application of classification in human society was probably done by prehistoric peoples for recognizing which wild animals were beneficial to people and which | Classification has been an important task for people and society since the beginning of history. According to [http://www.schools.utah.gov/curr/science/sciber00/7th/classify/sciber/history.htm this link], the earliest application of classification in human society was probably done by prehistoric peoples for recognizing which wild animals were beneficial to people and which ones were harmful, and the earliest systematic use of classification was done by the famous Greek philosopher Aristotle (384 BC - 322 BC) when he, for example, grouped all living things into the two groups of plants and animals. Classification is generally regarded as one of four major areas of statistics, with the other three major areas being [http://en.wikipedia.org/wiki/Regression_analysis regression], [http://en.wikipedia.org/wiki/Cluster_analysis clustering], and [http://en.wikipedia.org/wiki/Dimension_reduction dimensionality reduction] (feature extraction or manifold learning). Note that some people consider classification to be a broad area that consists of both supervised and unsupervised methods of classifying data. In this view, as can be seen in [http://www.yale.edu/ceo/Projects/swap/landcover/Unsupervised_classification.htm this link], clustering is simply a special case of classification and it may be called '''unsupervised classification'''. | ||

In '''classical statistics''', classification techniques were developed to learn useful information using small data sets where there is usually not enough data. When [http://en.wikipedia.org/wiki/Machine_learning machine learning] was developed after the application of computers to statistics, classification techniques were developed to work with very large data sets where there is usually too much data. A major challenge facing data mining using machine learning is how to efficiently find useful patterns in very large amounts of data. An interesting quote that describes this problem quite well is the following one made by the retired Yale University Librarian Rutherford D. Rogers. | In '''classical statistics''', classification techniques were developed to learn useful information using small data sets where there is usually not enough data. When [http://en.wikipedia.org/wiki/Machine_learning machine learning] was developed after the application of computers to statistics, classification techniques were developed to work with very large data sets where there is usually too much data. A major challenge facing data mining using machine learning is how to efficiently find useful patterns in very large amounts of data. An interesting quote that describes this problem quite well is the following one made by the retired Yale University Librarian Rutherford D. Rogers; a source for it can be found [http://www.e-knowledge.ca/quotes.php?topic=Knowledge here]. | ||

''"We are drowning in information and starving for knowledge."'' | ''"We are drowning in information and starving for knowledge."'' | ||

- Rutherford D. Rogers | - Rutherford D. Rogers | ||

In the Information Age, machine learning when it is combined with efficient classification techniques can be very useful for data mining using very large data sets. This is most useful when the structure of the data is not well understood but the data nevertheless exhibit strong statistical regularity. Areas in which machine learning and classification have been successfully used together include search and recommendation (e.g. Google, Amazon), automatic speech recognition and speaker verification, medical diagnosis, analysis of gene expression, drug discovery etc. | In the Information Age, machine learning when it is combined with efficient classification techniques can be very useful for data mining using very large data sets. This is most useful when the structure of the data is not well understood but the data nevertheless exhibit strong statistical regularity. Areas in which machine learning and classification have been successfully used together include search and recommendation (e.g. Google, Amazon), automatic speech recognition and speaker verification, medical diagnosis, analysis of gene expression, drug discovery etc. | ||

| Line 31: | Line 30: | ||

'''Definition''': Classification is the prediction of a discrete [http://en.wikipedia.org/wiki/Random_variable random variable] <math> \mathcal{Y} </math> from another random variable <math> \mathcal{X} </math>, where <math> \mathcal{Y} </math> represents the label assigned to a new data input and <math> \mathcal{X} </math> represents the known feature values of the input. | '''Definition''': Classification is the prediction of a discrete [http://en.wikipedia.org/wiki/Random_variable random variable] <math> \mathcal{Y} </math> from another random variable <math> \mathcal{X} </math>, where <math> \mathcal{Y} </math> represents the label assigned to a new data input and <math> \mathcal{X} </math> represents the known feature values of the input. | ||

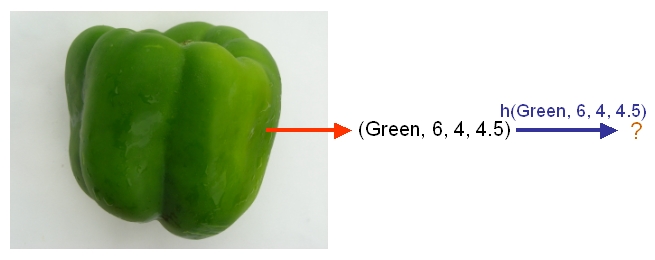

A set of training data used by a classifier to train its model consists of <math>\,n</math> [http://en.wikipedia.org/wiki/Independent_and_identically_distributed_random_variables independently and identically distributed (i.i.d.)] ordered pairs <math>\,\{(X_{1},Y_{1}), (X_{2},Y_{2}), \dots , (X_{n},Y_{n})\}</math>, where the feature values of the <math>\,i</math>th training input, <math>\,X_{i} = (\,X_{i1}, \dots , X_{id}) \in \mathcal{X} \subset \mathbb{R}^{d}</math>, form a ''d''-dimensional vector and the label of the <math>\,i</math>th training input is <math>\,Y_{i} \in \mathcal{Y} </math> that | A set of training data used by a classifier to train its model consists of <math>\,n</math> [http://en.wikipedia.org/wiki/Independent_and_identically_distributed_random_variables independently and identically distributed (i.i.d.)] ordered pairs <math>\,\{(X_{1},Y_{1}), (X_{2},Y_{2}), \dots , (X_{n},Y_{n})\}</math>, where the feature values of the <math>\,i</math>th training input, <math>\,X_{i} = (\,X_{i1}, \dots , X_{id}) \in \mathcal{X} \subset \mathbb{R}^{d}</math>, form a ''d''-dimensional vector and the label of the <math>\,i</math>th training input is <math>\,Y_{i} \in \mathcal{Y} </math> that can take a finite number of values. The classification rule used by a classifier has the form <math>\,h: \mathcal{X} \mapsto \mathcal{Y} </math>. After the model is trained, each new data input whose feature values are <math>\,x</math> is given the label <math>\,\hat{Y}=h(x)</math>. | ||

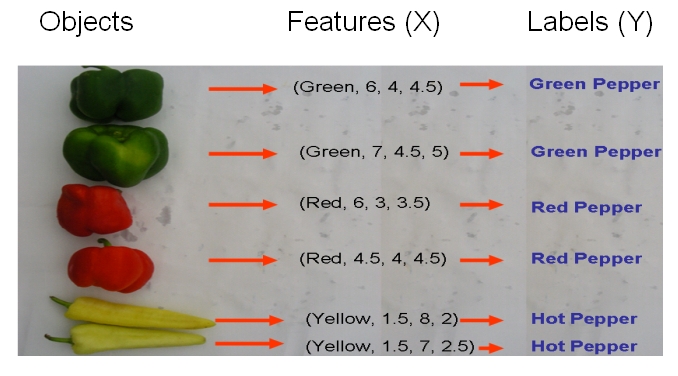

As an example, if we would like to classify some vegetables and fruits, then our training data might look something like the one shown in the following picture from Professor Ali Ghodsi's Fall 2010 STAT 841 slides. | As an example, if we would like to classify some vegetables and fruits, then our training data might look something like the one shown in the following picture from Professor Ali Ghodsi's Fall 2010 STAT 841 slides. | ||

| Line 42: | Line 41: | ||

As another example, suppose we wish to classify newly-given fruits into apples and oranges by considering three features of a fruit that comprise its color, its diameter, and its weight. After selecting a classifier and constructing a model using training data <math>\,\{(X_{color, 1}, X_{diameter, 1}, X_{weight, 1}, Y_{1}), \dots , (X_{color, n}, X_{diameter, n}, X_{weight, n}, Y_{n})\}</math>, we could then use the classifier's classification rule <math>\,h</math> to assign any newly-given fruit having known feature values <math>\,x = (\,x_{color}, x_{diameter} , x_{weight})</math> the label <math>\, \hat{Y}=h(x) \in \mathcal{Y}= \{apple,orange\}</math>. | As another example, suppose we wish to classify newly-given fruits into apples and oranges by considering three features of a fruit that comprise its color, its diameter, and its weight. After selecting a classifier and constructing a model using training data <math>\,\{(X_{color, 1}, X_{diameter, 1}, X_{weight, 1}, Y_{1}), \dots , (X_{color, n}, X_{diameter, n}, X_{weight, n}, Y_{n})\}</math>, we could then use the classifier's classification rule <math>\,h</math> to assign any newly-given fruit having known feature values <math>\,x = (\,x_{color}, x_{diameter} , x_{weight})</math> the label <math>\, \hat{Y}=h(x) \in \mathcal{Y}= \{apple,orange\}</math>. | ||

=== Examples of Classification === | |||

• Email spam filtering (spam vs not spam). | |||

• Detecting credit card fraud (fraudulent or legitimate). | |||

• Face detection in images (face or background). | |||

• Web page classification (sports vs politics vs entertainment etc). | |||

• Steering an autonomous car across the US (turn left, right, or go straight). | |||

• Medical diagnosis (classification of disease based on observed symptoms). | |||

=== Independent and Identically Distributed (iid) Data Assumption === | |||

Suppose that we have training data X which contains N data points. The Independent and Identically Distributed (IID) assumption states that the data points are drawn independently from the same distribution. This assumption implies that the ordering of the data points does not matter, and it is used in many classification problems. For an example of data that is not IID, consider daily temperature: today's temperature is not independent of yesterday's temperature; rather, there is a strong correlation between the temperatures of the two days. | |||

=== Error rate === | === Error rate === | ||

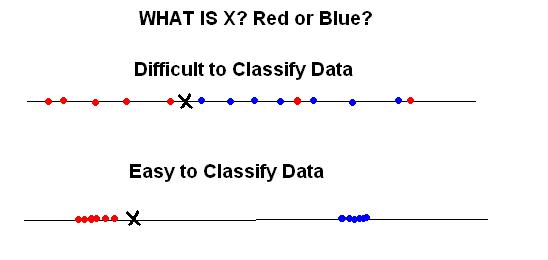

The '''empirical error rate''' (or '''training error rate''') of a classifier having classification rule <math>\,h</math> is defined as the frequency at which <math>\,h</math> does not correctly classify the data inputs in the training set, i.e., it is defined as | The '''empirical error rate''' (or '''training error rate''') of a classifier having classification rule <math>\,h</math> is defined as the frequency at which <math>\,h</math> does not correctly classify the data inputs in the training set, i.e., it is defined as | ||

<math>\,\hat{L}_{n} = \frac{1}{n} \sum_{i=1}^{n} I(h(X_{i}) \neq Y_{i})</math>, where <math>\,I</math> is an indicator variable and <math>\,I = \left\{\begin{matrix} 1 &\text{if } h(X_i) \neq Y_i \\ 0 &\text{if } h(X_i) = Y_i \end{matrix}\right.</math>. Here, | <math>\,\hat{L}_{n} = \frac{1}{n} \sum_{i=1}^{n} I(h(X_{i}) \neq Y_{i})</math>, where <math>\,I</math> is an indicator variable and <math>\,I = \left\{\begin{matrix} 1 &\text{if } h(X_i) \neq Y_i \\ 0 &\text{if } h(X_i) = Y_i \end{matrix}\right.</math>. Here, | ||

<math>\,X_{i} \in \mathcal{X}</math> and <math>\,Y_{i} \in \mathcal{Y}</math> are the known feature values and the true class of the <math>\,ith</math> training input, respectively. | <math>\,X_{i} \in \mathcal{X}</math> and <math>\,Y_{i} \in \mathcal{Y}</math> are the known feature values and the true class of the <math>\,ith</math> training input, respectively. | ||

The '''true error rate''' <math>\,L(h)</math> of a classifier having classification rule <math>\,h</math> is defined as the probability that <math>\,h</math> misclassifies a new data input, i.e., it is defined as <math>\,L(h)=P(h(X) \neq Y)</math>. Here, <math>\,X \in \mathcal{X}</math> and <math>\,Y \in \mathcal{Y}</math> are the known feature values and the true class of that input, respectively. | |||

In practice, the empirical error rate is computed as an estimate of the true error rate, which cannot be known exactly because the parameters of the underlying process can only be estimated from available data. As mentioned [http://www.liebertonline.com/doi/pdf/10.1089/106652703321825928 here], the empirical error rate is an unbiased estimator of the true error rate. Note that this holds for a rule <math>\,h</math> that is fixed independently of the data on which the error is computed; when the same data were used to train <math>\,h</math>, the empirical error rate tends to underestimate the true error rate. | |||
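The definition of the empirical error rate can be illustrated with a minimal Python sketch. The threshold rule `h` and the toy fruit diameters below are made-up assumptions for illustration only; they do not come from the lecture.

```python
def empirical_error_rate(h, X, Y):
    """Fraction of training pairs (X_i, Y_i) that the rule h misclassifies."""
    if len(X) != len(Y) or len(X) == 0:
        raise ValueError("X and Y must be non-empty and of equal length")
    # Sum of the indicator I(h(X_i) != Y_i) over the training set.
    mistakes = sum(1 for x_i, y_i in zip(X, Y) if h(x_i) != y_i)
    return mistakes / len(X)


# Hypothetical rule: call a fruit an orange when its diameter exceeds 7.5 cm.
def h(x):
    return "orange" if x > 7.5 else "apple"


# Made-up training data: diameters (cm) and true labels.
X_train = [6.0, 7.0, 8.0, 9.0, 7.8]
Y_train = ["apple", "apple", "orange", "orange", "apple"]

error = empirical_error_rate(h, X_train, Y_train)
print(error)  # one of the five inputs (7.8 cm, an apple) is misclassified
```

Here the rule misclassifies exactly one of the five training inputs, so the empirical error rate is one fifth.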

An Error Rate Comparison of Classification Methods [http://pdfserve.informaworld.com/311525_770885140_713826662.pdf] | |||

=== Decision Theory === | |||

We can identify three distinct approaches to solving decision problems, all of which have been used in practical applications. These are given, in decreasing order of complexity, by: | |||

a. First solve the inference problem of determining the class-conditional densities <math>\ p(x|C_k)</math> for each class <math>\ C_k</math> individually. Also separately infer the prior class probabilities <math>\ p(C_k)</math>. Then use Bayes’ theorem in the form | |||

<math>\begin{align}p(C_k|x)=\frac{p(x|C_k)p(C_k)}{p(x)} \end{align}</math> | |||

to find the posterior class probabilities <math>\ p(C_k|x)</math>. As usual, the denominator in Bayes’ theorem can be found in terms of the quantities appearing in the numerator, because | |||

<math>\begin{align}p(x)=\sum_{k} p(x|C_k)p(C_k) \end{align}</math> | |||

Equivalently, we can model the joint distribution <math>\ p(x, C_k)</math> directly and then normalize to obtain the posterior probabilities. Having found the posterior probabilities, we use decision theory to determine class membership for each new input <math>\ x</math>. Approaches that explicitly or implicitly model the distribution of inputs as well as outputs are known as "generative models", because by sampling from them it is possible to generate synthetic data points in the input space. | |||

b. First solve the inference problem of determining the posterior class probabilities <math>\ p(C_k|x)</math>, and then subsequently use decision theory to assign each new <math>\ x</math> to one of the classes. Approaches that model the posterior probabilities directly are called "discriminative models". | |||

c. Find a function <math>\ f(x)</math>, called a discriminant function, which maps each input <math>\ x</math> directly onto a class label. For instance, in the case of two-class problems, <math>\ f(.)</math> might be binary valued and such that <math>\ f = 0</math> represents class <math>\ C_1</math> and <math>\ f = 1</math> represents class <math>\ C_2</math>. In this case, probabilities play no role. | |||

This topic is adapted from Pattern Recognition and Machine Learning by Christopher M. Bishop (Chapter 1). | |||
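As a rough illustration of approach (a), the following Python sketch turns class-conditional densities evaluated at an observed <math>\ x</math> and prior class probabilities into posterior class probabilities using Bayes' theorem. The function name `posterior` and all numbers are assumptions chosen for illustration; they are not taken from Bishop's text or from the lecture.

```python
def posterior(likelihoods, priors):
    """Return p(C_k | x) for every class k via Bayes' theorem.

    likelihoods[k] is p(x | C_k) evaluated at the observed x,
    priors[k] is p(C_k).
    """
    joint = {k: likelihoods[k] * priors[k] for k in priors}
    evidence = sum(joint.values())  # p(x) = sum_k p(x | C_k) p(C_k)
    if evidence == 0:
        raise ValueError("p(x) is zero under every class")
    return {k: joint[k] / evidence for k in joint}


# Made-up values for a two-class problem.
likelihoods = {"C1": 0.30, "C2": 0.10}
priors = {"C1": 0.40, "C2": 0.60}

post = posterior(likelihoods, priors)
print(post)
```

With these values the joint probabilities are 0.12 and 0.06, so the posterior of class C1 is two thirds and that of class C2 is one third.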

=== Bayes Classifier === | === Bayes Classifier === | ||

A Bayes classifier is a simple probabilistic classifier based on applying Bayes' | A Bayes classifier is a simple probabilistic classifier based on applying Bayes' Theorem (from Bayesian statistics) with strong [http://en.wikipedia.org/wiki/Naive_Bayes_classifier (naive)] independence assumptions. A more descriptive term for the underlying probability model would be "independent feature model". | ||

In simple terms, a Bayes classifier assumes that the presence (or absence) of a particular feature of a class is unrelated to the presence (or absence) of any other feature. For example, a fruit may be considered to be an apple if it is red, round, and about 4" in diameter. Even if these features depend on each other or upon the existence of the other features, a Bayes classifier considers all of these properties to independently contribute to the probability that this fruit is an apple. | In simple terms, a Bayes classifier assumes that the presence (or absence) of a particular feature of a class is unrelated to the presence (or absence) of any other feature. For example, a fruit may be considered to be an apple if it is red, round, and about 4" in diameter. Even if these features depend on each other or upon the existence of the other features, a Bayes classifier considers all of these properties to independently contribute to the probability that this fruit is an apple. | ||

| Line 63: | Line 106: | ||

An advantage of the naive Bayes classifier is that it requires a small amount of training data to estimate the parameters (means and variances of the variables) necessary for classification. Because independent variables are assumed, only the variances of the variables for each class need to be determined and not the entire [http://en.wikipedia.org/wiki/Covariance_matrix covariance matrix]. | An advantage of the naive Bayes classifier is that it requires a small amount of training data to estimate the parameters (means and variances of the variables) necessary for classification. Because independent variables are assumed, only the variances of the variables for each class need to be determined and not the entire [http://en.wikipedia.org/wiki/Covariance_matrix covariance matrix]. | ||

After training its model using training data, the '''Bayes classifier''' classifies any new data input in two steps. First, it uses the input's known feature values and the [http://en.wikipedia.org/wiki/Bayes_formula Bayes formula] to calculate the input's [http://en.wikipedia.org/wiki/Posterior_probability posterior probability] of belonging to each class. Then, it uses its classification rule to place the input into | After training its model using training data, the '''Bayes classifier''' classifies any new data input in two steps. First, it uses the input's known feature values and the [http://en.wikipedia.org/wiki/Bayes_formula Bayes formula] to calculate the input's [http://en.wikipedia.org/wiki/Posterior_probability posterior probability] of belonging to each class. Then, it uses its classification rule to place the input into the most-probable class, which is the one associated with the input's largest posterior probability. | ||

In mathematical terms, for a new data input having feature values <math>\,(X = x)\in \mathcal{X}</math>, the Bayes classifier labels the input as <math>(Y = y) \in \mathcal{Y}</math>, such that the input's posterior probability <math>\,P(Y = y|X = x)</math> is maximum over all of the members of <math>\mathcal{Y}</math>. | In mathematical terms, for a new data input having feature values <math>\,(X = x)\in \mathcal{X}</math>, the Bayes classifier labels the input as <math>(Y = y) \in \mathcal{Y}</math>, such that the input's posterior probability <math>\,P(Y = y|X = x)</math> is maximum over all of the members of <math>\mathcal{Y}</math>. | ||

Suppose there are <math>\,k</math> classes and we are given a new data input having feature values <math>\,x</math>. The following derivation shows how the Bayes classifier finds the input's posterior probability <math>\,P(Y = y|X = x)</math> of belonging to each class | Suppose there are <math>\,k</math> classes and we are given a new data input having feature values <math>\,x</math>. The following derivation shows how the Bayes classifier finds the input's posterior probability <math>\,P(Y = y|X = x)</math> of belonging to each class <math> y \in \mathcal{Y} </math>. | ||

:<math> | :<math> | ||

\begin{align} | \begin{align} | ||

| Line 76: | Line 119: | ||

Here, <math>\,P(Y=y|X=x)</math> is known as the posterior probability as mentioned above, <math>\,P(Y=y)</math> is known as the prior probability, <math>\,P(X=x|Y=y)</math> is known as the likelihood, and <math>\,P(X=x)</math> is known as the evidence. | Here, <math>\,P(Y=y|X=x)</math> is known as the posterior probability as mentioned above, <math>\,P(Y=y)</math> is known as the prior probability, <math>\,P(X=x|Y=y)</math> is known as the likelihood, and <math>\,P(X=x)</math> is known as the evidence. | ||

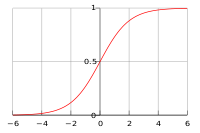

In the special case where there are two classes, i.e., <math>\, \mathcal{Y}=\{0, 1\}</math>, the Bayes classifier makes use of the function <math>\,r(x)=P\{Y=1|X=x\}</math> which is the | In the special case where there are two classes, i.e., <math>\, \mathcal{Y}=\{0, 1\}</math>, the Bayes classifier makes use of the function <math>\,r(x)=P\{Y=1|X=x\}</math> which is the posterior probability of a new data input having feature values <math>\,x</math> belonging to the class <math>\,Y = 1</math>. Following the above derivation for the posterior probabilities of a new data input, the Bayes classifier calculates <math>\,r(x)</math> as follows: | ||

:<math> | :<math> | ||

\begin{align} | \begin{align} | ||

| Line 91: | Line 134: | ||

0 &\mathrm{otherwise} \end{matrix}\right.</math>. | 0 &\mathrm{otherwise} \end{matrix}\right.</math>. | ||

Here, <math>\,x</math> is the feature values of a new data input and <math>\hat r(x)</math> is the estimated value of the function <math>\,r(x)</math> given by the Bayes classifier's model after feeding <math>\,x</math> into the model. Still in this special case of two classes, the Bayes classifier's [http://en.wikipedia.org/wiki/Decision_boundary decision boundary] is defined as the set <math>\,D(h)=\{x: P(Y=1|X=x)=P(Y=0|X=x)\}</math>. The decision boundary <math>\,D(h)</math> essentially combines together the trained model and the decision function <math>\,h</math>, and it is used by the Bayes classifier to assign any new data input to a label of either <math>\,Y = 0</math> or <math>\,Y = 1</math> depending on which side of the decision boundary the input lies in. From this decision boundary, it is easy to see that, in the case where there are two classes, the Bayes classifier's classification rule can be re-expressed as | Here, <math>\,x</math> is the feature values of a new data input and <math>\hat r(x)</math> is the estimated value of the function <math>\,r(x)</math> given by the Bayes classifier's model after feeding <math>\,x</math> into the model. Still in this special case of two classes, the Bayes classifier's [http://en.wikipedia.org/wiki/Decision_boundary decision boundary] is defined as the set <math>\,D(h)=\{x: P(Y=1|X=x)=P(Y=0|X=x)\}</math>. The decision boundary <math>\,D(h)</math> essentially combines together the trained model and the decision function <math>\,h^*</math>, and it is used by the Bayes classifier to assign any new data input to a label of either <math>\,Y = 0</math> or <math>\,Y = 1</math> depending on which side of the decision boundary the input lies in. From this decision boundary, it is easy to see that, in the case where there are two classes, the Bayes classifier's classification rule can be re-expressed as | ||

:<math>\, h^*(x)= \left\{\begin{matrix} | :<math>\, h^*(x)= \left\{\begin{matrix} | ||

| Line 98: | Line 141: | ||

'''Bayes Classification Rule Optimality Theorem''' | '''Bayes Classification Rule Optimality Theorem''' | ||

The Bayes classifier is the optimal classifier in that it | The Bayes classifier is the optimal classifier in that it results in the least possible true probability of misclassification for a new data input, i.e., for any generic classifier having classification rule <math>\,h</math>, it is always true that <math>\,L(h^*) \le L(h)</math>. Here, <math>\,L</math> represents the true error rate and <math>\,h^*</math> is the Bayes classifier's classification rule. | ||

Although the Bayes classifier is optimal in the theoretical sense, other classifiers may nevertheless outperform it in practice. The reason for this is that various components which make up the Bayes classifier's model, such as the likelihood and prior probabilities, must either be estimated using training data or be guessed with a certain degree of belief | Although the Bayes classifier is optimal in the theoretical sense, other classifiers may nevertheless outperform it in practice. The reason for this is that various components which make up the Bayes classifier's model, such as the likelihood and prior probabilities, must either be estimated using training data or be guessed with a certain degree of belief. As a result, the estimated values of the components in the trained model may deviate quite a bit from their true population values, and this can ultimately cause the calculated posterior probabilities of inputs to deviate quite a bit from their true values. Estimating all of these probability functions (the likelihood, the prior probability, and the evidence) is also computationally very expensive, which makes some other classifiers more favorable than the Bayes classifier. | ||

A | A detailed proof of this theorem is available [http://www.ee.columbia.edu/~vittorio/BayesProof.pdf here]. | ||

'''Defining the classification rule:''' | '''Defining the classification rule:''' | ||

In the special case of two classes, the Bayes classifier can use three main approaches to define its classification rule <math>\,h</math>: | In the special case of two classes, the Bayes classifier can use three main approaches to define its classification rule <math>\,h^*</math>: | ||

:1) Empirical Risk Minimization: Choose a set of classifiers <math>\mathcal{H}</math> and find <math>\,h^*\in \mathcal{H}</math> that minimizes some estimate of the true error rate <math>\,L(h)</math>. | :1) Empirical Risk Minimization: Choose a set of classifiers <math>\mathcal{H}</math> and find <math>\,h^*\in \mathcal{H}</math> that minimizes some estimate of the true error rate <math>\,L(h^*)</math>. | ||

:2) Regression: Find an estimate <math> \hat r </math> of the function <math> r </math> and define | :2) Regression: Find an estimate <math> \hat r </math> of the function <math> r </math> and define | ||

:<math>\, h(x)= \left\{\begin{matrix} | :<math>\, h^*(x)= \left\{\begin{matrix} | ||

1 &\text{if } \hat r(x)>\frac{1}{2} \\ | 1 &\text{if } \hat r(x)>\frac{1}{2} \\ | ||

0 &\mathrm{otherwise} \end{matrix}\right.</math>. | 0 &\mathrm{otherwise} \end{matrix}\right.</math>. | ||

:3) Density Estimation: Estimate <math>\,P(X=x|Y=0)</math> from the <math>\,X_{i}</math>'s for which <math>\,Y_{i} = 0</math>, estimate <math>\,P(X=x|Y=1)</math> from the <math>\,X_{i}</math>'s for which <math>\,Y_{i} = 1</math>, and estimate <math>\,P(Y = 1)</math> as <math>\,\frac{1}{n} \sum_{i=1}^{n} Y_{i}</math>. Then, calculate <math>\,\hat r(x) = \hat P(Y=1|X=x)</math> and define | :3) Density Estimation: Estimate <math>\,P(X=x|Y=0)</math> from the <math>\,X_{i}</math>'s for which <math>\,Y_{i} = 0</math>, estimate <math>\,P(X=x|Y=1)</math> from the <math>\,X_{i}</math>'s for which <math>\,Y_{i} = 1</math>, and estimate <math>\,P(Y = 1)</math> as <math>\,\frac{1}{n} \sum_{i=1}^{n} Y_{i}</math>. Then, calculate <math>\,\hat r(x) = \hat P(Y=1|X=x)</math> and define | ||

:<math>\, h(x)= \left\{\begin{matrix} | :<math>\, h^*(x)= \left\{\begin{matrix} | ||

1 &\text{if } \hat r(x)>\frac{1}{2} \\ | 1 &\text{if } \hat r(x)>\frac{1}{2} \\ | ||

0 &\mathrm{otherwise} \end{matrix}\right.</math>. | 0 &\mathrm{otherwise} \end{matrix}\right.</math>. | ||
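The density estimation approach can be sketched in Python as follows. This is only a sketch under a strong assumption that is not part of the notes: each class-conditional density is modelled as a one-dimensional Gaussian fitted by sample mean and variance. The helper names and the toy data are hypothetical.

```python
import math


def fit_gaussian(values):
    """Sample mean and (maximum likelihood) variance of a list of numbers."""
    n = len(values)
    mean = sum(values) / n
    var = sum((v - mean) ** 2 for v in values) / n
    return mean, var


def gaussian_pdf(x, mean, var):
    return math.exp(-((x - mean) ** 2) / (2 * var)) / math.sqrt(2 * math.pi * var)


def fit_density_classifier(X, Y):
    """Estimate P(X=x|Y=0), P(X=x|Y=1) and P(Y=1), then build r_hat and h."""
    params0 = fit_gaussian([x for x, y in zip(X, Y) if y == 0])
    params1 = fit_gaussian([x for x, y in zip(X, Y) if y == 1])
    prior1 = sum(Y) / len(Y)  # estimate of P(Y = 1)

    def r_hat(x):
        # Estimated posterior P(Y=1 | X=x) from Bayes' formula.
        # Note: for x far from both classes the densities can underflow to 0.
        num = gaussian_pdf(x, *params1) * prior1
        den = num + gaussian_pdf(x, *params0) * (1 - prior1)
        return num / den

    def h(x):
        return 1 if r_hat(x) > 0.5 else 0

    return r_hat, h


# Made-up one-dimensional training data.
X = [1.0, 1.5, 2.0, 2.5, 5.0, 5.5, 6.0, 6.5]
Y = [0, 0, 0, 0, 1, 1, 1, 1]

r_hat, h = fit_density_classifier(X, Y)
print(h(2.0), h(6.0), r_hat(4.0))
```

Because the two fitted Gaussians here have equal variances and the estimated priors are equal, the estimated decision boundary lies midway between the two class means, at 3.75.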

| Line 145: | Line 188: | ||

In the general case where there are at least two classes, the Bayes classifier uses the following theorem to assign any new data input having feature values <math>\,x</math> into one of the <math>\,k</math> classes. | In the general case where there are at least two classes, the Bayes classifier uses the following theorem to assign any new data input having feature values <math>\,x</math> into one of the <math>\,k</math> classes. | ||

''Theorem'' | '''Theorem''' | ||

: Suppose that <math> \mathcal{Y}= \{1, \dots, k\}</math>, where <math>\,k \ge 2</math>. Then, the optimal classification rule is <math>\,h^*(x) = \arg\max_{i} P(Y=i|X=x)</math>, where <math>\,i \in \{1, \dots, k\}</math>. | : Suppose that <math> \mathcal{Y}= \{1, \dots, k\}</math>, where <math>\,k \ge 2</math>. Then, the optimal classification rule is <math>\,h^*(x) = \arg\max_{i} P(Y=i|X=x)</math>, where <math>\,i \in \{1, \dots, k\}</math>. | ||
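The rule in the theorem amounts to picking the class with the largest posterior probability. A minimal Python sketch, assuming the posterior probabilities for a given input are already available as a dictionary (the function name `bayes_rule` and the numbers are made up for illustration):

```python
def bayes_rule(posteriors):
    """Return the class i with the largest P(Y=i | X=x).

    posteriors maps each class label to its posterior probability.
    Ties are broken in favour of the class listed first.
    """
    return max(posteriors, key=posteriors.get)


# Made-up posterior probabilities for k = 3 classes.
posteriors = {1: 0.2, 2: 0.5, 3: 0.3}
label = bayes_rule(posteriors)
print(label)
```

In the two-class case with no tie, this coincides with the threshold rule that compares the posterior probability of class 1 with one half.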

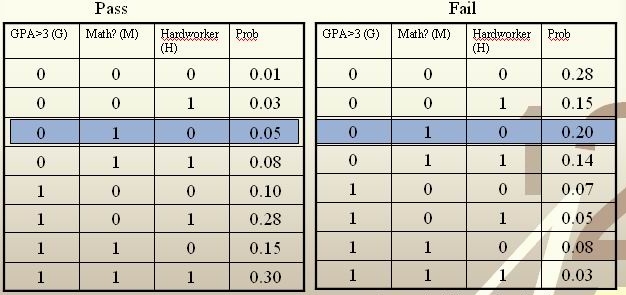

| Line 153: | Line 196: | ||

:Whether or not the student had a strong math background (M). | :Whether or not the student had a strong math background (M). | ||

:Whether or not the student was a hard worker (H). | :Whether or not the student was a hard worker (H). | ||

:Whether or not the student passed or failed the course. | :Whether or not the student passed or failed the course. ''Note: these are the known y values in the training data.'' | ||

These known data are summarized in the following tables: | These known data are summarized in the following tables: | ||

| Line 164: | Line 207: | ||

<br /> | <br /> | ||

<math>\, \hat r(x) = P(Y=1|X =(0,1,0))=\frac{P(X=(0,1,0)|Y=1)P(Y=1)}{P(X=(0,1,0)|Y=0)P(Y=0)+P(X=(0,1,0)|Y=1)P(Y=1)}=\frac{0.05*0.5}{0.05*0.5+0.2*0.5}=\frac{0.025}{0. | <math>\, \hat r(x) = P(Y=1|X =(0,1,0))=\frac{P(X=(0,1,0)|Y=1)P(Y=1)}{P(X=(0,1,0)|Y=0)P(Y=0)+P(X=(0,1,0)|Y=1)P(Y=1)}=\frac{0.05*0.5}{0.05*0.5+0.2*0.5}=\frac{0.025}{0.125}=\frac{1}{5}<\frac{1}{2}.</math><br /> | ||

The Bayes classifier assigns the new student into the class <math>\, h^*(x)=0 </math>. Therefore, we predict that the new student would fail the course. | The Bayes classifier assigns the new student into the class <math>\, h^*(x)=0 </math>. Therefore, we predict that the new student would fail the course. | ||
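The arithmetic of this example can be checked with a few lines of Python. The likelihood and prior values are the ones used in the calculation above; the function name `r_hat` is only an illustrative choice.

```python
def r_hat(lik1, lik0, prior1):
    """Posterior P(Y=1 | X=x) for two classes from Bayes' formula."""
    num = lik1 * prior1
    return num / (num + lik0 * (1 - prior1))


# P(X=(0,1,0) | Y=1) = 0.05, P(X=(0,1,0) | Y=0) = 0.2, P(Y=1) = 0.5
r = r_hat(lik1=0.05, lik0=0.2, prior1=0.5)
label = 1 if r > 0.5 else 0
print(r, label)
```

The posterior is one fifth, which is below one half, so the rule assigns the label 0.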

'''Naive Bayes Classifier:''' | |||

The naive Bayes classifier is a special (simpler) case of the Bayes classifier. It uses an extra assumption: that the presence (or absence) of a particular feature of a class is unrelated to the presence (or absence) of any other feature. This assumption allows for an easier likelihood function <math>\,f_y(x)</math> in the equation: | |||

:<math> | |||

\begin{align} | |||

P(Y=y|X=x) &=\frac{f_y(x)\pi_y}{\sum_{i \in \mathcal{Y}} f_i(x)\pi_i} | |||

\end{align} | |||

</math> | |||

The simpler form of the likelihood function seen in the naive Bayes is: | |||

:<math> | |||

\begin{align} | |||

f_y(x) = P(X=x|Y=y) = {\prod_{j=1}^{d} P(X_{j}=x_{j}|Y=y)} | |||

\end{align} | |||

</math> | |||
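Under the naive independence assumption, the likelihood of a feature vector is a product of per-feature probabilities. The following Python sketch illustrates this for discrete features; the probability tables are made-up values for a single class and are not taken from the lecture.

```python
import math


def naive_likelihood(x, feature_probs):
    """Product over the features j of P(X_j = x_j | Y = y).

    feature_probs[j] maps each possible value of feature j to its
    conditional probability given the class y.
    """
    return math.prod(feature_probs[j][x_j] for j, x_j in enumerate(x))


# Hypothetical conditional probability tables for three binary features.
feature_probs_y = [
    {0: 0.7, 1: 0.3},
    {0: 0.4, 1: 0.6},
    {0: 0.9, 1: 0.1},
]

f = naive_likelihood((0, 1, 0), feature_probs_y)  # 0.7 * 0.6 * 0.9
print(f)
```

Only one small table per feature and per class has to be estimated, rather than a joint table over all combinations of feature values.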

The Bayes classifier taught in class was not the naive Bayes classifier. | |||

=== Bayesian vs. Frequentist ===

The [http://en.wikipedia.org/wiki/Bayesian_probability Bayesian] view of probability and the [http://en.wikipedia.org/wiki/Frequency_probability frequentist] view of probability are the two major schools of thought in the field of statistics regarding how to interpret the probability of an event.

The Bayesian view of probability states that, for any event E, event E has a [http://en.wikipedia.org/wiki/Prior_probability prior probability] that represents how believable it is that event E will occur, before anything is known about any other event whose occurrence could have an impact on event E's occurrence. Theoretically, this prior probability is a ''belief'' that represents the baseline probability for event E's occurrence. In practice, however, event E's prior probability is unknown, and therefore it must either be guessed at or be estimated using a sample of available data. After obtaining a guessed or estimated value of event E's prior probability, the Bayesian view holds that the probability, that is, the believability of event E's occurrence, can always be made more accurate should any new information regarding events that are relevant to event E become available. The Bayesian view also holds that the more useful information is available regarding events that are relevant to event E, the more accurate the estimate of the probability of event E's occurrence becomes. The Bayesian view therefore holds that there is no ''intrinsic'' probability of occurrence associated with any event. If one adheres to the Bayesian view, one can then, for instance, predict tomorrow's weather as having a probability of, say, <math>\,50\%</math> for rain. The Bayes classifier as described above is a good example of a classifier developed from the Bayesian view of probability. The earliest work that laid the framework for the Bayesian view of probability is credited to [http://en.wikipedia.org/wiki/Thomas_Bayes Thomas Bayes] (1702–1761).

In contrast to the Bayesian view of probability, the frequentist view of probability holds that there is an ''intrinsic'' probability of occurrence associated with every event for which one can carry out many, if not an infinite number of, well-defined [http://en.wikipedia.org/wiki/Independence_%28probability_theory%29 independent] [http://en.wikipedia.org/wiki/Random random] [http://en.wikipedia.org/wiki/Experiments trials]. In each trial for an event, the event either occurs or it does not occur. Suppose <math>n_x</math> denotes the number of times that an event occurs during its trials and <math>n_t</math> denotes the total number of trials carried out for the event. The frequentist view of probability holds that, in the ''long run'', where the number of trials for an event approaches infinity, one could theoretically approach the intrinsic value of the event's probability of occurrence to any arbitrary degree of accuracy, i.e., <math>P(x) = \lim_{n_t\rightarrow \infty}\frac{n_x}{n_t}</math>. In practice, however, one can only carry out a finite number of trials for an event and, as a result, the probability of the event's occurrence can only be approximated as <math>P(x) \approx \frac{n_x}{n_t}</math>. If one adheres to the frequentist view, one cannot, for instance, predict the probability that there would be rain tomorrow. This is because one cannot possibly carry out trials for any event that is set in the future. The founder of the frequentist school of thought is arguably the famous Greek philosopher [http://en.wikipedia.org/wiki/Aristotle Aristotle]. In his work [http://en.wikipedia.org/wiki/Rhetoric_%28Aristotle%29 ''Rhetoric''], Aristotle gave the famous line "'''''the probable is that which for the most part happens'''''".
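
The long-run relative frequency can be illustrated with a small simulation. This is a sketch under stated assumptions: the "intrinsic" probability 0.3, the fixed seed, and the function name <code>relative_frequency</code> are arbitrary choices made for the example.

```python
import random

def relative_frequency(p, n_trials, seed=0):
    """Simulate n_trials independent trials of an event with probability p
    and return the observed relative frequency n_x / n_t."""
    rng = random.Random(seed)  # fixed seed so the run is reproducible
    n_x = sum(1 for _ in range(n_trials) if rng.random() < p)
    return n_x / n_trials

# The approximation n_x / n_t is computed for an increasing number of trials.
estimates = {n: relative_frequency(0.3, n) for n in (100, 10_000, 100_000)}
for n, est in estimates.items():
    print(n, est)
```

With a finite number of trials the ratio only approximates the underlying probability, and the approximation tends to tighten as the number of trials grows.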

More information regarding the Bayesian and the frequentist schools of thought is available [http://www.statisticalengineering.com/frequentists_and_bayesians.htm here]. Furthermore, an interesting and informative YouTube video that explains the Bayesian and frequentist views of probability is available [http://www.youtube.com/watch?v=hLKOKdAircA here].

Useful background is also available in the book [http://www.amsta.leeds.ac.uk/~charles/statlog/ Machine Learning, Neural and Statistical Classification]: chapter 2 describes classification, chapter 3 covers classical statistical methods, and chapter 4 covers modern statistical techniques.

=== Extension: Statistical Classification Framework ===

In statistical classification, each object is represented by a measurement vector of d features, and the goal of the classifier is to find compact and disjoint regions for the classes in the d-dimensional feature space. Such decision regions are defined by decision rules that are known or can be trained. The simplest configuration of a classifier consists of a decision rule and multiple membership functions; each membership function represents a class. The following figures illustrate this general framework.

[[File:cs1.png]]

Simple Conceptual Classifier.

[[File:cs2.png]]

[http://www.orfeo-toolbox.org/SoftwareGuide/SoftwareGuidech17.html#x44-2480011 Statistical Classification Framework]

The classification process can be described as follows:

A measurement vector is input to each membership function.

Membership functions feed the membership scores to the decision rule.

A decision rule compares the membership scores and returns a class label.
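
The three steps above can be sketched in Python as follows. The membership function used here (negative squared distance to a class center), the class labels, and the centers are assumptions made for the illustration; the framework itself does not prescribe them.

```python
def classify(x, membership_functions):
    """Feed x to every membership function, then let the decision rule
    (here: pick the largest score) return a class label."""
    scores = {label: f(x) for label, f in membership_functions.items()}
    return max(scores, key=scores.get)

def neg_sq_dist_to(center):
    """Hypothetical membership function: larger when x is closer to the center."""
    return lambda x: -sum((xi - ci) ** 2 for xi, ci in zip(x, center))

# One membership function per class (illustrative centers).
members = {"A": neg_sq_dist_to((0.0, 0.0)), "B": neg_sq_dist_to((3.0, 3.0))}

label = classify((2.5, 2.0), members)
print(label)
```

Swapping in a different membership function, such as a class posterior, changes the classifier without changing the decision rule.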

== '''Linear and Quadratic Discriminant Analysis''' ==

===Introduction===

'''Linear discriminant analysis''' ([http://en.wikipedia.org/wiki/Linear_discriminant_analysis LDA]) and the related '''Fisher's linear discriminant''' are methods used in statistics, pattern recognition and machine learning to find a linear combination of features which characterizes or separates two or more classes of objects or events. The resulting combination may be used as a linear classifier, or, more commonly, for dimensionality reduction before later classification.

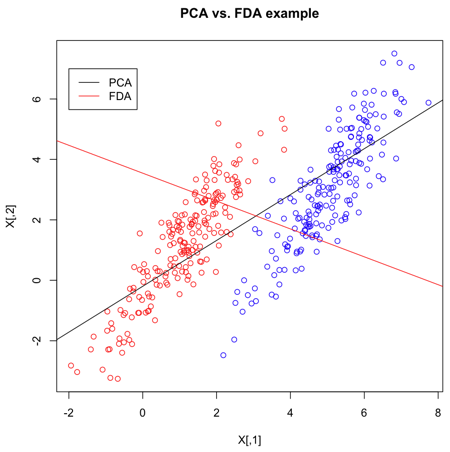

LDA is also closely related to principal component analysis ([http://en.wikipedia.org/wiki/Principal_component_analysis PCA]) and [http://en.wikipedia.org/wiki/Factor_analysis factor analysis] in that both look for linear combinations of variables which best explain the data. LDA explicitly attempts to model the difference between the classes of data. PCA on the other hand does not take into account any difference in class, and factor analysis builds the feature combinations based on differences rather than similarities. Discriminant analysis is also different from factor analysis in that it is not an interdependence technique: a distinction between independent variables and dependent variables (also called criterion variables) must be made.

LDA works when the measurements made on independent variables for each observation are continuous quantities. When dealing with categorical independent variables, the equivalent technique is '''discriminant correspondence analysis'''.

=== Content ===

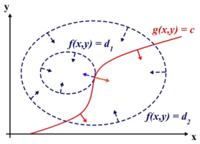

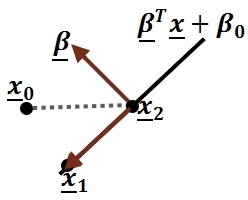

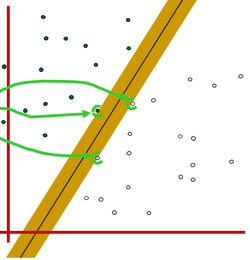

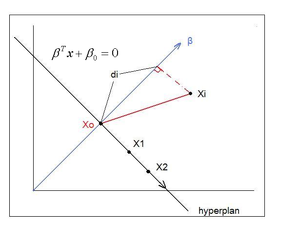

First, we shall limit ourselves to the case where there are two classes, i.e. <math>\, \mathcal{Y}=\{0, 1\}</math>. In the above discussion, we introduced the Bayes classifier's ''decision boundary'' <math>\,D(h^*)=\{x: P(Y=1|X=x)=P(Y=0|X=x)\}</math>, which represents a [http://en.wikipedia.org/wiki/Hyperplane hyperplane] that determines the class of any new data input depending on which side of the hyperplane the input lies on. Now, we shall look at how to derive the Bayes classifier's decision boundary under certain assumptions about the data. [http://en.wikipedia.org/wiki/Linear_discriminant_analysis Linear discriminant analysis (LDA)] and [http://en.wikipedia.org/wiki/Quadratic_classifier#Quadratic_discriminant_analysis quadratic discriminant analysis (QDA)] are two of the most well-known ways for deriving the Bayes classifier's decision boundary, and we shall look at each of them in turn.

Let us denote the likelihood <math>\ P(X=x|Y=y) </math> as <math>\ f_y(x) </math> and the prior probability <math>\ P(Y=y) </math> as <math>\ \pi_y </math>.

First, we shall examine LDA. As explained above, the Bayes classifier is optimal. However, in practice, the prior and conditional densities are not known. Under LDA, one gets around this problem by assuming that both classes have [http://en.wikipedia.org/wiki/Multivariate_normal_distribution multivariate normal (Gaussian) distributions] and that the two classes have the same covariance matrix <math>\, \Sigma</math>. Under the assumptions of LDA, we have: <math>\ P(X=x|Y=y) = f_y(x) = \frac{1}{ (2\pi)^{d/2}|\Sigma|^{1/2} }\exp\left( -\frac{1}{2} (x - \mu_y)^\top \Sigma^{-1} (x - \mu_y) \right)</math>. Now, to derive the Bayes classifier's decision boundary using LDA, we equate <math>\, P(Y=1|X=x) </math> to <math>\, P(Y=0|X=x) </math> and proceed from there. The derivation of <math>\,D(h^*)</math> is as follows:

:<math>\,Pr(Y=1|X=x)=Pr(Y=0|X=x)</math>

:<math>\,\Rightarrow \frac{Pr(X=x|Y=1)Pr(Y=1)}{Pr(X=x)}=\frac{Pr(X=x|Y=0)Pr(Y=0)}{Pr(X=x)}</math> (using Bayes' Theorem)

:<math>\,\Rightarrow Pr(X=x|Y=1)Pr(Y=1)=Pr(X=x|Y=0)Pr(Y=0)</math> (canceling the denominators)

:<math>\,\Rightarrow f_1(x)\pi_1=f_0(x)\pi_0</math>

:<math>\,\Rightarrow \frac{1}{ (2\pi)^{d/2}|\Sigma|^{1/2} }\exp\left( -\frac{1}{2} (x - \mu_1)^\top \Sigma^{-1} (x - \mu_1) \right)\pi_1=\frac{1}{ (2\pi)^{d/2}|\Sigma|^{1/2} }\exp\left( -\frac{1}{2} (x - \mu_0)^\top \Sigma^{-1} (x - \mu_0) \right)\pi_0</math>

:<math>\,\Rightarrow \exp\left( -\frac{1}{2} (x - \mu_1)^\top \Sigma^{-1} (x - \mu_1) \right)\pi_1=\exp\left( -\frac{1}{2} (x - \mu_0)^\top \Sigma^{-1} (x - \mu_0) \right)\pi_0</math>

:<math>\,\Rightarrow -\frac{1}{2} (x - \mu_1)^\top \Sigma^{-1} (x - \mu_1) + \log(\pi_1)=-\frac{1}{2} (x - \mu_0)^\top \Sigma^{-1} (x - \mu_0) +\log(\pi_0)</math> (taking the log of both sides).

:<math>\,\Rightarrow \log(\frac{\pi_1}{\pi_0})-\frac{1}{2}\left( x^\top\Sigma^{-1}x + \mu_1^\top\Sigma^{-1}\mu_1 - 2x^\top\Sigma^{-1}\mu_1 - x^\top\Sigma^{-1}x - \mu_0^\top\Sigma^{-1}\mu_0 + 2x^\top\Sigma^{-1}\mu_0 \right)=0</math> (expanding out)

:<math>\,\Rightarrow \log(\frac{\pi_1}{\pi_0})-\frac{1}{2}\left( \mu_1^\top\Sigma^{-1}\mu_1-\mu_0^\top\Sigma^{-1}\mu_0 - 2x^\top\Sigma^{-1}(\mu_1-\mu_0) \right)=0</math> (canceling out alike terms and factoring).

It is easy to see that, under LDA, the Bayes classifier's decision boundary <math>\,D(h^*)</math> has the form <math>\,a^\top x+b=0</math> and it is linear in <math>\,x</math>. This is where the word ''linear'' in linear discriminant analysis comes from.
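
To make the linear form explicit, the coefficients can be read off from the last line of the derivation. The identification below is a worked step added for illustration, with <math>\,a</math> and <math>\,b</math> being the coefficients of the linear form mentioned above:

:<math>\,a = \Sigma^{-1}(\mu_1-\mu_0), \qquad b = \log(\frac{\pi_1}{\pi_0})-\frac{1}{2}\left( \mu_1^\top\Sigma^{-1}\mu_1-\mu_0^\top\Sigma^{-1}\mu_0 \right)</math>

This uses the fact that <math>\,\Sigma^{-1}</math> is symmetric, so that <math>\,x^\top\Sigma^{-1}(\mu_1-\mu_0) = (\mu_1-\mu_0)^\top\Sigma^{-1}x = a^\top x</math>.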

LDA under the two-classes case can easily be generalized to the general case where there are <math>\,k \ge 2</math> classes. In the general case, suppose we wish to find the Bayes classifier's decision boundary between the two classes <math>\,m </math> and <math>\,n</math>, then all we need to do is follow a derivation very similar to the one shown above, except with the classes <math>\,1 </math> and <math>\,0</math> being replaced by the classes <math>\,m </math> and <math>\,n</math>. Following through with a similar derivation as the one shown above, one obtains the Bayes classifier's decision boundary <math>\,D(h^*)</math> between classes <math>\,m </math> and <math>\,n</math> to be <math>\,\log(\frac{\pi_m}{\pi_n})-\frac{1}{2}\left( \mu_m^\top\Sigma^{-1}\mu_m-\mu_n^\top\Sigma^{-1}\mu_n - 2x^\top\Sigma^{-1}(\mu_m-\mu_n) \right)=0</math>. In addition, for any two classes <math>\,m </math> and <math>\,n</math> for which we would like to find the Bayes classifier's decision boundary using LDA, if <math>\,m </math> and <math>\,n</math> both have the same number of data points (so that their estimated priors are equal), then, in this special case, the resulting decision boundary passes through the point exactly halfway between the centers (means) of <math>\,m </math> and <math>\,n</math>.

The Bayes classifier's decision boundary for any two classes as derived using LDA looks something like the one that can be found in [http://www.outguess.org/detection.php this link].

Although the assumptions made under LDA may not hold true for most real-world data, LDA nevertheless usually performs quite well in practice, where it often provides near-optimal classifications. For instance, the Z-Score credit risk model that was designed by Edward Altman in 1968 and [http://pages.stern.nyu.edu/~ealtman/Zscores.pdf revisited in 2000] is essentially a weighted LDA. This model has demonstrated an 85-90% success rate in predicting bankruptcy, and for this reason it is still in use today.

According to [http://www.lsv.uni-saarland.de/Vorlesung/Digital_Signal_Processing/Summer06/dsp06_chap9.pdf this link], some of the limitations of LDA include:

* LDA implicitly assumes that the data in each class has a Gaussian distribution.

* LDA implicitly assumes that the mean rather than the variance is the discriminating factor.

* LDA may over-fit the training data.

The following link provides a comparison of discriminant analysis and artificial neural networks: [http://www.jstor.org/stable/2584434?seq=4]

====Different Approaches to LDA====

Data sets can be transformed and test vectors can be classified in the transformed space by two different approaches.

*Class-dependent transformation: This type of approach involves maximizing the ratio of between-class variance to within-class variance. The main objective is to maximize this ratio so that adequate class separability is obtained. The class-specific type approach involves using two optimizing criteria for transforming the data sets independently.

*Class-independent transformation: This approach involves maximizing the ratio of overall variance to within-class variance. This approach uses only one optimizing criterion to transform the data sets, and hence all data points, irrespective of their class identity, are transformed using this transform. In this type of LDA, each class is considered as a separate class against all other classes.

== Further reading ==

The following are some applications that use LDA and QDA:

1- Linear discriminant analysis for improved large vocabulary continuous speech recognition [http://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=225984 here]

2- 2D-LDA: A statistical linear discriminant analysis for image matrix [http://www.sciencedirect.com/science?_ob=MImg&_imagekey=B6V15-4DK6B5P-4-1&_cdi=5665&_user=1067412&_pii=S0167865504002272&_origin=search&_coverDate=04%2F01%2F2005&_sk=999739994&view=c&wchp=dGLzVlz-zSkzV&md5=60ea1cf7ff045f76421f5bde64bf855a&ie=/sdarticle.pdf here]

3- Regularization studies of linear discriminant analysis in small sample size scenarios with application to face recognition [http://www.sciencedirect.com/science?_ob=MImg&_imagekey=B6V15-4DTJVF4-2-9&_cdi=5665&_user=1067412&_pii=S0167865504002260&_origin=search&_coverDate=01%2F15%2F2005&_sk=999739997&view=c&wchp=dGLzVtb-zSkzk&md5=1bba55e357b1c79579987638dcbf6828&ie=/sdarticle.pdf here]

4- Sparse discriminant vectors are useful for supervised dimension reduction of high dimensional data. Naive application of classical Fisher’s LDA to high dimensional, low sample size settings suffers from the data piling problem. In [http://www.iaeng.org/IJAM/issues_v39/issue_1/IJAM_39_1_06.pdf], the authors use a sparse LDA method which selects important variables for discriminant analysis and thereby yields improved classification. Introducing sparsity in the discriminant vectors is very effective in eliminating data piling and the associated overfitting problem.

== '''Linear and Quadratic Discriminant Analysis cont'd - September 23, 2010''' ==

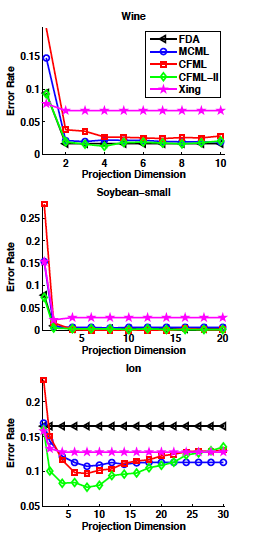

===LDA x QDA===

Linear discriminant analysis[http://en.wikipedia.org/wiki/Linear_discriminant_analysis] is a statistical method used to find the ''linear combination'' of features which best separates two or more classes of objects or events. It is widely applied in classifying diseases, positioning, product management, and marketing research. LDA assumes that the different classes have the same covariance matrix <math>\, \Sigma</math>.

Quadratic Discriminant Analysis[http://en.wikipedia.org/wiki/Quadratic_classifier], on the other hand, aims to find the ''quadratic combination'' of features. It is more general than linear discriminant analysis. Unlike LDA, QDA does not make the assumption that the different classes have the same covariance matrix <math>\, \Sigma</math>. Instead, QDA makes the assumption that each class <math>\, k</math> has its own covariance matrix <math>\, \Sigma_k</math>.

The derivation of the Bayes classifier's decision boundary <math>\,D(h^*)</math> under QDA is similar to that under LDA. Again, let us first consider the two-classes case where <math>\, \mathcal{Y}=\{0, 1\}</math>. This derivation is given as follows:

:<math>\,Pr(Y=1|X=x)=Pr(Y=0|X=x)</math>

:<math>\,\Rightarrow \frac{Pr(X=x|Y=1)Pr(Y=1)}{Pr(X=x)}=\frac{Pr(X=x|Y=0)Pr(Y=0)}{Pr(X=x)}</math> (using Bayes' Theorem)

:<math>\,\Rightarrow Pr(X=x|Y=1)Pr(Y=1)=Pr(X=x|Y=0)Pr(Y=0)</math> (canceling the denominators)

:<math>\,\Rightarrow f_1(x)\pi_1=f_0(x)\pi_0</math>

:<math>\,\Rightarrow \frac{1}{ (2\pi)^{d/2}|\Sigma_1|^{1/2} }\exp\left( -\frac{1}{2} (x - \mu_1)^\top \Sigma_1^{-1} (x - \mu_1) \right)\pi_1=\frac{1}{ (2\pi)^{d/2}|\Sigma_0|^{1/2} }\exp\left( -\frac{1}{2} (x - \mu_0)^\top \Sigma_0^{-1} (x - \mu_0) \right)\pi_0</math>

:<math>\,\Rightarrow \frac{1}{|\Sigma_1|^{1/2} }\exp\left( -\frac{1}{2} (x - \mu_1)^\top \Sigma_1^{-1} (x - \mu_1) \right)\pi_1=\frac{1}{|\Sigma_0|^{1/2} }\exp\left( -\frac{1}{2} (x - \mu_0)^\top \Sigma_0^{-1} (x - \mu_0) \right)\pi_0</math> (by cancellation)

:<math>\,\Rightarrow -\frac{1}{2}\log(|\Sigma_1|)-\frac{1}{2} (x - \mu_1)^\top \Sigma_1^{-1} (x - \mu_1)+\log(\pi_1)=-\frac{1}{2}\log(|\Sigma_0|)-\frac{1}{2} (x - \mu_0)^\top \Sigma_0^{-1} (x - \mu_0)+\log(\pi_0)</math> (by taking the log of both sides)

:<math>\,\Rightarrow \log(\frac{\pi_1}{\pi_0})-\frac{1}{2}\log(\frac{|\Sigma_1|}{|\Sigma_0|})-\frac{1}{2}\left( x^\top\Sigma_1^{-1}x + \mu_1^\top\Sigma_1^{-1}\mu_1 - 2x^\top\Sigma_1^{-1}\mu_1 - x^\top\Sigma_0^{-1}x - \mu_0^\top\Sigma_0^{-1}\mu_0 + 2x^\top\Sigma_0^{-1}\mu_0 \right)=0</math> (by expanding out)

:<math>\,\Rightarrow \log(\frac{\pi_1}{\pi_0})-\frac{1}{2}\log(\frac{|\Sigma_1|}{|\Sigma_0|})-\frac{1}{2}\left( x^\top(\Sigma_1^{-1}-\Sigma_0^{-1})x + \mu_1^\top\Sigma_1^{-1}\mu_1 - \mu_0^\top\Sigma_0^{-1}\mu_0 - 2x^\top(\Sigma_1^{-1}\mu_1-\Sigma_0^{-1}\mu_0) \right)=0</math>

It is easy to see that, under QDA, the decision boundary <math>\,D(h^*)</math> has the form <math>\,x^\top a x+b^\top x+c=0</math> and it is quadratic in <math>\,x</math>. This is where the word ''quadratic'' in quadratic discriminant analysis comes from.
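
To make the quadratic form explicit, the coefficients can be read off from the last line of the derivation. The identification below is a worked step added for illustration, written for a vector-valued <math>\,x</math>, so that <math>\,a</math> is a matrix, <math>\,b</math> is a vector and <math>\,c</math> is a scalar in <math>\,x^\top a x+b^\top x+c=0</math>:

:<math>\,a = -\frac{1}{2}\left(\Sigma_1^{-1}-\Sigma_0^{-1}\right), \qquad b = \Sigma_1^{-1}\mu_1-\Sigma_0^{-1}\mu_0</math>

:<math>\,c = \log(\frac{\pi_1}{\pi_0})-\frac{1}{2}\log(\frac{|\Sigma_1|}{|\Sigma_0|})-\frac{1}{2}\left( \mu_1^\top\Sigma_1^{-1}\mu_1 - \mu_0^\top\Sigma_0^{-1}\mu_0 \right)</math>

When <math>\,\Sigma_1=\Sigma_0</math>, the matrix <math>\,a</math> vanishes and the determinant term drops out, which recovers the linear boundary of LDA.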

As is the case with LDA, QDA under the two-classes case can easily be generalized to the general case where there are <math>\,k \ge 2</math> classes. In the general case, suppose we wish to find the Bayes classifier's decision boundary between the two classes <math>\,m </math> and <math>\,n</math>, then all we need to do is follow a derivation very similar to the one shown above, except with the classes <math>\,1 </math> and <math>\,0</math> being replaced by the classes <math>\,m </math> and <math>\,n</math>. Following through with a similar derivation as the one shown above, one obtains the Bayes classifier's decision boundary <math>\,D(h^*)</math> between classes <math>\,m </math> and <math>\,n</math> to be <math>\,\log(\frac{\pi_m}{\pi_n})-\frac{1}{2}\log(\frac{|\Sigma_m|}{|\Sigma_n|})-\frac{1}{2}\left( x^\top(\Sigma_m^{-1}-\Sigma_n^{-1})x + \mu_m^\top\Sigma_m^{-1}\mu_m - \mu_n^\top\Sigma_n^{-1}\mu_n - 2x^\top(\Sigma_m^{-1}\mu_m-\Sigma_n^{-1}\mu_n) \right)=0</math>.

===Summarizing LDA and QDA===

We can summarize what we have learned so far into the following theorem.

'''Theorem''':

Suppose that <math>\,Y \in \{1,\dots,K\}</math>. If <math>\,f_k(x) = Pr(X=x|Y=k)</math> is Gaussian, then the Bayes classifier rule is

:<math>\,h^*(x) = \arg\max_{k} \delta_k(x)</math>

where,

* In the case of LDA, which assumes that a common covariance matrix is shared by all classes, <math> \,\delta_k(x) = x^\top\Sigma^{-1}\mu_k - \frac{1}{2}\mu_k^\top\Sigma^{-1}\mu_k + \log (\pi_k) </math>, and the Bayes classifier's decision boundary <math>\,D(h^*)</math> is linear in <math>\,x</math>.

* In the case of QDA, which assumes that each class has its own covariance matrix, <math> \,\delta_k(x) = - \frac{1}{2}\log(|\Sigma_k|) - \frac{1}{2}(x-\mu_k)^\top\Sigma_k^{-1}(x-\mu_k) + \log (\pi_k) </math>, and the Bayes classifier's decision boundary <math>\,D(h^*)</math> is quadratic in <math>\,x</math>.

'''Note''': <math>\,\arg\max_{k} \delta_k(x)</math> returns the set of k for which <math>\,\delta_k(x)</math> attains its largest value.

[http://www.stat.cmu.edu/~larry/=stat707/notes10.pdf See Theorem 46.6 Page 133]
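
The two discriminant functions of the theorem can be sketched in plain Python for two-dimensional data. This is a minimal illustration, not a reference implementation: the helper names, the restriction to 2x2 covariance matrices, and the example class parameters are all assumptions made to keep the code short and free of external libraries.

```python
import math

def inv2(m):
    """Inverse and determinant of a 2x2 matrix given as nested lists."""
    (a, b), (c, d) = m
    det = a * d - b * c
    return [[d / det, -b / det], [-c / det, a / det]], det

def quad_form(u, m, v):
    """Compute u^T m v for 2-vectors u, v and a 2x2 matrix m."""
    return sum(u[i] * m[i][j] * v[j] for i in range(2) for j in range(2))

def delta_lda(x, mu, sigma, prior):
    """LDA discriminant: x^T S^-1 mu - 1/2 mu^T S^-1 mu + log(pi)."""
    sigma_inv, _ = inv2(sigma)
    return quad_form(x, sigma_inv, mu) - 0.5 * quad_form(mu, sigma_inv, mu) + math.log(prior)

def delta_qda(x, mu, sigma, prior):
    """QDA discriminant: -1/2 log|S_k| - 1/2 (x-mu)^T S_k^-1 (x-mu) + log(pi)."""
    sigma_inv, det = inv2(sigma)
    diff = [x[0] - mu[0], x[1] - mu[1]]
    return -0.5 * math.log(det) - 0.5 * quad_form(diff, sigma_inv, diff) + math.log(prior)

def bayes_rule(x, classes, delta):
    """Return the class label k that maximizes delta_k(x)."""
    return max(classes, key=lambda k: delta(x, *classes[k]))

# Hypothetical class parameters: label -> (mean, covariance, prior).
classes = {
    1: ([0.0, 0.0], [[1.0, 0.0], [0.0, 1.0]], 0.5),
    2: ([3.0, 3.0], [[1.0, 0.0], [0.0, 1.0]], 0.5),
}

print(bayes_rule([0.5, 0.2], classes, delta_lda))
print(bayes_rule([2.8, 2.9], classes, delta_qda))
```

In this example both classes share the same covariance matrix, so the LDA and QDA rules pick the same class for any input.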

===In practice===

In practice, the true values of <math>\,\pi_k,\mu_k,\Sigma_k</math> are unknown, so we use their Maximum Likelihood estimates from the sample in their place, i.e.

[[File:estimation.png|250px|thumb|right|Estimation of the probability of belonging to either class k or l]]

<math>\,\hat{\pi_k} = \hat{Pr}(y=k) = \frac{n_k}{n}</math>

<math>\,\hat{\mu_k} = \frac{1}{n_k}\sum_{i:y_i=k}x_i</math>

<math>\,\hat{\Sigma_k} = \frac{1}{n_k}\sum_{i:y_i=k}(x_i-\hat{\mu_k})(x_i-\hat{\mu_k})^\top</math>

The common covariance, denoted <math>\Sigma</math>, is defined as the weighted average of the covariance of each class. In the case where we need a common covariance matrix, we get the estimate using the following equation:

<math>\,\Sigma=\frac{\sum_{r=1}^{k}(n_r\Sigma_r)}{\sum_{l=1}^{k}(n_l)} </math>

where <math>\,n_r</math> is the number of data points in class r, <math>\,\Sigma_r</math> is the covariance of class r, <math>\,n=\sum_{l=1}^{k}n_l</math> is the total number of data points, and <math>\,k</math> is the number of classes.

See the details about the [http://en.wikipedia.org/wiki/Estimation_of_covariance_matrices estimation of covariance matrices].
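
The estimates above can be computed with a short Python sketch. The function name <code>estimate_parameters</code> and the tiny data set are assumptions made for illustration; the formulas are the maximum likelihood estimates and the weighted-average common covariance given in this section.

```python
def estimate_parameters(X, y):
    """Return per-class ML estimates (prior, mean, covariance) and the pooled covariance.

    X is a list of feature vectors (lists of floats), y the list of class labels.
    """
    n = len(X)
    d = len(X[0])
    params = {}
    for k in sorted(set(y)):
        rows = [x for x, label in zip(X, y) if label == k]
        n_k = len(rows)
        mu = [sum(r[j] for r in rows) / n_k for j in range(d)]
        # ML covariance: divide by n_k (not n_k - 1), as in the formula above.
        sigma = [[sum((r[i] - mu[i]) * (r[j] - mu[j]) for r in rows) / n_k
                  for j in range(d)] for i in range(d)]
        params[k] = {"n": n_k, "pi": n_k / n, "mu": mu, "sigma": sigma}
    # Common covariance: average of the class covariances weighted by class size.
    pooled = [[sum(p["n"] * p["sigma"][i][j] for p in params.values()) / n
               for j in range(d)] for i in range(d)]
    return params, pooled

# Hypothetical toy data: two points in class 0 and four points in class 1.
X = [[0.0, 0.0], [2.0, 0.0], [4.0, 4.0], [4.0, 6.0], [6.0, 4.0], [6.0, 6.0]]
y = [0, 0, 1, 1, 1, 1]
params, pooled = estimate_parameters(X, y)
print(params[0]["pi"], params[0]["mu"], pooled)
```

QDA would use each <code>params[k]["sigma"]</code> separately, while LDA would use <code>pooled</code> for every class.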

===Computation For QDA And LDA=== | |||

First, let us consider QDA, and examine each of the following two cases. | |||

'''Case 1: (Example) <math>\, \Sigma_k = I </math> | |||

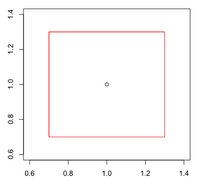

[[File:case1.jpg|300px|thumb|right]] | |||

<math>\, \Sigma_k = I </math> for every class <math>\,k</math> implies that our data is spherical. This means that the data of each class <math>\,k</math> is distributed symmetrically around the center <math>\,\mu_k</math>, i.e. the isocontours are all circles. | |||

We have: | |||

= | <math> \,\delta_k = - \frac{1}{2}log(|I|) - \frac{1}{2}(x-\mu_k)^\top I(x-\mu_k) + log (\pi_k) </math> | ||

We see that the first term in the above equation, <math>\,\frac{-1}{2}log(|I|)</math>, is zero since <math>\ |I|=1 </math>. The second term contains <math>\, (x-\mu_k)^\top I(x-\mu_k) = (x-\mu_k)^\top(x-\mu_k) </math>, which is the [http://www.improvedoutcomes.com/docs/WebSiteDocs/Clustering/Clustering_Parameters/Euclidean_and_Euclidean_Squared_Distance_Metrics.htm squared Euclidean distance] between <math>\,x</math> and <math>\,\mu_k</math>. Therefore we can find the distance between a point and each center and adjust it with the log of the prior, <math>\,log(\pi_k)</math>. The class that has the minimum distance will maximize <math>\,\delta_k</math>. According to the theorem, we can then classify the point to a specific class <math>\,k</math>. | |||
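
Case 1 can be sketched in Python as a nearest-center rule with a log-prior adjustment. The centers, priors, and function names below are hypothetical and only serve to show how the prior can change the decision for a point that lies near the midpoint between two centers.

```python
import math

def delta_identity(x, mu, prior):
    """delta_k for Sigma_k = I: minus half the squared Euclidean distance plus log prior."""
    sq_dist = sum((xi - mi) ** 2 for xi, mi in zip(x, mu))
    return -0.5 * sq_dist + math.log(prior)

def nearest_center(x, centers):
    """Pick the class whose prior-adjusted distance score is largest."""
    return max(centers, key=lambda k: delta_identity(x, *centers[k]))

x = [1.1, 0.0]  # slightly closer to the center of class "b"

# Hypothetical centers with equal priors: the closer center wins.
equal_priors = {"a": ([0.0, 0.0], 0.5), "b": ([2.0, 0.0], 0.5)}
# Same centers, but class "a" is much more common a priori.
skewed_priors = {"a": ([0.0, 0.0], 0.9), "b": ([2.0, 0.0], 0.1)}

print(nearest_center(x, equal_priors), nearest_center(x, skewed_priors))
```

With equal priors the rule reduces to plain nearest-center classification; with unequal priors the log-prior term shifts the boundary toward the less likely class.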

'''Case 2: (General Case) <math>\, \Sigma_k \ne I </math>'''

We can decompose this as:

<math> \, \Sigma_k = U_kS_kV_k^\top = U_kS_kU_k^\top </math>

(In general, when a matrix <math>\,X=USV^\top</math>, the columns of <math>\,U</math> are the eigenvectors of <math>\,XX^\top</math> and the columns of <math>\,V</math> are the eigenvectors of <math>\,X^\top X</math>. So if <math>\, X</math> is symmetric and positive semi-definite, we will have <math>\, U=V</math>. Here <math>\, \Sigma_k </math> is symmetric and positive semi-definite because it is a covariance matrix.)

The inverse of <math>\,\Sigma_k</math> is

<math> \, \Sigma_k^{-1} = (U_kS_kU_k^\top)^{-1} = (U_k^\top)^{-1}S_k^{-1}U_k^{-1} = U_kS_k^{-1}U_k^\top </math> (since <math>\,U_k</math> is orthonormal)

So from the formula for <math>\,\delta_k</math>, the second term is

:<math>\begin{align}
(x-\mu_k)^\top\Sigma_k^{-1}(x-\mu_k)&= (x-\mu_k)^\top U_kS_k^{-1}U_k^\top(x-\mu_k)\\
& = (U_k^\top x-U_k^\top\mu_k)^\top S_k^{-1}(U_k^\top x-U_k^\top \mu_k)\\
& = (U_k^\top x-U_k^\top\mu_k)^\top S_k^{-\frac{1}{2}}S_k^{-\frac{1}{2}}(U_k^\top x-U_k^\top\mu_k) \\
& = (S_k^{-\frac{1}{2}}U_k^\top x-S_k^{-\frac{1}{2}}U_k^\top\mu_k)^\top I(S_k^{-\frac{1}{2}}U_k^\top x-S_k^{-\frac{1}{2}}U_k^\top \mu_k) \\
& = (S_k^{-\frac{1}{2}}U_k^\top x-S_k^{-\frac{1}{2}}U_k^\top\mu_k)^\top(S_k^{-\frac{1}{2}}U_k^\top x-S_k^{-\frac{1}{2}}U_k^\top \mu_k)
\end{align}
</math>

where we have the squared Euclidean distance between <math> \, S_k^{-\frac{1}{2}}U_k^\top x </math> and <math>\, S_k^{-\frac{1}{2}}U_k^\top\mu_k</math>.

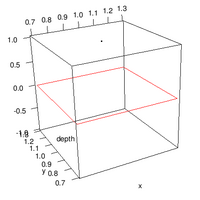

A transformation of all the data points can be done from <math>\,x</math> to <math>\,x^*</math> where <math> \, x^* \leftarrow S_k^{-\frac{1}{2}}U_k^\top x </math>.

A similar transformation of all the centers can be done from <math>\,\mu_k</math> to <math>\,\mu_k^*</math> where <math> \, \mu_k^* \leftarrow S_k^{-\frac{1}{2}}U_k^\top \mu_k </math>.

It is now possible to do classification with <math>\,x^*</math> and <math>\,\mu_k^*</math>, treating them as in Case 1 above. This strategy is correct because, by transforming <math>\, x</math> to <math>\,x^*</math> where <math> \, x^* \leftarrow S_k^{-\frac{1}{2}}U_k^\top x </math>, the covariance of the transformed variable becomes <math>I</math>.

Note that when we have multiple classes, we also need to compute <math>\, \log{|\Sigma_k|}</math> for each class. Then we compute <math> \,\delta_k </math> for QDA.

Note that if a single transformation is to be applied to all of the data, the classes must all have the same transformation, in other words, the same covariance <math>\,\Sigma_k</math>; otherwise, we would have to assume ahead of time that a data point belongs to one class or the other. All classes therefore need to have the same shape for classification to be applicable using a single transformation. So this method works for LDA.

If the classes have different shapes, in other words, different covariances <math>\,\Sigma_k</math>, can we still use the same method to transform all data points <math>\,x</math> to <math>\,x^*</math>?

The answer is yes. Consider two classes with different shapes and a data point whose class we wish to decide. We apply the transformation corresponding to each of the two classes in turn to obtain <math>\,\delta_1 ,\delta_2 </math>, and then determine which class the data point belongs to by comparing <math> \,\delta_1 </math> and <math> \,\delta_2 </math>.

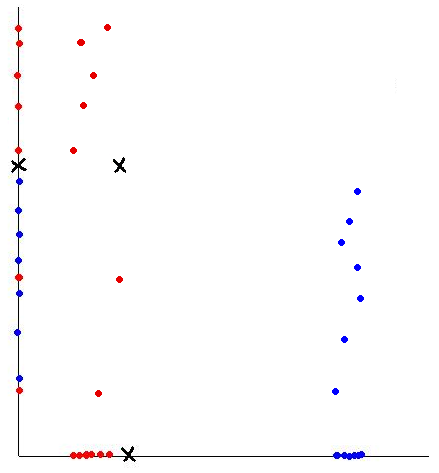

In summary, to apply QDA on a data set <math>\,X</math>, in the general case where <math>\, \Sigma_k \ne I </math> for each class <math>\,k</math>, one can proceed as follows:

:: Step 1: For each class <math>\,k</math>, apply singular value decomposition on the estimated covariance matrix <math>\,\Sigma_k</math> of the class data <math>\,X_k</math> to obtain <math>\,S_k</math> and <math>\,U_k</math>.

:: Step 2: For each class <math>\,k</math>, transform each <math>\,x</math> to <math>\,x_k^* = S_k^{-\frac{1}{2}}U_k^\top x</math>, and transform its center <math>\,\mu_k</math> to <math>\,\mu_k^* = S_k^{-\frac{1}{2}}U_k^\top \mu_k</math>.

:: Step 3: For each data point <math>\,x \in X</math>, find the squared Euclidean distance between the transformed data point <math>\,x_k^*</math> and the transformed center <math>\,\mu_k^*</math> of each class <math>\,k</math>, and assign <math>\,x</math> to class <math>\,k</math> such that the squared Euclidean distance between <math>\,x_k^*</math> and <math>\,\mu_k^*</math> is the least for all possible <math>\,k</math>'s. As noted above, when <math>\,\log|\Sigma_k|</math> or <math>\,\log(\pi_k)</math> differ between classes, these terms must be included and the full <math>\,\delta_k</math> compared instead.
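
The key identity behind these steps, namely that the squared Euclidean distance after the transformation equals the quadratic form <math>\,(x-\mu_k)^\top\Sigma_k^{-1}(x-\mu_k)</math>, can be checked numerically. The sketch below is restricted to 2x2 covariance matrices so that the decomposition can be written in closed form with the standard library only; the helper names and the example numbers are assumptions, and a numerical library would normally be used for the decomposition in higher dimensions.

```python
import math

def eig_sym2(s):
    """Decompose a symmetric 2x2 matrix s as U diag(S) U^T using a rotation angle."""
    a, b, c = s[0][0], s[0][1], s[1][1]
    theta = 0.5 * math.atan2(2.0 * b, a - c)
    cs, sn = math.cos(theta), math.sin(theta)
    lam1 = a * cs * cs + 2.0 * b * sn * cs + c * sn * sn
    lam2 = a * sn * sn - 2.0 * b * sn * cs + c * cs * cs
    U = [[cs, -sn], [sn, cs]]  # columns are the eigenvectors
    return U, [lam1, lam2]

def whiten(x, U, S):
    """Apply x* = S^(-1/2) U^T x."""
    return [(U[0][j] * x[0] + U[1][j] * x[1]) / math.sqrt(S[j]) for j in range(2)]

def mahalanobis_sq(x, mu, sigma):
    """Direct evaluation of (x - mu)^T sigma^-1 (x - mu) for a 2x2 sigma."""
    (a, b), (c, d) = sigma
    det = a * d - b * c
    dx, dy = x[0] - mu[0], x[1] - mu[1]
    return (d * dx * dx - (b + c) * dx * dy + a * dy * dy) / det

# Hypothetical class covariance, class center, and data point.
sigma_k = [[2.0, 0.6], [0.6, 1.0]]
mu_k = [1.0, -1.0]
x = [2.0, 0.5]

U_k, S_k = eig_sym2(sigma_k)
x_star = whiten(x, U_k, S_k)
mu_star = whiten(mu_k, U_k, S_k)
sq_dist = sum((xs - ms) ** 2 for xs, ms in zip(x_star, mu_star))

print(sq_dist, mahalanobis_sq(x, mu_k, sigma_k))
```

The two printed numbers agree up to floating-point rounding, which is why the transformed problem can be treated as in Case 1.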

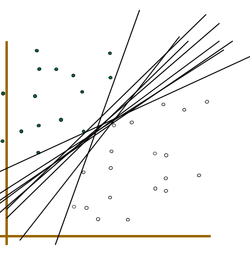

Now, let us consider LDA.

Here, one can derive a classification scheme that is quite similar to that shown above. The main difference is the assumption of a common covariance across the classes, so we perform the Singular Value Decomposition once, as opposed to k times.

To apply LDA on a data set <math>\,X</math>, one can proceed as follows:

:: Step 1: Apply singular value decomposition on the estimated common covariance matrix <math>\,\Sigma</math> of <math>\,X</math> to obtain <math>\,S</math> and <math>\,U</math>.

:: Step 2: For each <math>\,x \in X</math>, transform <math>\,x</math> to <math>\,x^* = S^{-\frac{1}{2}}U^\top x</math>, and transform each center <math>\,\mu</math> to <math>\,\mu^* = S^{-\frac{1}{2}}U^\top \mu</math>.

:: Step 3: For each data point <math>\,x \in X</math>, find the squared Euclidean distance between the transformed data point <math>\,x^*</math> and the transformed center <math>\,\mu^*</math> of each class, and assign <math>\,x</math> to the class such that the squared Euclidean distance between <math>\,x^*</math> and <math>\,\mu^*</math> is the least over all of the classes.

[http://portal.acm.org/citation.cfm?id=1340851 Kernel QDA]

In actual data scenarios, QDA will often provide a better classifier for the data than LDA because QDA does not assume that the covariance matrix for each class is identical, as LDA assumes. However, QDA still assumes that the class conditional distribution is Gaussian, which is not always the case in real-life scenarios. The link provided at the beginning of this paragraph describes a kernel-based QDA method which does not have the Gaussian distribution assumption.

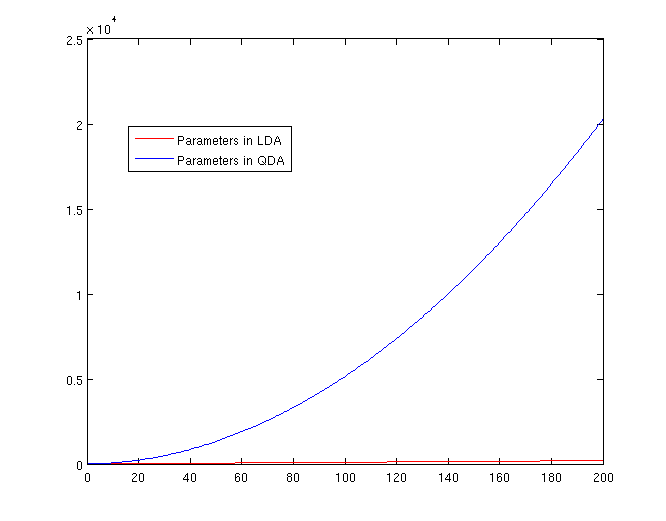

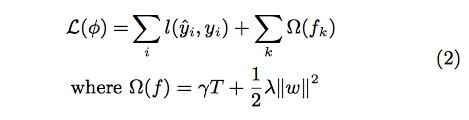

===The Number of Parameters in LDA and QDA=== | |||

Both LDA and QDA require us to estimate parameters. The more estimation we have to do, the less robust our classification algorithm will be. | |||

LDA: Since we only need to compare one given class with the remaining <math>\,K-1</math> classes, there are <math>\,K-1</math> differences in total. For each of them, <math>\,a^{T}x+b</math> requires <math>\,d+1</math> parameters. Therefore, there are <math>\,(K-1)\times(d+1)</math> parameters.

QDA: For each of the differences, <math>\,x^{T}ax + b^{T}x + c</math> requires <math>\frac{1}{2}(d+1)\times d + d + 1 = \frac{d(d+3)}{2}+1</math> parameters. Therefore, there are <math>(K-1)(\frac{d(d+3)}{2}+1)</math> parameters. | |||

[[File:Lda-qda-parameters.png|frame|center|A plot of the number of parameters that must be estimated, in terms of (K-1). The x-axis represents the number of dimensions in the data. As is easy to see, QDA is far less robust than LDA for high-dimensional data sets.]]
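To make the two counts concrete, the formulas above can be evaluated directly. The following is a small sketch; the function names <code>lda_params</code> and <code>qda_params</code> are hypothetical helpers introduced only for this illustration.

```matlab
% Number of parameters for K classes in d dimensions,
% using the two formulas from this section.
lda_params = @(K, d) (K - 1) .* (d + 1);
qda_params = @(K, d) (K - 1) .* (d .* (d + 3) ./ 2 + 1);

% Two classes in the original 64-dimensional space of the 2_3 data.
n_lda_64 = lda_params(2, 64);   % (2-1)*(64+1)
n_qda_64 = qda_params(2, 64);   % (2-1)*(64*67/2 + 1)

% Two classes after reducing to 2 dimensions.
n_lda_2 = lda_params(2, 2);
n_qda_2 = qda_params(2, 2);
```

With two classes, LDA needs 65 parameters in 64 dimensions while QDA needs 2145; after reduction to 2 dimensions the counts drop to 3 and 6.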

===More information on Regularized Discriminant Analysis (RDA)=== | |||

Discriminant analysis (DA) is widely used in classification problems. Besides LDA and QDA, there is also an intermediate method between the two, a regularized version of discriminant analysis (RDA) proposed by Friedman [1989], which has been shown to be more flexible in dealing with various class distributions. RDA applies regularization by using two regularization parameters, which are selected to jointly maximize the classification performance. The optimal pair of parameters is commonly estimated via cross-validation from a set of candidate pairs. More detail about this method can be found in the book by Hastie et al. [2001]. However, the computation is time-consuming for high-dimensional data, especially when the candidate set is large, which limits the application of RDA to low-dimensional data. In 2006, Ye Jieping and Wang Tie developed a novel algorithm for RDA for high-dimensional data. It can efficiently estimate the optimal regularization parameters from a large set of parameter candidates. Experiments on a variety of data sets confirm the claimed theoretical estimate of the efficiency, and also show that, for a properly chosen pair of regularization parameters, RDA performs favourably in classification in comparison with other existing classification methods. For more details, see Ye, Jieping; Wang, Tie. Regularized discriminant analysis for high dimensional, low sample size data. Conference on Knowledge Discovery in Data: Proceedings of the 12th ACM SIGKDD international conference on Knowledge discovery and data mining; 20-23 Aug. 2006.
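As a rough sketch of what the two regularization parameters do, one common formulation (the one given in the book by Hastie et al.; the symbols <math>\,\alpha</math> and <math>\,\gamma</math> follow that book and are not taken from these notes, and Friedman's original paper uses a slightly different parametrization) first shrinks each class covariance toward the pooled covariance, and then shrinks the pooled covariance toward a scalar covariance:

<math>\hat{\Sigma}_k(\alpha) = \alpha\hat{\Sigma}_k + (1-\alpha)\hat{\Sigma}, \quad \alpha \in [0,1]</math>

<math>\hat{\Sigma}(\gamma) = \gamma\hat{\Sigma} + (1-\gamma)\hat{\sigma}^2 I, \quad \gamma \in [0,1]</math>

In this form, <math>\,\alpha = 1</math> gives QDA and <math>\,\alpha = 0</math> gives LDA, with intermediate values giving a compromise between the two.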

===Further Reading for Regularized Discriminant Analysis (RDA)=== | |||

1. Regularized Discriminant Analysis and Reduced-Rank LDA | |||

[http://www.stat.psu.edu/~jiali/course/stat597e/notes2/lda2.pdf] | |||

2. Regularized discriminant analysis for the small sample size in face recognition

[http://www.google.ca/url?sa=t&source=web&cd=2&sqi=2&ved=0CCQQFjAB&url=http%3A%2F%2Fciteseerx.ist.psu.edu%2Fviewdoc%2Fdownload%3Fdoi%3D10.1.1.84.6960%26rep%3Drep1%26type%3Dpdf&rct=j&q=Regularized%20Discriminant%20Analysis&ei=IPr2TJ_2MKWV4gaP5eH-Bg&usg=AFQjCNHB3fk6eVe5fSjlQCMfK44kU1-lug&sig2=5EJv_AV3W_ngSVFIa1nfRg&cad=rja.pdf] | |||

3. Regularized Discriminant Analysis and Its Application in Microarrays | |||

[http://www-stat.stanford.edu/~hastie/Papers/RDA-6.pdf] | |||

== Trick: Using LDA to do QDA - September 28, 2010 ==

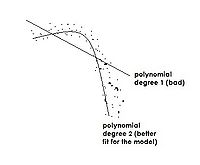

There is a trick that allows us to use the linear discriminant analysis (LDA) algorithm to generate as its output a quadratic function that can be used to classify data. This trick is similar to, but more primitive than, the [http://en.wikipedia.org/wiki/Kernel_trick Kernel trick] that will be discussed later in the course. | |||

Essentially, the trick involves adding one or more new features (i.e. new dimensions) that are nonlinear functions, such as squares, of the original features. We then do LDA on the new higher-dimensional data. The linear decision boundary that LDA finds in the higher-dimensional space corresponds to a quadratic decision boundary when it is expressed in terms of the original features.

=== Motivation === | |||

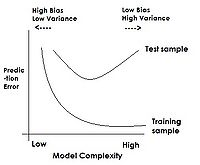

Why would we want to use LDA over QDA? In situations where we have fewer data points, LDA turns out to be more robust. | |||

If we look back at the equations for LDA and QDA, we see that in LDA we must estimate <math>\,\mu_1</math>, <math>\,\mu_2</math> and <math>\,\Sigma</math>. In QDA we must estimate all of those, plus another <math>\,\Sigma</math>; the extra <math>\,\frac{d(d+1)}{2}</math> estimations (the distinct entries of a symmetric <math>d \times d</math> covariance matrix) make QDA less robust with fewer data points.

=== Theoretically === | |||

Suppose we can estimate some vector <math>\underline{w}^T</math> such that | |||

<math>y = \underline{w}^T\underline{x}</math> | |||

where <math>\underline{w}</math> is a d-dimensional column vector, and <math style="vertical-align:0%;">\underline{x} \in \mathbb{R}^d</math> (a vector in d dimensions).

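We also have a non-linear function <math>g(x) = y = \underline{x}^Tv\underline{x} + \underline{w}^T\underline{x}</math>, where <math>\,v</math> is a <math>d \times d</math> matrix (taken here to be diagonal, with diagonal entries <math>\,v_1,\dots,v_d</math>), that we cannot estimate directly with a linear method.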

Using our trick, we create two new vectors, <math>\,\underline{w}^*</math> and <math>\,\underline{x}^*</math> such that: | |||

<math>\underline{w}^{*T} = [w_1,w_2,...,w_d,v_1,v_2,...,v_d]</math> | |||

and | |||

<math>\underline{x}^{*T} = [x_1,x_2,...,x_d,{x_1}^2,{x_2}^2,...,{x_d}^2]</math>

We can then estimate a new function, <math>g^*(\underline{x},\underline{x}^2) = y^* = \underline{w}^{*T}\underline{x}^*</math>.

Note that we can do this for any <math>\, x</math> and in any dimension; we could extend a <math>D \times n</math> matrix to a quadratic dimension by appending another <math>D \times n</math> matrix containing the element-wise squares of the original matrix, to a cubic dimension with the element-wise cubes, or even with a different function altogether, such as a <math>\,\sin(x)</math> dimension. Note, however, that this is not the same as performing QDA: applying QDA directly to the original data will in general give a different decision boundary. Here we look for a nonlinear boundary that may separate the classes better than a linear one, so the method is better described as nonlinear LDA.
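A small numerical check may help to see why the augmented linear function reproduces the quadratic one. The values of <code>x</code>, <code>w</code> and <code>v</code> below are arbitrary numbers chosen for illustration, and the sketch assumes that <math>\,v</math> is diagonal, so that only squared terms (and no cross terms) appear.

```matlab
% Arbitrary illustrative values in d = 2 dimensions.
x = [1; 2];
w = [3; 4];
v = [5; 6];                      % diagonal entries of the quadratic term

% Quadratic function in the original space: x'*diag(v)*x + w'*x.
g_quadratic = x' * diag(v) * x + w' * x;

% The same function written as a linear function of the augmented vector.
x_star = [x; x.^2];              % [x_1; x_2; x_1^2; x_2^2]
w_star = [w; v];                 % [w_1; w_2; v_1; v_2]
g_linear = w_star' * x_star;
```

Both expressions evaluate to 40 here, which is the sense in which a linear method applied to <math>\,\underline{x}^*</math> yields a quadratic function of <math>\,\underline{x}</math>.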

=== By Example === | |||

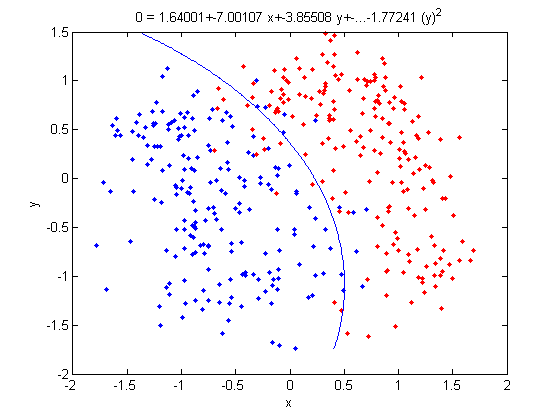

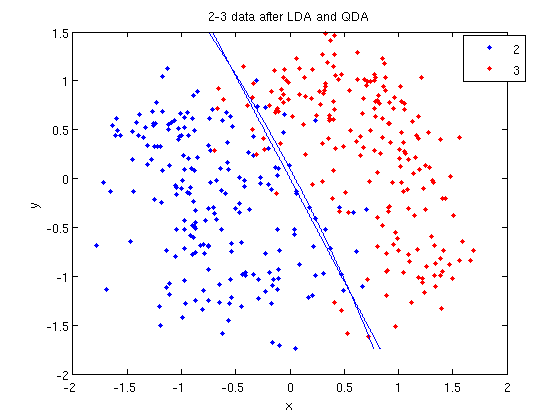

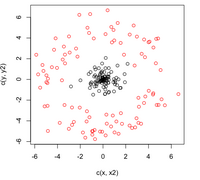

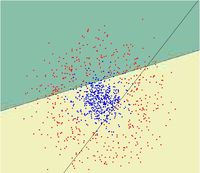

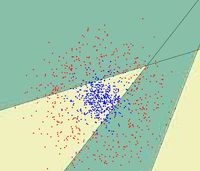

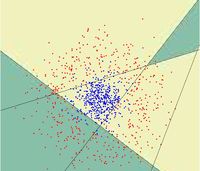

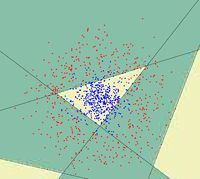

Let's use our trick to do a quadratic analysis of the 2_3 data using LDA. | |||

>> load 2_3; | |||

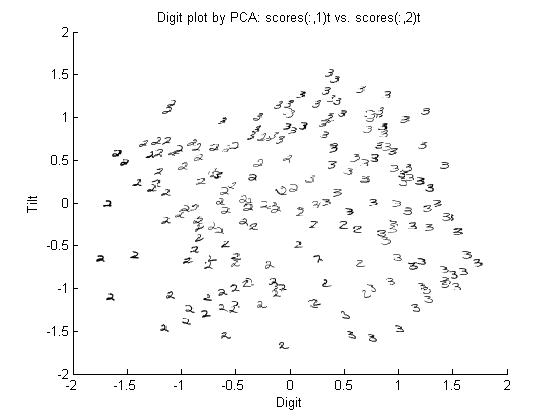

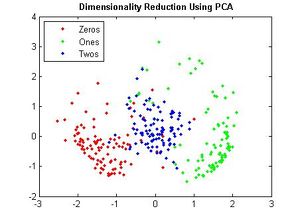

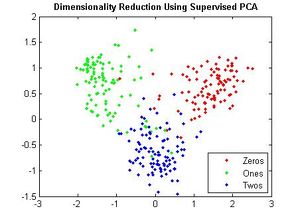

>> [U, sample] = princomp(X'); | |||

>> sample = sample(:,1:2); | |||

:We start off the same way, by using PCA to reduce the dimensionality of our data to 2. | |||

>> X_star = zeros(400,4); | |||

>> X_star(:,1:2) = sample(:,:); | |||

>> for i=1:400
     for j=1:2
       X_star(i,j+2) = X_star(i,j)^2;
     end
   end

:This projects our sample into two more dimensions by squaring our initial two dimensional data set. | |||
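:The same augmentation can also be written without loops. The sketch below uses a small hypothetical matrix <code>sample_demo</code> (not the 2_3 data) and checks that the loop form and the element-wise form agree.

```matlab
% Hypothetical 3-by-2 stand-in for the PCA scores.
sample_demo = [1 2; 3 4; -1 0.5];
n = size(sample_demo, 1);

% Loop form, as in the session above.
X_loop = zeros(n, 4);
X_loop(:, 1:2) = sample_demo;
for i = 1:n
    for j = 1:2
        X_loop(i, j+2) = X_loop(i, j)^2;
    end
end

% Vectorized form: append the element-wise squares as two new columns.
X_vec = [sample_demo, sample_demo.^2];
```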

>> group = ones(400,1); | |||

>> group(201:400) = 2; | |||

>> [class, error, POSTERIOR, logp, coeff] = classify(X_star, X_star, group, 'linear'); | |||

>> sum (class==group) | |||

ans = | |||

375 | |||

:We can now display our results. | |||

>> k = coeff(1,2).const; | |||

>> l = coeff(1,2).linear; | |||

>> f = sprintf('0 = %g+%g*x+%g*y+%g*(x)^2+%g*(y)^2', k, l(1), l(2),l(3),l(4)); | |||

>> ezplot(f,[min(sample(:,1)), max(sample(:,1)), min(sample(:,2)), max(sample(:,2))]); | |||

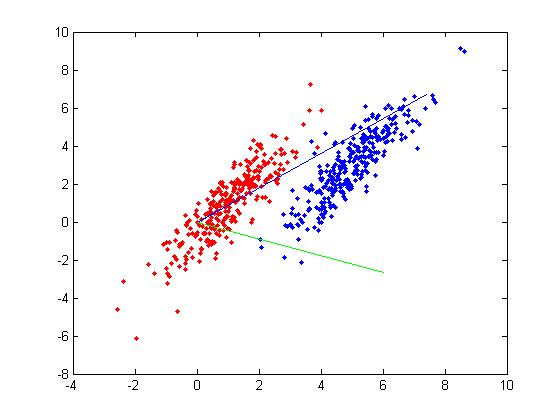

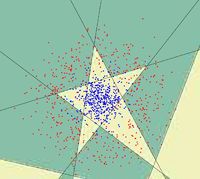

[[File: 2_3LDA.png|center|frame| The plot shows the quadratic decision boundary obtained using LDA in the four-dimensional space on the 2_3.mat data. Counting the blue and red points that are on the wrong side of the decision boundary, we can confirm that we have correctly classified 375 data points.]]

:Not only does LDA give us a better result than it did previously, it actually beats QDA, which only correctly classified 371 data points for this data set. Continuing this procedure by adding another two dimensions with <math>x^4</math> (i.e. we set <code>X_star(i,j+2) = X_star(i,j)^4</code>) we can correctly classify 376 points. | |||

===Working Example - Diabetes Data Set=== | |||

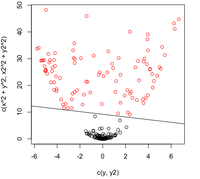

Let's take a look at a specific data set. This is a [http://archive.ics.uci.edu/ml/datasets/Diabetes diabetes data set] from the UC Irvine Machine Learning Repository. It is a fairly small data set by today's standards. The original data had eight variable dimensions. What I did here was to obtain the two most prominent principal components from these eight variables. Instead of using the original eight dimensions we will just use these two principal components for this example.

The diabetes data set contains two types of samples: healthy individuals and individuals with a higher risk of diabetes. Here are the prior probabilities estimated for the two sample types, first for the healthy individuals and second for the individuals at risk:

[[File:eq1.png]] | |||

The first type has a prior probability estimated at 0.651. This means that, of the 250 to 300 data points in the data set, about 65% belong to class one and the other 35% belong to class two. Next, we computed the mean vector for the two classes separately:[[File:eq2.png]]

Then we computed [[File:eq3.jpg]] using the formulas discussed earlier. | |||

Once we have done all of this, we compute the linear discriminant function and find the classification rule. Classification rule:[[File:eq4.jpg]]

In this example, if you give me an <math>\, x</math>, I then plug this value into the above linear function. If the result is greater than or equal to zero, I claim that it is in class one. Otherwise, it is in class two. | |||
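The rule just described can be sketched in Matlab using the usual two-class LDA discriminant, <math>\,g(x) = a^\top x + b</math> with <math>\,a = \Sigma^{-1}(\mu_1-\mu_2)</math> and <math>\,b = -\tfrac{1}{2}(\mu_1+\mu_2)^\top a + \log(\pi_1/\pi_2)</math>. Only the priors (0.651 and its complement 0.349) come from the text above; the means, the covariance matrix and the helper name <code>assign_class</code> are hypothetical placeholders, not the values fitted to the diabetes data.

```matlab
% Priors from the text; everything else is a made-up placeholder.
pi1 = 0.651;
pi2 = 0.349;
mu1 = [0; 0];                    % hypothetical class-1 mean
mu2 = [2; 0];                    % hypothetical class-2 mean
Sigma = eye(2);                  % hypothetical common covariance

% Coefficients of the linear discriminant function g(x) = a'*x + b.
a = Sigma \ (mu1 - mu2);
b = -0.5 * (mu1 + mu2)' * a + log(pi1 / pi2);

% Class 1 if g(x) >= 0, otherwise class 2.
assign_class = @(x) 2 - (a' * x + b >= 0);

c_near_mu1 = assign_class([0; 0]);
c_near_mu2 = assign_class([2; 0]);
```

Because class one has the larger prior, the boundary in this sketch sits closer to <code>mu2</code> than to <code>mu1</code>.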

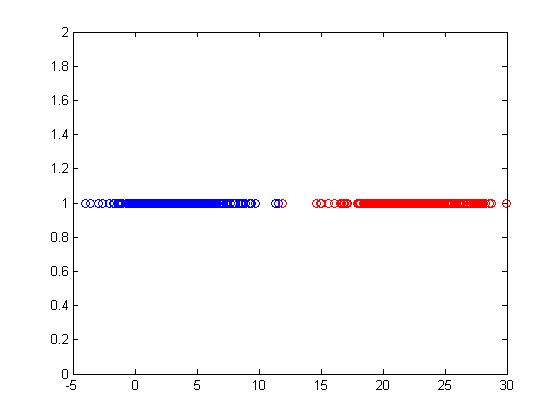

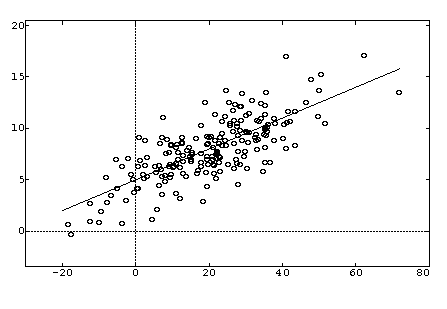

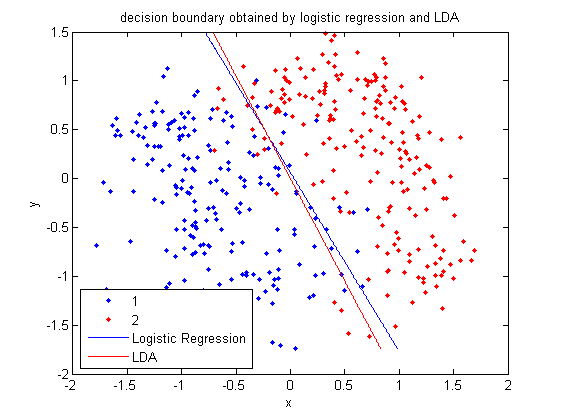

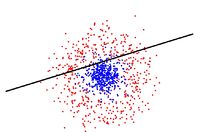

Below is a scatter plot of the dominant principal components. The two classes are represented. The first, without diabetes, is shown with red stars (class 1), and the second class, with diabetes, is shown with blue circles (class 2). The solid line represents the classification boundary obtained by LDA. It appears the two classes are not that well separated. The dashed or dotted line is the boundary obtained by linear regression of an indicator matrix. In this case, the results of the two different linear boundaries are very close.

[[File:eq5.jpg]]

It is always good practice to visualize the scheme to check for any obvious mistakes. | |||

• Within training data classification error rate: 28.26%. | |||

• Sensitivity: 45.90%. | |||

• Specificity: 85.60%. | |||
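The three figures above are all derived from the counts of correct and incorrect predictions in each class. The sketch below shows the arithmetic on a small made-up example; the vectors <code>truth</code> and <code>pred</code> are hypothetical, and treating class 2 (with diabetes) as the positive class is an assumption of this illustration.

```matlab
% Hypothetical labels: 1 = without diabetes (negative), 2 = with diabetes (positive).
truth = [2 2 2 1 1 1 1 1 1 1]';
pred  = [2 2 1 1 1 1 1 1 1 2]';

TP = sum(pred == 2 & truth == 2);   % positives correctly detected
FN = sum(pred == 1 & truth == 2);   % positives missed
TN = sum(pred == 1 & truth == 1);   % negatives correctly identified
FP = sum(pred == 2 & truth == 1);   % negatives wrongly flagged

error_rate  = (FP + FN) / numel(truth);   % fraction misclassified
sensitivity = TP / (TP + FN);             % true positive rate
specificity = TN / (TN + FP);             % true negative rate
```

A low sensitivity together with a high specificity, as reported above, means that most errors come from positive cases being assigned to the negative class.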

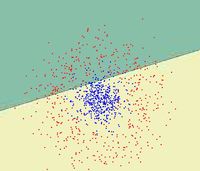

Below is the contour plot for the density of the diabetes data (the marginal density for <math>\, x</math> is a mixture of two Gaussians, 2 classes). It looks like a single Gaussian distribution. The reason for this is that the two classes are so close together that they merge into a single mode. | |||

[[File:eq6.jpg]]

=== LDA and QDA in Matlab === | |||

We have examined the theory behind Linear Discriminant Analysis (LDA) and Quadratic Discriminant Analysis (QDA) above; how do we use these algorithms in practice? Matlab offers us a function called [http://www.mathworks.com/access/helpdesk/help/toolbox/stats/index.html?/access/helpdesk/help/toolbox/stats/classify.html <code>classify</code>] that allows us to perform LDA and QDA quickly and easily. | |||

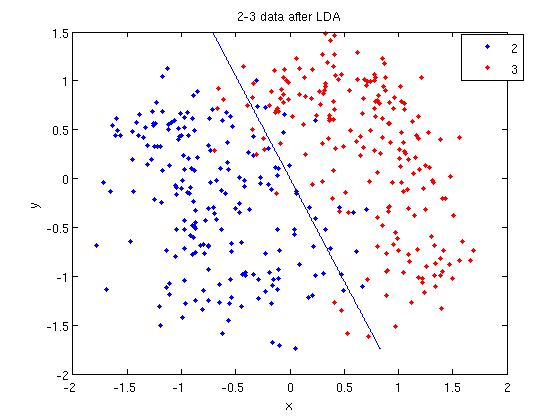

In class, we were shown an example of using LDA and QDA on the 2_3 data that is used in the first assignment. The code below applies LDA to the same data set and reproduces that example, slightly modified, and explains each step. | |||

>> load 2_3; | |||

>> [U, sample] = princomp(X'); | |||

>> sample = sample(:,1:2); | |||

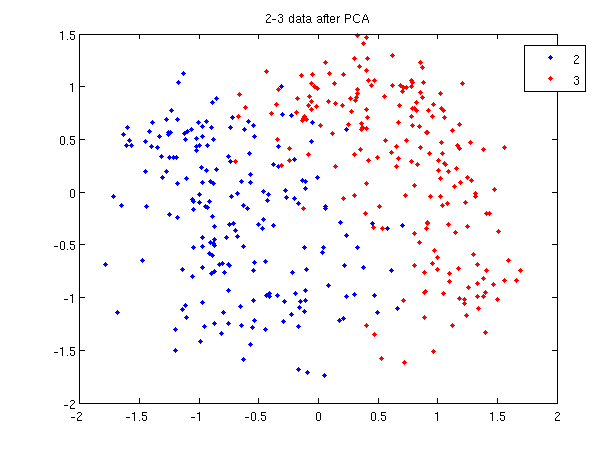

:First, we do principal component analysis (PCA) on the 2_3 data to reduce the dimensionality of the original data from 64 dimensions to 2. Doing this makes it much easier to visualize the results of the LDA and QDA algorithms. | |||

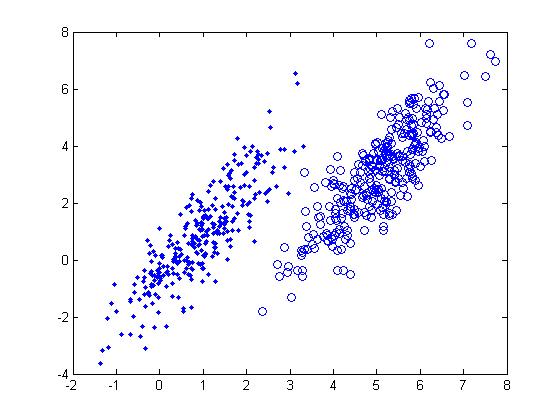

>> plot (sample(1:200,1), sample(1:200,2), '.'); | |||

>> hold on; | |||

>> plot (sample(201:400,1), sample(201:400,2), 'r.'); | |||

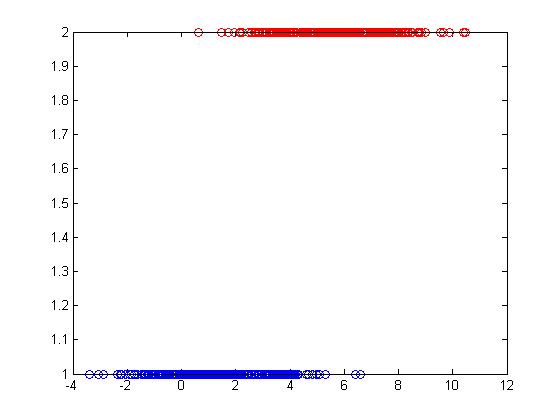

:Recall that in the 2_3 data, the first 200 elements are images of the number two handwritten and the last 200 elements are images of the number three handwritten. This code sets up a plot of the data such that the points that represent a 2 are blue, while the points that represent a 3 are red. | |||

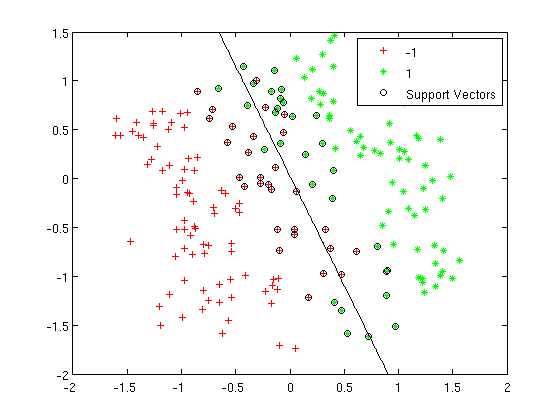

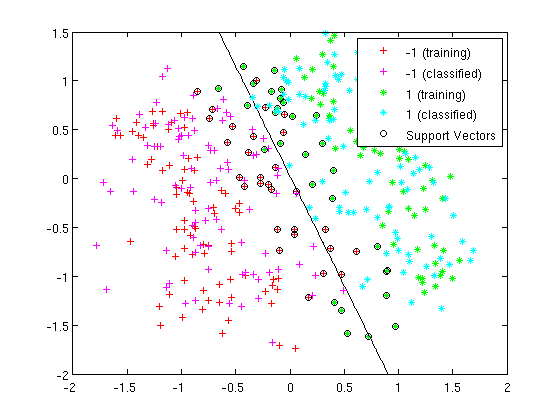

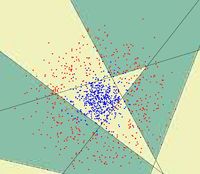

[[File:2-3-pca.png|frame|center|See [http://www.mathworks.com/access/helpdesk/help/techdoc/index.html?/access/helpdesk/help/techdoc/ref/title.html <code>title</code>] and [http://www.mathworks.com/access/helpdesk/help/techdoc/index.html?/access/helpdesk/help/techdoc/ref/legend.html <code>legend</code>] for information on adding the title and legend.]] | |||


:Before using [http://www.mathworks.com/access/helpdesk/help/toolbox/stats/index.html?/access/helpdesk/help/toolbox/stats/classify.html <code>classify</code>] we can set up a vector that contains the actual labels for our data, to train the classification algorithm. If we don't know the labels for the data, then the element in the <code>group</code> vector should be an empty string or <code>NaN</code>. (See [http://www.mathworks.com/access/helpdesk/help/toolbox/stats/index.html?/access/helpdesk/help/toolbox/stats/bqziops.html grouping data] for more information.) | |||

>> group = ones(400,1); | |||

>> group(201:400) = 2; | |||

:We can now classify our data. | |||

>> [class, error, POSTERIOR, logp, coeff] = classify(sample, sample, group, 'linear');

:The full details of this line can be examined in the Matlab help file linked above. What we care about are <code>class</code>, which contains the labels that the algorithm thinks that each data point belongs to, and <code>coeff</code>, which contains information about the line that the algorithm created to separate the data into the two classes. | |||

:We can see the efficacy of the algorithm by comparing <code>class</code> to <code>group</code>. | |||

>> sum (class==group) | |||

ans = | |||

369 | |||

:This compares the value in <code>class</code> to the value in <code>group</code>. The answer of 369 tells us that the algorithm correctly determined the classes of the points 369 times, out of a possible 400 data points. This gives us an ''empirical error rate'' of 0.0775. | |||

:We can see the line produced by LDA using <code>coeff</code>. | |||

>> k = coeff(1,2).const;

>> l = coeff(1,2).linear; | |||