sparse PCA: Difference between revisions

Mirzazadeh (talk | contribs) |

m (Conversion script moved page Sparse PCA to sparse PCA: Converting page titles to lowercase) |

||

| (44 intermediate revisions by 8 users not shown) | |||

| Line 1: | Line 1: | ||

==Introduction== | ==Introduction== | ||

Given <math>n</math> observations on <math>d</math> variables (or in other words <math>n</math> <math>d</math>-dimensional data points), | In PCA, Given <math>n</math> observations on <math>d</math> variables (or in other words <math>n</math> <math>d</math>-dimensional data points), our goal is to find directions in the space of the data set that correspond to the directions with biggest variance in the input data. In practice each of the <math>d</math> variables has its own special meaning and it may be desirable to come up with some directions, as principal components, each of which is a combination of just a few of these variables. This makes the directions more interpretable and meaningful. But this is not something that usually happens as the original result of PCA method. Each of resulting directions from PCA in most cases is a linear combination of all variable with no zero coefficients. | ||

To address the above concerns we add a sparsity constraint to the PCA problem, which makes the PCA problem much harder to solve. That's because we have just added a combinatorial constraint to optimization problem. This paper is showing us how to find directions in the data space with maximum variance that have a limited number of non-zero elements. In other words, this helps us to perform feature selection, by selecting a subset of features in each direction. | |||

==Contribution== | |||

In this paper, a direct approach (called DSPCA) that improves the sparsity of the principle components is presented. This is done in 2 stages. First, incorporating a sparsity criterion in the PCA formulation. Second, forming a convex relation of the problem that is a semidefinite program. For small problems, semidifinite programs can be solved via general purpose interior-point methods. However, these methods can not be used for high dimensional problems. In this case, a saddle point problem can express our particular problem. For this kind of problems, smoothing argument algorithms combined with an optimal first-order smooth minimization algorithm offer a significant reduction in computational time and therefore can be used instead of generic interior point SDP solvers. | |||

== Notation == | |||

The following notations are used in this note. | |||

<math>S^n \,</math> is the set of symmetric matrices of size <math>n \,</math>.<br /> | |||

<math> \textbf{1} \,</math> is a column vector of ones.<br /> | |||

<math> \textbf{Card}(x) \, </math> denotes the cardinality (number of non-zero elements) of a vector <math>x \, </math><br /> | |||

<math> \textbf{Card}(X) \, </math> denotes the cardinality (number of non-zero elements) of a matrix <math>X \, </math><br /> | |||

For <math> X \in S^n \, </math>, <math> X \succeq 0 \, </math> means <math>X \,</math> is positive semi-definite.<br /> | |||

<math>|X| \,</math> is the matrix whose elements are the absolute values of the elements of <math> X \, </math> | |||

==Problem Formulation== | ==Problem Formulation== | ||

Given the covariance matrix <math>A</math>, the problem can be written as:<br> | Given the covariance matrix <math>A</math>, the problem can be written as:<br> | ||

| Line 17: | Line 31: | ||

</td><td valign=top> <br> (1) </td></tr></table> | </td><td valign=top> <br> (1) </td></tr></table> | ||

Defining <math>X= | The cardinality constraint makes this problem hard (NP-hard) and we are looking for a convex and efficient relaxation.<br /> | ||

Defining <math>X=xx^T</math>, the above formula can be rewritten as | |||

<table width=95%><tr><td width=45%> </td><td width=45%> | <table width=95%><tr><td width=45%> </td><td width=45%> | ||

| Line 24: | Line 40: | ||

\textrm{maximize}& \textbf{Tr}(AX)\\ | \textrm{maximize}& \textbf{Tr}(AX)\\ | ||

\textrm{subject\ to}&\textbf{Tr}(X)=1\\ | \textrm{subject\ to}&\textbf{Tr}(X)=1\\ | ||

&\textbf{Card}( | &\textbf{Card}(X)\leq k^2\\ | ||

&X\succeq 0, \textbf{Rank}(X)=1\\ | &X\succeq 0, \textbf{Rank}(X)=1\\ | ||

\end{array} | \end{array} | ||

| Line 30: | Line 46: | ||

</td><td valign=top> <br> (2) </td></tr></table> | </td><td valign=top> <br> (2) </td></tr></table> | ||

The conditions <math>X\succeq 0</math> and <math>\textbf{Rank}(X)=1</math> in formula 2 guarantees that <math>X</math> can be written as <math>xx^T</math>, for some <math>x</math>. But this formulation should be relaxed before it can be solved efficiently, because the constraints <math>\textbf{Card}(X)\leq k^2</math> and <math>\textbf{Rank}(X)=1</math> are not convex. So we replace the cardinality constraint with a weaker one: <math>\textbf{1}^T|X|\textbf 1\leq k</math>. We also drop the rank constraint. So we get: | |||

The conditions <math>X\succeq 0</math> and <math>\textbf{Rank}(X)=1</math> in formula 2 guarantees that <math>X</math> can be written as <math> | |||

<table width=95%><tr><td width=45%> </td><td width=45%> | <table width=95%><tr><td width=45%> </td><td width=45%> | ||

| Line 39: | Line 54: | ||

\textrm{subject\ to}&\textbf{Tr}(X)=1\\ | \textrm{subject\ to}&\textbf{Tr}(X)=1\\ | ||

&\textbf{1}^T|X|\textbf 1\leq k\\ | &\textbf{1}^T|X|\textbf 1\leq k\\ | ||

&X\succeq 0\\ | |||

\end{array} | |||

</math> | |||

</td><td valign=top> <br> (i) </td></tr></table> | |||

The above semidefinite relaxation can even be generalised to a non square matrix <math> A \in R^{mxn}</math> as follows: | |||

<table width=95%><tr><td width=45%> </td><td width=45%> | |||

<math> | |||

\begin{array}{ll} | |||

\textrm{maximize}& \textbf{Tr}(AX^{12})\\ | |||

\textrm{subject\ to}&\textbf{Tr}(X^{ii})=1\\ | |||

&\textbf{1}^T|X^{ii}|\textbf 1\leq k_i, i=1,2\\ | |||

&\textbf{1}^T|X^{12}|\textbf 1\leq \sqrt{k_1k_2}\\ | |||

&X\succeq 0\\ | &X\succeq 0\\ | ||

\end{array} | \end{array} | ||

</math> | </math> | ||

</td><td valign=top> </td></tr></table> | </td><td valign=top> </td></tr></table> | ||

in the variable <math> X \in S^{m+n} </math> with blocks <math> X^{ij} </math> for i,j=1,2. | |||

We then change the modified cardinality constraint to a penalty term in the goal function with some positive factor <math>\rho</math>. So we get a semidefinite form of the problem: | We then change the modified cardinality constraint to a penalty term in the goal function with some positive factor <math>\rho</math>. So we get a semidefinite form of the problem: | ||

| Line 56: | Line 87: | ||

</td><td valign=top> <br> (3) </td></tr></table> | </td><td valign=top> <br> (3) </td></tr></table> | ||

where, <math> \rho </math> controls the penalty magnitude. | |||

The goal function can be rewritten as <math>\textbf{Tr}(AX)-\rho\textbf{1}^T|X|\textbf 1=\min_{|U_{ij}|\leq\rho}\textbf{Tr}((A+U)X)</math>. So the problem (3) is equivalent to: | The goal function can be rewritten as <math>\textbf{Tr}(AX)-\rho\textbf{1}^T|X|\textbf 1=\min_{|U_{ij}|\leq\rho}\textbf{Tr}((A+U)X)</math>. So the problem (3) is equivalent to: | ||

| Line 62: | Line 94: | ||

<math> | <math> | ||

\begin{array}{ll} | \begin{array}{ll} | ||

\textrm{maximize}& \min_{|U_{ij}|\leq\rho}\textbf{Tr}(X(A+U)\\ | \textrm{maximize}& \min_{|U_{ij}|\leq\rho}\textbf{Tr}(X(A+U))\\ | ||

\textrm{subject\ to}&\textbf{Tr}(X)=1\\ | \textrm{subject\ to}&\textbf{Tr}(X)=1\\ | ||

&X\succeq 0\\ | &X\succeq 0\\ | ||

| Line 69: | Line 101: | ||

</td><td valign=top> <br> (4) </td></tr></table> | </td><td valign=top> <br> (4) </td></tr></table> | ||

or equivalently: | or equivalently, due to convexity: | ||

<table width=95%><tr><td width=45%> </td><td width=45%> | <table width=95%><tr><td width=45%> </td><td width=45%> | ||

<math> | <math> | ||

\begin{array}{lll} | \begin{array}{lll} | ||

\textrm{minimize}& \lambda^{\max}(A+U | \textrm{minimize}& \lambda^{\max}(A+U)\\ | ||

\textrm{subject\ to}&|U_{ij}|\leq\rho,&i,j=1\cdots,n\\ | \textrm{subject\ to}&|U_{ij}|\leq\rho,&i,j=1\cdots,n\\ | ||

\end{array} | \end{array} | ||

| Line 80: | Line 112: | ||

</td><td valign=top> <br> (5) </td></tr></table> | </td><td valign=top> <br> (5) </td></tr></table> | ||

The problem as described in formulation (5) can be seen as computing a robust version of maximum eigenvalue: it is the least possible value of maximum eigenvalue, given that each element can be changed by at most noise value <math>\rho</math>. | where <math>\lambda^{\max}(M)</math> is the largest eigenvalue of the matrix <math>M</math>. | ||

The problem as described in formulation (5) can be seen as computing a robust version of maximum eigenvalue: it is the least possible value of maximum eigenvalue, given that each element can be changed by at most noise value <math>\rho</math>. Also, it corresponds to a worst-case maximum eigenvalue computation with a bounded noise of intensity <math>\rho</math> in each component on the matrix coefficients.<br> | |||

The KKT conditions for optimization problems (3) and (5) are given by:<br> | |||

<table width=95%><tr><td width=45%> </td><td width=45%> | |||

<math> | |||

\left\{ | |||

\begin{array}{rl} | |||

&(A+U)X=\lambda^{\max}(A+U)X\\ | |||

&U\circ X=\rho |X| \\ | |||

&\text{Tr}(X)=1,\,\,\,X\succeq 0 \\ | |||

&|U_{i,j}|\leq \rho,\,\,\, i,j=1,\cdots ,n | |||

\end{array} \right. | |||

</math> | |||

</td><td valign=top> <br> </td></tr></table><br> | |||

If the <math>\lambda^{\max}</math> in the first equation is simple (meaning it is of multiplicity 1) and <math>\rho</math> is sufficiently small, from the first equation it follows that <math>\textbf{Rank}(X)=1</math>. In fact, the form of this equation implies that all columns of <math>\,X</math> are eigenvectors of matrix <math>A+U</math> corresponding to its maximum eigenvalue. So the rank one constraint is automatically satisfied in this special case. | |||

==The Algorithm== | ==The Algorithm== | ||

===The Main Loop=== | ===The Main Loop=== | ||

The algorithm should iteratively create the semidefinite program (4) and solve it to obtain the next most important sparse principle component. At each iteration | The algorithm should iteratively create the semidefinite program (4) and solve it to obtain the next most important sparse principle component. At each iteration, we should first obtain the solution <math>x</math> of the corresponding problem of form (1), if <math>X</math> is the optimal solution of the optimization problem. That will be straightforward if <math>X</math> is of rank 1, but since we have dropped the rank constraint, this may not be true and in those cases we need to obtain the ''dominant'' eigenvalue of <math>X</math> by the methods that are known in the literature; for example we can use the power method which efficiently provides us with the largest eigenvectors of a matrix. Note, however, that in this case the resulting vectors are not guaranteed to be as sparse as the matrix itself. | ||

After obtaining a (hopefully) sparse vector <math>x</math> we replace the matrix <math>A</math> with <math>A-(x_1^TAx_1)x_1x_1^T</math> and repeat the above steps to obtain next sparse component values. | After obtaining a (hopefully) sparse vector <math>x</math> we replace the matrix <math>A</math> with <math>A-(x_1^TAx_1)x_1x_1^T</math> and repeat the above steps to obtain the next sparse component values. | ||

The question then is "when to stop?". Two approaches are proposed. First, at each iteration <math>i</math>, for all <math>i<j</math>, we include the constraint <math>x_i^TXx_i=0</math> to make sure that each principal component we compute is orthogonal to the previous ones. Then the procedure stops after <math>n</math> steps automatically (there will be no solution to the <math>n+1</math>'th problem). | |||

The other way is stop as soon as all members of <math>A</math> get less than <math>\rho</math>, because at that point elements of <math>A</math> will be less than the noise value <math>\rho</math>. | |||

=== A Smoothing Technique === | |||

The numerical difficulties arising in large scale semidefinite programs stem from two distinct origins. | |||

I) Memory issue: beyond a certain problem size n, it becomes essentially impossible to form and store any second order information (Hessian) on the problem, which is the key to the numerical efficiency of interior-point SDP solvers. | |||

II) Smoothness issue: the constraint <math> X \geq 0 </math> is not smooth, hence the number of iterations required to solve problem (i) using first-order methods to an accuracy <math> \epsilon </math> is given by <math> 1/ \epsilon^2 </math> | |||

===Solving the Semidefinite Problem=== | ===Solving the Semidefinite Problem=== | ||

The cardinality constraint in the formulation (or its corresponding term in the penalized form) introduces a quadratic number of terms in the problem. This makes it practically impossible to use interior-point method to solve the problem for large values of the input dimension. So we need to use other existing methods for solving the problem, but then, there will be a matter of speed. Denoting the required accuracy by <math>\epsilon</math>, we can expect an interior-point-based program to converge after <math>\textstyle O(\log\frac1{\epsilon})</math> iterations. Here we will manage to solve the problem using <math>\textstyle O(\frac1{\epsilon})</math> iterations | The cardinality constraint in the formulation (or its corresponding term in the penalized form) introduces a quadratic number of terms in the problem. This makes it practically impossible to use interior-point method to solve the problem for large values of the input dimension. So we need to use other existing methods for solving the problem, but then, there will be a matter of speed. Denoting the required accuracy by <math>\epsilon</math>, we can expect an interior-point-based program to converge after <math>\textstyle O(F(n)\log\frac1{\epsilon})</math> iterations, for some function <math>F</math> of input size <math>n</math>. Here we will manage to solve the problem using <math>\textstyle O(\frac{F(n)}{\epsilon})</math> iterations using a first-order scheme, for some function <math>F</math> of input size. | ||

First-order method can solve a problem after <math>\textstyle O(\frac1{\epsilon})</math> iterations, for some function <math>F</math> of input size, if the problem satisfy a ''smoothness'' constraint. But in our case, <math>X\succeq 0</math> is not ''smooth'' and as a result, an application of first-order method to the our problem will result in an algorithm stopping after <math>\textstyle O(\frac1{\epsilon^2})</math> iterations, for some function <math>F</math> of input size, which is too slow. To address this problem, we consider the formulation (5) and then we define a smooth approximation of the function <math>\lambda^{\max}</math>. | |||

To come up with a smooth approximation of our goal function, we define <math>f_{\mu}(X)=\mu\log\textbf{Tr}(e^{\frac X\mu})</math>. Then, one can verify that <math>\lambda^{\max}(X)\leq f_\mu(X)\leq\lambda^{\max}(X)+\mu\log n</math> and so, for <math>\textstyle\mu=\frac{\epsilon}{\log n}</math>, <math>f_{\mu}</math> is a smooth approximation of <math>\lambda^{\max}</math> with an additive error of <math>\epsilon</math>. | |||

This way we obtain a scheme for solving the program in <math>\textstyle\frac d{\epsilon}\sqrt{\log d}</math> iterations, each taking <math>O(d^3)</math> time. | |||

==Experimental Results== | |||

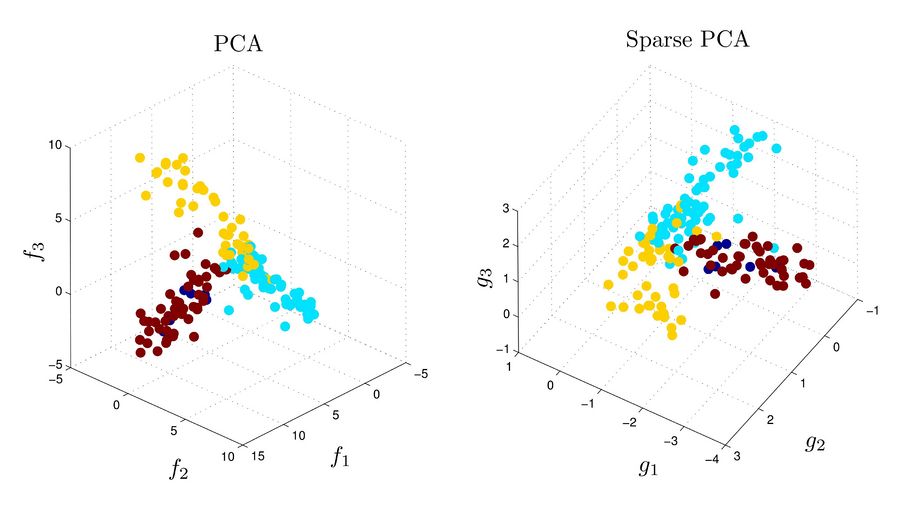

Each point in figures below corresponds to an experiment on 500 genes. The points are pre-clustered to 4 clusters based some prior knowledge. The top three principal components are computed using each of PCA and Sparse PCA methods, and the points are plotted in the bases defined by these | |||

three components. For the PCA, each principal component is a combination of all 500 variables (corresponding to 500 genes) while in sparse PCA each involves variables corresponding to at most 6 genes. | |||

[[Image:Spca-g.jpg|thumb|900px|center|Figure 1. Distribution of gene expression data in the PCA vs. Sparse PCA. The point colors are based on an pre-computed independent clustering.]] | |||

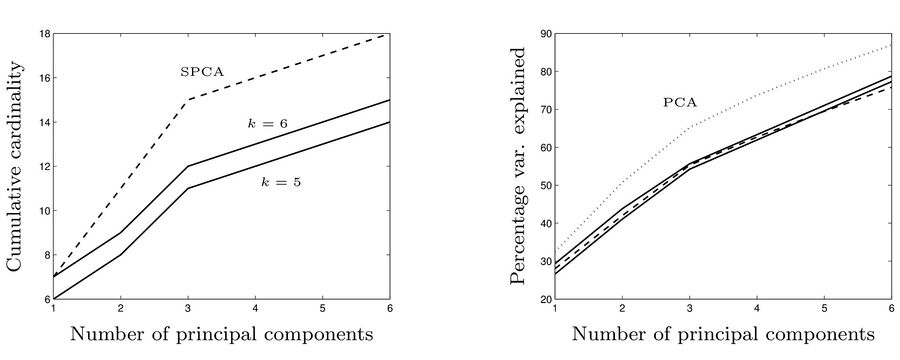

In the next figure, the left diagram compares the cumulative number of non-zero elements in principal components in three methods: SPCA, the method we explaind with <math>k=5</math>, and the method we explaind with <math>k=6</math>. In the right diagram, the cumulative percentage of total variance explained by the first principle components resulted from PCA, SPCA (dashed) and the method we explained with <math>k=5</math> and <math>k=6</math> (solid lines). | |||

[[Image:Spca-a.jpg|thumb|900px|center|Figure 2. Cumulative cardinality and total percentage of total variance explained by the first principle components resulted from PCA, SPCA (dashed) and the method we explained with <math>k=5</math> and <math>k=6</math> (solid lines).]] | |||

In sparse PCA, the sparsity of the factors helps the clustering algorithm to be attributed to fewer genes, which maks interpretation easier. | |||

Latest revision as of 08:45, 30 August 2017

Introduction

In PCA, Given [math]\displaystyle{ n }[/math] observations on [math]\displaystyle{ d }[/math] variables (or in other words [math]\displaystyle{ n }[/math] [math]\displaystyle{ d }[/math]-dimensional data points), our goal is to find directions in the space of the data set that correspond to the directions with biggest variance in the input data. In practice each of the [math]\displaystyle{ d }[/math] variables has its own special meaning and it may be desirable to come up with some directions, as principal components, each of which is a combination of just a few of these variables. This makes the directions more interpretable and meaningful. But this is not something that usually happens as the original result of PCA method. Each of resulting directions from PCA in most cases is a linear combination of all variable with no zero coefficients.

To address the above concerns we add a sparsity constraint to the PCA problem, which makes the PCA problem much harder to solve. That's because we have just added a combinatorial constraint to optimization problem. This paper is showing us how to find directions in the data space with maximum variance that have a limited number of non-zero elements. In other words, this helps us to perform feature selection, by selecting a subset of features in each direction.

Contribution

In this paper, a direct approach (called DSPCA) that improves the sparsity of the principle components is presented. This is done in 2 stages. First, incorporating a sparsity criterion in the PCA formulation. Second, forming a convex relation of the problem that is a semidefinite program. For small problems, semidifinite programs can be solved via general purpose interior-point methods. However, these methods can not be used for high dimensional problems. In this case, a saddle point problem can express our particular problem. For this kind of problems, smoothing argument algorithms combined with an optimal first-order smooth minimization algorithm offer a significant reduction in computational time and therefore can be used instead of generic interior point SDP solvers.

Notation

The following notations are used in this note.

[math]\displaystyle{ S^n \, }[/math] is the set of symmetric matrices of size [math]\displaystyle{ n \, }[/math].

[math]\displaystyle{ \textbf{1} \, }[/math] is a column vector of ones.

[math]\displaystyle{ \textbf{Card}(x) \, }[/math] denotes the cardinality (number of non-zero elements) of a vector [math]\displaystyle{ x \, }[/math]

[math]\displaystyle{ \textbf{Card}(X) \, }[/math] denotes the cardinality (number of non-zero elements) of a matrix [math]\displaystyle{ X \, }[/math]

For [math]\displaystyle{ X \in S^n \, }[/math], [math]\displaystyle{ X \succeq 0 \, }[/math] means [math]\displaystyle{ X \, }[/math] is positive semi-definite.

[math]\displaystyle{ |X| \, }[/math] is the matrix whose elements are the absolute values of the elements of [math]\displaystyle{ X \, }[/math]

Problem Formulation

Given the covariance matrix [math]\displaystyle{ A }[/math], the problem can be written as:

|

[math]\displaystyle{ \begin{array}{ll} \textrm{maximize}& x^TAx\\ \textrm{subject\ to}& ||x||_2=1\\ &\textbf{Card}(x)\leq k \end{array} }[/math] | (1) |

The cardinality constraint makes this problem hard (NP-hard) and we are looking for a convex and efficient relaxation.

Defining [math]\displaystyle{ X=xx^T }[/math], the above formula can be rewritten as

|

[math]\displaystyle{ \begin{array}{ll} \textrm{maximize}& \textbf{Tr}(AX)\\ \textrm{subject\ to}&\textbf{Tr}(X)=1\\ &\textbf{Card}(X)\leq k^2\\ &X\succeq 0, \textbf{Rank}(X)=1\\ \end{array} }[/math] | (2) |

The conditions [math]\displaystyle{ X\succeq 0 }[/math] and [math]\displaystyle{ \textbf{Rank}(X)=1 }[/math] in formula 2 guarantees that [math]\displaystyle{ X }[/math] can be written as [math]\displaystyle{ xx^T }[/math], for some [math]\displaystyle{ x }[/math]. But this formulation should be relaxed before it can be solved efficiently, because the constraints [math]\displaystyle{ \textbf{Card}(X)\leq k^2 }[/math] and [math]\displaystyle{ \textbf{Rank}(X)=1 }[/math] are not convex. So we replace the cardinality constraint with a weaker one: [math]\displaystyle{ \textbf{1}^T|X|\textbf 1\leq k }[/math]. We also drop the rank constraint. So we get:

|

[math]\displaystyle{ \begin{array}{ll} \textrm{maximize}& \textbf{Tr}(AX)\\ \textrm{subject\ to}&\textbf{Tr}(X)=1\\ &\textbf{1}^T|X|\textbf 1\leq k\\ &X\succeq 0\\ \end{array} }[/math] | (i) |

The above semidefinite relaxation can even be generalised to a non square matrix [math]\displaystyle{ A \in R^{mxn} }[/math] as follows:

|

[math]\displaystyle{ \begin{array}{ll} \textrm{maximize}& \textbf{Tr}(AX^{12})\\ \textrm{subject\ to}&\textbf{Tr}(X^{ii})=1\\ &\textbf{1}^T|X^{ii}|\textbf 1\leq k_i, i=1,2\\ &\textbf{1}^T|X^{12}|\textbf 1\leq \sqrt{k_1k_2}\\ &X\succeq 0\\ \end{array} }[/math] |

in the variable [math]\displaystyle{ X \in S^{m+n} }[/math] with blocks [math]\displaystyle{ X^{ij} }[/math] for i,j=1,2.

We then change the modified cardinality constraint to a penalty term in the goal function with some positive factor [math]\displaystyle{ \rho }[/math]. So we get a semidefinite form of the problem:

|

[math]\displaystyle{ \begin{array}{ll} \textrm{maximize}& \textbf{Tr}(AX)-\rho\textbf{1}^T|X|\textbf 1\\ \textrm{subject\ to}&\textbf{Tr}(X)=1\\ &X\succeq 0\\ \end{array} }[/math] | (3) |

where, [math]\displaystyle{ \rho }[/math] controls the penalty magnitude.

The goal function can be rewritten as [math]\displaystyle{ \textbf{Tr}(AX)-\rho\textbf{1}^T|X|\textbf 1=\min_{|U_{ij}|\leq\rho}\textbf{Tr}((A+U)X) }[/math]. So the problem (3) is equivalent to:

|

[math]\displaystyle{ \begin{array}{ll} \textrm{maximize}& \min_{|U_{ij}|\leq\rho}\textbf{Tr}(X(A+U))\\ \textrm{subject\ to}&\textbf{Tr}(X)=1\\ &X\succeq 0\\ \end{array} }[/math] | (4) |

or equivalently, due to convexity:

|

[math]\displaystyle{ \begin{array}{lll} \textrm{minimize}& \lambda^{\max}(A+U)\\ \textrm{subject\ to}&|U_{ij}|\leq\rho,&i,j=1\cdots,n\\ \end{array} }[/math] | (5) |

where [math]\displaystyle{ \lambda^{\max}(M) }[/math] is the largest eigenvalue of the matrix [math]\displaystyle{ M }[/math].

The problem as described in formulation (5) can be seen as computing a robust version of maximum eigenvalue: it is the least possible value of maximum eigenvalue, given that each element can be changed by at most noise value [math]\displaystyle{ \rho }[/math]. Also, it corresponds to a worst-case maximum eigenvalue computation with a bounded noise of intensity [math]\displaystyle{ \rho }[/math] in each component on the matrix coefficients.

The KKT conditions for optimization problems (3) and (5) are given by:

|

[math]\displaystyle{ \left\{ \begin{array}{rl} &(A+U)X=\lambda^{\max}(A+U)X\\ &U\circ X=\rho |X| \\ &\text{Tr}(X)=1,\,\,\,X\succeq 0 \\ &|U_{i,j}|\leq \rho,\,\,\, i,j=1,\cdots ,n \end{array} \right. }[/math] | |

If the [math]\displaystyle{ \lambda^{\max} }[/math] in the first equation is simple (meaning it is of multiplicity 1) and [math]\displaystyle{ \rho }[/math] is sufficiently small, from the first equation it follows that [math]\displaystyle{ \textbf{Rank}(X)=1 }[/math]. In fact, the form of this equation implies that all columns of [math]\displaystyle{ \,X }[/math] are eigenvectors of matrix [math]\displaystyle{ A+U }[/math] corresponding to its maximum eigenvalue. So the rank one constraint is automatically satisfied in this special case.

The Algorithm

The Main Loop

The algorithm should iteratively create the semidefinite program (4) and solve it to obtain the next most important sparse principle component. At each iteration, we should first obtain the solution [math]\displaystyle{ x }[/math] of the corresponding problem of form (1), if [math]\displaystyle{ X }[/math] is the optimal solution of the optimization problem. That will be straightforward if [math]\displaystyle{ X }[/math] is of rank 1, but since we have dropped the rank constraint, this may not be true and in those cases we need to obtain the dominant eigenvalue of [math]\displaystyle{ X }[/math] by the methods that are known in the literature; for example we can use the power method which efficiently provides us with the largest eigenvectors of a matrix. Note, however, that in this case the resulting vectors are not guaranteed to be as sparse as the matrix itself. After obtaining a (hopefully) sparse vector [math]\displaystyle{ x }[/math] we replace the matrix [math]\displaystyle{ A }[/math] with [math]\displaystyle{ A-(x_1^TAx_1)x_1x_1^T }[/math] and repeat the above steps to obtain the next sparse component values.

The question then is "when to stop?". Two approaches are proposed. First, at each iteration [math]\displaystyle{ i }[/math], for all [math]\displaystyle{ i\lt j }[/math], we include the constraint [math]\displaystyle{ x_i^TXx_i=0 }[/math] to make sure that each principal component we compute is orthogonal to the previous ones. Then the procedure stops after [math]\displaystyle{ n }[/math] steps automatically (there will be no solution to the [math]\displaystyle{ n+1 }[/math]'th problem).

The other way is stop as soon as all members of [math]\displaystyle{ A }[/math] get less than [math]\displaystyle{ \rho }[/math], because at that point elements of [math]\displaystyle{ A }[/math] will be less than the noise value [math]\displaystyle{ \rho }[/math].

A Smoothing Technique

The numerical difficulties arising in large scale semidefinite programs stem from two distinct origins.

I) Memory issue: beyond a certain problem size n, it becomes essentially impossible to form and store any second order information (Hessian) on the problem, which is the key to the numerical efficiency of interior-point SDP solvers.

II) Smoothness issue: the constraint [math]\displaystyle{ X \geq 0 }[/math] is not smooth, hence the number of iterations required to solve problem (i) using first-order methods to an accuracy [math]\displaystyle{ \epsilon }[/math] is given by [math]\displaystyle{ 1/ \epsilon^2 }[/math]

Solving the Semidefinite Problem

The cardinality constraint in the formulation (or its corresponding term in the penalized form) introduces a quadratic number of terms in the problem. This makes it practically impossible to use interior-point method to solve the problem for large values of the input dimension. So we need to use other existing methods for solving the problem, but then, there will be a matter of speed. Denoting the required accuracy by [math]\displaystyle{ \epsilon }[/math], we can expect an interior-point-based program to converge after [math]\displaystyle{ \textstyle O(F(n)\log\frac1{\epsilon}) }[/math] iterations, for some function [math]\displaystyle{ F }[/math] of input size [math]\displaystyle{ n }[/math]. Here we will manage to solve the problem using [math]\displaystyle{ \textstyle O(\frac{F(n)}{\epsilon}) }[/math] iterations using a first-order scheme, for some function [math]\displaystyle{ F }[/math] of input size.

First-order method can solve a problem after [math]\displaystyle{ \textstyle O(\frac1{\epsilon}) }[/math] iterations, for some function [math]\displaystyle{ F }[/math] of input size, if the problem satisfy a smoothness constraint. But in our case, [math]\displaystyle{ X\succeq 0 }[/math] is not smooth and as a result, an application of first-order method to the our problem will result in an algorithm stopping after [math]\displaystyle{ \textstyle O(\frac1{\epsilon^2}) }[/math] iterations, for some function [math]\displaystyle{ F }[/math] of input size, which is too slow. To address this problem, we consider the formulation (5) and then we define a smooth approximation of the function [math]\displaystyle{ \lambda^{\max} }[/math].

To come up with a smooth approximation of our goal function, we define [math]\displaystyle{ f_{\mu}(X)=\mu\log\textbf{Tr}(e^{\frac X\mu}) }[/math]. Then, one can verify that [math]\displaystyle{ \lambda^{\max}(X)\leq f_\mu(X)\leq\lambda^{\max}(X)+\mu\log n }[/math] and so, for [math]\displaystyle{ \textstyle\mu=\frac{\epsilon}{\log n} }[/math], [math]\displaystyle{ f_{\mu} }[/math] is a smooth approximation of [math]\displaystyle{ \lambda^{\max} }[/math] with an additive error of [math]\displaystyle{ \epsilon }[/math]. This way we obtain a scheme for solving the program in [math]\displaystyle{ \textstyle\frac d{\epsilon}\sqrt{\log d} }[/math] iterations, each taking [math]\displaystyle{ O(d^3) }[/math] time.

Experimental Results

Each point in figures below corresponds to an experiment on 500 genes. The points are pre-clustered to 4 clusters based some prior knowledge. The top three principal components are computed using each of PCA and Sparse PCA methods, and the points are plotted in the bases defined by these three components. For the PCA, each principal component is a combination of all 500 variables (corresponding to 500 genes) while in sparse PCA each involves variables corresponding to at most 6 genes.

In the next figure, the left diagram compares the cumulative number of non-zero elements in principal components in three methods: SPCA, the method we explaind with [math]\displaystyle{ k=5 }[/math], and the method we explaind with [math]\displaystyle{ k=6 }[/math]. In the right diagram, the cumulative percentage of total variance explained by the first principle components resulted from PCA, SPCA (dashed) and the method we explained with [math]\displaystyle{ k=5 }[/math] and [math]\displaystyle{ k=6 }[/math] (solid lines).

In sparse PCA, the sparsity of the factors helps the clustering algorithm to be attributed to fewer genes, which maks interpretation easier.